Paper Reviews

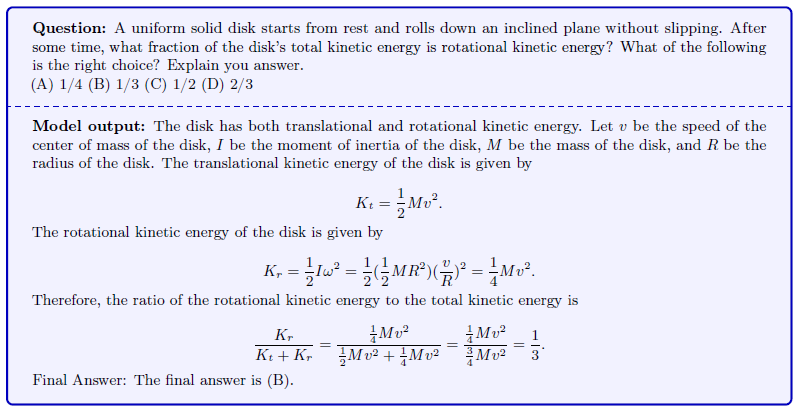

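1. [Paper Review] Solving Quantitative Reasoning Problems with Language Models: Minerva

Large language models have succeeded on a wide range of natural language tasks, but they have struggled on tasks that require quantitative reasoning, e.g., mathematics, science, and engineering problems… 💡 Quantitative Reasoning tests a model's abilities on many fronts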

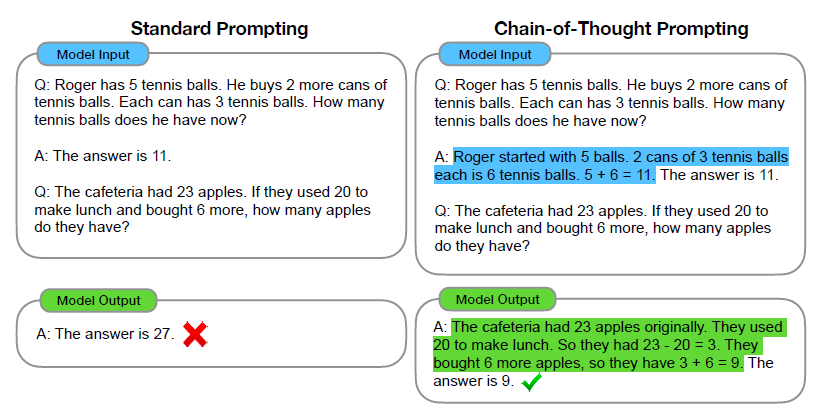

2. [Paper Review] Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

We explore how generating a chain of thought (a series of intermediate reasoning steps) significantly improves the ability of large language models to pe

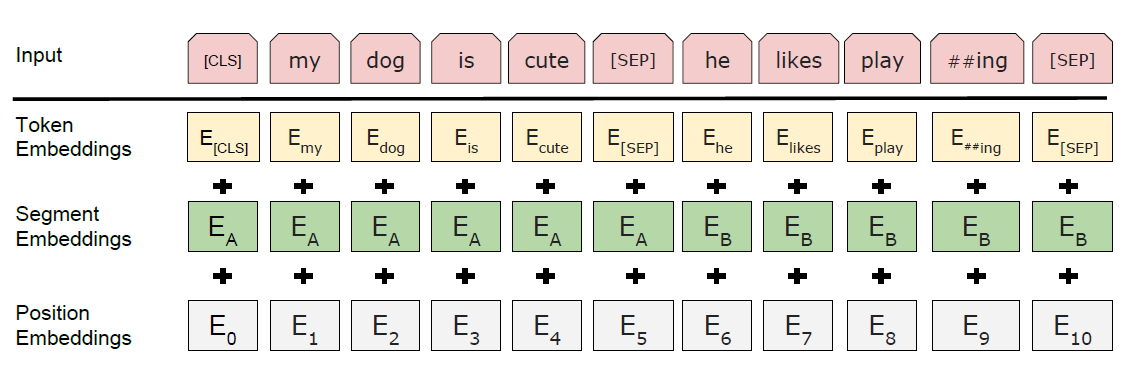

3. [Paper Review] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

We introduce a new language representation model called BERT, which stands for Bidirectional Encoder Representations from Transformers. Unlike recent

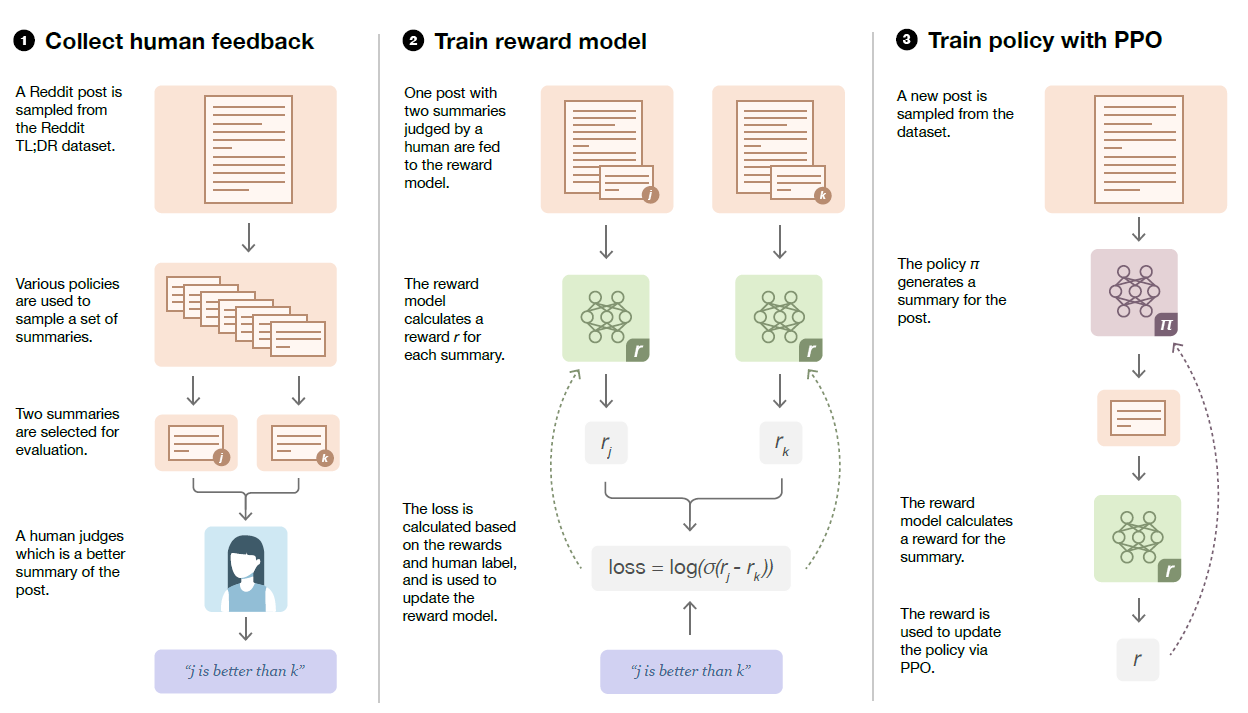

4. [Paper Review] Learning to summarize from human feedback

As language models become more powerful, training and evaluation are increasingly bottlenecked by the data and metrics used for a particular task. For

5. [Paper Review] Attention is all you need

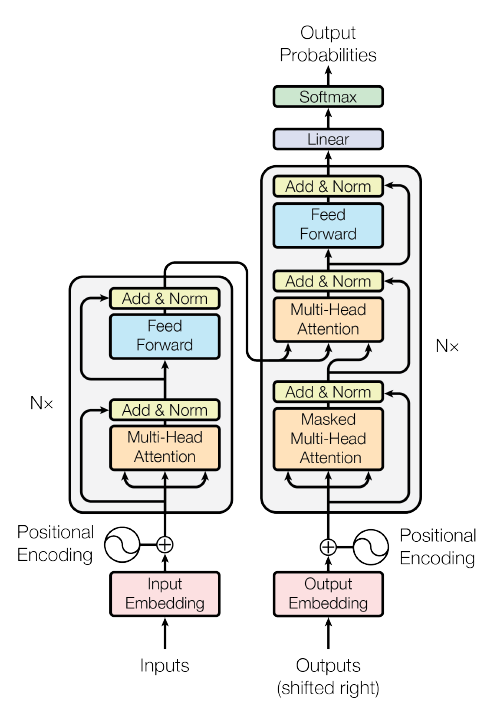

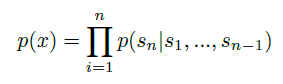

The dominant sequence transduction models are based on complex recurrent or convolutional neural networks that include an encoder and a decoder. The be

6. [Paper Review] Improving Language Understanding by Generative Pre-Training

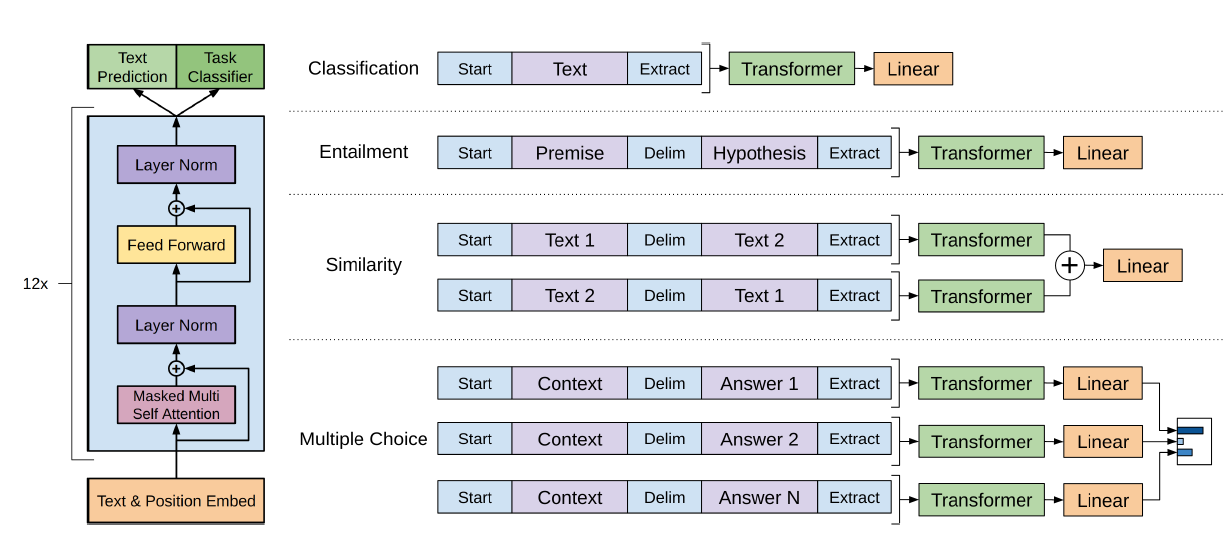

Natural language understanding comprises a wide range of diverse tasks such as textual entailment, question answering, semantic similarity assessment,

7. [Paper Review] An Improved Baseline for Sentence-level Relation Extraction

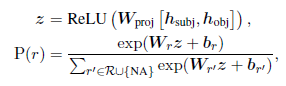

Sentence-level relation extraction (RE) aims at identifying the relationship between two entities in a sentence. Many efforts have been devoted to thi

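8. [Paper Review] Language Models are Unsupervised Multitask Learners

I chose this paper to trace the evolution of GPT, the starting point of large generative models. It demonstrated that a language model can perform well on downstream tasks across many domains in a zero-shot setting alone. Research up to this point has focused on developing a single model specialized for a single task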

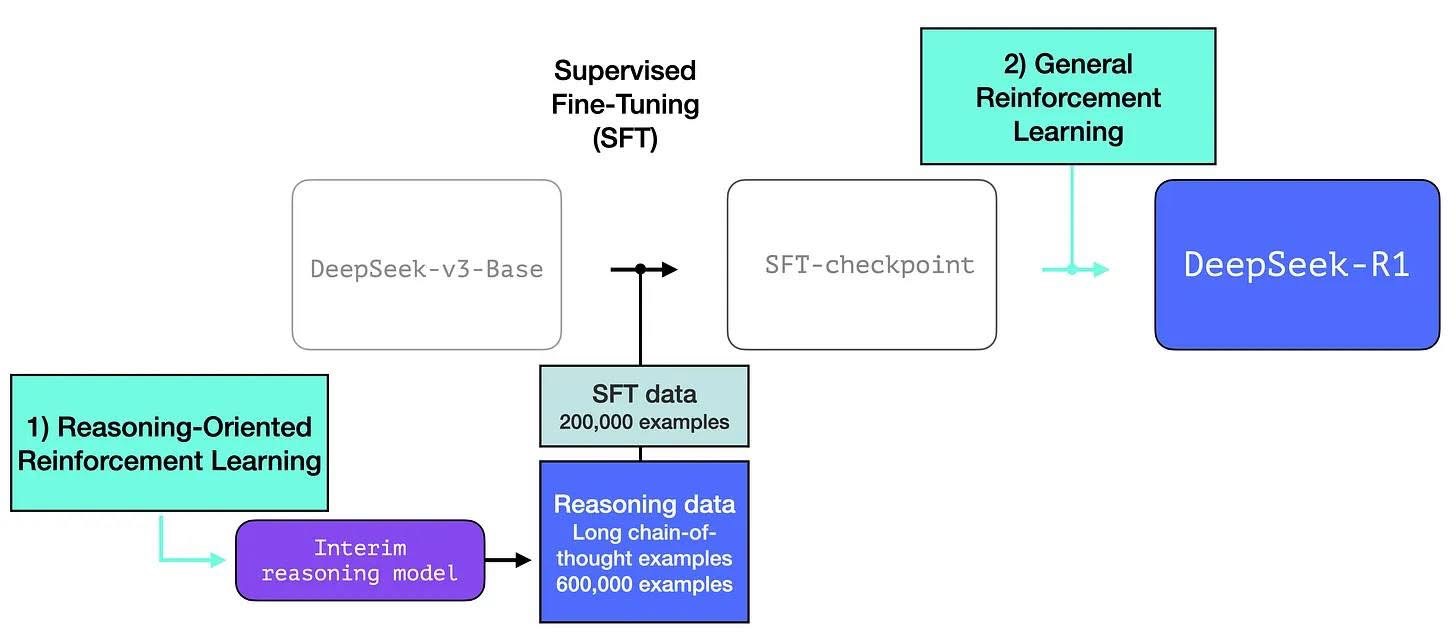

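9. [Paper Review] DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

Over the past few years, post-training has emerged as an important component of LLMs: accuracy on reasoning tasks, alignment with social values, and adaptation to user preferences, all while requiring relatively minimal compute compared with pre-training. First introduced by the OpenAI o1 series: the length of the CoT process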

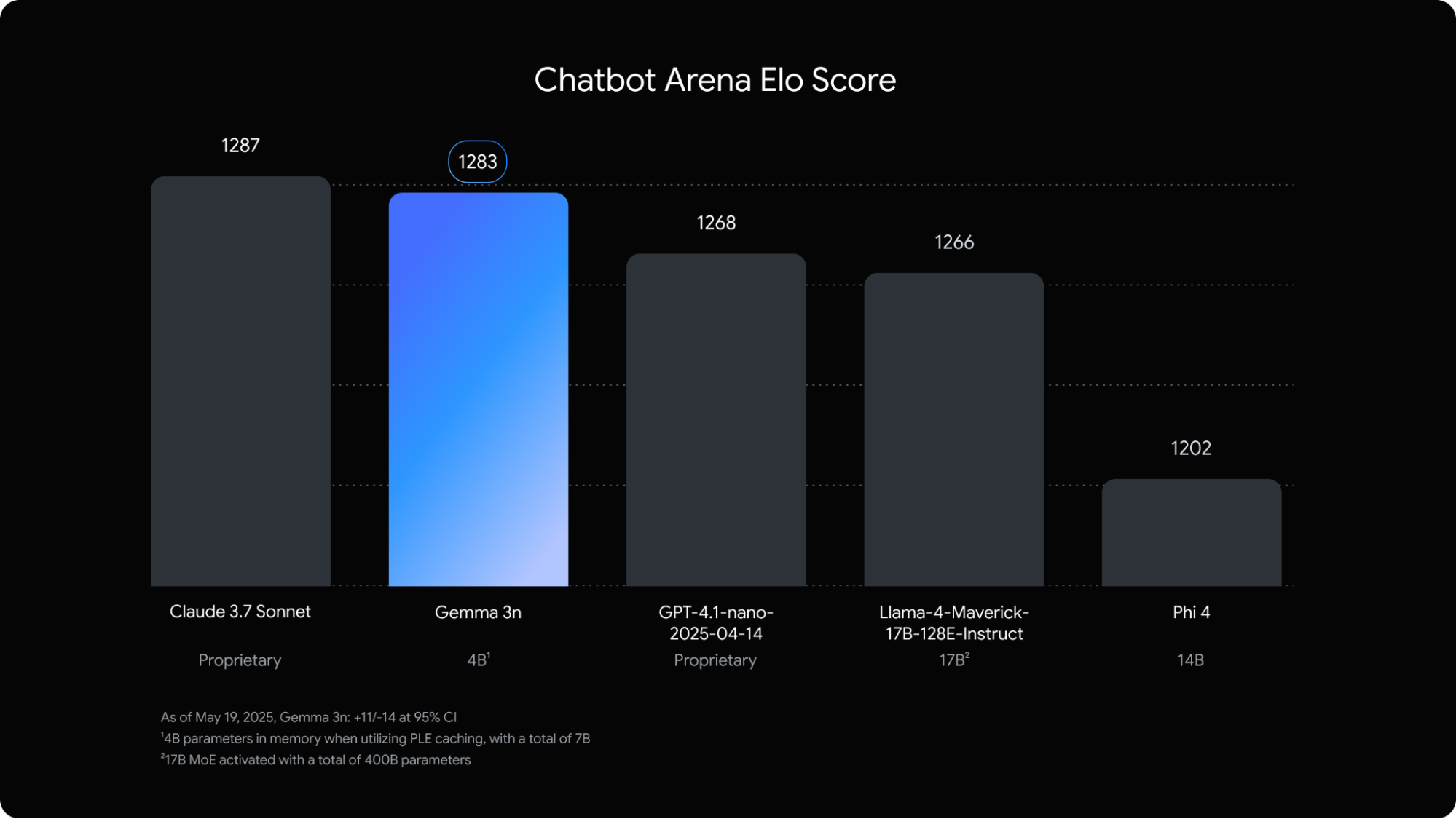

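10. Google I/O 2025 - Gemma 3n

An on-device-oriented Gemma model newly unveiled at Google I/O 2025. Focused on privacy and offline execution. Supports multimodal processing of text, audio, images, video, and more (currently text and images only). Per-Layer Embeddings and the MatFormer model architecture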