[Coursera | DL Specialization | 2] Improving Deep Neural Networks: Hyperparameter Tuning, Regularization and Optimization

1.Lecture Information

https://www.coursera.org/learn/deep-neural-network

2.Week 1 | Practical Aspects of Deep Learning | Setting up your Machine Learning Application

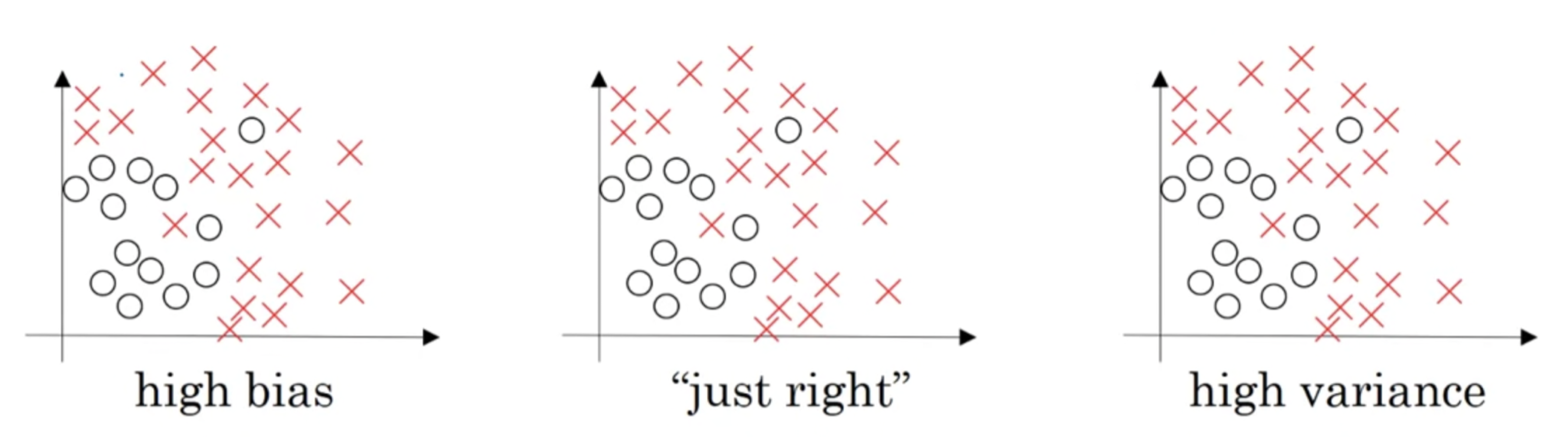

When training a neural network, you have to make a lot of decisions, such as:
- the number of layers
- the number of hidden units
- learning rates
- activation functions
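Setting up a machine learning application usually starts with dividing the data into train, dev, and test sets, so that each of the decisions above can be evaluated on data the model was not trained on. The sketch below assumes the examples fit in a plain Python list and uses a 98/1/1 split, which is an assumption that suits very large datasets. `split_dataset` is a hypothetical helper name.

```python
import random

def split_dataset(examples, dev_frac=0.01, test_frac=0.01, seed=0):
    """Shuffle examples and split them into train/dev/test lists.

    The 98/1/1 default is an assumption for very large datasets;
    smaller datasets typically reserve larger dev/test fractions.
    """
    data = list(examples)
    random.Random(seed).shuffle(data)  # shuffle once so all three splits share one distribution
    n_dev = int(len(data) * dev_frac)
    n_test = int(len(data) * test_frac)
    dev = data[:n_dev]
    test = data[n_dev:n_dev + n_test]
    train = data[n_dev + n_test:]      # everything left over is used for training
    return train, dev, test

train, dev, test = split_dataset(range(1_000_000))
print(len(train), len(dev), len(test))  # 980000 10000 10000
```

The dev set is what you compare hyperparameter choices on; the test set is kept aside for a final, unbiased estimate.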

3.Week 2 | Optimization Algorithms

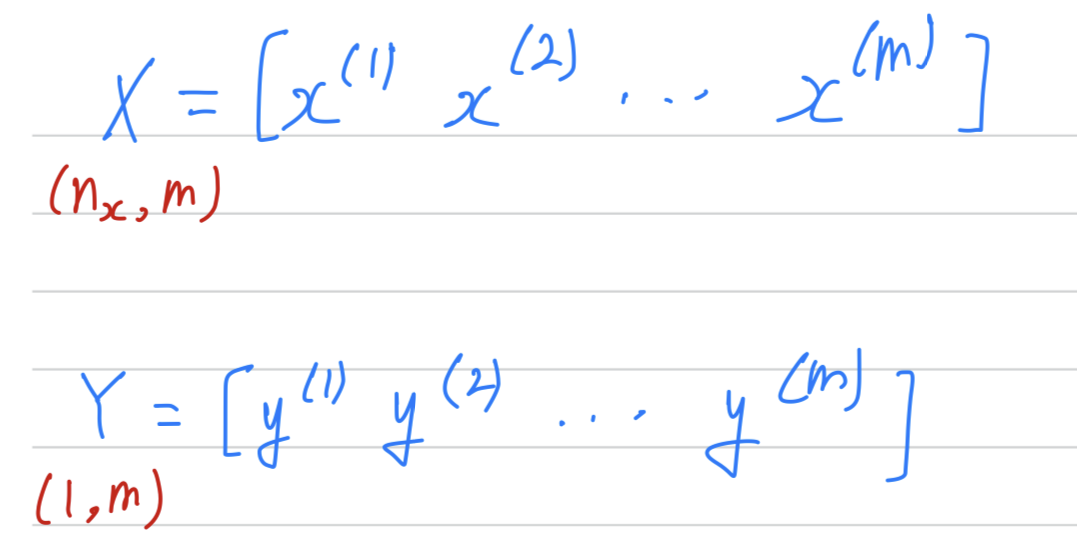

You've learned previously that vectorization allows you to efficiently compute on all $m$ examples, which allows you to process your whole training set.
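Mini-batch gradient descent builds on this: instead of taking one step per pass over all $m$ examples, the shuffled training set is cut into smaller batches and one gradient step is taken per batch. A minimal pure-Python sketch of the shuffle-and-partition step, assuming `X` and `Y` are lists with one entry per example; `random_mini_batches` and the batch size of 64 are illustrative choices.

```python
import math
import random

def random_mini_batches(X, Y, batch_size=64, seed=0):
    """Shuffle (X, Y) together and cut them into mini-batches.

    The last mini-batch is smaller when m is not a multiple of batch_size.
    """
    m = len(X)
    perm = list(range(m))
    random.Random(seed).shuffle(perm)   # one permutation keeps each x paired with its y
    X_shuf = [X[i] for i in perm]
    Y_shuf = [Y[i] for i in perm]
    batches = []
    for k in range(math.ceil(m / batch_size)):
        start = k * batch_size
        end = start + batch_size        # slicing past the end just yields a short last batch
        batches.append((X_shuf[start:end], Y_shuf[start:end]))
    return batches

X = [[float(i), float(i) ** 2] for i in range(148)]
Y = [i % 2 for i in range(148)]
batches = random_mini_batches(X, Y, batch_size=64)
print([len(bx) for bx, _ in batches])  # [64, 64, 20]
```

One epoch is then a loop over `batches`, running forward and backward propagation on each `(bx, by)` pair and updating the parameters after every batch.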

4.Week 3 | Hyperparameter Tuning

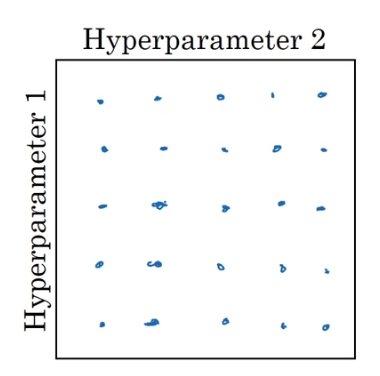

Tuning Process

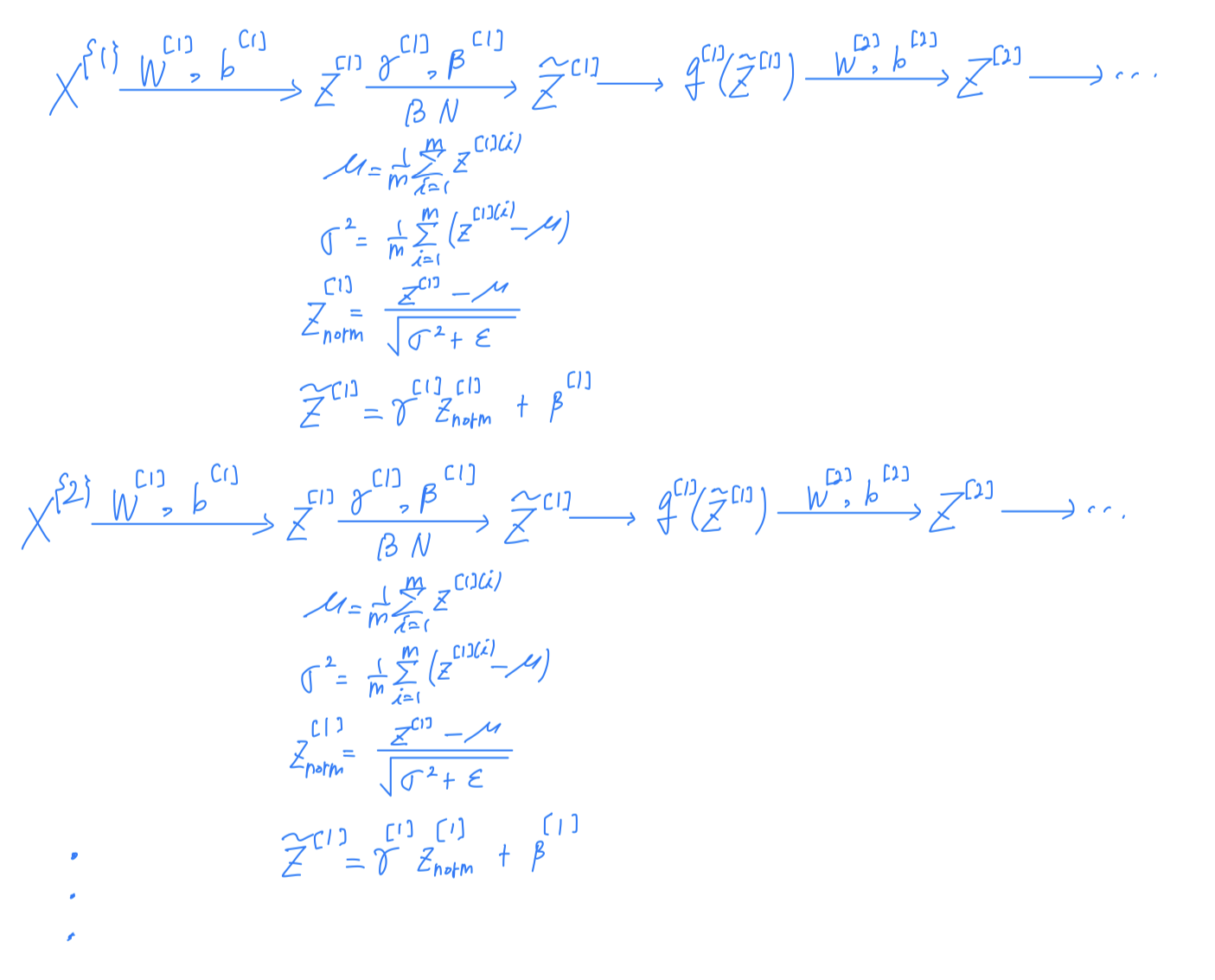
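A common way to run the tuning process is to sample hyperparameter settings at random rather than on a grid, and to sample quantities like the learning rate $\alpha$ and the momentum term $1 - \beta$ on a log scale so that each order of magnitude gets equal attention. A minimal sketch; the ranges and the helper name `sample_hyperparameters` are assumptions for illustration, not recommendations.

```python
import random

def sample_hyperparameters(n_trials, seed=0):
    """Draw n_trials random hyperparameter settings (random search, not a grid).

    All ranges below are illustrative assumptions.
    """
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        r = rng.uniform(-4, 0)             # exponent sampled uniformly ...
        learning_rate = 10 ** r            # ... so alpha is log-uniform in [1e-4, 1]
        r_beta = rng.uniform(-3, -1)       # 1 - beta log-uniform in [1e-3, 1e-1]
        beta = 1 - 10 ** r_beta            # so beta lies in [0.9, 0.999]
        hidden_units = rng.randint(50, 100)  # a linear scale is reasonable here
        trials.append({"learning_rate": learning_rate,
                       "beta": beta,
                       "hidden_units": hidden_units})
    return trials

for t in sample_hyperparameters(3):
    print(t)
```

Each sampled setting would then be trained and compared on the dev set; a coarse-to-fine search repeats the sampling in a smaller region around the best settings.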

5.Week 3 | Batch Normalization

Batch normalization makes your hyperparameter search problem much easier and makes your neural network much more robust. It will also enable you to…
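For a single hidden unit, batch norm normalizes the pre-activations $z^{(i)}$ over the mini-batch and then rescales them with learnable parameters $\gamma$ and $\beta$: $z_{\text{norm}}^{(i)} = (z^{(i)} - \mu)/\sqrt{\sigma^2 + \varepsilon}$ and $\tilde{z}^{(i)} = \gamma z_{\text{norm}}^{(i)} + \beta$. A minimal training-time sketch, assuming a plain list holds one unit's values across the batch; the test-time use of running averages for $\mu$ and $\sigma^2$ is not shown.

```python
import math

def batch_norm_forward(z, gamma, beta, eps=1e-8):
    """Training-time batch norm for ONE hidden unit over a mini-batch.

    z holds that unit's pre-activations z^(1), ..., z^(m) for the batch;
    gamma and beta are the unit's learnable scale and shift.
    """
    m = len(z)
    mu = sum(z) / m                                   # batch mean
    var = sum((zi - mu) ** 2 for zi in z) / m         # batch variance
    z_norm = [(zi - mu) / math.sqrt(var + eps) for zi in z]  # mean 0, variance ~1
    return [gamma * zn + beta for zn in z_norm]       # z_tilde = gamma * z_norm + beta

z = [1.0, 2.0, 3.0, 4.0]
print(batch_norm_forward(z, gamma=1.0, beta=0.0))     # standardized values
print(batch_norm_forward(z, gamma=math.sqrt(1.25 + 1e-8), beta=2.5))  # ~recovers z
```

For this batch $\mu = 2.5$ and $\sigma^2 = 1.25$, so choosing $\gamma = \sqrt{\sigma^2 + \varepsilon}$ and $\beta = \mu$ returns the original $z$. Because $\gamma$ and $\beta$ are learned, the network can undo the normalization whenever that helps.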

6.Week 3 | Multi-class Classification

So far, the classification examples we've talked about have used binary classification, where you had two possible labels, 0 or 1. What if we have multiple possible classes?
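With $C$ classes, the output layer produces a vector $z^{[L]}$ of $C$ scores, and the softmax activation turns it into probabilities: $\hat{y}_j = e^{z_j} / \sum_{k=1}^{C} e^{z_k}$. A minimal sketch; the example scores are arbitrary, and subtracting the maximum score first is a standard numerical-stability trick that leaves the result unchanged.

```python
import math

def softmax(z):
    """Map a list of C scores z^[L] to C probabilities that sum to 1."""
    z_max = max(z)                          # shift by the max so exp() cannot overflow
    t = [math.exp(zj - z_max) for zj in z]  # t_j = e^{z_j - max(z)}
    total = sum(t)
    return [tj / total for tj in t]

z = [5.0, 2.0, -1.0, 3.0]                   # arbitrary scores for C = 4 classes
y_hat = softmax(z)
print([round(p, 3) for p in y_hat])         # [0.842, 0.042, 0.002, 0.114]
print(y_hat.index(max(y_hat)))              # predicted class: 0
```

With $C = 2$, softmax reduces to logistic regression, which is why it is described as the generalization of the binary case.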

7.Week 3 | Introduction to Programming Frameworks

Today, there are many deep learning frameworks that make it easy for you to implement neural networks. So rather than too strongly endorsing any of these…

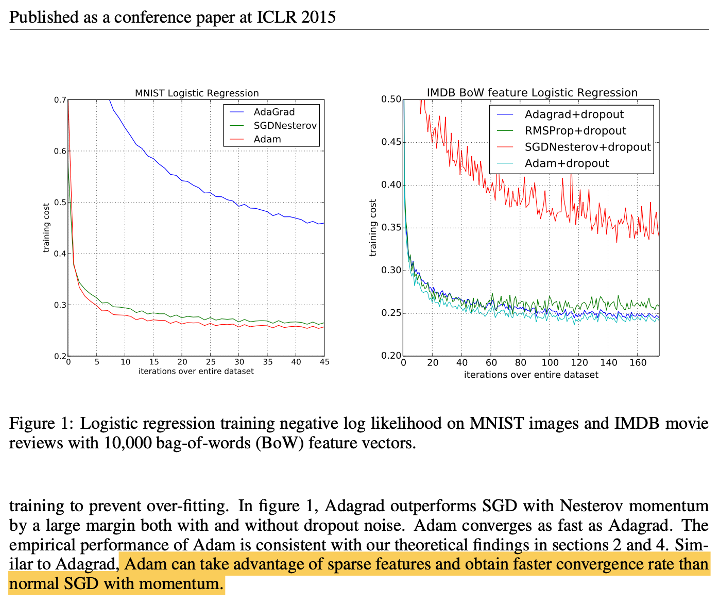

8.Week 3 | Seminar Discussion

The Adam paper reports empirical results showing Adam optimization converging much faster than momentum. However, because this is an empirical result, no one can say for sure that it holds in general. Usually, momentum is used as the default…
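To make the comparison in this discussion concrete, here are the two update rules side by side for a scalar parameter. Adam combines a momentum-style average of gradients with an RMSprop-style average of squared gradients and bias-corrects both. The defaults $\beta_1 = 0.9$, $\beta_2 = 0.999$, $\varepsilon = 10^{-8}$ follow the Adam paper; the learning rate of 0.01 and the toy objective $J(w) = (w - 3)^2$ are assumptions. This only shows the mechanics; it is not a benchmark and does not settle which optimizer converges faster in practice.

```python
import math

def momentum_step(w, dw, v, lr=0.01, beta=0.9):
    """One gradient-descent-with-momentum update for a scalar parameter w."""
    v = beta * v + (1 - beta) * dw            # exponentially weighted average of gradients
    return w - lr * v, v

def adam_step(w, dw, m, s, t, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter w; t is the step count, starting at 1."""
    m = beta1 * m + (1 - beta1) * dw          # first moment (momentum-like)
    s = beta2 * s + (1 - beta2) * dw ** 2     # second moment (RMSprop-like)
    m_hat = m / (1 - beta1 ** t)              # bias correction for the zero initialization
    s_hat = s / (1 - beta2 ** t)
    return w - lr * m_hat / (math.sqrt(s_hat) + eps), m, s

# Minimize J(w) = (w - 3)^2, whose gradient is 2 * (w - 3), with both rules.
w_mom, v = 0.0, 0.0
w_adam, m, s = 0.0, 0.0, 0.0
for t in range(1, 2001):
    w_mom, v = momentum_step(w_mom, 2 * (w_mom - 3), v)
    w_adam, m, s = adam_step(w_adam, 2 * (w_adam - 3), m, s, t)
print(w_mom, w_adam)
```

Both runs approach the minimizer $w = 3$ on this toy problem; how their speeds compare on real networks depends on the problem and the tuned hyperparameters, which is the point raised in the discussion.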