5-1. Introduction

Learning objectives

- Understand the layers that make up a deep learning model (network)

- Practice building deep learning models

Contents

-

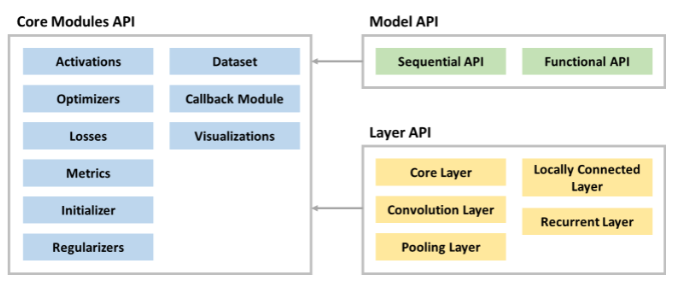

딥러닝 구조

- Core Modules API

- Model API

- Layer API

Layers

- Input object

- Dense layer

- Activation layer

- Flatten layer

Deep learning models

- Sequential API

- Functional API

- Subclassing API

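5-2. Deep learning structure and layers

Deep learning structure

Model structure

- Built with the Model API and the Layer API

- Required modules: made available by calling the Modules API

- Composed of multiple layers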

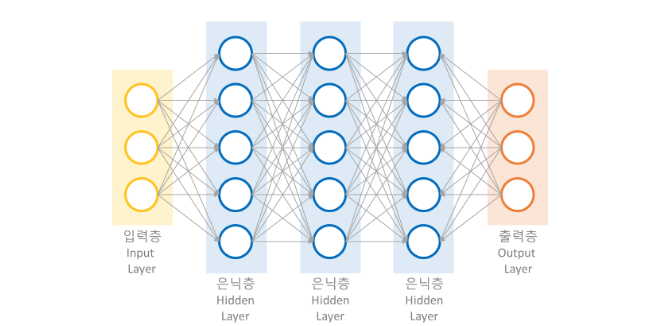

Layers

- Import TensorFlow, keras, and layers (the Keras layers are used)

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import layers

- A deep learning model is composed of multiple layers

- Layer: a data-processing module that takes one or more tensors as input and produces one or more tensors as output

- Input layer, hidden layer, output layer

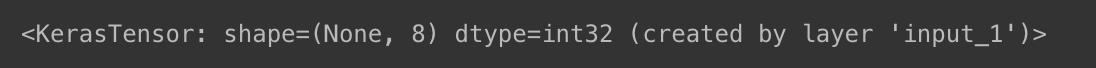

Input object

- Used to define the input of a deep learning model

- Input data shape: shape

- Data type: dtype

keras.Input(shape=(8,), dtype=tf.int32)

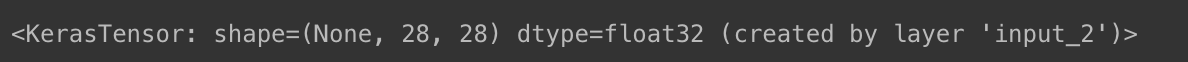

keras.Input(shape=(28, 28), dtype=tf.float32)

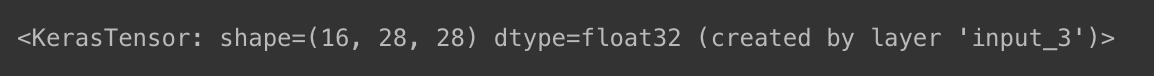

- Specify the batch size: batch_size

keras.Input(shape=(28, 28), dtype=tf.float32, batch_size=16)

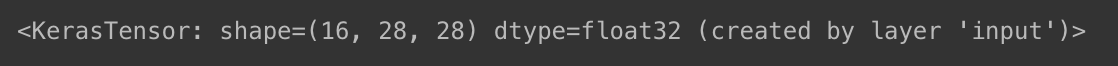

- Specify a name: name

keras.Input(shape=(28, 28), dtype=tf.float32, batch_size=16, name='input')
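The effect of `shape` and `batch_size` can be checked by inspecting the shape of the returned symbolic tensor. This is a minimal sketch, assuming a TensorFlow 2.x install; the variable names `x` and `y` are only illustrative, and the dtypes are passed as strings rather than `tf.int32` / `tf.float32`.

```python
from tensorflow import keras

# Without batch_size, the batch dimension is left unspecified (None).
x = keras.Input(shape=(8,), dtype="int32")
print(tuple(x.shape))

# With batch_size=16, the batch dimension is fixed to 16.
y = keras.Input(shape=(28, 28), dtype="float32", batch_size=16, name="input")
print(tuple(y.shape))
```

The batch dimension is always prepended to the `shape` argument, so `shape` itself describes a single sample.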

Dense layer

- Fully-connected layer

- Created by specifying the number of nodes (units)

- https://keras.io/api/layers/core_layers/dense/

layers.Dense(10)

- Distinguish layers from one another: name

layers.Dense(10, name='layer1')

- An activation function can be specified at creation: activation

layers.Dense(10, activation='softmax')

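- The number of nodes, the activation function, and the name can all be specified together

# A name without spaces avoids invalid-scope-name errors in some TensorFlow versions

layers.Dense(10, activation='relu', name='dense_layer')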
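What a Dense layer computes can be sketched in plain NumPy: the output is `activation(inputs @ kernel + bias)`. The snippet below is an illustration under assumptions, not Keras code: `dense_forward` is a hypothetical helper, and the kernel values are random placeholders rather than the values Keras would initialize.

```python
import numpy as np

def dense_forward(inputs, kernel, bias):
    """Forward pass of a fully-connected layer with ReLU: relu(inputs @ kernel + bias)."""
    return np.maximum(inputs @ kernel + bias, 0.0)

rng = np.random.default_rng(0)
inputs = rng.uniform(size=(5, 2))            # 5 samples, 2 features each
kernel = rng.uniform(-1, 1, size=(2, 10))    # hypothetical weights: 2 inputs -> 10 nodes
bias = np.zeros(10)                          # one bias per node

outputs = dense_forward(inputs, kernel, bias)
print(outputs.shape)
```

The kernel shape `(2, 10)` follows from the input feature count and the number of nodes, which is why a Dense layer only needs the node count at creation time.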

- Generate random values and feed them to a newly created layer as input -> inspect the layer's weights and output values

inputs = tf.random.uniform(shape=(5, 2))

print(inputs)

layer = layers.Dense(10, activation='relu')

outputs = layer(inputs)

print(layer.weights)

print(layer.bias)

print(outputs)

tf.Tensor(

[[0.80537975 0.92845726]

[0.533079 0.10212851]

[0.57029676 0.18509209]

[0.49353302 0.30987203]

[0.54413784 0.7344079 ]], shape=(5, 2), dtype=float32)

[<tf.Variable 'dense_2/kernel:0' shape=(2, 10) dtype=float32, numpy=

array([[-0.57165575, -0.68205637, 0.00455189, -0.58416474, 0.42667395,

-0.16340786, -0.2905978 , -0.6576975 , 0.2588241 , -0.52066463],

[-0.18635076, -0.20929787, 0.42931348, 0.5086815 , 0.67551774,

0.18558776, 0.18993276, 0.25130683, 0.02367187, 0.37568623]],

dtype=float32)>, <tf.Variable 'dense_2/bias:0' shape=(10,) dtype=float32, numpy=array([0., 0., 0., 0., 0., 0., 0., 0., 0., 0.], dtype=float32)>]

<tf.Variable 'dense_2/bias:0' shape=(10,) dtype=float32, numpy=array([0., 0., 0., 0., 0., 0., 0., 0., 0., 0.], dtype=float32)>

tf.Tensor(

[[0. 0. 0.40226522 0.00181456 0.9708239 0.04070492

0. 0. 0.23043002 0. ]

[0. 0. 0.04627166 0. 0.29644054 0.

0. 0. 0.14039129 0. ]

[0. 0. 0.08205846 0. 0.36836377 0.

0. 0. 0.15198803 0. ]

[0. 0. 0.13527875 0. 0.41990173 0.

0. 0. 0.13507348 0. ]

[0. 0. 0.31776807 0.05571355 0.728275 0.04738072

0. 0. 0.1582208 0. ]], shape=(5, 10), dtype=float32)

Activation layer

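- Activation function

- Transforms the previous layer's output and passes it on to the next layer

- Linear activation functions and non-linear activation functions

- Non-linear activation functions are used to give the model more expressive power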

- https://keras.io/api/layers/activations/

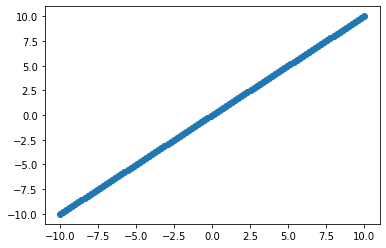

- Use 100 evenly spaced values between -10 and 10 as the input data

import numpy as np

import matplotlib.pyplot as plt

input = np.linspace(-10, 10, 100)

x = np.linspace(-10, 10, 100)

plt.scatter(x, input)

plt.show()

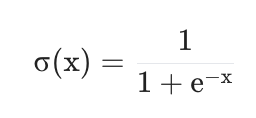

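- Sigmoid function

- Used in models that predict probabilities

- Output: between 0 and 1

- Non-linear overall, but approximately linear for inputs near 0

- Problem: the output saturates as it approaches 0 or 1

- However small the input, the output never reaches 0

- However large the input, the output never reaches 1

- In the saturated regions the gradient approaches 0, so weight updates become difficult and training takes a long time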

layer = layers.Activation('sigmoid')

output = layer(input)

plt.scatter(x, output)

plt.show()

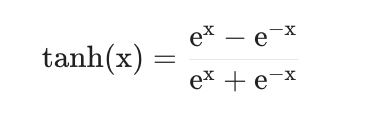
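The saturation problem can be made concrete by evaluating the sigmoid and its derivative at a few points. This is a minimal NumPy sketch; `sigmoid` and `sigmoid_grad` are illustrative helper names, not part of Keras.

```python
import numpy as np

def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_grad(x):
    """Derivative of the sigmoid: s(x) * (1 - s(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)

# The gradient peaks at x = 0 and shrinks toward 0 at both extremes.
for v in (-10.0, 0.0, 10.0):
    print(v, sigmoid(v), sigmoid_grad(v))
```

The derivative is at most 0.25 (at an input of 0) and is close to 0 at inputs of -10 and 10, which is why gradients flowing through saturated sigmoid nodes barely update the weights.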

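- Hyperbolic tangent (tanh)

- Zero-centered, with values between -1 and 1

- Typically trains faster than sigmoid, but still saturates as the output approaches -1 or 1

- Also non-linear overall, but approximately linear for inputs near 0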

layer = layers.Activation('tanh')

output = layer(input)

plt.scatter(x, output)

plt.show()

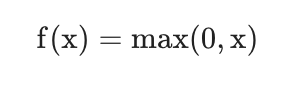

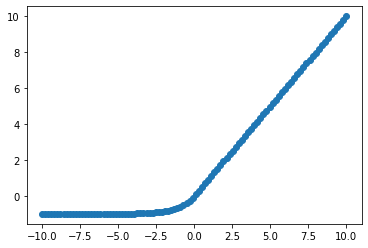
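The zero-centered range and the remaining saturation of tanh can be checked on the same 100 input values. This is a small NumPy sketch; the derivative formula `1 - tanh(x)**2` is the standard one, and the variable names are only illustrative.

```python
import numpy as np

x = np.linspace(-10, 10, 100)
y = np.tanh(x)
grad = 1.0 - y ** 2   # derivative of tanh

print(y.min(), y.max())    # stays within [-1, 1]
print(y.mean())            # approximately 0 for inputs symmetric around 0
print(grad[0], grad[-1])   # nearly 0 at both ends: saturation
```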

- ReLU

- Output: 0 to ∞

- Enables fast training

- The output is not zero-centered

- With a large learning rate, nodes using ReLU can get stuck outputting only 0 (the "dying ReLU" problem)

layer = layers.Activation('relu')

output = layer(input)

plt.scatter(x, output)

plt.show()

- Leaky ReLU

- Improves on the ReLU problem of nodes outputting only 0 -> negative inputs produce a small negative output instead

layer = layers.LeakyReLU()

output = layer(input)

plt.scatter(x, output)

plt.show()

- ELU

- Exponential Linear Unit

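- Addresses two problems of ReLU

- The output is not zero-centered

- Nodes can end up outputting only 0

- For inputs of 0 or below: applies an exponential operation (computationally more expensive)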

layer = layers.ELU()

output = layer(input)

plt.scatter(x, output)

plt.show()
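The three ReLU variants differ only in how they treat negative inputs, which the following NumPy sketch makes explicit. The helper names and the `slope=0.01` and `alpha=1.0` values are illustrative assumptions and are not claimed to match the Keras layer defaults.

```python
import numpy as np

def relu(x):
    """max(x, 0): negative inputs become exactly 0."""
    return np.maximum(x, 0.0)

def leaky_relu(x, slope=0.01):
    """Negative inputs are scaled by a small slope instead of being zeroed."""
    return np.where(x > 0, x, slope * x)

def elu(x, alpha=1.0):
    """Negative inputs follow alpha * (exp(x) - 1), which approaches -alpha."""
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0.0)))

x = np.array([-2.0, 0.0, 3.0])
print(relu(x))
print(leaky_relu(x))
print(elu(x))
```

For positive inputs all three return the input unchanged; only ELU needs an exponential, which is the extra computational cost mentioned above.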

Flatten layer

- Flattens the data into a 1D vector, leaving the batch dimension (number of samples) untouched

inputs = keras.Input(shape=(28, 28, 1))

# input_shape is not needed here; the shape comes from the Input object

layer = layers.Flatten()(inputs)

print(layer.shape)

Q. If an input of shape (224, 224, 1) is passed to a Flatten layer, what is the size of the resulting 1D data?

A. 224 × 224 × 1 = 50176

inputs = keras.Input(shape=(224, 224, 1))

layer = layers.Flatten()(inputs)

print(layer.shape)
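The same arithmetic can be reproduced with a NumPy reshape that keeps the batch axis and collapses everything else. This is only a sketch of the idea behind Flatten; the batch size of 4 is an arbitrary assumption.

```python
import numpy as np

batch = np.zeros((4, 224, 224, 1), dtype=np.float32)   # 4 samples of shape (224, 224, 1)

# Keep the batch axis, merge all remaining axes into one.
flat = batch.reshape(batch.shape[0], -1)
print(flat.shape)
```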

5-3. 딥러닝 모델

라이브러리 import

- models, utils import

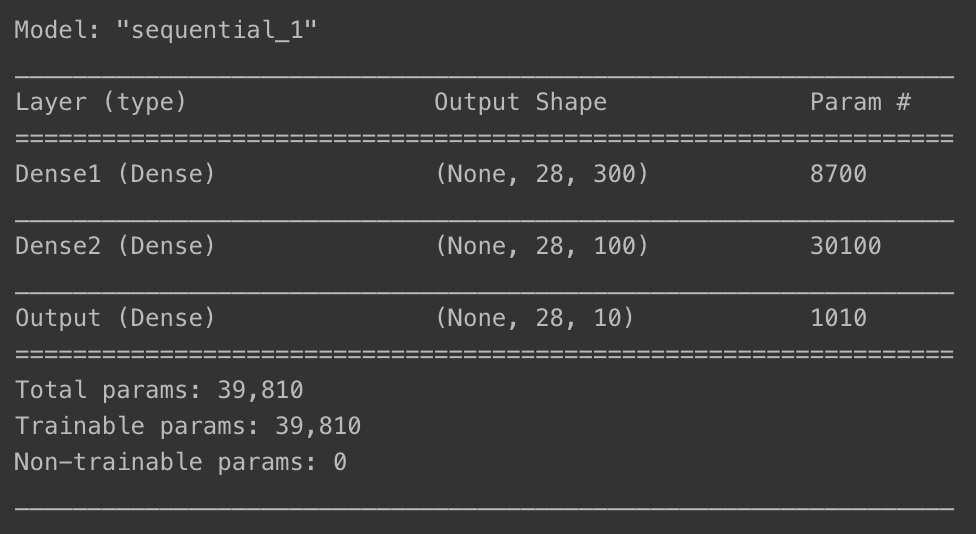

from tensorflow.keras import models, utilsSequential API

- 모델이 순차 구조 진행 시 사용

- 다중 입력 및 다중 출력시 사용 불가

- 방법 1) Sequential 객체 생성 후,add() 이용

model = models.Sequential()

model.add(layers.Input(shape=(28, 28)))

model.add(layers.Dense(300, activation='relu'))

model.add(layers.Dense(100, activation='relu'))

model.add(layers.Dense(10, activation='softmax'))

model.summary()

- Visualization: plot_model() (requires pydot and Graphviz to be installed)

utils.plot_model(model)

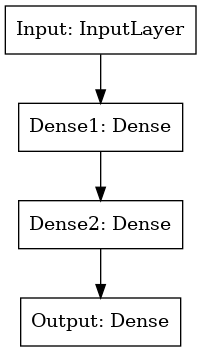

- Method 2) Pass all the layers to Sequential at once

model = models.Sequential([layers.Input(shape=(28, 28), name='Input'),

layers.Dense(300, activation='relu', name='Dense1'),

layers.Dense(100, activation='relu', name='Dense2'),

layers.Dense(10, activation='softmax', name='Output')])

model.summary()

utils.plot_model(model)

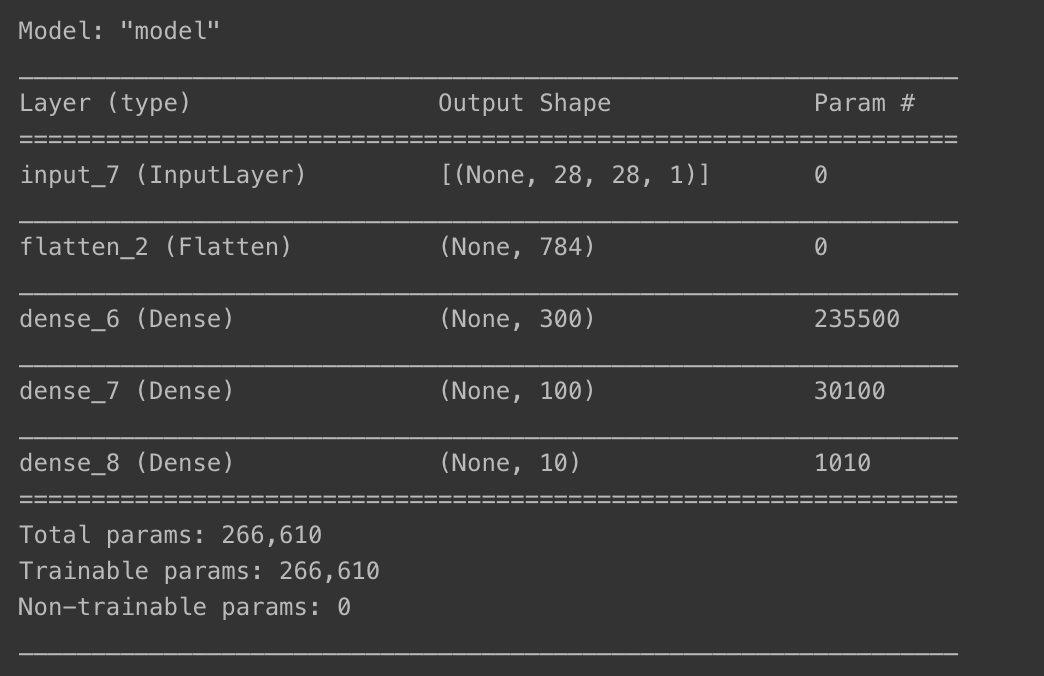
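The parameter counts that `model.summary()` reports for these Dense layers can be worked out by hand: each Dense layer has `input_dim * units` weights plus `units` biases. The sketch below is plain Python with a hypothetical helper `dense_params`; it assumes, as in the models above, that Dense acts on the last axis of the (28, 28) input, so the first layer sees 28 input features.

```python
def dense_params(input_dim, units):
    """Number of trainable parameters in a Dense layer: weights plus biases."""
    return input_dim * units + units

layer_units = [300, 100, 10]   # the three Dense layers in the Sequential model
input_dim = 28                 # last axis of the (28, 28) input

counts = []
for units in layer_units:
    counts.append(dense_params(input_dim, units))
    input_dim = units          # the next layer's input is this layer's output

print(counts, sum(counts))
```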

Functional API

- Supports more complex and flexible model architectures

- Can also handle multiple inputs and outputs

inputs = layers.Input(shape=(28, 28, 1))

x = layers.Flatten()(inputs)

x = layers.Dense(300, activation='relu')(x)

x = layers.Dense(100, activation='relu')(x)

x = layers.Dense(10, activation='softmax')(x)

model = models.Model(inputs=inputs, outputs=x)

model.summary()

utils.plot_model(model)

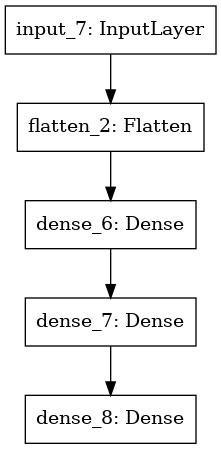

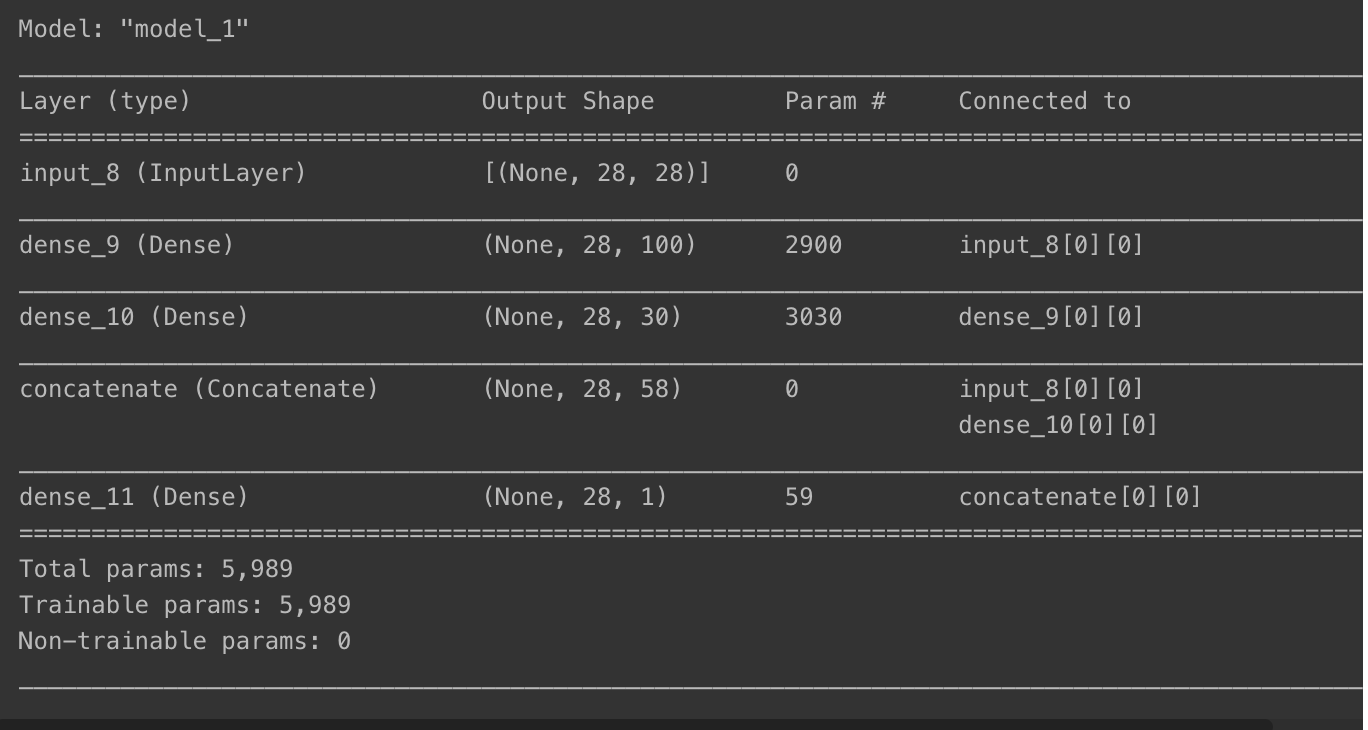

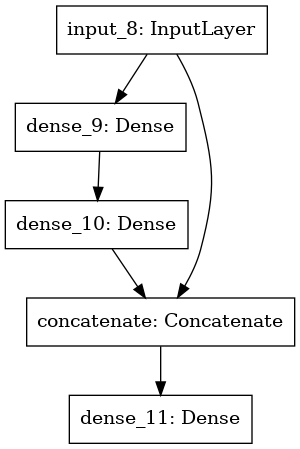

- A single Input object can feed multiple layers

# Use Concatenate() to combine the Dense layer output with the Input

inputs = keras.Input(shape=(28, 28))

hidden1 = layers.Dense(100, activation='relu')(inputs)

hidden2 = layers.Dense(30, activation='relu')(hidden1)

concat = layers.Concatenate()([inputs, hidden2])

output = layers.Dense(1)(concat)

model = models.Model(inputs=[inputs], outputs=[output])

model.summary()

utils.plot_model(model)

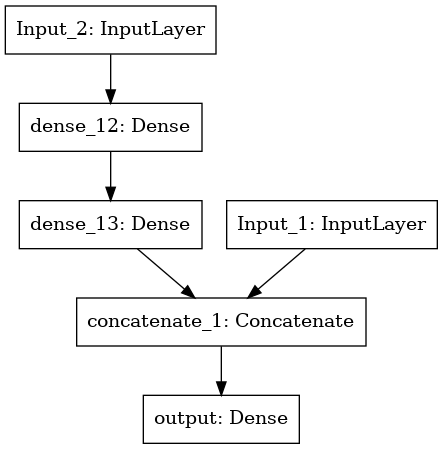
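The shape produced by the Concatenate step can be reasoned about with NumPy, since concatenation along the last axis simply adds the feature counts. This is a sketch with placeholder arrays; the batch size of 2 is an arbitrary assumption, and the last axis is used because that is the default axis of Keras' Concatenate layer.

```python
import numpy as np

batch = 2
inputs = np.zeros((batch, 28, 28))    # stands in for the Input tensor
hidden2 = np.zeros((batch, 28, 30))   # stands in for the output of Dense(30)

# All axes except the last must match; the last axes are added together.
concat = np.concatenate([inputs, hidden2], axis=-1)
print(concat.shape)
```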

- Multiple Input objects can also be used

input_1 = keras.Input(shape=(10, 10), name='Input_1')

input_2 = keras.Input(shape=(10, 28), name='Input_2')

hidden1 = layers.Dense(100, activation='relu')(input_2)

hidden2 = layers.Dense(10, activation='relu')(hidden1)

concat = layers.Concatenate()([input_1, hidden2])

output = layers.Dense(1, activation='sigmoid', name='output')(concat)

model = models.Model(inputs=[input_1, input_2], outputs=[output])

model.summary()

utils.plot_model(model)

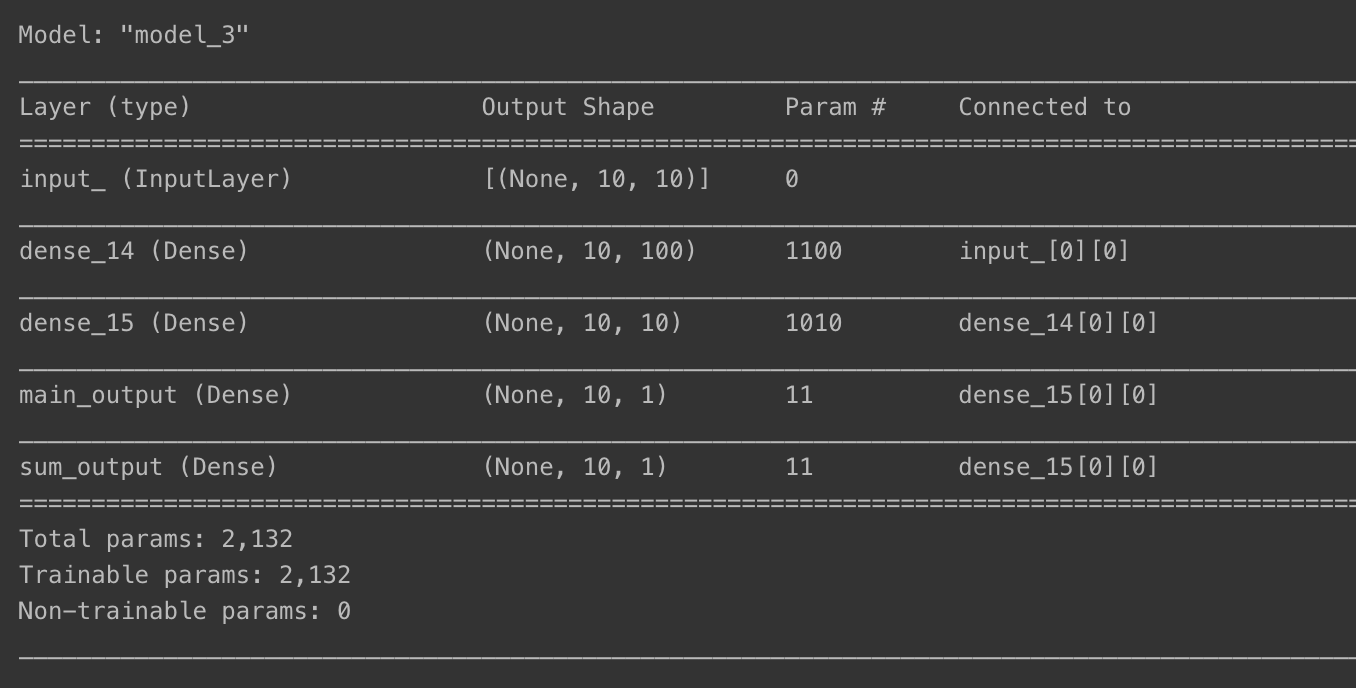

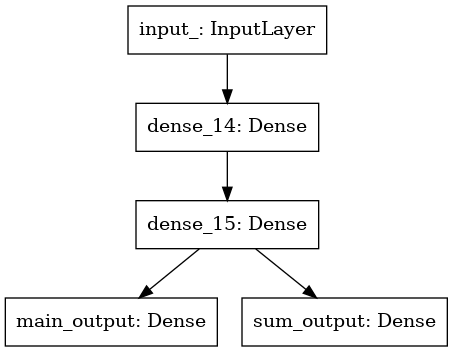

- The output can also be split into several outputs

input_ = keras.Input(shape=(10, 10), name='input_')

hidden1 = layers.Dense(100, activation='relu')(input_)

hidden2 = layers.Dense(10, activation='relu')(hidden1)

output = layers.Dense(1, activation='sigmoid', name='main_output')(hidden2)

sub_out = layers.Dense(1, name='sub_output')(hidden2)

model = models.Model(inputs=[input_], outputs=[output, sub_out])

model.summary()

utils.plot_model(model)

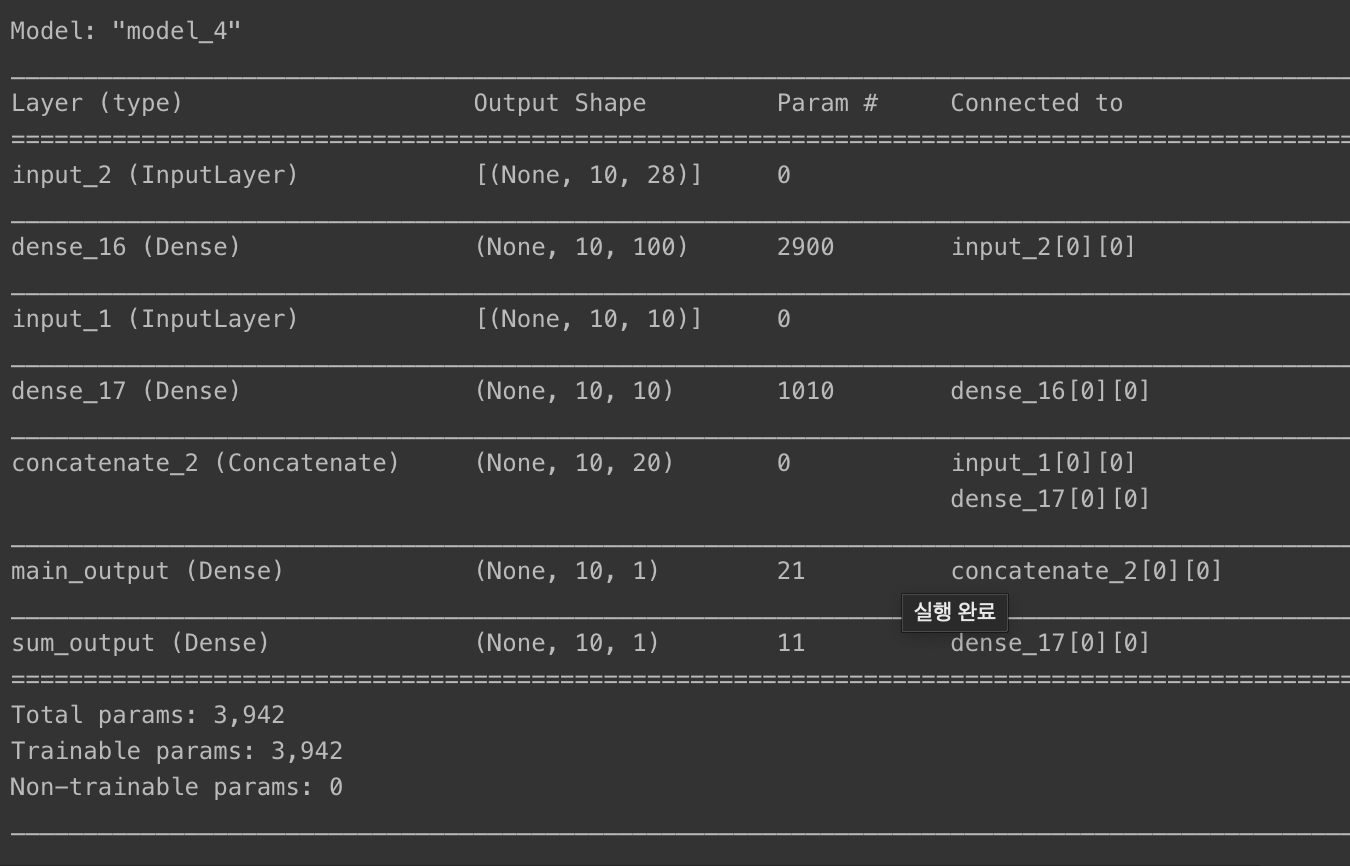

- Multi-input, multi-output model

input_1 = keras.Input(shape=(10, 10), name='input_1')

input_2 = keras.Input(shape=(10, 28), name='input_2')

hidden1 = layers.Dense(100, activation='relu')(input_2)

hidden2 = layers.Dense(10, activation='relu')(hidden1)

concat = layers.Concatenate()([input_1, hidden2])

output = layers.Dense(1, activation='sigmoid', name='main_output')(concat)

sub_out = layers.Dense(1, name='sub_output')(hidden2)

model = models.Model(inputs=[input_1, input_2], outputs=[output, sub_out])

model.summary()

utils.plot_model(model)

Subclassing API

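- Used for customization

- Subclass the Model class to use the functionality built into a model

- fit(): train the model

- evaluate(): evaluate the model

- predict(): run predictions with the model

- save(): save the model

- load_weights(): load saved weights (a whole saved model is loaded with models.load_model())

- call(): define whatever computation you want inside this method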

# Example of the Subclassing API

class MyModel(models.Model):

    def __init__(self, units=30, activation='relu', **kwargs):

        super(MyModel, self).__init__(**kwargs)

        self.dense_layer1 = layers.Dense(300, activation=activation)

        self.dense_layer2 = layers.Dense(100, activation=activation)

        self.dense_layer3 = layers.Dense(units, activation=activation)

        self.output_layer = layers.Dense(10, activation='softmax')

    def call(self, inputs):

        x = self.dense_layer1(inputs)

        x = self.dense_layer2(x)

        x = self.dense_layer3(x)

        x = self.output_layer(x)

        return x

Practice

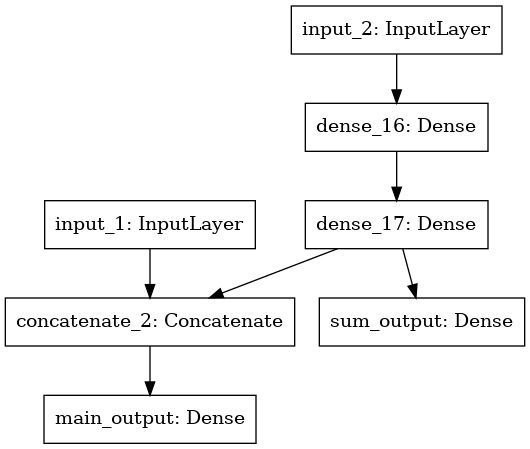

- Build a Sequential API model that meets the given conditions

model = models.Sequential()

# Input layer that accepts data of shape (100, 100, 3)

model.add(layers.Input(shape=(100, 100, 3)))

# Flatten layer

model.add(layers.Flatten())

# Dense layer with 400 units and ReLU activation

model.add(layers.Dense(400, activation='relu'))

# Dense layer with 200 units and ReLU activation

model.add(layers.Dense(200, activation='relu'))

# Dense layer with 100 units and softmax activation

model.add(layers.Dense(100, activation='softmax'))

model.summary()

- Functional API model

# Input layer that accepts data of shape (100, 100, 3)

inputs = layers.Input(shape=(100, 100, 3))

# Flatten layer

x = layers.Flatten()(inputs)

# Dense layer with 400 units and ReLU activation

x = layers.Dense(400, activation='relu')(x)

# Dense layer with 200 units and ReLU activation

x = layers.Dense(200, activation='relu')(x)

# Dense layer with 100 units and softmax activation

x = layers.Dense(100, activation='softmax')(x)

model = models.Model(inputs=inputs, outputs=x)

model.summary()

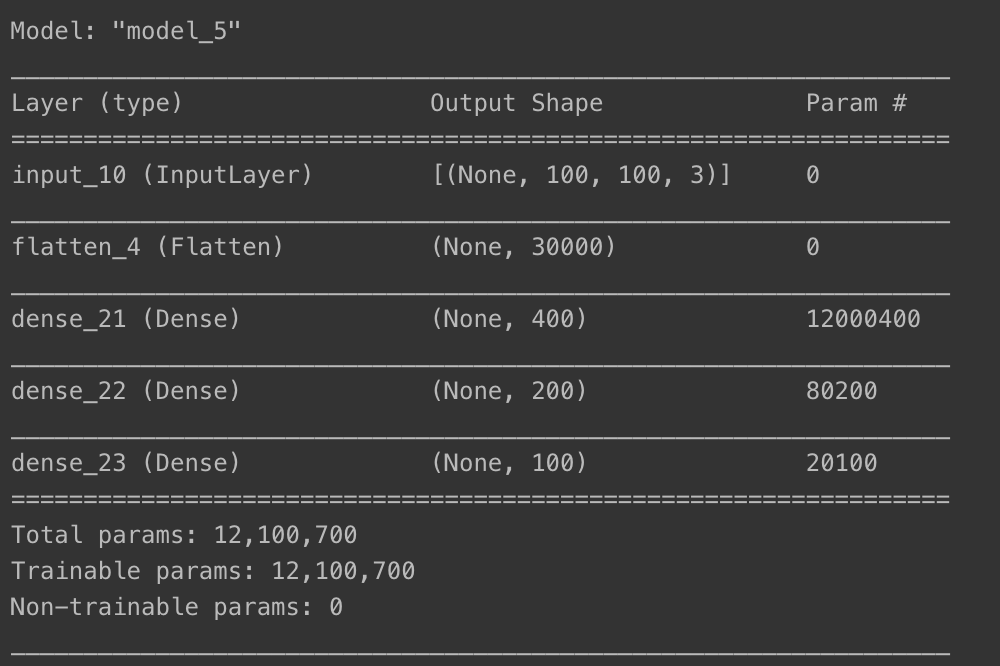

- Subclassing API model

# Custom Model class

class YourModel(models.Model):

    def __init__(self, **kwargs):

        super(YourModel, self).__init__(**kwargs)

        # Flatten layer

        self.flat_layer = layers.Flatten()

        # Dense layer with 400 units and ReLU activation

        self.dense_layer1 = layers.Dense(400, activation='relu')

        # Dense layer with 200 units and ReLU activation

        self.dense_layer2 = layers.Dense(200, activation='relu')

        # Dense layer with 100 units and softmax activation

        self.output_layer = layers.Dense(100, activation='softmax')

    def call(self, inputs):

        # Pass through the Flatten layer, then the Dense layers in the order 400 -> 200 -> 100

        x = self.flat_layer(inputs)

        x = self.dense_layer1(x)

        x = self.dense_layer2(x)

        x = self.output_layer(x)

        return x

# Create a random tensor of shape (100, 100, 3)

data = tf.random.normal([100, 100, 3])

# Data is normally fed in batches, so add a batch dimension

data = tf.reshape(data, (-1, 100, 100, 3))

model = YourModel()

model(data)

model.summary()
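Each of the three practice models ends in a Dense layer with 100 units and softmax activation, so every output row should be a probability distribution over 100 classes. The NumPy sketch below illustrates what softmax does to a row of raw scores; the `softmax` helper and the random `logits` are illustrative assumptions, not output taken from the models above.

```python
import numpy as np

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)   # avoid overflow in exp
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

rng = np.random.default_rng(0)
logits = rng.normal(size=(1, 100))   # one sample, 100 raw scores
probs = softmax(logits)

print(probs.shape, probs.sum(axis=-1))
```

Every entry is positive and each row sums to 1, which is what makes softmax a natural choice for the final layer of a multi-class classifier.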

5-4. Wrapping up

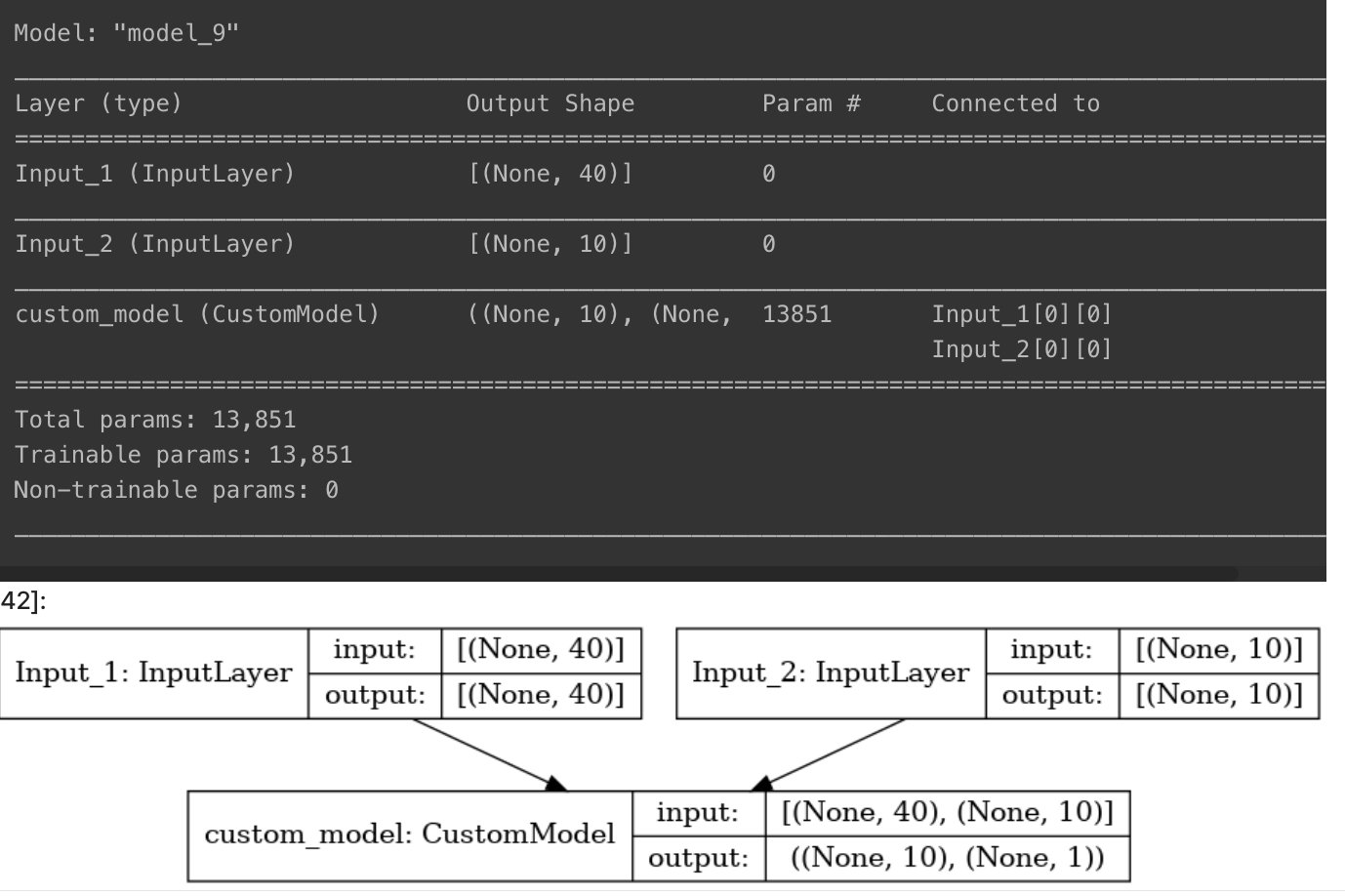

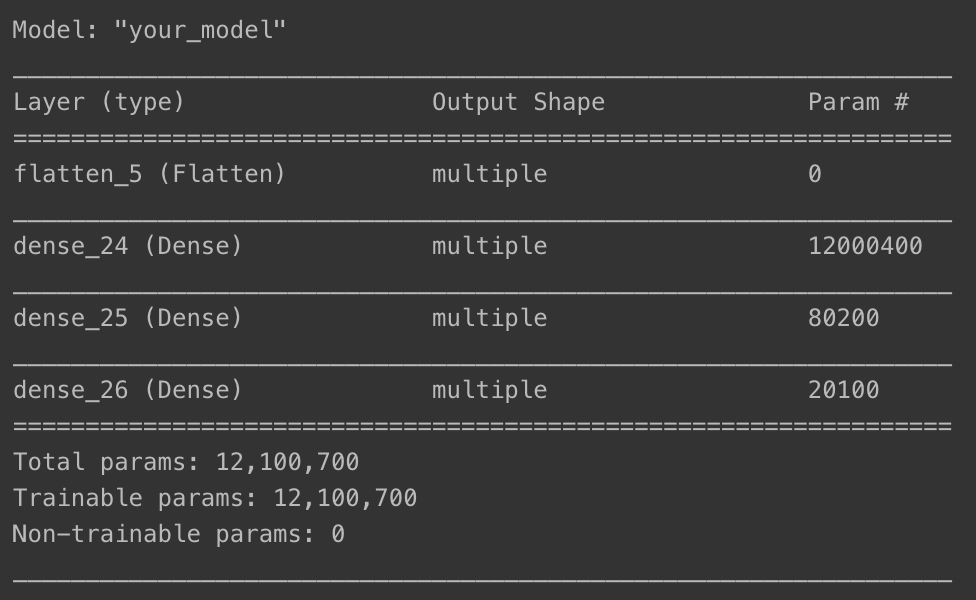

Comprehensive quiz

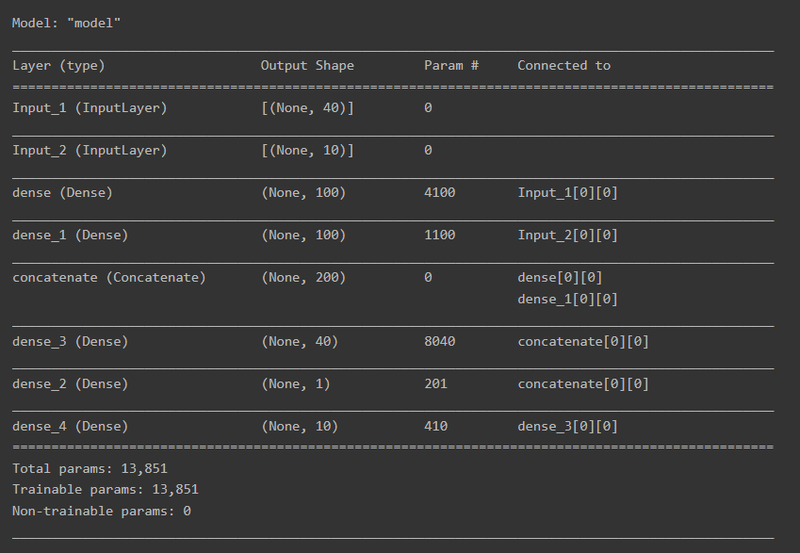

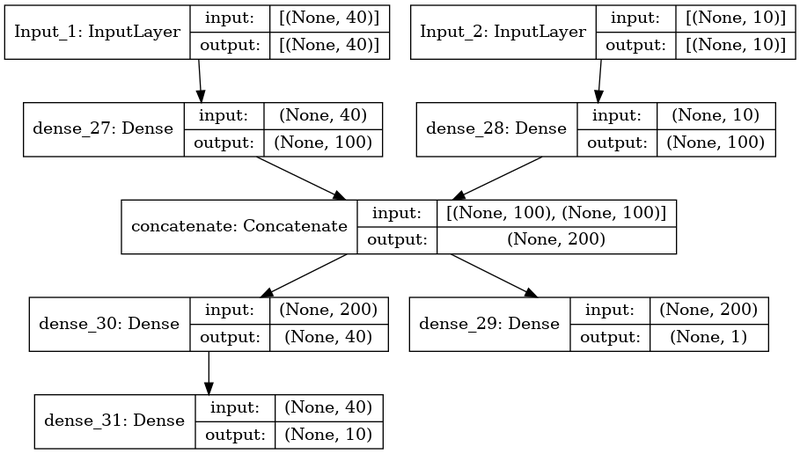

- Implement the model using either the Functional API or the Subclassing API

- Functional API

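# Import the names used below directly

from tensorflow.keras.layers import Input, Dense, Concatenate

from tensorflow.keras.models import Model

input_1 = Input(shape=(40,), name='Input_1')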

input_2 = Input(shape=(10,), name='Input_2')

dense_1 = Dense(100, name='dense_1')(input_1)

dense_2 = Dense(100, name='dense_2_input2')(input_2)

concat = Concatenate(name='concatenate')([dense_1, dense_2])

dense_3 = Dense(40, name='dense_3')(concat)

output_1 = Dense(10, name='dense_4')(dense_3)

output_2 = Dense(1, name='dense_5')(concat)

model = Model(inputs=[input_1, input_2], outputs=[output_1, output_2])

model.summary()

from tensorflow.keras.utils import plot_model

plot_model(model, show_shapes=True)

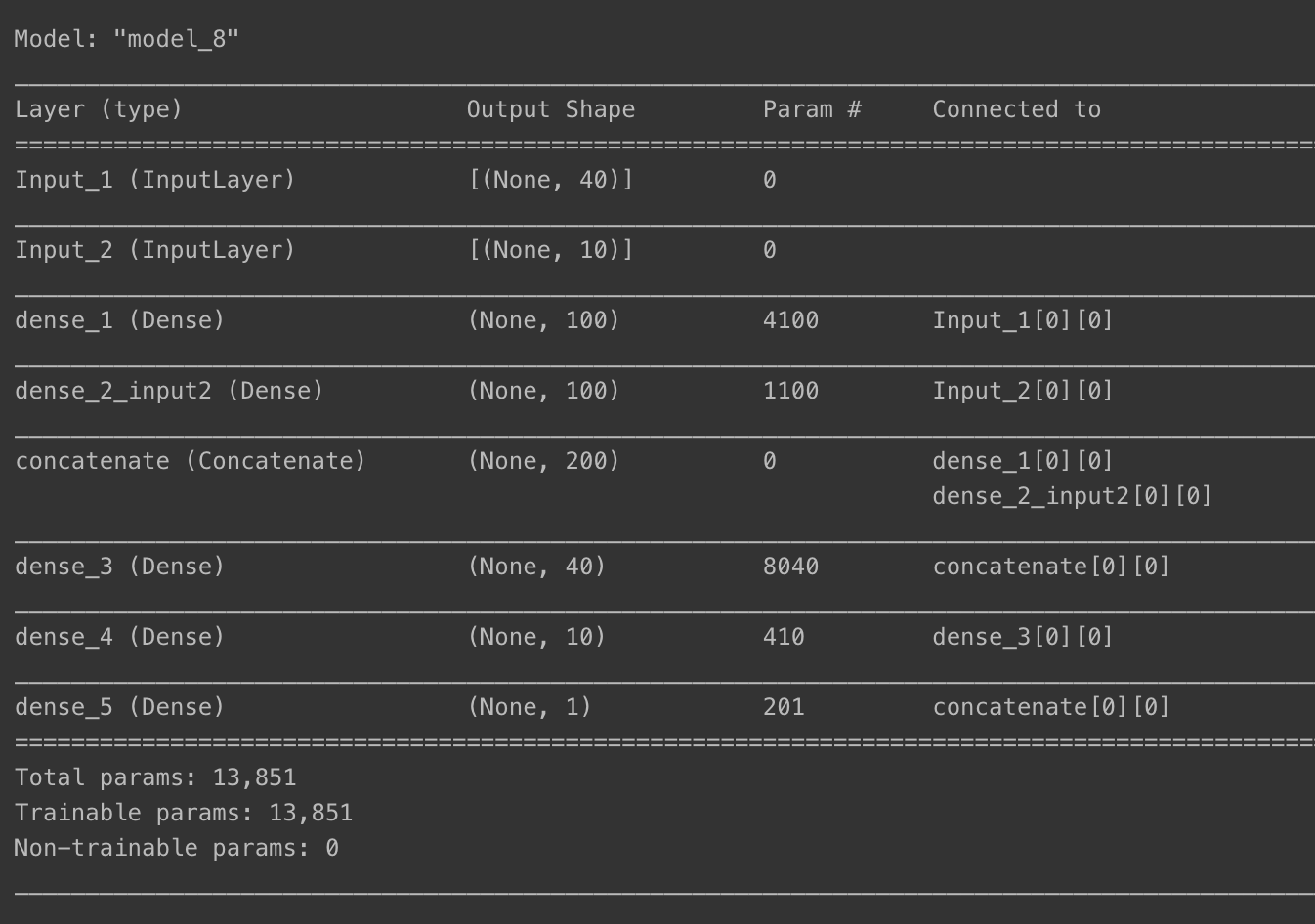

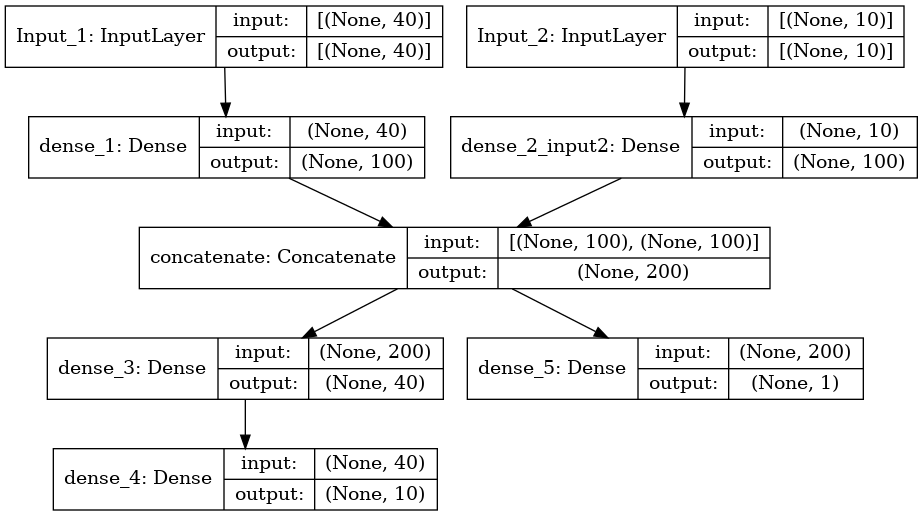

- Subclassing API

class CustomModel(Model):

    def __init__(self):

        super(CustomModel, self).__init__()

        self.dense_1 = Dense(100, name='dense_1')

        self.dense_2 = Dense(100, name='dense_2')

        self.concat = Concatenate(name='concatenate')

        self.dense_3 = Dense(40, name='dense_3')

        self.output_1 = Dense(10, name='dense_4')

        # Named dense_5 so it does not clash with the dense_2 layer above

        self.output_2 = Dense(1, name='dense_5')

    def call(self, inputs):

        input_1, input_2 = inputs

        x1 = self.dense_1(input_1)

        x2 = self.dense_2(input_2)

        x = self.concat([x1, x2])

        output_1 = self.output_1(self.dense_3(x))

        output_2 = self.output_2(x)

        return output_1, output_2

input_1 = tf.keras.Input(shape=(40,), name='Input_1')

input_2 = tf.keras.Input(shape=(10,), name='Input_2')

model = CustomModel()

outputs = model([input_1, input_2])

final_model = Model(inputs=[input_1, input_2], outputs=outputs)

final_model.summary()

tf.keras.utils.plot_model(final_model, show_shapes=True)