Mask man classification - dive into CNN

# Load the MNIST data

import tensorflow as tf

mnist = tf.keras.datasets.mnist

(X_train, y_train), (X_test, y_test) = mnist.load_data()

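# Scale pixel values from [0, 255] to [0, 1]
X_train, X_test = X_train / 255, X_test / 255

# Add a trailing channel axis: (N, 28, 28) -> (N, 28, 28, 1)
X_train = X_train.reshape((60000, 28, 28, 1))

X_test = X_test.reshape((10000, 28, 28, 1))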

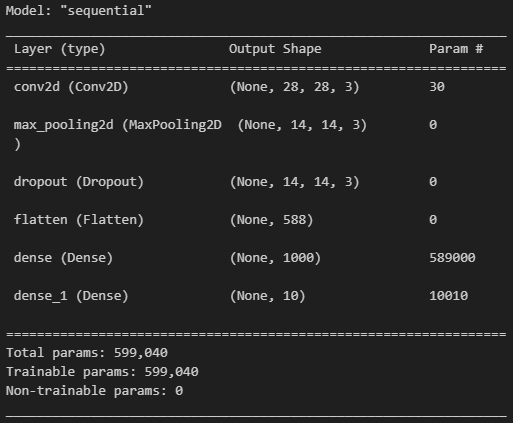
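The two preprocessing steps above (scaling pixels to [0, 1] and adding a channel axis) can be illustrated on a tiny made-up image using plain Python lists. This is only a sketch: the 2x2 `image` and the helpers `scale_pixels` and `add_channel_axis` are hypothetical names introduced here, and they mirror per image what `X_train / 255` and `reshape(...)` do on the real arrays.

```python
# A made-up 2x2 grayscale image with pixel values in 0..255 (illustration only).
image = [[0, 51],
         [102, 255]]

def scale_pixels(img):
    """Divide every pixel by 255 so that values fall in [0, 1]."""
    return [[px / 255 for px in row] for row in img]

def add_channel_axis(img):
    """Wrap each pixel in a list: shape (H, W) becomes (H, W, 1)."""
    return [[[px] for px in row] for row in img]

scaled = scale_pixels(image)
with_channel = add_channel_axis(scaled)

print(scaled)
# Height, width, and channel count after adding the axis
print(len(with_channel), len(with_channel[0]), len(with_channel[0][0]))
```

The channel axis carries no new information for grayscale images; it is there because `Conv2D` expects inputs of shape (height, width, channels).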

# Build the model structure

from tensorflow.keras import layers, models

model = models.Sequential([
    layers.Conv2D(3, kernel_size=(3, 3), strides=(1, 1), padding='same',
                  activation='relu', input_shape=(28, 28, 1)),  # 3 filters (feature channels)
    layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2)),
    layers.Dropout(0.25),
    layers.Flatten(),
    layers.Dense(1000, activation='relu'),
    layers.Dense(10, activation='softmax')
])

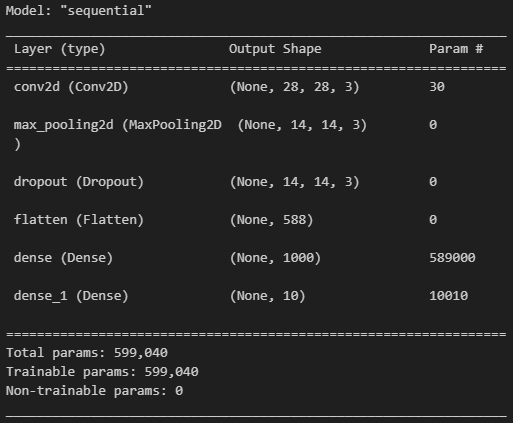

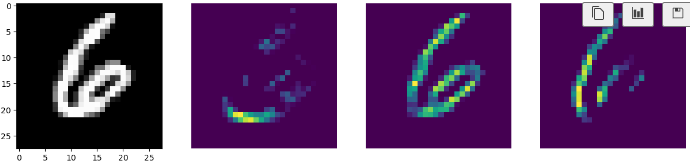
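To make the `MaxPool2D(pool_size=(2, 2), strides=(2, 2))` step concrete, here is a minimal pure-Python sketch of 2x2 max pooling with stride 2. The function `max_pool_2x2` and the 4x4 `feature_map` are made up for illustration and assume an input with even height and width.

```python
def max_pool_2x2(grid):
    """2x2 max pooling with stride 2 on a 2D list with even height and width."""
    pooled = []
    for r in range(0, len(grid), 2):
        row = []
        for c in range(0, len(grid[0]), 2):
            # Collect the four values in the current non-overlapping window.
            window = [grid[r][c], grid[r][c + 1],
                      grid[r + 1][c], grid[r + 1][c + 1]]
            row.append(max(window))
        pooled.append(row)
    return pooled

# A made-up 4x4 feature map (illustration only).
feature_map = [[1, 3, 2, 0],
               [4, 2, 1, 1],
               [0, 0, 5, 6],
               [1, 2, 7, 8]]

pooled = max_pool_2x2(feature_map)
print(pooled)  # [[4, 2], [2, 8]]
```

Each output value keeps only the strongest response in its window, which is why the 28x28 conv output shrinks to 14x14 after this layer.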

model.summary()

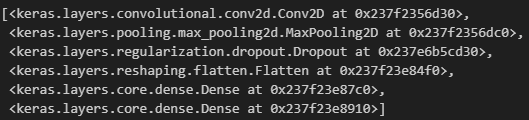

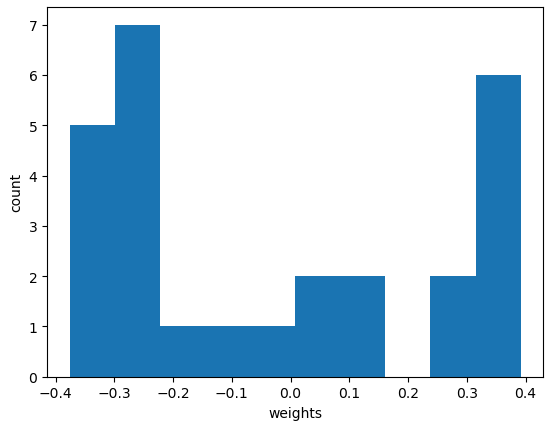
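The output shapes and parameter counts that `model.summary()` reports can be cross-checked by hand. The sketch below assumes the layer settings used above (3x3 kernel, `padding='same'`, stride 1, 2x2 pooling with stride 2); the helper names are introduced here for illustration.

```python
import math

def conv_same_output(size, stride):
    """Spatial size after a convolution with 'same' padding."""
    return math.ceil(size / stride)

def pool_valid_output(size, pool, stride):
    """Spatial size after pooling without padding."""
    return (size - pool) // stride + 1

filters = 3
conv_hw = conv_same_output(28, 1)             # conv keeps 28x28
pool_hw = pool_valid_output(conv_hw, 2, 2)    # pooling halves it to 14x14
flat = pool_hw * pool_hw * filters            # length of the Flatten output

# Conv2D: (kernel_h * kernel_w * in_channels + 1 bias) per filter
conv_params = (3 * 3 * 1 + 1) * filters
# Dense: inputs * units + one bias per unit
dense1_params = flat * 1000 + 1000
dense2_params = 1000 * 10 + 10
total = conv_params + dense1_params + dense2_params

print(conv_hw, pool_hw, flat)                             # 28 14 588
print(conv_params, dense1_params, dense2_params, total)   # 30 589000 10010 599040
```

Almost all parameters sit in the first `Dense` layer; the conv layer itself has only 30. `MaxPool2D`, `Dropout`, and `Flatten` have no trainable parameters.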

# The layers I configured can be accessed through model.layers

model.layers

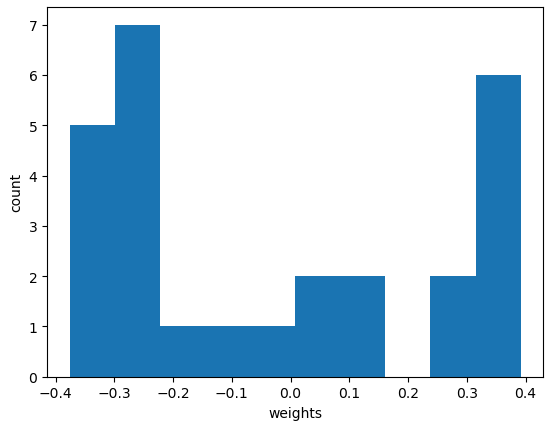

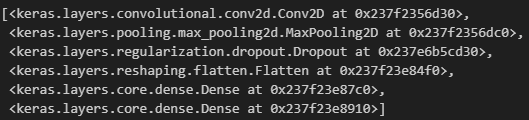

# Mean and standard deviation of the conv layer's weights before training

conv = model.layers[0]

conv_weights = conv.weights[0].numpy()

conv_weights.mean(), conv_weights.std()

import matplotlib.pyplot as plt

plt.hist(conv_weights.reshape(-1, 1))

plt.xlabel('weights')

plt.ylabel('count')

plt.show()

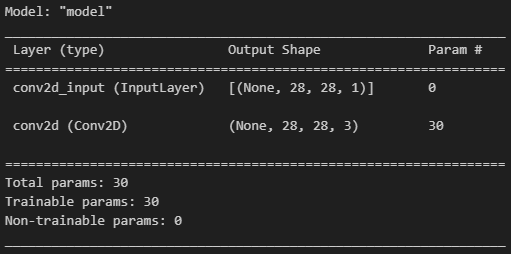
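The spread of the untrained weights can be estimated in advance. Keras `Conv2D` uses the `glorot_uniform` kernel initializer by default, which draws from a uniform distribution on [-limit, limit] with limit = sqrt(6 / (fan_in + fan_out)). The sketch below assumes that default is in effect; `glorot_uniform_limit` is a helper name introduced here.

```python
import math

def glorot_uniform_limit(kernel_h, kernel_w, in_channels, out_channels):
    """Bound of the Glorot uniform distribution for a conv kernel."""
    receptive_field = kernel_h * kernel_w
    fan_in = receptive_field * in_channels
    fan_out = receptive_field * out_channels
    return math.sqrt(6.0 / (fan_in + fan_out))

# 3x3 kernel, 1 input channel, 3 filters, as in the model above
limit = glorot_uniform_limit(3, 3, 1, 3)
# A uniform distribution on [-a, a] has mean 0 and std a / sqrt(3)
expected_std = limit / math.sqrt(3)

print(round(limit, 4), round(expected_std, 4))  # 0.4082 0.2357
```

With only 27 weights in this layer, the measured mean and standard deviation will scatter around these values rather than match them exactly. The limit of about 0.41 also suggests why a fixed color range of -0.5 to 0.5 covers the initial weights.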

# conv_weights, filter

fig, ax = plt.subplots(1, 3, figsize=(15, 5))

for i in range(3):
    # vmin, vmax: fix the color scale so the same value gets the same color in every panel
    ax[i].imshow(conv_weights[:, :, 0, i], vmin=-0.5, vmax=0.5)
    ax[i].axis('off')

plt.show()
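The effect of fixing `vmin` and `vmax` can be sketched without plotting. Before the colormap lookup, values are mapped linearly from [vmin, vmax] to [0, 1]; the hypothetical `normalize` helper below imitates that mapping and clamps out-of-range values to the ends of the scale. The per-panel ranges used in the example are made up.

```python
def normalize(value, vmin, vmax):
    """Map value linearly from [vmin, vmax] to [0, 1], clamping values outside the range."""
    scaled = (value - vmin) / (vmax - vmin)
    return min(1.0, max(0.0, scaled))

weight = 0.25

# Shared scale: the same weight lands at the same colormap position in every panel.
fixed = normalize(weight, -0.5, 0.5)

# Per-panel scale (made-up min/max of two different filters):
# the same weight lands at different colormap positions.
auto_a = normalize(weight, -0.3, 0.25)
auto_b = normalize(weight, 0.0, 0.5)

print(fixed, auto_a, auto_b)  # 0.75 1.0 0.5
```

Without a shared range, each panel is scaled to its own minimum and maximum, so colors cannot be compared across filters.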

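# Training

import time

model.compile(optimizer='adam', loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])

# A bare `%time` line times nothing, so measure the fit explicitly
start = time.perf_counter()

hist = model.fit(X_train, y_train, epochs=5, verbose=1,
                 validation_data=(X_test, y_test))

print(f'Training time: {time.perf_counter() - start:.1f} s')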
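The `sparse_categorical_crossentropy` loss pairs with the softmax output layer: for an integer label it is the negative log of the probability the model assigns to that class. The following is a minimal sketch for a single sample; the logits are made up, and the function names are introduced here rather than taken from Keras.

```python
import math

def softmax(logits):
    """Convert raw scores to probabilities that sum to 1."""
    peak = max(logits)                      # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_crossentropy(label, probs):
    """Loss for one sample: -log of the probability of the true class (integer label)."""
    return -math.log(probs[label])

probs = softmax([2.0, 1.0, 0.1])            # made-up scores for 3 classes

loss_if_label_0 = sparse_categorical_crossentropy(0, probs)  # true class got the highest score
loss_if_label_2 = sparse_categorical_crossentropy(2, probs)  # true class got the lowest score

print(probs)
print(loss_if_label_0, loss_if_label_2)
```

The "sparse" variant is the right choice here because `y_train` holds integer digits (0 to 9) rather than one-hot vectors.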

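# Conv filters after training

# conv_weights is a NumPy snapshot taken before training, so read the weights again
conv_weights = conv.weights[0].numpy()

fig, ax = plt.subplots(1, 3, figsize=(15, 5))

for i in range(3):
    ax[i].imshow(conv_weights[:, :, 0, i], vmin=-0.5, vmax=0.5)
    ax[i].axis('off')

plt.show()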

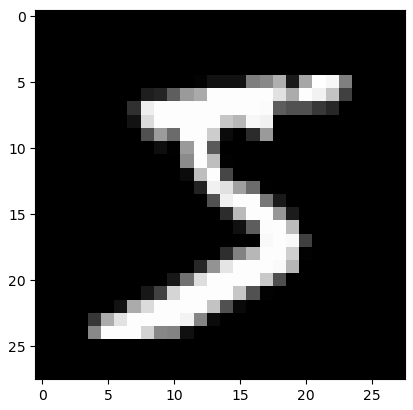

# Sample 0 is the digit 5

plt.imshow(X_train[0], cmap='gray')

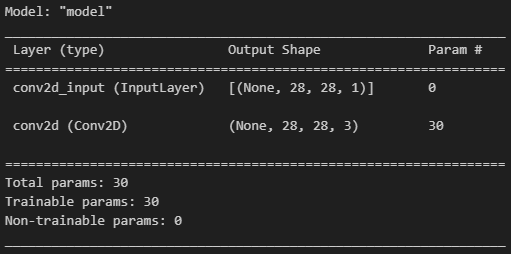

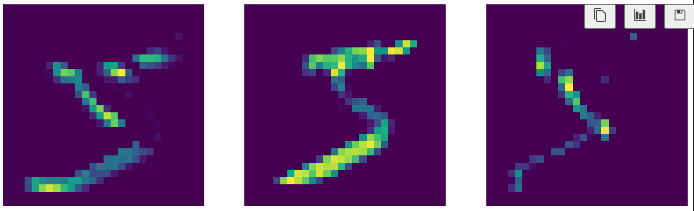

# Extract the output of the conv layer

inputs = X_train[0].reshape(-1, 28, 28, 1)

# Build a new model that connects the earlier model's input to the output of its first (conv) layer
conv_layer_output = tf.keras.Model(model.input, model.layers[0].output)

conv_layer_output.summary()

# Compute the feature maps for the input

feature_maps = conv_layer_output.predict(inputs)

feature_maps.shape

feature_maps[0, :, :, 0].shape

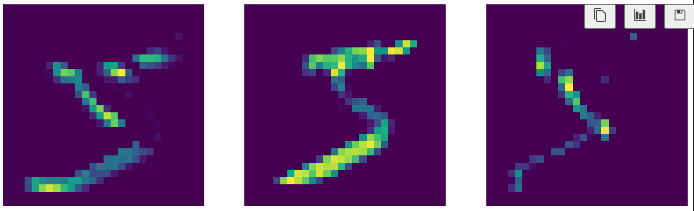

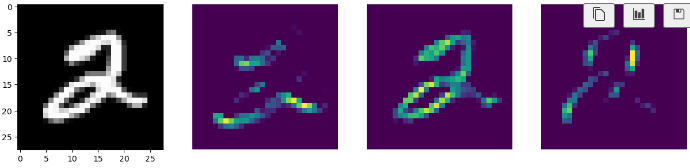
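What one channel of `feature_maps` contains can be sketched by hand: each output pixel is the sum of a 3x3 neighborhood multiplied element-wise by the filter, plus a bias, passed through ReLU. The pure-Python version below assumes stride 1, zero padding (`padding='same'`), and a square odd-sized kernel; the image and the vertical-edge kernel are made up for illustration.

```python
def conv2d_same_relu(image, kernel, bias=0.0):
    """Single-channel 2D convolution (no kernel flip) with zero padding, stride 1, then ReLU."""
    h, w = len(image), len(image[0])
    k = len(kernel)                  # assumes a square kernel with odd size
    pad = k // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = bias
            for i in range(k):
                for j in range(k):
                    rr, cc = r + i - pad, c + j - pad
                    # Positions outside the image count as zero (zero padding).
                    if 0 <= rr < h and 0 <= cc < w:
                        acc += image[rr][cc] * kernel[i][j]
            out[r][c] = max(0.0, acc)    # ReLU
    return out

# Made-up image: dark left half, bright right half.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]

# Made-up kernel that responds to dark-to-bright transitions from left to right.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

fmap = conv2d_same_relu(image, kernel)
for row in fmap:
    print(row)
```

The output has the same 4x4 size as the input, is never negative because of ReLU, and is largest along the vertical edge. The learned filters in the model play the same role, except that their values come from training.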

# The digit 5 as seen by the feature maps

fig, ax = plt.subplots(1, 3, figsize=(15, 5))

for i in range(3):
    ax[i].imshow(feature_maps[0, :, :, i])
    ax[i].axis('off')

plt.show()

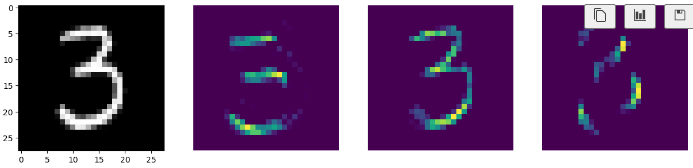

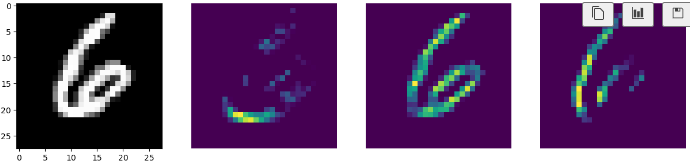

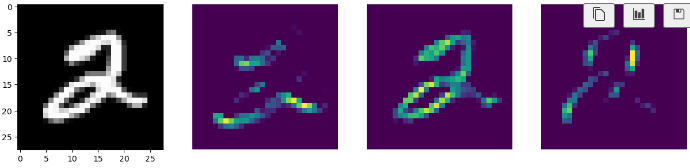

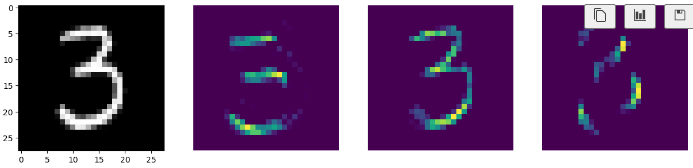

# Wrap the steps above in a function

# reshape : https://rfriend.tistory.com/345

def draw_feature_maps(n):
    # Add a batch axis so the single image has shape (1, 28, 28, 1)
    inputs = X_train[n].reshape(-1, 28, 28, 1)
    feature_maps = conv_layer_output.predict(inputs)

    # Panel 0 shows the input image; panels 1 to 3 show the three feature maps
    fig, ax = plt.subplots(1, 4, figsize=(15, 5))
    ax[0].imshow(inputs[0, :, :, 0], cmap='gray')

    for i in range(1, 4):
        ax[i].imshow(feature_maps[0, :, :, i-1])
        ax[i].axis('off')

    plt.show()

draw_feature_maps(50)

draw_feature_maps(13)

draw_feature_maps(5)

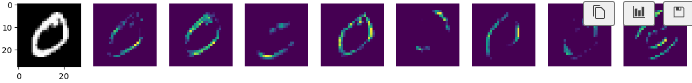
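The `-1` in `reshape(-1, 28, 28, 1)` asks NumPy to infer that dimension from the total number of elements. The rule can be sketched in plain Python as follows; `resolve_shape` is a hypothetical helper written for this note, not a NumPy function.

```python
def resolve_shape(total_elements, shape):
    """Replace a single -1 in shape with the size that makes the element count match."""
    if shape.count(-1) > 1:
        raise ValueError("only one dimension can be -1")
    known = 1
    for dim in shape:
        if dim != -1:
            known *= dim
    if -1 not in shape:
        if known != total_elements:
            raise ValueError("shape does not match the number of elements")
        return tuple(shape)
    if known == 0 or total_elements % known != 0:
        raise ValueError("cannot infer the -1 dimension")
    return tuple(total_elements // known if dim == -1 else dim for dim in shape)

# One 28x28 image becomes a batch of size 1.
print(resolve_shape(28 * 28, (-1, 28, 28, 1)))          # (1, 28, 28, 1)
# The full training set resolves to the shape that was written out explicitly earlier.
print(resolve_shape(60000 * 28 * 28, (-1, 28, 28, 1)))  # (60000, 28, 28, 1)
```

This is why a single image `X_train[n]` of shape (28, 28) can be passed to `predict`, which expects a leading batch axis.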

# Increase the number of channels in the model

model1 = models.Sequential([
    layers.Conv2D(8, kernel_size=(3, 3), strides=(1, 1), padding='same',
                  activation='relu', input_shape=(28, 28, 1)),  # 8 filters (feature channels)
    layers.MaxPool2D(pool_size=(2, 2), strides=(2, 2)),
    layers.Dropout(0.25),
    layers.Flatten(),
    layers.Dense(1000, activation='relu'),
    layers.Dense(10, activation='softmax')
])
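Both models include `Dropout(0.25)`. During training, Keras dropout zeroes each input with probability `rate` and scales the remaining values by 1 / (1 - rate), so the expected sum is unchanged; at inference it passes values through untouched. A minimal sketch of that behavior, with a hypothetical `dropout` function and made-up activations:

```python
import random

def dropout(values, rate, training, rng):
    """Inverted dropout: zero each value with probability `rate`, scale the rest by 1 / (1 - rate)."""
    if not training:
        return list(values)              # inference: identity
    keep_scale = 1.0 / (1.0 - rate)
    return [0.0 if rng.random() < rate else v * keep_scale for v in values]

rng = random.Random(0)                   # fixed seed so the run is repeatable
activations = [1.0] * 10000              # made-up activations

train_out = dropout(activations, 0.25, True, rng)
infer_out = dropout(activations, 0.25, False, rng)

dropped_fraction = train_out.count(0.0) / len(train_out)
mean_after = sum(train_out) / len(train_out)

print(dropped_fraction)   # close to 0.25
print(mean_after)         # close to 1.0, the mean before dropout
```

Because of the rescaling, the feature maps extracted with `predict` are not affected by dropout; it only acts while `fit` is running.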

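# Training

import time

model1.compile(optimizer='adam', loss='sparse_categorical_crossentropy',
               metrics=['accuracy'])

# A bare `%time` line times nothing, so measure the fit explicitly
start = time.perf_counter()

hist = model1.fit(X_train, y_train, epochs=5, verbose=1,
                  validation_data=(X_test, y_test))

print(f'Training time: {time.perf_counter() - start:.1f} s')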

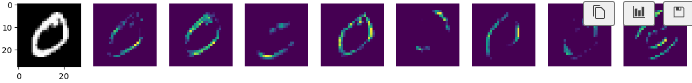

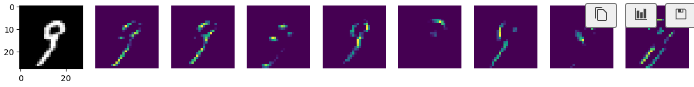

conv_layer_output = tf.keras.Model(model1.input, model1.layers[0].output)

conv_layer_output

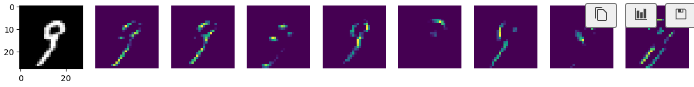

# Redefine the function for model1: 1 input panel + 8 feature-map panels
def draw_feature_maps(n):
    inputs = X_train[n].reshape(-1, 28, 28, 1)
    feature_maps = conv_layer_output.predict(inputs)

    fig, ax = plt.subplots(1, 9, figsize=(15, 5))
    ax[0].imshow(inputs[0, :, :, 0], cmap='gray')

    for i in range(1, 9):
        ax[i].imshow(feature_maps[0, :, :, i-1])
        ax[i].axis('off')

    plt.show()

draw_feature_maps(1)

draw_feature_maps(19)