Deep Learning from scratch - Example: Digit Recognition

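```python
# import
import numpy as np
import matplotlib.pyplot as plt
from tqdm import tqdm_notebook  # deprecated in recent tqdm; tqdm.notebook.tqdm is the replacement

%matplotlib inline
```

```python
# softmax
def Softmax(x):
    # Prevent overflow: softmax is unchanged by subtracting a constant,
    # so subtracting the maximum keeps np.exp from receiving large values
    x = np.subtract(x, np.max(x))
    ex = np.exp(x)
    # After the shift every exponent is <= 0, so each ex lies in (0, 1]:
    # the largest entry becomes exp(0) = 1 and very negative inputs only underflow toward 0
    return ex / np.sum(ex)
```

- Training data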
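Each training sample below is a 5×5 binary grid. The training loop later flattens it into a 25×1 column with `np.reshape(X[:, :, k], (25, 1))` and pairs it with a 5×1 one-hot target `D[k, :].T`. The following sketch shows those shapes. It uses a hypothetical grid `one` (the same "1" pattern as `X[:, :, 0]`) and a hypothetical `targets` array laid out like `D`:

```python
import numpy as np

# Hypothetical 5x5 grid for the digit "1" (same pattern as X[:, :, 0])
one = np.array([[0, 1, 1, 0, 0],
                [0, 0, 1, 0, 0],
                [0, 0, 1, 0, 0],
                [0, 0, 1, 0, 0],
                [0, 1, 1, 1, 0]])

# Flatten row by row into a 25x1 column vector, the input shape W1 (20x25) expects
x = np.reshape(one, (25, 1))

# Hypothetical one-hot targets laid out like D: shape (5, 1, 5), one 1x5 row per digit
targets = np.eye(5).reshape(5, 1, 5)

# Row k transposed gives a 5x1 column, matching the 5x1 softmax output
d = targets[0, :].T

print(x.shape, d.shape)   # (25, 1) (5, 1)
print(x[:5].ravel())      # first row of the grid: [0 1 1 0 0]
print(d.ravel())          # [1. 0. 0. 0. 0.]
```

Because `np.reshape` reads in row-major order by default, the first five entries of the column are the top row of the image.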

# 훈련용 데이터

X = np.zeros((5, 5, 5))

X[:, :, 0] = [[0, 1, 1, 0, 0], [0, 0, 1, 0, 0], [0, 0, 1, 0, 0], [0, 0, 1, 0, 0], [0, 1, 1, 1, 0]]

X[:, :, 1] = [[1, 1, 1, 1, 0], [0, 0, 0, 0, 1], [0, 1, 1, 1, 0], [1, 0, 0, 0, 0], [1, 1, 1, 1, 1]]

X[:, :, 2] = [[1, 1, 1, 1, 0], [0, 0, 0, 0, 1], [0, 1, 1, 1, 0], [0, 0, 0, 0, 1], [1, 1, 1, 1, 0]]

X[:, :, 3] = [[0, 0, 0, 1, 0], [0, 0, 1, 1, 0], [0, 1, 0, 1, 0], [1, 1, 1, 1, 1], [0, 0, 0, 1, 0]]

X[:, :, 4] = [[1, 1, 1, 1, 1], [1, 0, 0, 0, 0], [1, 1, 1, 1, 0], [0, 0, 0, 0, 1], [1, 1, 1, 1, 0]]

D = np.array([[[1, 0, 0, 0, 0]],

[[0, 1, 0, 0, 0]],

[[0, 0, 1, 0, 0]],

[[0, 0, 0, 1, 0]],

[[0, 0, 0, 0, 1]]

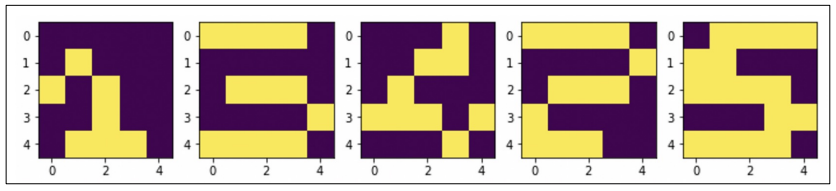

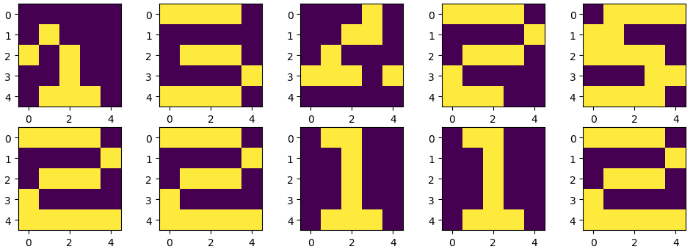

])# 생김새 확인

plt.figure(figsize=(12, 4))

for n in range(5):

plt.subplot(1, 5, n+1)

plt.imshow(X[:, :, n])

plt.show()# ReLU

def ReLU(x):

return np.maximum(0, x)# ReLU를 이용한 정방향 계산

def calcOutput_ReLU(W1, W2, W3, W4, x):

v1 = np.matmul(W1, x)

y1 = ReLU(v1)

v2 = np.matmul(W2, y1)

y2 = ReLU(v2)

v3 = np.matmul(W3, y2)

y3 = ReLU(v3)

v = np.matmul(W4, y3)

y = Softmax(v)

return y, v1, v2, v3, y1 , y2, y3# 역전파

def backpropagation_ReLU(d, y, W2, W3, W4, v1, v2, v3):

e = d - y

delta = e

e3 = np.matmul(W4.T, delta)

delta3 = (v3>0)*e3

e2 = np.matmul(W3.T, delta3)

delta2 = (v2>0)*e2

e1 = np.matmul(W2.T, delta2)

delta1 = (v1>0)*e1

return delta, delta1, delta2, delta3# 가중치 계산

def calcWs(alpha, delta, delta1, delta2, delta3, y1, y2, y3, x, W1, W2, W3, W4):

dW4 = alpha * delta * y3.T

W4 = W4 + dW4

dW3 = alpha * delta3 * y2.T

W3 = W3 + dW3

dW2 = alpha * delta2 * y1.T

W2 = W2 + dW2

dW1 = alpha * delta1 * x.T

W1 = W1 + dW1

return W1, W2, W3, W4
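`calcWs` applies the delta rule ΔW = α δ yᵀ, where y is the input to the layer. It forms that outer product through NumPy broadcasting: multiplying a (5, 1) column by a (1, 20) row gives a (5, 20) matrix, which is the shape of `W4`. The check below uses arbitrary random values to confirm that broadcasting, `np.matmul` and `np.outer` give the same matrix. The names `delta` and `y3` only mirror the output layer:

```python
import numpy as np

rng = np.random.default_rng(0)
alpha = 0.01
delta = rng.standard_normal((5, 1))   # output-layer delta, column vector
y3 = rng.standard_normal((20, 1))     # activations feeding the output layer

dW_broadcast = alpha * delta * y3.T          # the form used in calcWs
dW_matmul = alpha * np.matmul(delta, y3.T)   # explicit (5x1)(1x20) matrix product
dW_outer = alpha * np.outer(delta, y3)       # np.outer flattens both inputs first

print(dW_broadcast.shape)  # (5, 20)
print(np.allclose(dW_broadcast, dW_matmul), np.allclose(dW_broadcast, dW_outer))  # True True
```

All three give the same update. `np.matmul(delta, y3.T)` states the intent most explicitly, while the broadcasting form depends on both operands keeping their 2-D column and row shapes.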

```python
# Weight update
def DeepReLU(W1, W2, W3, W4, X, D, alpha):
    # One epoch: visit all 5 samples and update the weights after each one
    # (stochastic gradient descent)
    for k in range(5):
        x = np.reshape(X[:, :, k], (25, 1))   # flatten the 5x5 image into a 25x1 column
        d = D[k, :].T                          # 5x1 one-hot target
        y, v1, v2, v3, y1, y2, y3 = calcOutput_ReLU(W1, W2, W3, W4, x)
        delta, delta1, delta2, delta3 = backpropagation_ReLU(d, y, W2, W3, W4, v1, v2, v3)
        W1, W2, W3, W4 = calcWs(alpha, delta, delta1, delta2, delta3,
                                y1, y2, y3, x, W1, W2, W3, W4)
    return W1, W2, W3, W4
```
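`backpropagation_ReLU` passes `e = d - y` straight back as the output delta, with no activation derivative. This is valid when the loss is the cross-entropy L = -Σ dᵢ log yᵢ applied on top of softmax, because then ∂L/∂v = y - d, so d - y is the negative gradient. The code never names its loss function, so cross-entropy is an assumption here. The sketch below checks the identity with central finite differences. The helpers `softmax` and `cross_entropy` are hypothetical stand-ins for `Softmax` and the implied loss. The last line also shows that the max-shifted softmax stays finite for logits as large as 1000:

```python
import numpy as np

def softmax(v):
    # Same max-subtraction trick as Softmax: keeps np.exp from overflowing
    ex = np.exp(v - np.max(v))
    return ex / np.sum(ex)

def cross_entropy(v, d):
    # Cross-entropy between one-hot target d and softmax(v)
    return -np.sum(d * np.log(softmax(v)))

rng = np.random.default_rng(1)
v = rng.standard_normal((5, 1))   # output-layer weighted sums
d = np.zeros((5, 1))
d[2] = 1.0                        # one-hot target for digit 3

analytic = softmax(v) - d         # claimed gradient dL/dv = y - d

# Central finite differences, one component at a time
eps = 1e-6
numeric = np.zeros_like(v)
for i in range(v.size):
    step = np.zeros_like(v)
    step[i] = eps
    numeric[i] = (cross_entropy(v + step, d) - cross_entropy(v - step, d)) / (2 * eps)

print(np.max(np.abs(analytic - numeric)))   # should be very small
print(softmax(np.array([1000.0, 1001.0])))  # about [0.269 0.731]; no overflow
```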

# 학습 시작

W1 = 2*np.random.random((20, 25)) -1

W2 = 2*np.random.random((20, 20)) -1

W3 = 2*np.random.random((20, 20)) -1

W4 = 2*np.random.random((5, 20)) -1

alpha = 0.01

for epoch in tqdm_notebook(range(10000)):

W1, W2, W3, W4 = DeepReLU(W1, W2, W3, W4, X, D, alpha)# 훈련 데이터 검증

def verify_algorithm(x, W1, W2, W3, W4):

v1 = np.matmul(W1, x)

y1 = ReLU(v1)

v2 = np.matmul(W2, y1)

y2 = ReLU(v2)

v3 = np.matmul(W3, y2)

y3 = ReLU(v3)

v = np.matmul(W4, y3)

y = Softmax(v)

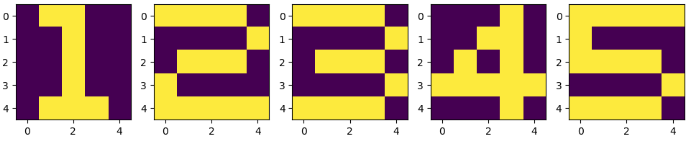

return y# 결과

N = 5

for k in range(N):

x = np.reshape(X[:, :, k], (25, 1))

y = verify_algorithm(x, W1, W2, W3, W4)

print('Y = {} :'.format(k+1))

print(np.argmax(y, axis=0)+1)

print(y)

print('------------------------')- 테스트 데이터 만들기

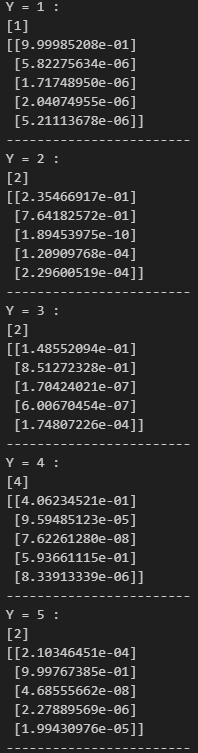

# 테스트 데이터 만들기

X_test = np.zeros((5, 5, 5))

X_test[:, :, 0] = [[0, 0, 0, 0, 0], [0, 1, 0, 0, 0], [1, 0, 1, 0, 0], [0, 0, 1, 0, 0], [0, 1, 1, 1, 0]]

X_test[:, :, 1] = [[1, 1, 1, 1, 0], [0, 0, 0, 0, 0], [0, 1, 1, 1, 0], [0, 0, 0, 0, 1], [1, 1, 1, 1, 0]]

X_test[:, :, 2] = [[0, 0, 0, 1, 0], [0, 0, 1, 1, 0], [0, 1, 0, 0, 0], [1, 1, 1, 0, 1], [0, 0, 0, 0, 0]]

X_test[:, :, 3] = [[1, 1, 1, 1, 0], [0, 0, 0, 0, 1], [0, 1, 1, 1, 0], [1, 0, 0, 0, 0], [1, 1, 1, 0, 0]]

X_test[:, :, 4] = [[0, 1, 1, 1, 1], [1, 1, 0, 0, 0], [1, 1, 1, 1, 0], [0, 0, 0, 1, 1], [1, 1, 1, 1, 0]]

plt.figure(figsize=(12, 4))

for n in range(5):

plt.subplot(1, 5, n+1)

plt.imshow(X_test[:, :, n])

plt.show()# 테스트

learning_result = [0, 0, 0, 0, 0]

for k in range(5):

x = np.reshape(X_test[:, :, k], (25, 1))

y = verify_algorithm(x, W1, W2, W3, W4)

learning_result[k] = np.argmax(y, axis=0) + 1

print('Y = {} :'.format(k+1))

print(np.argmax(y, axis=0)+1)

print(y)

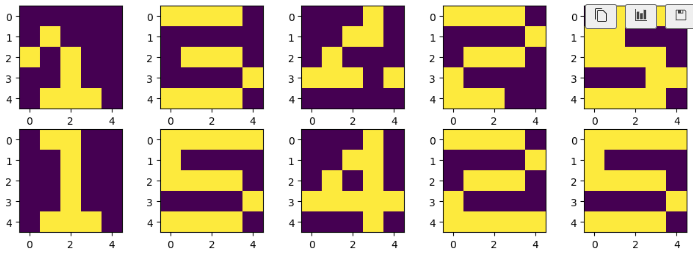

print('------------------------')# 그리기

plt.figure(figsize=(12, 4))

N = 5

for k in range(5):

plt.subplot(2, 5, k+1)

plt.imshow(X_test[:, :, k])

plt.subplot(2, 5, k+6)

plt.imshow(X[:, :, learning_result[k][0]-1])

plt.show()- dropout 생성
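The `Dropout` function defined next builds an *inverted dropout* mask. With drop ratio p, each mask entry mᵢ is 0 for a dropped unit and 1/(1-p) for a kept one. If a fraction 1-p of the units is kept, the expected mask value is 1. The expected activation during training therefore matches the unmasked activation, and the test-time forward pass needs no rescaling:

```latex
\mathbb{E}[m_i] = (1-p)\cdot\frac{1}{1-p} + p\cdot 0 = 1,
\qquad
\mathbb{E}[m_i\, y_i] = y_i
```

The function keeps exactly `round(size*(1-ratio))` units rather than sampling each one independently. For the 20-unit hidden layers with p = 0.2 used here, it keeps 16 units scaled by 1.25, so the mask entries sum to 20 and average exactly 1.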

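```python
# Create the dropout function
def Dropout(y, ratio):
    # Mask that keeps round(size*(1-ratio)) randomly chosen units and zeroes the rest.
    # Kept units are scaled by 1/(1-ratio) (inverted dropout), so the expected
    # activation is unchanged and no rescaling is needed at test time.
    ym = np.zeros_like(y)
    num = round(y.size*(1-ratio))
    idx = np.random.choice(y.size, num, replace=False)
    ym[idx] = 1.0 / (1.0 - ratio)
    return ym
```

```python
y = np.array([0.1, 0.2, 0.5, 0.8, 0.6, 0.4, 0.3, 1, 2, 3, 4])
y.size, y.size*(1-0.8), round(y.size*(1-0.8))  # with ratio 0.8, only 20% of the units are kept
```

```python
num = round(y.size*(1-0.8))
# Randomly pick the indices of the 20% of y that is kept.
# Without replace=False the same index can be drawn twice, which is why Dropout passes it.
np.random.choice(y.size, num)
```

```python
# Prepare the activation function: sigmoid
def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))
```

```python
# Apply dropout
# Forward pass
def calcOutput_Dropout(W1, W2, W3, W4, x):
    v1 = np.matmul(W1, x)
    y1 = sigmoid(v1)
    y1 = y1 * Dropout(y1, 0.2)   # drop 20% of the units (training only)
    v2 = np.matmul(W2, y1)
    y2 = sigmoid(v2)
    y2 = y2 * Dropout(y2, 0.2)
    v3 = np.matmul(W3, y2)
    y3 = sigmoid(v3)
    y3 = y3 * Dropout(y3, 0.2)
    v = np.matmul(W4, y3)
    y = Softmax(v)
    return y, y1, y2, y3, v1, v2, v3
```

```python
# Backpropagation
# Compute the deltas by backpropagation
def backpropagation_Dropout(d, y, y1, y2, y3, W2, W3, W4, v1, v2, v3):
    e = d - y
    delta = e
    e3 = np.matmul(W4.T, delta)
    # Sigmoid derivative written as y*(1-y). Dropped units have y = 0, so their delta is 0.
    # Note: y3 already includes the 1/(1-ratio) scaling, so for kept units this is not
    # exactly the derivative of the masked output, mask * sigmoid(v3) * (1 - sigmoid(v3)).
    delta3 = y3*(1-y3)*e3
    e2 = np.matmul(W3.T, delta3)
    delta2 = y2*(1-y2)*e2
    e1 = np.matmul(W2.T, delta2)
    delta1 = y1*(1-y1)*e1
    return delta, delta1, delta2, delta3
```

```python
# Retrain with dropout applied
def DeepDropout(W1, W2, W3, W4, X, D, alpha=0.01):
    # alpha: learning rate, passed in explicitly; defaults to 0.01 as in the ReLU run
    for k in range(5):
        x = np.reshape(X[:, :, k], (25, 1))
        d = D[k, :].T
        y, y1, y2, y3, v1, v2, v3 = calcOutput_Dropout(W1, W2, W3, W4, x)
        delta, delta1, delta2, delta3 = backpropagation_Dropout(d, y, y1, y2, y3,
                                                                W2, W3, W4, v1, v2, v3)
        W1, W2, W3, W4 = calcWs(alpha, delta, delta1, delta2, delta3,
                                y1, y2, y3, x, W1, W2, W3, W4)
    return W1, W2, W3, W4
```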
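`backpropagation_Dropout` computes the sigmoid derivative as y*(1-y) from activations that are already masked and rescaled. The sketch below keeps the mask separate instead. It assumes the forward pass is h = m ⊙ σ(v) with a fixed mask m, so the derivative is m ⊙ σ(v)(1 - σ(v)), and it checks the resulting weight gradient against finite differences. The one-hidden-layer setup, the sizes, the names `W`, `U`, `m`, `loss`, and the cross-entropy loss are all assumptions for illustration:

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax(v):
    ex = np.exp(v - np.max(v))
    return ex / np.sum(ex)

rng = np.random.default_rng(2)
x = rng.random((25, 1))                   # input column (hypothetical)
W = rng.standard_normal((20, 25)) * 0.1   # hidden-layer weights (hypothetical)
U = rng.standard_normal((5, 20)) * 0.1    # output-layer weights (hypothetical)
d = np.zeros((5, 1))
d[3] = 1.0                                # one-hot target

# Fixed inverted-dropout mask: keep 16 of 20 units, scale kept ones by 1/(1-0.2)
ratio = 0.2
m = np.zeros((20, 1))
m[rng.choice(20, 16, replace=False)] = 1.0 / (1.0 - ratio)

def loss(W):
    # Cross-entropy of the dropout network for the fixed mask m
    h = m * sigmoid(W @ x)
    return -np.sum(d * np.log(softmax(U @ h)))

# Backward pass with the mask kept separate
s = sigmoid(W @ x)
h = m * s
y = softmax(U @ h)
e_h = U.T @ (y - d)                  # gradient w.r.t. h (y - d is the negative of d - y)
delta_exact = m * s * (1 - s) * e_h  # derivative of m * sigmoid(v)
grad_W = delta_exact @ x.T

# Finite-difference check on a few entries of W
eps = 1e-6
for i, j in [(0, 0), (5, 7), (19, 24)]:
    Wp = W.copy()
    Wp[i, j] += eps
    Wm = W.copy()
    Wm[i, j] -= eps
    numeric = (loss(Wp) - loss(Wm)) / (2 * eps)
    print(i, j, grad_W[i, j], numeric)

# Applying y*(1-y) to the masked, rescaled output h gives a different delta for kept units
delta_approx = h * (1 - h) * e_h
print(np.max(np.abs(delta_exact - delta_approx)))
```

To use this inside the notebook, `calcOutput_Dropout` would also need to return its masks so that the backward pass can apply them. That change is not part of the original code.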

# 다시 학습

W1 = 2*np.random.random((20, 25)) -1

W2 = 2*np.random.random((20, 20)) -1

W3 = 2*np.random.random((20, 20)) -1

W4 = 2*np.random.random((5, 20)) -1

for epoch in tqdm_notebook(range(10000)):

W1, W2, W3, W4 = DeepDropout(W1, W2, W3, W4, X, D)# 데이터 검증

def verify_algorithm(x, W1, W2, W3, W4):

v1 = np.matmul(W1, x)

y1 = ReLU(v1)

v2 = np.matmul(W2, y1)

y2 = ReLU(v2)

v3 = np.matmul(W3, y2)

y3 = ReLU(v3)

v = np.matmul(W4, y3)

y = Softmax(v)

return y# 결과 출력

N = 5

for k in range(N):

x = np.reshape(X_test[:,:,k], (25, 1))

y = verify_algorithm(x, W1, W2, W3, W4)

learning_result[k] = np.argmax(y, axis=0)+1

print('Y = {} :'.format(k+1))

print(np.argmax(y, axis=0)+1)

print(y)

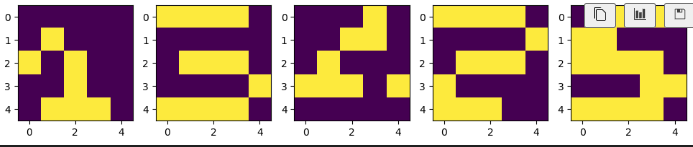

print('------------------------')# 그리기

plt.figure(figsize=(12, 4))

N = 5

for k in range(5):

plt.subplot(2, 5, k+1)

plt.imshow(X_test[:, :, k])

plt.subplot(2, 5, k+6)

plt.imshow(X[:, :, learning_result[k][0]-1])

plt.show()어렵다...ㅠㅠ

💻 Source: 제로베이스 데이터 취업 스쿨 (Zerobase Data Job School)