Images are taken from the linked sources or from AIFFEL course materials.

3-1. Introduction to Google Cloud Platform

Learning objectives

- An introduction to Google Cloud

- Learning BigQuery (a data warehouse tool)

- Hands-on examples (large-scale data processing and real-time processing with BigQuery)

- Vertex AI

Google Cloud Platform (GCP)

- The cloud services offered by Google Cloud

- Offers many services across AI, ML, big data, data analytics, aggregation, and more

Google Cloud Skills Boost

- https://www.cloudskillsboost.google/paths

- A good resource for learning cloud concepts

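Getting started with Google Cloud Platform

After finishing this course, be sure to delete the project you created!

1. Create a Google account (existing GCP users can use their current account)

2. Sign up for GCP (free trial)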

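Getting started with data warehouses

- Data sources ➡️ the data is collected, integrated, loaded, and managed

- An ETL process or pipeline is built to load data into the data warehouse

- Large volumes of data are analyzed and processed with OLAP queries on the warehouse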

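Online Analytical Processing (OLAP)

- Analyzes multidimensional data with complex queries

- Differs from OLTP (Online Transaction Processing), which is designed to support transactions

- Analyzes huge datasets faster and more efficiently

- Well suited to log data analysis

- Most data warehouses and query engines work this way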

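Types of data warehouses

Google BigQuery

- Fully managed data warehouse

- Usage-based billing

- Easy to use, but can be expensive

Databricks

- You can either choose compute capacity or run serverless

- Favorable in terms of cost

- Supports multiple CSPs (cloud service providers) and offers data lake features

- Downside: workloads are relatively hard to adopt

Amazon Redshift

- You choose compute capacity and are billed for the instances allocated

- Cost is a major concern, and resizing instances interrupts the service and restarts it

Snowflake

- You choose compute capacity and are billed for the instances allocated

- Has cost advantages and supports multiple CSPs

- No data lake features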

3-2. Introduction to BigQuery and Querying Data

Enabling BigQuery

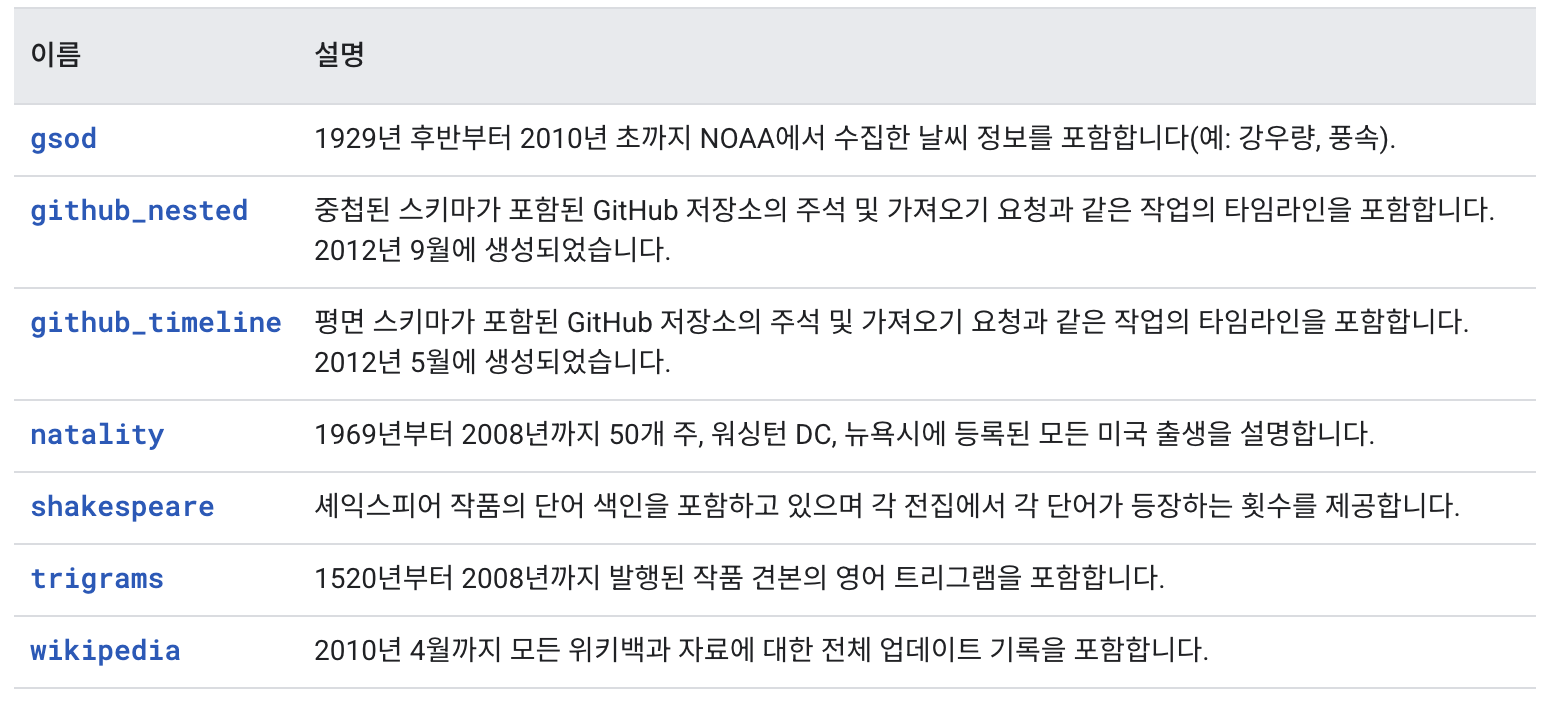

BigQuery public datasets

Practice

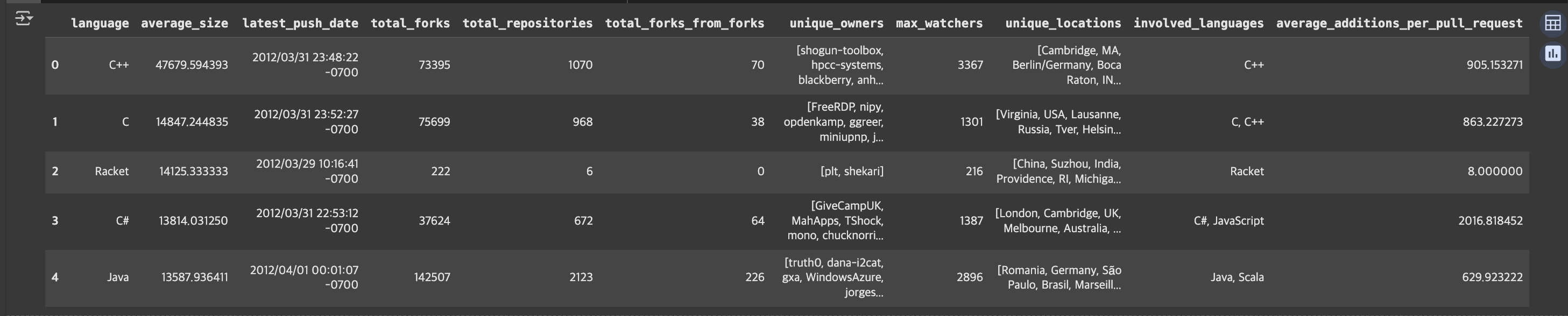

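Querying a dataset

- Connect Colab to BigQuery

```python
from google.cloud import bigquery
from google.colab import auth

# Authenticate this Colab session with your Google account
auth.authenticate_user()

PROJECT_ID = "{your project ID}"  # @param { "type": "string" }
client = bigquery.Client(PROJECT_ID)
```

- Run a query from Colab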
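A sketch of running such a query with the `client` created above. It assumes the public table `bigquery-public-data.usa_names.usa_1910_2013` (a BigQuery public dataset with `state`, `name`, and `number` columns) and binds the state as a named query parameter rather than formatting it into the SQL string. `top_names_sql` and `run_top_names` are hypothetical helper names. With on-demand pricing, queries on public data are billed to your project by bytes scanned.

```python
def top_names_sql(limit: int = 10) -> str:
    """Build a standard-SQL aggregation over a BigQuery public dataset."""
    if limit <= 0:
        raise ValueError("limit must be a positive integer")
    return (
        "SELECT name, SUM(number) AS total\n"
        "FROM `bigquery-public-data.usa_names.usa_1910_2013`\n"
        "WHERE state = @state\n"
        "GROUP BY name\n"
        "ORDER BY total DESC\n"
        f"LIMIT {int(limit)}"
    )


def run_top_names(client, state: str = "TX", limit: int = 10) -> list[tuple[str, int]]:
    """Run the query with `state` bound as a named parameter; return (name, total) pairs."""
    # Imported here so top_names_sql can be used without the BigQuery SDK installed
    from google.cloud import bigquery

    job_config = bigquery.QueryJobConfig(
        query_parameters=[bigquery.ScalarQueryParameter("state", "STRING", state)]
    )
    rows = client.query(top_names_sql(limit), job_config=job_config).result()
    return [(row["name"], row["total"]) for row in rows]


# Usage in Colab, with the authenticated `client` from the previous cell:
# for name, total in run_top_names(client, state="TX", limit=5):
#     print(name, total)
```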

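Data warehouses and data lakes

Data warehouse

- Stores data of well-defined types (from databases, files, logs, etc.) in an orderly way

- Loads data that is already structured to some degree

- Because the characteristics and shape of the data are clear, it can be queried easily with standard SQL

- The downside is that data can only be used once it is reasonably well organized

Data lake

- Data has no fixed shape (binary data goes in as-is, images are copied in unchanged, and so on)

- Data must be processed before it can be used

- Processing is done separately in a programming language rather than with standard SQL

- On the other hand, there is no risk of losing data during ingestion

- For ML, this form is better suited to gathering data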
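This contrast is often described as schema-on-write (warehouse) versus schema-on-read (lake). A minimal plain-Python sketch with a hypothetical three-field schema: the warehouse-style writer validates each record when it is written and rejects anything that does not fit, while the lake-style store keeps raw bytes untouched and only applies structure when the data is read.

```python
import json

# Hypothetical schema: field name -> required Python type
SCHEMA = {"log_id": str, "text": str, "date": int}


def write_to_warehouse(table: list, record: dict) -> bool:
    """Schema-on-write: accept the record only if it matches SCHEMA exactly."""
    if set(record) != set(SCHEMA) or not all(
        isinstance(record[k], t) for k, t in SCHEMA.items()
    ):
        return False  # rejected: this record never reaches the table
    table.append(record)
    return True


def write_to_lake(objects: list, raw: bytes) -> None:
    """Schema-on-read: store the raw payload as-is, whatever it contains."""
    objects.append(raw)


def read_from_lake(objects: list) -> list:
    """Apply structure at read time; payloads that are not valid JSON are skipped."""
    parsed = []
    for raw in objects:
        try:
            parsed.append(json.loads(raw))
        except (json.JSONDecodeError, UnicodeDecodeError):
            continue
    return parsed
```

Note that the record missing `date` is lost in the warehouse but still available in the lake, at the cost of having to handle unparseable payloads (such as image bytes) in code.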

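Data loading approaches: ETL vs ELT

ETL (Extract-Transform-Load)

- Extract data ➡️ transform it to fit the target store's format ➡️ load

- The original data is lost, so it is hard to recover

ELT (Extract-Load-Transform)

- Extract data ➡️ load it into the store ➡️ further process the loaded data

- The original data is kept (and can be reused)

- However, this requires a data lake style store!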
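A toy sketch of the difference, with hypothetical `extract`/`transform` functions and a plain dict standing in for storage. In ETL only the transformed rows are stored, so a question that needs a dropped field cannot be answered later without re-extracting. In ELT the raw rows are stored first, so new transformations can still be run over them afterwards.

```python
def extract() -> list[str]:
    # Hypothetical source: raw CSV-like log lines (date, country, latency in ms)
    return ["2024-01-01,KR,120", "2024-01-01,US,200", "2024-01-02,KR,80"]


def transform(lines: list[str]) -> list[dict]:
    # Keep only the fields the target table needs; the date is dropped here
    out = []
    for line in lines:
        _date, country, ms = line.split(",")
        out.append({"country": country, "ms": int(ms)})
    return out


def run_etl() -> dict:
    # ETL: transform before loading; the raw lines are never stored
    return {"clean": transform(extract())}


def run_elt() -> dict:
    # ELT: load the raw lines first, then transform inside the store
    store = {"raw": extract()}
    store["clean"] = transform(store["raw"])
    return store


def daily_counts(raw: list[str]) -> dict[str, int]:
    # A new question asked later needs the date, which only the raw data still has
    counts: dict[str, int] = {}
    for line in raw:
        date = line.split(",")[0]
        counts[date] = counts.get(date, 0) + 1
    return counts
```

With `run_elt()`, `daily_counts(store["raw"])` can still be computed; with `run_etl()` there is no `"raw"` key to compute it from.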

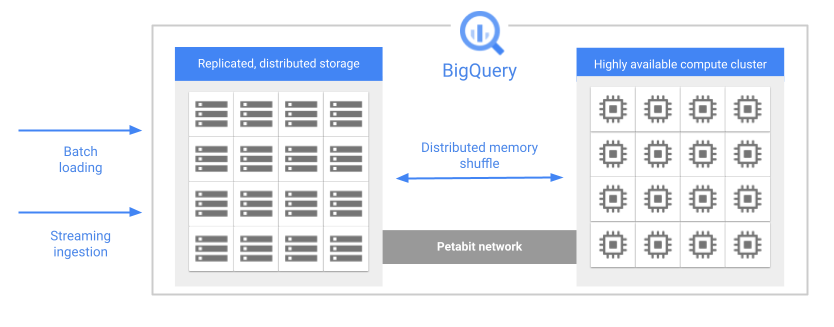

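BigQuery architecture

- https://cloud.google.com/bigquery/docs/storage_overview

- In BigQuery, no compute (CPU) is attached until a request arrives; compute is allocated dynamically when the query runs

- Because it is distributed, it can scale out and in fully

- Suitable not only for ad hoc queries but also for streaming workloads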

Distributed data systems

- Divide and conquer: a large job is split into small units, each unit is processed, and the results are combined again
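The split, process, combine pattern can be sketched on a single machine with Python's `concurrent.futures`: the input is divided into chunks, each chunk is counted independently, and the partial counts are merged. This is only an analogy for how a distributed engine spreads work across many workers, not BigQuery's actual execution code; threads are used here for simplicity, so there is no real CPU speed-up in CPython.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split(items: list[str], n_chunks: int) -> list[list[str]]:
    # Divide: cut the input into at most n_chunks roughly equal pieces
    size = max(1, -(-len(items) // n_chunks))  # ceiling division
    return [items[i : i + size] for i in range(0, len(items), size)]


def count_words(lines: list[str]) -> Counter:
    # Conquer: each worker counts words in its own chunk only
    return Counter(word for line in lines for word in line.lower().split())


def divide_and_conquer_word_count(lines: list[str], workers: int = 4) -> Counter:
    # Combine: merge the partial counts into a single result
    total: Counter = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for partial in pool.map(count_words, split(lines, workers)):
            total.update(partial)
    return total


docs = ["BigQuery scales out", "Spark scales out too", "BigQuery is serverless"]
print(divide_and_conquer_word_count(docs).most_common(3))
```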

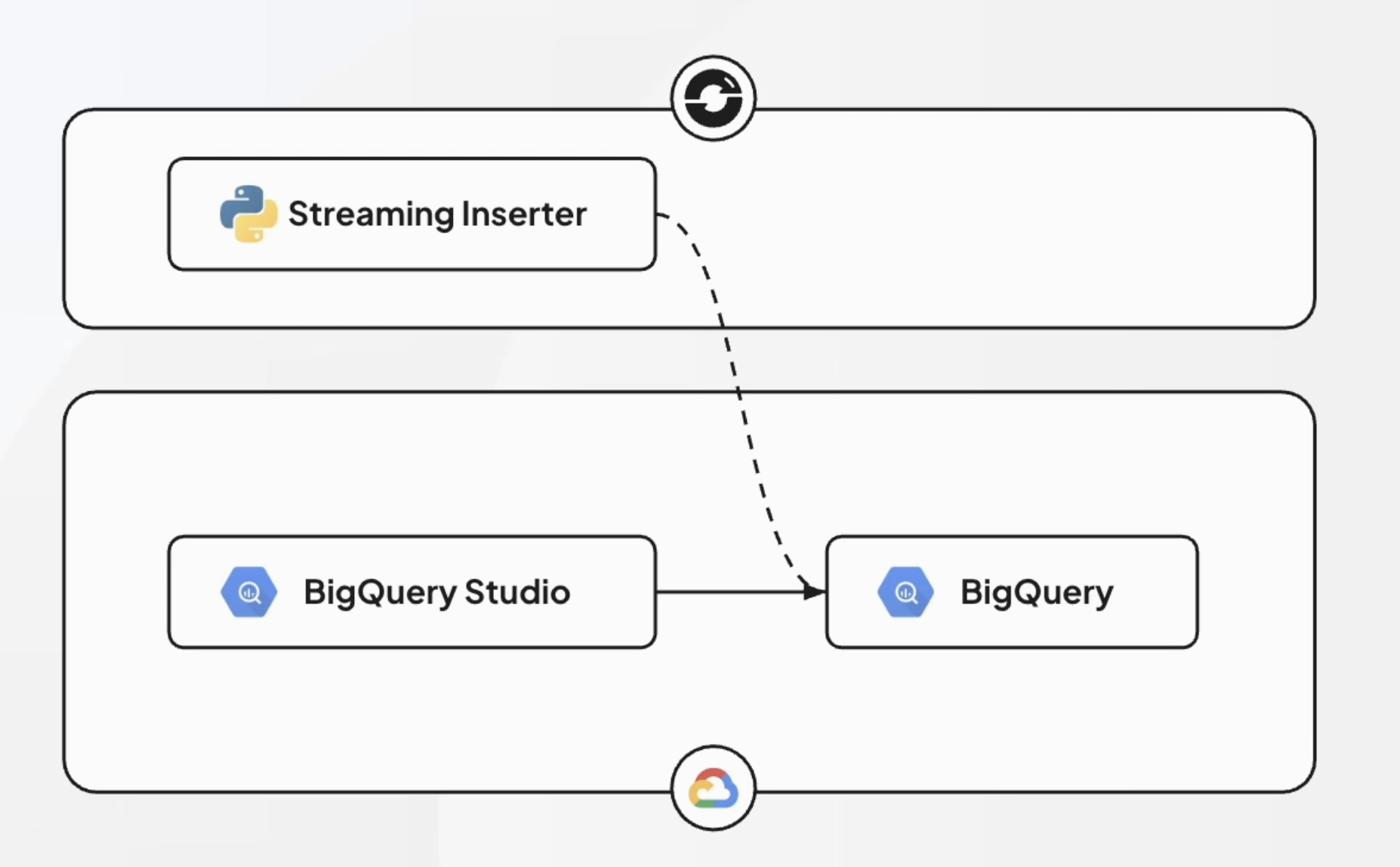

3-3. Real-Time Processing with BigQuery

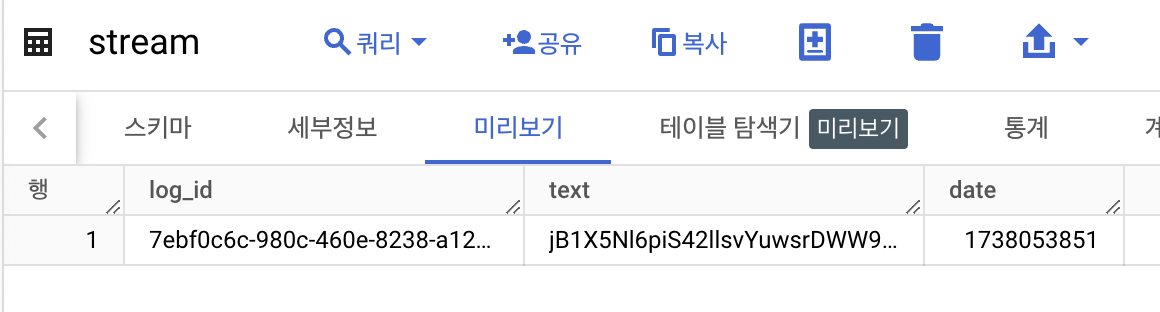

Stream data processing

- A Python script called Streaming Inserter continuously inserts streaming data into BigQuery

Practice

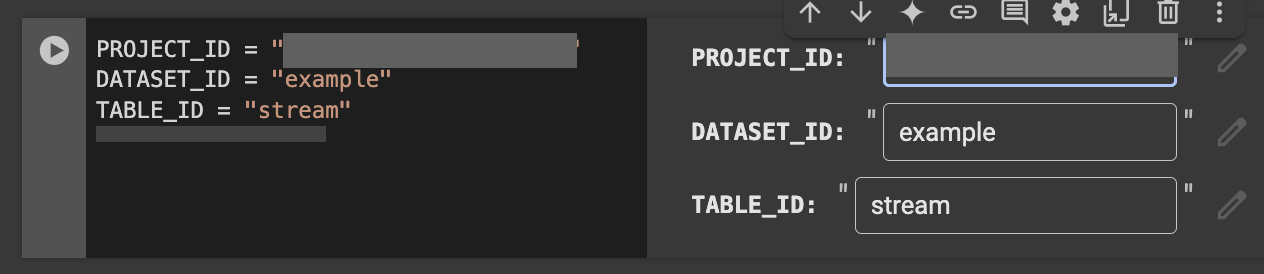

- Enter your own project ID as the Project ID

- Dataset ID and Table ID can be left unchanged; just run the code

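generate_random_text: a function that generates a random string

```python
import random
import string


def generate_random_text(length: int = 10) -> str:
    # Pick `length` characters uniformly from ASCII letters and digits
    letters = string.ascii_letters + string.digits
    return "".join(random.choice(letters) for _ in range(length))
```

- Create the dataset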

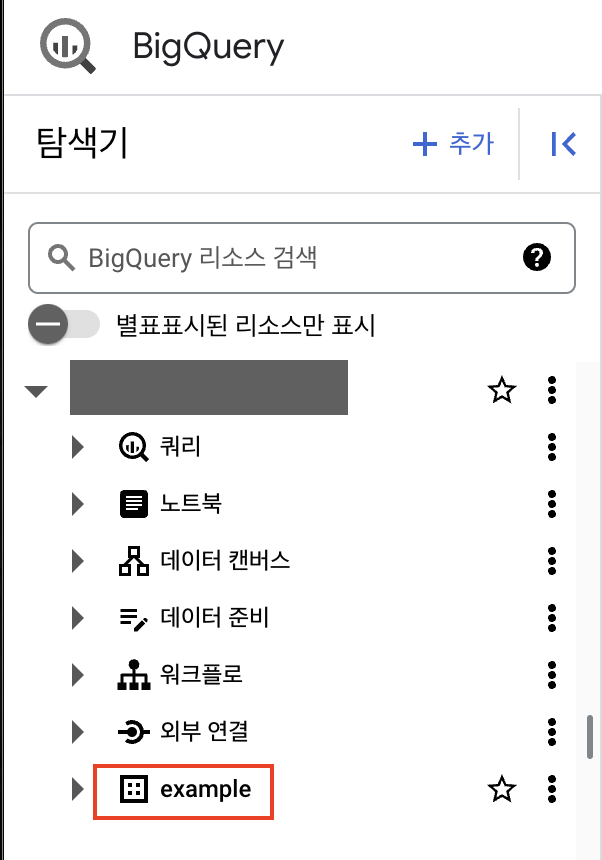

```python
# exists_ok=True: do not raise an error if the dataset already exists
client.create_dataset(DATASET_ID, exists_ok=True)
```

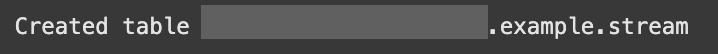

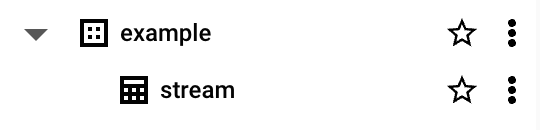

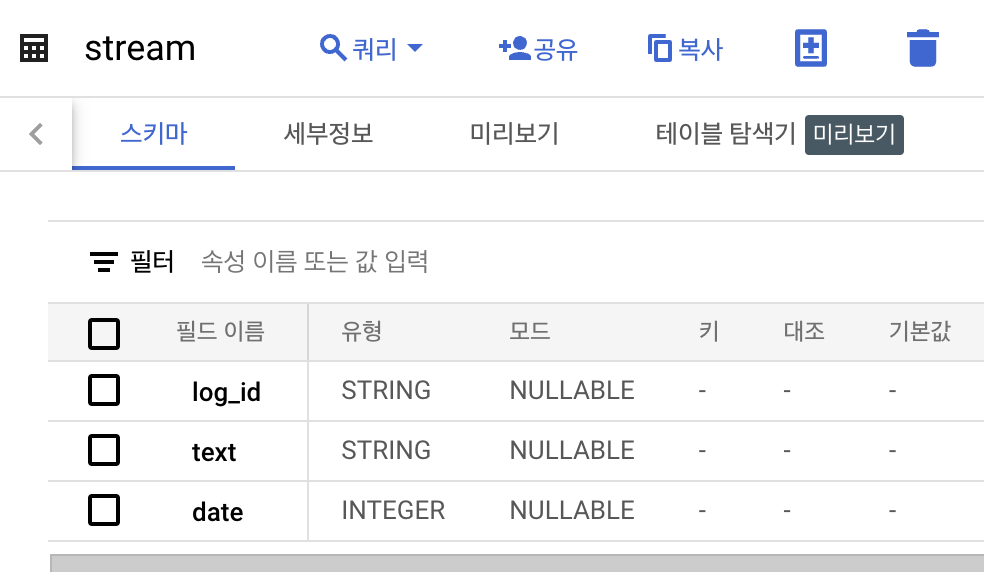

- Create a data table in BigQuery

- BigQuery tables are referenced in the form:

`{PROJECT_ID}.{DATASET_ID}.{TABLE_ID}`

```python
from google.api_core.exceptions import NotFound

full_table_id = f"{PROJECT_ID}.{DATASET_ID}.{TABLE_ID}"

try:
    client.get_table(full_table_id)
    print("Table {} already exists.".format(full_table_id))
except NotFound:
    # Create the table only when it does not exist (a bare except would hide other errors)
    schema = [
        bigquery.SchemaField(name="log_id", field_type="STRING"),
        bigquery.SchemaField(name="text", field_type="STRING"),
        bigquery.SchemaField(name="date", field_type="INTEGER"),  # Unix timestamp in seconds
    ]
    table = bigquery.Table(full_table_id, schema=schema)
    table = client.create_table(table)
    print("Created table {}.{}.{}".format(table.project, table.dataset_id, table.table_id))
```

- insert_new_line(): a function that inserts a row of random data

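```python
import time
import uuid


def insert_new_line() -> None:
    # One row: a random UUID, 50 random characters, and the current Unix time
    rows_to_insert = [
        {
            "log_id": str(uuid.uuid4()),
            "text": generate_random_text(50),
            "date": int(time.time()),
        },
    ]
    # Streaming insert; returns a list of per-row errors (empty on success)
    errors = client.insert_rows_json(full_table_id, rows_to_insert)
    if errors == []:
        print("New rows have been added.")
    else:
        print("Encountered errors while inserting rows: {}".format(errors))


insert_new_line()
```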

- 반복

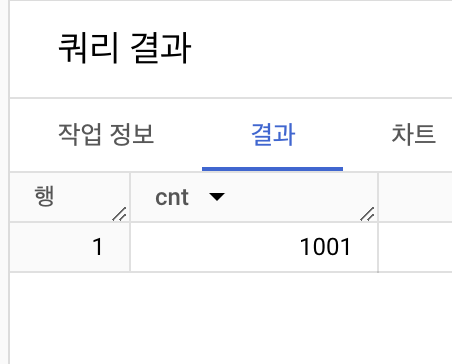

for _ in range(1000):

insert_new_line()

This shows that BigQuery handles repeated processing of string data very cost-efficiently.
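Calling `insert_new_line()` in a loop sends one API request per row. Since `client.insert_rows_json` accepts a list of rows, the same data can be streamed in a few requests. A sketch, assuming the `client`, `full_table_id`, and `generate_random_text` defined above; `make_rows` and `insert_in_batches` are hypothetical helper names, and the batch size of 500 is an assumed, conservative value (check the current streaming-insert quotas before tuning it).

```python
import time
import uuid
from typing import Callable


def make_rows(n: int, text_fn: Callable[[int], str]) -> list[dict]:
    # Build n rows matching the table schema (log_id STRING, text STRING, date INTEGER)
    now = int(time.time())
    return [{"log_id": str(uuid.uuid4()), "text": text_fn(50), "date": now} for _ in range(n)]


def insert_in_batches(client, table_id: str, rows: list[dict], batch_size: int = 500) -> list:
    # One insert_rows_json call per chunk instead of one per row.
    # Error "index" values returned by BigQuery are relative to each chunk.
    all_errors = []
    for start in range(0, len(rows), batch_size):
        errors = client.insert_rows_json(table_id, rows[start : start + batch_size])
        all_errors.extend(errors)
    return all_errors


# Usage, with the objects defined earlier in the notebook:
# errors = insert_in_batches(client, full_table_id, make_rows(1000, generate_random_text))
# print("OK" if not errors else errors)
```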

Uses of stream processing

- Log data generated while serving a model ➡️ loaded into BigQuery ➡️ continuous-training flywheel (to prevent model drift)

3-4. Introducing Model Training and Serving

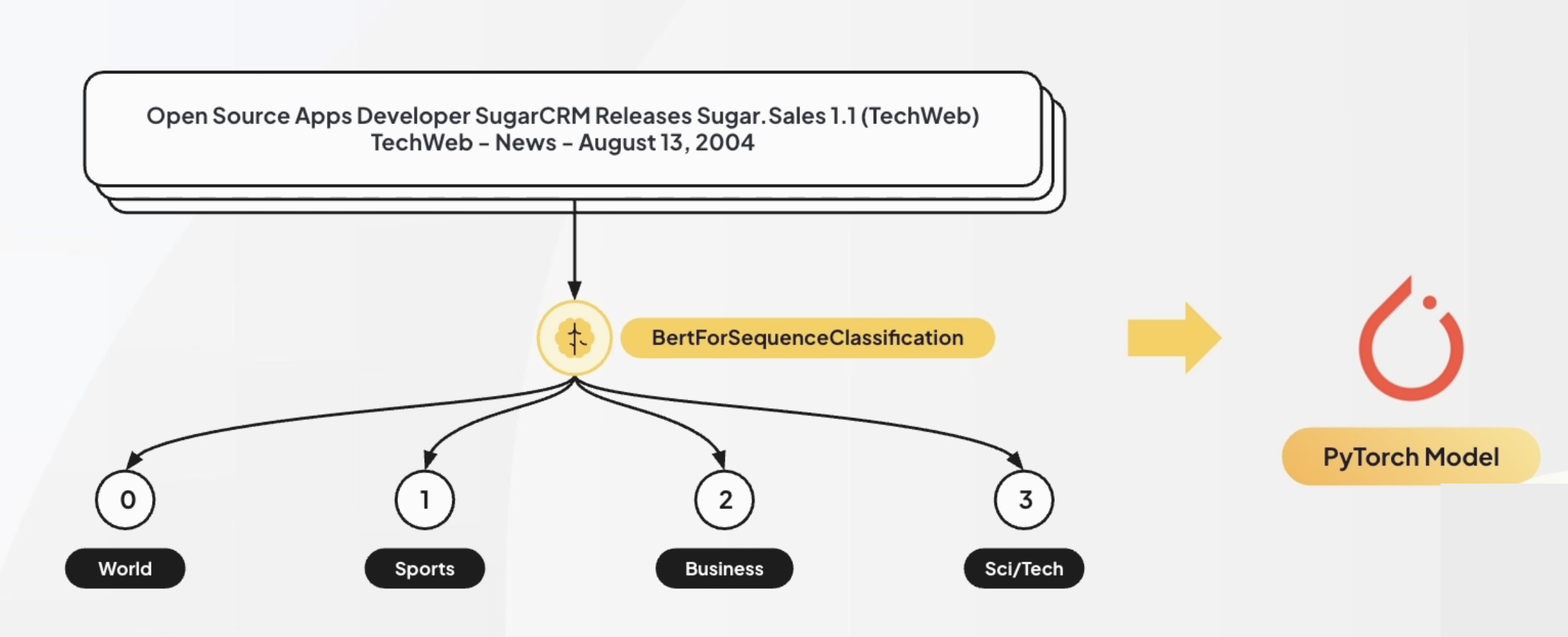

Practice: building a category classification model

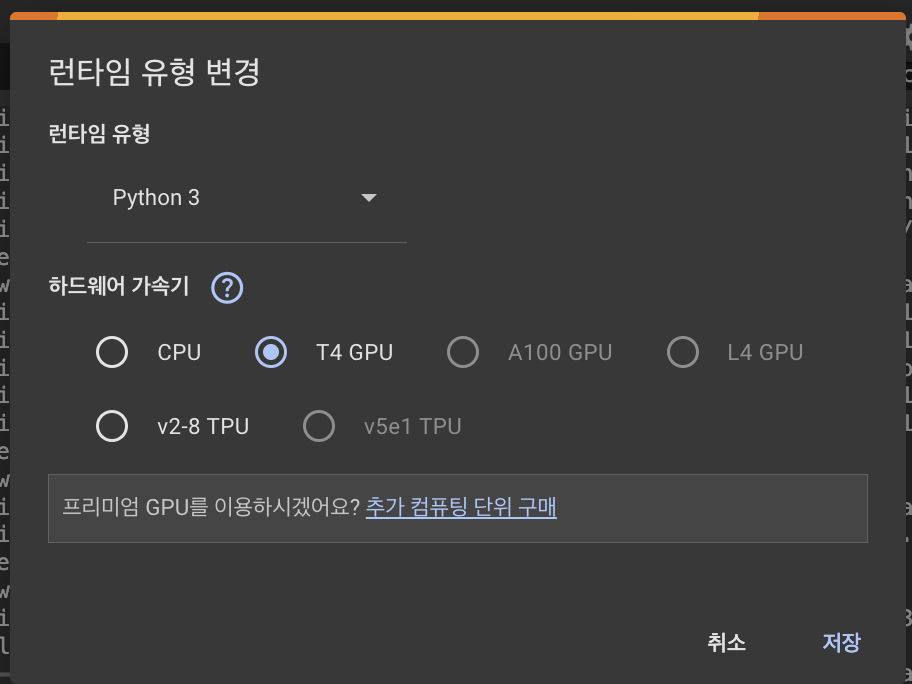

Runtime: switch to a T4 GPU

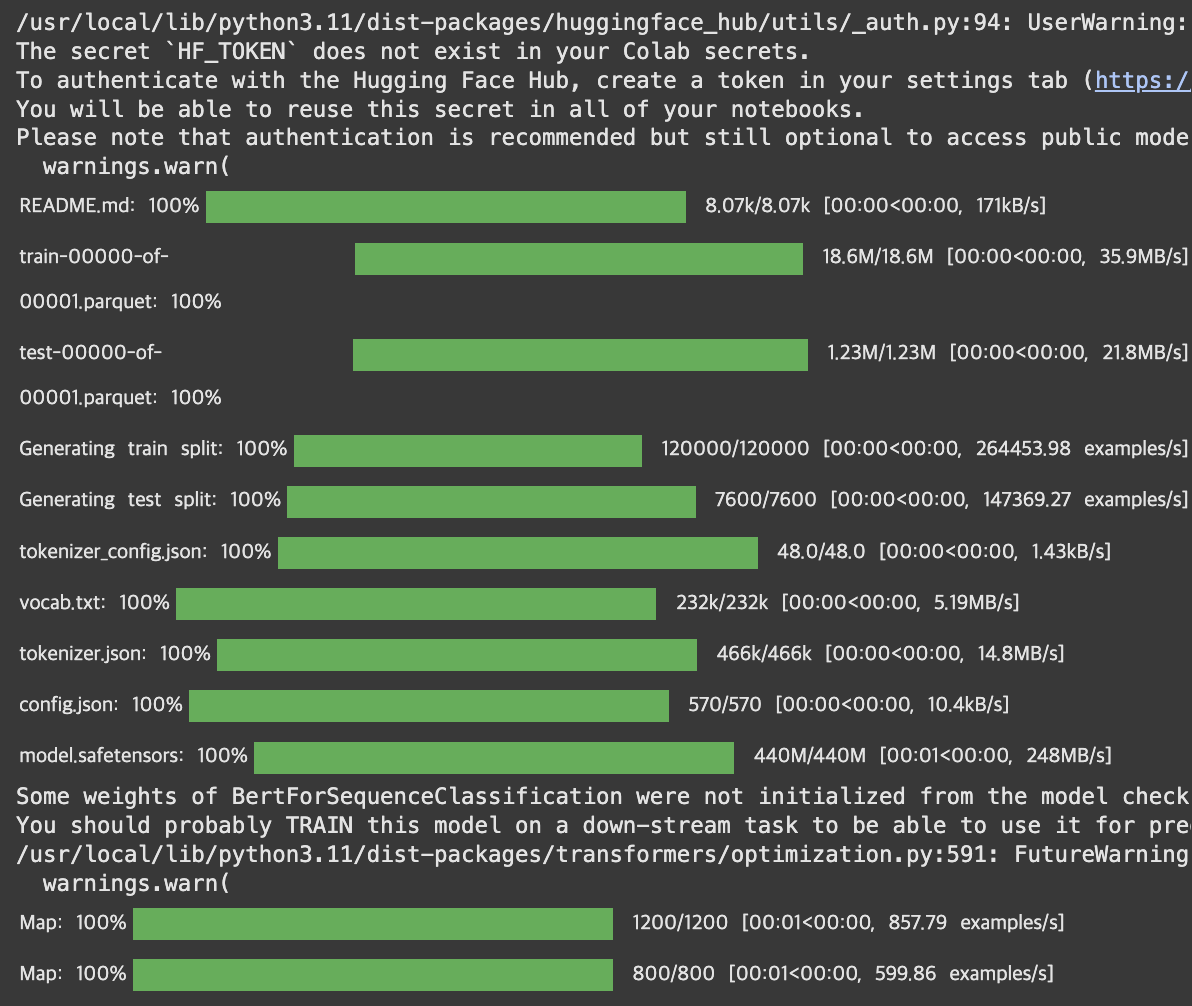

Use the ag_news dataset from Hugging Face

```python
import torch
import torch.nn.functional as F
import matplotlib.pyplot as plt
import seaborn as sns
from torch.optim import AdamW  # transformers.AdamW is deprecated; PyTorch's AdamW replaces it
from torch.utils.data import DataLoader
from transformers import BatchEncoding, BertTokenizer, BertForSequenceClassification
from sklearn.metrics import confusion_matrix
from datasets import load_dataset
from tqdm import tqdm
from typing import TypedDict
```

- Load the dataset, use the BERT tokenizer, and load the pretrained model

```python
dataset = load_dataset("ag_news")
tokenizer = BertTokenizer.from_pretrained("bert-base-uncased")
# 4 output classes: World, Sports, Business, Sci/Tech
model = BertForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=4)
optimizer = AdamW(model.parameters(), lr=5e-5)
criterion = torch.nn.CrossEntropyLoss()  # unused below: the model returns the loss when labels are passed


class DatasetItem(TypedDict):
    # With batched=True, map() passes a whole batch, so each field holds a list
    text: list[str]
    label: list[int]


def preprocess_data(dataset_item: DatasetItem) -> BatchEncoding:
    # Truncate/pad every example to the tokenizer's max length (512 for BERT)
    return tokenizer(dataset_item["text"], truncation=True, padding="max_length", return_tensors="pt")


# Small subsets keep the exercise fast: 1,200 training and 800 test examples
train_dataset = dataset["train"].select(range(1200)).map(preprocess_data, batched=True)
test_dataset = dataset["test"].select(range(800)).map(preprocess_data, batched=True)

train_dataset.set_format("torch", columns=["input_ids", "attention_mask", "label"])
test_dataset.set_format("torch", columns=["input_ids", "attention_mask", "label"])

train_loader = DataLoader(train_dataset, batch_size=8, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=8, shuffle=False)
```

Use the CUDA GPU

```python
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model.to(device)
```

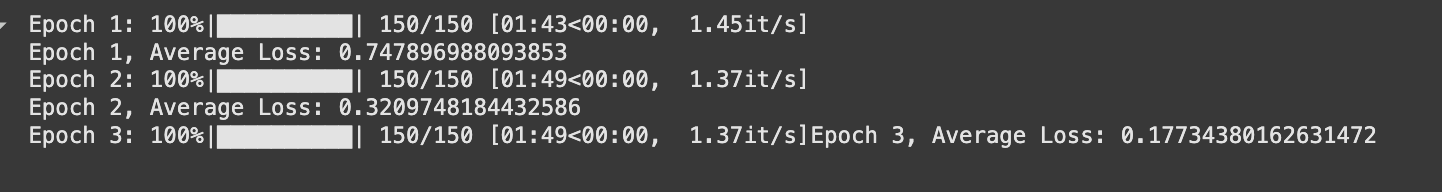

```python
num_epochs = 3
losses: list[float] = []  # per-step training loss, used for the plot

for epoch in range(num_epochs):
    model.train()
    total_loss = 0

    for batch in tqdm(train_loader, desc=f"Epoch {epoch + 1}"):
        # Move input_ids, attention_mask, and label to the device
        inputs = {key: batch[key].to(device) for key in batch}
        labels = inputs.pop("label")

        # Passing labels makes the model also return the cross-entropy loss
        outputs = model(**inputs, labels=labels)
        loss = outputs.loss
        total_loss += loss.item()
        losses.append(loss.item())

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    average_loss = total_loss / len(train_loader)
    print(f"Epoch {epoch + 1}, Average Loss: {average_loss}")
```

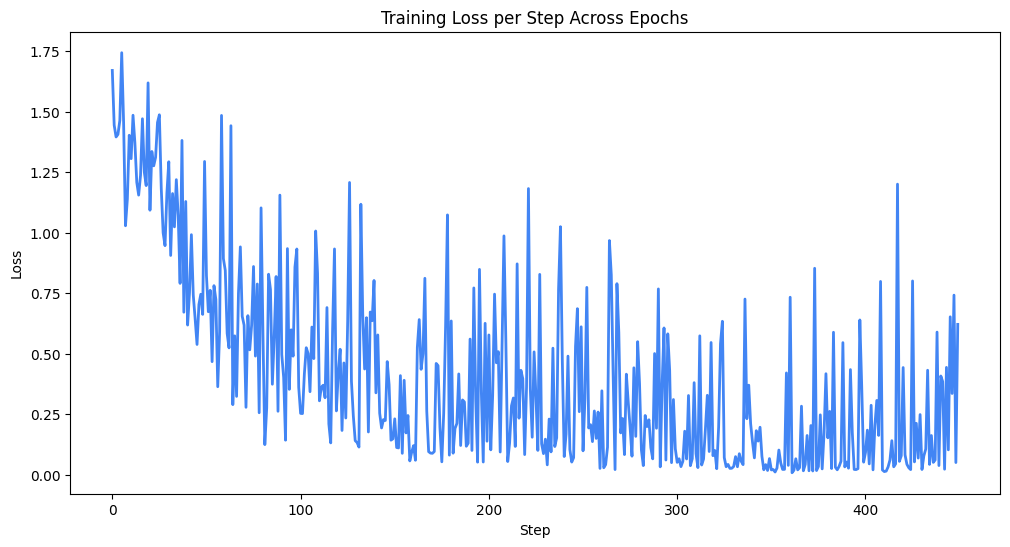

Checking training metrics

- The loss tends to decrease

```python
plt.figure(figsize=(12, 6))
plt.plot(losses, color="#4285f4", linewidth=2)
plt.xlabel("Step")
plt.ylabel("Loss")
plt.title("Training Loss per Step Across Epochs")
plt.show()
```
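With `batch_size=8` the per-step loss is noisy, so the downward trend is easier to see with a moving average. A minimal NumPy sketch; `moving_average` is a hypothetical helper, the 20-step window is an arbitrary choice, and `losses` is the list filled in the training loop.

```python
import numpy as np


def moving_average(values: list[float], window: int = 20) -> np.ndarray:
    """Simple moving average; returns len(values) - window + 1 points."""
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    kernel = np.ones(window) / window
    return np.convolve(np.asarray(values, dtype=float), kernel, mode="valid")


# Usage after training, overlaying the smoothed curve on the raw one:
# smooth = moving_average(losses, window=20)
# plt.plot(losses, alpha=0.3)
# plt.plot(range(19, len(losses)), smooth)  # each point aligned to the last step of its window
# plt.show()
```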

Model evaluation

- Uses the test data

- Accuracy: about 87%

```python
model.eval()
correct = 0
total = 0

with torch.no_grad():
    for batch in tqdm(test_loader, desc="Evaluating"):
        inputs = {key: batch[key].to(device) for key in batch}
        labels = inputs.pop("label")
        outputs = model(**inputs, labels=labels)
        logits = outputs.logits
        # The predicted class is the one with the highest logit
        predicted_labels = torch.argmax(logits, dim=1)
        correct += (predicted_labels == labels).sum().item()
        total += labels.size(0)

accuracy = correct / total
print("")
print(f"Test Accuracy: {accuracy * 100:.2f}%")
```

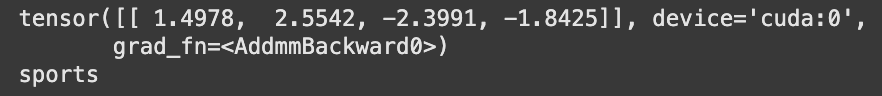

Checking a prediction on real input

```python
test_input = "[Official] 'Legendary Coach Resigns → Appoints New Commander' Suwon Completes Coaching Staff... Scout Bae Ki-jong Joins + Coach Shin Hwa-yong Remains"
test_input_processed = tokenizer(test_input, truncation=True, padding="max_length", return_tensors="pt").to(device)

with torch.no_grad():
    logits = model(**test_input_processed).logits
print(logits)

predicted_labels = torch.argmax(logits, dim=1)
# ag_news label order: 0 = World, 1 = Sports, 2 = Business, 3 = Sci/Tech
labeling_mapper = ["world", "sports", "business", "sci/tech"]
print(labeling_mapper[predicted_labels[0].item()])
```

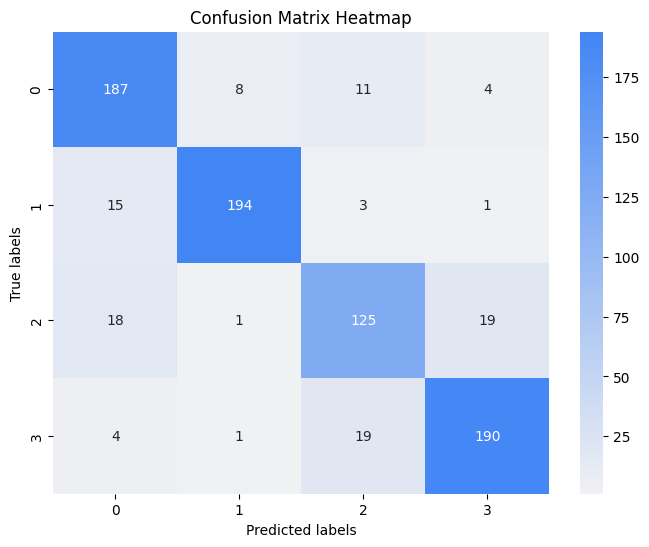

Confusion matrix

```python
all_predictions: list[int] = []
all_labels: list[int] = []

with torch.no_grad():
    for batch in tqdm(test_loader, desc="Evaluating"):
        inputs = {key: batch[key].to(device) for key in batch}
        labels = inputs.pop("label")
        outputs = model(**inputs)
        logits = outputs.logits
        predicted_labels = torch.argmax(logits, dim=1)
        # Move results back to the CPU and collect them as plain Python ints
        all_predictions.extend(predicted_labels.cpu().tolist())
        all_labels.extend(labels.cpu().tolist())
```

- Labels 0, 1, and 3 are predicted well

```python
# Rows are true labels, columns are predicted labels
conf_matrix = confusion_matrix(all_labels, all_predictions)

plt.figure(figsize=(8, 6))
sns.heatmap(conf_matrix, annot=True, fmt="g", cmap=sns.light_palette("#4285f4", as_cmap=True))
plt.xlabel("Predicted labels")
plt.ylabel("True labels")
plt.title("Confusion Matrix Heatmap")
plt.show()
```
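To back the reading "labels 0, 1, and 3 are predicted well" with numbers, per-class recall (diagonal divided by row sum) and precision (diagonal divided by column sum) can be read directly off `conf_matrix`, since scikit-learn's `confusion_matrix` puts true labels on rows and predictions on columns. A small NumPy sketch; `per_class_metrics` is a hypothetical helper.

```python
import numpy as np

LABELS = ["world", "sports", "business", "sci/tech"]  # ag_news label order


def per_class_metrics(cm: np.ndarray) -> dict[str, dict[str, float]]:
    """Recall and precision per class from a confusion matrix (rows = true, cols = predicted)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    row_sums = cm.sum(axis=1)  # true examples per class
    col_sums = cm.sum(axis=0)  # predictions per class
    # Avoid division by zero for classes with no examples or no predictions
    recall = np.divide(tp, row_sums, out=np.zeros_like(tp), where=row_sums > 0)
    precision = np.divide(tp, col_sums, out=np.zeros_like(tp), where=col_sums > 0)
    return {
        name: {"recall": float(r), "precision": float(p)}
        for name, r, p in zip(LABELS, recall, precision)
    }


# Usage with the matrix computed above:
# for name, m in per_class_metrics(conf_matrix).items():
#     print(f"{name:>9}: recall={m['recall']:.2f} precision={m['precision']:.2f}")
```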

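Model serving

PyTorch model ➡️ local model artifact ➡️ TorchServe

- Save the model checkpoint

```python
model_save_path = "bert_news_classification_model.pth"
# Save only the weights (state_dict); the handler rebuilds the architecture and loads them
torch.save(model.state_dict(), model_save_path)
```

- Define a handler that processes requests for the model

Defined by subclassing BaseHandler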

```python
%%writefile model_handler.py
import json
import os

import torch
from ts.context import Context
from ts.torch_handler.base_handler import BaseHandler
from transformers import BatchEncoding, BertTokenizer, BertForSequenceClassification


class ModelHandler(BaseHandler):
    def __init__(self):
        super().__init__()
        self.initialized = False
        self.tokenizer = None
        self.model = None

    def initialize(self, context: Context):
        # TorchServe unpacks the .mar archive into model_dir, so load the checkpoint from there
        model_dir = context.system_properties.get("model_dir")
        self.device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

        self.tokenizer = BertTokenizer.from_pretrained("bert-base-uncased")
        self.model = BertForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=4)
        checkpoint = os.path.join(model_dir, "bert_news_classification_model.pth")
        self.model.load_state_dict(torch.load(checkpoint, map_location=self.device))
        self.model.to(self.device)
        self.model.eval()
        self.initialized = True

    def preprocess(self, data: list[dict[str, bytearray]]) -> BatchEncoding:
        # Each request body is JSON like {"data": ["article text", ...]}; collect all texts
        model_input_texts: list[str] = []
        for item in data:
            body = item.get("body") or item.get("data")
            if isinstance(body, (bytes, bytearray)):
                body = json.loads(body.decode("utf-8"))
            model_input_texts.extend(body["data"])
        inputs = self.tokenizer(model_input_texts, truncation=True, padding=True, max_length=512, return_tensors="pt")
        return inputs.to(self.device)

    def inference(self, input_batch: BatchEncoding) -> torch.Tensor:
        with torch.no_grad():
            outputs = self.model(**input_batch)
        return outputs.logits

    def postprocess(self, inference_output: torch.Tensor) -> list[dict[str, float]]:
        # Convert logits to probabilities; return the top class and its probability per text
        probabilities = torch.nn.functional.softmax(inference_output, dim=1)
        return [{"label": int(torch.argmax(prob)), "probability": float(prob.max())} for prob in probabilities]
```

- TorchServe server settings (inference, management, and metrics ports)

```
%%writefile config.properties
inference_address=http://0.0.0.0:5000
management_address=http://0.0.0.0:5001
metrics_address=http://0.0.0.0:5002
```

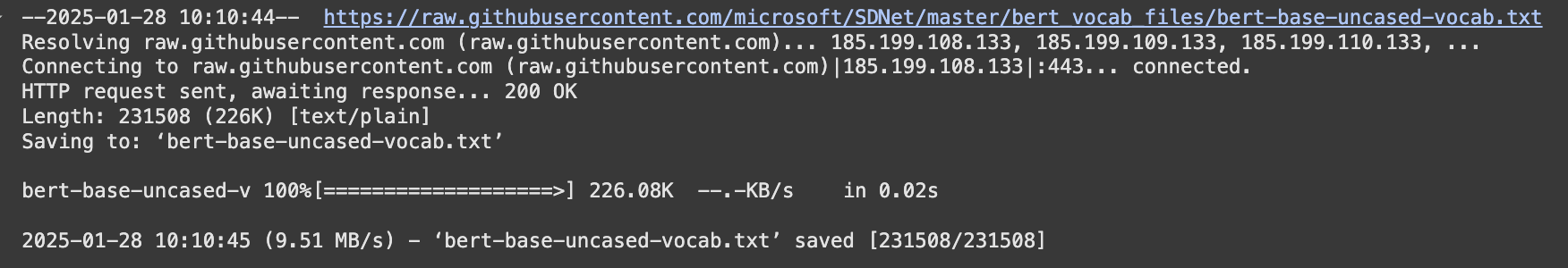

- Download the file

```
# BERT vocab file (artifact)
!wget https://raw.githubusercontent.com/microsoft/SDNet/master/bert_vocab_files/bert-base-uncased-vocab.txt \
    -O bert-base-uncased-vocab.txt
```

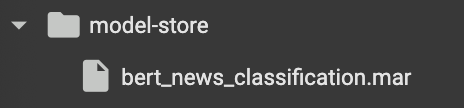

- Package the model

```
!mkdir -p model-store
!torch-model-archiver \
    --model-name bert_news_classification \
    --version 1.0 \
    --serialized-file bert_news_classification_model.pth \
    --handler ./model_handler.py \
    --extra-files "bert-base-uncased-vocab.txt" \
    --export-path model-store \
    -f
```

- Serve the model (in the background)

```
%%script bash --bg
PYTHONPATH=/usr/lib/python3.10 torchserve \
    --start \
    --ncs \
    --ts-config config.properties \
    --model-store model-store \
    --models bert_news_classification=bert_news_classification.mar \
    --disable-token-auth
```

```
!curl -X GET localhost:5000/ping
```

```
{
  "status": "Healthy"
}
```

- Create files with external news articles for evaluating the model

%%shell

# 모델 실제 평가를 위해 외부 뉴스 기사 데이터 셋 파일 생성.

cat > request_sports.json <<EOF

{

"data": [

"Bleary-eyed from 16 hours on a Greyhound bus, he strolled into the stadium running on fumes. He’d barely slept in two days. The ride he was supposed to hitch from Charlotte to Indianapolis canceled at the last minute, and for a few nervy hours, Antonio Barnes started to have his doubts. The trip he’d waited 40 years for looked like it wasn’t going to happen.ADVERTISEMENTBut as he moved through the concourse at Lucas Oil Stadium an hour before the Colts faced the Raiders, it started to sink in. His pace quickened. His eyes widened. His voice picked up.“I got chills right now,” he said. “Chills.”Barnes, 57, is a lifer, a Colts fan since the Baltimore days. He wore No. 25 on his pee wee football team because that’s the number Nesby Glasgow wore on Sundays. He was a talent in his own right, too: one of his old coaches nicknamed him “Bird” because of his speed with the ball.Back then, he’d catch the city bus to Memorial Stadium, buy a bleacher ticket for $5 and watch Glasgow and Bert Jones, Curtis Dickey and Glenn Doughty. When he didn’t have any money, he’d find a hole in the fence and sneak in. After the game was over, he’d weasel his way onto the field and try to meet the players. “They were tall as trees,” he remembers.He remembers the last game he went to: Sept. 25, 1983, an overtime win over the Bears. Six months later the Colts would ditch Baltimore in the middle of the night, a sucker-punch some in the city never got over. But Barnes couldn’t quit them. When his entire family became Ravens fans, he refused. “The Colts are all I know,” he says.For years, when he couldn’t watch the games, he’d try the radio. And when that didn’t work, he’d follow the scroll at the bottom of a screen.“There were so many nights I’d just sit there in my cell, picturing what it’d be like to go to another game,” he says. 
“But you’re left with that thought that keeps running through your mind: I’m never getting out.”It’s hard to dream when you’re serving a life sentence for conspiracy to commit murder.It started with a handoff, a low-level dealer named Mickey Poole telling him to tuck a Ziploc full of heroin into his pocket and hide behind the Murphy towers. This was how young drug runners were groomed in Baltimore in the late 1970s. This was Barnes’ way in.ADVERTISEMENTHe was 12.Back then he idolized the Mickey Pooles of the world, the older kids who drove the shiny cars, wore the flashy jewelry, had the girls on their arms and made any working stiff punching a clock from 9 to 5 look like a fool. They owned the streets. Barnes wanted to own them, too.“In our world,” says his nephew Demon Brown, “the only successful people we saw were selling drugs and carrying guns.”So whenever Mickey would signal for a vial or two, Barnes would hurry over from his hiding spot with that Ziploc bag, out of breath because he’d been running so hard."

]

}

EOF

cat > request_business.json <<EOF

{

"data": [

"DETROIT – America maintained its love affair with pickup trucks in 2023 — but a top-selling vehicle from Toyota Motor nearly ruined their tailgate party.Sales of the Toyota RAV4 compact crossover came within 10,000 units of Stellantis’ Ram pickup truck last year, a near-No. 3 ranking that would have marked the first time since 2014 that a non-pickup claimed one of the top three U.S. sales podium positions.The RAV4 has rapidly closed the gap: In 2020, the vehicle undersold the Ram truck by more than 133,000 units. Last year, it lagged by just 9,983. Stellantis sold 444,926 Ram pickups last year, a 5% decline from 2022.“Trucks are always at the top because they’re bought by not only individuals, but also fleet buyers and we saw heavy fleet buying last year,” said Michelle Krebs, an executive analyst at Cox Automotive. “The RAV4 shows that people want affordable, smaller SUVs, and the fact that there’s also a hybrid version of that makes it popular with people.”"

]

}

EOF

cat > request_sci_tech.json <<EOF

{

"data": [

"OpenVoice comprises two AI models working together for text-to-speech conversion and voice tone cloning.The first model handles language style, accents, emotion, and other speech patterns. It was trained on 30,000 audio samples with varying emotions from English, Chinese, and Japanese speakers. The second “tone converter” model learned from over 300,000 samples encompassing 20,000 voices.By combining the universal speech model with a user-provided voice sample, OpenVoice can clone voices with very little data. This helps it generate cloned speech significantly faster than alternatives like Meta’s Voicebox.Californian startup OpenVoice comes from California-based startup MyShell, founded in 2023. With $5.6 million in early funding and over 400,000 users already, MyShell bills itself as a decentralised platform for creating and discovering AI apps. In addition to pioneering instant voice cloning, MyShell offers original text-based chatbot personalities, meme generators, user-created text RPGs, and more. Some content is locked behind a subscription fee. The company also charges bot creators to promote their bots on its platform.By open-sourcing its voice cloning capabilities through HuggingFace while monetising its broader app ecosystem, MyShell stands to increase users across both while advancing an open model of AI development."

]

}

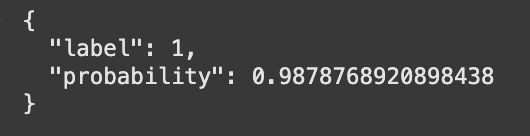

EOF- 스포츠 기사

```
# Evaluate the sports article (expected label: 1)
!curl -X POST \
    -H "Accept: application/json" \
    -T "request_sports.json" \
    http://localhost:5000/predictions/bert_news_classification
```

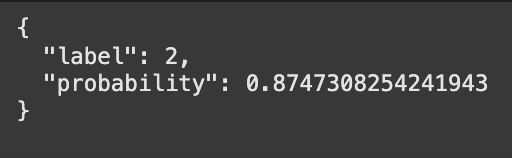

- Business article

```
# Evaluate the business article (expected label: 2)
!curl -X POST \
    -H "Accept: application/json" \
    -T "request_business.json" \
    http://localhost:5000/predictions/bert_news_classification
```

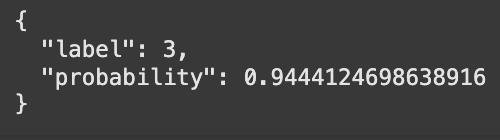

- Tech article

```
# Evaluate the tech article (expected label: 3)
!curl -X POST \
    -H "Accept: application/json" \
    -T "request_sci_tech.json" \
    http://localhost:5000/predictions/bert_news_classification
```
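The same request can also be sent from Python instead of `curl`. A sketch using only the standard library (`urllib.request`), assuming the server is listening on `localhost:5000` and the handler parses a JSON body of the form `{"data": [...]}`; `build_request` and `predict` are hypothetical helper names. The Content-Type is set explicitly because `urllib` would otherwise default to form encoding, which TorchServe may parse as form fields instead of passing the raw body to the handler (an assumption based on its request handling; `curl -T` sends the file as a raw body).

```python
import json
import urllib.request

LABEL_NAMES = ["world", "sports", "business", "sci/tech"]


def build_request(texts: list[str], host: str = "http://localhost:5000",
                  model: str = "bert_news_classification") -> urllib.request.Request:
    """Build a POST request whose body matches what the handler's preprocess() parses."""
    body = json.dumps({"data": texts}).encode("utf-8")
    return urllib.request.Request(
        f"{host}/predictions/{model}",
        data=body,
        headers={"Accept": "application/json", "Content-Type": "application/octet-stream"},
        method="POST",
    )


def predict(text: str, **kwargs) -> tuple[str, float]:
    """Send one article and return (label name, probability) from the handler's output."""
    with urllib.request.urlopen(build_request([text], **kwargs), timeout=30) as resp:
        result = json.loads(resp.read().decode("utf-8"))
    return LABEL_NAMES[result["label"]], result["probability"]


# Usage while TorchServe is running:
# with open("request_business.json", encoding="utf-8") as f:
#     article = json.load(f)["data"][0]
# print(predict(article))
```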

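Exposing the server externally with ngrok (optional)

```
!pip install pyngrok
```

- Use an auth token actually issued from your ngrok account

```python
from pyngrok import ngrok

ngrok.set_auth_token("{Token}")
# Open a public tunnel to the local inference port (5000)
inference_tunnel = ngrok.connect("5000")
inference_tunnel
```

```
# The public URL changes on every run; IPython fills in {inference_tunnel.public_url}
!curl -X POST \
    -H "Accept: application/json" \
    -T "request_sci_tech.json" \
    {inference_tunnel.public_url}/predictions/bert_news_classification
```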

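3-5. Introduction to Vertex AI

Problems with the approach so far

- Parameter values are hard-coded

- There is no way to know which parameter values are optimal

- Every time the data changes, the whole notebook has to be re-run

- The model is saved locally

- Without proper version control, it becomes hard to tell what changed

- Information about the environment setup is missing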
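As a small illustration of the first and last problems (hard-coded parameters and missing environment information), the notebook's hyperparameters can be collected in one config object that is parsed from the command line and saved next to the model along with basic environment details. This is a generic Python sketch, not a Vertex AI API; `TrainConfig`, `parse_config`, and `save_run_metadata` are hypothetical names.

```python
import argparse
import json
import platform
import sys
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class TrainConfig:
    # Defaults mirror the values hard-coded in the notebook above
    model_name: str = "bert-base-uncased"
    lr: float = 5e-5
    batch_size: int = 8
    num_epochs: int = 3
    train_size: int = 1200


def parse_config(argv: Optional[list[str]] = None) -> TrainConfig:
    """Override any default from the command line, e.g. --lr 3e-5 --num_epochs 5."""
    parser = argparse.ArgumentParser()
    for name, default in asdict(TrainConfig()).items():
        parser.add_argument(f"--{name}", type=type(default), default=default)
    return TrainConfig(**vars(parser.parse_args(argv)))


def save_run_metadata(config: TrainConfig, path: str) -> dict:
    """Write the config plus basic environment info so a saved model can be traced back."""
    meta = {
        "config": asdict(config),
        "python": sys.version.split()[0],
        "platform": platform.platform(),
    }
    with open(path, "w", encoding="utf-8") as f:
        json.dump(meta, f, indent=2)
    return meta
```

Managed platforms such as Vertex AI address the same problems at a larger scale (tracked experiments, model registry, reproducible pipelines), which is the motivation for the next section.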

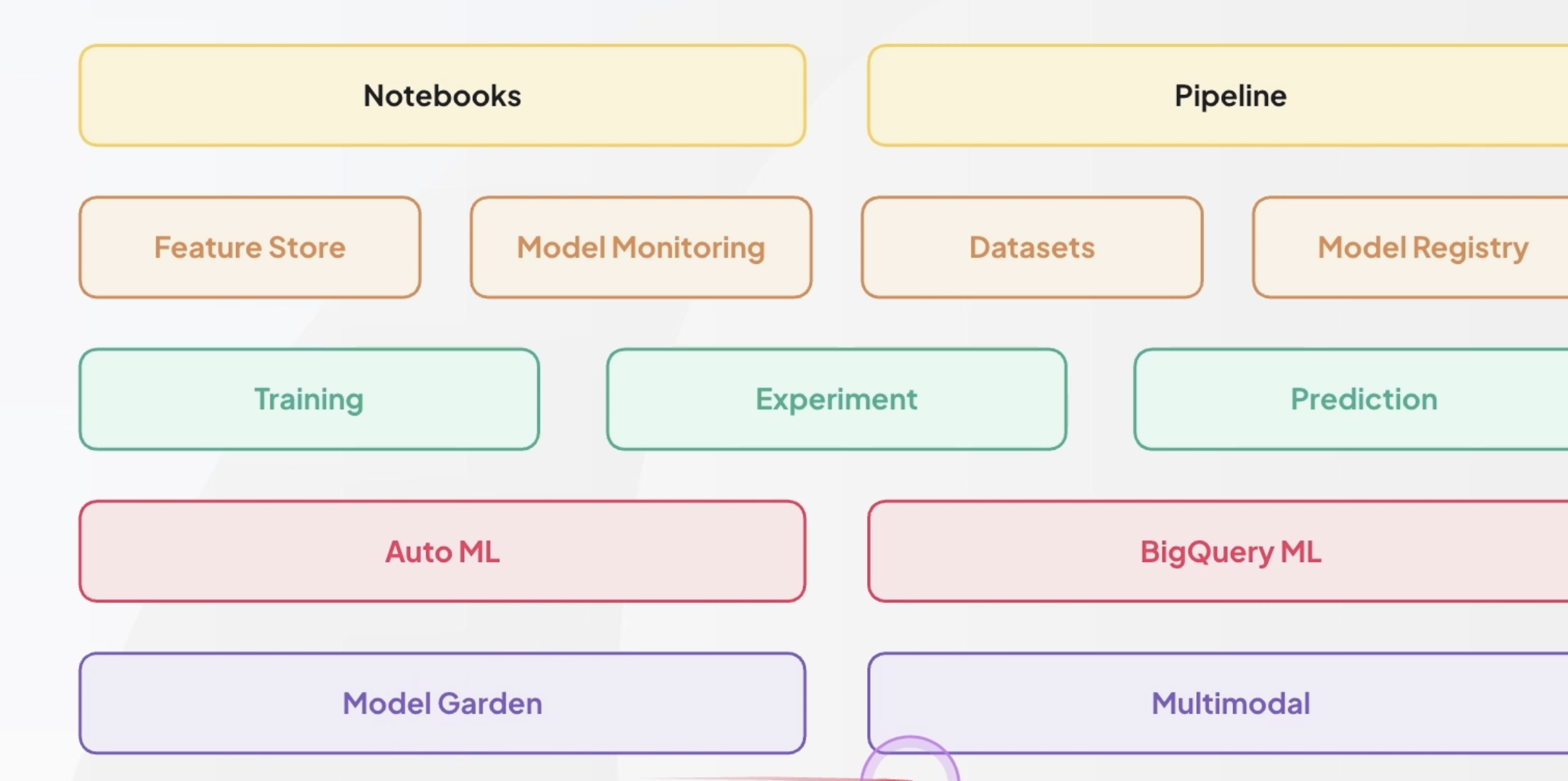

Vertex AI

- Handles the whole ML lifecycle (training, serving, evaluation, and operations) in one place

- Provided as a packaged, managed service

Vertex AI components

Vertex AI is available within GCP