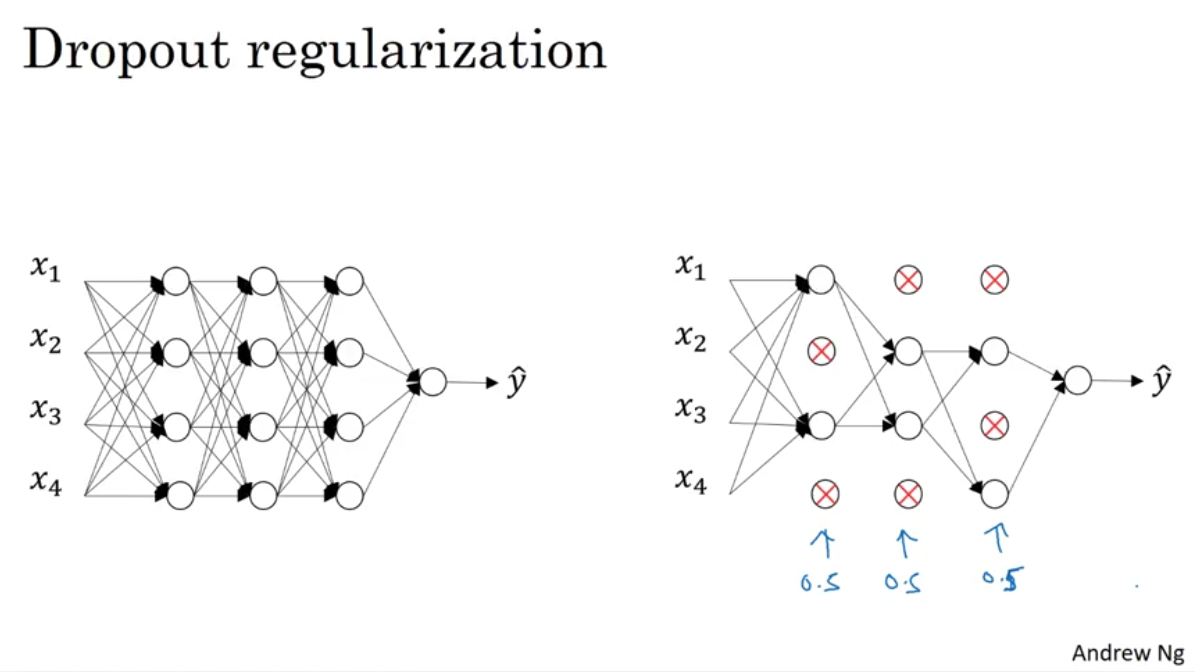

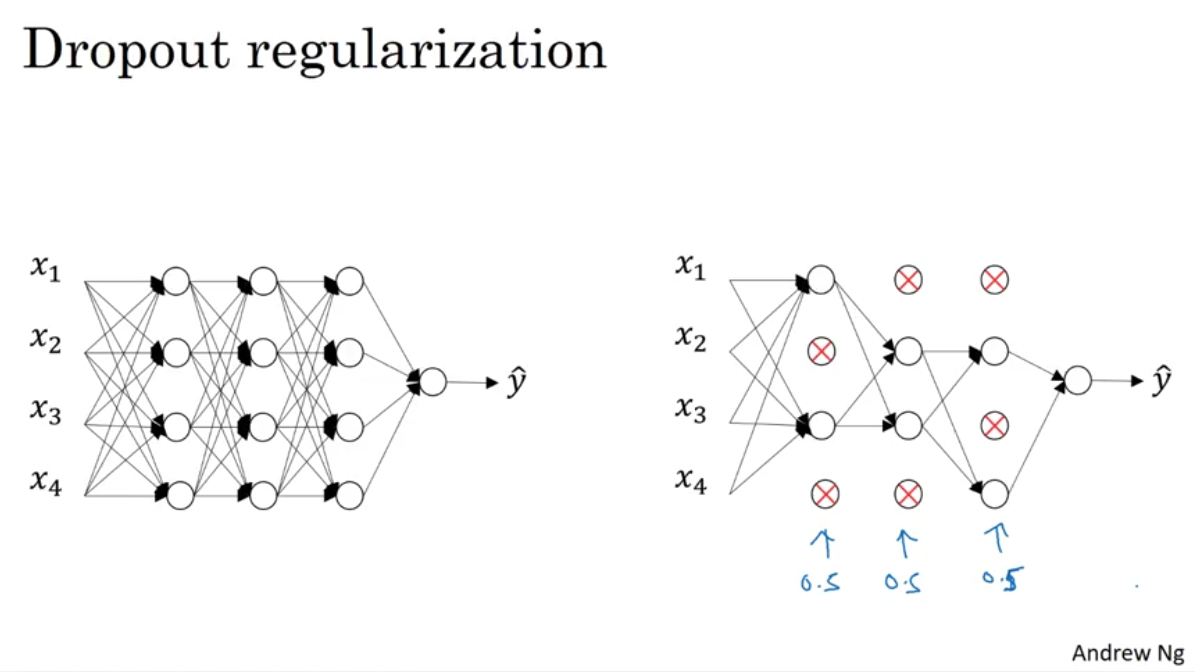

[DNN] Dropout Regularization

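- With dropout, you set a probability keep_prob of keeping each node, separately for each layer; the other nodes are dropped (zeroed) during training. The most common implementation is inverted dropout, which divides the kept activations by keep_prob so that their expected value is unchanged.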

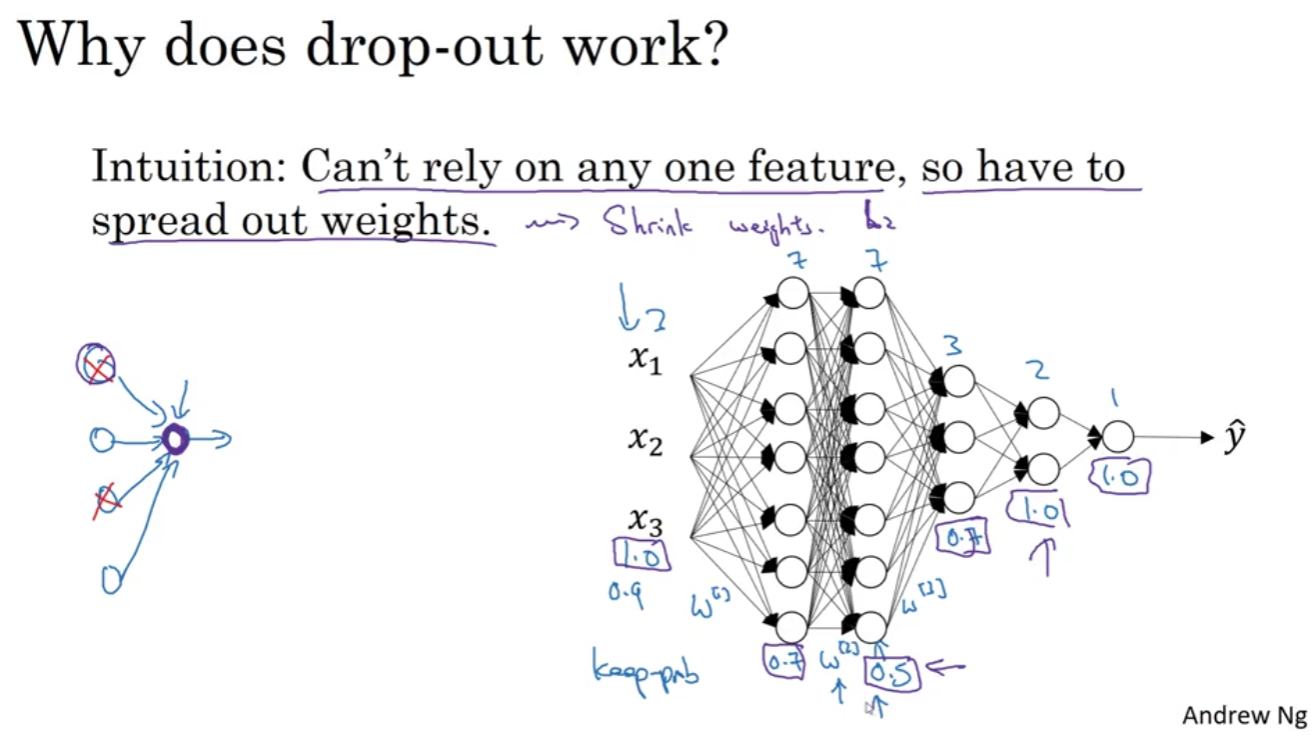

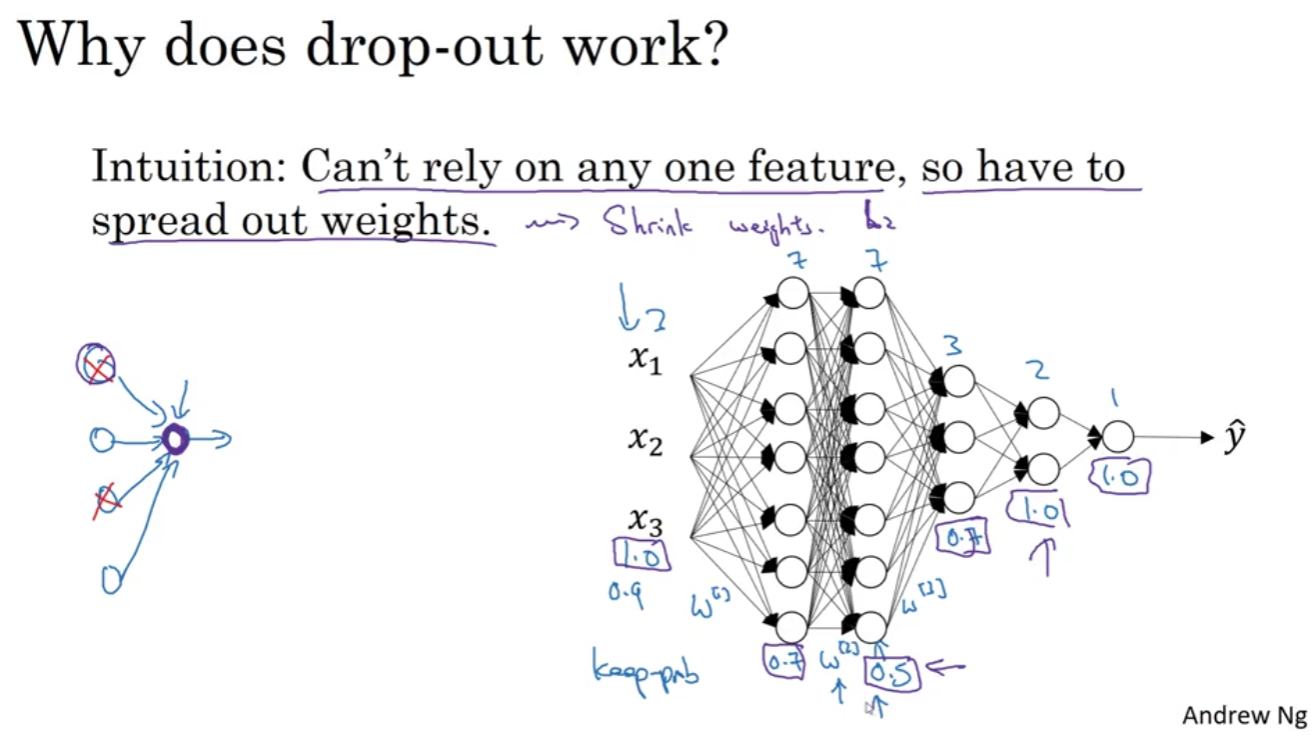
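Inverted dropout on a single layer can be sketched as below. This is a minimal, framework-free version that assumes activations are plain Python lists; the function name `inverted_dropout` and the sample layers are hypothetical, chosen only for illustration. Dropout is applied only during training. Because the kept units are already divided by keep_prob, no rescaling is needed at test time.

```python
import random

def inverted_dropout(a, keep_prob, rng=random):
    """Apply inverted dropout to one layer's activations `a` (a list of floats).

    Each unit is kept independently with probability `keep_prob`; kept units
    are divided by `keep_prob`, so the expected activation is unchanged.
    Returns the dropped-out activations and the 0/1 mask (backprop needs the
    same mask to zero the gradients of the dropped units).
    """
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    # rng.random() is in [0, 1), so keep_prob = 1.0 keeps every unit
    mask = [1 if rng.random() < keep_prob else 0 for _ in a]
    out = [ai * m / keep_prob for ai, m in zip(a, mask)]
    return out, mask

# Hypothetical activations for three layers, each with its own keep_prob.
rng = random.Random(0)
layers = [[0.2, 1.5, -0.7, 3.0], [1.0, -2.0, 0.5], [0.9, 0.1]]
keep_probs = [0.8, 0.5, 1.0]  # 1.0 means no dropout on that layer
for a, kp in zip(layers, keep_probs):
    out, mask = inverted_dropout(a, kp, rng)
    print(kp, mask, out)
```

Passing a seeded `random.Random` makes the masks reproducible, which helps when debugging. In practice the same idea is usually applied to whole activation matrices with a vectorized library.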

- Intuition: a unit can't rely on any one input feature, because that feature may be dropped, so it has to spread out its weights.

- Spreading out the weights tends to shrink their squared norm.

- Similar effect to L2 regularization.

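- A common convention is to use a lower keep_prob for layers with larger weight matrices, since they are more prone to overfitting, and a keep_prob of 1 for layers where overfitting is not a concern.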

- Downside: with dropout, the cost function J is no longer well defined, because a different random set of nodes is dropped on every iteration. So if you want to check that the cost decreases monotonically with each iteration, turn dropout off (keep_prob = 1) and check it. If it decreases well, turn dropout back on and hope that doing so has not introduced bugs.
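The link to L2 regularization can be made concrete in the simplest case. Assume a single linear unit ŷ = Σ w_j x_j with squared-error loss and inverted dropout (keep probability p) on its inputs. This setup is an illustrative assumption, not something from the notes. Averaging over all dropout masks gives (y − w·x)² + ((1 − p)/p) · Σ w_j² x_j², which is the ordinary loss plus an L2-style penalty on the weights, scaled by the input magnitudes. The sketch below checks this by enumerating every mask exactly. Both function names are hypothetical.

```python
from itertools import product

def expected_dropout_loss(w, x, y, keep_prob):
    """Exact expected squared error of a linear unit when inverted dropout
    is applied to its inputs, computed by enumerating every 0/1 mask."""
    total = 0.0
    for mask in product([0, 1], repeat=len(x)):
        # Probability of this mask: each input is kept independently
        prob = 1.0
        for m in mask:
            prob *= keep_prob if m else (1 - keep_prob)
        # Inverted dropout: kept inputs are scaled by 1 / keep_prob
        pred = sum(wj * m * xj / keep_prob for wj, m, xj in zip(w, mask, x))
        total += prob * (y - pred) ** 2
    return total

def closed_form(w, x, y, keep_prob):
    """Plain squared error plus an L2-style penalty weighted by x_j^2."""
    plain = (y - sum(wj * xj for wj, xj in zip(w, x))) ** 2
    penalty = (1 - keep_prob) / keep_prob * sum((wj * xj) ** 2 for wj, xj in zip(w, x))
    return plain + penalty

# Hypothetical weights, inputs, and target
w, x, y = [0.5, -1.0, 2.0], [1.0, 3.0, -0.5], 1.0
for p in (0.5, 0.8, 1.0):
    print(p, expected_dropout_loss(w, x, y, p), closed_form(w, x, y, p))
```

With p = 1 the penalty vanishes and only the plain loss remains. With p < 1, each training iteration sees a single random mask, so the cost computed at that step is one random sample around this expectation. That is why J need not decrease monotonically while dropout is on.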