Today's class

K8s version upgrade! (1.24.5 -> 1.24.6)

Secure access through port 6443 (kube-apiserver)

📕 Connecting to the GKE Dashboard

Connect through the proxy (kubectl proxy)!

# The token is generated automatically!

kubectl apply -f ClusterRoleBind.yaml

C:\k8s\dashboard_token>kubectl -n kubernetes-dashboard get sa

NAME SECRETS AGE

default 1 6m48s

kubernetes-dashboard 1 6m48s

Open the URL the instructor gave us and enter the token there.

C:\k8s\dashboard_token>kubectl -n kubernetes-dashboard get secret

NAME TYPE DATA AGE

default-token-fkhfl kubernetes.io/service-account-token 3 7m9s

kubernetes-dashboard-certs Opaque 0 7m8s

kubernetes-dashboard-csrf Opaque 1 7m8s

kubernetes-dashboard-key-holder Opaque 2 7m8s

kubernetes-dashboard-token-r2q4x kubernetes.io/service-account-token 3 7m9s

C:\k8s\dashboard_token>kubectl -n kubernetes-dashboard describe secret kubernetes-dashboard-token-r2q4x

Name: kubernetes-dashboard-token-r2q4x

Namespace: kubernetes-dashboard

Labels: <none>

Annotations: kubernetes.io/service-account.name: kubernetes-dashboard

kubernetes.io/service-account.uid: 37778b7d-cf5f-42ee-9fee-11b7db211890

Type: kubernetes.io/service-account-token

Data

====

namespace: 20 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6Imw5UXJ5VmVkYXNvMnZRTzZ5STBtTXpPb25FNU9BQUFicGFhNTVQZDdYNDAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1yMnE0eCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjM3Nzc4YjdkLWNmNWYtNDJlZS05ZmVlLTExYjdkYjIxMTg5MCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.V6pXYqb7fhItOGzZFaKYP-K9afDJcKqCgxQz8S5ihMbhqf_994C1kw6eElV0R9uMsPXHj5HTxGNkQDKV8O-XVjwPXfgSNF8kGi2zdO2bNjtFpPkL8NOCV2QtEsRBZADLCOIk9RqvpUjdQ5LjxvuOQD4DpvQe2iCbnB9gOCsKb7jrGmzGF8ikDK8dQ1ro5QCMWbAR4tm0Ks3jeGb9S3q7G8lkOZlU7XYxFytQbyXRRGZVBPWy11EtrPoel6FEha7qlvTxqhl87X0sVuhaduWos2L1q6VIXeFgnNhiba7t0XwlBv8KdBzID2sZQ_5Vv4N0pyacGBGsAZvx2nNFcOlaBw

ca.crt: 1509 bytes
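The token above is a JWT: three base64url-encoded segments separated by dots, where the middle segment is a JSON payload. As a minimal sketch in Node.js (the same language as runapp.js later in these notes), the hypothetical helper decodeJwtPayload below decodes that payload so you can check which service account a token belongs to (the sub claim). It only decodes; it does not verify the signature, which is the API server's job.

```javascript
// Sketch: decode (NOT verify) the payload of a JWT such as a
// Kubernetes service-account token. decodeJwtPayload is a hypothetical helper.
function decodeJwtPayload(token) {
  const parts = token.trim().split('.');
  if (parts.length !== 3) {
    throw new Error('expected header.payload.signature');
  }
  // JWT segments are base64url-encoded JSON; recent Node.js versions accept 'base64url'
  return JSON.parse(Buffer.from(parts[1], 'base64url').toString('utf8'));
}

// Usage: node decode-token.js <token>   (prints the claims, e.g. iss and sub)
if (process.argv.length > 2) {
  console.log(decodeJwtPayload(process.argv[2]));
}
```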

📗 Kubernetes Components

-> 5 binary components

Prometheus and Grafana are not set up yet -> we'll run the YAML files later.

kube-apiserver-k8s-master

etcd-k8s-master

kube-scheduler-k8s-master

kube-controller-manager-k8s-master

kubelet

kubelet runs as a systemd service: check with systemctl that kubelet.service is running.

kube-proxy

calico

Time synchronization (needed for Prometheus)

sudo apt -y install rdate

sudo rdate -s time.bora.net

date

[Check that the time is correct]

30003: Prometheus

30004: Grafana

📘 Prometheus + Grafana

Prometheus

-> runs an exporter on each node and collects the metric data

Grafana

-> a visualization tool; connect it to Prometheus to visualize the metrics.

ramu@k8s-master:~/LABs$ mkdir k8s-prometheus && cd $_

ramu@k8s-master:~/LABs$ git clone https://github.com/brayanlee/k8s-prometheus.git

# Prometheus

kubectl create namespace monitoring

kubectl create -f prometheus/prometheus-ConfigMap.yaml

kubectl create -f prometheus/prometheus-ClusterRoleBinding.yaml

kubectl create -f prometheus/prometheus-ClusterRole.yaml

kubectl create -f prometheus/prometheus-Deployment.yaml

kubectl create -f prometheus/prometheus-Service.yaml

kubectl create -f prometheus/prometheus-DaemonSet-nodeexporter.yaml

# kube-state

kubectl create -f kube-state/kube-state-ClusterRoleBinding.yaml

kubectl create -f kube-state/kube-state-ClusterRole.yaml


kubectl create -f kube-state/kube-state-ServiceAccount.yaml

kubectl create -f kube-state/kube-state-Deployment.yaml

kubectl create -f kube-state/kube-state-Service.yaml

# grafana

kubectl create -f grafana/grafana-Deployment.yaml

kubectl create -f grafana/grafana-Service.yaml

🐳 Kubernetes architecture

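- k8s object = k8s api-resource

- Pod, ReplicaSet, Deployment, DaemonSet, Job, CronJob (schedule fields: minute hour day-of-month month day-of-week) ...

- kubectl api-resources | grep -i network

- Objects are defined in YAML code or through the CLI (kubectl run... -> that is for the certification exam ... / don't take the easy route now! Write the YAML yourself)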

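Create/delete

- kubectl {create | apply | delete} -f pod.yaml

- create: creates the object (api-resource) defined in the YAML; it does not update an existing one

- apply: creates the object (api-resource) defined in the YAML, and can also update an existing one (some fields, not all)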

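RoleBinding: objects like this rarely change, so create seems to be the usual choice; to change one (its roleRef cannot be updated), delete it and create it again.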
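To make the create/apply difference concrete, here is a toy model in Node.js. It is only an illustration under simplifying assumptions: the "cluster" is an in-memory Map, and the helpers create/apply are hypothetical. Real kubectl apply performs a three-way merge against the last-applied configuration, which this sketch does not attempt. It does model one immutable field, a RoleBinding's roleRef, which is why changing one means delete and create.

```javascript
// Toy model of `kubectl create` vs `kubectl apply` (not kubectl's real logic).
// The "cluster" is an in-memory Map from "kind/name" to a stored copy of the object.
const cluster = new Map();

const keyOf = (obj) => `${obj.kind.toLowerCase()}/${obj.metadata.name}`;
const clone = (obj) => JSON.parse(JSON.stringify(obj));
const same = (a, b) => JSON.stringify(a) === JSON.stringify(b);

// create: only creates; an existing object is an error and is never updated.
function create(obj) {
  const key = keyOf(obj);
  if (cluster.has(key)) {
    throw new Error(`${key} already exists`);
  }
  cluster.set(key, clone(obj));
  return `${key} created`;
}

// apply: creates the object if it is missing, otherwise updates it.
function apply(obj) {
  const key = keyOf(obj);
  const current = cluster.get(key);
  if (!current) {
    cluster.set(key, clone(obj));
    return `${key} created`;
  }
  if (same(current, obj)) {
    return `${key} unchanged`;
  }
  // Some fields are immutable, e.g. a RoleBinding's roleRef:
  // the API server rejects such an update, so you delete and create instead.
  if (obj.kind === 'RoleBinding' && !same(current.roleRef, obj.roleRef)) {
    throw new Error(`${key}: roleRef cannot be changed`);
  }
  cluster.set(key, clone(obj));
  return `${key} configured`;
}

// Mirrors the lab: apply a pod, then apply it again with a label added.
const pod = { kind: 'Pod', metadata: { name: 'mynode-pod1' } };
console.log(apply(pod)); // pod/mynode-pod1 created
console.log(apply({ ...pod, metadata: { ...pod.metadata, labels: { run: 'nodejs' } } })); // pod/mynode-pod1 configured
```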

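Query

- kubectl {get | describe} {object type} object_name [-n namespace | default]

If no namespace is given, the default namespace is used. In real work, resources are separated by namespace.

GKE, EKS -> the master (control plane) belongs to the cloud provider, not to us.

Overall process

Connecting to etcd and backing it up (backup, snapshot)

Apparently even Kakao takes an etcd snapshot (copy) every five days or so.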

yji@k8s-master:~$ pall | grep -i etcd

⭐kube-system ⭐etcd-k8s-master 1/1 Running 2 (3h1m ago) 22h

# Connect to the etcd pod

yji@k8s-master:~$ kubectl -n kube-system exec -it etcd-k8s-master -- sh

sh-5.1#

# In here, echo * does the job of ls: there is no separate ls binary, which keeps the image lightweight

sh-5.1# echo *

bin boot dev etc home lib proc root run sbin sys tmp usr var

sh-5.1# cd /etc/kubernetes/pki/etcd/

# All the keys are here.

sh-5.1# echo *

ca.crt ca.key healthcheck-client.crt healthcheck-client.key peer.crt peer.key server.crt server.key

🤔🤔🤔🤔🤔

kubectl -n kube-system exec -it etcd-k8s-master -- sh -c "ETCDCTL_API=3 ETCDCTL_CACERT=/etc/kubernetes/pki/etcd/ca.crt ETCDCTL_CERT=/etc/kubernetes/pki/etcd/server.crt ETCDCTL_KEY=/etc/kubernetes/pki/etcd/server.key etcdctl endpoint health"

127.0.0.1:2379 is healthy: successfully committed proposal: took = 20.267606ms

kubectl -n kube-system exec -it etcd-k8s-master -- sh -c "ETCDCTL_API=3 etcdctl \
  --cacert=/etc/kubernetes/pki/etcd/ca.crt \
  --cert=/etc/kubernetes/pki/etcd/server.crt \
  --key=/etc/kubernetes/pki/etcd/server.key \
  member list"

76335a6259872c1a, started, k8s-master, https://192.168.56.100:2380, https://192.168.56.100:2379, false
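The member list line above has no column headers. Assuming etcdctl's default "simple" output, where fields are joined with ", " and multiple URLs inside one field with ",", the columns are ID, STATUS, NAME, PEER ADDRS, CLIENT ADDRS and IS LEARNER (adding -w table prints them with headers). The hypothetical helper below splits such a line into named fields as a small Node.js sketch.

```javascript
// Sketch: parse one line of `etcdctl member list` (default simple output).
// Assumed column order: ID, STATUS, NAME, PEER ADDRS, CLIENT ADDRS, IS LEARNER.
function parseMemberLine(line) {
  const fields = line.trim().split(', ');
  if (fields.length !== 6) {
    throw new Error(`expected 6 fields, got ${fields.length}`);
  }
  const [id, status, name, peerAddrs, clientAddrs, isLearner] = fields;
  return {
    id,
    status,
    name,
    peerUrls: peerAddrs.split(','),     // port 2380: etcd member-to-member traffic
    clientUrls: clientAddrs.split(','), // port 2379: clients such as kube-apiserver
    isLearner: isLearner === 'true',
  };
}

console.log(parseMemberLine(
  '76335a6259872c1a, started, k8s-master, https://192.168.56.100:2380, https://192.168.56.100:2379, false'
));
```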

## Save this data as a snapshot (snapshot => a copy)

kubectl -n kube-system exec -it etcd-k8s-master -- sh -c "ETCDCTL_API=3 etcdctl \
  --cacert=/etc/kubernetes/pki/etcd/ca.crt \
  --cert=/etc/kubernetes/pki/etcd/server.crt \
  --key=/etc/kubernetes/pki/etcd/server.key \
  snapshot save /var/lib/etcd/snapshot.db"

{"level":"info","ts":"2022-09-30T03:27:15.306Z","caller":"snapshot/v3_snapshot.go:65","msg":"created temporary db file","path":"/var/lib/etcd/snapshot.db.part"}

{"level":"info","ts":"2022-09-30T03:27:15.334Z","logger":"client","caller":"v3/maintenance.go:211","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":"2022-09-30T03:27:15.334Z","caller":"snapshot/v3_snapshot.go:73","msg":"fetching snapshot","endpoint":"127.0.0.1:2379"}

{"level":"info","ts":"2022-09-30T03:27:15.888Z","logger":"client","caller":"v3/maintenance.go:219","msg":"completed snapshot read; closing"}

{"level":"info","ts":"2022-09-30T03:27:15.968Z","caller":"snapshot/v3_snapshot.go:88","msg":"fetched snapshot","endpoint":"127.0.0.1:2379","size":"7.6 MB","took":"now"}

{"level":"info","ts":"2022-09-30T03:27:15.969Z","caller":"snapshot/v3_snapshot.go:97","msg":"saved","path":"/var/lib/etcd/snapshot.db"}

Snapshot saved at /var/lib/etcd/snapshot.db

## Verify

yji@k8s-master:~$ sudo ls -l /var/lib/etcd

drwx------ 4 root root 29 Sep 30 09:10 member

-rw------- 1 root root 7634976 Sep 30 12:27 snapshot.db

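📘 Backing up etcd regularly: how do we schedule backups?

Backups should be scheduled automatically. How? With a Kubernetes CronJob.

The backup must not keep overwriting the same file name, snapshot.db. Use an automatic backup technique!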
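A minimal Node.js sketch of the two pieces involved: reading a CronJob schedule string, whose five fields are minute, hour, day of month, month and day of week, and building a date-stamped file name in the same %m-%d-%y format as the date command used below, so each run writes a new file instead of overwriting snapshot.db. describeCron, backupName and the example schedule are hypothetical; this is not a CronJob manifest.

```javascript
// The five standard cron fields, in the order a CronJob `schedule` uses them.
const FIELDS = ['minute', 'hour', 'day of month', 'month', 'day of week'];

// Label each field of a cron expression (hypothetical helper, no validation of values).
function describeCron(schedule) {
  const parts = schedule.trim().split(/\s+/);
  if (parts.length !== FIELDS.length) {
    throw new Error(`expected ${FIELDS.length} fields, got ${parts.length}`);
  }
  return Object.fromEntries(FIELDS.map((name, i) => [name, parts[i]]));
}

// Same naming as `snapshot.db~$(date +%m-%d-%y)`: month-day-two-digit-year, local time.
function backupName(date, base = 'snapshot.db') {
  const pad = (n) => String(n).padStart(2, '0');
  return `${base}~${pad(date.getMonth() + 1)}-${pad(date.getDate())}-${pad(date.getFullYear() % 100)}`;
}

// Hypothetical schedule: 03:00 on days 1, 6, 11, ..., 31 of every month
console.log(describeCron('0 3 */5 * *'));
console.log(backupName(new Date(2022, 8, 30))); // snapshot.db~09-30-22
```

One file per day keeps older snapshots around; deleting old ones would be a separate step.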

yji@k8s-master:~$ mkdir backup

yji@k8s-master:~$ sudo ls -lh /var/lib/etcd

total 7.3M

drwx------ 4 root root 29 Sep 30 09:10 member

-rw------- 1 root root 7.3M Sep 30 12:27 snapshot.db

yji@k8s-master:~$ sudo cp /var/lib/etcd/snapshot.db $HOME/backup/snapshot.db~$(date +%m-%d-%y)

yji@k8s-master:~$ cd backup/

yji@k8s-master:~/backup$ ls -lh

total 7.3M

-rw------- 1 root root 7.3M Sep 30 12:31 snapshot.db~09-30-22

📘 Network

Kubernetes master

- kubeadm init --pod-network-cidr=10.96.0.0/12 --apiserver-advertise-address=192.168.56.100

... kubeadm join ~ (using the key..)  # a new join key can be issued, e.g. with kubeadm token create --print-join-command

-> add-on: BGP on each node (acting as the gateway), vRouter (virtual router) (L3)

- calico: network plugin

- It is made up of several interfaces (tools) and modules.

- calico.yaml is about 4,700 lines long; those 4,700 lines define the interfaces and modules.

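Remember: we gave the pod network a range of about 1 million addresses: 10.96.0.0/12. (Caveat: 10.96.0.0/12 is also kubeadm's default Service CIDR, which is why the Service ClusterIPs below, such as 10.96.0.1 and 10.102.226.52, come from the same range as the pod IPs; normally the pod network gets a range that does not overlap it.)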
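The "about 1 million" comes from the prefix length: a /12 leaves 32 - 12 = 20 host bits, so there are 2^20 = 1,048,576 addresses. The small hypothetical Node.js helper below computes this for any prefix, including the /26 blocks calico hands out.

```javascript
// Number of IPv4 addresses in a CIDR block: 2^(32 - prefix length).
function cidrSize(cidr) {
  const prefix = Number(cidr.split('/')[1]);
  if (!Number.isInteger(prefix) || prefix < 0 || prefix > 32) {
    throw new Error(`bad prefix in ${cidr}`);
  }
  return 2 ** (32 - prefix);
}

console.log(cidrSize('10.96.0.0/12'));     // 1048576 (shown as 1.0486e+06 by calicoctl)
console.log(cidrSize('10.108.82.192/26')); // 64 (one calico IPAM block)
console.log(cidrSize('10.96.0.0/12') / cidrSize('10.108.82.192/26')); // 16384 blocks fit in the pool
```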

📘 Looking inside calico

🤔 calico -> daemon set... 🤔

calico = network pods created by a DaemonSet!

- What calico does

- It holds all the routing information for the pods that are created and accessed!

- It performs networking using that routing information.

- So where does the routing information come from?

- It is stored in etcd.

- It does not read etcd every time; there are modules that fetch the information from etcd.

- DaemonSet: deploys one pod to every node!

- Add a node and the DaemonSet creates one more pod.

calico-node-585qf

calico-node-nw4z6

calico-node-qwvqz
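The DaemonSet goal "one pod per node, and a new pod when a node is added" can be modelled as a small reconcile step. This is a toy Node.js sketch under simplifying assumptions: it ignores taints, tolerations and node selectors, reconcileDaemonSet is a hypothetical name, k8s-node3 is a hypothetical new node, and which calico-node pod runs on k8s-node1/k8s-node2 is assumed (only calico-node-qwvqz is shown on k8s-master below).

```javascript
// Toy model of the DaemonSet goal "exactly one pod per node".
// nodes: node names; pods: [{ name, node }] for the DaemonSet's current pods.
function reconcileDaemonSet(nodes, pods) {
  const podsByNode = new Map();
  for (const pod of pods) {
    if (!podsByNode.has(pod.node)) podsByNode.set(pod.node, []);
    podsByNode.get(pod.node).push(pod.name);
  }
  // Nodes without a pod need one (e.g. a node that was just added)
  const toCreate = nodes.filter((node) => !podsByNode.has(node));
  const toDelete = [];
  for (const [node, names] of podsByNode) {
    if (!nodes.includes(node)) {
      toDelete.push(...names);          // the node no longer exists
    } else {
      toDelete.push(...names.slice(1)); // keep only one pod per node
    }
  }
  return { toCreate, toDelete };
}

// Illustrative: three calico-node pods on three nodes, then k8s-node3 is added.
const nodes = ['k8s-master', 'k8s-node1', 'k8s-node2', 'k8s-node3'];
const current = [
  { name: 'calico-node-qwvqz', node: 'k8s-master' },
  { name: 'calico-node-585qf', node: 'k8s-node1' }, // assignment assumed
  { name: 'calico-node-nw4z6', node: 'k8s-node2' }, // assignment assumed
];
console.log(reconcileDaemonSet(nodes, current)); // { toCreate: [ 'k8s-node3' ], toDelete: [] }
```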

# calico -> one pod on each node

yji@k8s-master:~/backup$ pall | grep -i calico

kube-system calico-kube-controllers-6799f5f4b4-vdgcb 1/1 Running 1 (5h9m ago) 23h

kube-system calico-node-585qf 1/1 Running 1 (5h9m ago) 23h

kube-system calico-node-nw4z6 1/1 Running 1 (5h9m ago) 23h

kube-system calico-node-qwvqz 1/1 Running 2 (5h1m ago) 23h

# For details, use describe.

yji@k8s-master:~/backup$ kubectl describe po calico-node-qwvqz -n kube-system | grep 192

Node: k8s-master/192.168.56.100

IP: 192.168.56.100

IP: 192.168.56.100

calico/node is not ready: BIRD is not ready: BGP not established with 192.168.56.101,192.168.56.102

calico/node is not ready: BIRD is not ready: BGP not established with 192.168.56.101,192.168.56.102

calico/node is not ready: BIRD is not ready: BGP not established with 192.168.56.101,192.168.56.102

calico/node is not ready: BIRD is not ready: BGP not established with 192.168.56.101,192.168.56.102

### Using calicoctl

curl -L https://github.com/projectcalico/calico/releases/download/v3.24.1/calicoctl-linux-amd64 -o calicoctl

sudo mv calicoctl /usr/bin/calicoctl

yji@k8s-master:~/LABs$ sudo chmod +x /usr/bin/calicoctl

yji@k8s-master:~/LABs$ calicoctl ipam show

+----------+--------------+------------+------------+-------------------+

| GROUPING | CIDR | IPS TOTAL | IPS IN USE | IPS FREE |

+----------+--------------+------------+------------+-------------------+

| IP Pool | 10.96.0.0/12 | 1.0486e+06 | ⭐ 13 (0%) | 1.0486e+06 (100%) |

+----------+--------------+------------+------------+-------------------+

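⭐ 13 = 13 of the roughly 1 million addresses are in use.

# What is this? ⭐ block = per node. It shows the IPs allocated to each node: calico manages each node's IPs in blocks (here /26 = 64 addresses each)! ⭐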

yji@k8s-master:~/LABs$ calicoctl ipam show --show-blocks

+----------+------------------+------------+------------+-------------------+

| GROUPING | CIDR | IPS TOTAL | IPS IN USE | IPS FREE |

+----------+------------------+------------+------------+-------------------+

| IP Pool | 10.96.0.0/12 | 1.0486e+06 | 13 (0%) | 1.0486e+06 (100%) |

| Block | 10.108.82.192/26 | 64 | 1 (2%) | 63 (98%) |

| Block | 10.109.131.0/26 | 64 | 7 (11%) | 57 (89%) |

| Block | 10.111.156.64/26 | 64 | 5 (8%) | 59 (92%) |

+----------+------------------+------------+------------+-------------------+

yji@k8s-master:~/LABs/mynode$ kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mynode-pod 1/1 Running 0 50m 10.111.156.76 k8s-node1 <none> <none>

yji@k8s-master:~/LABs/mynode$ calicoctl get workloadendpoints

WORKLOAD NODE NETWORKS INTERFACE

mynode-pod k8s-node1 10.111.156.76/32 calif3d7c5625ac

mynode-pod2 k8s-node1 10.111.156.77/32 cali16d3a4711da

mynode-pode3 k8s-node2 10.109.131.14/32 calif099a764438
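The workload endpoints line up with the IPAM blocks listed earlier: the pods on k8s-node1 (10.111.156.76, .77) fall in 10.111.156.64/26 and the pod on k8s-node2 (10.109.131.14) in 10.109.131.0/26. A small Node.js sketch of that containment check follows; ipToInt, inCidr and blockFor are hypothetical helpers, not calicoctl functionality.

```javascript
// Convert a dotted-quad IPv4 address to an unsigned 32-bit integer.
function ipToInt(ip) {
  const octets = ip.split('.').map(Number);
  if (octets.length !== 4 || octets.some((o) => !Number.isInteger(o) || o < 0 || o > 255)) {
    throw new Error(`bad IPv4 address: ${ip}`);
  }
  // ">>> 0" keeps every intermediate value unsigned
  return octets.reduce((acc, o) => ((acc << 8) | o) >>> 0, 0);
}

// True if ip lies inside cidr (e.g. "10.111.156.64/26").
function inCidr(ip, cidr) {
  const [base, bits] = cidr.split('/');
  const prefix = Number(bits);
  if (!Number.isInteger(prefix) || prefix < 0 || prefix > 32) {
    throw new Error(`bad prefix in ${cidr}`);
  }
  // JS shift counts are taken mod 32, so a /0 mask is special-cased
  const mask = prefix === 0 ? 0 : (~0 << (32 - prefix)) >>> 0;
  return ((ipToInt(ip) & mask) >>> 0) === ((ipToInt(base) & mask) >>> 0);
}

// Return the first block that contains ip, or null.
function blockFor(ip, blocks) {
  return blocks.find((block) => inCidr(ip, block)) ?? null;
}

const blocks = ['10.108.82.192/26', '10.109.131.0/26', '10.111.156.64/26'];
for (const ip of ['10.111.156.76', '10.111.156.77', '10.109.131.14']) {
  console.log(`${ip} -> ${blockFor(ip, blocks)}`);
}
```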

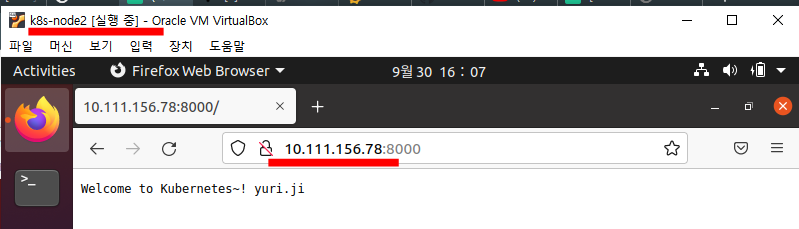

yji@k8s-master:~/LABs/mynode$ curl 10.111.156.76:8000

Welcome to Kubernetes~! by kevin.lee

Exercise: replace kevin.lee with your own name and publish it.

### 1. Write runapp.js

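var http = require('http');

// Request handler: set the status line first, then send the body.
// (writeHead() must come before end(), because end() sends the headers.)
var content = function(req, resp) {
  resp.writeHead(200);
  resp.end("Welcome to Kubernetes~! yuri.ji" + "\n");
}

var web = http.createServer(content);

// Listen on port 8000 (the containerPort in the pod YAML)
web.listen(8000);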

### 2. Write the Dockerfile

FROM node:slim

EXPOSE 8000

COPY runapp.js .

CMD node runapp.js

### 3. Write the YAML file

apiVersion: v1
kind: Pod
metadata:
  name: mynode-pod1
spec:
  containers:
  - name: nodejs-container
    image: ur2e/mynode:1.0
    ports:
    - containerPort: 8000

### 4. Build the image and test it with docker run

sudo docker build -t mynode:1.0 .

sudo docker run -it --name mynode -p 10000:8000 mynode:1.0

Opening 192.168.56.100:10000 in a browser shows the page.

### 5. Push the image to Docker Hub

sudo docker tag mynode:1.0 ur2e/mynode:1.0

sudo docker push ur2e/mynode:1.0

### 6. Create the pod

yji@k8s-master:~/LABs/mynode$ kubectl apply -f yurinode.yaml

pod/mynode-pod1 created

yji@k8s-master:~/LABs/mynode$ kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mynode-pod1 0/1 ContainerCreating 0 17s <none> ⭐ k8s-node1 <none> <none>

yji@k8s-master:~/LABs/mynode$ calicoctl get workloadendpoints

WORKLOAD NODE NETWORKS INTERFACE

mynode-pod1 ⭐ k8s-node1 ⭐10.111.156.78/32 cali852df8396ae

yji@k8s-master:~/LABs/mynode$ curl 10.111.156.78:10000

curl: (7) Failed to connect to 10.111.156.78 port 10000: Connection refused

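yji@k8s-master:~/LABs/mynode$ curl 10.111.156.78:8000

Welcome to Kubernetes~! yuri.ji

⭐ Use the container port (8000), not 10000, which was only the host port mapped by docker run. The pod on worker node 1 is reachable from both the master node and worker node 2.

# Add a label so the service can select the pod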

yji@k8s-master:~/LABs/mynode$ cat yurinode.yaml

apiVersion: v1
kind: Pod
metadata:
  name: mynode-pod1
  labels:
    run: nodejs
spec:
  containers:
  - name: nodejs-container
    image: ur2e/mynode:1.0
    ports:
    - containerPort: 8000

# Apply it again (the existing pod is updated)

yji@k8s-master:~/LABs/mynode$ kubectl apply -f yurinode.yaml

pod/mynode-pod1 configured

# Check

yji@k8s-master:~/LABs/mynode$ kubectl get po -o wide --show-labels

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES LABELS

mynode-pod1 1/1 Running 0 28m 10.111.156.78 k8s-node1 <none> <none> run=nodejs
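A Service finds its pods purely by labels: every key/value pair in its selector must be present on the pod, and extra pod labels do not matter. That is why the run: nodejs label had to be added before mynode-svc could route to mynode-pod1. A minimal Node.js sketch of this equality-based matching follows; matchesSelector and selectPods are hypothetical names, and unlabeled-pod is a made-up example.

```javascript
// Equality-based selection as used by a Service's `selector`:
// every key/value in the selector must appear in the pod's labels.
function matchesSelector(labels, selector) {
  return Object.entries(selector).every(([key, value]) => labels?.[key] === value);
}

// Names of the pods a selector would pick.
function selectPods(pods, selector) {
  return pods
    .filter((pod) => matchesSelector(pod.labels ?? {}, selector))
    .map((pod) => pod.name);
}

const pods = [
  { name: 'mynode-pod1', labels: { run: 'nodejs' } },
  { name: 'nginx-pod', labels: { run: 'nginx' } },
  { name: 'unlabeled-pod' }, // hypothetical pod with no labels
];
console.log(selectPods(pods, { run: 'nodejs' })); // [ 'mynode-pod1' ]
```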

## Create a service (⭐ why? so the pod can be reached from outside ⭐)

yji@k8s-master:~/LABs/mynode$ kubectl apply -f mynode-svc.yaml

service/mynode-svc created

## Check the service's EXTERNAL-IP

yji@k8s-master:~/LABs/mynode$ kubectl get po,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/mynode-pod1 1/1 Running 0 35m 10.111.156.78 k8s-node1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 26h <none>

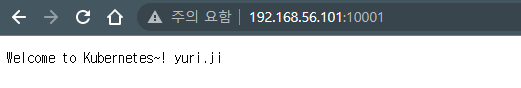

service/mynode-svc ClusterIP 10.102.226.52 192.168.56.101 10001/TCP 4m50s run=nodejs

# 윈도우에서도 접근된다 !

yji@k8s-master:~/LABs/mynode$ curl 192.168.56.101:10001

Welcome to Kubernetes~! yuri.ji

# This one does not work from Windows! -> It does work from Linux (the host OS):

yji@k8s-master:~/LABs/mynode$ curl 10.102.226.52:10001

Welcome to Kubernetes~! yuri.ji

GKE

apiVersion: v1
kind: Pod
metadata:
  name: mynode-pod
  labels:
    run: nodejs
spec:
  containers:
  - name: nodejs-container
    image: ur2e/mynode:1.0
    ports:
    - containerPort: 8000
---
apiVersion: v1
kind: Service
metadata:
  name: mynode-svc
spec:
  selector:
    run: nodejs
  ports:
  - port: 10001
    targetPort: 8000
  type: LoadBalancer

C:\k8s\LABs>kubectl get po,svc -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/mynode-pod 1/1 Running 0 54s 10.12.1.4 gke-k8s-cluster-k8s-node-pool-3e9e423a-gfwg <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.16.0.1 <none> 443/TCP 23h <none>

service/mynode-svc LoadBalancer 10.16.11.137 34.64.136.182 10001:32279/TCP 53s run=nodejs

C:\k8s\LABs>curl 34.64.136.182:10001

Welcome to Kubernetes~! yuri.ji

C:\k8s\LABs>curl 10.16.11.137:10001

curl: (28) Failed to connect to 10.16.11.137 port 10001 after 21008 ms: Timed out

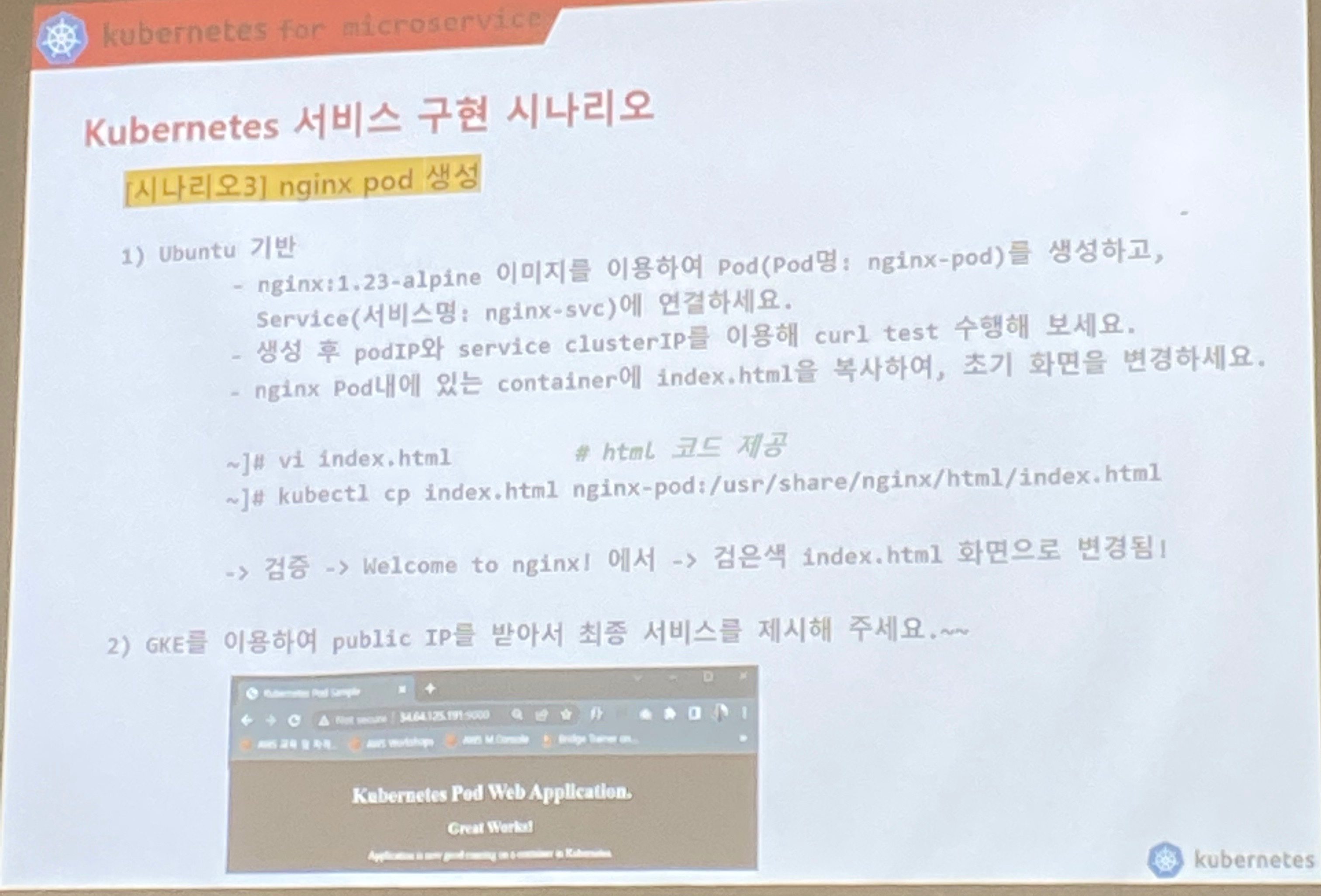

📘 Assignment

## 1. Write an HTML file

## 2. Write a Dockerfile for the nginx container

FROM nginx:1.23-alpine

COPY . /usr/share/nginx/html

## 3. docker build

## 4. image push (docker push)

## 5. Write a YAML file to run it as a pod & apply it

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    run: nginx
spec:
  containers:
  - name: myweb04
    image: ur2e/myweb:0.4
    ports:
    - containerPort: 80

## 6. Check which node the pod is running on with kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pod 1/1 Running 0 6m7s 10.111.156.79 ⭐k8s-node1⭐ <none> <none>

## 7. Write a YAML file for the service & create it

apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  selector:
    run: nginx
  ports:
  - port: 81
    targetPort: 80
  externalIPs:
  - 192.168.56.101  # ⭐

⭐ Enter the IP of the node the pod is running on.

## 8. Check the service's EXTERNAL-IP & port

kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

nginx-svc ClusterIP 10.101.230.108 ⭐192.168.56.101 81/TCP 6m34s run=nginx

## 9. Open it in a browser to verify

192.168.56.101:81

## 10. Modify the YAML file for Google GKE

apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    run: nginx
spec:
  containers:
  - name: myweb04
    image: ur2e/myweb:0.4
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  selector:
    run: nginx
  ports:
  - port: 81
    targetPort: 80
  type: LoadBalancer

## 11. Connect using the EXTERNAL-IP shown by kubectl get svc -o wide

Scratch notes


sa: service account

-> It has no permissions at first!

-> The role bound to it here is cluster-admin, which has every permission (*).

kubectl get clusterrole cluster-admin

NAME CREATED AT

cluster-admin 2022-09-29T08:09:38Z

권한 어떻게 봐?

$ kubectl describe clusterrole cluster-admin

Name: cluster-admin

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate: true

PolicyRule:

  Resources  Non-Resource URLs  Resource Names  Verbs
  ---------  -----------------  --------------  -----
  *.*        []                 []              [*]
             [*]                []              [*]

At work you will not be working as root!

Always work as a regular user.
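The *.* / [*] row is why cluster-admin can do anything. A simplified Node.js sketch of how an RBAC rule matches a request (apiGroups, resources and verbs must each contain the requested value or *, and any single matching rule grants access) shows the difference between cluster-admin and a narrow role. The podReader role is hypothetical, and real RBAC also considers resourceNames, subresources and nonResourceURLs, which this sketch ignores.

```javascript
// Simplified RBAC matching: a rule allows a request when its apiGroups,
// resources and verbs each contain the requested value or "*".
function ruleAllows(rule, req) {
  const has = (list, value) => list.includes('*') || list.includes(value);
  return has(rule.apiGroups, req.apiGroup) &&
         has(rule.resources, req.resource) &&
         has(rule.verbs, req.verb);
}

// RBAC only adds permissions: one matching rule is enough.
function allowed(rules, req) {
  return rules.some((rule) => ruleAllows(rule, req));
}

// cluster-admin's resource rule (the *.* / [*] row above)
const clusterAdmin = [{ apiGroups: ['*'], resources: ['*'], verbs: ['*'] }];
// Hypothetical read-only role for pods in the core ("") API group
const podReader = [{ apiGroups: [''], resources: ['pods'], verbs: ['get', 'list', 'watch'] }];

const deletePod = { apiGroup: '', resource: 'pods', verb: 'delete' };
console.log(allowed(clusterAdmin, deletePod)); // true
console.log(allowed(podReader, deletePod));    // false
```

Giving day-to-day users a narrow role like podReader, instead of cluster-admin, is the RBAC version of not working as root.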