Principal Component Analysis

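- PCA: a method for finding the components that best describe how the data points in a dataset differ from one another. It is used in many areas, such as statistical data analysis (finding principal components), data compression (dimensionality reduction), and noise removal.

- Principal component analysis is widely used for dimensionality reduction and feature extraction.

- PCA finds a new set of mutually orthogonal basis vectors (axes) that preserve as much of the data's variance as possible. It then maps samples from the high-dimensional space into a lower-dimensional space whose coordinates are linearly uncorrelated.

- Feature extraction builds new variables by combining the existing ones. Do not confuse it with feature selection, which keeps a subset of the original variables.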
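Here is a minimal NumPy sketch of the definition above, using an eigendecomposition of the covariance matrix. It is only an illustration. scikit-learn's default solvers use an SVD instead, and the signs of the axes may differ from `pca.components_`, although they span the same directions. The function name `pca_eig` is my own.

```python
import numpy as np

def pca_eig(X, n_components):
    """Minimal PCA sketch: eigendecomposition of the sample covariance matrix."""
    mean = X.mean(axis=0)
    Xc = X - mean                               # center each feature at 0
    cov = np.cov(Xc, rowvar=False)              # (n_features, n_features), divides by n - 1
    eigvals, eigvecs = np.linalg.eigh(cov)      # eigh: symmetric matrices, ascending order
    order = np.argsort(eigvals)[::-1]           # largest variance first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    components = eigvecs[:, :n_components].T    # each row is one principal axis
    scores = Xc @ components.T                  # coordinates of the samples on the new axes
    return scores, components, eigvals[:n_components], mean

# Same toy data as below: 200 correlated 2-D points
rng = np.random.RandomState(13)
X = np.dot(rng.rand(2, 2), rng.randn(2, 200)).T
scores, components, variances, mean = pca_eig(X, n_components=2)

print(np.round(components @ components.T, 6))    # identity matrix: the axes are orthonormal
print(np.round(np.cov(scores, rowvar=False), 6))  # diagonal matrix: new coordinates are uncorrelated
```

The diagonal of the last matrix holds the variance along each axis. This corresponds to what `pca.explained_variance_` reports.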

# Generate simple data

import numpy as np

import seaborn as sns

sns.set_style('whitegrid')

rng = np.random.RandomState(13) # numpy.random.RandomState is a class; this creates a seeded random number generator

X = np.dot(rng.rand(2, 2), rng.randn(2, 200)).T

X.shape

rng.rand(2, 2) # rand: uniform random numbers between 0 and 1

rng.randn(2, 200) # randn: samples from the standard normal distribution (mean 0, standard deviation 1)

import matplotlib.pyplot as plt

plt.scatter(X[:,0], X[:, 1])

plt.axis('equal')

# fit

from sklearn.decomposition import PCA

pca = PCA(n_components = 2, random_state = 13)

# n_components=2: represent the data with 2 principal components

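pca.fit(X)

# Component vectors and their variances

pca.components_ # 2 rows -> 2 principal component vectors

pca.explained_variance_ # variance captured along each vector

# Prepare to draw the principal component vectors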

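def draw_vector(v0, v1, ax=None):

    ax = ax or plt.gca()

    # If ax is None (no axes given), use the current axes from plt.gca(); otherwise use ax

    arrowprops = dict(arrowstyle='->', # arrow style

                      linewidth=2, color='black', shrinkA=0, shrinkB=0)

    ax.annotate('', v1, v0, arrowprops=arrowprops) # arrow from v0 (tail) to v1 (head)

# Draw

plt.scatter(X[:, 0], X[:, 1], alpha=0.4) # alpha: transparency

for length, vector in zip(pca.explained_variance_, pca.components_):

    v = vector * 3 * np.sqrt(length) # sqrt(variance) = standard deviation along that axis; 3 is an arbitrary factor to make the arrows a visible size

    draw_vector(pca.mean_, pca.mean_ + v)

plt.axis('equal')

plt.show()

pca.mean_ # per-feature mean of the data: the center the component vectors are drawn from

- Once the principal components are found, the data can also be re-expressed along the new principal axes.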
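Here is a minimal NumPy sketch of what re-expressing the data on the principal axes means, assuming all components are kept. Projecting the centered data onto the principal axes is just a rotation (possibly with a reflection). It can therefore be undone exactly, and distances do not change. The axes come from an SVD of the centered data, and `s**2 / (n - 1)` follows the same definition as `explained_variance_`.

```python
import numpy as np

rng = np.random.RandomState(13)
X = np.dot(rng.rand(2, 2), rng.randn(2, 200)).T   # same toy data as above

mean = X.mean(axis=0)
_, s, Vt = np.linalg.svd(X - mean, full_matrices=False)   # rows of Vt: principal axes

Z = (X - mean) @ Vt.T          # coordinates on the principal axes (all axes kept)
X_back = Z @ Vt + mean         # undo the change of basis

print(np.allclose(X_back, X))                                    # True: nothing is lost
print(np.allclose(np.linalg.norm(Z, axis=1),
                  np.linalg.norm(X - mean, axis=1)))              # True: lengths are preserved
print(s ** 2 / (len(X) - 1))   # variance along each axis, the quantity stored in explained_variance_
```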

# Set n_components = 1

pca = PCA(n_components=1, random_state=13)

pca.fit(X)

X_pca = pca.transform(X)

X_pca

print(pca.components_)

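print(pca.explained_variance_)

pca.mean_

pca.explained_variance_ratio_ # a single component captures about 93% of the total variance

# The result looks like a fitted line, but unlike linear regression, PCA minimizes perpendicular (not vertical) distances to the line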

X_new = pca.inverse_transform(X_pca) # map back to the original 2-D space; the points now lie on the principal axis
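With `n_components=1`, `transform` keeps only the coordinate along the first axis, and `inverse_transform` maps it back as `mean + coordinate * axis`. The NumPy sketch below reimplements those two steps on the same toy data (my own version, not scikit-learn's code). It shows that all reconstructed points lie on one line. It also shows that the total squared reconstruction error equals what the discarded second axis carried.

```python
import numpy as np

rng = np.random.RandomState(13)
X = np.dot(rng.rand(2, 2), rng.randn(2, 200)).T   # same toy data as above

mean = X.mean(axis=0)
_, s, Vt = np.linalg.svd(X - mean, full_matrices=False)
w = Vt[:1]                          # keep only the first principal axis, shape (1, 2)

X_pca = (X - mean) @ w.T            # like pca.transform(X): shape (200, 1)
X_new = X_pca @ w + mean            # like pca.inverse_transform(X_pca): shape (200, 2)

# Every reconstructed point is mean + t * w, so all of them lie on one line
offset = X_new - mean
cross = offset[:, 0] * w[0, 1] - offset[:, 1] * w[0, 0]
print(np.allclose(cross, 0))        # True

sse = np.sum((X - X_new) ** 2)      # total squared reconstruction error
print(np.isclose(sse, s[1] ** 2))   # True: exactly what the discarded second axis carried
print(s[0] ** 2 / np.sum(s ** 2))   # fraction of variance kept, like explained_variance_ratio_[0]
```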

plt.scatter(X[:, 0], X[:, 1], alpha=0.3)

plt.scatter(X_new[:,0], X_new[:, 1], alpha=0.9)

plt.axis('equal')

plt.show()

Principal Component Analysis - iris

import pandas as pd

from sklearn.datasets import load_iris

iris = load_iris()

iris_pd = pd.DataFrame(iris.data, columns=iris.feature_names)

iris_pd['species'] = iris.target

iris_pd.head()

# Four features are hard to inspect all at once, so plot them pairwise

sns.pairplot(iris_pd, hue='species', height=3,

x_vars=['sepal length (cm)', 'sepal width (cm)'],

y_vars=['petal length (cm)', 'petal width (cm)'])

# Apply a scaler

from sklearn.preprocessing import StandardScaler

iris_ss = StandardScaler().fit_transform(iris.data)
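`StandardScaler` rescales every feature to mean 0 and standard deviation 1. This matters for PCA because PCA looks for the directions of maximum variance. Without scaling, a feature measured on a larger scale would dominate the components. The NumPy sketch below reproduces the default behavior, which uses the population standard deviation (`ddof=0`). The function name `standardize` and the random data are only for illustration.

```python
import numpy as np

def standardize(X):
    """Sketch of StandardScaler().fit_transform(X) with default settings."""
    mean = X.mean(axis=0)
    std = X.std(axis=0)            # population standard deviation (ddof=0), as StandardScaler uses
    std[std == 0] = 1.0            # constant columns are left unscaled instead of dividing by 0
    return (X - mean) / std

rng = np.random.RandomState(0)
X = rng.rand(150, 4) * [10, 1, 100, 0.1]   # hypothetical features on very different scales
Z = standardize(X)
print(Z.mean(axis=0).round(6))   # each column now has mean ~0
print(Z.std(axis=0).round(6))    # and standard deviation 1
```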

iris_ss[:3]

# Helper function that returns the PCA result

from sklearn.decomposition import PCA

def get_pca_data(ss_data, n_components=2): # n_components=2: use all 4 features to extract 2 principal component vectors

    pca = PCA(n_components=n_components)

    pca.fit(ss_data)

    return pca.transform(ss_data), pca

iris_pca, pca = get_pca_data(iris_ss, 2)

iris_pca.shape

pca.mean_

pca.components_

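# Each component vector has 4 entries (one per original feature), but there are only 2 vectors

# The new axes have no names, but the 4 dimensions are reduced to 2 PCA components

# Organize the PCA result as a pandas DataFrame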

def get_pd_from_pca(pca_data, cols=['pca_component_1', 'pca_component_2']):

    return pd.DataFrame(pca_data, columns=cols)

# Summarize the four features as two features

iris_pd_pca = get_pd_from_pca(iris_pca)

iris_pd_pca['species'] = iris.target

iris_pd_pca.head()

# Plot the two features

sns.pairplot(iris_pd_pca, hue='species', height=5,

x_vars=['pca_component_1'], y_vars=['pca_component_2'])

# Apply a random forest using all four features

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_score

def rf_scores(X, y, cv=5):

    rf = RandomForestClassifier(random_state=13, n_estimators=100)

    # n_estimators=100: the number of decision trees

    scores_rf = cross_val_score(rf, X, y, scoring='accuracy', cv=cv)

    print('Score : ', np.mean(scores_rf))

rf_scores(iris_ss, iris.target)

# Using only the two PCA features

pca_X = iris_pd_pca[['pca_component_1', 'pca_component_2']]

rf_scores(pca_X, iris.target)

Principal Component Analysis - wine

wine_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/wine.csv'

wine = pd.read_csv(wine_url, sep=',', index_col=0)

wine.head()

# Classify wine color (red/white)

wine_y = wine['color']

wine_X = wine.drop(['color'], axis=1)

wine_X.head()

# Apply StandardScaler

wine_ss = StandardScaler().fit_transform(wine_X)

wine_ss[:3]

def print_variance_ratio(pca):

    print('variance_ratio : ', pca.explained_variance_ratio_)

    print('sum of variance_ratio : ', np.sum(pca.explained_variance_ratio_))
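`explained_variance_ratio_` is each component's variance divided by the total variance. Its cumulative sum therefore shows how much of the variance the first k components keep. The helper `n_components_for` below is hypothetical (not part of scikit-learn) and picks the smallest k that reaches a target ratio. scikit-learn can also make this choice itself when `n_components` is given as a float between 0 and 1, e.g. `PCA(n_components=0.95)`.

```python
import numpy as np

def n_components_for(explained_variance_ratio, threshold):
    """Smallest number of leading components whose cumulative ratio reaches threshold."""
    cumulative = np.cumsum(explained_variance_ratio)
    k = int(np.searchsorted(cumulative, threshold)) + 1   # first index where cumulative >= threshold
    return min(k, len(explained_variance_ratio))           # guard against rounding just below 1.0

variances = np.array([5.0, 3.0, 1.5, 0.5])   # hypothetical per-component variances
ratio = variances / variances.sum()           # what explained_variance_ratio_ holds: [0.5, 0.3, 0.15, 0.05]
print(np.cumsum(ratio))
print(n_components_for(ratio, 0.9))           # 3
```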

# Two principal components keep less than 50% of the variance

pca_wine, pca = get_pca_data(wine_ss, n_components=2)

print_variance_ratio(pca)

# Plot

pca_columns = ['pca_component_1','pca_component_2']

pca_wine_pd = pd.DataFrame(pca_wine, columns=pca_columns)

pca_wine_pd['color'] = wine_y.values

pca_wine_pd

sns.pairplot(pca_wine_pd, hue='color', height=5,

x_vars=['pca_component_1'], y_vars=['pca_component_2'])

# With a random forest, the two components score about the same as the original data

rf_scores(wine_ss, wine_y)

pca_X = pca_wine_pd[['pca_component_1', 'pca_component_2']]

rf_scores(pca_X, wine_y)

# With 3 principal components, the random forest reaches 98% accuracy or more

pca_wine, pca = get_pca_data(wine_ss, n_components=3)

print_variance_ratio(pca)

cols = ['pca_1', 'pca_2', 'pca_3']

pca_wine_pd = get_pd_from_pca(pca_wine, cols=cols)

pca_X = pca_wine_pd[cols]

pca_X

rf_scores(pca_X, wine_y)

# Collect the 3-component result for plotting

pca_wine_plot = pca_X.copy() # copy, so that adding the 'color' column below does not also change pca_X

pca_wine_plot['color'] = wine_y.values

pca_wine_plot.head()

# Plot in 3D

from mpl_toolkits.mplot3d import Axes3D

markers = ['^', 'o']

fig = plt.figure(figsize=(10, 8))

ax = fig.add_subplot(111, projection='3d')

for i, marker in enumerate(markers):

    x_axis_data = pca_wine_plot[pca_wine_plot['color']==i]['pca_1']

    y_axis_data = pca_wine_plot[pca_wine_plot['color']==i]['pca_2']

    z_axis_data = pca_wine_plot[pca_wine_plot['color']==i]['pca_3']

    ax.scatter(x_axis_data, y_axis_data, z_axis_data, s=20, alpha=0.5, marker=marker)

ax.view_init(30, 80)

plt.show()

# plotly

import plotly.express as px

fig = px.scatter_3d(pca_wine_plot, x = 'pca_1', y='pca_2', z='pca_3',

color = 'color', symbol='color', opacity = 0.4)

fig.update_layout(margin = dict(l=0, r=0, b= 0, t= 0))

fig.show()

Principal Component Analysis - PCA eigenface

- Olivetti data: it can be used for face recognition, but here only the 10 images of one person are used to practice PCA

# Load the data

from sklearn.datasets import fetch_olivetti_faces

faces_all = fetch_olivetti_faces()

print(faces_all.DESCR)

# Select the samples of one person only

K = 20

faces = faces_all.images[faces_all.target == K]

faces

import matplotlib.pyplot as plt

N = 2

M = 5

fig = plt.figure(figsize=(10, 5))

plt.subplots_adjust(top=1, bottom=0, hspace=0, wspace=0.05)

for n in range(N*M):

    ax = fig.add_subplot(N, M, n+1)

    ax.imshow(faces[n], cmap=plt.cm.bone)

    ax.grid(False)

    ax.xaxis.set_ticks([]) # remove the axis ticks

    ax.yaxis.set_ticks([])

plt.suptitle('Olivetti')

plt.tight_layout()

plt.show()

# Analyze with two components

from sklearn.decomposition import PCA

pca = PCA(n_components=2)

X = faces_all.data[faces_all.target == K]

W = pca.fit_transform(X) # the 10 photos, each represented by 2 weights

W

X.shape

import numpy as np

np.sqrt(4096) # each image has 4096 = 64*64 pixels, and there are 10 images

X_inv = pca.inverse_transform(W)

X_inv

# Check the reconstructed result

N = 2

M = 5

fig = plt.figure(figsize=(10, 5))

plt.subplots_adjust(top=1, bottom=0, hspace=0, wspace=0.05)

for n in range(N*M):

    ax = fig.add_subplot(N, M, n+1)

    ax.imshow(X_inv[n].reshape(64, 64), cmap=plt.cm.bone) # reshape: change the array's shape (4096 -> 64x64)

    ax.grid(False)

    ax.xaxis.set_ticks([])

    ax.yaxis.set_ticks([])

plt.suptitle('PCA result')

plt.tight_layout()

plt.show()

# The mean face (origin) and the two eigenfaces

# All 10 photos can be expressed with these three images

face_mean = pca.mean_.reshape(64, 64)

face_p1 = pca.components_[0].reshape(64, 64)

face_p2 = pca.components_[1].reshape(64, 64)

plt.figure(figsize=(12,7))

plt.subplot(131)

plt.imshow(face_mean, cmap=plt.cm.bone)

plt.grid(False) ; plt.xticks([]); plt.yticks([]); plt.title('mean')

plt.subplot(132)

plt.imshow(face_p1, cmap=plt.cm.bone)

plt.grid(False) ; plt.xticks([]); plt.yticks([]); plt.title('face_p1')

plt.subplot(133)

plt.imshow(face_p2, cmap=plt.cm.bone)

plt.grid(False) ; plt.xticks([]); plt.yticks([]); plt.title('face_p2')

plt.show()
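As the comment above says, every photo can be written with three images. In formula form, each reconstructed face is `mean + w1 * face_p1 + w2 * face_p2`, where `(w1, w2)` is that photo's row in `W`. The NumPy sketch below uses small synthetic 8x8 "images" in place of the 64x64 faces. The data is made up, and only the algebra carries over. It checks that the per-image formula matches the matrix form used by `inverse_transform`.

```python
import numpy as np

rng = np.random.RandomState(0)
# Made-up stand-in for 10 flattened faces: 8x8 = 64 pixels each, built from 2 patterns plus noise
base = rng.rand(64)
patterns = rng.randn(2, 64)
X = base + rng.randn(10, 2) @ patterns + 0.01 * rng.randn(10, 64)

mean = X.mean(axis=0)                          # like pca.mean_ (the "mean face")
_, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
p1, p2 = Vt[0], Vt[1]                          # like pca.components_[0] and [1] (the eigenfaces)
W = (X - mean) @ Vt[:2].T                      # like pca.fit_transform(X): 2 weights per image

n = 3
face_n = mean + W[n, 0] * p1 + W[n, 1] * p2    # one image rebuilt from mean + weighted eigenfaces
X_inv = W @ Vt[:2] + mean                      # like pca.inverse_transform(W): all images at once
print(np.allclose(face_n, X_inv[n]))           # True
print(face_n.reshape(8, 8).shape)              # (8, 8), ready for imshow
```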

# Choose the weights

import numpy as np

N = 2

M = 5

w = np.linspace(-5, 10, N*M)

w

# Vary the first component (using mean and face_p1)

fig = plt.figure(figsize=(10, 5))

plt.subplots_adjust(top=1, bottom=0, hspace=0, wspace=0.05)

for n in range(N*M):

    ax = fig.add_subplot(N, M, n+1)

    ax.imshow(face_mean + w[n] * face_p1, cmap=plt.cm.bone) # the weight multiplies face_p1, which is added to the mean face

    plt.grid(False)

    plt.xticks([])

    plt.yticks([])

    plt.title('Weight : ' + str(round(w[n])))

plt.tight_layout()

plt.show()

# Vary the second component (using mean and face_p2)

fig = plt.figure(figsize=(10, 5))

plt.subplots_adjust(top=1, bottom=0, hspace=0, wspace=0.05)

for n in range(N*M):

    ax = fig.add_subplot(N, M, n+1)

    ax.imshow(face_mean + w[n] * face_p2, cmap=plt.cm.bone) # the weight multiplies face_p2, which is added to the mean face

    plt.grid(False)

    plt.xticks([])

    plt.yticks([])

    plt.title('Weight : ' + str(round(w[n])))

plt.tight_layout()

plt.show()

# Vary both components together

nx, ny = (5, 5)

x = np.linspace(-5, 8, nx)

y = np.linspace(-5, 8, ny)

w1, w2 = np.meshgrid(x, y)

w1, w2

w1.shape

w1 = w1.reshape(-1, ) # reshape(-1, ): flatten to 1-D; -1 lets NumPy infer the length (5*5 = 25)

w2 = w2.reshape(-1, )
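`np.meshgrid` followed by `reshape(-1)` turns two 1-D weight ranges into a flat list of every `(w1, w2)` combination. With the default `indexing='xy'`, `w1` changes fastest. In the 5x5 subplot grid, `w1` therefore changes from left to right within a row, and `w2` changes from one row to the next. Here is a short check of that ordering:

```python
import numpy as np

x = np.linspace(-5, 8, 5)      # [-5, -1.75, 1.5, 4.75, 8]
y = np.linspace(-5, 8, 5)
w1, w2 = np.meshgrid(x, y)     # two (5, 5) grids: w1[i, j] = x[j], w2[i, j] = y[i]
w1, w2 = w1.reshape(-1), w2.reshape(-1)   # flatten row by row to 25 values each

for n in range(7):             # first row of the subplot grid, then the start of the second
    print(n, w1[n], w2[n])
```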

w1, w2

# Recombine

fig = plt.figure(figsize=(12, 10))

plt.subplots_adjust(top=1, bottom=0, hspace=0, wspace=0.05)

N = 5

M = 5

for n in range(N*M):

    ax = fig.add_subplot(N, M, n+1)

    ax.imshow(face_mean + w1[n] * face_p1 + w2[n] * face_p2, cmap=plt.cm.bone) # mean face plus both weighted eigenfaces

    plt.grid(False)

    plt.xticks([])

    plt.yticks([])

    plt.title('Weight : ' + str(round(w1[n],1)) + ', ' + str(round(w2[n],1)))

plt.tight_layout()

plt.show()

Principal Component Analysis - HAR data

# Load the HAR data

import pandas as pd

url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/HAR_dataset/features.txt'

feature_name_df = pd.read_csv(url, sep=r'\s+', header=None, names=['column_index', 'column_name'])

feature_name = feature_name_df.iloc[:, 1].values.tolist()

X_train_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/' +\

'master/dataset/HAR_dataset/train/X_train.txt'

X_test_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/' +\

'master/dataset/HAR_dataset/test/X_test.txt'

X_train = pd.read_csv(X_train_url, sep=r'\s+', header=None)

X_test = pd.read_csv(X_test_url, sep=r'\s+', header=None)

X_train.columns = feature_name

X_test.columns = feature_name

y_train_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/' +\

'master/dataset/HAR_dataset/train/y_train.txt'

y_test_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/' +\

'master/dataset/HAR_dataset/test/y_test.txt'

y_train = pd.read_csv(y_train_url, sep=r'\s+', header=None)

y_test = pd.read_csv(y_test_url, sep=r'\s+', header=None)

X_train.shape, X_test.shape, y_train.shape, y_test.shape

# Helper functions for reuse

from sklearn.decomposition import PCA

def get_pca_data(ss_data, n_components=2):

    pca = PCA(n_components=n_components)

    pca.fit(ss_data)

    return pca.transform(ss_data), pca

# PCA fit

HAR_pca, pca = get_pca_data(X_train, n_components=2)

HAR_pca.shape

pca.mean_.shape, pca.components_.shape

cols = ['pca_'+str(n) for n in range(pca.components_.shape[0])]

cols

# Helper that stores the PCA result as a DataFrame

def get_pd_from_pca(pca_data, col_num):

    cols = ['pca_' + str(n) for n in range(col_num)]

    return pd.DataFrame(pca_data, columns=cols)

# 2 components

HAR_pca, pca = get_pca_data(X_train, n_components=2)

HAR_pd_pca = get_pd_from_pca(HAR_pca, pca.components_.shape[0])

HAR_pd_pca['action'] = y_train

HAR_pd_pca.head()

import seaborn as sns

sns.pairplot(HAR_pd_pca, hue='action', height=5, x_vars=['pca_0'], y_vars=['pca_1'])

# This is what more than 500 features look like when reduced to just two

import numpy as np

def print_variance_ratio(pca):

    print('variance_ratio : ', pca.explained_variance_ratio_)

    print('sum of variance_ratio : ', np.sum(pca.explained_variance_ratio_))

print_variance_ratio(pca)

# 3 components

HAR_pca, pca = get_pca_data(X_train, n_components=3)

HAR_pd_pca = get_pd_from_pca(HAR_pca, pca.components_.shape[0])

HAR_pd_pca['action'] = y_train

print_variance_ratio(pca)

# 10 components

HAR_pca, pca = get_pca_data(X_train, n_components=10)

HAR_pd_pca = get_pd_from_pca(HAR_pca, pca.components_.shape[0])

HAR_pd_pca['action'] = y_train

print_variance_ratio(pca)

%%time

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

params = {

'max_depth' : [6, 8, 10],

'n_estimators' : [50, 100, 200],

'min_samples_leaf' : [8, 12],

'min_samples_split' : [8, 12]

}

rf_clf = RandomForestClassifier(random_state=13, n_jobs=-1)

grid_cv = GridSearchCV(rf_clf, param_grid=params, cv=2, n_jobs=-1)

grid_cv.fit(HAR_pca, y_train.values.reshape(-1,))

# Performance is a bit worse

cv_results_df = pd.DataFrame(grid_cv.cv_results_)

cv_results_df.columns

target_col = ['rank_test_score', 'mean_test_score', 'param_n_estimators', 'param_max_depth']

cv_results_df[target_col].sort_values('rank_test_score').head()

# Best parameters

grid_cv.best_params_

grid_cv.best_score_

# Apply to the test data

from sklearn.metrics import accuracy_score

rf_clf_best = grid_cv.best_estimator_

rf_clf_best

rf_clf_best.fit(HAR_pca, y_train.values.reshape(-1,))

pred1 = rf_clf_best.predict(pca.transform(X_test))

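# pca.transform(X_test): fitting PCA again on the test data would define different axes and break the model;

# X_test must be transformed with the parameters already learned from the training data, via pca.transform ★★★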
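The rule above is to learn the PCA parameters on the training set and then only `transform` the test set. Here it is as a NumPy sketch. `fit_pca` and `transform` are hypothetical helpers standing in for `pca.fit` and `pca.transform`. The mean and the axes come from `X_train` alone and are reused unchanged for `X_test`. Refitting on the test data would produce a different mean and different axes. The test coordinates would then no longer mean the same thing as the features the model was trained on.

```python
import numpy as np

def fit_pca(X, k):
    """Learn the centering vector and the top-k principal axes from X only."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:k]

def transform(X, mean, components):
    """Project X with parameters learned elsewhere (no refitting)."""
    return (X - mean) @ components.T

rng = np.random.RandomState(13)
A = rng.rand(5, 5)                              # shared mixing matrix: correlated features
X_train = rng.randn(100, 5) @ A                 # hypothetical training data
X_test = rng.randn(30, 5) @ A + 0.5             # hypothetical test data, slightly shifted

mean, components = fit_pca(X_train, k=2)
Z_train = transform(X_train, mean, components)  # what the model is trained on
Z_test = transform(X_test, mean, components)    # correct: same mean, same axes

mean_t, components_t = fit_pca(X_test, k=2)     # wrong: a new mean and new axes
print(np.abs(mean - mean_t))                    # the centering vectors differ
```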

accuracy_score(y_test, pred1)

- If xgboost does not work, uninstall it and install an older version

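# Cause: xgboost 1.6 and later expect class labels 0..n_classes-1, but the HAR labels are 1..6.

# Either pin an older version as below, or fit on y - 1 (and add 1 back to the predictions)

# https://stackoverflow.com/questions/71996617/invalid-classes-inferred-from-unique-values-of-y-expected-0-1-2-3-4-5-got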

#!pip uninstall xgboost

#!pip install xgboost==1.5.0

# xgboost

import time

from xgboost import XGBClassifier

evals = [(pca.transform(X_test), y_test)]

start_time = time.time()

xgb = XGBClassifier(n_estimators = 400, learning_rate=0.1, max_depth = 3)

xgb.fit(HAR_pca, y_train.values.reshape(-1,),

early_stopping_rounds=10, eval_set=evals)

print('Fit time : ', time.time() - start_time)

# accuracy

accuracy_score(y_test, xgb.predict(pca.transform(X_test)))

Principal Component Analysis - PCA and kNN

-

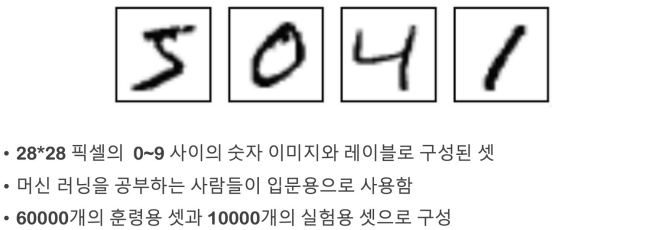

NMIST : 28*28 픽셀의 0 ~ 9 사이의 숫자 이미지와 레이블로 구성된 셋. 60000개의 훈련용 셋과 10000개의 실험용 셋으로 구성

# Load the data

import pandas as pd

df_train = pd.read_csv('./MNIST/mnist_train.csv')

df_test = pd.read_csv('./MNIST/mnist_test.csv')

df_train.shape, df_test.shape

# Training data

df_train.head()

# Test data

df_test

# Prepare the data

import numpy as np

X_train = np.array(df_train.iloc[:, 1:])

y_train = np.array(df_train['label'])

X_test = np.array(df_test.iloc[:, 1:])

y_test = np.array(df_test['label'])

X_train.shape, y_train.shape, X_test.shape, y_test.shape

# Inspect the data

import random

samples = random.choices(population=range(0, 60000), k=16)

samples

# Look at 16 random samples

import matplotlib.pyplot as plt

plt.figure(figsize=(14, 12))

for idx, n in enumerate(samples):

    plt.subplot(4, 4, idx+1)

    plt.imshow(X_train[n].reshape(28, 28), cmap='Greys', interpolation='nearest')

    plt.title(y_train[n])

plt.show()

# fit

from sklearn.neighbors import KNeighborsClassifier

import time

start_time = time.time()

clf = KNeighborsClassifier(n_neighbors=5)

clf.fit(X_train, y_train)

print('Fit time : ', time.time() - start_time)

# Predict on the test data

# kNN has to compute the distance to every training point, so prediction takes a long time

# Let's reduce the dimensionality with PCA
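To see why kNN is slow, note that predicting means computing the distance from every test point to every training point. The work therefore grows with (test points) x (training points) x (features). Below is a brute-force NumPy sketch of that idea on made-up data. scikit-learn can also use tree-based neighbor search, so this is not its implementation. PCA down to 2 features is fitted on the training set only. On this well-separated toy data, the reduced version keeps the accuracy while each distance uses 2 features instead of 50.

```python
import numpy as np

def knn_predict(X_train, y_train, X_test, k=5):
    """Brute-force kNN classifier (uniform weights, majority vote)."""
    # Squared Euclidean distances, shape (n_test, n_train):
    # one row per test point, one column per training point
    d2 = (np.sum(X_test ** 2, axis=1)[:, None]
          - 2 * X_test @ X_train.T
          + np.sum(X_train ** 2, axis=1)[None, :])
    nearest = np.argsort(d2, axis=1)[:, :k]      # indices of the k closest training points
    votes = y_train[nearest]                     # their labels, shape (n_test, k)
    # Majority vote; ties go to the smaller label
    return np.array([np.bincount(v).argmax() for v in votes])

rng = np.random.RandomState(13)
# Made-up data: two classes in 50 dimensions, centered at 0 and at 3
X_tr = np.vstack([rng.randn(200, 50), rng.randn(200, 50) + 3])
y_tr = np.array([0] * 200 + [1] * 200)
X_te = np.vstack([rng.randn(50, 50), rng.randn(50, 50) + 3])
y_te = np.array([0] * 50 + [1] * 50)

# PCA to 2 dimensions, fitted on the training data only
mean = X_tr.mean(axis=0)
_, _, Vt = np.linalg.svd(X_tr - mean, full_matrices=False)
P_tr = (X_tr - mean) @ Vt[:2].T
P_te = (X_te - mean) @ Vt[:2].T

acc_full = np.mean(knn_predict(X_tr, y_tr, X_te) == y_te)   # 50 features per distance
acc_pca = np.mean(knn_predict(P_tr, y_tr, P_te) == y_te)    # 2 features per distance
print(acc_full, acc_pca)
```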

from sklearn.metrics import accuracy_score

start_time = time.time()

pred = clf.predict(X_test)

print('Predict time : ', time.time() - start_time)

print(accuracy_score(y_test, pred))

# Reduce the dimensionality with PCA

from sklearn.pipeline import Pipeline

# Pipeline: chains the steps from preprocessing through model training into one estimator

from sklearn.decomposition import PCA

from sklearn.model_selection import GridSearchCV, StratifiedKFold

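# GridSearchCV: finds the best hyperparameters of a model by cross-validation (hyperparameter tuning)

# Register the steps (the parameter names below follow the '<step name>__<parameter>' convention)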

pipe = Pipeline([

('pca', PCA()), ('clf', KNeighborsClassifier())])

parameters = {

'pca__n_components':[2, 5, 10], 'clf__n_neighbors':[5, 10, 15]}

kf = StratifiedKFold(n_splits=5, shuffle=True, random_state=13)

grid = GridSearchCV(pipe, parameters, cv=kf, n_jobs=-1, verbose=1)

grid.fit(X_train, y_train)

# Best score

print('Best score : %0.3f' % grid.best_score_)

print('Best parameters set : ')

best_parameters = grid.best_estimator_.get_params()

for param_name in sorted(parameters.keys()):

    print('\t%s : %r' % (param_name, best_parameters[param_name]))

# About 93% accuracy is achieved

accuracy_score(y_test, grid.best_estimator_.predict(X_test))

# Check the results

# e.g. 9: precision 0.90 -> of the samples predicted as 9, 90% really are 9

def results(y_pred, y_test):

    from sklearn.metrics import classification_report, confusion_matrix

    print(classification_report(y_test, y_pred))
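The precision in the comment above can be computed by hand for one class, along with the recall that `classification_report` prints next to it. `precision_recall` is a hypothetical helper, and the labels are made up.

```python
import numpy as np

def precision_recall(y_true, y_pred, label):
    """Precision and recall of one class."""
    predicted = (y_pred == label)           # samples the model called `label`
    actual = (y_true == label)              # samples that really are `label`
    tp = np.sum(predicted & actual)         # correct among those
    precision = tp / predicted.sum() if predicted.sum() else 0.0  # of the predicted, how many are right
    recall = tp / actual.sum() if actual.sum() else 0.0           # of the real ones, how many were found
    return precision, recall

y_true = np.array([9, 9, 9, 4, 4, 7, 9, 4, 7, 9])   # made-up labels
y_pred = np.array([9, 9, 4, 4, 9, 7, 9, 4, 7, 9])
print(precision_recall(y_true, y_pred, 9))   # 4 of 5 predicted 9s are right, 4 of 5 real 9s are found
```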

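results(grid.predict(X_train), y_train) # report on the training data; pass grid.predict(X_test), y_test for held-out performance

# To check an individual digit again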

n = 1000

plt.imshow(X_test[n].reshape(28, 28), cmap='Greys', interpolation='nearest')

plt.show()

print('Answer is : ', grid.best_estimator_.predict(X_test[n].reshape(1,784)))

print('Real Label is : ', y_test[n])

# Check the misclassified data

preds = grid.best_estimator_.predict(X_test)

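preds

y_test

# Select the misclassified samples

wrong_results = X_test[y_test != preds] # compare with preds from the tuned pipeline, not pred from the plain kNN

wrong_results

wrong_results.shape[0]

samples = random.choices(population=range(0, wrong_results.shape[0]), k=16)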

plt.figure(figsize=(14, 12))

for idx, n in enumerate(samples):

    plt.subplot(4, 4, idx+1)

    plt.imshow(wrong_results[n].reshape(28, 28), cmap='Greys', interpolation='nearest')

    # interpolation='nearest': nearest-neighbor interpolation; each pixel is drawn as a solid block without smoothing, so the low-resolution digit stays crisp

    # Source: https://bentist.tistory.com/23

    plt.title(grid.best_estimator_.predict(wrong_results[n].reshape(1, 784))[0])

plt.show()

Titanic data using PCA and kNN

# Titanic data

import pandas as pd

titanic_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/titanic.xls'

titanic = pd.read_excel(titanic_url)

titanic.head()

# Split a title out of each name (social status is reflected in the name)

import re

title = []

for idx, dataset in titanic.iterrows():

    title.append(re.search(r'\,\s\w+(\s\w+)?\.', dataset['name']).group()[2:-1])

titanic['title'] = title
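The regular expression looks for a comma, a space, one or two words, and a period. That is where the title sits in names written as `Last, Title. First`. Here is a quick check on sample strings in that format (the helper `extract_title` is only for illustration):

```python
import re

def extract_title(name):
    """Title between ', ' and '.', e.g. 'Miss' from 'Allen, Miss. Elisabeth Walton'."""
    match = re.search(r'\,\s\w+(\s\w+)?\.', name)   # ", " + one or two words + "."
    # group() is e.g. ', Miss.'; [2:-1] drops the leading ', ' and the trailing '.'
    # (assumes every name contains a title; re.search returns None otherwise)
    return match.group()[2:-1]

for name in ['Allen, Miss. Elisabeth Walton',
             'Rothes, the Countess. of (Lucy Noel Martha Dyer-Edwards)']:
    print(extract_title(name))   # Miss, then the Countess
```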

titanic.head()

# Separate noble (rare) titles from common ones

titanic['title'] = titanic['title'].replace('Mlle', 'Miss')

titanic['title'] = titanic['title'].replace('Ms', 'Miss')

titanic['title'] = titanic['title'].replace('Mme', 'Mrs')

Rare_f = ['Dona', 'Dr', 'Lady', 'the Countess']

Rare_m = ['Capt', 'Col', 'Don', 'Major', 'Rev', 'Sir', 'Jonkheer', 'Master']

for each in Rare_f:

    titanic['title'] = titanic['title'].replace(each, 'Rare_f')

for each in Rare_m:

    titanic['title'] = titanic['title'].replace(each, 'Rare_m')

titanic['title'].unique()

# Create the gender column

from sklearn.preprocessing import LabelEncoder

le_sex = LabelEncoder()

le_sex.fit(titanic['sex'])

titanic['gender'] = le_sex.transform(titanic['sex'])

# Create the grade column (titles have to be converted to numbers)

from sklearn.preprocessing import LabelEncoder

le_grade = LabelEncoder()

le_grade.fit(titanic['title'])

titanic['grade'] = le_grade.transform(titanic['title'])
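`LabelEncoder` assigns each distinct value an integer in sorted order. The same mapping can be reproduced with `np.unique(..., return_inverse=True)` (the title list is hypothetical). Note that the codes impose an order, for example `Rare_m` > `Mrs`, which a distance-based model like kNN will treat as meaningful. One-hot encoding avoids this, at the cost of more columns.

```python
import numpy as np

titles = np.array(['Mr', 'Miss', 'Mrs', 'Mr', 'Rare_m', 'Rare_f', 'Miss'])   # hypothetical column
classes, codes = np.unique(titles, return_inverse=True)
print(classes)   # ['Miss' 'Mr' 'Mrs' 'Rare_f' 'Rare_m'], sorted like le_grade.classes_
print(codes)     # [1 0 2 1 4 3 0], one integer per row like le_grade.transform(...)
```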

le_grade.classes_

# Tidy up the data

titanic.head()

# Keep only rows where age and fare are not null

titanic = titanic[titanic['age'].notnull()]

titanic = titanic[titanic['fare'].notnull()]

titanic.info()

# Split the data

from sklearn.model_selection import train_test_split

X = titanic[['pclass', 'age', 'sibsp', 'parch', 'fare', 'gender', 'grade']].astype('float')

y = titanic['survived']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=13)

# Apply PCA

from sklearn.decomposition import PCA

def get_pca_data(ss_data, n_components=2):

    pca = PCA(n_components=n_components)

    pca.fit(ss_data)

    return pca.transform(ss_data), pca

def get_pd_from_pca(pca_data, col_num):

    cols = ['pca_'+str(n) for n in range(col_num)]

    return pd.DataFrame(pca_data, columns=cols)

import numpy as np

def print_variance_ratio(pca, only_sum=False):

    if not only_sum:

        print('variance_ratio : ', pca.explained_variance_ratio_)

    print('sum of variance_ratio : ', np.sum(pca.explained_variance_ratio_))

# Transform onto two axes

pca_data, pca = get_pca_data(X_train, n_components=2)

print_variance_ratio(pca)

# Plot

import seaborn as sns

pca_columns = ['pca_1', 'pca_2']

pca_pd = pd.DataFrame(pca_data, columns=pca_columns)

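pca_pd['survived'] = y_train.values # .values: align by position, ignoring y_train's shuffled index

sns.pairplot(pca_pd, hue='survived', height=5, x_vars=['pca_1'], y_vars=['pca_2'])

# Now with three components

# Transform onto three axes

pca_data, pca = get_pca_data(X_train, n_components=3)

pca_pd = get_pd_from_pca(pca_data, 3)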

pca_pd['survived'] = y_train.values

pca_pd.head()

# Plot

# plotly.express

import plotly.express as px

fig = px.scatter_3d(pca_pd,

x='pca_0', y='pca_1', z='pca_2',

color='survived', symbol='survived',

opacity=0.4)

fig.update_layout(margin = dict(l=0, r=0, b=0, t=0))

fig.show()

# Build the pipeline

from sklearn.neighbors import KNeighborsClassifier

from sklearn.metrics import accuracy_score

from sklearn.pipeline import Pipeline

from sklearn.preprocessing import StandardScaler

estimators = [('scaler', StandardScaler()),

('pca', PCA(n_components=3)),

('clf', KNeighborsClassifier(n_neighbors=20))]

pipe = Pipeline(estimators)

pipe.fit(X_train, y_train)

pred = pipe.predict(X_test)
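The pipeline chains three steps, each fitted on `X_train` only. The scaler learns the mean and standard deviation, PCA learns its axes on the scaled data, and kNN is fitted on the 3 PCA coordinates. A new passenger goes through the same learned transforms. For kNN with uniform weights, `predict_proba` is the share of each class among the `n_neighbors` nearest training points, so with 20 neighbors the probabilities are multiples of 0.05. Here is a NumPy sketch on randomly generated stand-in data (the data, labels, and helper names are all hypothetical):

```python
import numpy as np

def knn_proba(P_train, y_train, p, k=20):
    """Share of each class (0/1) among the k nearest training points, uniform weights."""
    d = np.linalg.norm(P_train - p, axis=1)
    nearest = np.argsort(d)[:k]
    return np.bincount(y_train[nearest], minlength=2) / k

rng = np.random.RandomState(13)
# Hypothetical stand-in for the 7 columns: pclass, age, sibsp, parch, fare, gender, grade
X_train = rng.rand(100, 7) * [3, 80, 5, 5, 500, 1, 4]
y_train = (X_train[:, 5] > 0.5).astype(int)          # hypothetical 0/1 labels

# Step 1: scaler statistics learned from the training data
mu, sd = X_train.mean(axis=0), X_train.std(axis=0)
Z_train = (X_train - mu) / sd
# Step 2: PCA axes learned from the scaled training data (already centered)
_, _, Vt = np.linalg.svd(Z_train, full_matrices=False)
components = Vt[:3]
P_train = Z_train @ components.T

def pipeline_proba(x):
    """Apply the learned scaler and PCA to one sample, then kNN."""
    p = ((x - mu) / sd) @ components.T
    return knn_proba(P_train, y_train, p)

proba = pipeline_proba(np.array([3, 18, 0, 0, 5, 1, 1]))
print(proba)   # [P(class 0), P(class 1)], like predict_proba(...)[0]
```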

print(accuracy_score(y_test, pred))

# Check again with two example passengers

dicaprio = np.array([[3, 18, 0, 0, 5, 1, 1]])

print('Dicaprio : ', pipe.predict_proba(dicaprio)[0, 1])

winslet = np.array([[1, 16, 1, 1, 100, 0, 3]])

print('Winslet : ', pipe.predict_proba(winslet)[0, 1])

This was pretty hard... ㅠㅠ

💻 Source: 제로베이스 데이터 취업 스쿨 (Zerobase Data Job School)