Clustering

- 비지도 학습

- 군집 Clustering 비슷한 샘플 모음

- 이상치 탐지 Outier detection : 정상 데이터가 어떻게 보이는지 학습, 비정상 샘플을 감지

- 밀도 추정 : 데이터셋의 확률 밀도 함수 Probability Density Function PDF를 추정. 이상치 탐지 등에 사용 - K-Means :

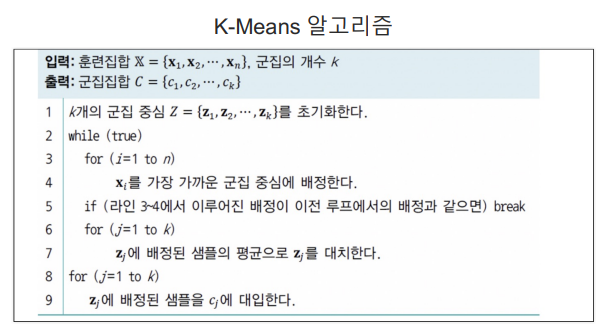

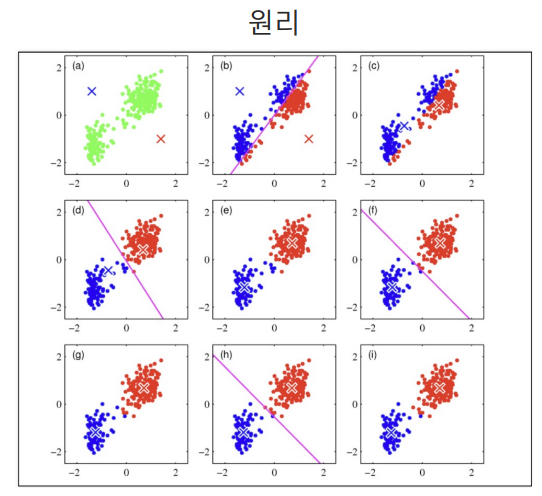

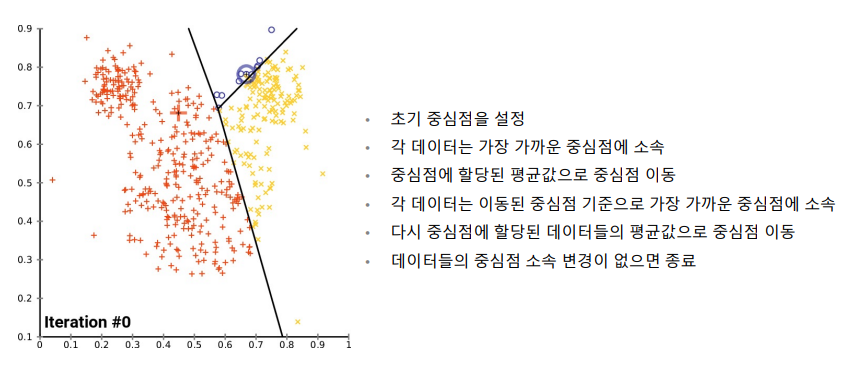

- 군집화에서 가장 일반적인 알고리즘

- 군집 중심(centroid)이라는 임의의 지점을 선택해서 해당 중심에 가장 가까운 포인트들을 선택하는 군집화

- 일반적인 군집화에서 가장 많이 사용되는 기법

- 거리 기반 알고리즘으로 속성의 개수가 매우 많을 경우 군집화의 정확도가 떨어짐

# iris 데이터로 실습

from sklearn.preprocessing import scale

from sklearn.datasets import load_iris

from sklearn.cluster import KMeans

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

%matplotlib inline

iris = load_iris()# 특징 이름 - 항상 뒤에 (cm)가 불편함

iris.feature_names# 뒷글자 자르기

cols = [each[:-5] for each in iris.feature_names]

cols# iris 데이터 정리

iris_df = pd.DataFrame(data = iris.data, columns=cols)

iris_df.head()# 편의상 두 개의 특성만

feature = iris_df[['petal length', 'petal width']]

feature.head()군집화

- 군집화

- n_clusters : 군집화 할 개수, 즉 군집 중심점의 개수

- init : 초기 군집 중심점의 좌표를 설정하는 방식을 결정

- max_iter : 최대 반복 횟수, 모든 데이터의 중심점 이동이 없으면 종료

# 군집화

# n_clusters : 군집화 할 개수, 즉 군집 중심점의 개수

# init : 초기 군집 중심점의 좌표를 설정하는 방식을 결정

# max_iter : 최대 반복 횟수, 모든 데이터의 중심점 이동이 없으면 종료

model = KMeans(n_clusters=3)

model.fit(feature)# 결과 라벨 (지도학습의 라벨과 다름, 군집 중심을 구분하기 위한 것, 순서가 아님)

model.labels_# 군집 중심값

model.cluster_centers_

# 다시 정리 (그림을 그리기 위해)

predict = pd.DataFrame(model.predict(feature), columns=['cluster'])

feature = pd.concat([feature, predict], axis=1)

feature.head()# 결과 확인

centers = pd.DataFrame(model.cluster_centers_, columns=['petal length', 'petal width'])

center_x = centers['petal length']

center_y = centers['petal width']

plt.figure(figsize=(12, 8))

plt.scatter(feature['petal length'], feature['petal width'], c = feature['cluster'], alpha=0.5)

plt.scatter(center_x, center_y, s=50, marker='D', c='r')

plt.show()make_blobs

- make_blobs : 군집화 연습을 위한 데이터 생성기

# make_blobs - 군집화 연습을 위한 데이터 생성기

from sklearn.datasets import make_blobs

X, y = make_blobs(n_samples=200, n_features=2, centers=3,

cluster_std=0.8, random_state=0)

print(X.shape, y.shape)

unique, counts = np.unique(y, return_counts = True)

print(unique, counts)# 데이터 정리

cluster_df = pd.DataFrame(data=X, columns=['ftr1', 'ftr2'])

cluster_df['target'] = y

cluster_df.head()# 군집화

kmeans = KMeans(n_clusters=3, init='k-means++', max_iter=200, random_state=13)

cluster_labels = kmeans.fit_predict(X)

cluster_df['kmeans-label'] = cluster_labels# 결과 도식화

centers = kmeans.cluster_centers_

unique_labels = np.unique(cluster_labels)

markers = ['o', 's', '^', 'P', 'D', 'H', 'x']

for label in unique_labels:

label_cluster = cluster_df[cluster_df['kmeans-label']==label]

center_x_y = centers[label]

plt.scatter(x=label_cluster['ftr1'], y=label_cluster['ftr2'],

edgecolors='k', marker=markers[label])

plt.scatter(x=center_x_y[0], y=center_x_y[1], s=200, color = 'white',

alpha=0.9, edgecolors='k', marker=markers[label])

plt.scatter(x=center_x_y[0], y=center_x_y[1], s=70, color = 'k',

edgecolors='k', marker='$%d$' % label)

plt.show()

# 결과 확인

print(cluster_df.groupby('target')['kmeans-label'].value_counts())군집 평가

-

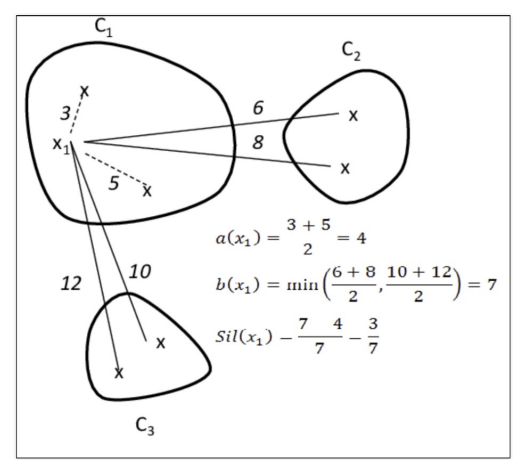

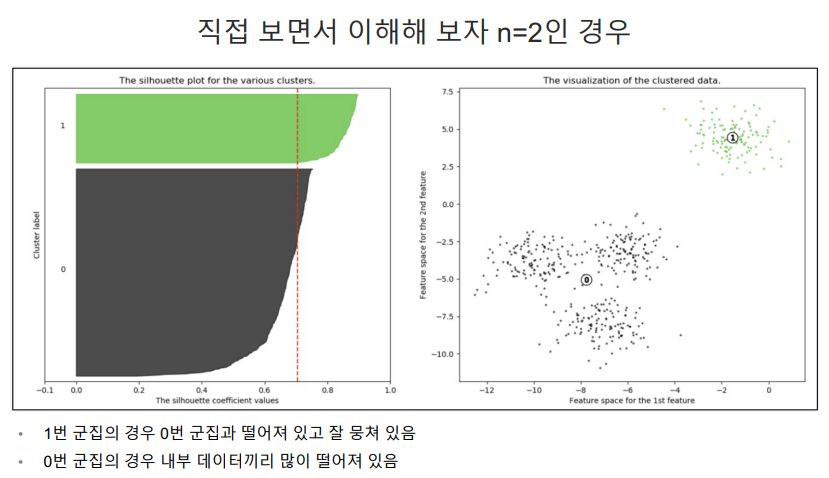

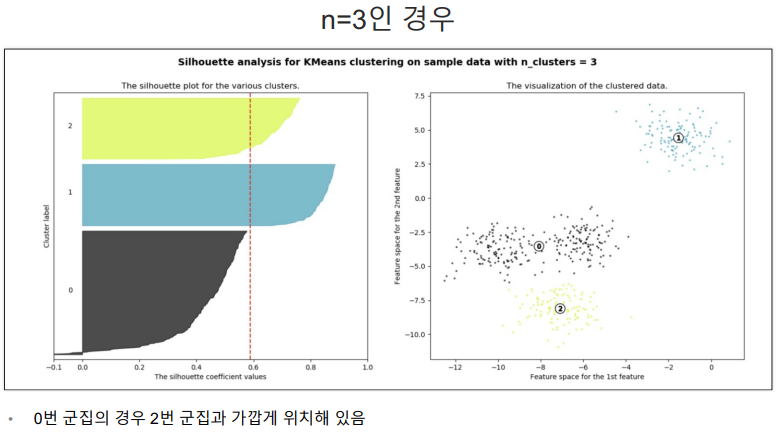

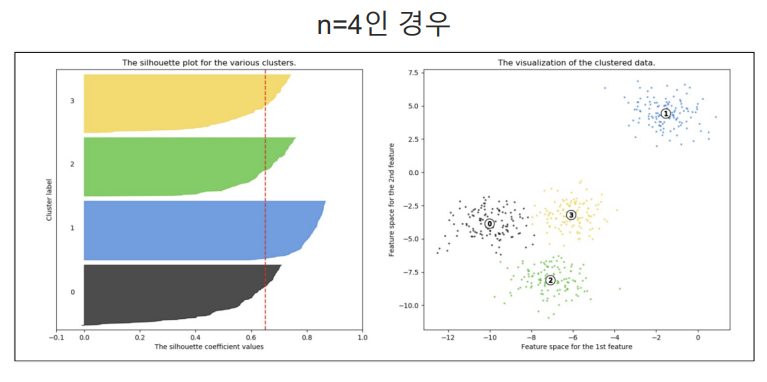

군집 결과의 평가 : 분류기는 평가 기준을 가지고 있지만, 군집은 그렇지 않다. 군집 결과를 평가하기 위해 실루엣 분석을 많이 활용한다

-

실루엣 분석 : 실루엣 분석은 각 군집 간의 거리가 얼마나 효율적으로 분리되어 있는지 나타낸다. 다른 군집과는 거리가 떨어져 있고, 동일 군집간의 데이터는 서로 가깝게 잘 뭉쳐 있는지 확인한다. 군집화가 잘 되어 있을수록 개별 군집은 비슷한 정도의 여유공간을 가지고 있다.

-

실루엣 계수 : 개별 데이터가 가지는 군집화의 지표

# 데이터 읽기

from sklearn.datasets import load_iris

from sklearn.cluster import KMeans

import pandas as pd

iris = load_iris()

feature_names = ['sepal_length', 'sepal_width', 'patal_length', 'petal_width']

iris_df = pd.DataFrame(data=iris.data, columns=feature_names)

kmeans = KMeans(n_clusters=3, init='k-means++', max_iter=300, random_state=0).fit(iris_df)# 군집 결과 정리

iris_df['cluster'] = kmeans.labels_

iris_df.head()# 군집 평가를 위한 작업

from sklearn.metrics import silhouette_samples, silhouette_score

avg_value = silhouette_score(iris.data, iris_df['cluster'])

score_values = silhouette_samples(iris.data, iris_df['cluster'])

print('avg_value', avg_value)

print('silhouette_samples() return 값의 shape', score_values.shape)# yellowbrick 설치

#!pip install yellowbrick# 실루엣 플랏의 결과 (직선형으로 구분된 것이 잘 된 것)

from yellowbrick.cluster import silhouette_visualizer

silhouette_visualizer(kmeans, iris.data, colors='yellowbrick')이미지 분할 image segmentation

- 이미지 분할

- 이미지 분할 image segmentation은 이미지를 여러 개로 분할하는 것

- 시맨틱 분할 semantic segmentation은 동일 종류의 물체에 속한 픽셀을 같은 세그먼트로 할당

- 시맨틱 분할에서 최고의 성능을 내려면 역시 CNN 기반

- 지금은 단순히 색상 분할로 시도

# 이미지 읽기

from matplotlib.image import imread

image = imread('./ladybug.png')

image.shapeplt.imshow(image)# 색상별로 클러스터링

from sklearn.cluster import KMeans

X = image.reshape(-1, 3)

kmeans = KMeans(n_clusters=8, random_state=13).fit(X) # 색상을 8개로 구분 시도

segmented_img = kmeans.cluster_centers_[kmeans.labels_]

segmented_img = segmented_img.reshape(image.shape)

# 결과 - 색상의 종류가 단순해짐

plt.imshow(segmented_img)# 이번에는 여러 개의 군집을 비교

segmented_imgs = []

n_colors = (10, 8, 6, 4, 2)

for n_clusters in n_colors:

kmeans = KMeans(n_clusters=n_clusters, random_state=13).fit(X)

segmented_img = kmeans.cluster_centers_[kmeans.labels_]

segmented_imgs.append(segmented_img.reshape(image.shape))# 이번에는 좀 복잡하게 결과를 시각화

plt.figure(figsize=(10, 5))

plt.subplots_adjust(wspace=0.05, hspace=0.1)

plt.subplot(231)

plt.imshow(image)

plt.title('Original image')

plt.axis('off')

for idx, n_clusters in enumerate(n_colors):

plt.subplot(232 + idx)

plt.imshow(segmented_imgs[idx])

plt.title('{} colors' .format(n_clusters))

plt.axis('off')

plt.show()# MNIST 데이터

from sklearn.datasets import load_digits

from sklearn.model_selection import train_test_split

X_digits, y_digits = load_digits(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X_digits, y_digits, random_state=13)# 로지스틱 회귀

from sklearn.linear_model import LogisticRegression

log_reg = LogisticRegression(multi_class='ovr', solver='lbfgs', max_iter=5000, random_state=13)

log_reg.fit(X_train, y_train)# 결과

log_reg.score(X_test, y_test)# pipeline 전처리 느낌으로 kmeans를 통과

from sklearn.pipeline import Pipeline

pipline = Pipeline([

('kmeans', KMeans(n_clusters=50, random_state=13)),

('log_reg', LogisticRegression(multi_class='ovr', solver='lbfgs',

max_iter=5000, random_state=13))

])

pipline.fit(X_train, y_train)pipline.score(X_test, y_test)# Gridsearch

from sklearn.model_selection import GridSearchCV

param_grid = dict(kmeans__n_clusters = range(2, 100))

grid_clf = GridSearchCV(pipline, param_grid, cv=3, verbose=2)

grid_clf.fit(X_train, y_train)

grid_clf.best_params_grid_clf.score(X_test, y_test)💻 출처 : 제로베이스 데이터 취업 스쿨