🧩 Getting the TensorFlow Certification - Part 10. Practice (House Hold Electric Power Consumption)

🌕 AI/DL - TensorFlow Certification

House Hold Electric Power Consumption

Forecasting with the Individual House Hold Electric Power Consumption Dataset

ABOUT THE DATASET

Original Source:

https://archive.ics.uci.edu/ml/datasets/individual+household+electric+power+consumption

The original 'Individual House Hold Electric Power Consumption Dataset'

has Measurements of electric power consumption in one household with

a one-minute sampling rate over a period of almost 4 years.

Different electrical quantities and some sub-metering values are available.

For the purpose of the examination we have provided a subset containing

the data for the first 60 days in the dataset. We have also cleaned the

dataset beforehand to remove missing values. The dataset is provided as a

csv file in the project. The dataset has a total of 7 features ordered by time.

INSTRUCTIONS

Complete the code in following functions:

1. windowed_dataset()

2. solution_model()

The model input and output shapes must match the following

specifications.

1. Model input_shape must be (BATCH_SIZE, N_PAST = 24, N_FEATURES = 7),

since the testing infrastructure expects a window of past N_PAST = 24

observations of the 7 features to predict the next 24 observations of

the same features.

2. Model output_shape must be (BATCH_SIZE, N_FUTURE = 24, N_FEATURES = 7)

3. DON'T change the values of the following constants

N_PAST, N_FUTURE, SHIFT in the windowed_dataset()

BATCH_SIZE in solution_model() (See code for additional note on

BATCH_SIZE).

4. Code for normalizing the data is provided - DON'T change it.

Changing the normalizing code will affect your score.

HINT: Your neural network must have a validation MAE of approximately 0.055 or

less on the normalized validation dataset for top marks.

WARNING: Do not use lambda layers in your model, they are not supported

on the grading infrastructure.

WARNING: If you are using the GRU layer, it is advised not to use the

'recurrent_dropout' argument (you can alternatively set it to 0),

since it has not been implemented in the cuDNN kernel and may

result in much longer training times.

Solution

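Summary of steps

- import: import the required modules.

- Preprocessing: prepare the data needed for training.

- Modeling (model): define the model.

- Compile (compile): configure the model with a loss, an optimizer, and metrics.

- Training (fit): train the model.

1. Import

Import the required modules.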

import os
import urllib.request
import zipfile

import pandas as pd
import tensorflow as tf
from tensorflow.keras.layers import Dense, Conv1D, LSTM, Bidirectional
from tensorflow.keras.models import Sequential
from tensorflow.keras.callbacks import ModelCheckpoint

2.1 Preprocessing (Load dataset)

Download the zipped CSV file and load it with pandas.

def download_and_extract_data():
    url = 'https://storage.googleapis.com/download.tensorflow.org/data/certificate/household_power.zip'
    urllib.request.urlretrieve(url, 'household_power.zip')
    with zipfile.ZipFile('household_power.zip', 'r') as zip_ref:
        zip_ref.extractall()

download_and_extract_data()

df = pd.read_csv('household_power_consumption.csv', sep=',', infer_datetime_format=True, index_col='datetime', header=0)

df.head(10)

2.2 Preprocessing (data normalization)

Normalize the scale of the data to the range between 0 and 1.

def normalize_series(data, min, max):
    data = data - min
    data = data / max
    return data

# Set N_FEATURES to the number of columns in the DataFrame
N_FEATURES = len(df.columns)

# Get the DataFrame as a numpy array and assign it to data
data = df.values

# Normalize the data
data = normalize_series(data, data.min(axis=0), data.max(axis=0))
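A quick check on a toy array shows what the provided scaling does. This is a sketch with made-up numbers (the `toy` array is hypothetical): because the function divides by the column maximum rather than by `max - min`, each column starts at 0 and tops out at `(max - min) / max`, which stays at or below 1 for non-negative data.

```python
import numpy as np

def normalize_series(data, min, max):
    # Same arithmetic as the provided function: shift by the minimum, divide by the maximum.
    data = data - min
    data = data / max
    return data

# Hypothetical toy data: 3 time steps, 2 features.
toy = np.array([[2.0, 10.0],
                [4.0, 20.0],
                [8.0, 40.0]])

scaled = normalize_series(toy, toy.min(axis=0), toy.max(axis=0))

print(scaled.min(axis=0))  # each column starts at 0
print(scaled.max(axis=0))  # (max - min) / max per column, here 0.75
```

Since the exam grades on data scaled this way, the function should be left exactly as given.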

data

2.3 Preprocessing (splitting the data)

# Split the dataset (0.8).
# Changed from the original 0.5 to 0.8; other ratios are also possible.
split_time = int(len(data) * 0.8)

x_train = data[:split_time]
x_valid = data[split_time:]

2.4 Preprocessing (creating the windowed dataset)

This line converts the dataset into a windowed dataset where a

window consists of both the observations to be included as features and the targets.

Don't change the shift parameter. The test windows are

created with the specified shift and hence it might affect your

scores. Calculate the window size so that based on the past 24 observations (observations at time steps t=1,t=2,...t=24) of the 7 variables

in the dataset, you predict the next 24 observations

(observations at time steps t=25,t=26....t=48) of the 7 variables of the dataset.

Hint: Each window should include both the past observations and

the future observations which are to be predicted. Calculate the

window size based on n_past and n_future.
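The window arithmetic in the hint can be checked without TensorFlow. The sketch below uses plain NumPy slicing on a random series; `make_windows` and `toy_series` are illustrative names, not part of the exam code. Each window has `n_past + n_future = 48` rows, the first 24 being the inputs and the last 24 the targets.

```python
import numpy as np

N_PAST, N_FUTURE, SHIFT, N_FEATURES = 24, 24, 1, 7

def make_windows(series, n_past, n_future, shift):
    """Slice a (time, features) array into (inputs, targets) pairs."""
    size = n_past + n_future  # 48: past inputs plus future targets
    inputs, targets = [], []
    # Only full windows are kept, similar to drop_remainder=True in tf.data.
    for start in range(0, len(series) - size + 1, shift):
        window = series[start:start + size]
        inputs.append(window[:n_past])
        targets.append(window[n_past:])
    return np.stack(inputs), np.stack(targets)

# Hypothetical stand-in for the normalized data: 100 time steps, 7 features.
toy_series = np.random.default_rng(0).random((100, N_FEATURES))
x, y = make_windows(toy_series, N_PAST, N_FUTURE, SHIFT)

print(x.shape, y.shape)  # (53, 24, 7) (53, 24, 7)
```

With `shift=1`, a series of length `n` yields `n - 48 + 1` windows, which is why 100 rows give 53 pairs here.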

def windowed_dataset(series, batch_size, n_past=24, n_future=24, shift=1):
    ds = tf.data.Dataset.from_tensor_slices(series)
    # Each window holds n_past inputs followed by n_future targets
    ds = ds.window(size=(n_past + n_future), shift=shift, drop_remainder=True)
    ds = ds.flat_map(lambda w: w.batch(n_past + n_future))
    ds = ds.shuffle(len(series))
    # Split each window into (inputs, targets)
    ds = ds.map(lambda w: (w[:n_past], w[n_past:]))
    return ds.batch(batch_size).prefetch(1)

Create train_set and valid_set.

# The following four options are given.
BATCH_SIZE = 32  # May be changed, but raising it is not recommended (lowering it works but training takes longer)
N_PAST = 24      # Do not change.
N_FUTURE = 24    # Do not change.
SHIFT = 1        # Do not change.

train_set = windowed_dataset(series=x_train,
                             batch_size=BATCH_SIZE,
                             n_past=N_PAST,
                             n_future=N_FUTURE,
                             shift=SHIFT)

valid_set = windowed_dataset(series=x_valid,
                             batch_size=BATCH_SIZE,
                             n_past=N_PAST,
                             n_future=N_FUTURE,
                             shift=SHIFT)

3. Model definition (Sequential)

Now it is time to build the model.
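The exam requires an output with the same number of time steps as the input (24 in, 24 out), so every layer has to preserve the time axis. A causal convolution does that by left-padding the sequence with `kernel_size - 1` zeros. The NumPy sketch below illustrates the idea for a single channel and a single hypothetical filter; it is only an illustration and not how Keras implements `Conv1D` internally.

```python
import numpy as np

def causal_conv1d(x, kernel):
    """Single-channel causal convolution: output[t] depends only on x[:t + 1]."""
    k = len(kernel)
    # Left-pad with k - 1 zeros so the output keeps the input length.
    padded = np.concatenate([np.zeros(k - 1), x])
    return np.array([np.dot(padded[t:t + k], kernel) for t in range(len(x))])

x = np.arange(1.0, 25.0)            # 24 time steps: 1, 2, ..., 24
kernel = np.array([0.0, 0.0, 1.0])  # hypothetical filter that copies the current step

out = causal_conv1d(x, kernel)
print(out.shape)  # (24,)
```

The output length equals the input length, and no output step looks at future values, which suits forecasting.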

model = tf.keras.models.Sequential([
    Conv1D(filters=32,
           kernel_size=3,
           padding="causal",
           activation="relu",
           input_shape=[N_PAST, 7],
           ),
    Bidirectional(LSTM(32, return_sequences=True)),
    Dense(32, activation="relu"),
    Dense(16, activation="relu"),
    Dense(N_FEATURES)
])

Model summary

model.summary()

4. Compile (compile)

# Adam optimizer with learning_rate=0.0005
optimizer = tf.keras.optimizers.Adam(learning_rate=0.0005)

model.compile(loss='mae',
              optimizer=optimizer,
              metrics=["mae"]
              )

ModelCheckpoint: creating a checkpoint

Create a ModelCheckpoint so that the best model, judged by val_loss, is saved as training proceeds epoch by epoch.

checkpoint_path sets the file name under which the model is saved.

Declare the ModelCheckpoint and set the appropriate options.

checkpoint_path='model/my_checkpoint.ckpt'

checkpoint = ModelCheckpoint(checkpoint_path,

save_weights_only=True,

save_best_only=True,

monitor='val_loss',

verbose=1,

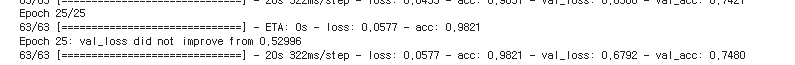

)5. 학습 (fit)

model.fit(train_set,
          validation_data=(valid_set),
          epochs=20,
          callbacks=[checkpoint],
          )
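With `save_best_only=True`, the checkpoint file is overwritten only when the monitored value improves, so the weights held in memory after the last epoch can be worse than the saved ones. A plain-Python sketch of that bookkeeping, using made-up `val_loss` values (not results from this model):

```python
# Made-up validation losses for five epochs (illustration only).
val_losses = [0.080, 0.061, 0.054, 0.058, 0.057]

best_loss = float("inf")
best_epoch = None
for epoch, val_loss in enumerate(val_losses, start=1):
    # Mirrors save_best_only=True with monitor='val_loss': save only on improvement.
    if val_loss < best_loss:
        best_loss, best_epoch = val_loss, epoch
        print(f"epoch {epoch}: val_loss improved to {val_loss:.3f}, saving weights")
    else:
        print(f"epoch {epoch}: val_loss did not improve from {best_loss:.3f}")

print(best_epoch, best_loss)  # 3 0.054
```

In this toy run the saved weights come from epoch 3, while the model in memory would hold the epoch 5 weights.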

Load Weights after training (ModelCheckpoint)

After training finishes, be sure to call load_weights.

Otherwise, there was no point in creating the ModelCheckpoint.

model.load_weights(checkpoint_path)

If you want to validate the model:

# HINT: Your neural network must have a validation MAE of approximately 0.055 or

# less on the normalized validation dataset for top marks.

model.evaluate(valid_set)
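The MAE in the hint is the absolute error averaged over every window, time step, and feature of the normalized data. A minimal NumPy sketch with made-up arrays (not real model output) shows the calculation:

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical targets and predictions: 10 windows, 24 future steps, 7 features.
y_true = rng.random((10, 24, 7))
y_pred = y_true + 0.05  # every prediction is off by exactly 0.05

mae = np.mean(np.abs(y_true - y_pred))
print(round(float(mae), 3))  # 0.05
```

Because the model above is compiled with both `loss='mae'` and `metrics=["mae"]`, `model.evaluate` reports the same quantity twice, once as the loss and once as the metric.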