😂 Machine Learning

😭 XOR Practice

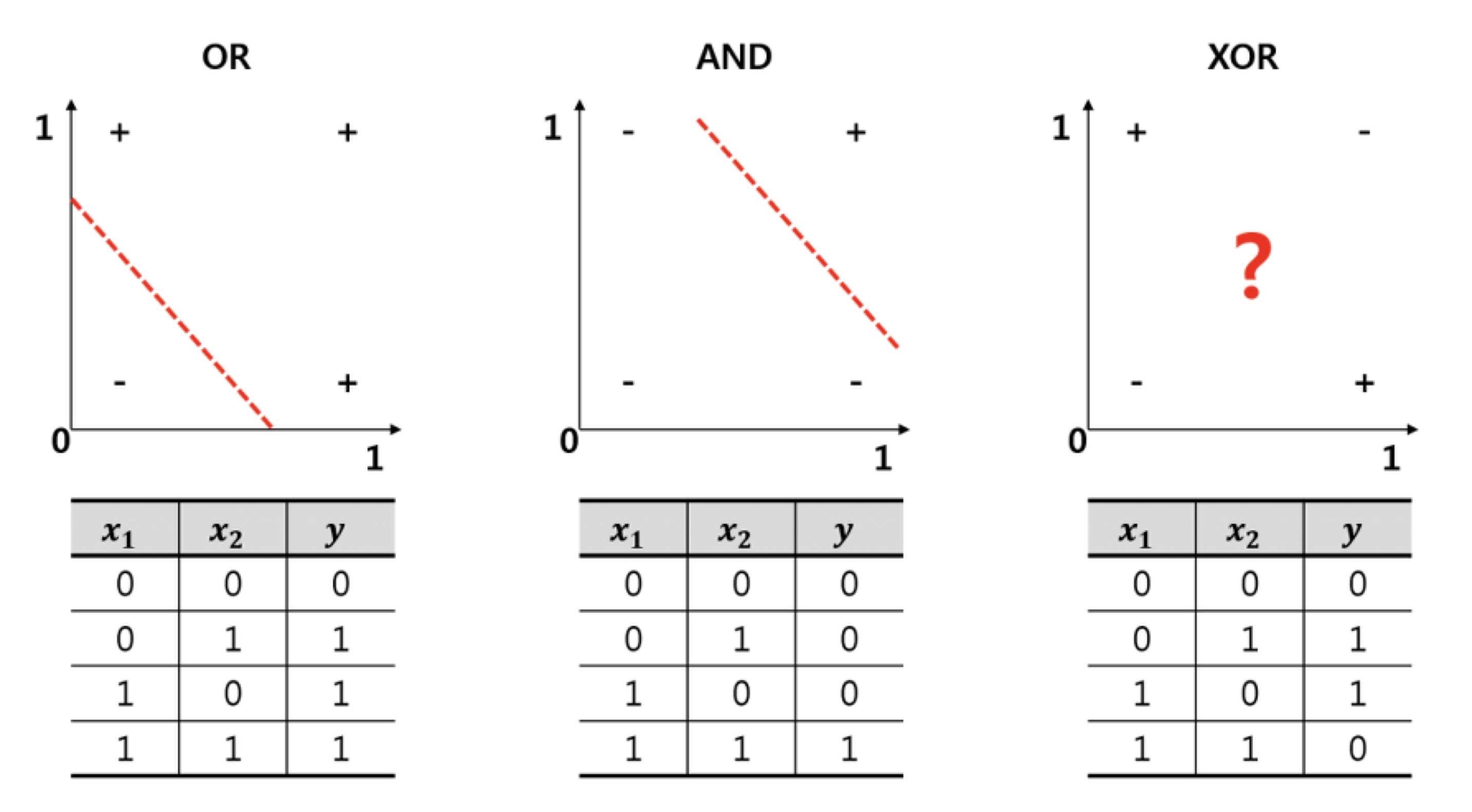

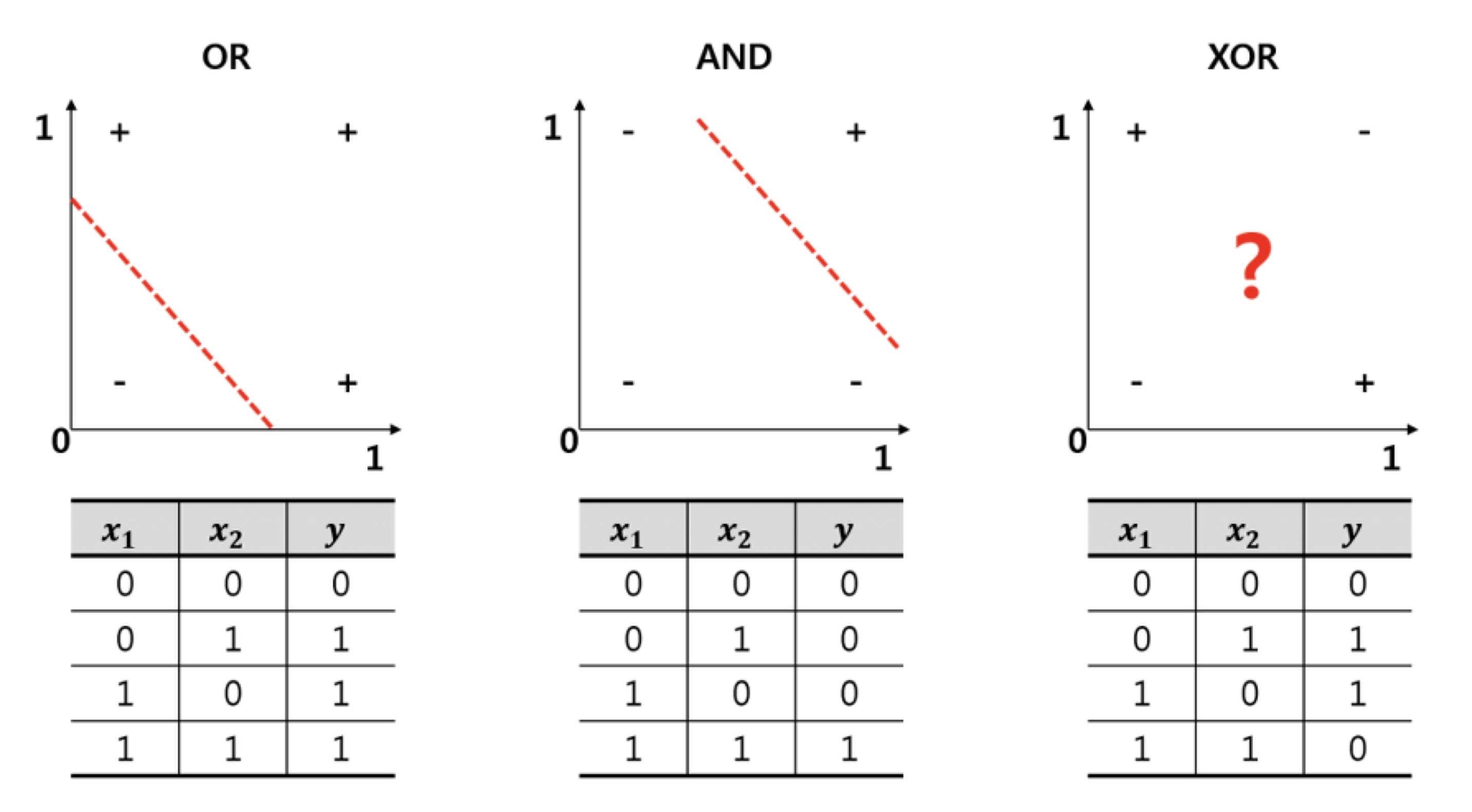

- XOR has the properties shown in the figures: it outputs 1 only when its two inputs differ, and 0 otherwise.

- Import what we need for the exercise.

```python
import numpy as np
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.optimizers import Adam, SGD
```

- We want to know whether our model can output XOR correctly, so we defined the inputs and expected outputs as follows. Since we will be using Keras, we also set the data type to float32.

```python
x_data = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=np.float32)
y_data = np.array([[0], [1], [1], [0]], dtype=np.float32)
```
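As a quick sanity check, the labels in `y_data` can be recomputed directly from the inputs. This is a minimal NumPy sketch using `np.logical_xor`. It only confirms that the target column really is XOR of the two input columns.

```python
import numpy as np

# Same inputs as x_data above: each row is one (x1, x2) pair
x = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=np.float32)

# XOR is 1 exactly when the two inputs differ (nonzero counts as True)
xor = np.logical_xor(x[:, 0], x[:, 1]).astype(np.float32).reshape(-1, 1)

print(xor.ravel())
```

The result has the same shape, (4, 1), as `y_data`, so the two can be compared with `np.array_equal`.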

- Binary logistic regression

- First, we tried building the model with binary logistic regression.

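- In conclusion, binary logistic regression cannot produce a model that outputs XOR correctly. A single sigmoid unit splits the inputs with one straight line (w1·x1 + w2·x2 + b = 0). No single line can put (0, 1) and (1, 0) on one side and (0, 0) and (1, 1) on the other.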

- We set epochs to 1000 when fitting the model. Printing progress for every epoch would produce 1000 lines of output, so we set verbose to 0 to suppress it. (With verbose=1, training progress is printed.)

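```python
# A single Dense unit with a sigmoid activation = binary logistic regression
model = Sequential([
    Dense(1, activation='sigmoid')
])

# `lr` is deprecated in newer Keras versions; `learning_rate` is the current argument name
model.compile(loss='binary_crossentropy', optimizer=SGD(learning_rate=0.1))

model.fit(x_data, y_data, epochs=1000, verbose=0)
```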

- We then ran predictions with the trained model.

- The values we wanted were 0 1 1 0. The printed predictions are not close to them, so this model fails.

```python
y_pred = model.predict(x_data)
print(y_pred)
```
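Why does this fail no matter how long we train? A sigmoid output is above 0.5 exactly when w1·x1 + w2·x2 + b > 0, so the unit classifies by which side of a line a point falls on.

For XOR, the four required conditions are:

- b ≤ 0
- w2 + b > 0
- w1 + b > 0
- w1 + w2 + b ≤ 0

Adding the middle two gives w1 + w2 + 2b > 0, while adding the first and last gives w1 + w2 + 2b ≤ 0, which is a contradiction.

The sketch below illustrates this with a brute-force search over a coarse grid of weights. The grid and the helper `find_linear_separator` are assumptions made for illustration. A finite grid only illustrates the point; the argument above is the actual proof. AND is included for contrast because a line does exist for it.

```python
import itertools
import numpy as np

x = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=np.float32)
y_xor = np.array([0, 1, 1, 0])
y_and = np.array([0, 0, 0, 1])

# Hypothetical search grid: w1, w2, b each from -2 to 2 in steps of 0.1
grid = np.linspace(-2, 2, 41)

def find_linear_separator(x, y):
    """Return (w1, w2, b) with (w1*x1 + w2*x2 + b > 0) == y on every row, or None."""
    for w1, w2, b in itertools.product(grid, grid, grid):
        pred = (x @ np.array([w1, w2]) + b > 0).astype(int)
        if np.array_equal(pred, y):
            return w1, w2, b
    return None

print("XOR:", find_linear_separator(x, y_xor))  # no line found
print("AND:", find_linear_separator(x, y_and))  # some line found
```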

- XOR with deep learning (MLP)

- The imports are already done, so let's build the model right away.

- The model we are building is a Multilayer Perceptron (MLP). Unlike binary logistic regression, it consists of multiple layers.

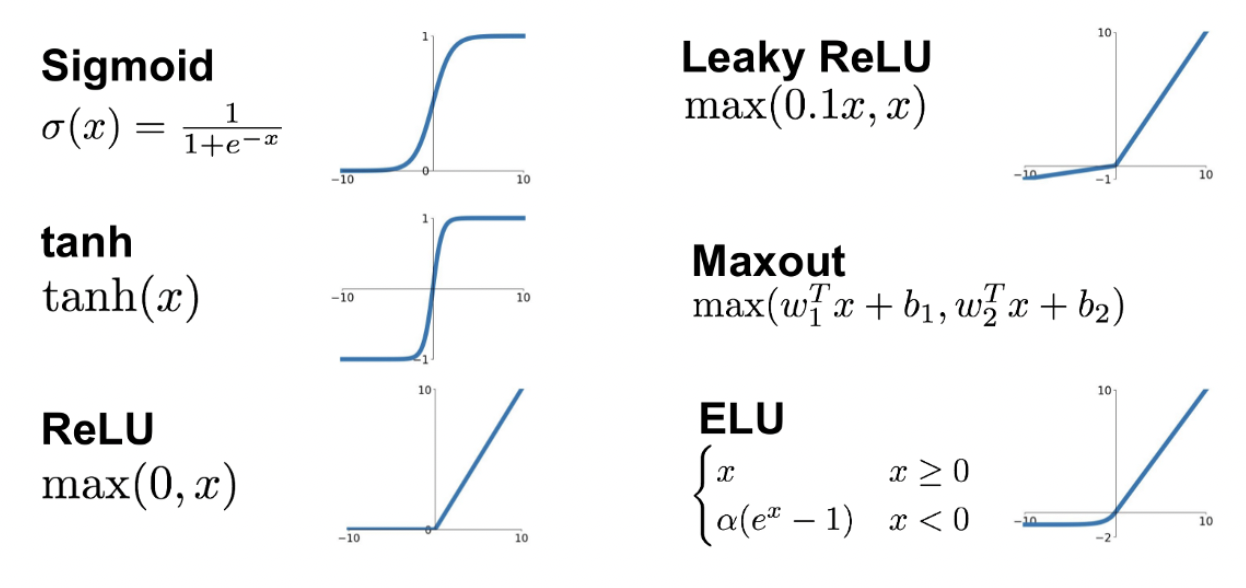

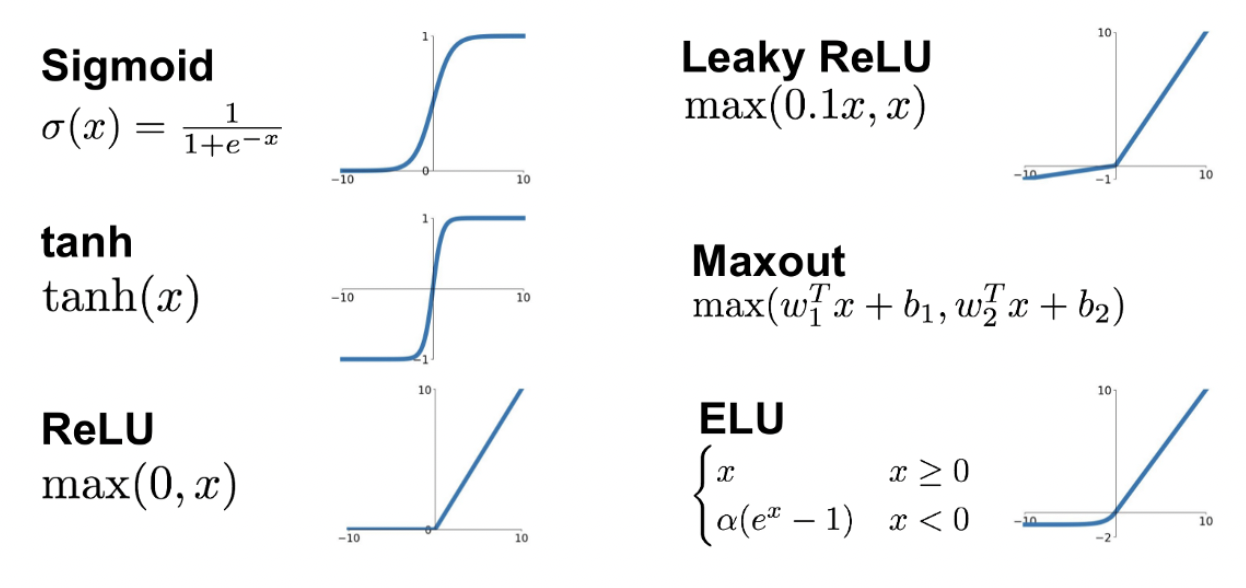

We used ReLU as the activation function. ReLU outputs 0 for inputs below 0 (y = 0) and passes inputs above 0 through unchanged (y = x). In other words, ReLU(x) = max(0, x).
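The ReLU definition above fits in one line of NumPy. This is a sketch of the math, not Keras's internal implementation.

```python
import numpy as np

def relu(z):
    # Element-wise max(0, z): negative inputs become 0, positive inputs pass through
    return np.maximum(0, z)

z = np.array([-2.0, -0.5, 0.0, 0.5, 2.0])
print(relu(z))
```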

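```python
model = Sequential([
    Dense(8, activation='relu'),     # hidden layer: 8 ReLU units
    Dense(1, activation='sigmoid'),  # output layer: probability that XOR = 1
])

# `learning_rate` replaces the deprecated `lr` argument
model.compile(loss='binary_crossentropy', optimizer=SGD(learning_rate=0.1))

model.fit(x_data, y_data, epochs=1000, verbose=0)
```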

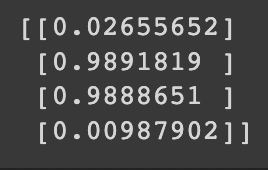

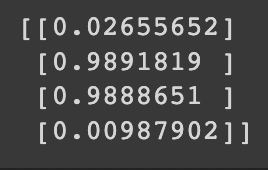

- Now that the model is trained, let's use it to predict the outputs.

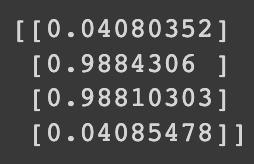

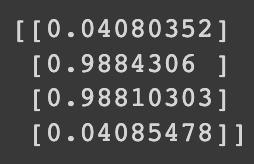

- We got results close to the desired 0 1 1 0.

```python
y_pred = model.predict(x_data)
print(y_pred)
```
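Why does adding a hidden layer help? The sketch below uses the classic hand-picked weights for a 2-unit ReLU hidden layer. These are not the weights our trained model learned.

- The hidden units compute h1 = ReLU(x1 + x2) and h2 = ReLU(x1 + x2 - 1).
- A linear output h1 - 2·h2 then reproduces XOR exactly.

The hidden layer remaps the four points so that a single linear output can separate them. The Keras model additionally applies a sigmoid at the output, which only squashes this value into (0, 1).

```python
import numpy as np

def relu(z):
    return np.maximum(0, z)

x = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=np.float32)

# Hidden layer: 2 ReLU units with hand-picked (hypothetical) weights
W1 = np.array([[1, 1],
               [1, 1]], dtype=np.float32)  # column j = weights into hidden unit j
b1 = np.array([0, -1], dtype=np.float32)

# Output layer: linear combination h1 - 2*h2
W2 = np.array([[1], [-2]], dtype=np.float32)
b2 = np.array([0], dtype=np.float32)

h = relu(x @ W1 + b1)  # h1 = relu(x1 + x2), h2 = relu(x1 + x2 - 1)
out = h @ W2 + b2
print(out.ravel())
```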

- Keras Functional API

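The Sequential API is convenient when layers run strictly one after another, but it is limited for more complex networks (for example, models with multiple inputs or outputs). For this reason the Functional API is commonly used in practice, so we tried it here as well. First, we imported what the Functional API needs.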

```python
import numpy as np
from tensorflow.keras.models import Sequential, Model
from tensorflow.keras.layers import Dense, Input
from tensorflow.keras.optimizers import Adam, SGD
```

We built the model with the Functional API.

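It has one hidden layer with ReLU activation. Each layer is called on the previous layer's output. The `input` tensor declared on the first line is passed in the trailing parentheses of the `hidden` line, and `hidden` is passed to the output layer the same way.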

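- The arguments are named `inputs` and `outputs` (plural) because a model can take more than one input and produce more than one output. Here there is a single input with 2 features and a single output.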

- Unlike the earlier examples, which used `Sequential`, we declare this model with `Model`.

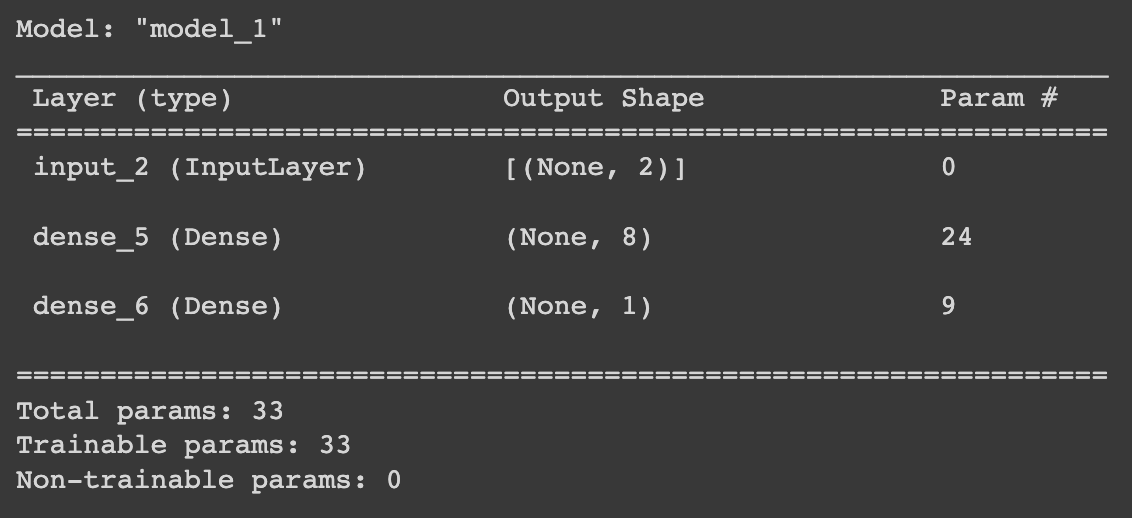

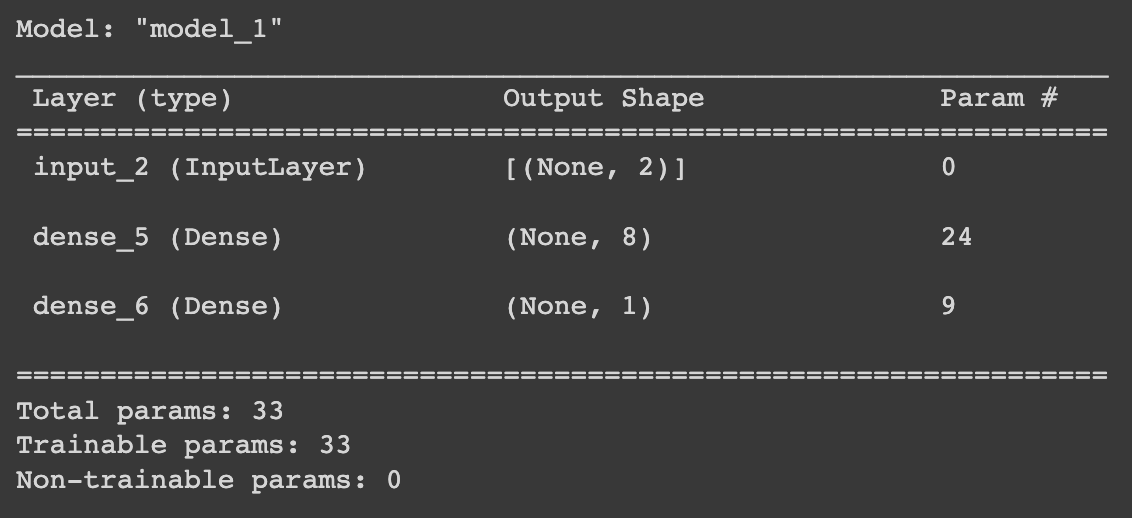

`model.summary()` prints an overview of the model.

```python
input = Input(shape=(2,))                        # each sample has 2 features
hidden = Dense(8, activation='relu')(input)      # call the layer on `input`
output = Dense(1, activation='sigmoid')(hidden)  # call the layer on `hidden`

model = Model(inputs=input, outputs=output)

model.compile(loss='binary_crossentropy', optimizer=SGD(learning_rate=0.1))

model.summary()
```

`model.summary()` output:

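- For the input layer, the Output Shape shows 2, meaning each sample has 2 input values. The Dense (hidden) layer shows 8 because it has 8 units, and the output layer shows 1 because it has a single unit.
- The important part is `None`, which is the batch size. It appears as `None` because the batch size is not fixed when the model is built; it is up to us.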

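- The summary also lists the number of parameters for each layer and in total.
- Non-trainable params: parameters that are not updated by training, such as the moving mean and variance of BatchNormalization layers or the weights of frozen layers. Dropout layers have no parameters at all.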
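The parameter counts in the summary can be checked by hand. A Dense layer has one weight per (input, unit) pair plus one bias per unit.

The helper `dense_params` below is hypothetical. It reproduces this arithmetic for the XOR model and, as a second example, for the sign-language model built later in this post.

```python
def dense_params(n_in, n_units):
    # weights (n_in * n_units) + biases (n_units)
    return n_in * n_units + n_units

# XOR model: 2 -> 8 -> 1
hidden_params = dense_params(2, 8)  # 2*8 + 8 = 24
output_params = dense_params(8, 1)  # 8*1 + 1 = 9
total = hidden_params + output_params
print(hidden_params, output_params, total)

# Sign-language model: 784 -> 1024 -> 512 -> 256 -> 24
sizes = [784, 1024, 512, 256, 24]
sign_total = sum(dense_params(a, b) for a, b in zip(sizes, sizes[1:]))
print(sign_total)
```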

- We trained the model and ran predictions with it.

```python
model.fit(x_data, y_data, epochs=1000, verbose=0)

y_pred = model.predict(x_data)
print(y_pred)
```

😭 English Alphabet Sign Language Dataset Practice

- The dataset consists of images, so training can be slow. We therefore switched the runtime to GPU:

[Runtime] - [Change runtime type] - [Hardware accelerator] select GPU - [Save]

- The data comes from Kaggle, so we set our Kaggle credentials as below, then downloaded and unzipped the dataset.

```python
import os

# Replace with your own Kaggle username and API key
os.environ['KAGGLE_USERNAME'] = 'username'
os.environ['KAGGLE_KEY'] = 'key'

# Shell commands: the leading `!` runs them from a notebook cell
!kaggle datasets download -d datamunge/sign-language-mnist
!unzip sign-language-mnist.zip

from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input, Dense
from tensorflow.keras.optimizers import Adam, SGD
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.preprocessing import OneHotEncoder
```

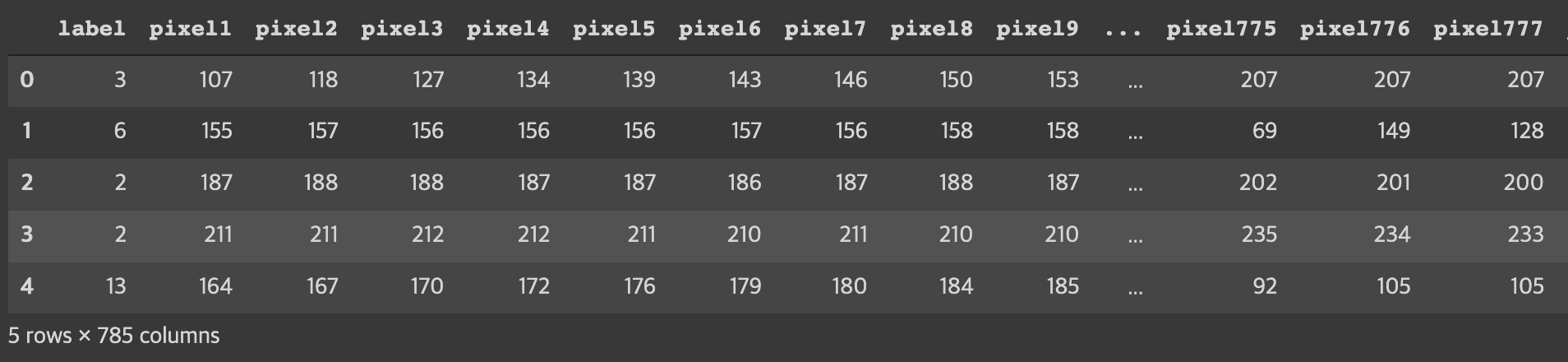

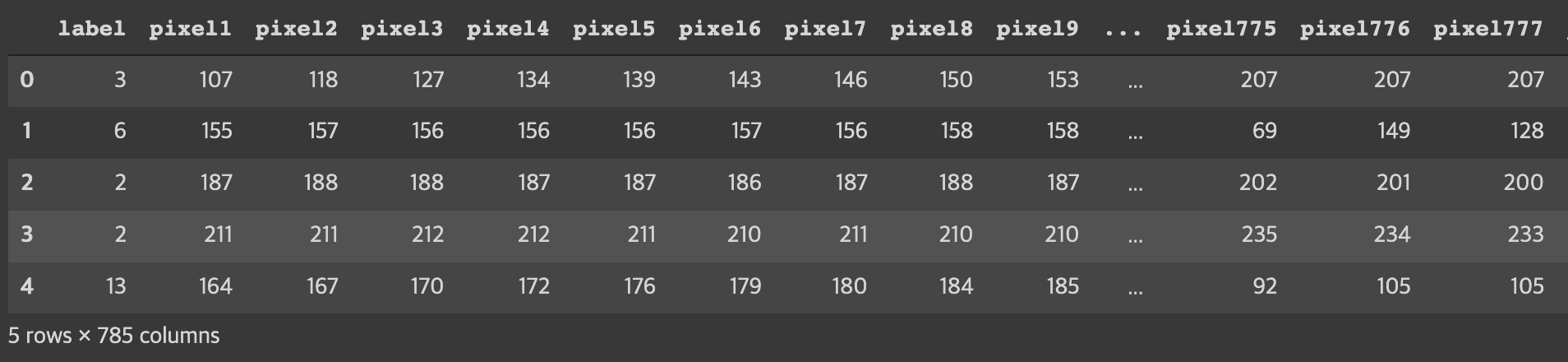

- This dataset comes with separate train and test sets. We load the train set first.

```python
train_df = pd.read_csv('sign_mnist_train.csv')
train_df.head()
```

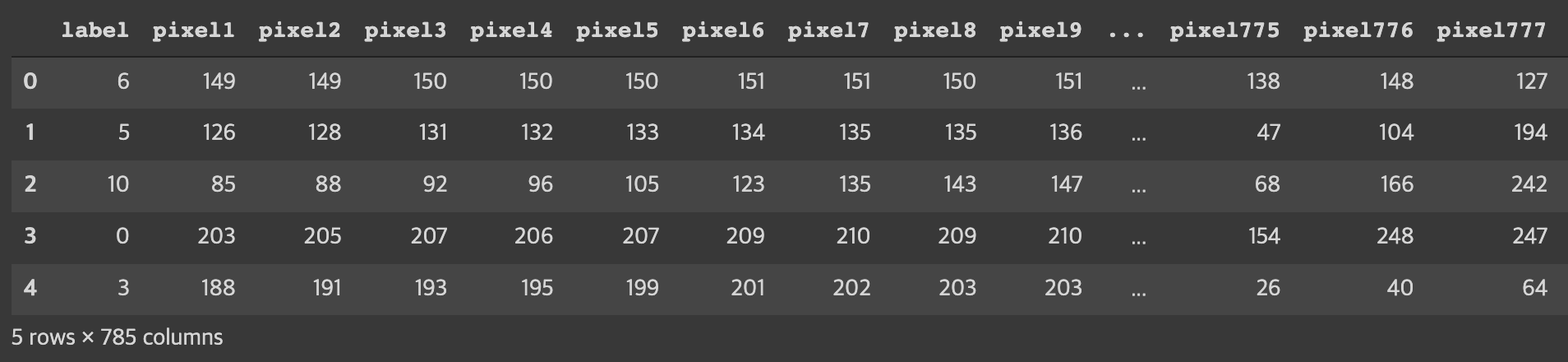

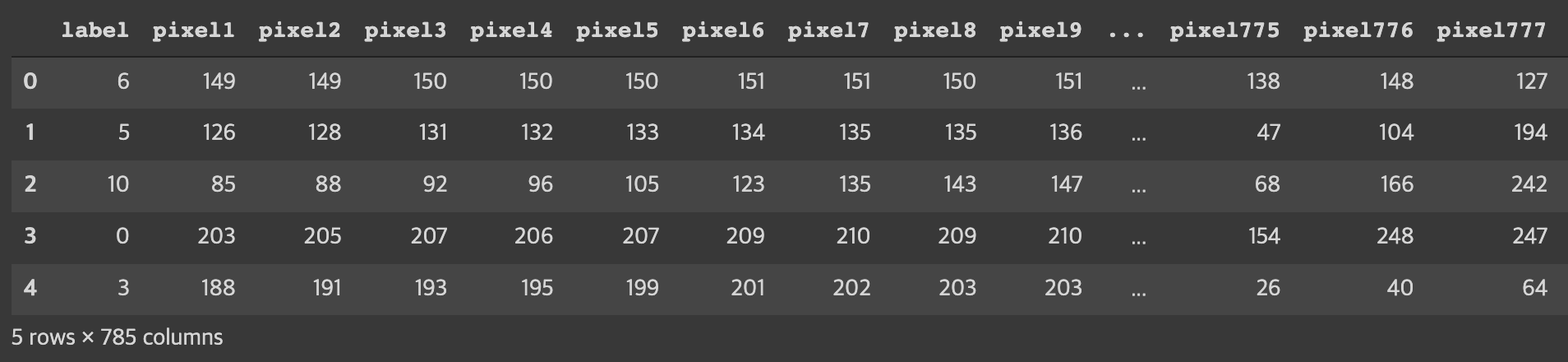

```python
test_df = pd.read_csv('sign_mnist_test.csv')
test_df.head()
```

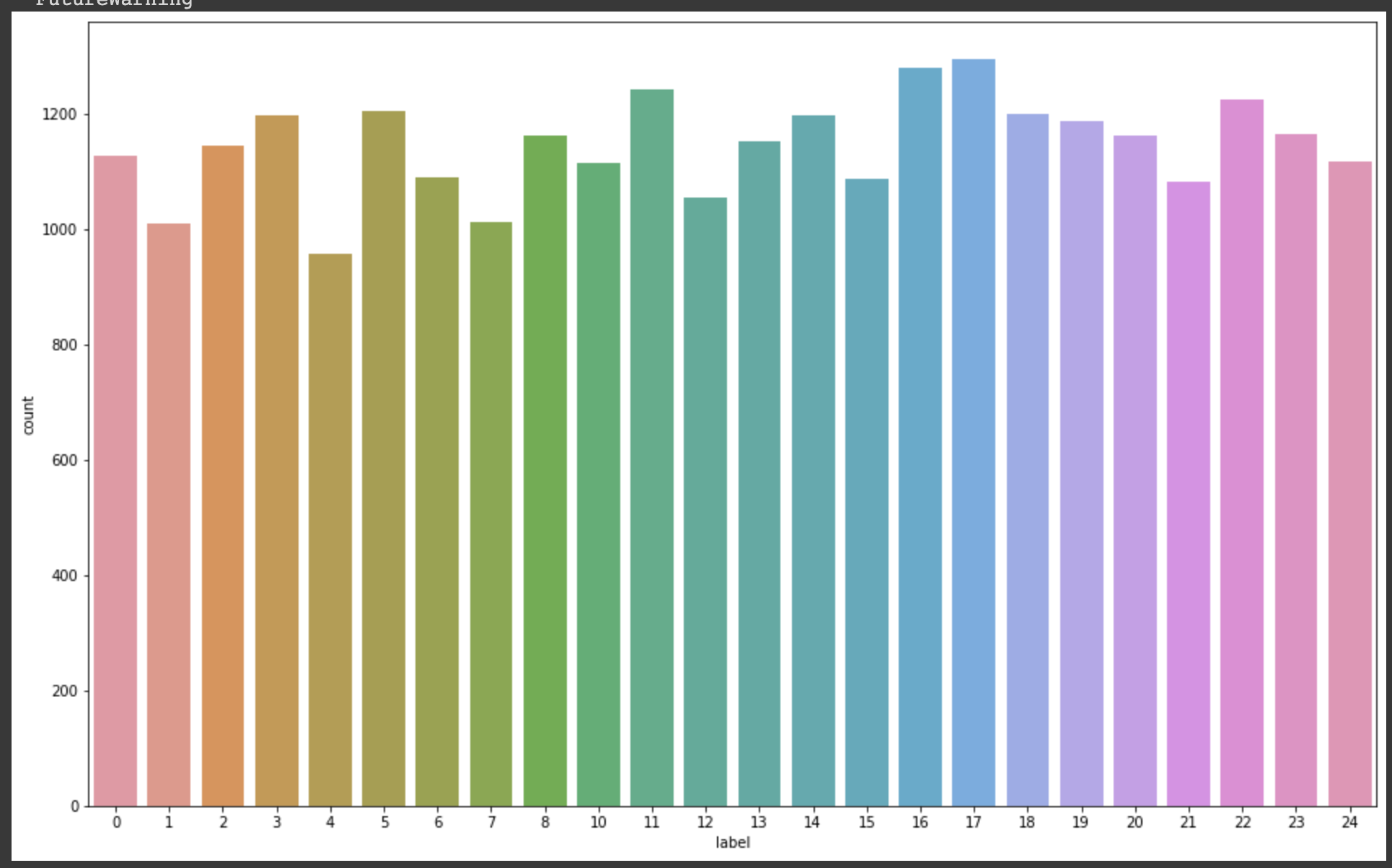

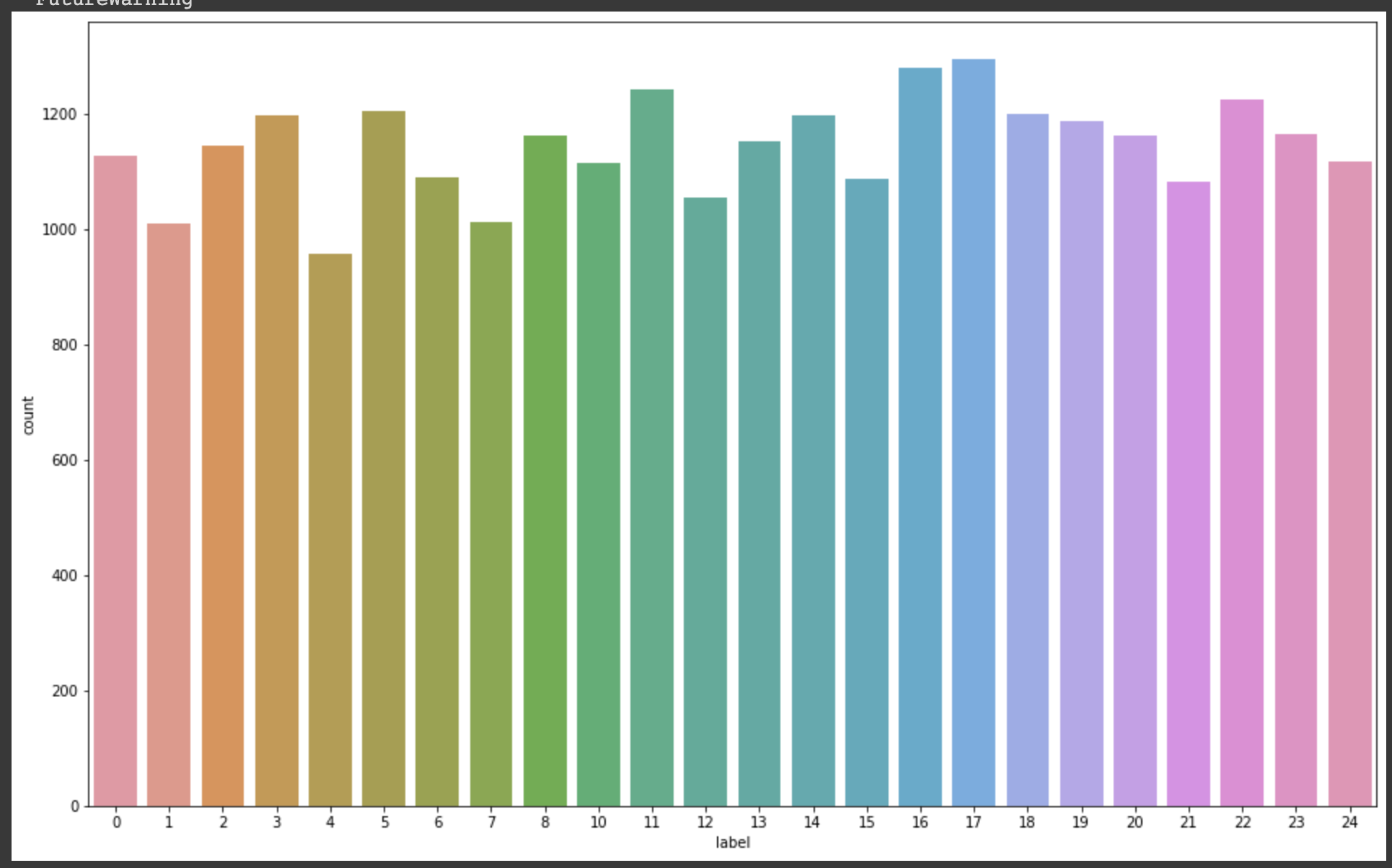

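- What we need to predict is the `label`, which indicates the alphabet letter. So let's first check how the labels are distributed.
- 9 = J and 25 = Z are excluded because signing them requires motion.
  -> So although the alphabet has 26 letters, this dataset contains only 24 classes.
- The labels are fairly evenly distributed.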

```python
plt.figure(figsize=(16, 10))
# Pass the column as `x=`; recent seaborn versions expect keyword arguments here
sns.countplot(x=train_df['label'])
plt.show()
```
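Because labels 0 to 25 map onto A to Z in order, a label can be turned back into its letter. This is handy, for example, when titling the preview image below.

The helper `label_to_letter` is hypothetical and not part of the dataset or any library. It assumes the A=0 ... Z=25 mapping described above, with 9 (J) and 25 (Z) unused.

```python
import string

# Labels 0-25 map to A-Z; 9 (J) and 25 (Z) need motion and never appear
EXCLUDED = {9, 25}

def label_to_letter(label):
    """Hypothetical helper: convert a Sign Language MNIST label to its letter."""
    label = int(label)
    if label in EXCLUDED or not 0 <= label <= 25:
        raise ValueError(f"label {label} is not used in this dataset")
    return string.ascii_uppercase[label]

used_labels = [i for i in range(26) if i not in EXCLUDED]
print(len(used_labels), label_to_letter(0), label_to_letter(24))
```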

```python
# Cast to float32 for Keras, then split into features (pixels) and target (label)
train_df = train_df.astype(np.float32)
x_train = train_df.drop(columns=['label']).values
y_train = train_df[['label']].values

test_df = test_df.astype(np.float32)
x_test = test_df.drop(columns=['label']).values
y_test = test_df[['label']].values

print(x_train.shape, y_train.shape)
print(x_test.shape, y_test.shape)
```

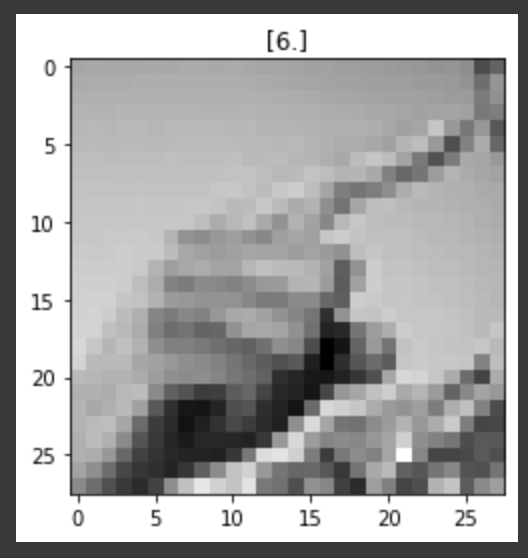

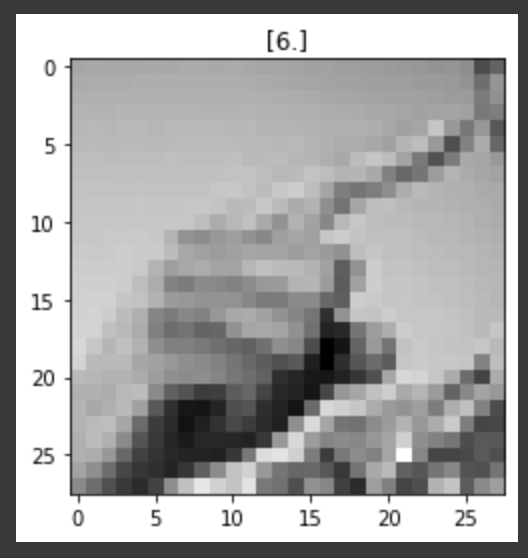

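- We previewed one sample.
- As the table at the top shows, each image's pixel values are stored in a single row. To display it as an image, we have to turn that row back into 2D. Each image has 784 pixels (784 = 28 × 28), so we reshaped it to 28 x 28.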

```python
index = 1
plt.title(str(y_train[index]))
plt.imshow(x_train[index].reshape((28, 28)), cmap='gray')
plt.show()
```
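`reshape((28, 28))` uses NumPy's default row-major (C) order: the first 28 values become the first row of pixels, the next 28 the second row, and so on.

The sketch below demonstrates this on a fake row whose values are just their own positions. It assumes, as the displayed images suggest, that the CSV stores pixels row by row.

```python
import numpy as np

# Fake flattened "image": each value equals its position in the row
row = np.arange(784)
img = row.reshape((28, 28))

# Row-major order: flat index i lands at row i // 28, column i % 28
i = 100
print(img.shape, img[i // 28, i % 28] == row[i])
```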

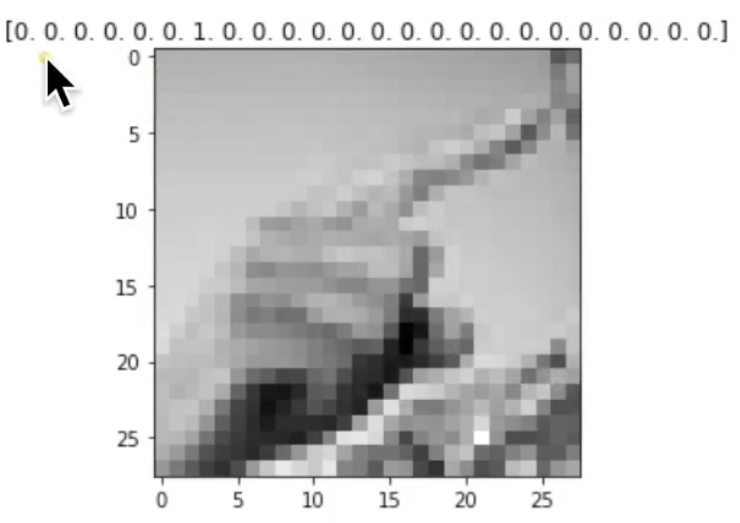

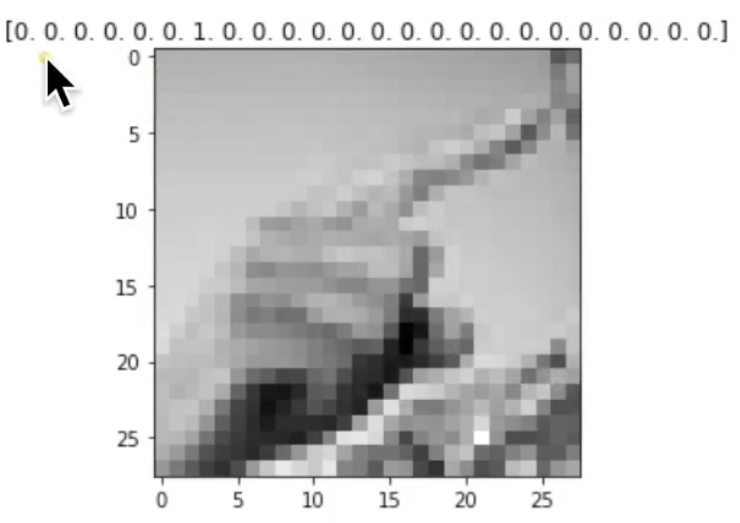

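- The countplot above shows that the labels run from 0 to 24, with 9 missing. These numbers are category IDs, not quantities, so we one-hot encoded them. Each class then gets its own column, which matches the 24-unit softmax output and the `categorical_crossentropy` loss used below.
- After one-hot encoding, each label becomes a vector of 24 values in which exactly one entry is 1 and the rest are 0.
- After this step, the label of the sample shown above changes as below.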

```python
encoder = OneHotEncoder()
# Learn the categories from the training labels, then reuse them for the test labels
y_train = encoder.fit_transform(y_train).toarray()
y_test = encoder.transform(y_test).toarray()

print(y_train.shape)
```
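Why use `transform` (not `fit_transform`) on the test labels? The sketch below mimics what one-hot encoding does, using plain NumPy rather than scikit-learn's actual implementation. The tiny label arrays are hypothetical.

- The categories are learned once from the training labels, so every split gets the same columns in the same order.
- Refitting on a split that happens to lack a class would silently produce fewer, misaligned columns.

```python
import numpy as np

# Hypothetical tiny label sets: 9 never appears, and the test split lacks 24
y_tr = np.array([[0], [8], [10], [24], [10]])
y_te = np.array([[10], [0], [8]])

# "Fit": learn the sorted categories from the TRAINING labels only
categories = np.unique(y_tr)  # [0, 8, 10, 24]

def one_hot(y, categories):
    # Compare each label with every category: one 1.0 per row, 0.0 elsewhere
    return (y.reshape(-1, 1) == categories.reshape(1, -1)).astype(np.float32)

y_tr_oh = one_hot(y_tr, categories)  # shape (5, 4)
y_te_oh = one_hot(y_te, categories)  # shape (3, 4): same columns as training

# Refitting on the test split learns only [0, 8, 10]: 3 columns, misaligned
y_te_refit = one_hot(y_te, np.unique(y_te))
print(y_tr_oh.shape, y_te_oh.shape, y_te_refit.shape)
```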

- The image data also ranges from 0 to 255, so we divided it by 255 to normalize it to the range 0 to 1.

```python
x_train = x_train / 255.
x_test = x_test / 255.
```

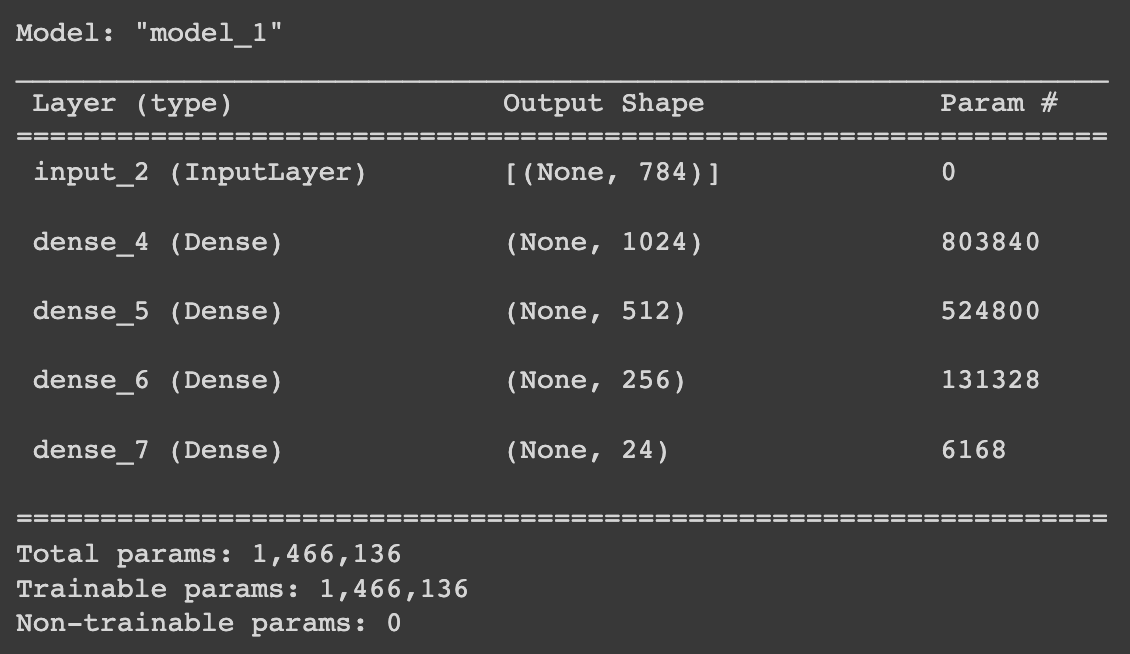

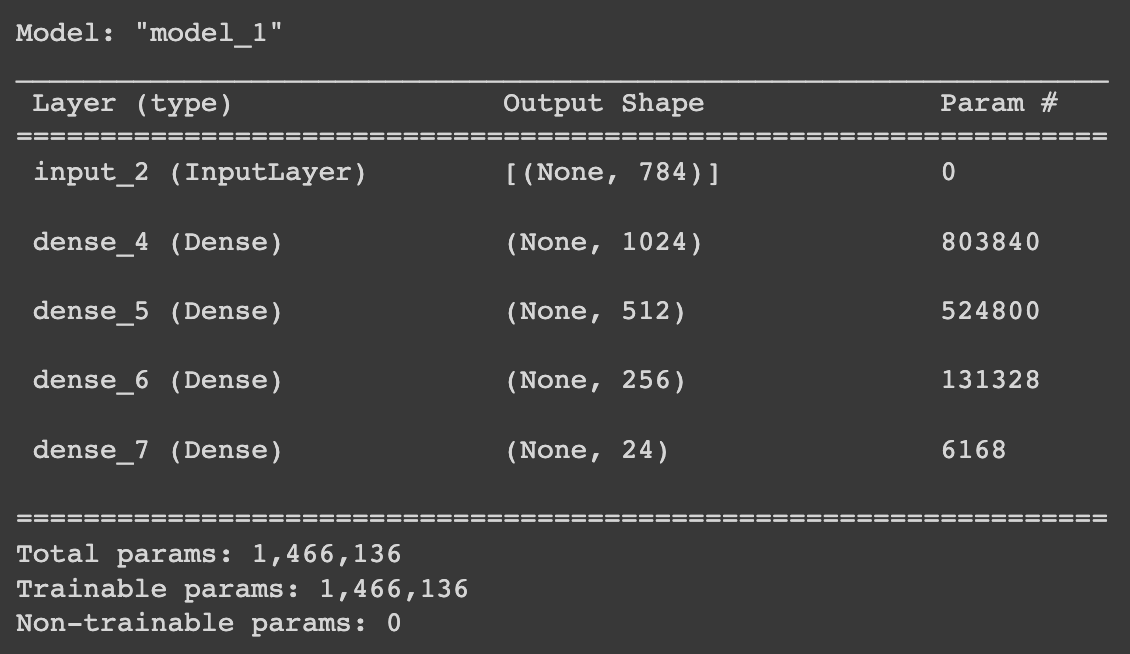

```python
# 784 pixels in -> three ReLU hidden layers -> 24-way softmax (one unit per class)
input = Input(shape=(784,))
hidden = Dense(1024, activation='relu')(input)
hidden = Dense(512, activation='relu')(hidden)
hidden = Dense(256, activation='relu')(hidden)
output = Dense(24, activation='softmax')(hidden)

model = Model(inputs=input, outputs=output)

# `learning_rate` replaces the deprecated `lr` argument
model.compile(loss='categorical_crossentropy', optimizer=Adam(learning_rate=0.001), metrics=['acc'])

model.summary()
```

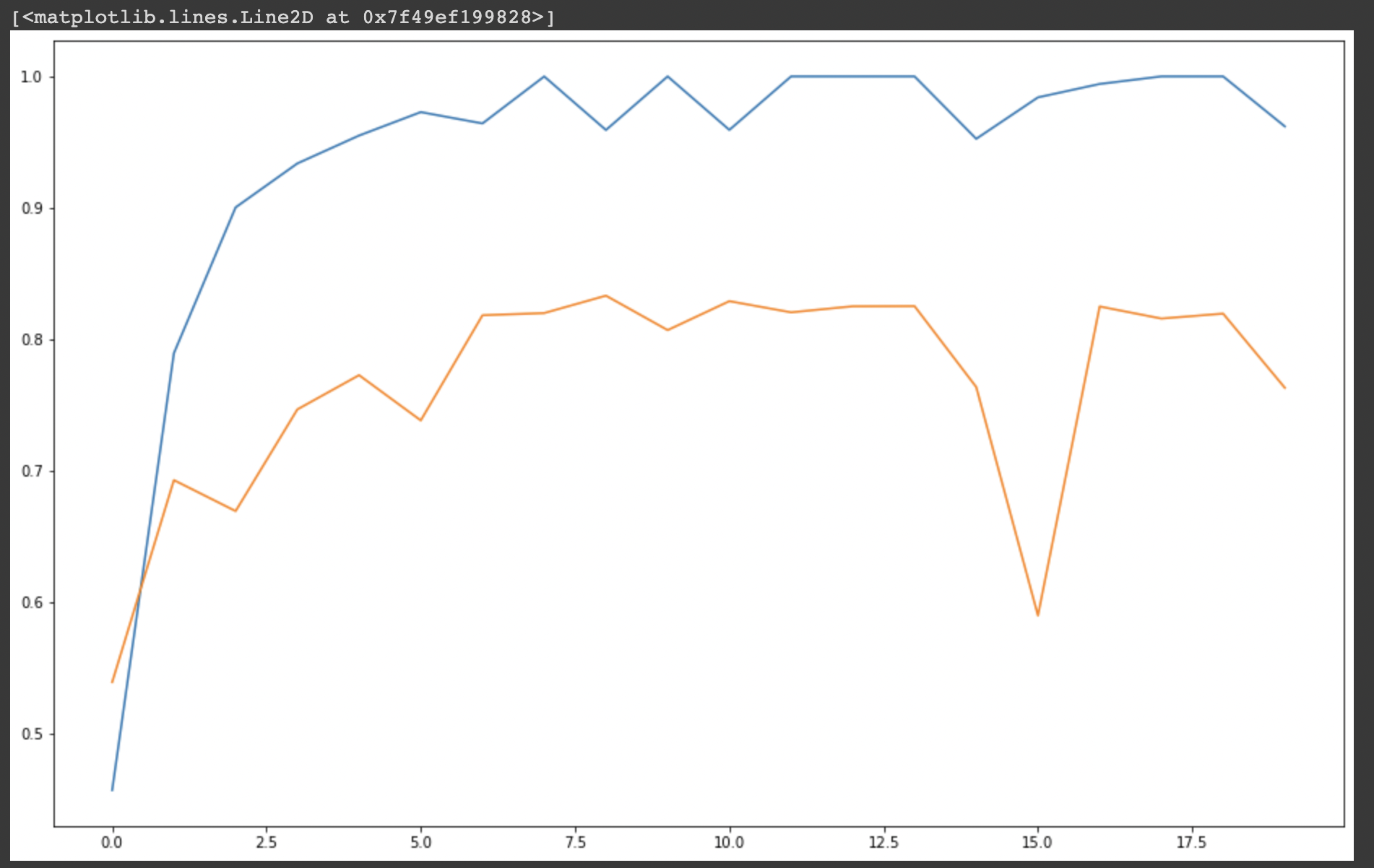

```python
history = model.fit(
    x_train,
    y_train,
    validation_data=(x_test, y_test),
    epochs=20
)
```

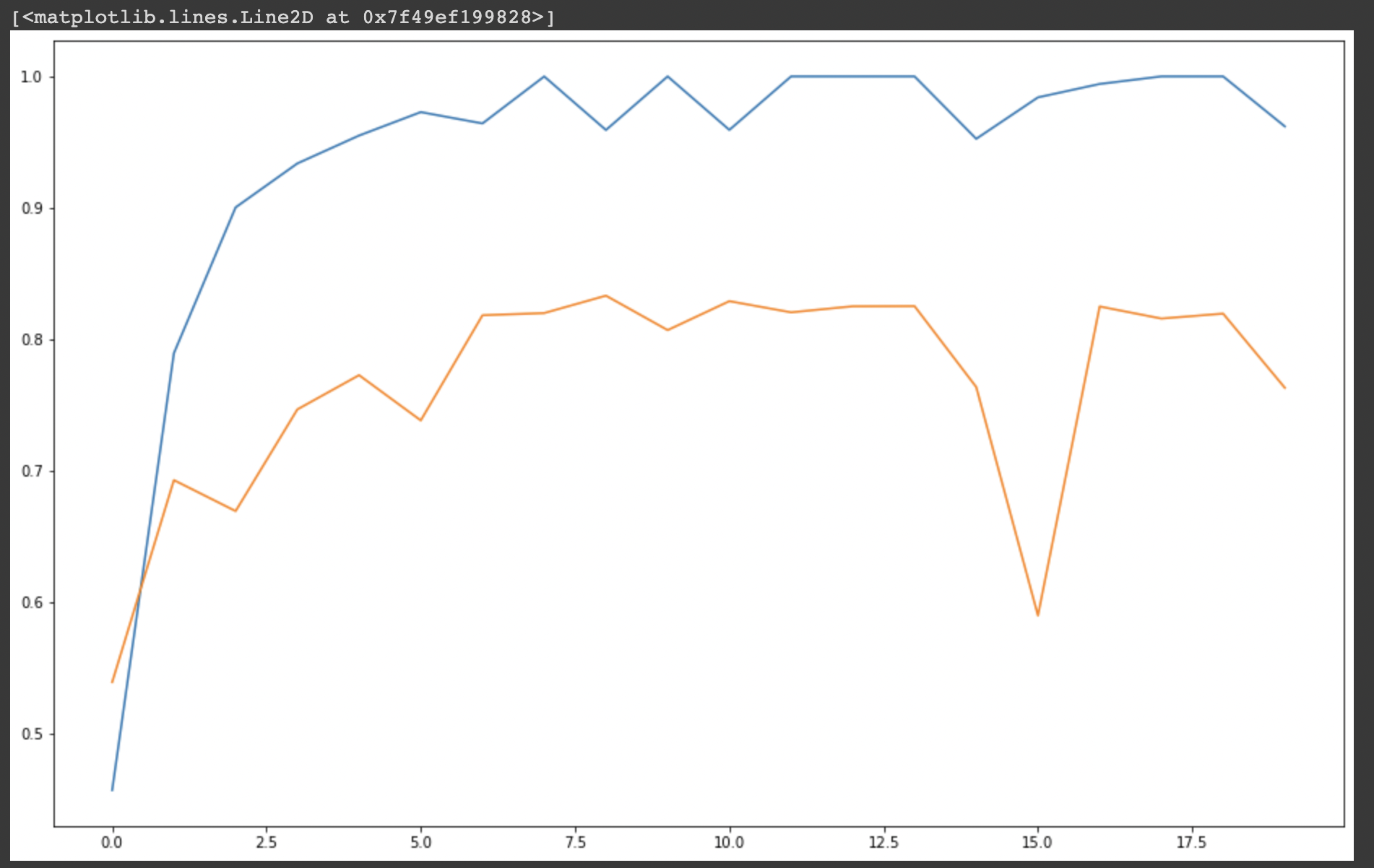

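```python
# Training vs. validation loss
plt.figure(figsize=(16, 10))
plt.plot(history.history['loss'], label='loss')
plt.plot(history.history['val_loss'], label='val_loss')
plt.legend()

# Training vs. validation accuracy
plt.figure(figsize=(16, 10))
plt.plot(history.history['acc'], label='acc')
plt.plot(history.history['val_acc'], label='val_acc')
plt.legend()
plt.show()
```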

😭 Week 3 Homework

Week 3 homework