Beautiful soup options

- using raw data

03.Web Data.ipynb

2. install

- conda install -c anaconda beautifulsoup4

- pip install beautifulsoup4

# import

from bs4 import BeautifulSoup

page = open('../data/03. zerobase.html', 'r').read()

soup = BeautifulSoup(page, 'html.parser') # (page, parser engine)

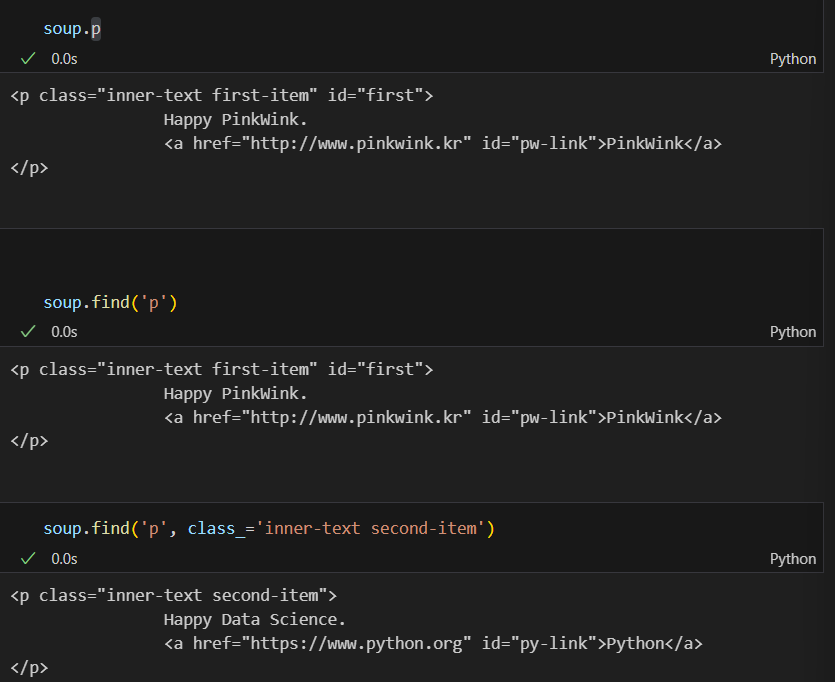

print(soup.prettify()) # prettify(): re-indents the HTML so it is easier to read

3. find() : look up a tag

- How to look up a specific tag

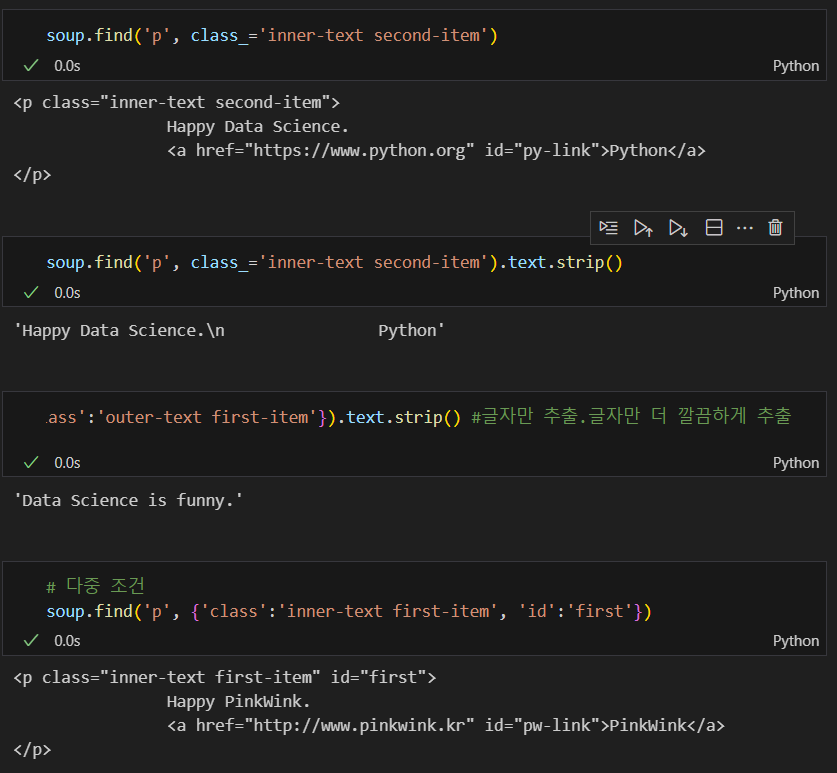

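soup.p

soup.find('p')

soup.find('p', class_='inner-text second-item')

soup.find('p', class_='inner-text second-item').text.strip()

soup.find('p', {'class':'outer-text first-item'}).text.strip()

# .text extracts only the text

# .text.strip() extracts the text more cleanly by trimming surrounding whitespace

- Multiple conditions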

soup.find('p', {'class':'inner-text first-item', 'id':'first'})

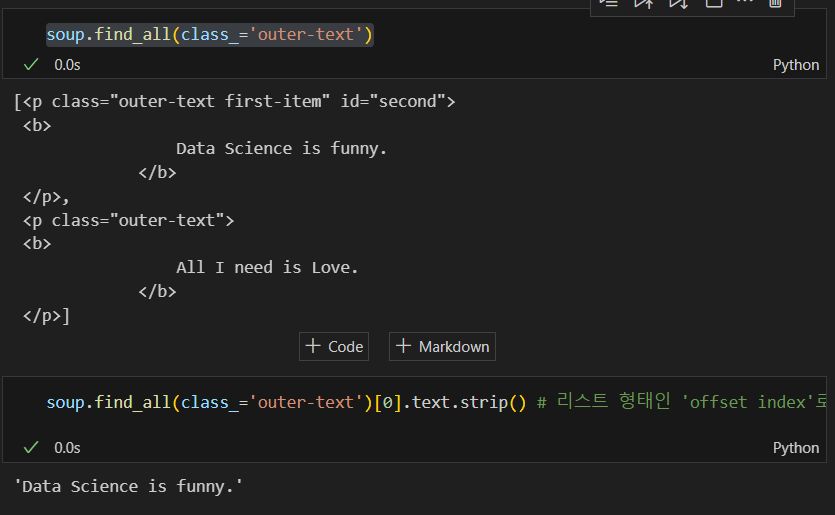
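The lookups above can be tried without the tutorial file. The sketch below uses a small inline HTML string; the markup and its text are assumptions that only mirror the class names used above, not the real contents of `03. zerobase.html`. It also shows that `find()` returns `None` when nothing matches.

```python
from bs4 import BeautifulSoup

# Hypothetical HTML standing in for the tutorial file (assumed markup).
html = """
<div>
  <p class="inner-text first-item" id="first">Happy Zerobase.</p>
  <p class="inner-text second-item">Happy Data Science.</p>
  <p class="outer-text first-item" id="second"><b>Data Science is funny.</b></p>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# find() returns the first matching tag only.
first_p = soup.find("p")

# class_ matches the class attribute; a dict can combine several attributes.
by_class = soup.find("p", class_="inner-text second-item")
by_attrs = soup.find("p", {"class": "inner-text first-item", "id": "first"})

# When no tag satisfies every condition, find() returns None.
missing = soup.find("p", {"class": "inner-text first-item", "id": "nope"})

print(first_p.text.strip())
print(by_class.text.strip())
print(by_attrs["id"])
print(missing)
```

Because a failed lookup gives `None`, calling `.text` on the result directly would raise an `AttributeError`, so checking for `None` first is a reasonable habit on real pages.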

4. find_all() : return multiple tags

- Returns every matching tag

- Returns them as a list

soup.find_all(class_='outer-text')

soup.find_all(class_='outer-text')[0].text.strip()

# The result is a list, so select an element by its offset index before reading .text

▼

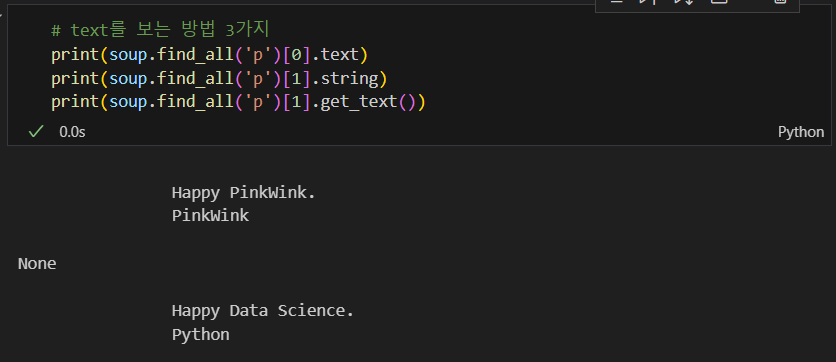

# Three ways to view the text

print(soup.find_all('p')[0].text)

print(soup.find_all('p')[1].string)

print(soup.find_all('p')[1].get_text())

▼

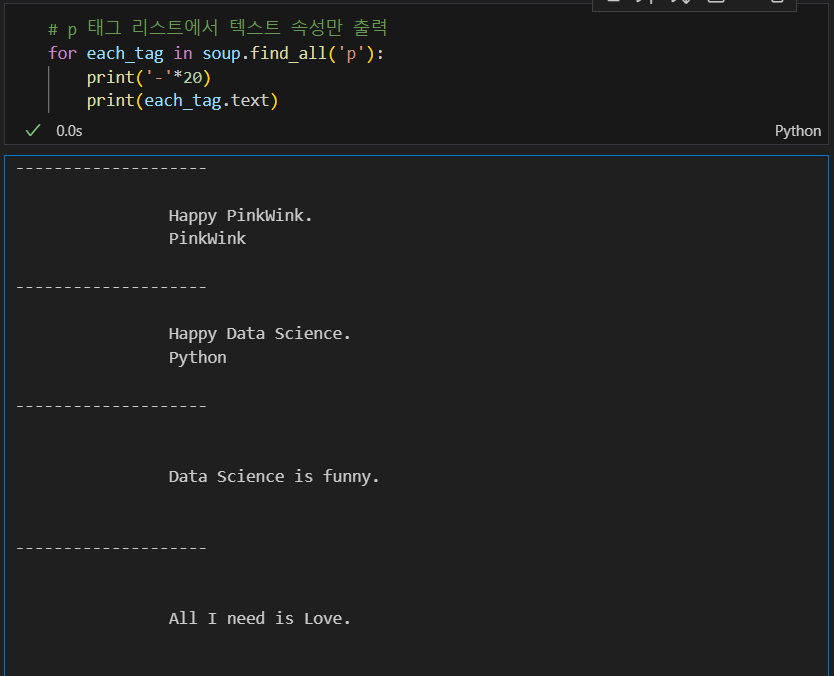
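The three accessors do not always agree. As a minimal sketch on assumed inline HTML (not the tutorial file): `.string` only works when a tag has a single child, while `.text` and `.get_text()` concatenate all nested text.

```python
from bs4 import BeautifulSoup

# Hypothetical markup: one p with plain text, one p with a nested tag.
html = "<p>plain text</p><p>mixed <b>bold</b> text</p>"
soup = BeautifulSoup(html, "html.parser")
plain, mixed = soup.find_all("p")

# With a single text child, all three accessors return the same text.
plain_string = plain.string
plain_text = plain.text

# With several children, .string is None, but .text still joins everything.
mixed_string = mixed.string
mixed_text = mixed.text

# get_text() additionally accepts a separator and a strip flag.
mixed_joined = mixed.get_text("|", strip=True)

print(plain_string, plain_text)
print(mixed_string, mixed_text)
print(mixed_joined)
```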

# Print only the text of each tag in the list of p tags

for each_tag in soup.find_all('p'):

    print('-'*20)

    print(each_tag.text)

▼

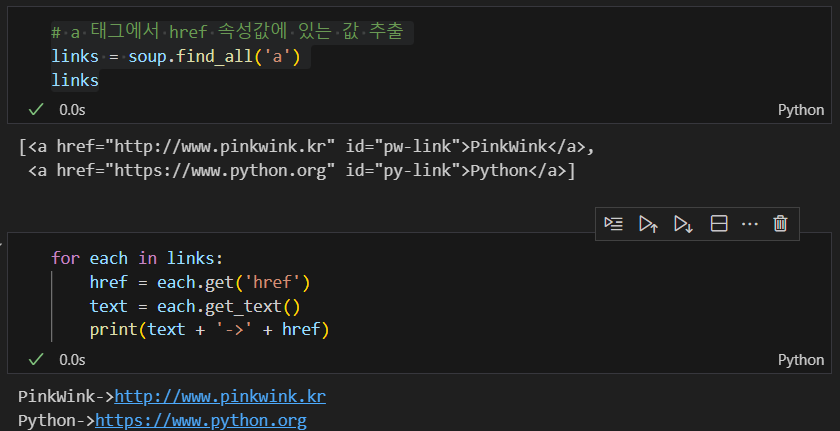

# Extract the value of the href attribute from each a tag

links = soup.find_all('a')

links

for each in links:

    href = each.get('href')

    text = each.get_text()

    print(text + '->' + href)

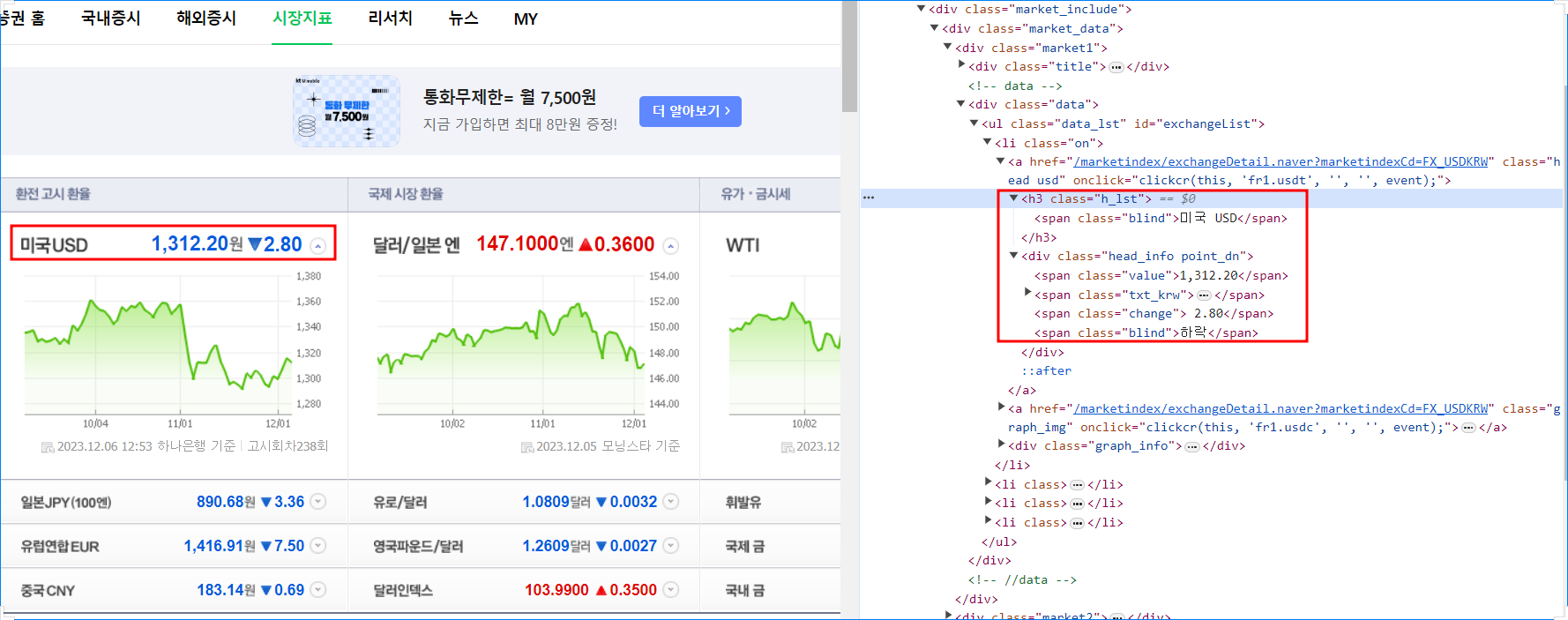

Example 1. Naver Finance

# import

from urllib.request import urlopen

# imports the urlopen function from the request module of urllib

from bs4 import BeautifulSoup

## Check the HTTP status code: 200 means OK

url = 'https://finance.naver.com/marketindex/'

response = urlopen(url)

response.status

url = 'https://finance.naver.com/marketindex/'

page = urlopen(url)

# Common variable names: page, response, res

soup = BeautifulSoup(page, 'html.parser') # pass in the parser engine

print(soup.prettify())

soup.find_all('span', 'value'), len(soup.find_all('span', 'value'))

# Naver page source: <span class="value">1,312.20</span>

# How to extract only the value

soup.find_all('span', {'class':'value'})[0].text

soup.find_all('span', {'class':'value'})[0].string

soup.find_all('span', {'class':'value'})[0].get_text()

1. Install requests

!pip install requests

!pip list | findstr xl

import requests

# from urllib.request.Request

from bs4 import BeautifulSoup

import requests

url = 'https://finance.naver.com/marketindex/'

response = requests.get(url)

soup = BeautifulSoup(response.text, 'html.parser')

print(soup.prettify())

import requests

url = 'https://finance.naver.com/marketindex/'

response = requests.get(url)

# response.text

soup = BeautifulSoup(response.text, 'html.parser')

print(soup.prettify())

2. Selecting text

- find, select_one : select a single element

- find_all, select : select multiple elements

exchangelist = soup.select('#exchangeList > li')

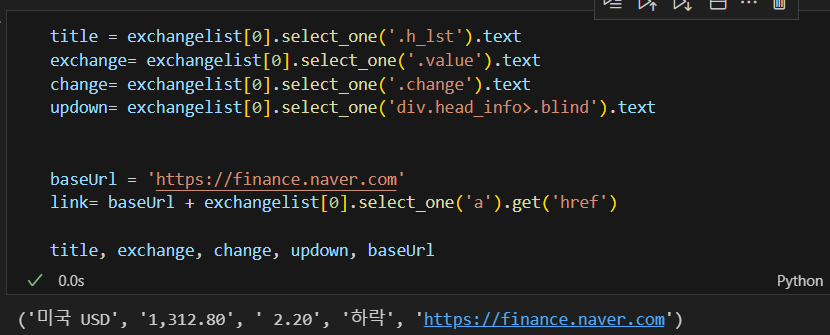

len(exchangelist), exchangelist

title = exchangelist[0].select_one('.h_lst').text

exchange= exchangelist[0].select_one('.value').text

change= exchangelist[0].select_one('.change').text

updown= exchangelist[0].select_one('div.head_info>.blind').text

baseUrl = 'https://finance.naver.com'

link= baseUrl + exchangelist[0].select_one('a').get('href')

title, exchange, change, updown, baseUrl
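The CSS-selector calls above can be checked offline. This is a sketch against a small, made-up fragment; the markup, values, and `href` paths are assumptions that only imitate the shape of the Naver page, and the real page may differ.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment shaped like the exchange list (assumed markup).
html = """
<ul id="exchangeList">
  <li><a href="/marketindex/usd"><h3 class="h_lst">USD</h3></a>
      <div class="head_info"><span class="value">1,312.20</span>
      <span class="change">3.50</span><span class="blind">up</span></div></li>
  <li><a href="/marketindex/jpy"><h3 class="h_lst">JPY</h3></a>
      <div class="head_info"><span class="value">950.10</span>
      <span class="change">1.20</span><span class="blind">down</span></div></li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

# select() returns a list; "#id > li" keeps only direct li children.
items = soup.select("#exchangeList > li")

# select_one() returns the first match, or None when nothing matches.
first = items[0]
title = first.select_one(".h_lst").text
value = first.select_one(".value").text
updown = first.select_one("div.head_info > .blind").text
missing = first.select_one(".no-such-class")

base_url = "https://finance.naver.com"
link = base_url + first.select_one("a").get("href")

print(len(items), title, value, updown, link, missing)
```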

- Collect all 4 items

import pandas as pd

exchange_datas = []

baseUrl = 'https://finance.naver.com'

for item in exchangelist:

    data = {

        'title' : item.select_one('.h_lst').text,

        'exchange' : item.select_one('.value').text,

        'change' : item.select_one('.change').text,

        'updown' : item.select_one('div.head_info>.blind').text,

        'link' : baseUrl + item.select_one('a').get('href')

    }

    exchange_datas.append(data)

df = pd.DataFrame(exchange_datas) # turns the list of dicts into a DataFrame, which is easier to work with

df

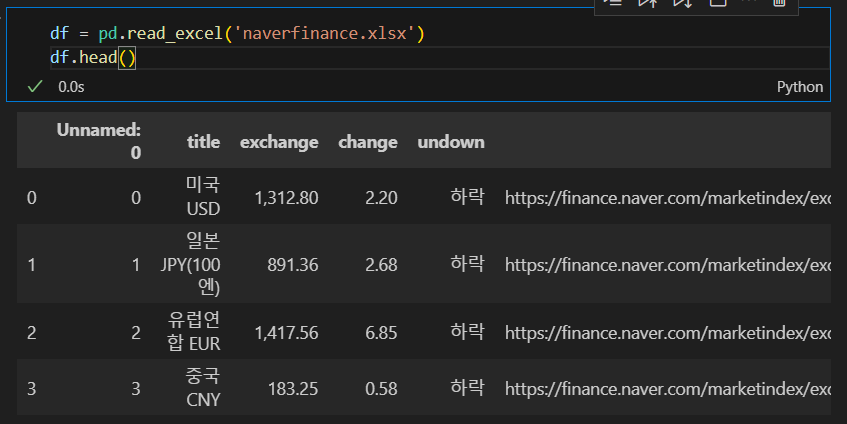

df.to_excel("naverfinance.xlsx")

df = pd.read_excel('naverfinance.xlsx')

df.head()

Example 2. Fetching information from Korean Wikipedia

1. Changing the URL format

import urllib

from urllib.request import urlopen, Request

html = 'https://ko.wikipedia.org/wiki/{search_words}'

# The Korean title appears percent-encoded: https://ko.wikipedia.org/wiki/%EC%97%AC%EB%AA%85%EC%9D%98_%EB%88%88%EB%8F%99%EC%9E%90

# Pasting that URL into an online URL decoder gives the readable 'https://ko.wikipedia.org/wiki/여명의_눈동자'

req = Request(html.format(search_words = urllib.parse.quote('여명의_눈동자')))

# format : fills the {search_words} placeholder using string formatting

# urllib.parse.quote : percent-encodes text so it can be used in a URL

response = urlopen(req)

response.status

soup = BeautifulSoup(response, 'html.parser')

print(soup.prettify())

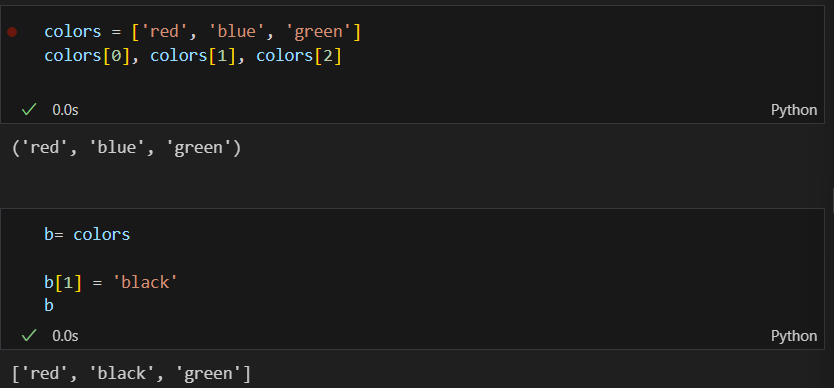

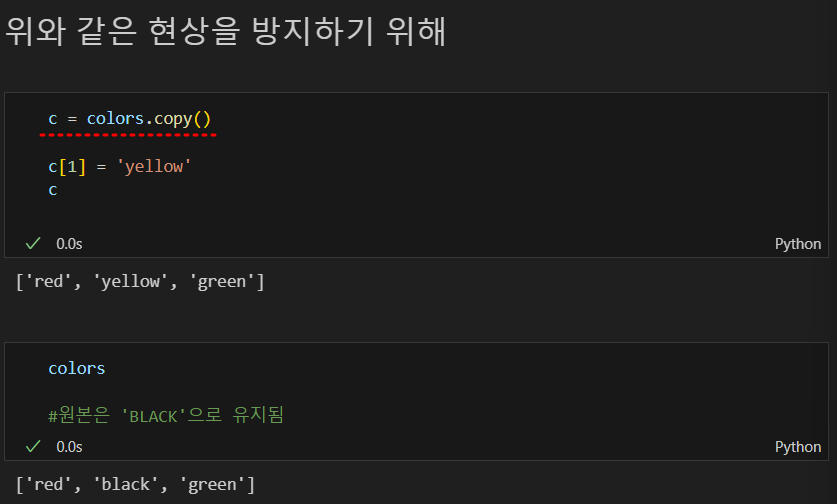

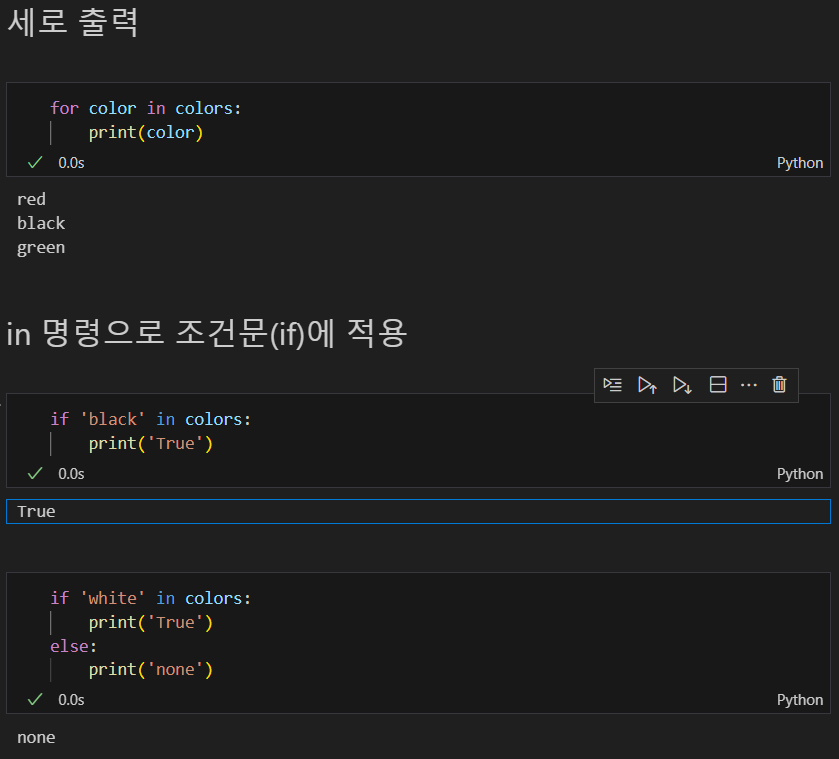

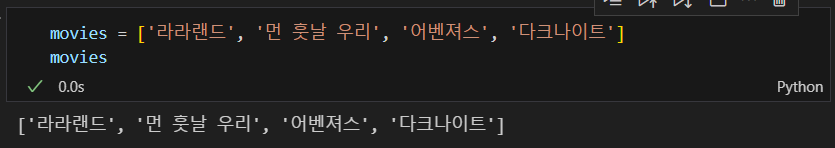

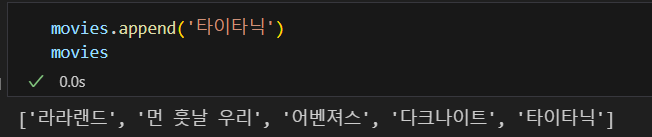

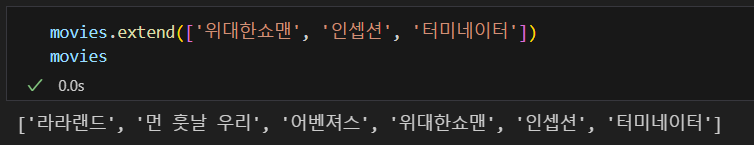

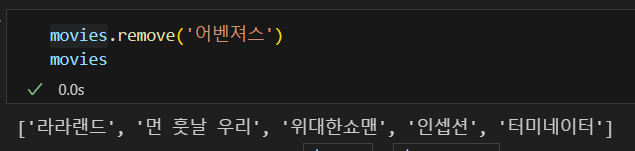

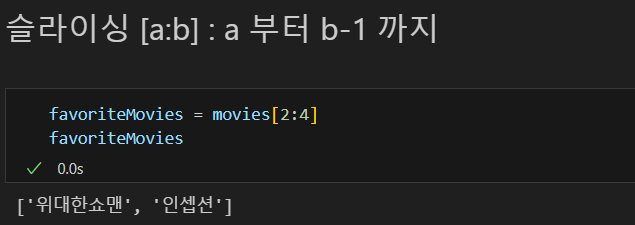

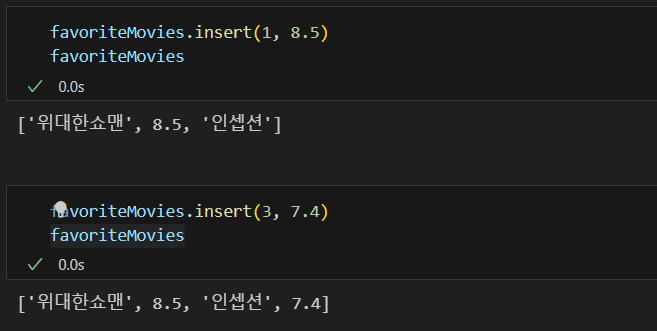

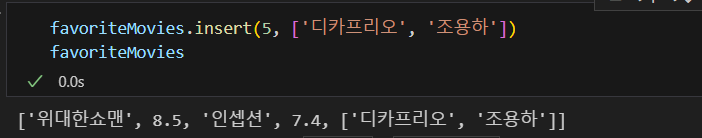
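The URL encoding step from this example can be checked without any network access. The sketch below reuses the template and title from above; `unquote` is the standard-library inverse of `quote` and replaces the online decoder mentioned in the comments.

```python
from urllib.parse import quote, unquote

url_template = "https://ko.wikipedia.org/wiki/{search_words}"

# quote() percent-encodes the UTF-8 bytes of each non-ASCII character;
# characters such as "_" are left untouched.
encoded = quote("여명의_눈동자")
url = url_template.format(search_words=encoded)

# unquote() reverses the encoding and restores the readable title.
decoded = unquote(url)

print(url)
print(decoded)
```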

2.python list 데이터형

- python list 예제

- append : 뒤에 추가

- pop : 뒤에서 부터 삭제

- extend : 뒤에 자료 추가

- remove : 자료삭제

- [a:b] : a ~ b-1 까지

- insert(a:b) : a번째 b삽입

- list에 list 삽입

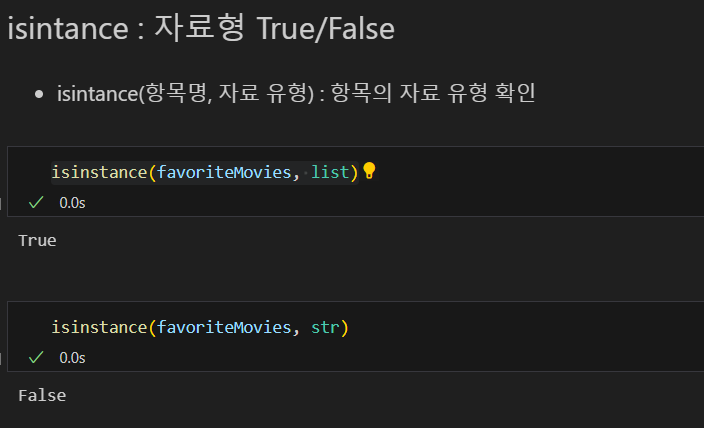

- isintance : 자료형 T/F
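The list operations listed above can be run in sequence as follows. The values are arbitrary placeholders chosen for illustration.

```python
colors = ["red", "blue", "green"]

colors.append("black")               # add one item to the end
last = colors.pop()                  # remove and return the last item
colors.extend(["black", "purple"])   # add every item of another list
colors.remove("black")               # delete the first matching value
middle = colors[1:3]                 # slice: index 1 up to, not including, 3
colors.insert(1, "white")            # insert "white" at index 1
colors.insert(0, ["a", "b"])         # a list inserted as ONE nested element

is_list = isinstance(colors, list)          # type check on the whole list
first_is_list = isinstance(colors[0], list) # the nested element is a list too

print(last, middle)
print(colors)
print(is_list, first_is_list)
```

Note the difference between `extend`, which adds items one by one, and `insert` or `append` with a list argument, which nests the whole list as a single element.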

Example 3. Collecting and exploring restaurant data

- raw data

  - https://www.chicagomag.com/chicago-magazine/november-2012/best-sandwiches-chicago/

  - Search query: chicago magazine the 50 best sandwiches

  - Goal: collect the restaurant name, menu item, price, and address

- Main page

from urllib.request import Request, urlopen

from bs4 import BeautifulSoup

from fake_useragent import UserAgent

url_base = 'https://www.chicagomag.com/'

url_sub = 'chicago-magazine/november-2012/best-sandwiches-chicago/'

url = url_base + url_sub

ua = UserAgent()

ua.ie # generates a random user-agent string, so each request can look like a different environment

req = Request(url, headers={'User-Agent':ua.ie})

html = urlopen(req)

soup = BeautifulSoup(html, 'html.parser')

print(soup.prettify())
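The request above sends a `User-Agent` header, which matters because some sites reject requests that do not look like a browser. If `fake_useragent` is unavailable, a fixed header value is a possible fallback. The sketch below only builds the request and defines a fetch helper; the header value `Mozilla/5.0` and the helper name `fetch_html` are assumptions for illustration, and nothing is downloaded until the helper is called.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen

url = ("https://www.chicagomag.com/"
       "chicago-magazine/november-2012/best-sandwiches-chicago/")

# A fixed browser-like value (assumed); a site may still reject it.
req = Request(url, headers={"User-Agent": "Mozilla/5.0"})

# Request stores header names capitalized, so the key reads "User-agent".
user_agent = req.get_header("User-agent")


def fetch_html(request):
    """Hypothetical helper: return the page bytes, or None on an HTTP error."""
    try:
        with urlopen(request) as response:
            return response.read()
    except HTTPError as err:
        # e.g. 403 when the server refuses the request
        print("request failed with status", err.code)
        return None


print(user_agent)
print(req.full_url)
# html = fetch_html(req)  # uncomment to perform the actual request
```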

# ▼ 403 error 발생 시

# req = Request(url, headers=('User-Agent:'Chrome'))

# response = urlopen(req)

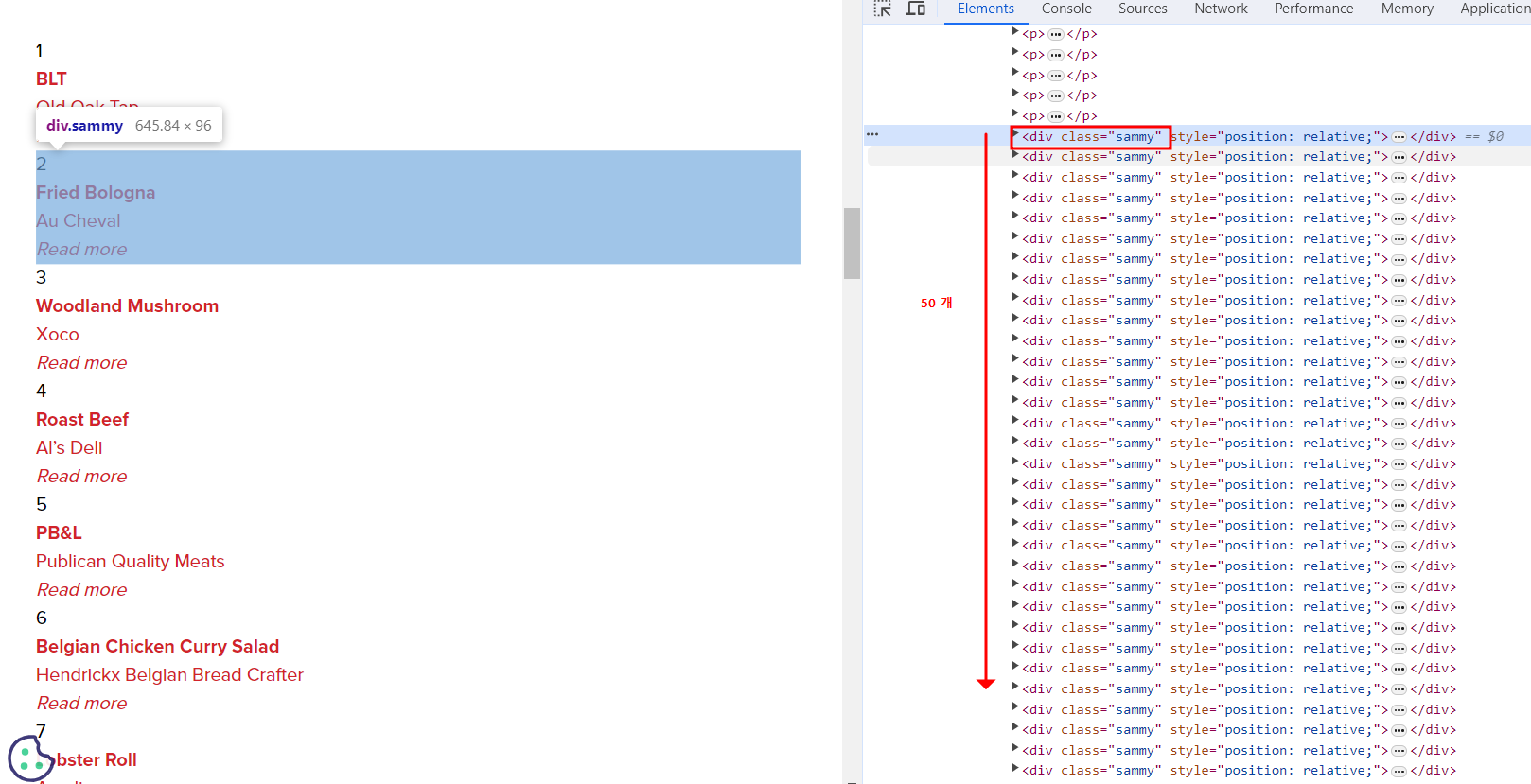

# response.statussoup.find_all('div', 'sammy'), len(soup.find_all('div', 'sammy'))

soup.select('.sammy'), len(soup.select('.sammy'))

- Tag: div

- Class: sammy

- Selecting on these returns all 50 entries

- [Sample code] for pulling out the full data

tmp_one = soup.find_all('div','sammy')[0]

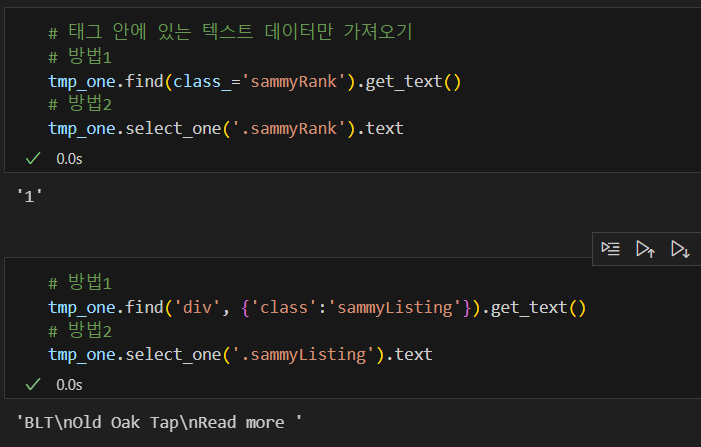

tmp_one

- Getting only the text inside a tag

# Method 1

tmp_one.find(class_='sammyRank').get_text()

# Method 2

tmp_one.select_one('.sammyRank').text

# Method 1

tmp_one.find('div', {'class':'sammyListing'}).get_text()

# Method 2

tmp_one.select_one('.sammyListing').text

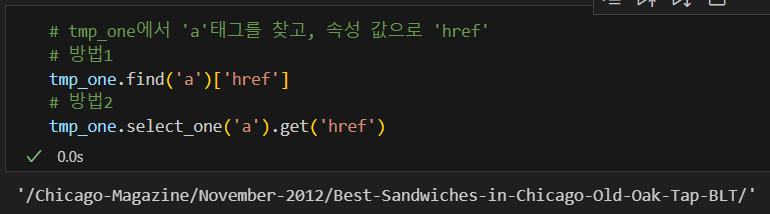

- Find the 'a' tag in tmp_one and read its 'href' attribute

# Method 1

tmp_one.find('a')['href']

# Method 2

tmp_one.select_one('a').get('href')

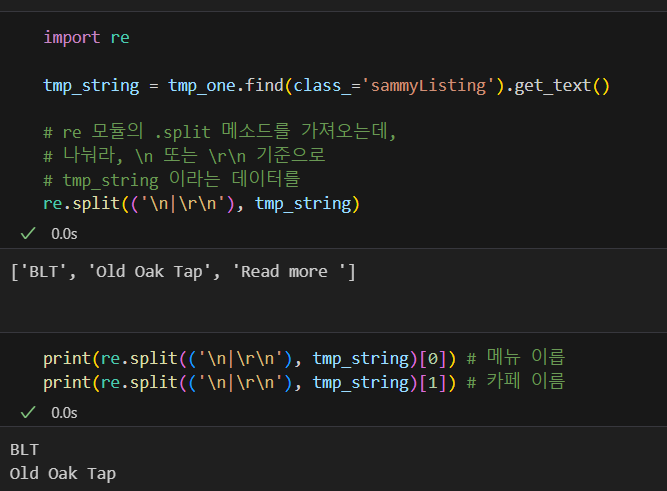

- Getting the menu and cafe names

import re

tmp_string = tmp_one.find(class_='sammyListing').get_text()

# Use the split function of the re module

# to split on \n or \r\n

# the data held in tmp_string

re.split(('\n|\r\n'), tmp_string)

print(re.split(('\n|\r\n'), tmp_string)[0]) # menu name

print(re.split(('\n|\r\n'), tmp_string)[1]) # cafe name
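The split above can be tried on a stand-in string. The sample text below is an assumption about the general shape of a listing (menu name, cafe name, trailing link text), not the actual page content. For comparison, the built-in `str.splitlines()` handles both line-ending styles without a regular expression.

```python
import re

# Hypothetical listing text mixing both line-ending styles (assumed data).
tmp_string = "BLT\r\nOld Oak Tap\nRead more "

# The pattern matches either a bare "\n" or the two-character "\r\n".
parts = re.split(r"\n|\r\n", tmp_string)
menu, cafe = parts[0], parts[1]

# splitlines() recognizes "\n" and "\r\n" as well.
same_parts = tmp_string.splitlines()

print(parts)
print(menu, "/", cafe)
```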

6. Collecting all the data (50 entries)

from urllib.parse import urljoin

url_base = 'https://www.chicagomag.com/'

# Create empty lists to hold the values we need

# Each list becomes one column

# They will be combined into a DataFrame later

rank =[]

main_menu =[]

cafe_name =[]

url_add =[]

list_soup = soup.find_all('div', 'sammy')

# 동일한 코드 : soup.select('.sammy')

# for문

for item in list_soup:

rank.append(item.find(class_='sammyRank').get_text())

tmp_string = item.find(class_='sammyListing').get_text()

main_menu.append(re.split(('\n|\r\n'), tmp_string)[0])

cafe_name.append(re.split(('\n|\r\n'), tmp_string)[1])

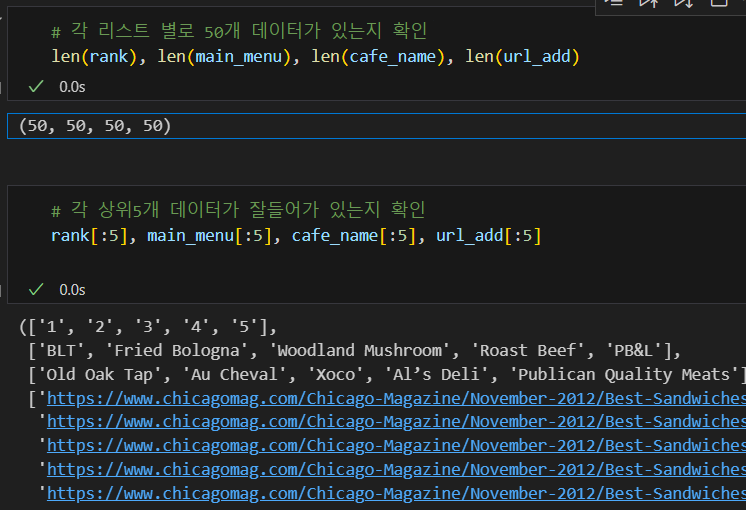

url_add.append(urljoin(url_base, item.find('a')['href']))- 각 리스트 별로 50개 데이터가 있는지 확인

len(rank), len(main_menu), len(cafe_name), len(url_add)- 각 상위5개 데이터가 잘들어가 있는지 확인

rank[:5], main_menu[:5], cafe_name[:5], url_add[:5]

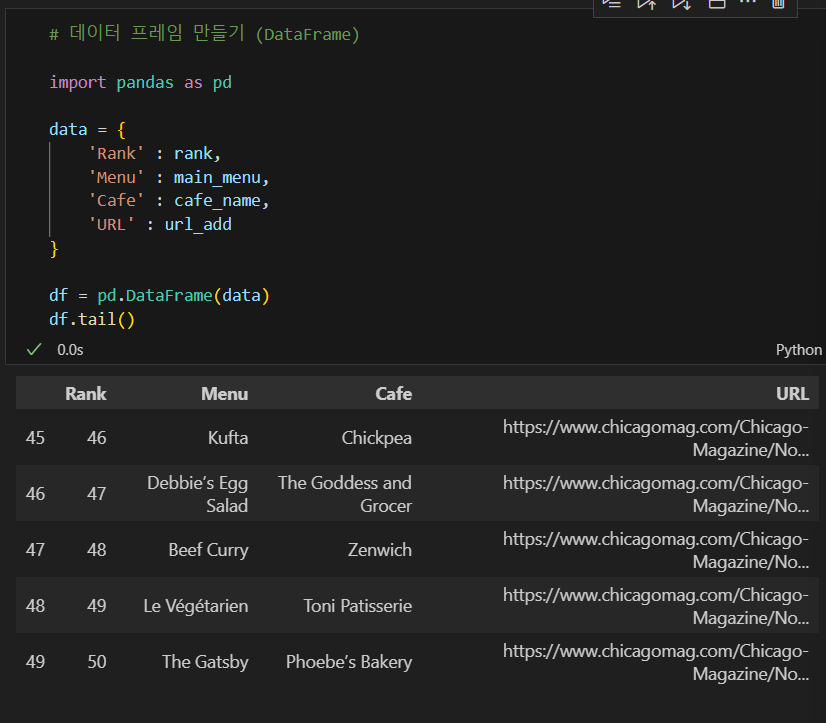
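`urljoin` is used in the loop above because a page may mix relative and absolute links. As a short sketch with made-up paths (the `example-sandwich` path is hypothetical): a relative `href` is resolved against the base, while an already absolute `href` is returned unchanged.

```python
from urllib.parse import urljoin

url_base = "https://www.chicagomag.com/"

# Hypothetical href values, one relative and one absolute.
relative_href = "/chicago-magazine/november-2012/example-sandwich/"
absolute_href = ("https://www.chicagomag.com/"
                 "chicago-magazine/november-2012/example-sandwich/")

from_relative = urljoin(url_base, relative_href)
from_absolute = urljoin(url_base, absolute_href)

print(from_relative)
print(from_absolute)
```

Plain string concatenation (`url_base + href`) would duplicate the domain for the absolute case, which is why `urljoin` is the safer choice here.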

7. Building the DataFrame

import pandas as pd

data = {

    'Rank' : rank,

    'Menu' : main_menu,

    'Cafe' : cafe_name,

    'URL' : url_add

}

df = pd.DataFrame(data)

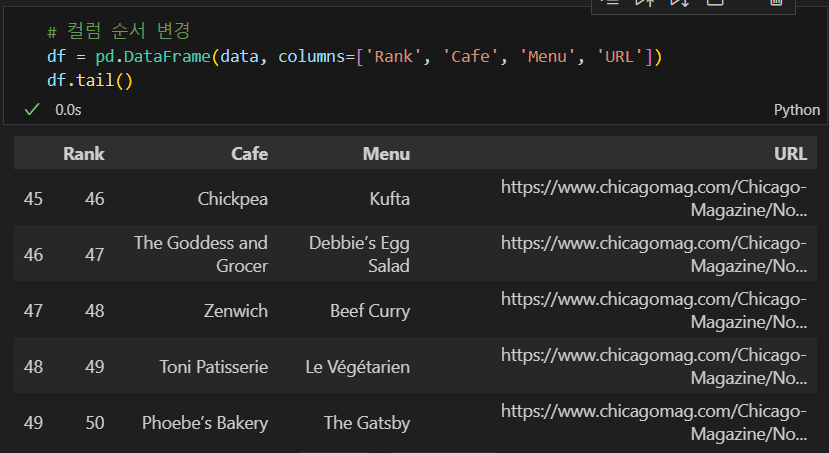

df.tail()

8. Reordering the columns

df = pd.DataFrame(data, columns=['Rank', 'Cafe', 'Menu', 'URL'])

df.tail()

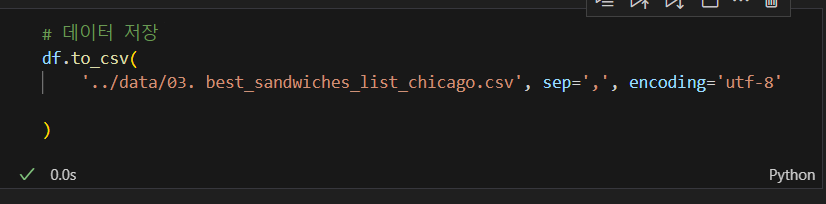

- Save the data

df.to_csv(

    '../data/03. best_sandwiches_list_chicago.csv', sep=',', encoding='utf-8'

)

Example 4. Restaurant data analysis: detail pages

# requirements

import pandas as pd

from urllib.request import urlopen, Request

from fake_useragent import UserAgent

from bs4 import BeautifulSoupdf = pd.read_csv('../data/03. best_sandwiches_list_chicago.csv', index_col=0)

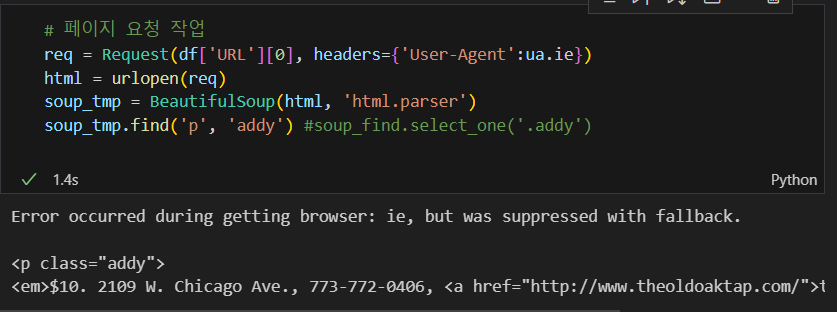

df.tail()- 페이지 요청 작업

req = Request(df['URL'][0], headers={'User-Agent':ua.ie})

html = urlopen(req)

soup_tmp = BeautifulSoup(html, 'html.parser')

soup_tmp.find('p', 'addy') #soup_find.select_one('.addy')

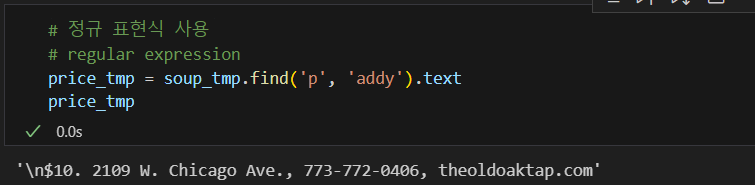

- 정규 표현식 사용

# 정규 표현식 사용

# regular expression

price_tmp = soup_tmp.find('p', 'addy').text

price_tmp

- 주소 추출

tmp = re.search('\$\d+\.(\d+)?', price_tmp).group()

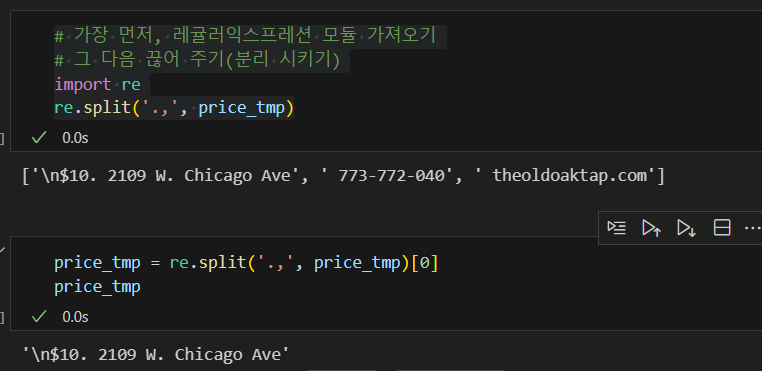

price_tmp[len(tmp) + 2:]4. regular expression; re

# 가장 먼저, 레귤러익스프레션 모듈 가져오기

# 그 다음 끊어 주기(분리 시키기)

import re

re.split('.,', price_tmp)price_tmp = re.split('.,', price_tmp)[0]

price_tmp
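The price and address steps above can be combined and tested offline. In the sketch below, the function name `parse_price_address` and the sample strings are assumptions for illustration; the samples imitate text that begins with a newline, followed by a price, an address, and further comma-separated details. Unlike the fixed `len(tmp) + 2` offset, it slices from the end of the regex match and also copes with text that has no price.

```python
import re


def parse_price_address(text):
    """Hypothetical helper: return (price, address) from an 'addy' paragraph."""
    # Keep everything before the first "<any character>," pair.
    head = re.split(r".,", text)[0]
    # A dollar sign, digits, a dot, then optional cents.
    match = re.search(r"\$\d+\.(\d+)?", head)
    if match is None:
        # No price found: avoid calling .group() on None.
        return None, head.strip()
    return match.group(), head[match.end():].strip()


# Assumed sample strings, not scraped data.
sample_a = "\n$10. 2109 W. Chicago Ave., 773-772-0406, theoldoaktap.com"
sample_b = "\n$9.50 800 W. Randolph St., 312-929-4580"
sample_c = "Multiple locations"

result_a = parse_price_address(sample_a)
result_b = parse_price_address(sample_b)
result_c = parse_price_address(sample_c)

print(result_a)
print(result_b)
print(result_c)
```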

- Final output

- Test version: pull only the first 3 rows

import re

price = []

address = []

for n in df.index[:3]:

    req = Request(df['URL'][n], headers={'User-Agent':ua.ie})

    html = urlopen(req).read()

    soup_tmp = BeautifulSoup(html, 'html.parser')

    gettings = soup_tmp.find('p', 'addy').get_text()

    price_tmp = re.split('.,', gettings)[0]

    tmp = re.search(r'\$\d+\.(\d+)?', price_tmp).group()

    price.append(tmp)

    address.append(price_tmp[len(tmp) + 2:])

    print(n)

price, address

- Using iterrows()

- Test version: pull only the first 3 rows

price = []

address = []

for idx, row in df[:3].iterrows():

    req = Request(row['URL'], headers={'User-Agent':ua.ie})

    html = urlopen(req).read()

    soup_tmp = BeautifulSoup(html, 'html.parser')

    gettings = soup_tmp.find('p', 'addy').get_text()

    price_tmp = re.split('.,', gettings)[0]

    tmp = re.search(r'\$\d+\.(\d+)?', price_tmp).group()

    price.append(tmp)

    address.append(price_tmp[len(tmp) + 2:])

    print(idx)

price, address

- Using the tqdm module

- 최종 출력

from tqdm import tqdm

price = []

address = []

for idx, row in df.iterrows():

req = Request(row['URL'], headers={'User-Agent':ua.ie})

html = urlopen(req).read()

soup_tmp = BeautifulSoup(html, 'html.parser')

gettings = soup_tmp.find('p', 'addy').get_text()

price_tmp = re.split('.,', gettings)[0]

tm```

코드를 입력하세요

```p = re.search('\$\d+\.(\d+)?', price_tmp).group()

price.append(tmp)

address.append(price_tmp[len(tmp) + 2:])

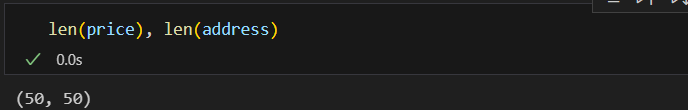

print(idx)len(price), len(address)

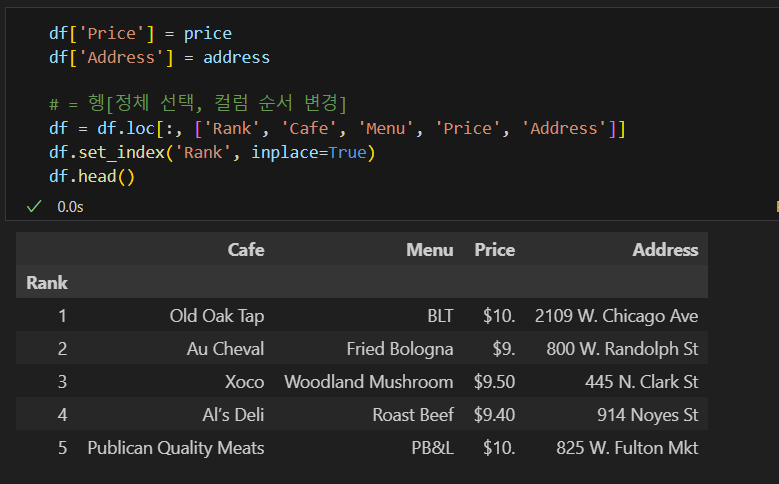

- View as a table

df['Price'] = price

df['Address'] = address

# = df.loc[select all rows, reorder the columns]

df = df.loc[:, ['Rank', 'Cafe', 'Menu', 'Price', 'Address']]

df.set_index('Rank', inplace=True)

df.head()

- 데이터 저장

# 데이터 저장

df.to_csv(

'../data/03. best_sandwiches_list_chicago_2.csv', sep=',', encoding='utf-8'

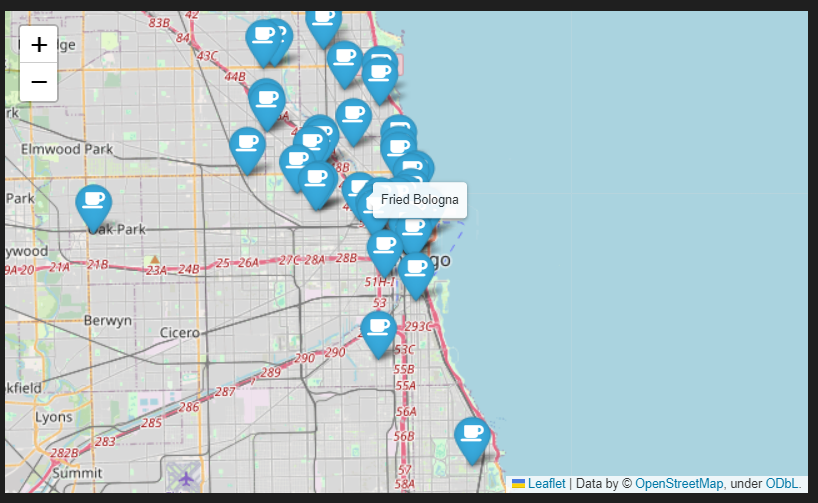

)예제5. 맛집 시각화

- import

import folium

import pandas as pd

import numpy as np

import googlemaps

from tqdm import tqdm

- raw data read

df = pd.read_csv('../data/03. best_sandwiches_list_chicago_2.csv', index_col=0)

df.tail()

- googlemaps key in

gmaps_key = 'YOUR_API_KEY' # put your own Google Maps API key here

gmaps = googlemaps.Client(key=gmaps_key)

lat = [] # latitude

lng = [] # longitude

for idx, row in tqdm(df[:5].iterrows()):

    # If Address is not 'Multiple location'

    if not row['Address'] == 'Multiple location':

        # build the search string target_name

        target_name = row['Address'] + ', ' + 'Chicago'

        print(target_name)

- Check all 50 entries

lat =[] #위도

lng =[] #경도

for idx, row in tqdm(df.iterrows()):

# Address가 Multiple location이 아니면

if not row['Address'] == 'Multiple location':

# target_name을 실행해라

target_name = row['Address'] + ', ' + 'Chicago'

# geocode 메소드를 불러와서 target_name을 던져준다

# 그럼, 검색을 해서 맞는 경도/위도 값을 출력 함

gmaps_output = gmaps.geocode(target_name)

# 0번째를 선택하고 geometry(지리) 정보 속성 값만 가져 옴

location_output = gmaps_output[0].get('geometry')

lat.append(location_output['location']['lat'])

lng.append(location_output['location']['lng'])

else:

lat.append(np.nan)

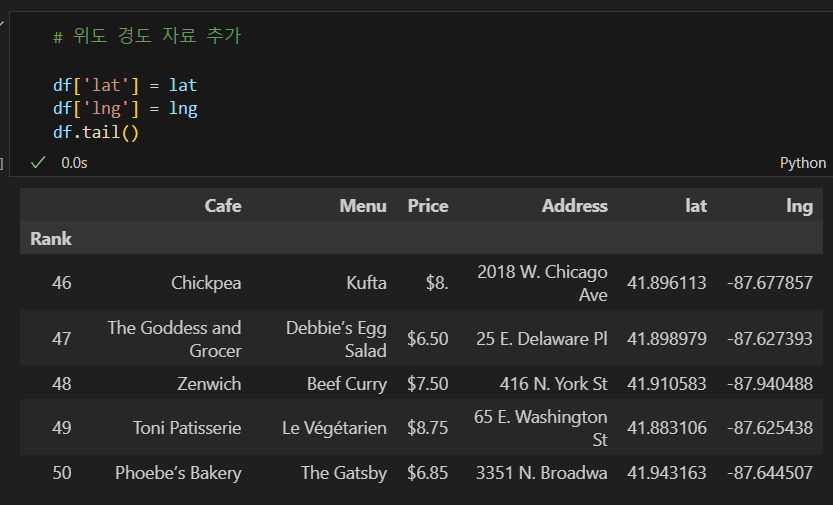

lng.append(np.nan)len(lat), len(lng)- 뽑힌 경도/위도 데이터 리스트 추가

# 위도 경도 자료 추가

df['lat'] = lat

df['lng'] = lng

df.tail()
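The geocoding loop above indexes `gmaps_output[0]` directly, which would fail if a lookup came back empty. A defensive variant can be sketched as below; the helper name `extract_lat_lng` is hypothetical, and the fake result only copies the nested `geometry` / `location` shape that the loop reads, so no API key or network call is involved.

```python
import math


def extract_lat_lng(geocode_result):
    """Hypothetical helper: pull (lat, lng) out of a geocode result list."""
    # Assumption: an unsuccessful lookup yields an empty list.
    if not geocode_result:
        return math.nan, math.nan
    location = geocode_result[0].get("geometry", {}).get("location", {})
    return location.get("lat", math.nan), location.get("lng", math.nan)


# Fake data in the same shape the loop above reads (not a real API response).
fake_result = [{"geometry": {"location": {"lat": 41.8781136, "lng": -87.6297982}}}]

found = extract_lat_lng(fake_result)
not_found = extract_lat_lng([])

print(found)
print(not_found)
```

Returning NaN for missing results keeps `lat` and `lng` the same length as the DataFrame, in line with how the `else` branch above handles rows without a single address.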

6. folium Marker

# Place the markers with folium

mapping = folium.Map(location=[41.8781136, -87.6297982], zoom_start=11)

for idx, row in df.iterrows():

    if not row['Address'] == 'Multiple location':

        folium.Marker(

            location=[row['lat'], row['lng']],

            popup=row['Cafe'],

            tooltip=row['Menu'],

            icon=folium.Icon(

                icon='coffee',

                prefix='fa'

            )

        ).add_to(mapping)

mapping