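0. Deploying the lab environment

- One-click Amazon EKS deployment (adds the EBS add-on, the EC2 IAM role, IRSA for the load balancer controller, and preCmd) and basic setup: the nodes use t3.xlarge (the default).

# Download the YAML file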

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/eks-oneclick3.yaml

# Deploy the CloudFormation stack

Example: aws cloudformation deploy --template-file eks-oneclick3.yaml --stack-name myeks --parameter-overrides KeyName=kp-gasida SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 MyIamUserAccessKeyID=AKIA5... MyIamUserSecretAccessKey='CVNa2...' ClusterBaseName=myeks --region ap-northeast-2

# After the stack finishes deploying, print the IP of the working EC2 instance

aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text

# SSH into the working EC2 instance

ssh -i ~/.ssh/kp-gasida.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

or

ssh -i ~/.ssh/kp-gasida.pem root@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

~ password: qwe123

ssh -i ./ejl-eks.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text --profile ejl-personal)

Last failed login: Fri Mar 29 20:47:22 KST 2024 from 210.92.14.119 on ssh:notty

There were 3 failed login attempts since the last successful login.

Amazon Linux 2

AL2 End of Life is 2025-06-30.

A newer version of Amazon Linux is available!

Amazon Linux 2023, GA and supported until 2028-03-15.

https://aws.amazon.com/linux/amazon-linux-2023/

- Basic setup and EFS check

# Switch to the default namespace

(leeeuijoo@myeks:N/A) [root@myeks-bastion ~]# kubectl ns default

Context "leeeuijoo@myeks.ap-northeast-2.eksctl.io" modified.

Active namespace is "default".

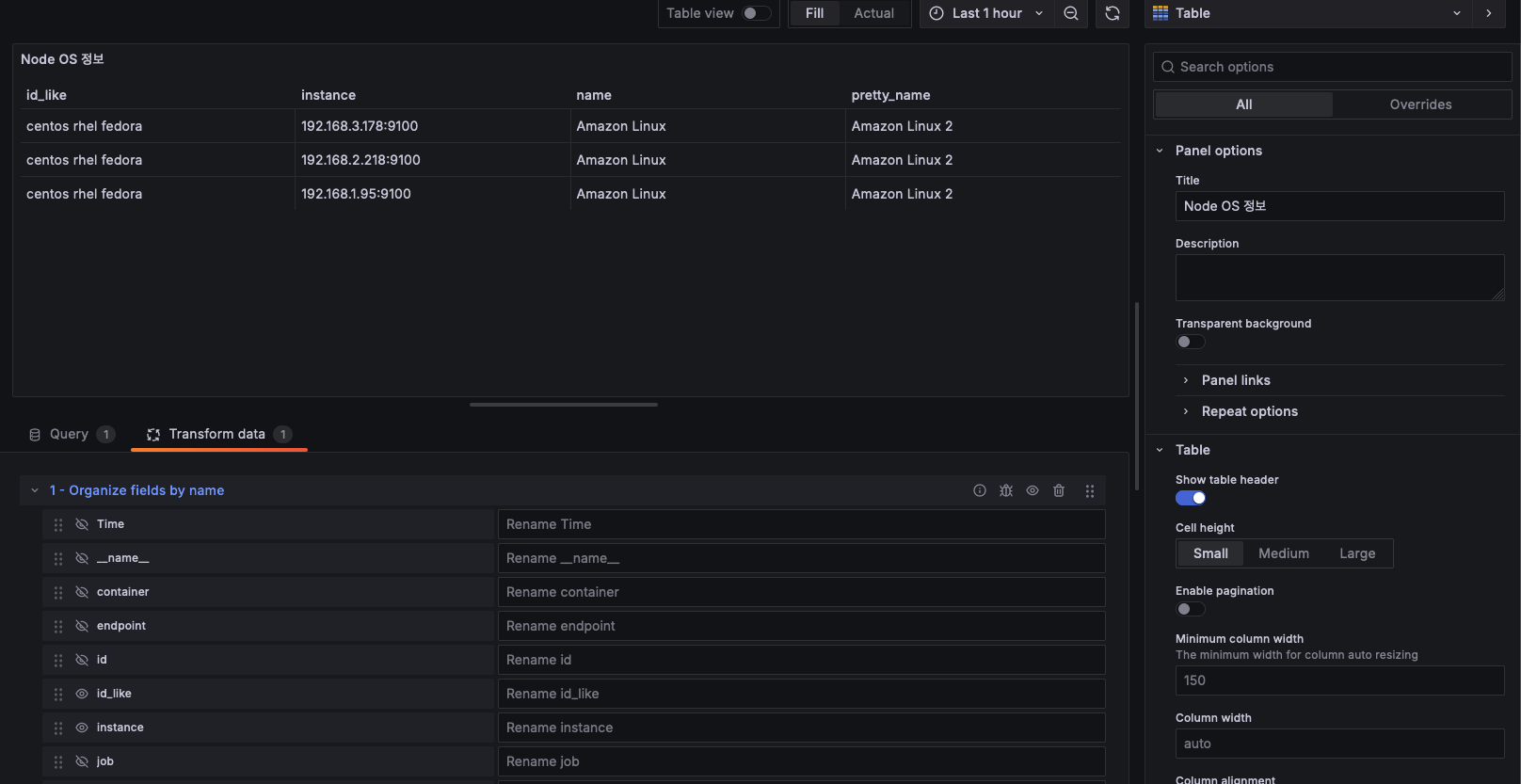

# Check node information: t3.xlarge

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get node --label-columns=node.kubernetes.io/instance-type,eks.amazonaws.com/capacityType,topology.kubernetes.io/zone

NAME STATUS ROLES AGE VERSION INSTANCE-TYPE CAPACITYTYPE ZONE

ip-192-168-1-95.ap-northeast-2.compute.internal Ready <none> 3m11s v1.28.5-eks-5e0fdde t3.xlarge ON_DEMAND ap-northeast-2a

ip-192-168-2-218.ap-northeast-2.compute.internal Ready <none> 3m9s v1.28.5-eks-5e0fdde t3.xlarge ON_DEMAND ap-northeast-2b

ip-192-168-3-178.ap-northeast-2.compute.internal Ready <none> 3m8s v1.28.5-eks-5e0fdde t3.xlarge ON_DEMAND ap-northeast-2c

# Check the node IPs and store the private IPs in variables

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath={.items[0].status.addresses[0].address})

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2b -o jsonpath={.items[0].status.addresses[0].address})

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# N3=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath={.items[0].status.addresses[0].address})

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo "export N1=$N1" >> /etc/profile

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo "export N2=$N2" >> /etc/profile

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo "export N3=$N3" >> /etc/profile

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo $N1, $N2, $N3

192.168.1.95, 192.168.2.218, 192.168.3.178
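The commands above read `addresses[0]`, which works only as long as the InternalIP entry is listed first in each node's address list. A minimal sketch that selects the address by type instead is shown here; `node_internal_ip` is a hypothetical helper name, not part of the original lab.

```shell
# Hypothetical helper (not from the original lab): print the InternalIP of the
# first node found in the given availability zone.
node_internal_ip() {
  zone="$1"
  kubectl get node \
    --selector="topology.kubernetes.io/zone=${zone}" \
    -o jsonpath='{.items[0].status.addresses[?(@.type=="InternalIP")].address}'
}

# Usage (requires access to the cluster):
#   N1=$(node_internal_ip ap-northeast-2a)
```

Quoting the jsonpath expression also keeps the shell from interpreting the braces and brackets.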

# Look up the node security group ID and store it in a variable

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values=*ng1* --query "SecurityGroups[*].[GroupId]" --output text)

# SSH into the worker nodes (answer yes to the host-key prompt three times, once per node)

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# for node in $N1 $N2 $N3; do ssh ec2-user@$node hostname; done

ip-192-168-1-95.ap-northeast-2.compute.internal

ip-192-168-2-218.ap-northeast-2.compute.internal

ip-192-168-3-178.ap-northeast-2.compute.internal

- Install the AWS LB Controller, ExternalDNS, EBS, and kube-ops-view

- Domain : 22joo.shop

# ExternalDNS Install

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# MyDomain=22joo.shop

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo "export MyDomain=22joo.shop" >> /etc/profile

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo $MyDomain, $MyDnzHostedZoneId

22joo.shop, /hostedzone/Z07798463AFECYTX1ODP4

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

serviceaccount/external-dns created

clusterrole.rbac.authorization.k8s.io/external-dns created

clusterrolebinding.rbac.authorization.k8s.io/external-dns-viewer created

deployment.apps/external-dns created

# kube-ops-view Install

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

"geek-cookbook" has been added to your repositories

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system

NAME: kube-ops-view

LAST DEPLOYED: Fri Mar 29 21:03:30 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace kube-system -l "app.kubernetes.io/name=kube-ops-view,app.kubernetes.io/instance=kube-ops-view" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl port-forward $POD_NAME 8080:8080

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'

service/kube-ops-view patched

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"

service/kube-ops-view annotated

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"

Kube Ops View URL = http://kubeopsview.22joo.shop:8080/#scale=1.5

# AWS LB Controller

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add eks https://aws.github.io/eks-charts

"eks" has been added to your repositories

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "eks" chart repository

...Successfully got an update from the "geek-cookbook" chart repository

Update Complete. ⎈Happy Helming!⎈

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \

> --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

NAME: aws-load-balancer-controller

LAST DEPLOYED: Fri Mar 29 21:04:15 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

AWS Load Balancer controller installed!

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get pods -n kube-system

NAME READY STATUS RESTARTS AGE

aws-load-balancer-controller-5f7b66cdd5-4xdsv 1/1 Running 0 26s

aws-load-balancer-controller-5f7b66cdd5-7tdkg 1/1 Running 0 26s

aws-node-mntqr 2/2 Running 0 9m35s

aws-node-pzbwp 2/2 Running 0 9m37s

aws-node-qtwjb 2/2 Running 0 9m36s

coredns-55474bf7b9-5wrr7 1/1 Running 0 7m14s

coredns-55474bf7b9-q7w75 1/1 Running 0 7m14s

ebs-csi-controller-7df5f479d4-2fkct 6/6 Running 0 5m35s

ebs-csi-controller-7df5f479d4-7242q 6/6 Running 0 5m35s

ebs-csi-node-285rr 3/3 Running 0 5m35s

ebs-csi-node-ppjzz 3/3 Running 0 5m35s

ebs-csi-node-r5qrs 3/3 Running 0 5m35s

external-dns-7fd77dcbc-2zrwl 1/1 Running 0 111s

kube-ops-view-9cc4bf44c-7g746 1/1 Running 0 72s

kube-proxy-cqqcv 1/1 Running 0 7m53s

kube-proxy-rlkls 1/1 Running 0 7m54s

kube-proxy-wqx6h 1/1 Running 0 7m56s

# Verify the EBS CSI driver installation

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# eksctl get addon --cluster ${CLUSTER_NAME}

2024-03-29 21:05:36 [ℹ] Kubernetes version "1.28" in use by cluster "myeks"

2024-03-29 21:05:36 [ℹ] getting all addons

2024-03-29 21:05:38 [ℹ] to see issues for an addon run `eksctl get addon --name <addon-name> --cluster <cluster-name>`

NAME VERSION STATUS ISSUES IAMROLE UPDATE AVAILABLE CONFIGURATION VALUES

aws-ebs-csi-driver v1.29.1-eksbuild.1 ACTIVE 0 arn:aws:iam::236747833953:role/eksctl-myeks-addon-aws-ebs-csi-driver-Role1-BrfcwPViqFnO

coredns v1.10.1-eksbuild.7 ACTIVE 0

kube-proxy v1.28.6-eksbuild.2 ACTIVE 0

vpc-cni v1.17.1-eksbuild.1 ACTIVE 0 arn:aws:iam::236747833953:role/eksctl-myeks-addon-vpc-cni-Role1-KSZWTaLgYiSF enableNetworkPolicy: "true"

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pod -n kube-system -l 'app in (ebs-csi-controller,ebs-csi-node)'

NAME READY STATUS RESTARTS AGE

ebs-csi-controller-7df5f479d4-2fkct 6/6 Running 0 6m31s

ebs-csi-controller-7df5f479d4-7242q 6/6 Running 0 6m31s

ebs-csi-node-285rr 3/3 Running 0 6m31s

ebs-csi-node-ppjzz 3/3 Running 0 6m31s

ebs-csi-node-r5qrs 3/3 Running 0 6m31s

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get csinodes

NAME DRIVERS AGE

ip-192-168-1-95.ap-northeast-2.compute.internal 1 10m

ip-192-168-2-218.ap-northeast-2.compute.internal 1 10m

ip-192-168-3-178.ap-northeast-2.compute.internal 1 10m

# Create the gp3 storage class

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 19m

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f https://raw.githubusercontent.com/gasida/PKOS/main/aews/gp3-sc.yaml

storageclass.storage.k8s.io/gp3 created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 19m

gp3 ebs.csi.aws.com Delete WaitForFirstConsumer true 3s

- Check the installation details

# Check image information

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c

3 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/amazon/aws-network-policy-agent:v1.1.0-eksbuild.1

3 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/amazon-k8s-cni:v1.17.1-eksbuild.1

5 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/aws-ebs-csi-driver:v1.29.1

2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/coredns:v1.10.1-eksbuild.7

2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/csi-attacher:v4.5.0-eks-1-29-7

3 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/csi-node-driver-registrar:v2.10.0-eks-1-29-7

2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/csi-provisioner:v4.0.0-eks-1-29-7

2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/csi-resizer:v1.10.0-eks-1-29-7

2 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/csi-snapshotter:v7.0.1-eks-1-29-7

3 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/kube-proxy:v1.28.6-minimal-eksbuild.2

5 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/livenessprobe:v2.12.0-eks-1-29-7

1 hjacobs/kube-ops-view:20.4.0

2 public.ecr.aws/eks/aws-load-balancer-controller:v2.7.2

1 registry.k8s.io/external-dns/external-dns:v0.14.0

# Check the add-ons installed/updated by eksctl

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# eksctl get addon --cluster $CLUSTER_NAME

2024-03-29 21:06:56 [ℹ] Kubernetes version "1.28" in use by cluster "myeks"

2024-03-29 21:06:56 [ℹ] getting all addons

2024-03-29 21:06:57 [ℹ] to see issues for an addon run `eksctl get addon --name <addon-name> --cluster <cluster-name>`

NAME VERSION STATUS ISSUES IAMROLE UPDATE AVAILABLE CONFIGURATION VALUES

aws-ebs-csi-driver v1.29.1-eksbuild.1 ACTIVE 0 arn:aws:iam::236747833953:role/eksctl-myeks-addon-aws-ebs-csi-driver-Role1-BrfcwPViqFnO

coredns v1.10.1-eksbuild.7 ACTIVE 0

kube-proxy v1.28.6-eksbuild.2 ACTIVE 0

vpc-cni v1.17.1-eksbuild.1 ACTIVE 0 arn:aws:iam::236747833953:role/eksctl-myeks-addon-vpc-cni-Role1-KSZWTaLgYiSF enableNetworkPolicy: "true"

# Check IRSA

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# eksctl get iamserviceaccount --cluster $CLUSTER_NAME

NAMESPACE NAME ROLE ARN

kube-system aws-load-balancer-controller arn:aws:iam::236747833953:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-Ld2VLBADravG

# Check the IAM role information in the EC2 instance profile

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat myeks.yaml | grep managedNodeGroups -A20 | yh

managedNodeGroups:

- amiFamily: AmazonLinux2

  desiredCapacity: 3

  disableIMDSv1: true

  disablePodIMDS: false

  iam:

    withAddonPolicies:

      albIngress: false

      appMesh: false

      appMeshPreview: false

      autoScaler: false

      awsLoadBalancerController: false

      certManager: true

      cloudWatch: true

      ebs: true

      efs: false

      externalDNS: true

      fsx: false

      imageBuilder: true

      xRay: true

  instanceSelector: {}

1. Logging in EKS

- Here, "Logging in EKS" means collecting logs from the control plane, the nodes, and the applications running on Kubernetes.

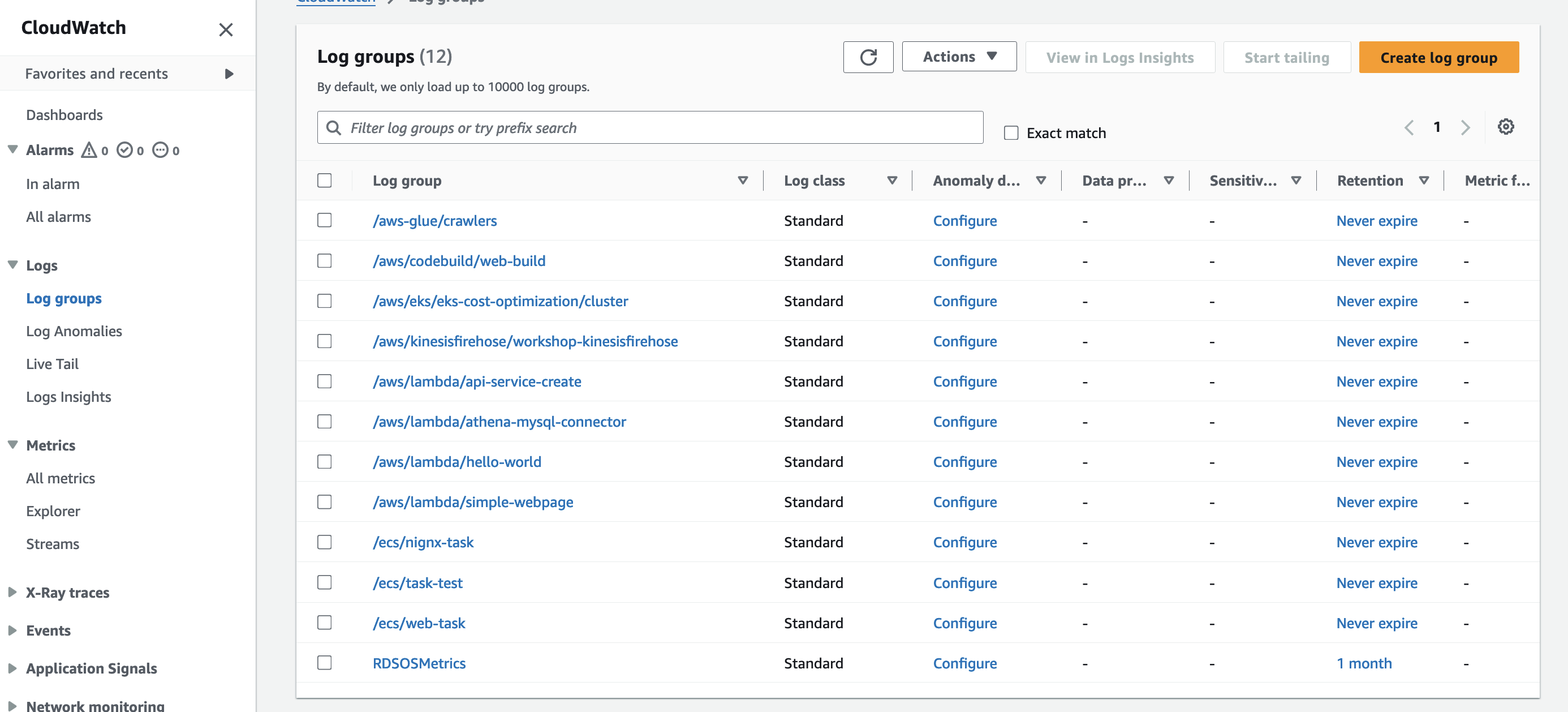

- CloudWatch organizes logs into log groups. Log streams are created inside each log group, and the log events are recorded in those streams.

- EKS control plane logging is currently turned off, so the log group has not been created yet, as shown below. The log types and their log stream names are:

- Kubernetes API server component logs (api): kube-apiserver-<nnn...>

- Audit (audit): kube-apiserver-audit-<nnn...>

- Authenticator (authenticator): authenticator-<nnn...>

- Controller manager (controllerManager): kube-controller-manager-<nnn...>

- Scheduler (scheduler): kube-scheduler-<nnn...>

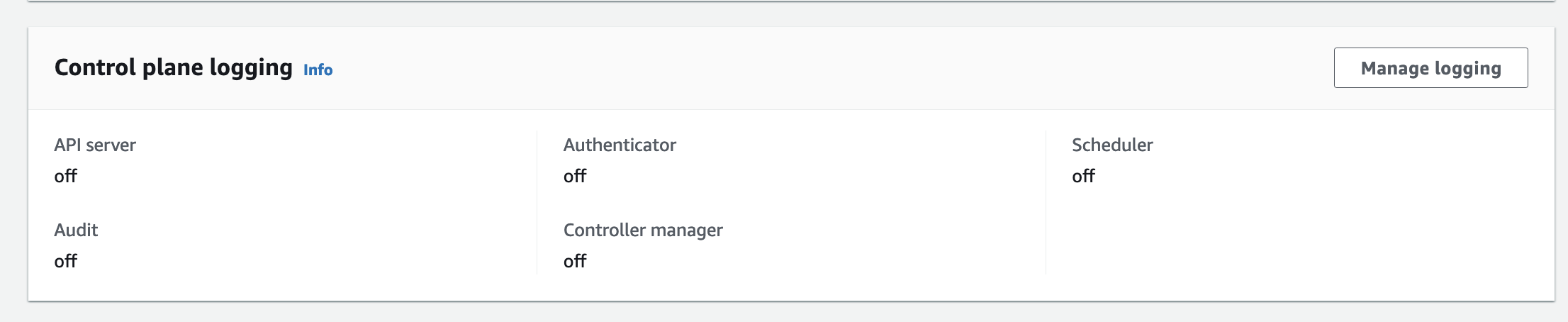

- Below are the current EKS logging settings.

- Now let's enable every EKS logging type.

- This can be done in the AWS console, but here we use the CLI.

# Enable EKS cluster logging

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws eks update-cluster-config --region $AWS_DEFAULT_REGION --name $CLUSTER_NAME \

> --logging '{"clusterLogging":[{"types":["api","audit","authenticator","controllerManager","scheduler"],"enabled":true}]}'

{

"update": {

"id": "79c9d120-af27-44ec-a932-e86814d9e6ef",

"status": "InProgress",

"type": "LoggingUpdate",

"params": [

{

"type": "ClusterLogging",

"value": "{\"clusterLogging\":[{\"types\":[\"api\",\"audit\",\"authenticator\",\"controllerManager\",\"scheduler\"],\"enabled\":true}]}"

}

],

"createdAt": "2024-03-29T21:14:04.742000+09:00",

"errors": []

}

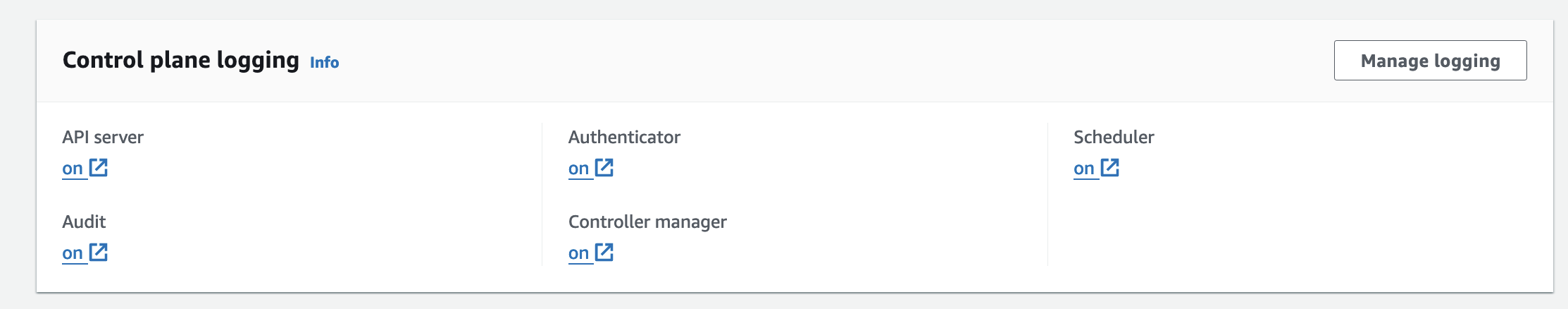

}- EKS 클러스터 Logging 을 활성화 하게 되면 콘솔에서도 확인할 수 있습니다.
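The `update-cluster-config` output above reports `"status": "InProgress"`, so the change is not applied yet when the command returns. A minimal sketch of waiting for it with `aws eks describe-update` follows; the function name `wait_for_eks_update` and the `POLL_SECONDS` variable are hypothetical, introduced only for illustration.

```shell
# Hypothetical helper (not from the original lab): poll an EKS update until it
# leaves the InProgress state, then print the final status.
# Arguments: cluster name, update id (the "id" field in the update-cluster-config output).
wait_for_eks_update() {
  cluster="$1"
  update_id="$2"
  while :; do
    state=$(aws eks describe-update --name "$cluster" --update-id "$update_id" \
      --query 'update.status' --output text) || return 1
    if [ "$state" != "InProgress" ]; then
      break
    fi
    sleep "${POLL_SECONDS:-10}"   # POLL_SECONDS is an assumed, optional override
  done
  printf '%s\n' "$state"
}

# Usage (requires AWS credentials):
#   wait_for_eks_update "$CLUSTER_NAME" 79c9d120-af27-44ec-a932-e86814d9e6ef
```

The function prints the terminal status (for example Successful or Failed), so a caller can decide whether to go on to the log group check.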

# Confirm that the log group was created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws logs describe-log-groups | jq

.

.

.

{

"logGroupName": "/aws/eks/myeks/cluster",

"creationTime": 1711714463532,

"metricFilterCount": 0,

"arn": "arn:aws:logs:ap-northeast-2:236747833953:log-group:/aws/eks/myeks/cluster:*",

"storedBytes": 0,

"logGroupClass": "STANDARD",

"logGroupArn": "arn:aws:logs:ap-northeast-2:236747833953:log-group:/aws/eks/myeks/cluster"

},

.

.

.

# Stream new log events as they arrive

aws logs tail /aws/eks/$CLUSTER_NAME/cluster --follow

# Filter pattern

aws logs tail /aws/eks/$CLUSTER_NAME/cluster --filter-pattern <filter pattern>

# Monitor the logs

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws logs tail /aws/eks/$CLUSTER_NAME/cluster --log-stream-name-prefix kube-controller-manager --follow

# Scale the coredns deployment down to one replica.

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl scale deployment -n kube-system coredns --replicas=1

## Monitoring output

2024-03-29T12:21:58.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:21:58.813716 10 replica_set.go:621] "Too many replicas" replicaSet="kube-system/coredns-55474bf7b9" need=1 deleting=1

2024-03-29T12:21:58.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:21:58.813759 10 replica_set.go:248] "Found related ReplicaSets" replicaSet="kube-system/coredns-55474bf7b9" relatedReplicaSets=["kube-system/coredns-56dfff779f","kube-system/coredns-55474bf7b9"]

2024-03-29T12:21:58.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:21:58.813849 10 controller_utils.go:609] "Deleting pod" controller="coredns-55474bf7b9" pod="kube-system/coredns-55474bf7b9-q7w75"

2024-03-29T12:21:58.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:21:58.813967 10 event.go:307] "Event occurred" object="kube-system/coredns" fieldPath="" kind="Deployment" apiVersion="apps/v1" type="Normal" reason="ScalingReplicaSet" message="Scaled down replica set coredns-55474bf7b9 to 1 from 2"

2024-03-29T12:21:58.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:21:58.834315 10 event.go:307] "Event occurred" object="kube-system/coredns-55474bf7b9" fieldPath="" kind="ReplicaSet" apiVersion="apps/v1" type="Normal" reason="SuccessfulDelete" message="Deleted pod: coredns-55474bf7b9-q7w75"

2024-03-29T12:21:58.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:21:58.857272 10 replica_set.go:676] "Finished syncing" kind="ReplicaSet" key="kube-system/coredns-55474bf7b9" duration="43.650298ms"

2024-03-29T12:21:58.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:21:58.872284 10 replica_set.go:676] "Finished syncing" kind="ReplicaSet" key="kube-system/coredns-55474bf7b9" duration="14.94268ms"

2024-03-29T12:21:58.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:21:58.872391 10 replica_set.go:676] "Finished syncing" kind="ReplicaSet" key="kube-system/coredns-55474bf7b9" duration="67.252µs"

2024-03-29T12:22:04.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:22:04.117399 10 replica_set.go:676] "Finished syncing" kind="ReplicaSet" key="kube-system/coredns-55474bf7b9" duration="102.591µs"

2024-03-29T12:22:04.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:22:04.148736 10 replica_set.go:676] "Finished syncing" kind="ReplicaSet" key="kube-system/coredns-55474bf7b9" duration="68.632µs"

2024-03-29T12:22:04.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:22:04.452841 10 replica_set.go:676] "Finished syncing" kind="ReplicaSet" key="kube-system/coredns-55474bf7b9" duration="93.332µs"

2024-03-29T12:22:04.000000+00:00 kube-controller-manager-a5774b16902dfae8018336d4e299c0d7 I0329 12:22:04.469035 10 replica_set.go:676] "Finished syncing" kind="ReplicaSet" key="kube-system/coredns-55474bf7b9" duration="94.389µs"

## Pod status

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get pods -n kube-system | grep coredns

coredns-55474bf7b9-5wrr7 1/1 Running 0 26m

- CloudWatch Logs Insights

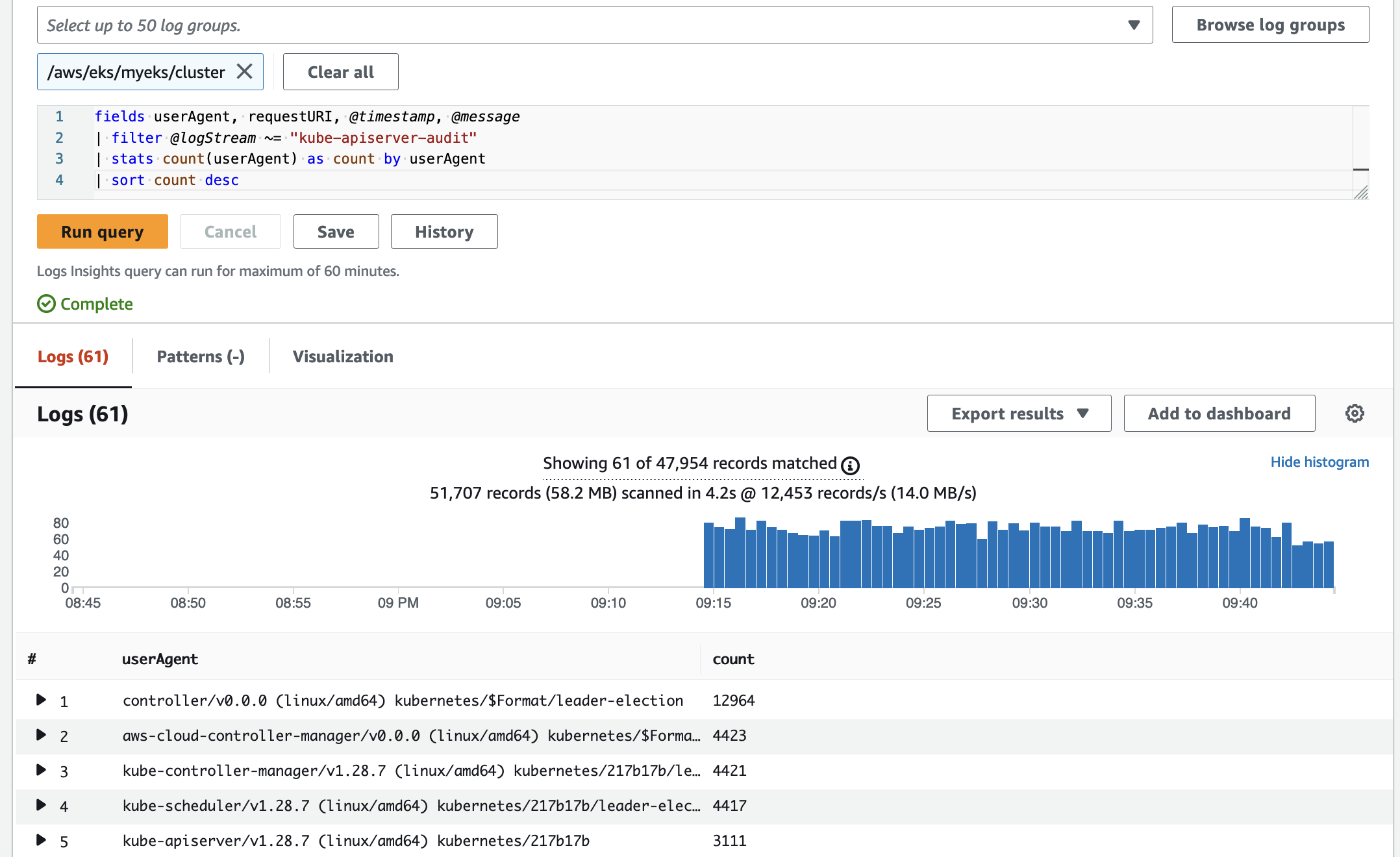

- In the console, you can analyze the logs with queries.

# From the kube-apiserver-audit logs, select the four fields below, then count and sort by userAgent

fields userAgent, requestURI, @timestamp, @message

| filter @logStream ~= "kube-apiserver-audit"

| stats count(userAgent) as count by userAgent

| sort count desc

- Besides the console, you can also run queries from the AWS CLI to search the logs.
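Note that `start-query` is asynchronous: calling `get-query-results` immediately afterwards can return `"status": "Running"` with an empty result list, which is what the transcript that follows shows. A minimal sketch that polls until the query finishes is given here; the function name `run_insights_query` and the `POLL_SECONDS` variable are hypothetical and not part of the original lab.

```shell
# Hypothetical helper: run a Logs Insights query over the last hour and wait
# until it is no longer Scheduled/Running before printing the results.
# Arguments: log group name, query string.
run_insights_query() {
  log_group="$1"
  query="$2"
  now=$(date +%s)
  query_id=$(aws logs start-query \
    --log-group-name "$log_group" \
    --start-time "$((now - 3600))" \
    --end-time "$now" \
    --query-string "$query" \
    --query 'queryId' --output text) || return 1
  while :; do
    state=$(aws logs get-query-results --query-id "$query_id" \
      --query 'status' --output text) || return 1
    case "$state" in
      Scheduled|Running) sleep "${POLL_SECONDS:-2}" ;;  # assumed optional override
      *) break ;;                                       # Complete, Failed, Cancelled, ...
    esac
  done
  aws logs get-query-results --query-id "$query_id"
}

# Usage (requires AWS credentials):
#   run_insights_query /aws/eks/myeks/cluster \
#     'fields @timestamp, @message | filter @logStream ~= "kube-scheduler" | sort @timestamp desc'
```

The final call prints the full JSON, including the `status` field, so a Failed or Timeout query is still visible to the caller.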

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws logs get-query-results --query-id $(aws logs start-query \

> --log-group-name '/aws/eks/myeks/cluster' \

> --start-time `date -d "-1 hours" +%s` \

> --end-time `date +%s` \

> --query-string 'fields @timestamp, @message | filter @logStream ~= "kube-scheduler" | sort @timestamp desc' \

> | jq --raw-output '.queryId')

{

"results": [],

"statistics": {

"recordsMatched": 0.0,

"recordsScanned": 53664.0,

"bytesScanned": 63276174.0

},

"status": "Running"

}

- Disable logging

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# eksctl utils update-cluster-logging --cluster $CLUSTER_NAME --region $AWS_DEFAULT_REGION --disable-types all --approve

2024-03-29 21:47:56 [ℹ] will update CloudWatch logging for cluster "myeks" in "ap-northeast-2" (no types to enable & disable types: api, audit, authenticator, controllerManager, scheduler)

2024-03-29 21:49:00 [✔] configured CloudWatch logging for cluster "myeks" in "ap-northeast-2" (no types enabled & disabled types: api, audit, authenticator, controllerManager, scheduler)

# Delete the log group

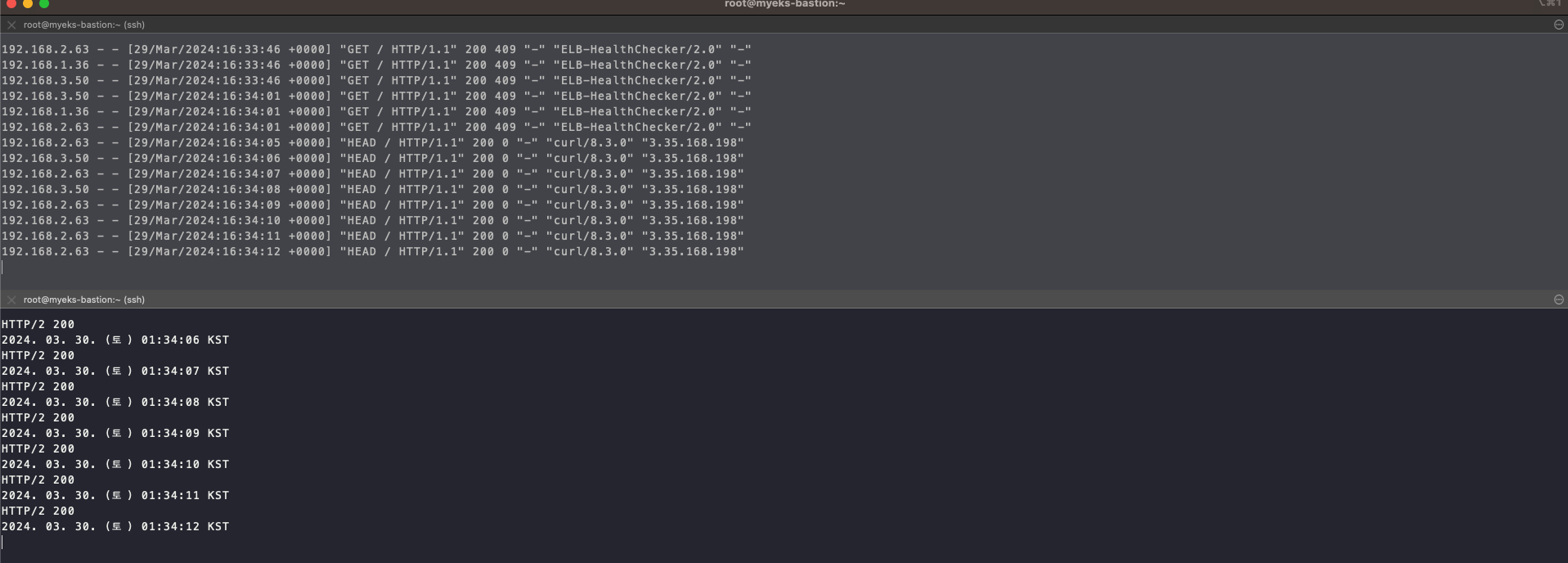

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws logs delete-log-group --log-group-name /aws/eks/$CLUSTER_NAME/cluster

- Pod logging

- Deploy an NGINX server and look at how its Pod logs are handled.

- Helm is used for the deployment.

# Deploy the NGINX web server

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add bitnami https://charts.bitnami.com/bitnami

"bitnami" has been added to your repositories

# Look up the certificate ARN in the current region

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# CERT_ARN=$(aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text)

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo $CERT_ARN

arn:aws:acm:ap-northeast-2:236747833953:certificate/47d1f443-4321-467d-a7e1-927caaa97f52

# Check the domain

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo $MyDomain

22joo.shop

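# Create the values file: it works even without specifying the certificate ARN. If the HTTPS listener does not get configured, add the certificate setting (uncomment it) and deploy again.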

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat <<EOT > nginx-values.yaml

> service:

>   type: NodePort

>

> networkPolicy:

>   enabled: false

>

> ingress:

>   enabled: true

>   ingressClassName: alb

>   hostname: nginx.$MyDomain

>   pathType: Prefix

>   path: /

>   annotations:

>     alb.ingress.kubernetes.io/scheme: internet-facing

>     alb.ingress.kubernetes.io/target-type: ip

>     alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

>     #alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

>     alb.ingress.kubernetes.io/success-codes: 200-399

>     alb.ingress.kubernetes.io/load-balancer-name: $CLUSTER_NAME-ingress-alb

>     alb.ingress.kubernetes.io/group.name: study

>     alb.ingress.kubernetes.io/ssl-redirect: '443'

> EOT

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat nginx-values.yaml | yh

service:

  type: NodePort

networkPolicy:

  enabled: false

ingress:

  enabled: true

  ingressClassName: alb

  hostname: nginx.22joo.shop

  pathType: Prefix

  path: /

  annotations:

    alb.ingress.kubernetes.io/scheme: internet-facing

    alb.ingress.kubernetes.io/target-type: ip

    alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

    #alb.ingress.kubernetes.io/certificate-arn arn:aws:acm:ap-northeast-2:236747833953:certificate/47d1f443-4321-467d-a7e1-927caaa97f52

    alb.ingress.kubernetes.io/success-codes: 200-399

    alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

    alb.ingress.kubernetes.io/group.name: study

    alb.ingress.kubernetes.io/ssl-redirect: '443'

# Deploy

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm install nginx bitnami/nginx --version 15.14.0 -f nginx-values.yaml

NAME: nginx

LAST DEPLOYED: Fri Mar 29 21:53:03 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

CHART NAME: nginx

CHART VERSION: 15.14.0

APP VERSION: 1.25.4

** Please be patient while the chart is being deployed **

NGINX can be accessed through the following DNS name from within your cluster:

nginx.default.svc.cluster.local (port 80)

To access NGINX from outside the cluster, follow the steps below:

1. Get the NGINX URL and associate its hostname to your cluster external IP:

export CLUSTER_IP=$(minikube ip) # On Minikube. Use: `kubectl cluster-info` on others K8s clusters

echo "NGINX URL: http://nginx.22joo.shop"

echo "$CLUSTER_IP nginx.22joo.shop" | sudo tee -a /etc/hosts

WARNING: There are "resources" sections in the chart not set. Using "resourcesPreset" is not recommended for production. For production installations, please set the following values according to your workload needs:

- cloneStaticSiteFromGit.gitSync.resources

- resources

+info https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/

# Verify

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get ingress,deploy,svc,ep nginx

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/nginx alb nginx.22joo.shop myeks-ingress-alb-874849752.ap-northeast-2.elb.amazonaws.com 80 7m11s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx 1/1 1 1 7m11s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/nginx NodePort 10.100.106.198 <none> 80:31887/TCP 7m11s

NAME ENDPOINTS AGE

endpoints/nginx 192.168.2.168:8080 7m11s

# Check access to the service

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo -e "Nginx WebServer URL = https://nginx.$MyDomain"

Nginx WebServer URL = https://nginx.22joo.shop

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s https://nginx.$MyDomain

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl logs deploy/nginx -f

Defaulted container "nginx" out of: nginx, preserve-logs-symlinks (init)

nginx 13:51:05.19 INFO ==>

nginx 13:51:05.20 INFO ==> Welcome to the Bitnami nginx container

nginx 13:51:05.20 INFO ==> Subscribe to project updates by watching https://github.com/bitnami/containers

nginx 13:51:05.20 INFO ==> Submit issues and feature requests at https://github.com/bitnami/containers/issues

nginx 13:51:05.21 INFO ==>

nginx 13:51:05.21 INFO ==> ** Starting NGINX setup **

nginx 13:51:05.23 INFO ==> Validating settings in NGINX_* env vars

Certificate request self-signature ok

subject=CN = example.com

nginx 13:51:09.82 INFO ==> No custom scripts in /docker-entrypoint-initdb.d

nginx 13:51:09.83 INFO ==> Initializing NGINX

realpath: /bitnami/nginx/conf/vhosts: No such file or directory

nginx 13:51:09.88 INFO ==> ** NGINX setup finished! **

nginx 13:51:09.90 INFO ==> ** Starting NGINX **

192.168.2.32 - - [29/Mar/2024:13:53:15 +0000] "GET / HTTP/1.1" 200 409 "-" "ELB-HealthChecker/2.0" "-"

192.168.2.32 - - [29/Mar/2024:13:53:30 +0000] "GET / HTTP/1.1" 200 409 "-" "ELB-HealthChecker/2.0" "-"

192.168.3.149 - - [29/Mar/2024:13:53:36 +0000] "GET / HTTP/1.1" 200 409 "-" "ELB-HealthChecker/2.0" "-"

192.168.2.32 - - [29/Mar/2024:13:53:45 +0000] "GET / HTTP/1.1" 200 409 "-" "ELB-HealthChecker/2.0" "-"

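...

- In a container environment, it is recommended that applications send their logs to standard output (stdout) and standard error (stderr).

- For a containerized application written this way, you do not need to enter the Pod: kubectl logs retrieves the logs from outside with a single command, regardless of the application type or of where each application would otherwise write its log files.

# Monitor the logs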

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl logs deploy/nginx -f

...

ico HTTP/1.1", host: "nginx.22joo.shop", referrer: "https://nginx.22joo.shop/"

192.168.2.32 - - [29/Mar/2024:14:01:11 +0000] "GET /favicon.ico HTTP/1.1" 404 180 "https://nginx.22joo.shop/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/123.0.0.0 Safari/537.36" "210.92.14.119"

- Access attempt

# Check where the container log files are located

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl exec -it deploy/nginx -- ls -l /opt/bitnami/nginx/logs/

Defaulted container "nginx" out of: nginx, preserve-logs-symlinks (init)

total 0

lrwxrwxrwx 1 1001 1001 11 Mar 29 13:51 access.log -> /dev/stdout

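lrwxrwxrwx 1 1001 1001 11 Mar 29 13:51 error.log -> /dev/stderr

- Logs of a terminated Pod cannot be retrieved with kubectl logs.

- With the default kubelet settings, the maximum size of a container log file is 10Mi, so once a container has written more than 10Mi the full log can no longer be retrieved.

- However, this can be changed with settings like the following.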

apiVersion: eksctl.io/v1alpha5

kind: ClusterConfig

metadata:

name: <cluster-name>

region: eu-central-1

nodeGroups:

- name: worker-spot-containerd-large-log

labels: { instance-type: spot }

instanceType: t3.large

minSize: 2

maxSize: 30

desiredCapacity: 2

amiFamily: AmazonLinux2

containerRuntime: containerd

availabilityZones: ["eu-central-1a", "eu-central-1b", "eu-central-1c"]

kubeletExtraConfig:

containerLogMaxSize: "500Mi"

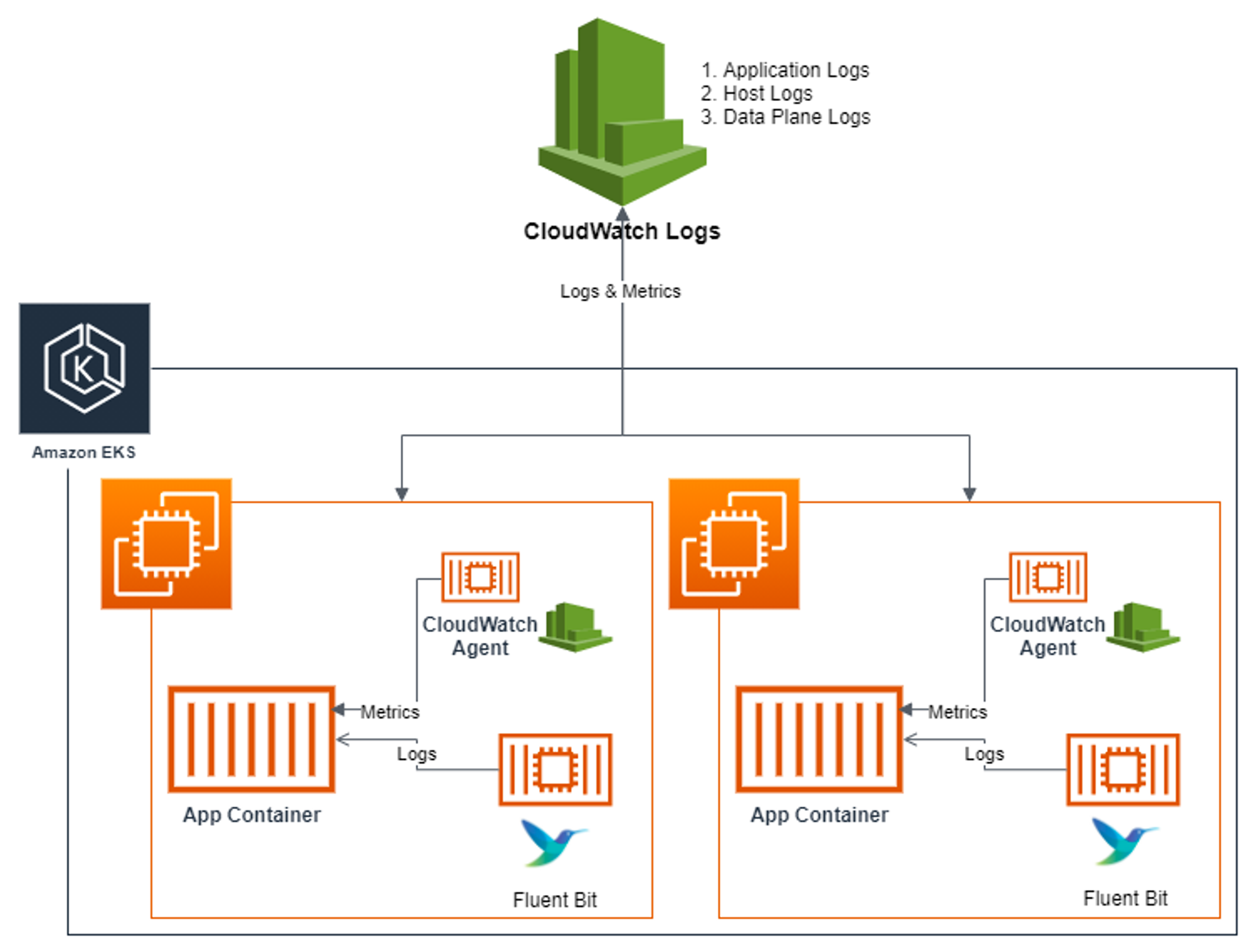

containerLogMaxFiles: 52. Container Insights metrics in Amazon CloudWatch & Fluent Bit (Logs)

- CloudWatch Container Insights: a CloudWatch agent Pod and a Fluent Bit Pod run on every node as DaemonSets and collect metrics and logs.

- [Collect]: the Fluent Bit container runs as a DaemonSet and sends the following three kinds of logs to CloudWatch Logs.

  - /aws/containerinsights/Cluster_Name/application : log source (all log files in /var/log/containers), the logs of each container/Pod

  - /aws/containerinsights/Cluster_Name/host : log source (logs from /var/log/dmesg, /var/log/secure, and /var/log/messages), the node (host) logs

  - /aws/containerinsights/Cluster_Name/dataplane : log source (the logs in /var/log/journal for kubelet.service, kubeproxy.service, and docker.service), the Kubernetes data plane logs

- [Store]: the logs are stored in CloudWatch Logs, and a retention period can be set per log group.

- [Visualize]: CloudWatch Logs Insights is used to analyze the target logs, and CloudWatch dashboards are used to visualize them.
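The three log group names and the per-group retention setting described above can be sketched as follows. This is an illustration under assumptions, not the lab's own procedure: the helper names are hypothetical, and `aws logs put-retention-policy` only succeeds for log groups that already exist.

```shell
# Print the three Container Insights log group names for a cluster.
container_insights_log_groups() {
  cluster="$1"
  for kind in application host dataplane; do
    printf '/aws/containerinsights/%s/%s\n' "$cluster" "$kind"
  done
}

# Hypothetical helper: apply the same retention (in days) to all three groups.
set_container_insights_retention() {
  cluster="$1"
  days="$2"
  container_insights_log_groups "$cluster" | while read -r group; do
    # </dev/null keeps the CLI from consuming the loop's input
    aws logs put-retention-policy --log-group-name "$group" \
      --retention-in-days "$days" </dev/null
  done
}

# Prints the three names for the cluster used in this lab.
container_insights_log_groups myeks

# Usage (requires AWS credentials and existing log groups):
#   set_container_insights_retention "$CLUSTER_NAME" 7
```

Keeping the name generation separate from the AWS call makes it easy to reuse the same list for other per-group operations.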

- Checking the logs on a node

- application log source: all log files in /var/log/containers, which are symbolic links into /var/log/pods/<container>; these are the logs of each container/Pod

# Check the log location

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# ssh ec2-user@$N1 sudo tree /var/log/containers

/var/log/containers

├── aws-load-balancer-controller-5f7b66cdd5-4xdsv_kube-system_aws-load-balancer-controller-b091ee305b084902ca8805a68f961d637cd683ce36536c9c85ddd47ad07b78b4.log -> /var/log/pods/kube-system_aws-load-balancer-controller-5f7b66cdd5-4xdsv_f1e0e25d-b2f1-432b-b95c-de8d529dc902/aws-load-balancer-controller/0.log

├── aws-node-pzbwp_kube-system_aws-eks-nodeagent-f42cd0529e17cf3ec511ac36c8922d110116d1f9002997b8a3f991780de39653.log -> /var/log/pods/kube-system_aws-node-pzbwp_2495d0b9-52aa-4878-a2de-1fee29bbef82/aws-eks-nodeagent/0.log

├── aws-node-pzbwp_kube-system_aws-node-c6b0004c77492452400d420c8d6cafcf7e9716f3f3e875f18864968dc4a9bb36.log -> /var/log/pods/kube-system_aws-node-pzbwp_2495d0b9-52aa-4878-a2de-1fee29bbef82/aws-node/0.log

├── aws-node-pzbwp_kube-system_aws-vpc-cni-init-0198d933927985b5a846461c6354dd4c1112641cff53bfb710fe85a8cfc8f3e1.log -> /var/log/pods/kube-system_aws-node-pzbwp_2495d0b9-52aa-4878-a2de-1fee29bbef82/aws-vpc-cni-init/0.log

├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-attacher-11a6205f6aa64a54bc2e33be2fecc5648be28d942b9d68d0853428bb41c2b11d.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-attacher/0.log

├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-provisioner-95b2685d171f085b3b9ab38de06401c3ad01fd55d05b3448139a9485f8431c53.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-provisioner/0.log

├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-resizer-451fd48171a7c45731316f37d87d412f105b701cc0e8c57a551238ff8f032c20.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-resizer/0.log

├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-snapshotter-4b9c4791813c0bee494c60a97fe9021eb38dd12fa6c48c9ab9bb99a5b8950690.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-snapshotter/0.log

├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_ebs-plugin-bd292c0666d0041864af581ae565c12370512fe899f3e5e7f881b28febb6f2cd.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/ebs-plugin/0.log

├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_liveness-probe-3e93634d93cfa5fdae86297735b55cd2cee1b8ed095727ea43c4493f87d3e7ac.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/liveness-probe/0.log

├── ebs-csi-node-ppjzz_kube-system_ebs-plugin-10fee86a44b7b0ac04623a6fe0f571561908b4bf1ca182b0ec1c8414cba6ad69.log -> /var/log/pods/kube-system_ebs-csi-node-ppjzz_ae69b9b9-25f7-4f53-8c4b-5413d229fe73/ebs-plugin/0.log

├── ebs-csi-node-ppjzz_kube-system_liveness-probe-adffdf4ea27a303a52e151950586bcbb032c71297e56a2eb4e18e0f23f677eb1.log -> /var/log/pods/kube-system_ebs-csi-node-ppjzz_ae69b9b9-25f7-4f53-8c4b-5413d229fe73/liveness-probe/0.log

├── ebs-csi-node-ppjzz_kube-system_node-driver-registrar-7a296ed4cc4883a5e8ccc389151cb3f30b744e520af3dfc79b775bb608e05624.log -> /var/log/pods/kube-system_ebs-csi-node-ppjzz_ae69b9b9-25f7-4f53-8c4b-5413d229fe73/node-driver-registrar/0.log

├── external-dns-7fd77dcbc-2zrwl_kube-system_external-dns-7cabd2fd2895ffd14c5e4e1844f96b9da5a883ede5e494034333afdf89d84b8f.log -> /var/log/pods/kube-system_external-dns-7fd77dcbc-2zrwl_2bb2e013-cef2-45ec-8ebc-0ed85b244f5c/external-dns/0.log

└── kube-proxy-wqx6h_kube-system_kube-proxy-487cf8f577d839b3dfb1a33f6566acfb6c622b3eade5b24163cca3e53018c540.log -> /var/log/pods/kube-system_kube-proxy-wqx6h_1e2778dc-5dc6-4df2-bc40-a2e235047e3d/kube-proxy/0.log

0 directories, 15 files
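
The symlink names listed above appear to follow the pattern `<pod>_<namespace>_<container>-<container ID>.log`. As a minimal sketch under that assumption, the helper below splits such a name into its parts; the function name `parse_container_log_name` is hypothetical and used only for illustration.

```shell
# Split a /var/log/containers file name into its parts.
# Assumed pattern (inferred from the listing above):
#   <pod>_<namespace>_<container>-<container-id>.log
parse_container_log_name() {
  name="${1##*/}"              # drop any leading directory
  name="${name%.log}"          # drop the .log suffix
  pod="${name%%_*}"            # text before the first underscore
  rest="${name#*_}"
  namespace="${rest%%_*}"      # text between the first and second underscore
  tail="${rest#*_}"
  container_id="${tail##*-}"   # text after the last hyphen
  container="${tail%-*}"       # everything before that hyphen
  printf 'pod=%s namespace=%s container=%s id=%s\n' \
    "$pod" "$namespace" "$container" "$container_id"
}
```

It could be combined with the listing, for example `ssh ec2-user@$N1 sudo ls /var/log/containers | while read -r f; do parse_container_log_name "$f"; done`.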

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# ssh ec2-user@$N1 sudo ls -al /var/log/containers

total 8

drwxr-xr-x 2 root root 4096 Mar 29 12:04 .

drwxr-xr-x 10 root root 4096 Mar 29 11:55 ..

lrwxrwxrwx 1 root root 143 Mar 29 12:04 aws-load-balancer-controller-5f7b66cdd5-4xdsv_kube-system_aws-load-balancer-controller-b091ee305b084902ca8805a68f961d637cd683ce36536c9c85ddd47ad07b78b4.log -> /var/log/pods/kube-system_aws-load-balancer-controller-5f7b66cdd5-4xdsv_f1e0e25d-b2f1-432b-b95c-de8d529dc902/aws-load-balancer-controller/0.log

lrwxrwxrwx 1 root root 101 Mar 29 11:55 aws-node-pzbwp_kube-system_aws-eks-nodeagent-f42cd0529e17cf3ec511ac36c8922d110116d1f9002997b8a3f991780de39653.log -> /var/log/pods/kube-system_aws-node-pzbwp_2495d0b9-52aa-4878-a2de-1fee29bbef82/aws-eks-nodeagent/0.log

lrwxrwxrwx 1 root root 92 Mar 29 11:55 aws-node-pzbwp_kube-system_aws-node-c6b0004c77492452400d420c8d6cafcf7e9716f3f3e875f18864968dc4a9bb36.log -> /var/log/pods/kube-system_aws-node-pzbwp_2495d0b9-52aa-4878-a2de-1fee29bbef82/aws-node/0.log

lrwxrwxrwx 1 root root 100 Mar 29 11:55 aws-node-pzbwp_kube-system_aws-vpc-cni-init-0198d933927985b5a846461c6354dd4c1112641cff53bfb710fe85a8cfc8f3e1.log -> /var/log/pods/kube-system_aws-node-pzbwp_2495d0b9-52aa-4878-a2de-1fee29bbef82/aws-vpc-cni-init/0.log

lrwxrwxrwx 1 root root 117 Mar 29 11:59 ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-attacher-11a6205f6aa64a54bc2e33be2fecc5648be28d942b9d68d0853428bb41c2b11d.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-attacher/0.log

lrwxrwxrwx 1 root root 120 Mar 29 11:59 ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-provisioner-95b2685d171f085b3b9ab38de06401c3ad01fd55d05b3448139a9485f8431c53.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-provisioner/0.log

lrwxrwxrwx 1 root root 116 Mar 29 11:59 ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-resizer-451fd48171a7c45731316f37d87d412f105b701cc0e8c57a551238ff8f032c20.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-resizer/0.log

lrwxrwxrwx 1 root root 120 Mar 29 11:59 ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-snapshotter-4b9c4791813c0bee494c60a97fe9021eb38dd12fa6c48c9ab9bb99a5b8950690.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-snapshotter/0.log

lrwxrwxrwx 1 root root 115 Mar 29 11:59 ebs-csi-controller-7df5f479d4-2fkct_kube-system_ebs-plugin-bd292c0666d0041864af581ae565c12370512fe899f3e5e7f881b28febb6f2cd.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/ebs-plugin/0.log

lrwxrwxrwx 1 root root 119 Mar 29 11:59 ebs-csi-controller-7df5f479d4-2fkct_kube-system_liveness-probe-3e93634d93cfa5fdae86297735b55cd2cee1b8ed095727ea43c4493f87d3e7ac.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/liveness-probe/0.log

lrwxrwxrwx 1 root root 98 Mar 29 11:59 ebs-csi-node-ppjzz_kube-system_ebs-plugin-10fee86a44b7b0ac04623a6fe0f571561908b4bf1ca182b0ec1c8414cba6ad69.log -> /var/log/pods/kube-system_ebs-csi-node-ppjzz_ae69b9b9-25f7-4f53-8c4b-5413d229fe73/ebs-plugin/0.log

lrwxrwxrwx 1 root root 102 Mar 29 11:59 ebs-csi-node-ppjzz_kube-system_liveness-probe-adffdf4ea27a303a52e151950586bcbb032c71297e56a2eb4e18e0f23f677eb1.log -> /var/log/pods/kube-system_ebs-csi-node-ppjzz_ae69b9b9-25f7-4f53-8c4b-5413d229fe73/liveness-probe/0.log

lrwxrwxrwx 1 root root 109 Mar 29 11:59 ebs-csi-node-ppjzz_kube-system_node-driver-registrar-7a296ed4cc4883a5e8ccc389151cb3f30b744e520af3dfc79b775bb608e05624.log -> /var/log/pods/kube-system_ebs-csi-node-ppjzz_ae69b9b9-25f7-4f53-8c4b-5413d229fe73/node-driver-registrar/0.log

lrwxrwxrwx 1 root root 110 Mar 29 12:02 external-dns-7fd77dcbc-2zrwl_kube-system_external-dns-7cabd2fd2895ffd14c5e4e1844f96b9da5a883ede5e494034333afdf89d84b8f.log -> /var/log/pods/kube-system_external-dns-7fd77dcbc-2zrwl_2bb2e013-cef2-45ec-8ebc-0ed85b244f5c/external-dns/0.log

lrwxrwxrwx 1 root root 96 Mar 29 11:56 kube-proxy-wqx6h_kube-system_kube-proxy-487cf8f577d839b3dfb1a33f6566acfb6c622b3eade5b24163cca3e53018c540.log -> /var/log/pods/kube-system_kube-proxy-wqx6h_1e2778dc-5dc6-4df2-bc40-a2e235047e3d/kube-proxy/0.log

- Host log sources (logs from /var/log/dmesg, /var/log/secure, and /var/log/messages): node (host) logs

# Check the log location

var/log/

├── amazon

├── audit

├── aws-routed-eni

├── boot.log

├── btmp

├── chrony

├── cloud-init.log

├── cloud-init-output.log

├── containers

├── cron

├── dmesg

├── dmesg.old

├── grubby

├── grubby_prune_debug

├── journal

├── lastlog

├── maillog

├── messages

├── pods

├── sa

├── secure

├── spooler

├── tallylog

├── wtmp

└── yum.log

8 directories, 17 files

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# ssh ec2-user@$N1 sudo ls -la /var/log/

total 736

drwxr-xr-x 10 root root 4096 Mar 29 11:55 .

drwxr-xr-x 18 root root 254 Mar 15 04:19 ..

drwxr-xr-x 3 root root 17 Mar 29 11:54 amazon

drwx------ 2 root root 23 Mar 15 04:19 audit

drwxr-xr-x 2 root root 121 Mar 29 11:55 aws-routed-eni

-rw------- 1 root root 1155 Mar 29 14:07 boot.log

-rw------- 1 root utmp 0 Mar 7 18:43 btmp

drwxr-x--- 2 chrony chrony 72 Mar 15 04:19 chrony

-rw-r--r-- 1 root root 104803 Mar 29 11:55 cloud-init.log

-rw-r----- 1 root root 15206 Mar 29 11:55 cloud-init-output.log

drwxr-xr-x 2 root root 4096 Mar 29 12:04 containers

-rw------- 1 root root 3858 Mar 29 14:10 cron

-rw-r--r-- 1 root root 31945 Mar 29 11:54 dmesg

-rw-r--r-- 1 root root 30847 Mar 15 04:20 dmesg.old

-rw------- 1 root root 2375 Mar 15 04:20 grubby

-rw-r--r-- 1 root root 193 Mar 7 18:43 grubby_prune_debug

drwxr-sr-x+ 4 root systemd-journal 86 Mar 29 11:54 journal

-rw-r--r-- 1 root root 292292 Mar 29 11:54 lastlog

-rw------- 1 root root 924 Mar 29 11:54 maillog

-rw------- 1 root root 487969 Mar 29 14:15 messages

drwxr-xr-x 8 root root 4096 Mar 29 12:04 pods

drwxr-xr-x 2 root root 18 Mar 29 12:00 sa

-rw------- 1 root root 16280 Mar 29 14:15 secure

-rw------- 1 root root 0 Mar 7 18:43 spooler

-rw------- 1 root root 0 Mar 7 18:43 tallylog

-rw-rw-r-- 1 root utmp 2304 Mar 29 11:55 wtmp

-rw------- 1 root root 6936 Mar 29 11:54 yum.log

- Data plane log sources (/var/log/journal for kubelet.service, kubeproxy.service, and docker.service): Kubernetes data plane logs

# Check the log location

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# ssh ec2-user@$N1 sudo tree /var/log/journal -L 1

/var/log/journal

├── ec23176a900a65d36ea89e5c2be53139

└── ec26de76cb85cc929ac531d1eb97342f

- Next, let's install CloudWatch Container observability, which is provided as an EKS add-on.

- It used to be provided as Fluent Bit, but it is now offered under the name CloudWatch Container observability.

# Install

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws eks create-addon --cluster-name $CLUSTER_NAME --addon-name amazon-cloudwatch-observability

{

"addon": {

"addonName": "amazon-cloudwatch-observability",

"clusterName": "myeks",

"status": "CREATING",

"addonVersion": "v1.4.0-eksbuild.1",

"health": {

"issues": []

},

"addonArn": "arn:aws:eks:ap-northeast-2:236747833953:addon/myeks/amazon-cloudwatch-observability/36c74549-3ca7-981f-7f40-bae0aa1bfdb2",

"createdAt": "2024-03-29T23:17:19.165000+09:00",

"modifiedAt": "2024-03-29T23:17:19.187000+09:00",

"tags": {}

}

}

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws eks list-addons --cluster-name myeks --output table

---------------------------------------

| ListAddons |

+-------------------------------------+

|| addons ||

|+-----------------------------------+|

|| amazon-cloudwatch-observability ||

|| aws-ebs-csi-driver ||

|| coredns ||

|| kube-proxy ||

|| vpc-cni ||

|+-----------------------------------+|
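
The `create-addon` response above still shows `CREATING`, so it can help to check the status before moving on. The sketch below wraps two AWS CLI calls, `aws eks describe-addon` and the `aws eks wait addon-active` waiter; the function names `addon_status` and `wait_for_addon` are hypothetical, and it assumes the CLI is already configured for the cluster's region.

```shell
# Print the status of an EKS add-on (for example CREATING or ACTIVE).
# Usage: addon_status <cluster-name> <addon-name>
addon_status() {
  aws eks describe-addon \
    --cluster-name "$1" \
    --addon-name "$2" \
    --query 'addon.status' \
    --output text
}

# Block until the add-on reports ACTIVE, then print its status.
# Usage: wait_for_addon <cluster-name> <addon-name>
wait_for_addon() {
  aws eks wait addon-active --cluster-name "$1" --addon-name "$2" &&
    addon_status "$1" "$2"
}
```

For this lab the call would look like `wait_for_addon "$CLUSTER_NAME" amazon-cloudwatch-observability`.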

# Verify the installation

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get ds,pod,cm,sa,amazoncloudwatchagent -n amazon-cloudwatch

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/cloudwatch-agent 3 3 3 3 3 <none> 13s

daemonset.apps/dcgm-exporter 0 0 0 0 0 <none> 17s

daemonset.apps/fluent-bit 3 3 3 3 3 <none> 17s

NAME READY STATUS RESTARTS AGE

pod/amazon-cloudwatch-observability-controller-manager-8544df7dk8c5 1/1 Running 0 17s

pod/cloudwatch-agent-gfzgp 1/1 Running 0 13s

pod/cloudwatch-agent-rcczp 1/1 Running 0 13s

pod/cloudwatch-agent-vkssd 1/1 Running 0 13s

pod/fluent-bit-gwfhs 1/1 Running 0 17s

pod/fluent-bit-lfzrb 1/1 Running 0 17s

pod/fluent-bit-xpspb 1/1 Running 0 17s

NAME DATA AGE

configmap/cloudwatch-agent-agent 1 13s

configmap/cwagent-clusterleader 0 2s

configmap/dcgm-exporter-config-map 2 19s

configmap/fluent-bit-config 5 19s

configmap/kube-root-ca.crt 1 20s

NAME SECRETS AGE

serviceaccount/amazon-cloudwatch-observability-controller-manager 0 19s

serviceaccount/cloudwatch-agent 0 19s

serviceaccount/dcgm-exporter-service-acct 0 19s

serviceaccount/default 0 20s

NAME MODE VERSION READY AGE IMAGE MANAGEMENT

amazoncloudwatchagent.cloudwatch.aws.amazon.com/cloudwatch-agent daemonset 0.0.0 17s managed

# Check the ClusterRoles

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl describe clusterrole cloudwatch-agent-role amazon-cloudwatch-observability-manager-role

Name: cloudwatch-agent-role

Labels: app.kubernetes.io/instance=amazon-cloudwatch-observability

app.kubernetes.io/managed-by=EKS

app.kubernetes.io/name=amazon-cloudwatch-observability

app.kubernetes.io/version=1.0.0

Annotations: <none>

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

configmaps [] [] [create get update]

events [] [] [create get]

nodes/stats [] [] [create get]

[/metrics] [] [get]

endpoints [] [] [list watch get]

namespaces [] [] [list watch get]

nodes/proxy [] [] [list watch get]

nodes [] [] [list watch get]

pods/logs [] [] [list watch get]

pods [] [] [list watch get]

daemonsets.apps [] [] [list watch get]

deployments.apps [] [] [list watch get]

replicasets.apps [] [] [list watch get]

statefulsets.apps [] [] [list watch get]

services [] [] [list watch]

jobs.batch [] [] [list watch]

[/metrics] [] [list]

[/metrics] [] [watch]

Name: amazon-cloudwatch-observability-manager-role

Labels: <none>

Annotations: <none>

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

configmaps [] [] [create delete get list patch update watch]

serviceaccounts [] [] [create delete get list patch update watch]

services [] [] [create delete get list patch update watch]

daemonsets.apps [] [] [create delete get list patch update watch]

deployments.apps [] [] [create delete get list patch update watch]

statefulsets.apps [] [] [create delete get list patch update watch]

ingresses.networking.k8s.io [] [] [create delete get list patch update watch]

routes.route.openshift.io/custom-host [] [] [create delete get list patch update watch]

routes.route.openshift.io [] [] [create delete get list patch update watch]

leases.coordination.k8s.io [] [] [create get list update]

events [] [] [create patch]

namespaces [] [] [get list patch update watch]

amazoncloudwatchagents.cloudwatch.aws.amazon.com [] [] [get list patch update watch]

instrumentations.cloudwatch.aws.amazon.com [] [] [get list patch update watch]

replicasets.apps [] [] [get list watch]

amazoncloudwatchagents.cloudwatch.aws.amazon.com/finalizers [] [] [get patch update]

amazoncloudwatchagents.cloudwatch.aws.amazon.com/status [] [] [get patch update]

# Check the ClusterRoleBindings

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl describe clusterrolebindings cloudwatch-agent-role-binding amazon-cloudwatch-observability-manager-rolebinding

Name: cloudwatch-agent-role-binding

Labels: <none>

Annotations: <none>

Role:

Kind: ClusterRole

Name: cloudwatch-agent-role

Subjects:

Kind Name Namespace

---- ---- ---------

ServiceAccount cloudwatch-agent amazon-cloudwatch

Name: amazon-cloudwatch-observability-manager-rolebinding

Labels: app.kubernetes.io/instance=amazon-cloudwatch-observability

app.kubernetes.io/managed-by=EKS

app.kubernetes.io/name=amazon-cloudwatch-observability

app.kubernetes.io/version=1.0.0

Annotations: <none>

Role:

Kind: ClusterRole

Name: amazon-cloudwatch-observability-manager-role

Subjects:

Kind Name Namespace

---- ---- ---------

ServiceAccount amazon-cloudwatch-observability-controller-manager amazon-cloudwatch
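
To confirm that a binding actually grants what the ClusterRole lists, one option is `kubectl auth can-i` with impersonation. This is only a sketch: `sa_can_i` is a hypothetical helper name, and it assumes your own user is allowed to impersonate ServiceAccounts.

```shell
# Ask the API server whether a ServiceAccount may perform an action.
# Usage: sa_can_i <namespace> <serviceaccount> <verb> <resource>
sa_can_i() {
  kubectl auth can-i "$3" "$4" \
    --as="system:serviceaccount:$1:$2"
}
```

For example, `sa_can_i amazon-cloudwatch cloudwatch-agent list pods` checks the `pods [list watch get]` rule shown in cloudwatch-agent-role.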

# Check the cloudwatch-agent configuration

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl describe cm cloudwatch-agent-agent -n amazon-cloudwatch

Name: cloudwatch-agent-agent

Namespace: amazon-cloudwatch

Labels: app.kubernetes.io/component=amazon-cloudwatch-agent

app.kubernetes.io/instance=amazon-cloudwatch.cloudwatch-agent

app.kubernetes.io/managed-by=amazon-cloudwatch-agent-operator

app.kubernetes.io/name=cloudwatch-agent-agent

app.kubernetes.io/part-of=amazon-cloudwatch-agent

app.kubernetes.io/version=1.300034.1b536

Annotations: <none>

Data

====

cwagentconfig.json:

----

{"agent":{"region":"ap-northeast-2"},"logs":{"metrics_collected":{"app_signals":{"hosted_in":"myeks"},"kubernetes":{"cluster_name":"myeks","enhanced_container_insights":true}}},"traces":{"traces_collected":{"app_signals":{}}}}

BinaryData

====

Events: <none>

# How the cloudwatch-agent pod collects data: look at the HostPath entries under Volumes.

Volumes:

otc-internal:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: cloudwatch-agent-agent

Optional: false

rootfs:

Type: HostPath (bare host directory volume)

Path: /

HostPathType:

dockersock:

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# ssh ec2-user@$N1 sudo tree /dev/disk

/dev/disk

├── by-id

│ ├── nvme-Amazon_Elastic_Block_Store_vol030a1af7185cbfe9a -> ../../nvme0n1

│ ├── nvme-Amazon_Elastic_Block_Store_vol030a1af7185cbfe9a-ns-1 -> ../../nvme0n1

│ ├── nvme-Amazon_Elastic_Block_Store_vol030a1af7185cbfe9a-ns-1-part1 -> ../../nvme0n1p1

│ ├── nvme-Amazon_Elastic_Block_Store_vol030a1af7185cbfe9a-ns-1-part128 -> ../../nvme0n1p128

│ ├── nvme-Amazon_Elastic_Block_Store_vol030a1af7185cbfe9a-part1 -> ../../nvme0n1p1

│ ├── nvme-Amazon_Elastic_Block_Store_vol030a1af7185cbfe9a-part128 -> ../../nvme0n1p128

│ ├── nvme-nvme.1d0f-766f6c3033306131616637313835636266653961-416d617a6f6e20456c617374696320426c6f636b2053746f7265-00000001 -> ../../nvme0n1

│ ├── nvme-nvme.1d0f-766f6c3033306131616637313835636266653961-416d617a6f6e20456c617374696320426c6f636b2053746f7265-00000001-part1 -> ../../nvme0n1p1

│ └── nvme-nvme.1d0f-766f6c3033306131616637313835636266653961-416d617a6f6e20456c617374696320426c6f636b2053746f7265-00000001-part128 -> ../../nvme0n1p128

├── by-label

│ └── \\x2f -> ../../nvme0n1p1

├── by-partlabel

│ ├── BIOS\\x20Boot\\x20Partition -> ../../nvme0n1p128

│ └── Linux -> ../../nvme0n1p1

├── by-partuuid

│ ├── b91b2315-af75-4e0a-8c54-f6dbf056af64 -> ../../nvme0n1p128

│ └── fc396e8a-3965-454a-82fa-5b3a9bb01732 -> ../../nvme0n1p1

├── by-path

│ ├── pci-0000:00:04.0-nvme-1 -> ../../nvme0n1

│ ├── pci-0000:00:04.0-nvme-1-part1 -> ../../nvme0n1p1

│ └── pci-0000:00:04.0-nvme-1-part128 -> ../../nvme0n1p128

└── by-uuid

└── efdc04ec-36ca-4425-a2ff-e752a5aeed29 -> ../../nvme0n1p1

6 directories, 18 files

# Check the Fluent Bit log INPUT/FILTER/OUTPUT configuration

## Configuration sections: application-log.conf, dataplane-log.conf, fluent-bit.conf, host-log.conf, parsers.conf

...

application-log.conf:

----

[INPUT]

Name tail

Tag application.*

Exclude_Path /var/log/containers/cloudwatch-agent*, /var/log/containers/fluent-bit*, /var/log/containers/aws-node*, /var/log/containers/kube-proxy*

Path /var/log/containers/*.log

multiline.parser docker, cri

DB /var/fluent-bit/state/flb_container.db

Mem_Buf_Limit 50MB

Skip_Long_Lines On

Refresh_Interval 10

Rotate_Wait 30

storage.type filesystem

Read_from_Head ${READ_FROM_HEAD}

[FILTER]

Name kubernetes

Match application.*

Kube_URL https://kubernetes.default.svc:443

Kube_Tag_Prefix application.var.log.containers.

Merge_Log On

Merge_Log_Key log_processed

K8S-Logging.Parser On

K8S-Logging.Exclude Off

Labels Off

Annotations Off

Use_Kubelet On

Kubelet_Port 10250

Buffer_Size 0

[OUTPUT]

Name cloudwatch_logs

Match application.*

region ${AWS_REGION}

log_group_name /aws/containerinsights/${CLUSTER_NAME}/application

log_stream_prefix ${HOST_NAME}-

auto_create_group true

extra_user_agent container-insights
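
To make the configuration above easier to follow, here is a small sketch of how the names fit together. With `Tag application.*`, the tail input replaces `*` with the file path, turning slashes into dots. `Kube_Tag_Prefix` is then stripped so the kubernetes filter can read the file name. The `cloudwatch_logs` output appends the tag to `log_stream_prefix` to build the log stream name. The function names are hypothetical, and the sample file name in the usage note is made up for illustration.

```shell
# Derive the Fluent Bit tag for a tailed file when Tag is "application.*":
# the "*" is replaced by the file path with "/" turned into ".".
tag_for_path() {
  path="${1#/}"    # drop the leading slash
  printf 'application.%s\n' "$(printf '%s' "$path" | tr '/' '.')"
}

# Strip Kube_Tag_Prefix to recover the file name the kubernetes filter parses.
strip_kube_tag_prefix() {
  printf '%s\n' "${1#application.var.log.containers.}"
}

# Compose the CloudWatch Logs stream name: log_stream_prefix ("${HOST_NAME}-")
# followed by the tag.
# Usage: stream_name <host-name> <tag>
stream_name() {
  printf '%s-%s\n' "$1" "$2"
}
```

For instance, `tag_for_path /var/log/containers/nginx_default_nginx-abc.log` yields `application.var.log.containers.nginx_default_nginx-abc.log`, which is why the log streams in the application log group are named after the node followed by that tag.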

# How the Fluent Bit pod collects logs: HostPath entries under Volumes

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl describe -n amazon-cloudwatch ds fluent-bit

Name: fluent-bit

Selector: k8s-app=fluent-bit

Node-Selector: <none>

Labels: k8s-app=fluent-bit

kubernetes.io/cluster-service=true

version=v1

Annotations: deprecated.daemonset.template.generation: 1

Desired Number of Nodes Scheduled: 3

Current Number of Nodes Scheduled: 3

Number of Nodes Scheduled with Up-to-date Pods: 3

Number of Nodes Scheduled with Available Pods: 3

Number of Nodes Misscheduled: 0

Pods Status: 3 Running / 0 Waiting / 0 Succeeded / 0 Failed

Pod Template:

Labels: k8s-app=fluent-bit

kubernetes.io/cluster-service=true

version=v1

Annotations: checksum/config: 1356b1d704d353a90c127f6dad453991f51d88ae994a7583c1064e0c883d898e

Service Account: cloudwatch-agent

Containers:

fluent-bit:

Image: 602401143452.dkr.ecr.ap-northeast-2.amazonaws.com/eks/observability/aws-for-fluent-bit:2.32.0.20240304

Port: <none>

Host Port: <none>

Limits:

cpu: 500m

memory: 250Mi

Requests:

cpu: 50m

memory: 25Mi

Environment:

AWS_REGION: ap-northeast-2

CLUSTER_NAME: myeks

READ_FROM_HEAD: Off

READ_FROM_TAIL: On

HOST_NAME: (v1:spec.nodeName)

HOSTNAME: (v1:metadata.name)

CI_VERSION: k8s/1.3.17

Mounts:

/fluent-bit/etc/ from fluent-bit-config (rw)

/run/log/journal from runlogjournal (ro)

/var/fluent-bit/state from fluentbitstate (rw)

/var/lib/docker/containers from varlibdockercontainers (ro)

/var/log from varlog (ro)

/var/log/dmesg from dmesg (ro)

Volumes:

fluentbitstate:

Type: HostPath (bare host directory volume)

Path: /var/fluent-bit/state

HostPathType:

varlog:

Type: HostPath (bare host directory volume)

Path: /var/log

HostPathType:

varlibdockercontainers:

Type: HostPath (bare host directory volume)

Path: /var/lib/docker/containers

HostPathType:

fluent-bit-config:

Type: ConfigMap (a volume populated by a ConfigMap)

Name: fluent-bit-config

Optional: false

runlogjournal:

Type: HostPath (bare host directory volume)

Path: /run/log/journal

HostPathType:

dmesg:

Type: HostPath (bare host directory volume)

Path: /var/log/dmesg

HostPathType:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 7m26s daemonset-controller Created pod: fluent-bit-lfzrb

Normal SuccessfulCreate 7m26s daemonset-controller Created pod: fluent-bit-xpspb

Normal SuccessfulCreate 7m26s daemonset-controller Created pod: fluent-bit-gwfhs

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# ssh ec2-user@$N1 sudo tree /var/log

/var/log

├── amazon

│ └── ssm

│ ├── amazon-ssm-agent.log

│ └── audits

│ └── amazon-ssm-agent-audit-2024-03-29

├── audit

│ └── audit.log

├── aws-routed-eni

│ ├── ebpf-sdk.log

│ ├── egress-v6-plugin.log

│ ├── ipamd.log

│ ├── network-policy-agent.log

│ └── plugin.log

├── boot.log

├── btmp

├── chrony

│ ├── measurements.log

│ ├── statistics.log

│ └── tracking.log

├── cloud-init.log

├── cloud-init-output.log

├── containers

│ ├── aws-load-balancer-controller-5f7b66cdd5-4xdsv_kube-system_aws-load-balancer-controller-b091ee305b084902ca8805a68f961d637cd683ce36536c9c85ddd47ad07b78b4.log -> /var/log/pods/kube-system_aws-load-balancer-controller-5f7b66cdd5-4xdsv_f1e0e25d-b2f1-432b-b95c-de8d529dc902/aws-load-balancer-controller/0.log

│ ├── aws-node-pzbwp_kube-system_aws-eks-nodeagent-f42cd0529e17cf3ec511ac36c8922d110116d1f9002997b8a3f991780de39653.log -> /var/log/pods/kube-system_aws-node-pzbwp_2495d0b9-52aa-4878-a2de-1fee29bbef82/aws-eks-nodeagent/0.log

│ ├── aws-node-pzbwp_kube-system_aws-node-c6b0004c77492452400d420c8d6cafcf7e9716f3f3e875f18864968dc4a9bb36.log -> /var/log/pods/kube-system_aws-node-pzbwp_2495d0b9-52aa-4878-a2de-1fee29bbef82/aws-node/0.log

│ ├── aws-node-pzbwp_kube-system_aws-vpc-cni-init-0198d933927985b5a846461c6354dd4c1112641cff53bfb710fe85a8cfc8f3e1.log -> /var/log/pods/kube-system_aws-node-pzbwp_2495d0b9-52aa-4878-a2de-1fee29bbef82/aws-vpc-cni-init/0.log

│ ├── cloudwatch-agent-rcczp_amazon-cloudwatch_otc-container-1aae21b804fdfa289c449a60a00020aca34aa11c611ce65e76fb02e7664c9917.log -> /var/log/pods/amazon-cloudwatch_cloudwatch-agent-rcczp_acbc437e-fed1-4ef2-b734-a38b7d4d2df0/otc-container/0.log

│ ├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-attacher-11a6205f6aa64a54bc2e33be2fecc5648be28d942b9d68d0853428bb41c2b11d.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-attacher/0.log

│ ├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-provisioner-95b2685d171f085b3b9ab38de06401c3ad01fd55d05b3448139a9485f8431c53.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-provisioner/0.log

│ ├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-resizer-451fd48171a7c45731316f37d87d412f105b701cc0e8c57a551238ff8f032c20.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-resizer/0.log

│ ├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_csi-snapshotter-4b9c4791813c0bee494c60a97fe9021eb38dd12fa6c48c9ab9bb99a5b8950690.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/csi-snapshotter/0.log

│ ├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_ebs-plugin-bd292c0666d0041864af581ae565c12370512fe899f3e5e7f881b28febb6f2cd.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/ebs-plugin/0.log

│ ├── ebs-csi-controller-7df5f479d4-2fkct_kube-system_liveness-probe-3e93634d93cfa5fdae86297735b55cd2cee1b8ed095727ea43c4493f87d3e7ac.log -> /var/log/pods/kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de/liveness-probe/0.log

│ ├── ebs-csi-node-ppjzz_kube-system_ebs-plugin-10fee86a44b7b0ac04623a6fe0f571561908b4bf1ca182b0ec1c8414cba6ad69.log -> /var/log/pods/kube-system_ebs-csi-node-ppjzz_ae69b9b9-25f7-4f53-8c4b-5413d229fe73/ebs-plugin/0.log

│ ├── ebs-csi-node-ppjzz_kube-system_liveness-probe-adffdf4ea27a303a52e151950586bcbb032c71297e56a2eb4e18e0f23f677eb1.log -> /var/log/pods/kube-system_ebs-csi-node-ppjzz_ae69b9b9-25f7-4f53-8c4b-5413d229fe73/liveness-probe/0.log

│ ├── ebs-csi-node-ppjzz_kube-system_node-driver-registrar-7a296ed4cc4883a5e8ccc389151cb3f30b744e520af3dfc79b775bb608e05624.log -> /var/log/pods/kube-system_ebs-csi-node-ppjzz_ae69b9b9-25f7-4f53-8c4b-5413d229fe73/node-driver-registrar/0.log

│ ├── external-dns-7fd77dcbc-2zrwl_kube-system_external-dns-7cabd2fd2895ffd14c5e4e1844f96b9da5a883ede5e494034333afdf89d84b8f.log -> /var/log/pods/kube-system_external-dns-7fd77dcbc-2zrwl_2bb2e013-cef2-45ec-8ebc-0ed85b244f5c/external-dns/0.log

│ ├── fluent-bit-gwfhs_amazon-cloudwatch_fluent-bit-8e74de5398fdcd84b002cd767f12bb2ad141b79cc154f4552a81458b0f627133.log -> /var/log/pods/amazon-cloudwatch_fluent-bit-gwfhs_80072808-30b3-4b99-9a54-912897e665ae/fluent-bit/0.log

│ └── kube-proxy-wqx6h_kube-system_kube-proxy-487cf8f577d839b3dfb1a33f6566acfb6c622b3eade5b24163cca3e53018c540.log -> /var/log/pods/kube-system_kube-proxy-wqx6h_1e2778dc-5dc6-4df2-bc40-a2e235047e3d/kube-proxy/0.log

├── cron

├── dmesg

├── dmesg.old

├── grubby

├── grubby_prune_debug

├── journal

│ ├── ec23176a900a65d36ea89e5c2be53139

│ │ └── system.journal

│ └── ec26de76cb85cc929ac531d1eb97342f

│ ├── system.journal

│ └── user-1000.journal

├── lastlog

├── maillog

├── messages

├── pods

│ ├── amazon-cloudwatch_cloudwatch-agent-rcczp_acbc437e-fed1-4ef2-b734-a38b7d4d2df0

│ │ └── otc-container

│ │ └── 0.log

│ ├── amazon-cloudwatch_fluent-bit-gwfhs_80072808-30b3-4b99-9a54-912897e665ae

│ │ └── fluent-bit

│ │ └── 0.log

│ ├── kube-system_aws-load-balancer-controller-5f7b66cdd5-4xdsv_f1e0e25d-b2f1-432b-b95c-de8d529dc902

│ │ └── aws-load-balancer-controller

│ │ └── 0.log

│ ├── kube-system_aws-node-pzbwp_2495d0b9-52aa-4878-a2de-1fee29bbef82

│ │ ├── aws-eks-nodeagent

│ │ │ └── 0.log

│ │ ├── aws-node

│ │ │ └── 0.log

│ │ └── aws-vpc-cni-init

│ │ └── 0.log

│ ├── kube-system_ebs-csi-controller-7df5f479d4-2fkct_5b9f84ef-7368-4830-a8f3-81da5a90a3de

│ │ ├── csi-attacher

│ │ │ └── 0.log

│ │ ├── csi-provisioner

│ │ │ └── 0.log

│ │ ├── csi-resizer

│ │ │ └── 0.log

│ │ ├── csi-snapshotter

│ │ │ └── 0.log

│ │ ├── ebs-plugin

│ │ │ └── 0.log

│ │ └── liveness-probe

│ │ └── 0.log

│ ├── kube-system_ebs-csi-node-ppjzz_ae69b9b9-25f7-4f53-8c4b-5413d229fe73

│ │ ├── ebs-plugin

│ │ │ └── 0.log

│ │ ├── liveness-probe

│ │ │ └── 0.log

│ │ └── node-driver-registrar

│ │ └── 0.log

│ ├── kube-system_external-dns-7fd77dcbc-2zrwl_2bb2e013-cef2-45ec-8ebc-0ed85b244f5c

│ │ └── external-dns

│ │ └── 0.log

│ └── kube-system_kube-proxy-wqx6h_1e2778dc-5dc6-4df2-bc40-a2e235047e3d

│ └── kube-proxy

│ └── 0.log

├── sa

│ └── sa29

├── secure

├── spooler

├── tallylog

├── wtmp

└── yum.log

37 directories, 66 files

Risk: if the host's docker.sock is mounted into a Pod and a malicious user manages to install the docker client in that Pod, they can use the mounted docker.sock to send commands to the host's Docker daemon. (This is possible because Docker uses a client-server architecture.)

- This can also be regarded as a form of container escape.

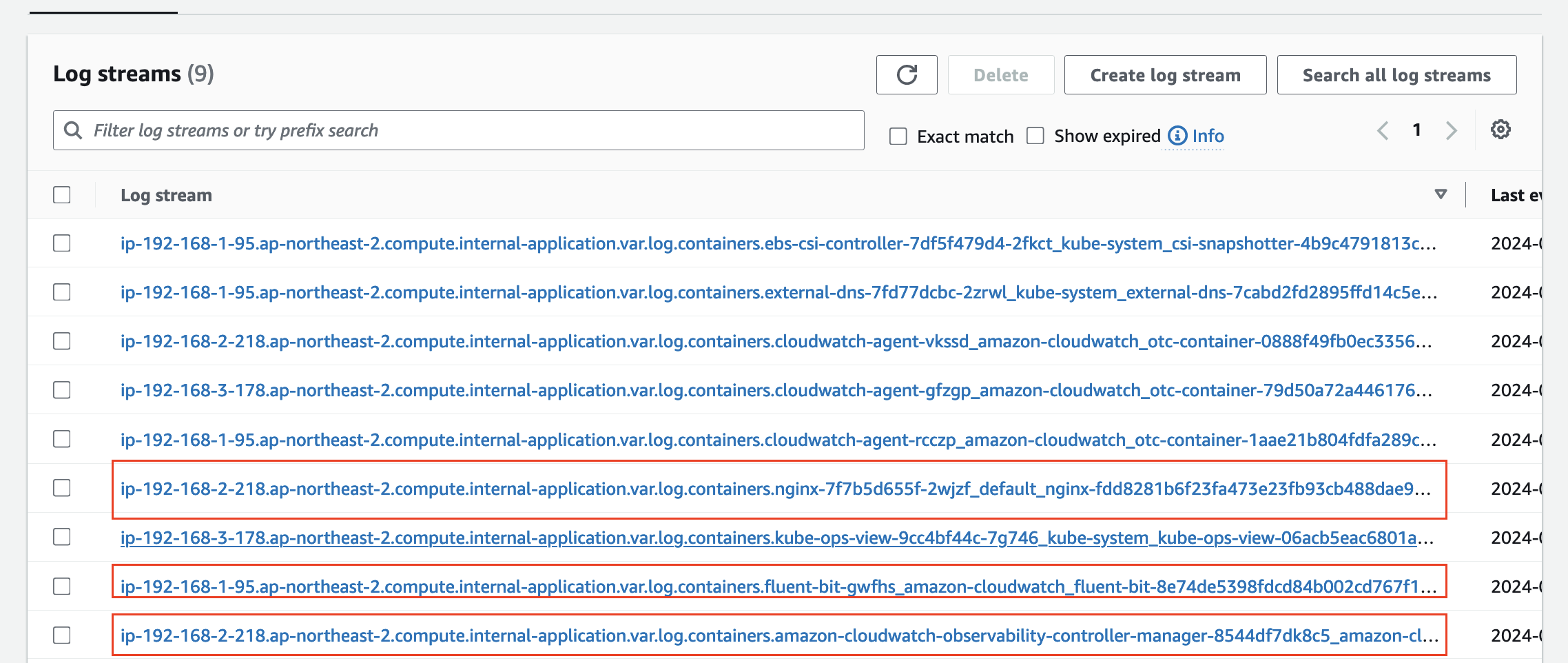
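
Given the docker.sock risk described above, it may be worth auditing which pods mount it. The following is a rough sketch, not a complete security check: it only looks at hostPath volumes whose path ends in `docker.sock`, and `pods_mounting_docker_sock` is a hypothetical helper name.

```shell
# List "<namespace>/<pod>" for every pod that has a hostPath volume
# whose path ends in docker.sock.
pods_mounting_docker_sock() {
  kubectl get pods -A -o jsonpath='{range .items[*]}{.metadata.namespace}{"/"}{.metadata.name}{" "}{range .spec.volumes[*]}{.hostPath.path}{" "}{end}{"\n"}{end}' |
    awk '{ for (i = 2; i <= NF; i++) if ($i ~ /docker\.sock$/) { print $1; break } }'
}
```

The jsonpath template prints one line per pod: the pod identifier followed by the hostPath of each volume (empty for non-hostPath volumes). The awk filter keeps only the pods where one of those paths is a docker.sock.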

Let's look at the CloudWatch Agent log groups.

- Let's look at /aws/containerinsights/myeks/application, one of the log groups.

- e.g. nginx, fluent-bit, ...

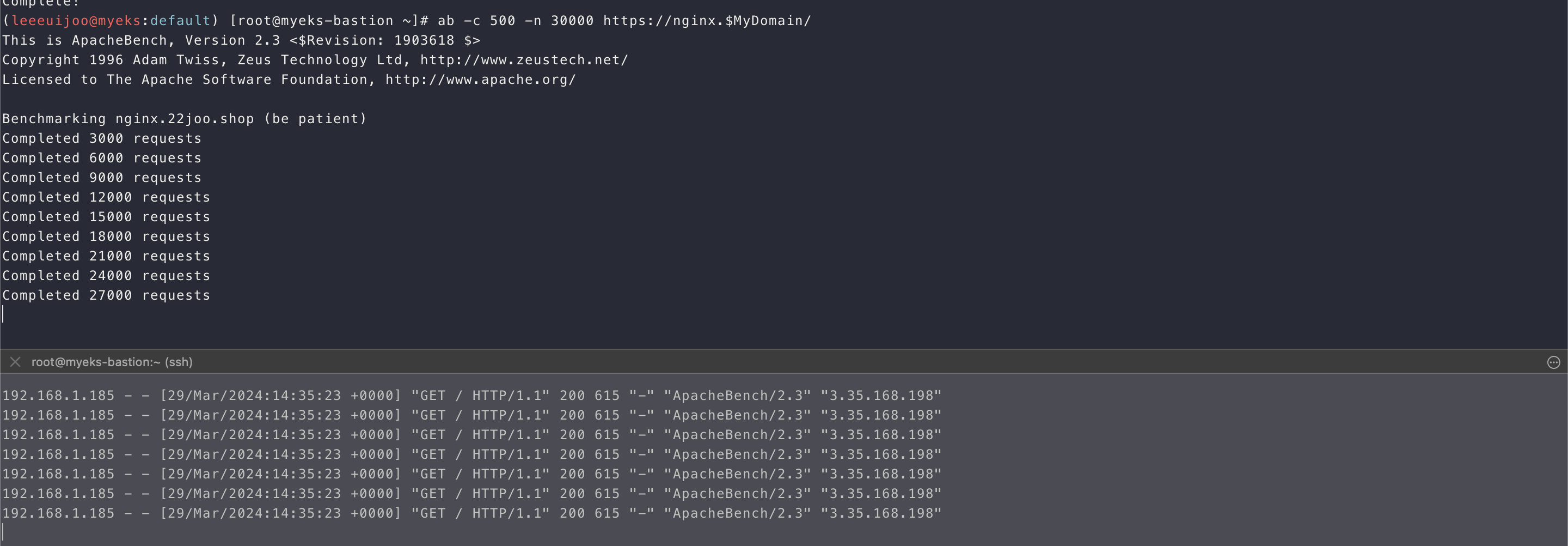

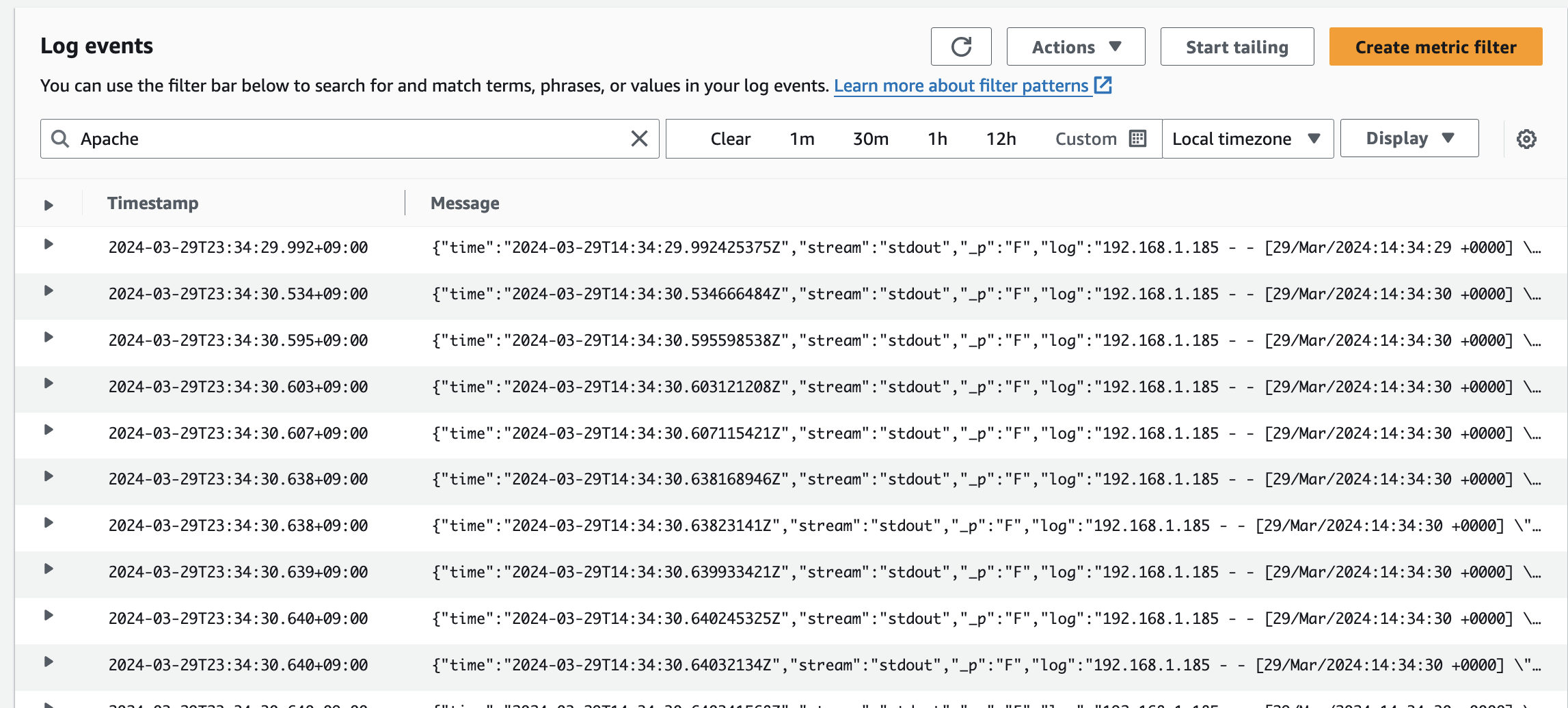

- Let's put load on the nginx service using the ApacheBench (ab) tool.

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# yum install -y httpd

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# ab -c 500 -n 30000 https://nginx.$MyDomain/

This is ApacheBench, Version 2.3 <$Revision: 1903618 $>

Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/

Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking nginx.22joo.shop (be patient)

Completed 3000 requests

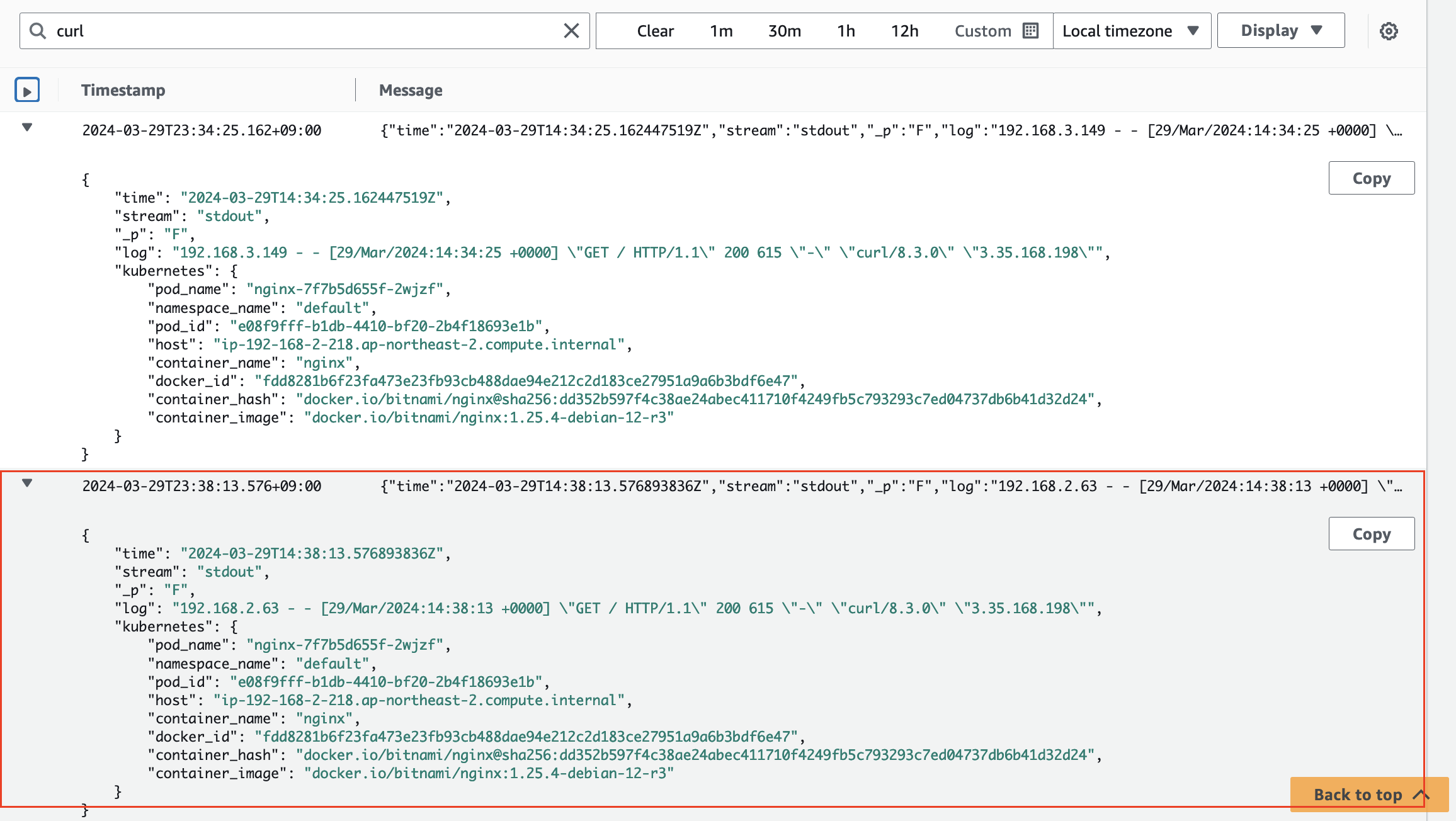

Completed 6000 requests- nignx pod log 모니터링

- nginx log stream 을 검색합니다.

- Domain Curl

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s https://nginx.$MyDomain

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

- Check the nginx log stream

- The requests we just sent to the Pod are recorded in the log.

- ApacheBench log entries

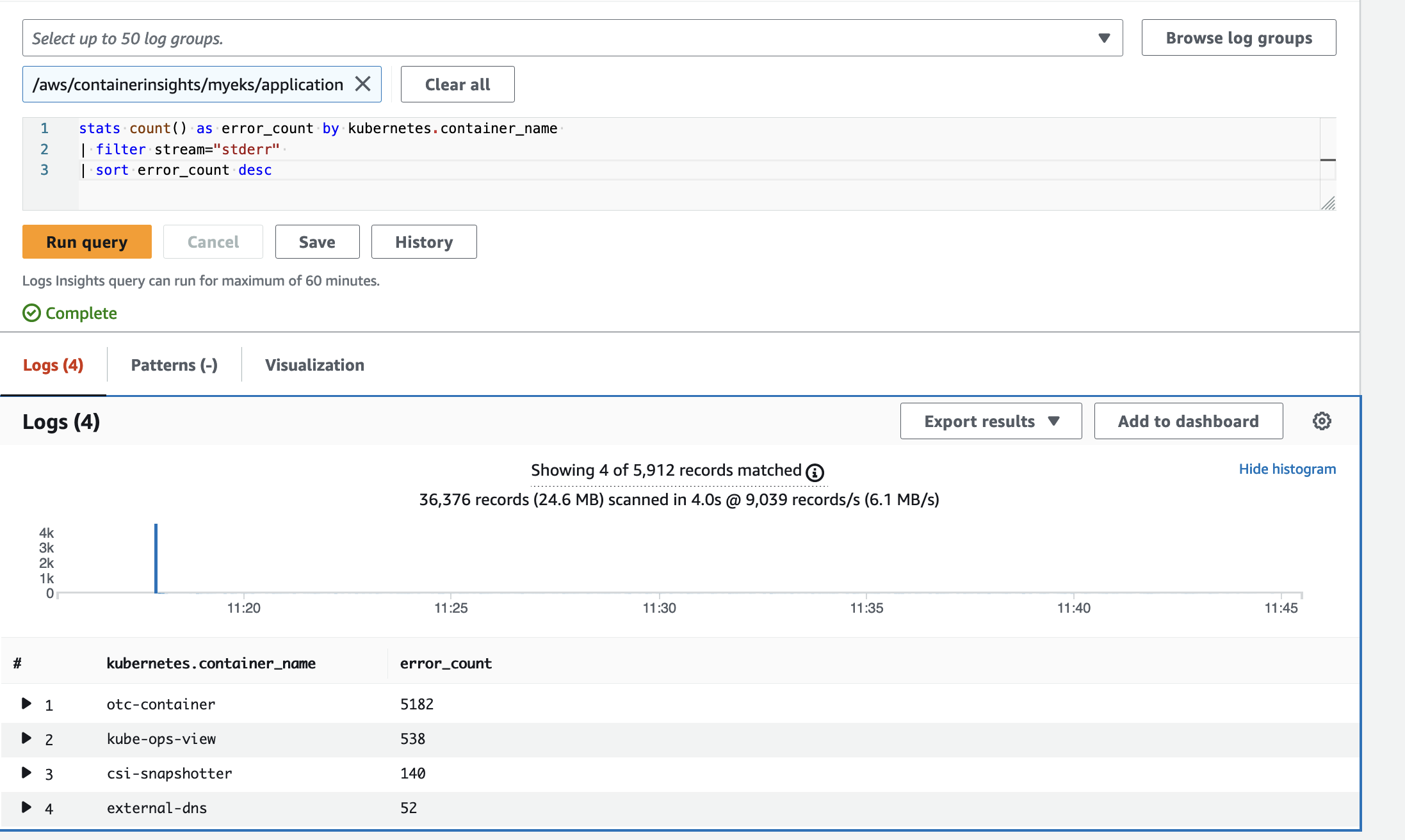

- Logs Insights

- Query that counts the errors raised by each application

stats count() as error_count by kubernetes.container_name

| filter stream="stderr"

| sort error_count desc
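
The same query can also be run from the bastion host instead of the console. The sketch below uses `aws logs start-query` and `aws logs get-query-results`; the helper name `run_insights_query`, the one-hour window, and the fixed wait are all assumptions made for illustration (a real script would poll until the query status is Complete).

```shell
# Run a Logs Insights query over the last hour and print the results.
# Usage: run_insights_query <log-group> <query-string> [wait-seconds]
run_insights_query() {
  end_time=$(date +%s)
  start_time=$((end_time - 3600))
  query_id=$(aws logs start-query \
    --log-group-name "$1" \
    --start-time "$start_time" \
    --end-time "$end_time" \
    --query-string "$2" \
    --query 'queryId' --output text) || return 1
  sleep "${3:-5}"   # queries run asynchronously; give this one time to finish
  aws logs get-query-results --query-id "$query_id"
}
```

For example: `run_insights_query "/aws/containerinsights/$CLUSTER_NAME/application" 'stats count() as error_count by kubernetes.container_name | filter stream="stderr" | sort error_count desc'`.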

- Other Logs Insights queries (samples)

# All Kubelet errors/warning logs for a given EKS worker node

# Log group to select: /aws/containerinsights/<CLUSTER_NAME>/dataplane

fields @timestamp, @message, ec2_instance_id

| filter message =~ /.*(E|W)[0-9]{4}.*/ and ec2_instance_id="<YOUR INSTANCE ID>"

| sort @timestamp desc

# Kubelet errors/warning count per EKS worker node in the cluster

# Log group to select: /aws/containerinsights/<CLUSTER_NAME>/dataplane

fields @timestamp, @message, ec2_instance_id

| filter message =~ /.*(E|W)[0-9]{4}.*/

| stats count(*) as error_count by ec2_instance_id

# performance log group

# Log group to select: /aws/containerinsights/<CLUSTER_NAME>/performance

# Average CPU utilization per node

STATS avg(node_cpu_utilization) as avg_node_cpu_utilization by NodeName

| SORT avg_node_cpu_utilization DESC

# Container restart count per pod

STATS avg(number_of_container_restarts) as avg_number_of_container_restarts by PodName

| SORT avg_number_of_container_restarts DESC

# Comparison of requested vs. running Pods

fields @timestamp, @message

| sort @timestamp desc

| filter Type="Pod"

| stats min(pod_number_of_containers) as requested, min(pod_number_of_running_containers) as running, ceil(avg(pod_number_of_containers-pod_number_of_running_containers)) as pods_missing by kubernetes.pod_name

| sort pods_missing desc

# Cluster node failure count

stats avg(cluster_failed_node_count) as CountOfNodeFailures

| filter Type="Cluster"

| sort @timestamp desc

# CPU usage by container

stats pct(container_cpu_usage_total, 50) as CPUPercMedian by kubernetes.container_name

| filter Type="Container"

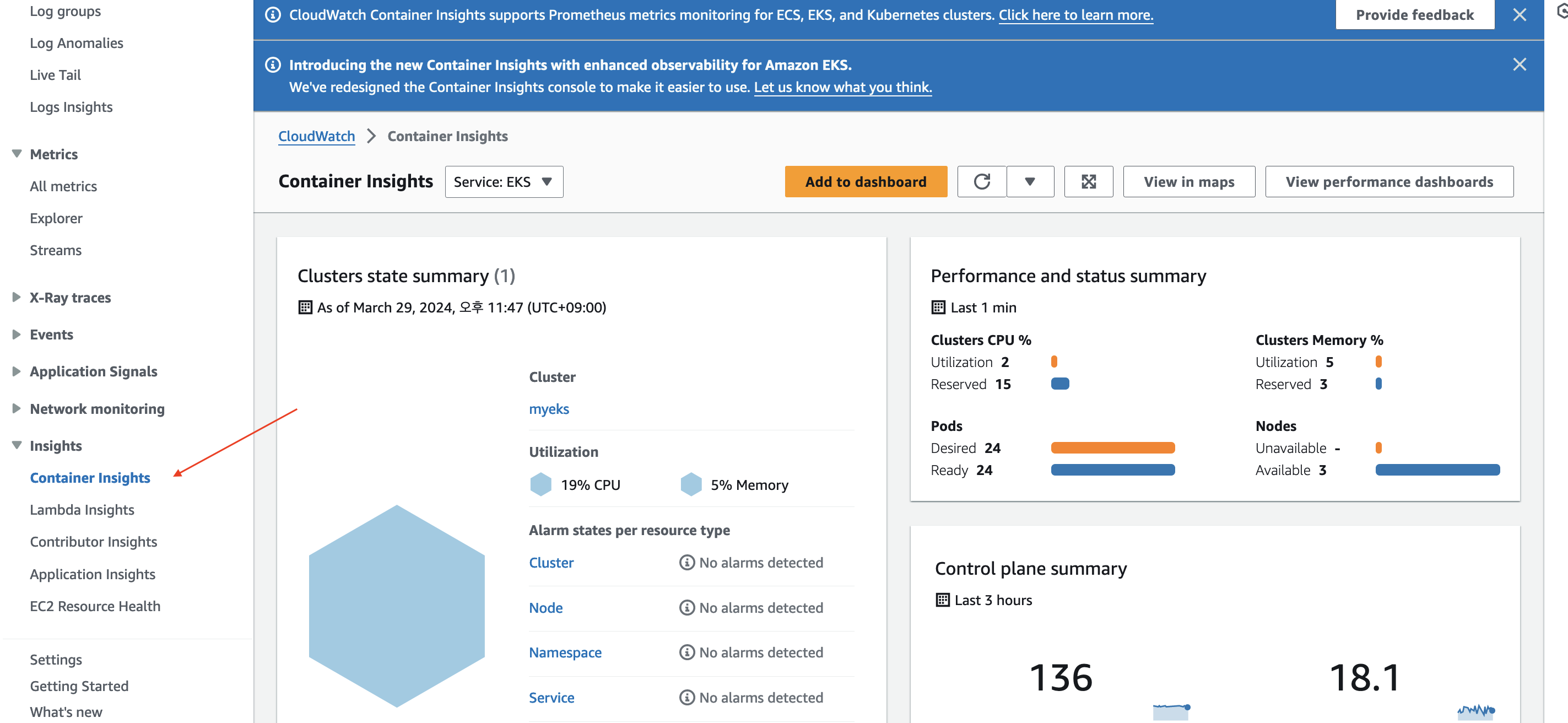

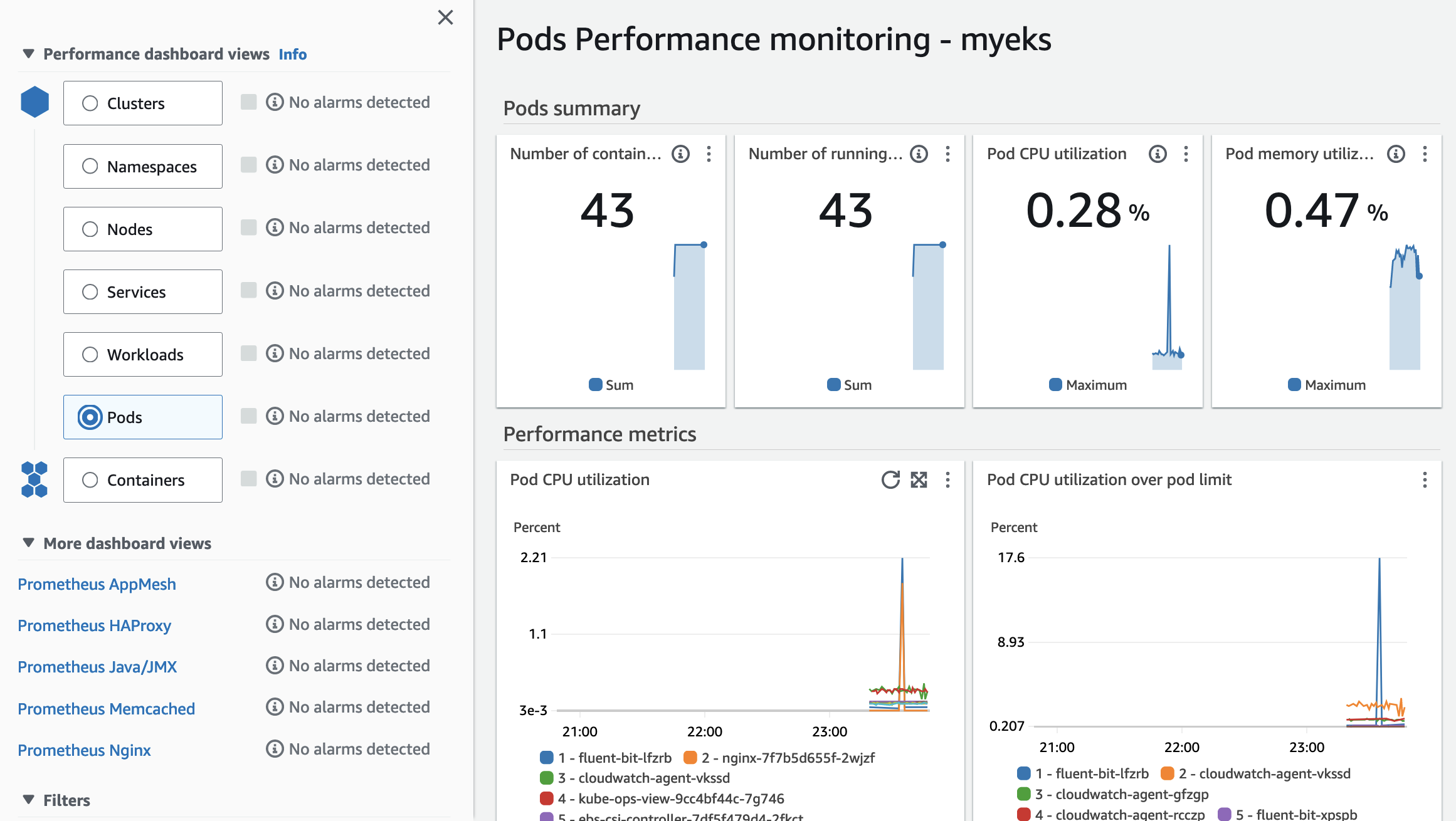

| sort CPUPercMedian desc- CloudWatch Container observability 애드온을 설치했기 때문에 Container Insights 를 볼 수 있게 됩니다.

- 현재 EKS 가 어떤 퍼포먼스를 내고 있는지 확인 할 수도 있습니다.

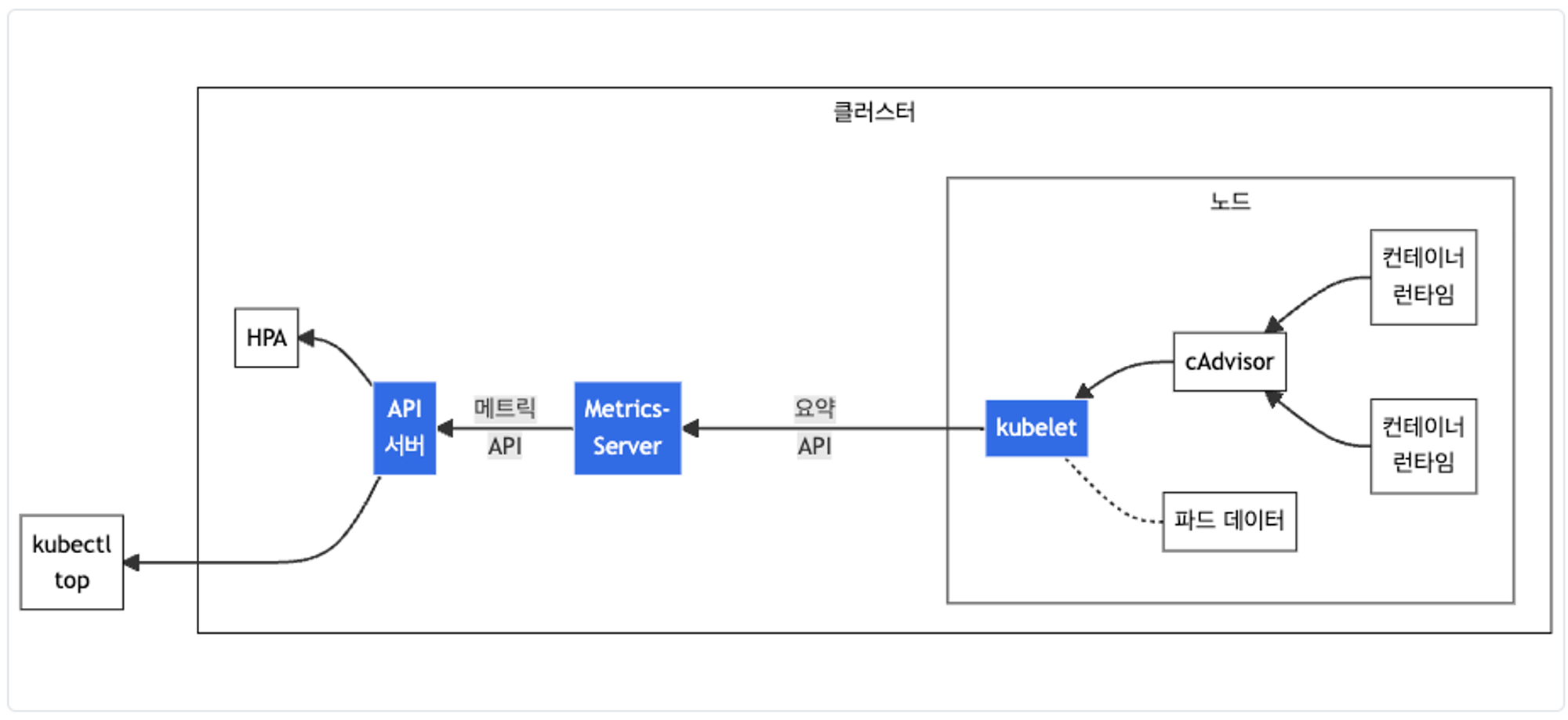

3. Metrics-server & kwatch & botkube

- Metrics-server: a cluster add-on component that collects and aggregates resource metrics from the kubelets

- cAdvisor: a daemon built into the kubelet that collects, aggregates, and exposes container metrics

# Install

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

# Check metrics-server: metrics are fetched via cAdvisor at 15-second intervals

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pod -n kube-system -l k8s-app=metrics-server

NAME READY STATUS RESTARTS AGE

metrics-server-6d94bc8694-xtl5w 1/1 Running 0 41s

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl api-resources | grep metrics

nodes metrics.k8s.io/v1beta1 false NodeMetrics

pods metrics.k8s.io/v1beta1 true PodMetrics

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get apiservices |egrep '(AVAILABLE|metrics)'

NAME SERVICE AVAILABLE AGE

v1beta1.metrics.k8s.io kube-system/metrics-server True 45s

# Check node metrics

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

ip-192-168-1-95.ap-northeast-2.compute.internal 92m 2% 787Mi 5%

ip-192-168-2-218.ap-northeast-2.compute.internal 65m 1% 755Mi 5%

ip-192-168-3-178.ap-northeast-2.compute.internal 64m 1% 686Mi 4%

# Check pod metrics

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl top pod -A

NAMESPACE NAME CPU(cores) MEMORY(bytes)

amazon-cloudwatch amazon-cloudwatch-observability-controller-manager-8544df7dk8c5 1m 16Mi

amazon-cloudwatch cloudwatch-agent-gfzgp 4m 38Mi

amazon-cloudwatch cloudwatch-agent-rcczp 4m 38Mi

amazon-cloudwatch cloudwatch-agent-vkssd 4m 74Mi

amazon-cloudwatch fluent-bit-gwfhs 2m 27Mi

amazon-cloudwatch fluent-bit-lfzrb 2m 26Mi

amazon-cloudwatch fluent-bit-xpspb 1m 26Mi

default nginx-7f7b5d655f-2wjzf 1m 4Mi

kube-system aws-load-balancer-controller-5f7b66cdd5-4xdsv 3m 29Mi

kube-system aws-load-balancer-controller-5f7b66cdd5-7tdkg 1m 23Mi

kube-system aws-node-mntqr 5m 60Mi

kube-system aws-node-pzbwp 5m 61Mi

kube-system aws-node-qtwjb 5m 61Mi

kube-system coredns-55474bf7b9-5wrr7 3m 15Mi

kube-system ebs-csi-controller-7df5f479d4-2fkct 6m 62Mi

kube-system ebs-csi-controller-7df5f479d4-7242q 3m 52Mi

kube-system ebs-csi-node-285rr 1m 22Mi

kube-system ebs-csi-node-ppjzz 1m 21Mi

kube-system ebs-csi-node-r5qrs 1m 22Mi

kube-system external-dns-7fd77dcbc-2zrwl 1m 19Mi

kube-system kube-ops-view-9cc4bf44c-7g746 14m 34Mi

kube-system kube-proxy-cqqcv 1m 12Mi

kube-system kube-proxy-rlkls 1m 12Mi

kube-system kube-proxy-wqx6h 1m 12Mi

kube-system metrics-server-6d94bc8694-xtl5w 4m 17Mi
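The `kubectl top pod -A` listing above can also be rolled up per namespace. The following is a minimal sketch, not part of the original walkthrough: `sum_memory_by_namespace` is a hypothetical helper, it assumes header-less rows in the column order NAMESPACE, NAME, CPU, MEMORY with memory reported in Mi, and a few rows copied from the output above stand in for a live cluster.

```shell
# Hypothetical helper: sum the MEMORY column per namespace.
# Assumes rows shaped like "NAMESPACE NAME CPU MEMORY" with memory in Mi.
sum_memory_by_namespace() {
  awk '{ gsub(/Mi$/, "", $4); total[$1] += $4 }
       END { for (ns in total) printf "%s %dMi\n", ns, total[ns] }' | sort
}

# Sample rows copied from the listing above (illustration only).
sample='amazon-cloudwatch cloudwatch-agent-gfzgp 4m 38Mi
amazon-cloudwatch fluent-bit-gwfhs 2m 27Mi
default nginx-7f7b5d655f-2wjzf 1m 4Mi
kube-system coredns-55474bf7b9-5wrr7 3m 15Mi
kube-system kube-ops-view-9cc4bf44c-7g746 14m 34Mi'

printf '%s\n' "$sample" | sum_memory_by_namespace
```

On a live cluster the same function could presumably be fed with `kubectl top pod -A --no-headers | sum_memory_by_namespace`.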

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl top pod -n kube-system --sort-by='cpu'

NAME CPU(cores) MEMORY(bytes)

kube-ops-view-9cc4bf44c-7g746 11m 34Mi

aws-node-qtwjb 6m 61Mi

ebs-csi-controller-7df5f479d4-2fkct 6m 62Mi

metrics-server-6d94bc8694-xtl5w 5m 18Mi

aws-node-pzbwp 4m 61Mi

aws-node-mntqr 4m 60Mi

coredns-55474bf7b9-5wrr7 3m 15Mi

aws-load-balancer-controller-5f7b66cdd5-4xdsv 3m 29Mi

ebs-csi-controller-7df5f479d4-7242q 3m 52Mi

ebs-csi-node-ppjzz 1m 21Mi

ebs-csi-node-r5qrs 1m 22Mi

external-dns-7fd77dcbc-2zrwl 1m 19Mi

aws-load-balancer-controller-5f7b66cdd5-7tdkg 1m 23Mi

kube-proxy-cqqcv 1m 12Mi

kube-proxy-rlkls 1m 12Mi

kube-proxy-wqx6h 1m 12Mi

ebs-csi-node-285rr 1m 22Mi

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl top pod -n kube-system --sort-by='memory'

NAME CPU(cores) MEMORY(bytes)

ebs-csi-controller-7df5f479d4-2fkct 6m 62Mi

aws-node-qtwjb 6m 61Mi

aws-node-pzbwp 4m 61Mi

aws-node-mntqr 4m 60Mi

ebs-csi-controller-7df5f479d4-7242q 3m 52Mi

kube-ops-view-9cc4bf44c-7g746 11m 34Mi

aws-load-balancer-controller-5f7b66cdd5-4xdsv 3m 29Mi

aws-load-balancer-controller-5f7b66cdd5-7tdkg 1m 23Mi

ebs-csi-node-r5qrs 1m 22Mi

ebs-csi-node-285rr 1m 22Mi

ebs-csi-node-ppjzz 1m 21Mi

external-dns-7fd77dcbc-2zrwl 1m 19Mi

metrics-server-6d94bc8694-xtl5w 5m 18Mi

coredns-55474bf7b9-5wrr7 3m 15Mi

kube-proxy-cqqcv 1m 12Mi

kube-proxy-rlkls 1m 12Mi

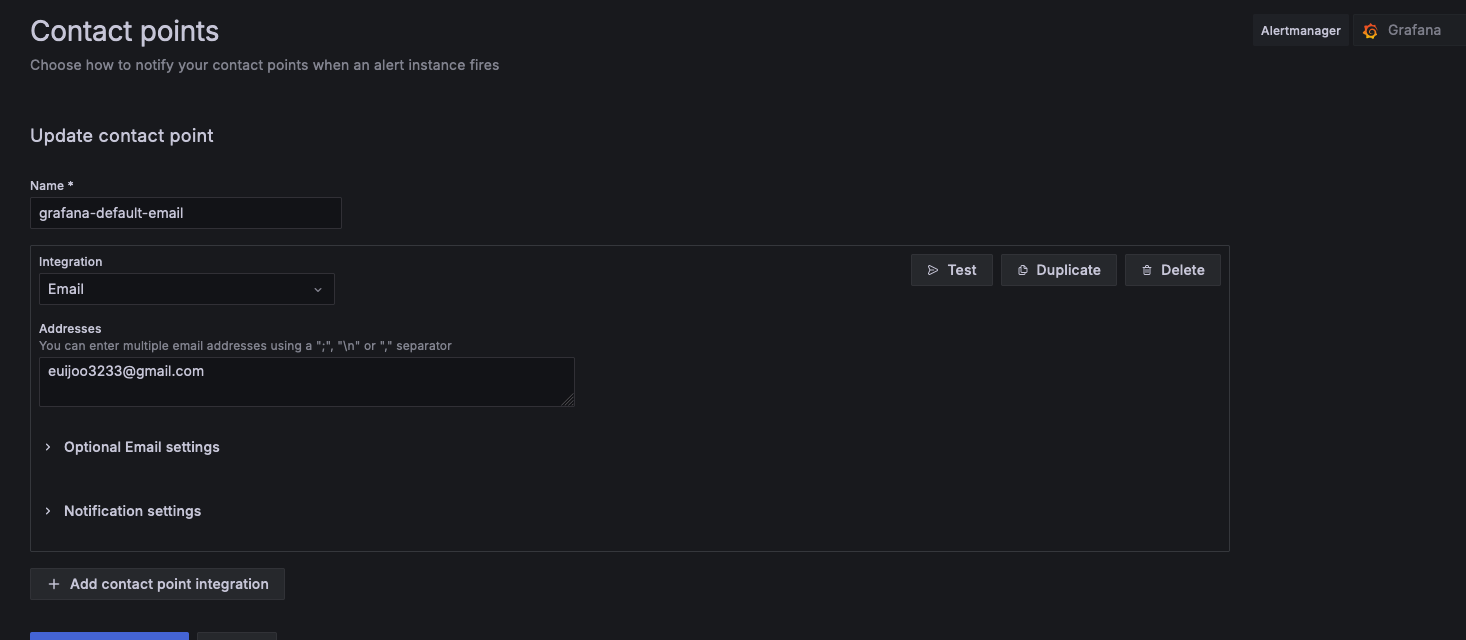

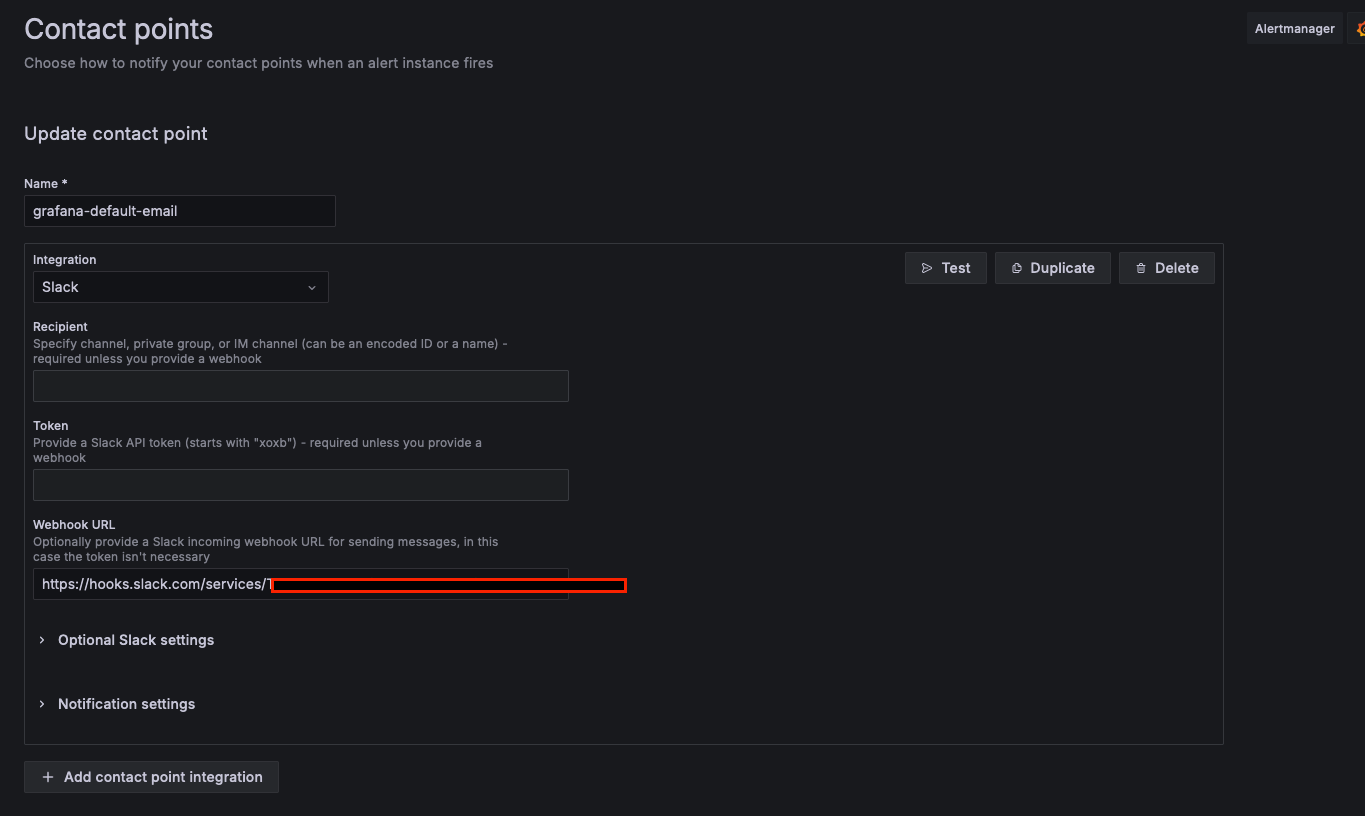

kube-proxy-wqx6h 1m 12Mi- kwatch : kwatch는 Kubernetes(K8s) 클러스터의 모든 변경 사항을 모니터링하고, 실행 중인 앱의 충돌을 실시간으로 감지하고, 채널(Slack, Discord 등)에 즉시, 알림을 게시하는 데 도움이 되는 Tool 입니다.

# Nickname

NICK=euijoo

# Create the ConfigMap

cat <<EOT > ~/kwatch-config.yaml

apiVersion: v1

kind: Namespace

metadata:

  name: kwatch

---

apiVersion: v1

kind: ConfigMap

metadata:

  name: kwatch

  namespace: kwatch

data:

  config.yaml: |

    alert:

      slack:

        webhook: '<Webhook URL>'

        title: $NICK-EKS

        #text: Customized text in slack message

    pvcMonitor:

      enabled: true

      interval: 5

      threshold: 70

EOT

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f kwatch-config.yaml

namespace/kwatch created

configmap/kwatch created
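The ConfigMap above is written through a heredoc with an unquoted `EOT` delimiter, which is why `$NICK` is replaced by the shell before the file is written. A minimal sketch of that behaviour follows; the variable names `expanded` and `literal` are illustrative only and do not appear in the walkthrough.

```shell
NICK=euijoo

# Unquoted delimiter: the shell substitutes $NICK inside the heredoc.
expanded=$(cat <<EOT
title: $NICK-EKS
EOT
)

# Quoted delimiter: the heredoc body is kept literally, with no substitution.
literal=$(cat <<'EOT'
title: $NICK-EKS
EOT
)

echo "$expanded"
echo "$literal"
```

If the Slack title should contain a literal dollar sign, quoting the delimiter (or escaping the `$`) would be the usual way to keep it intact.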

# Deploy

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f https://raw.githubusercontent.com/abahmed/kwatch/v0.8.5/deploy/deploy.yaml

namespace/kwatch unchanged

clusterrole.rbac.authorization.k8s.io/kwatch created

serviceaccount/kwatch created

clusterrolebinding.rbac.authorization.k8s.io/kwatch created

deployment.apps/kwatch created

- Deploy a pod with an invalid image and check the result

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f https://raw.githubusercontent.com/junghoon2/kube-books/main/ch05/nginx-error-pod.yml

pod/nginx-19 created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get events -w

LAST SEEN TYPE REASON OBJECT MESSAGE

9s Normal Scheduled pod/nginx-19 Successfully assigned default/nginx-19 to ip-192-168-3-178.ap-northeast-2.compute.internal

9s Normal Pulling pod/nginx-19 Pulling image "nginx:1.19.19"

7s Warning Failed pod/nginx-19 Failed to pull image "nginx:1.19.19": rpc error: code = NotFound desc = failed to pull and unpack image "docker.io/library/nginx:1.19.19": failed to resolve reference "docker.io/library/nginx:1.19.19": docker.io/library/nginx:1.19.19: not found

7s Warning Failed pod/nginx-19 Error: ErrImagePull

7s Normal BackOff pod/nginx-19 Back-off pulling image "nginx:1.19.19"

7s Warning Failed pod/nginx-19 Error: ImagePullBackOff

# Delete the failing pod

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl delete pod nginx-19

pod "nginx-19" deleted

# Delete kwatch

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl delete -f https://raw.githubusercontent.com/abahmed/kwatch/v0.8.5/deploy/deploy.yaml

namespace "kwatch" deleted

clusterrole.rbac.authorization.k8s.io "kwatch" deleted

serviceaccount "kwatch" deleted

clusterrolebinding.rbac.authorization.k8s.io "kwatch" deleted

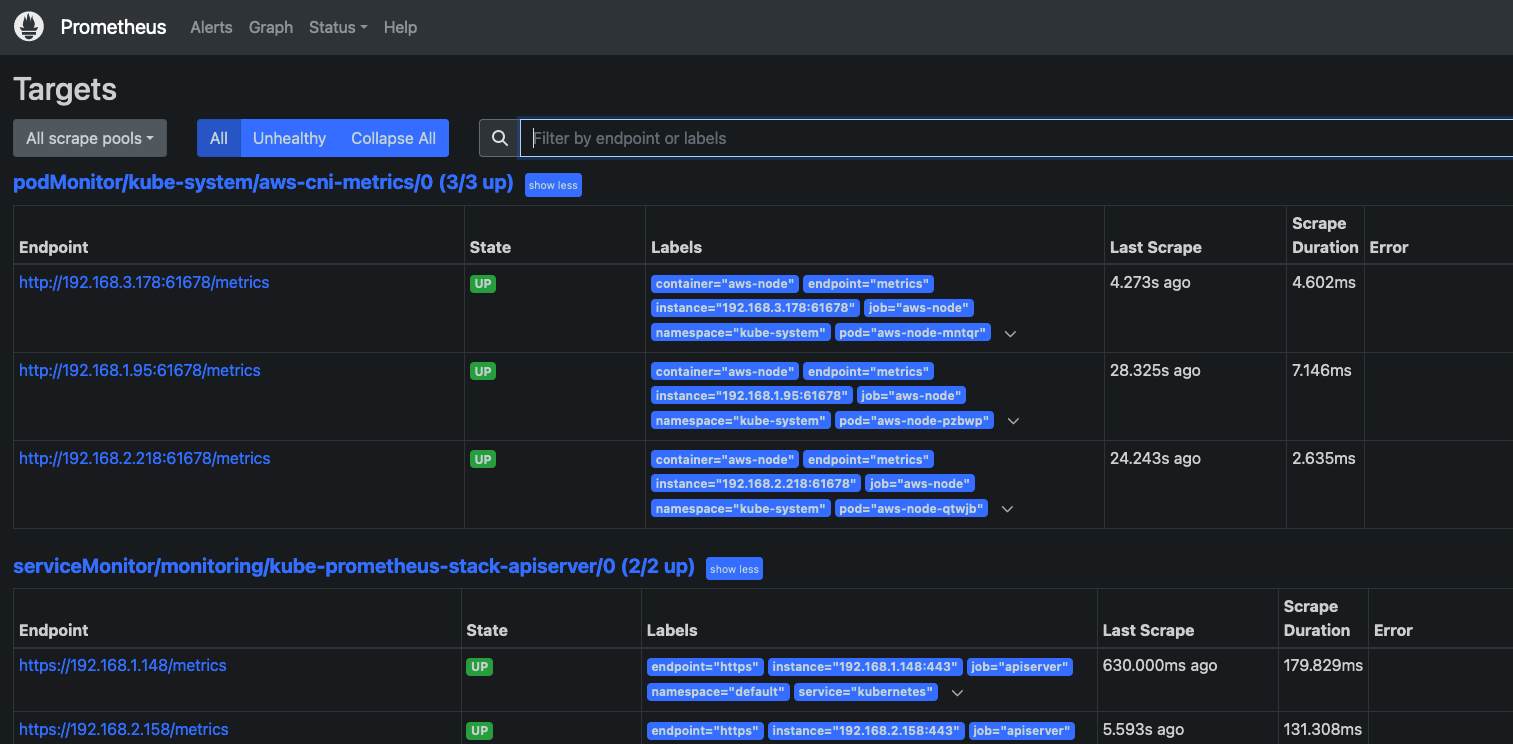

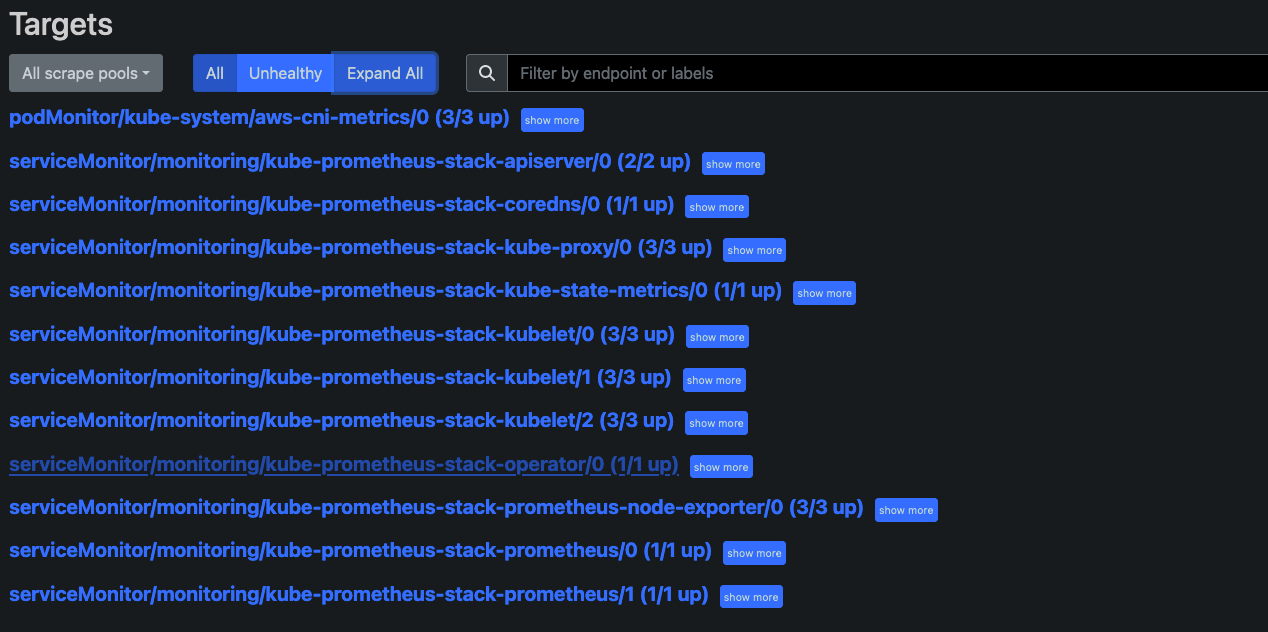

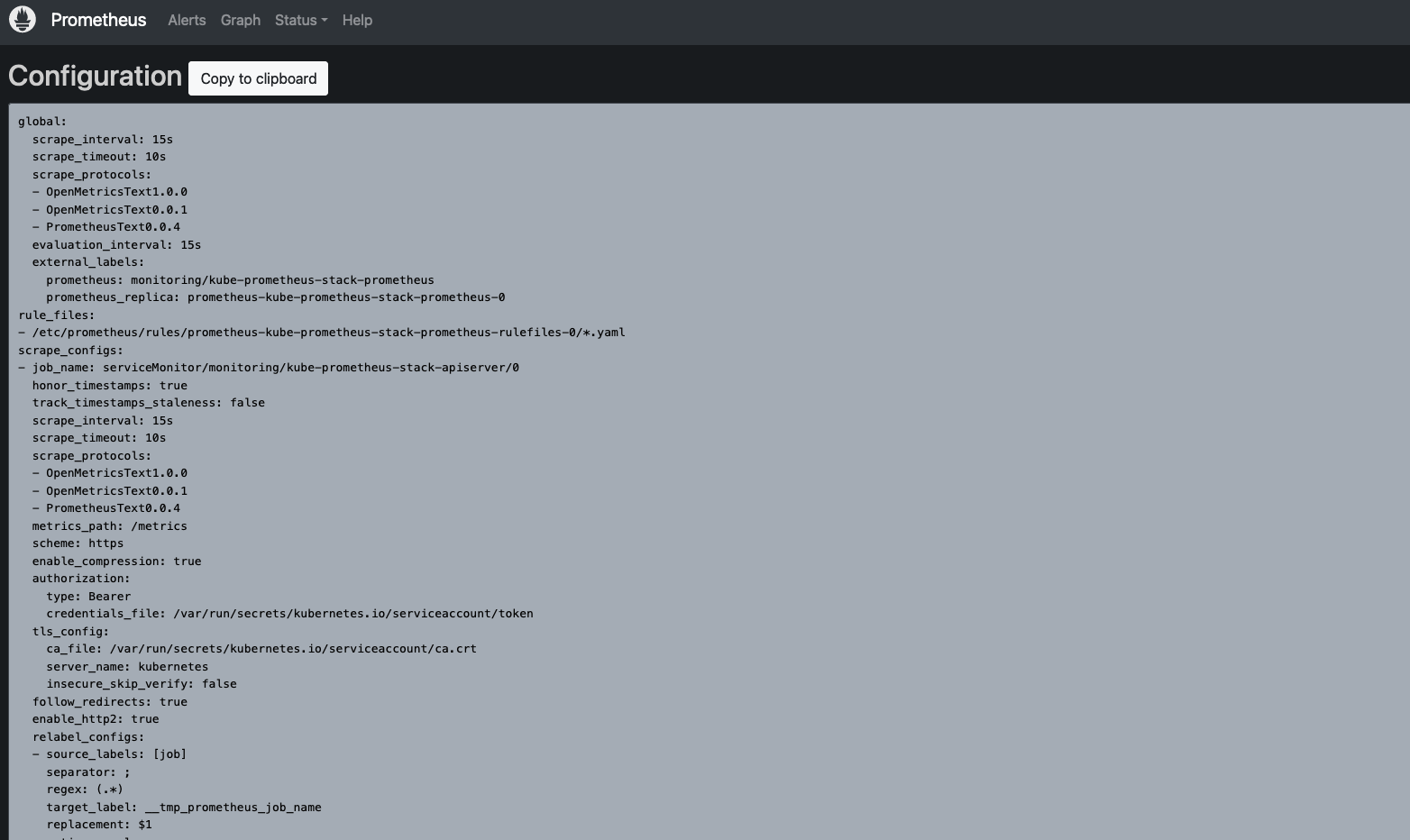

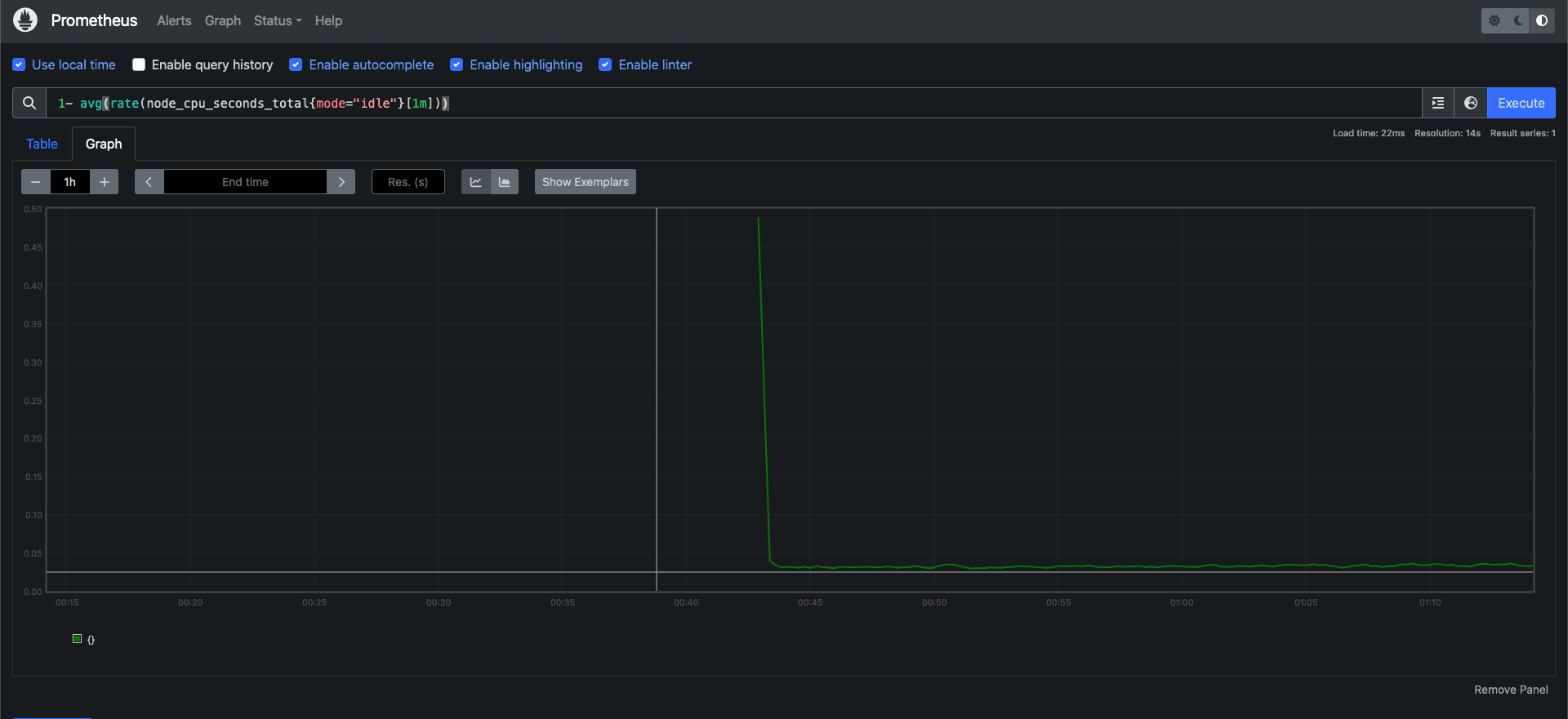

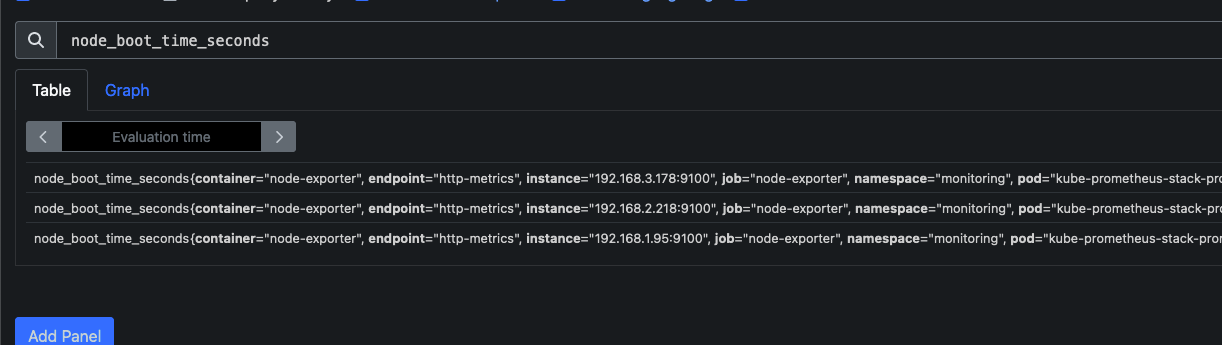

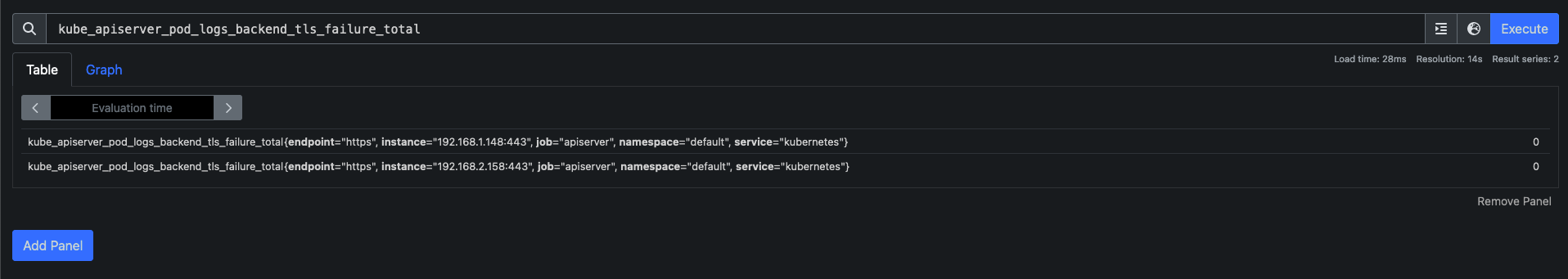

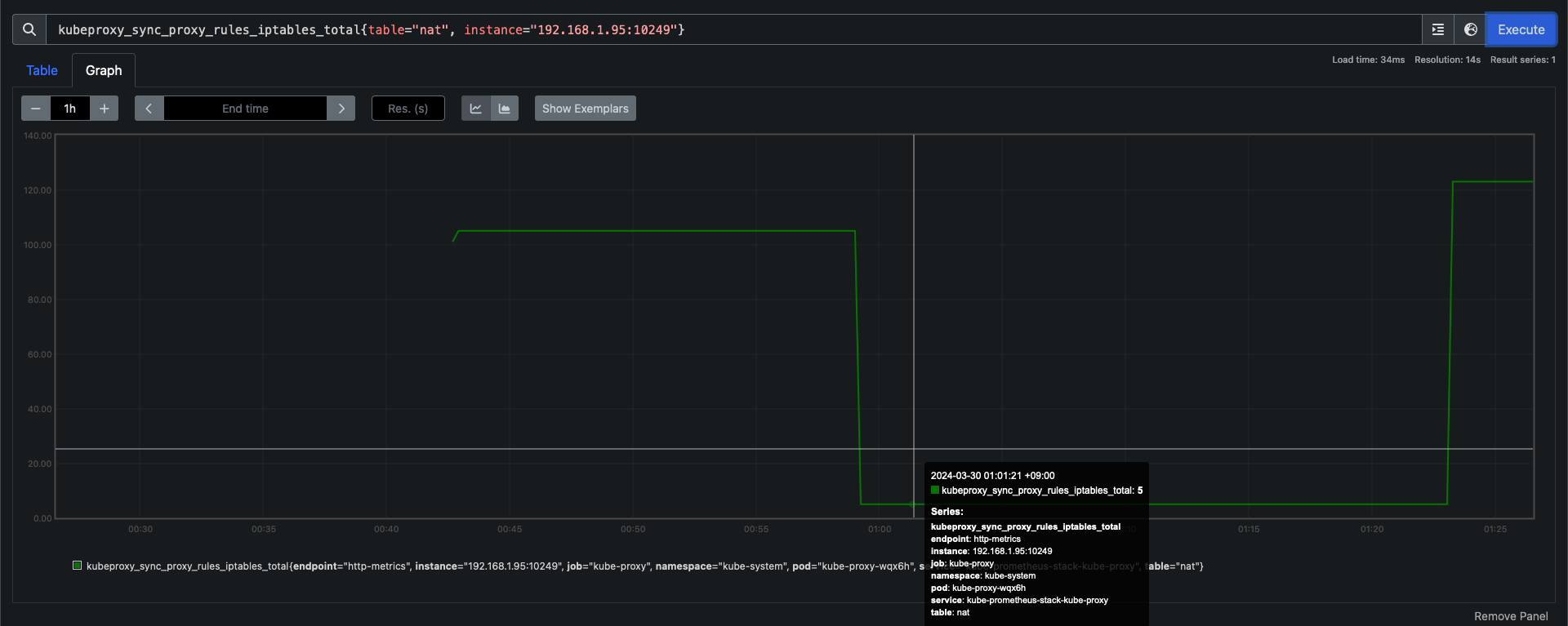

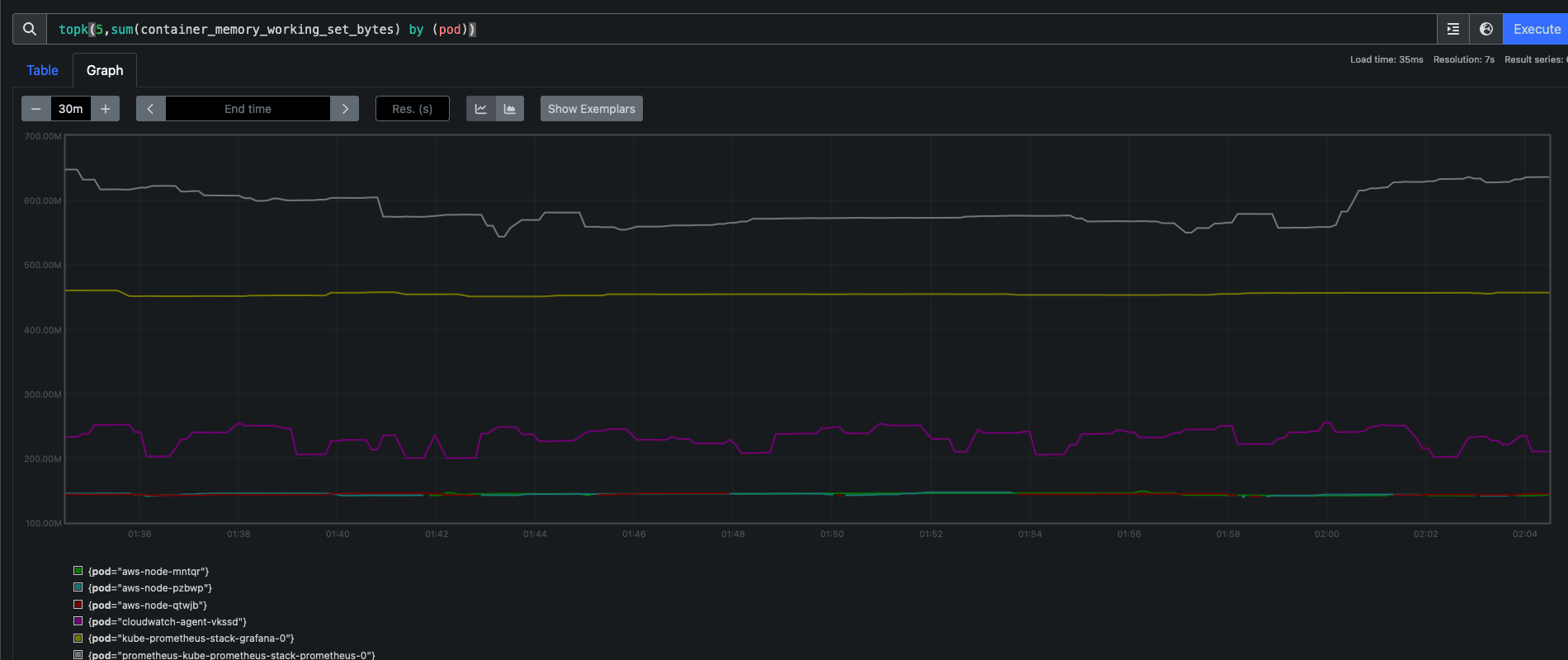

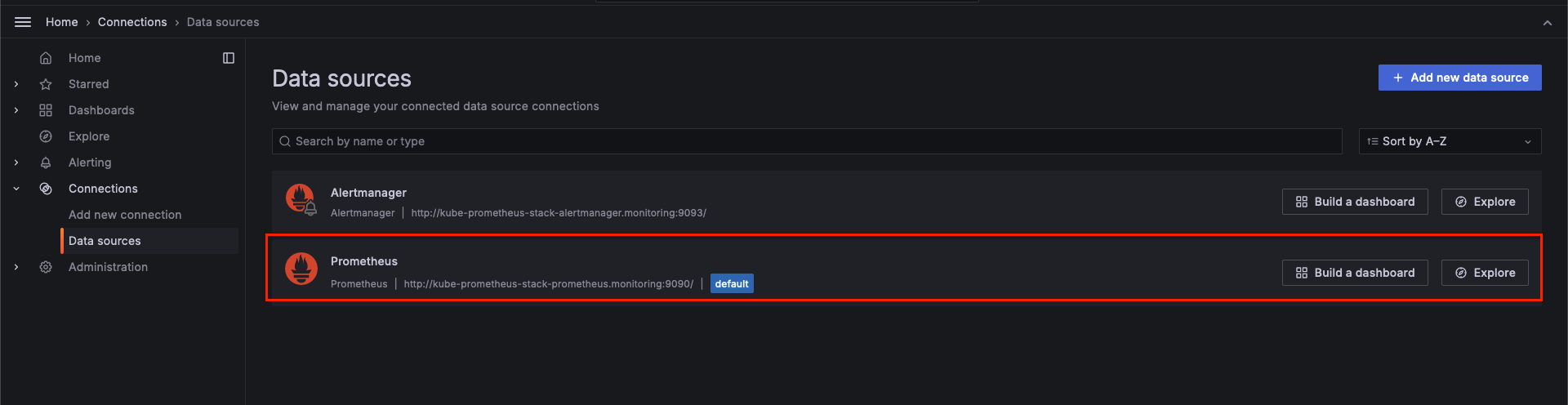

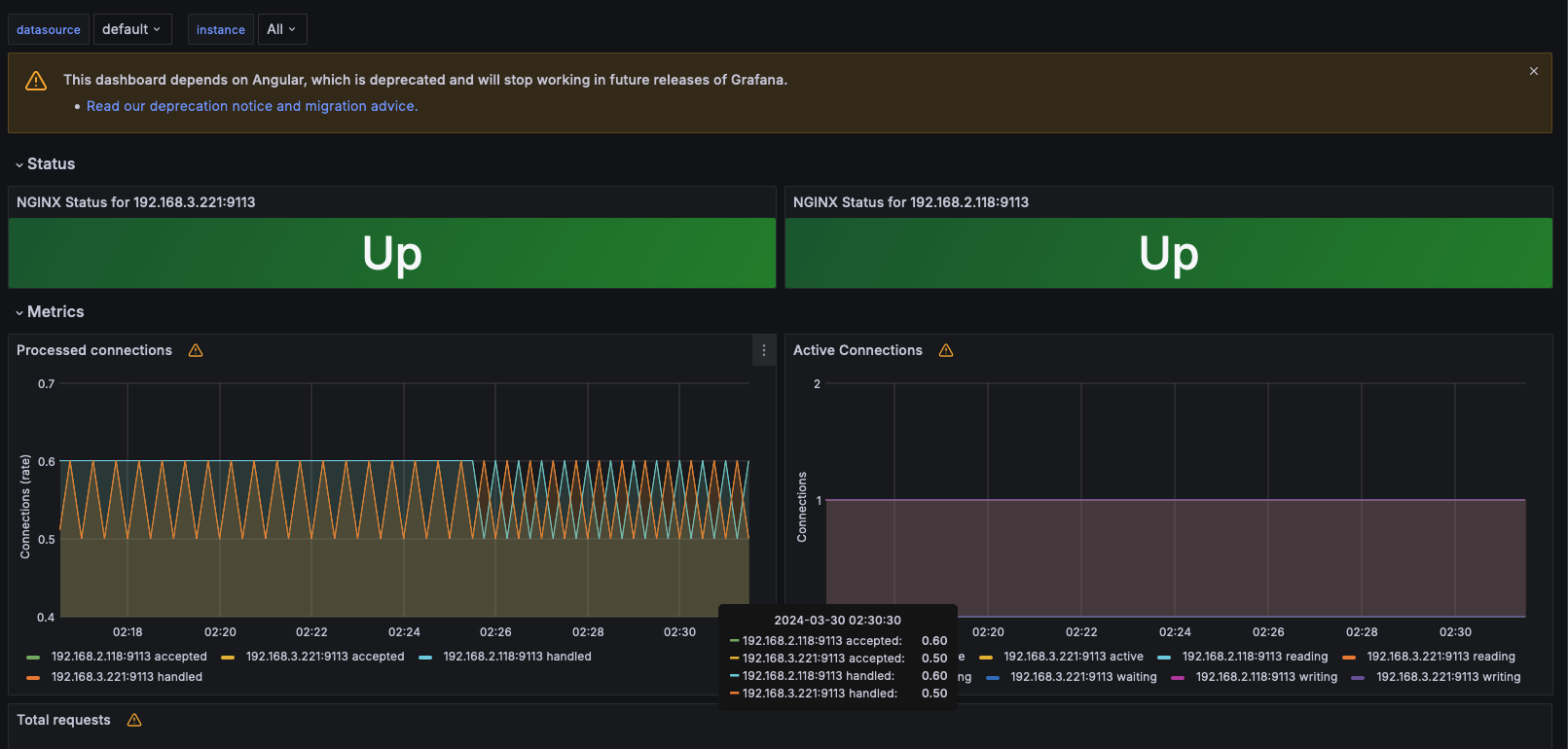

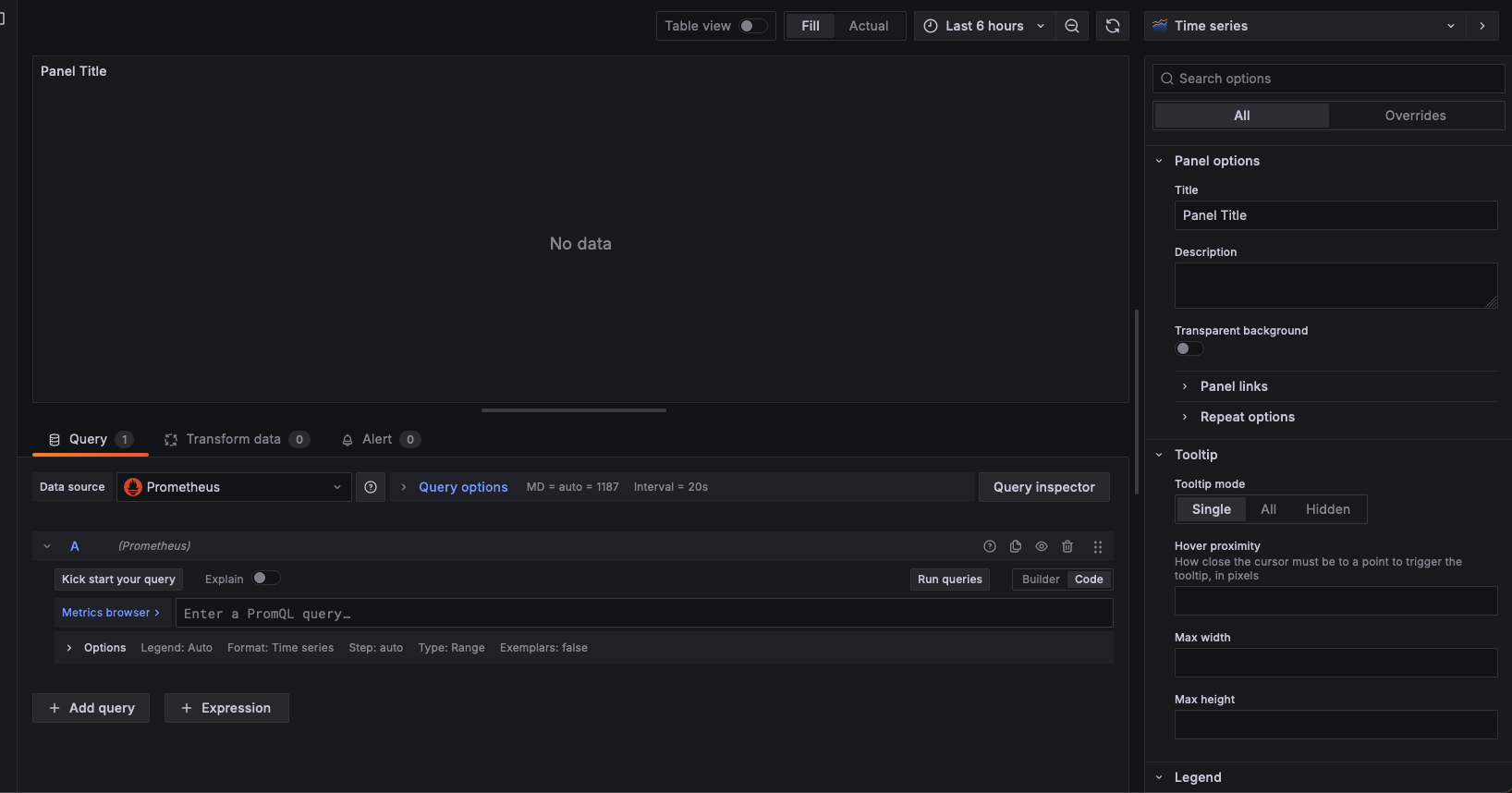

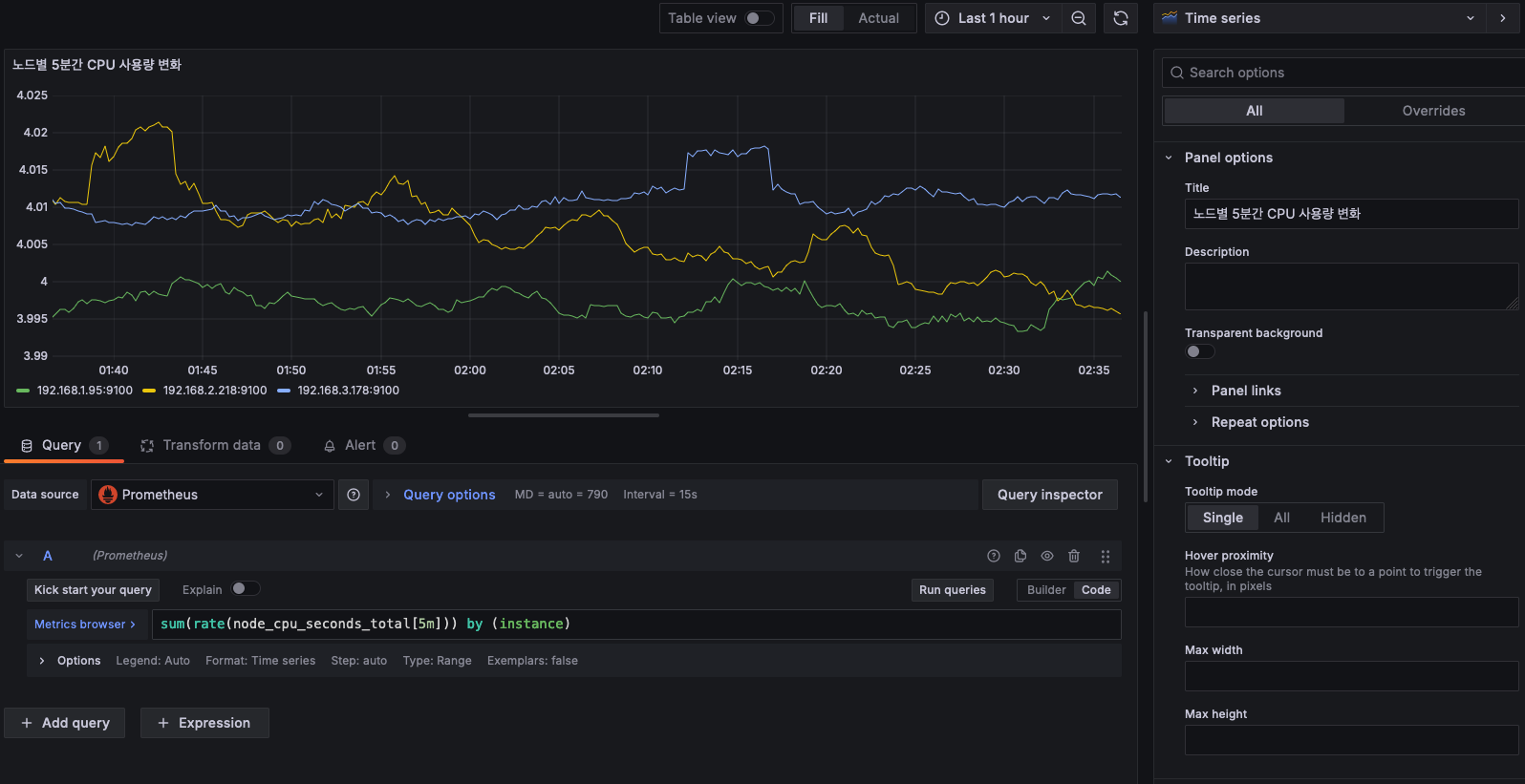

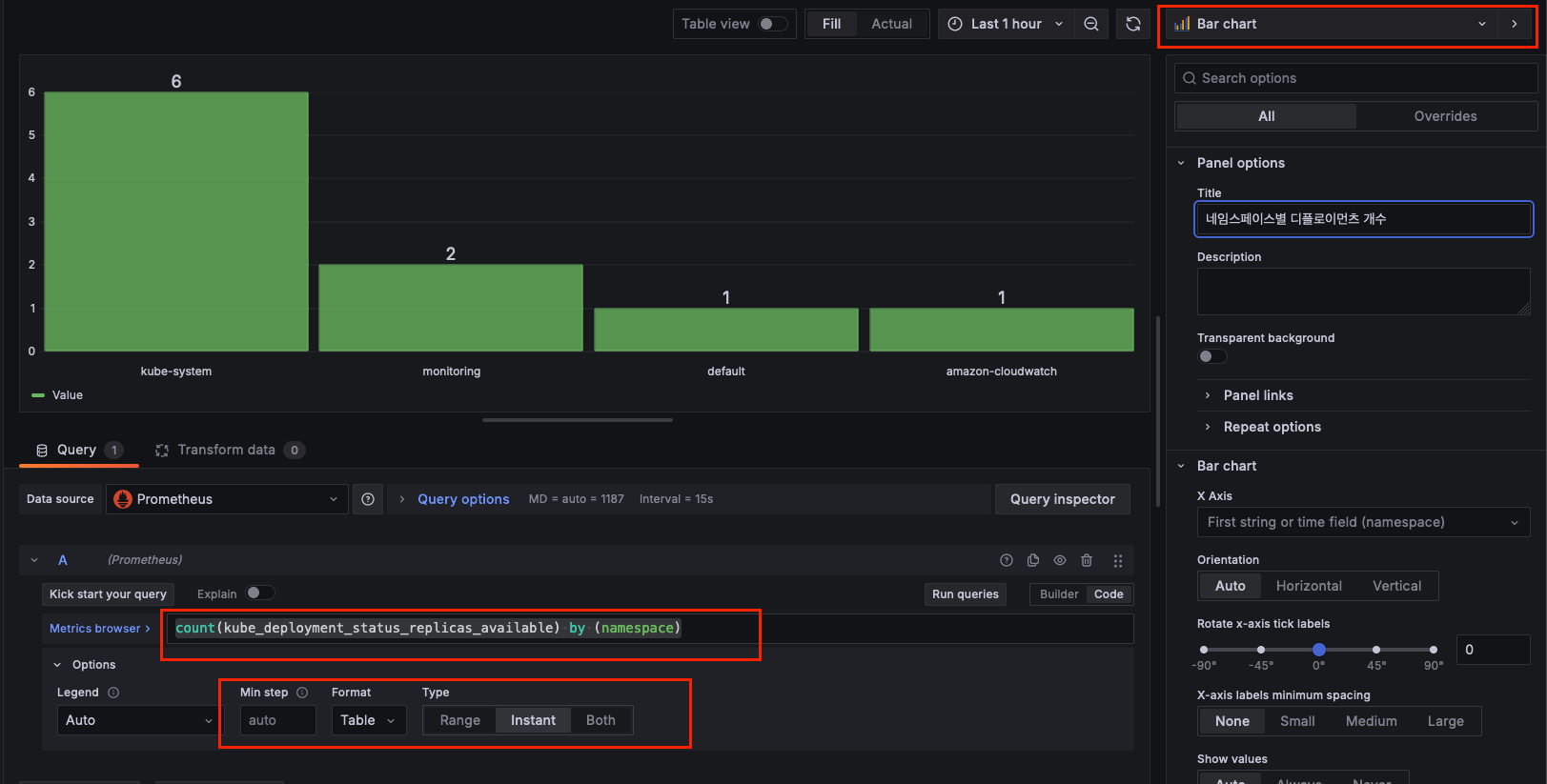

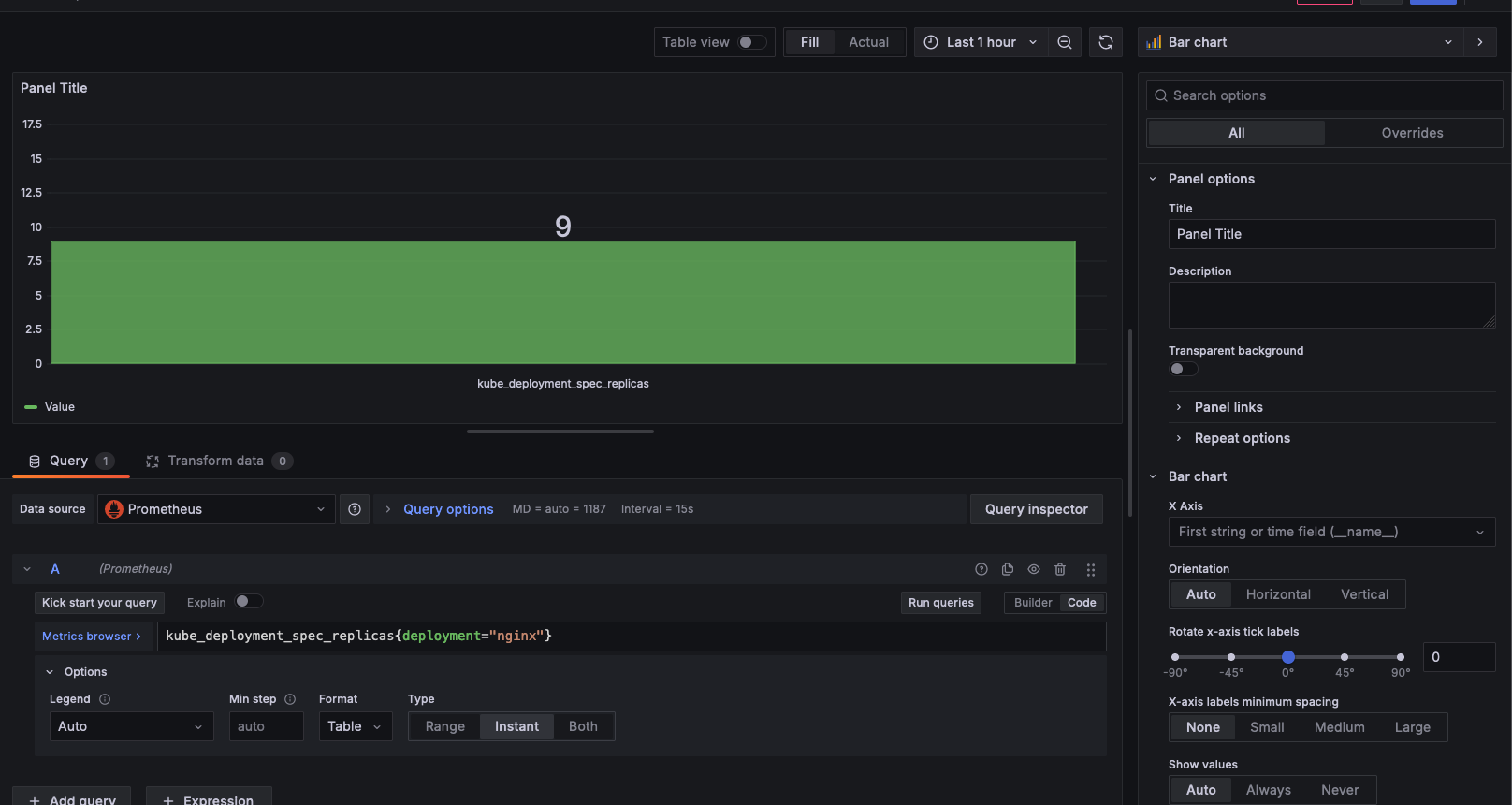

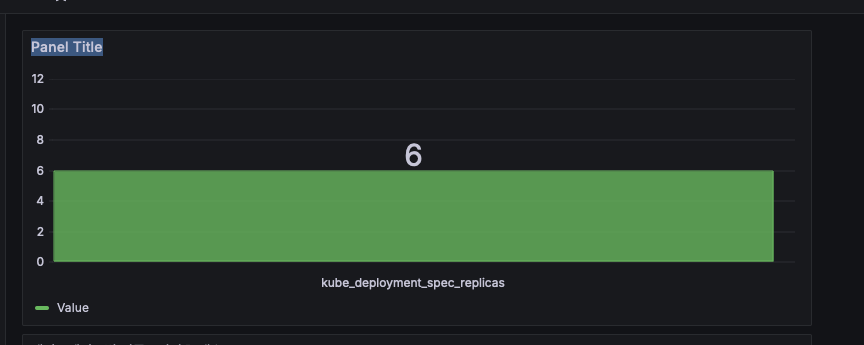

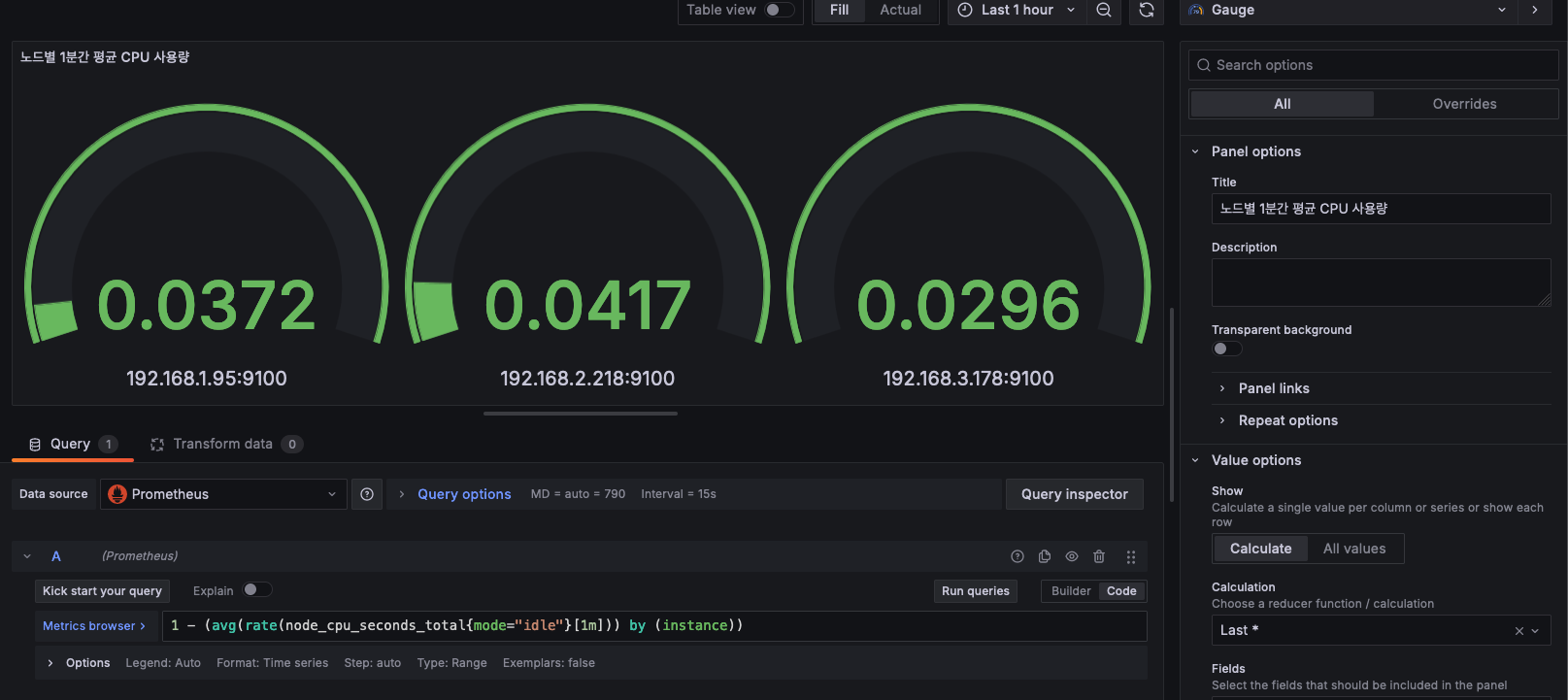

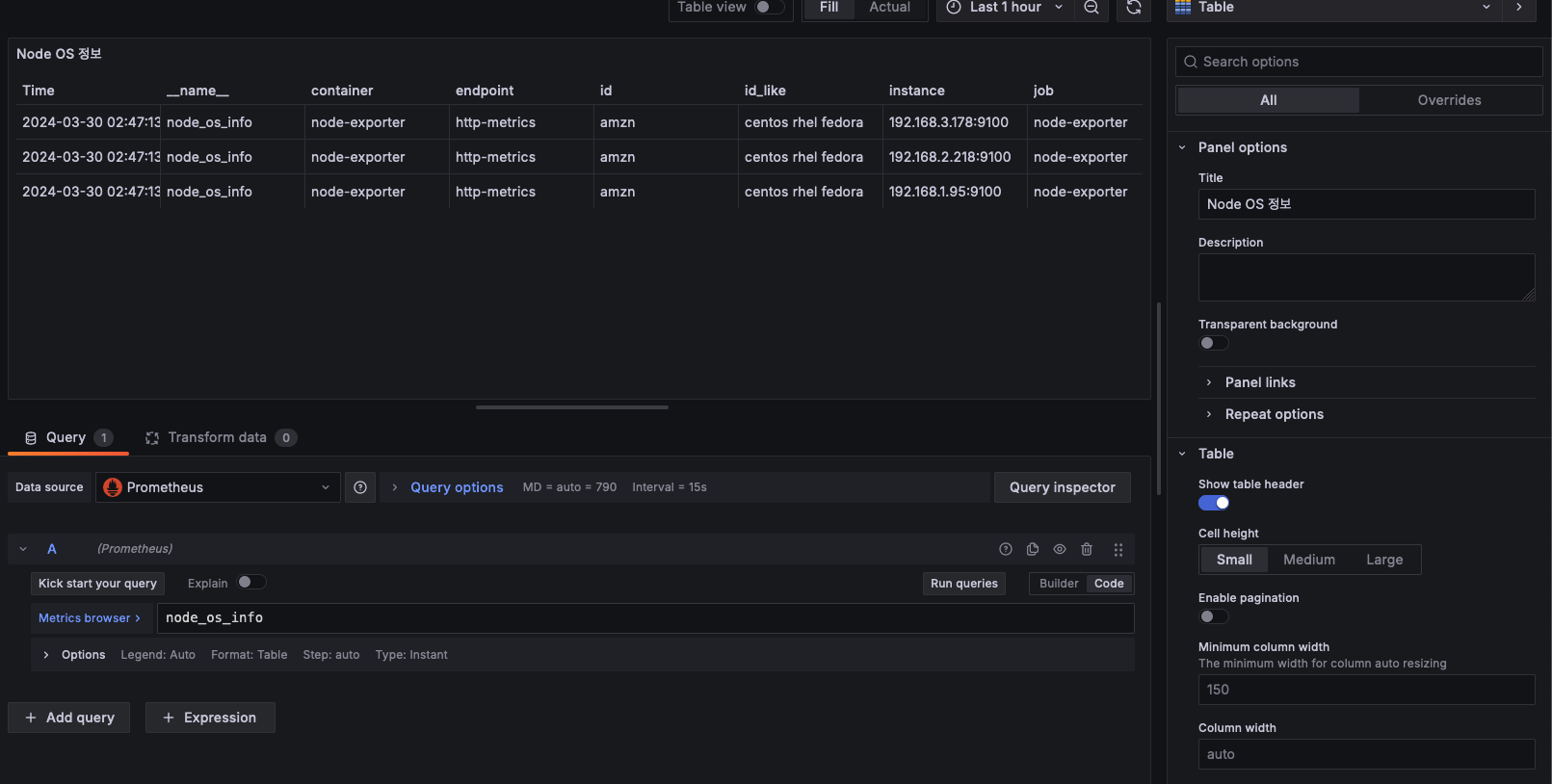

deployment.apps "kwatch" deleted4. Prometheus-stack

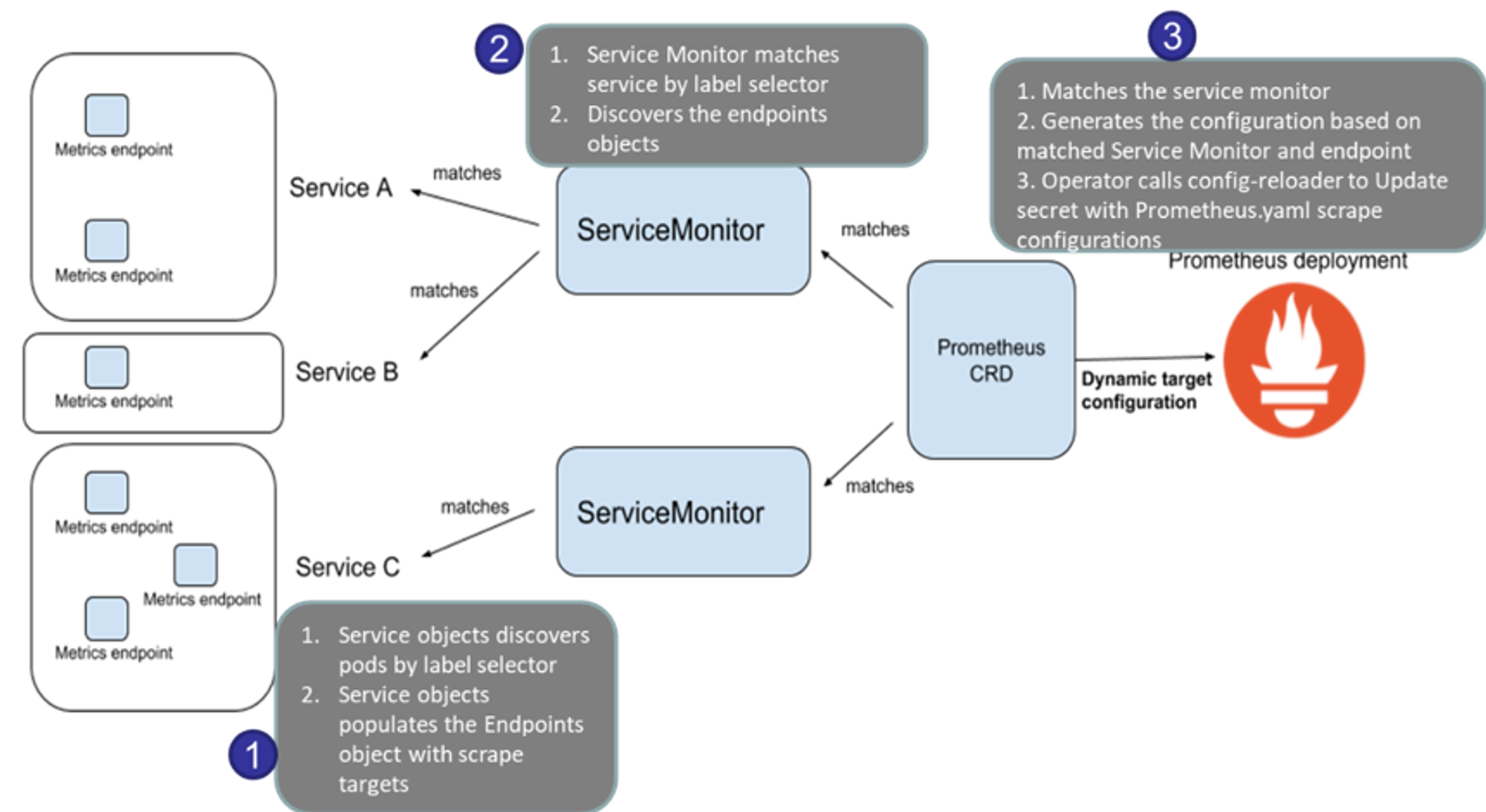

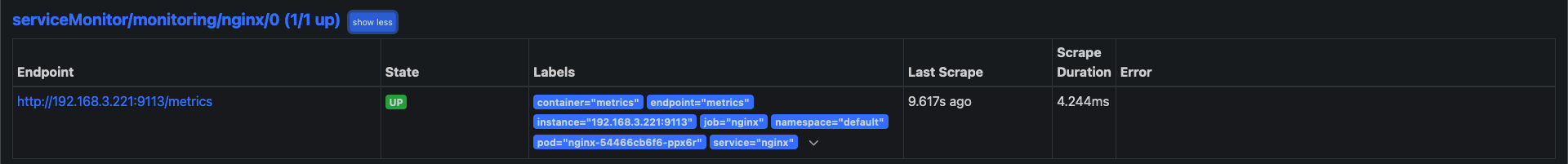

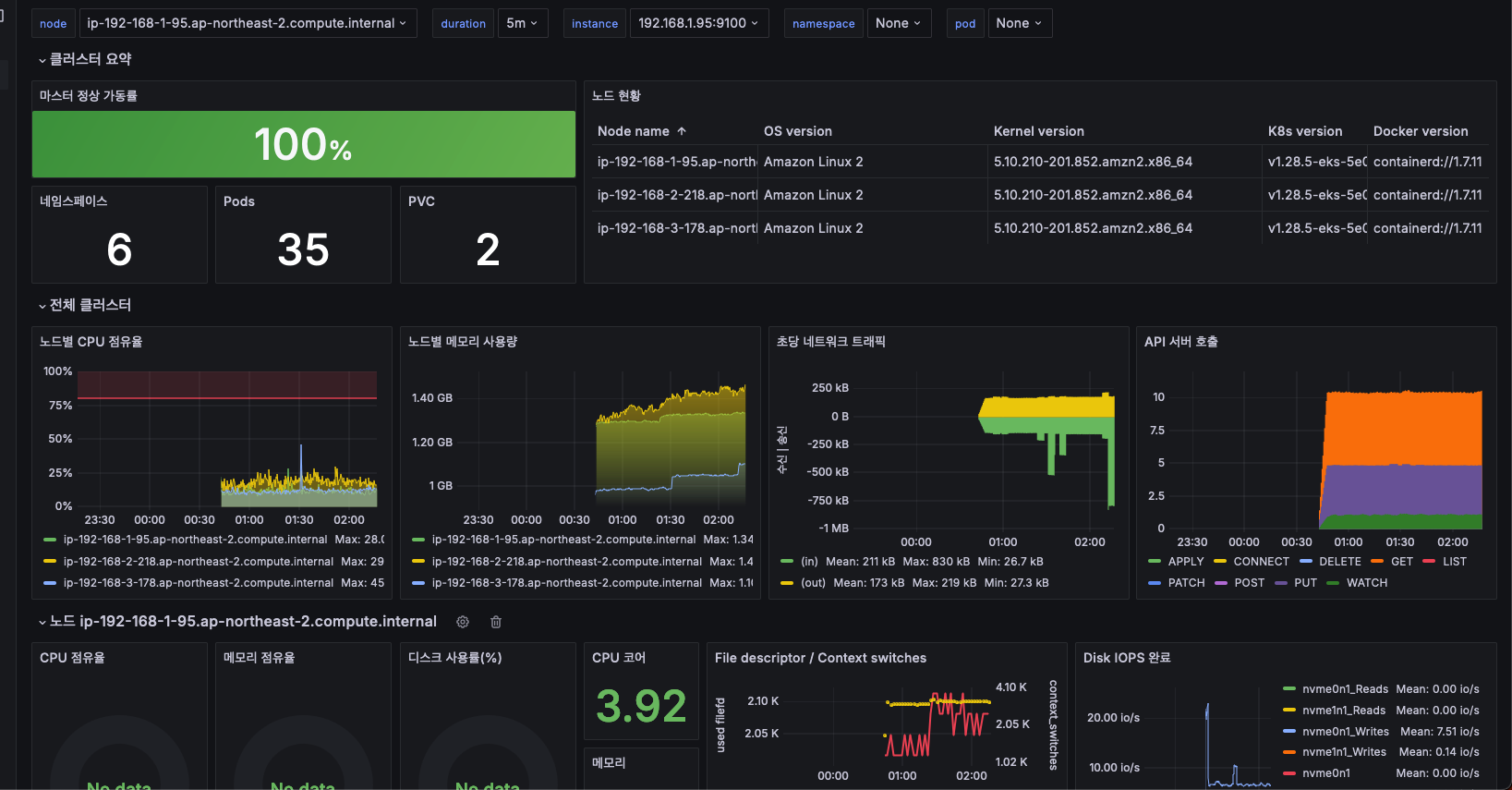

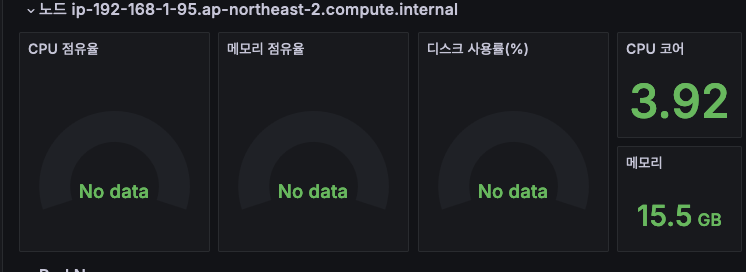

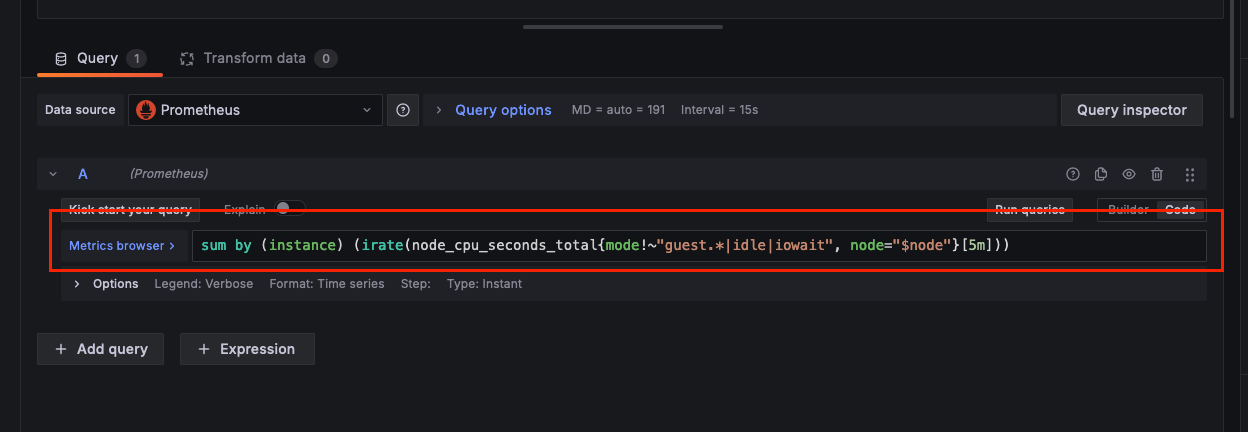

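- Prometheus Operator : collects metrics and provides alerting by using Prometheus together with the Prometheus Operator

- Thanos : provides scalability and high availability for Prometheus

- Prometheus-stack : provides the components needed for monitoring as a single chart (stack) ← visualization (Grafana), event message policies (alert thresholds, alert severity levels), etc.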

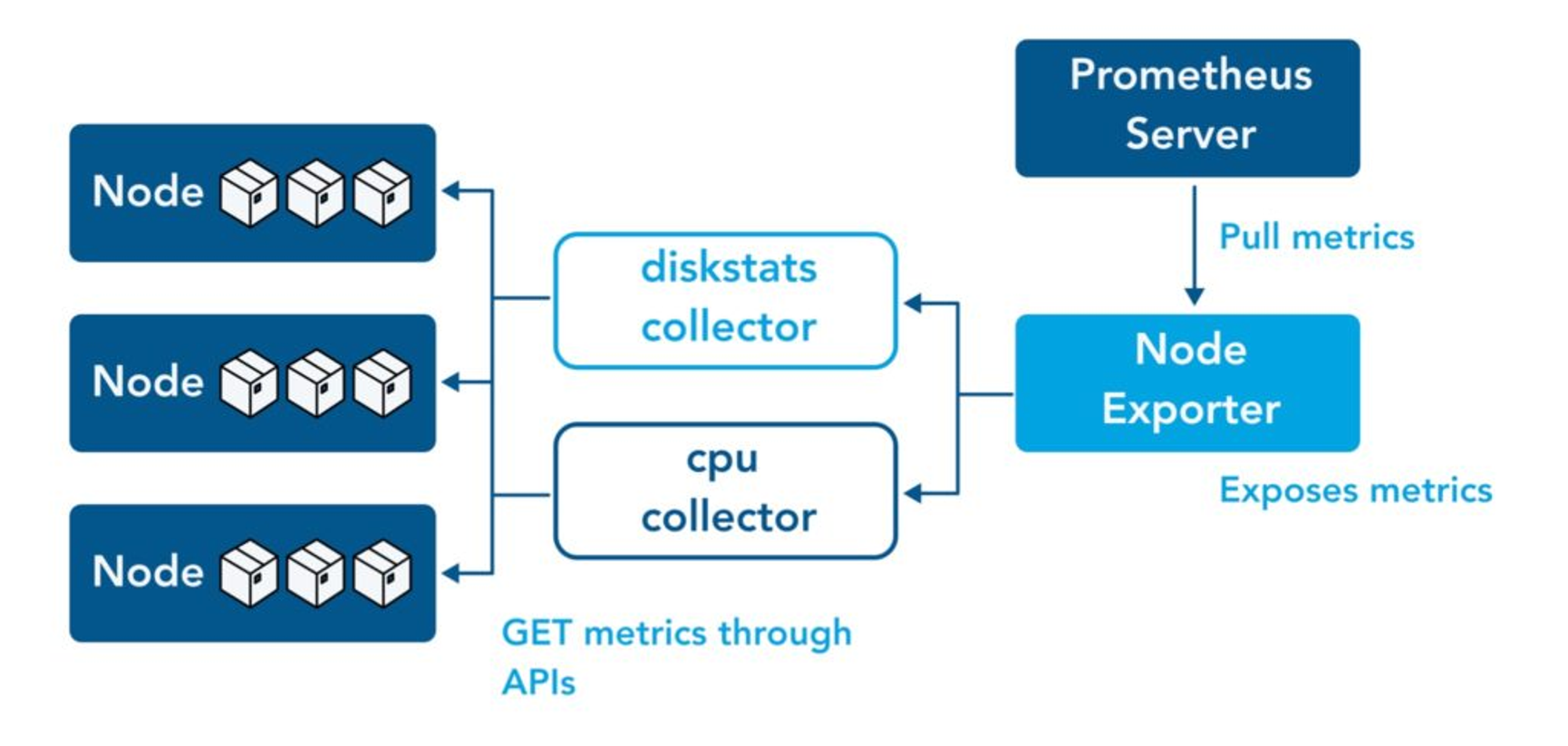

- Components

- grafana : Prometheus is used to store the metric data, and Grafana visualizes it

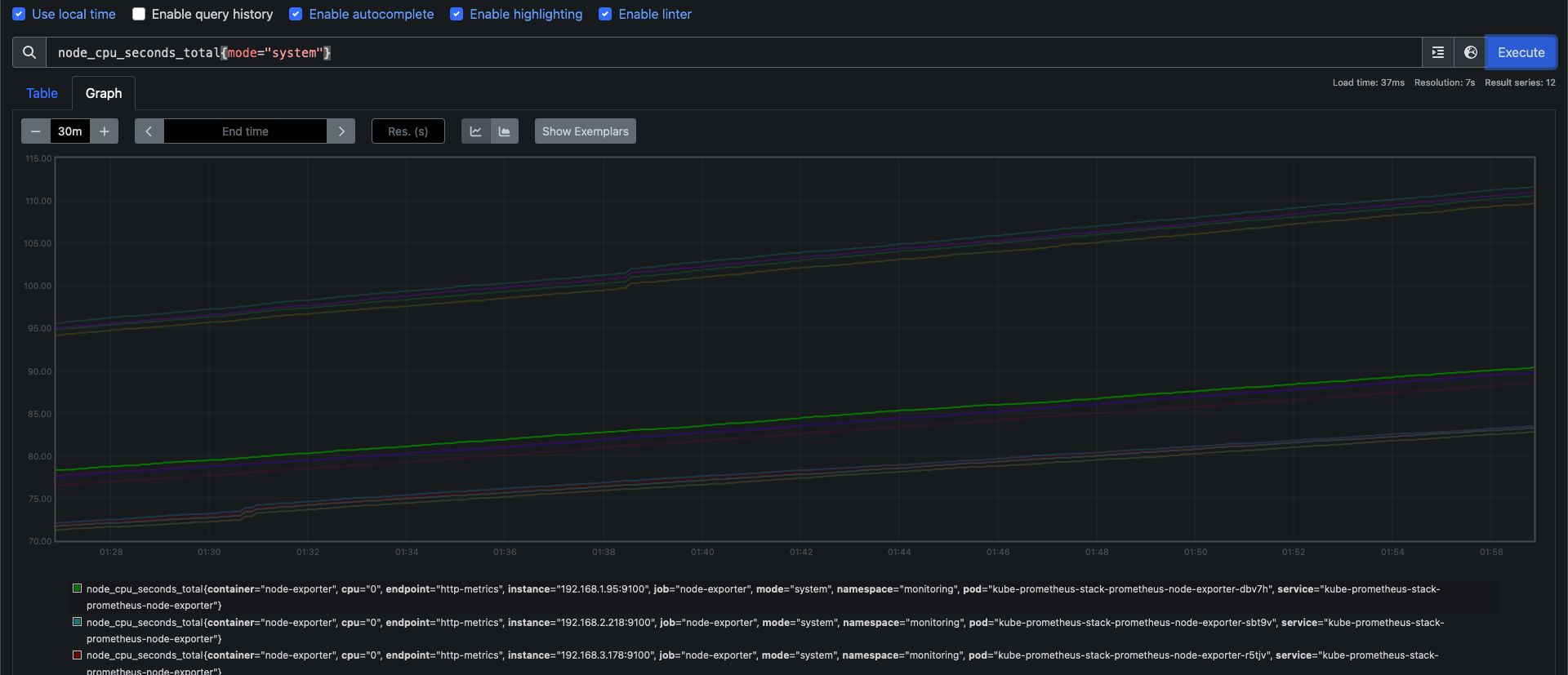

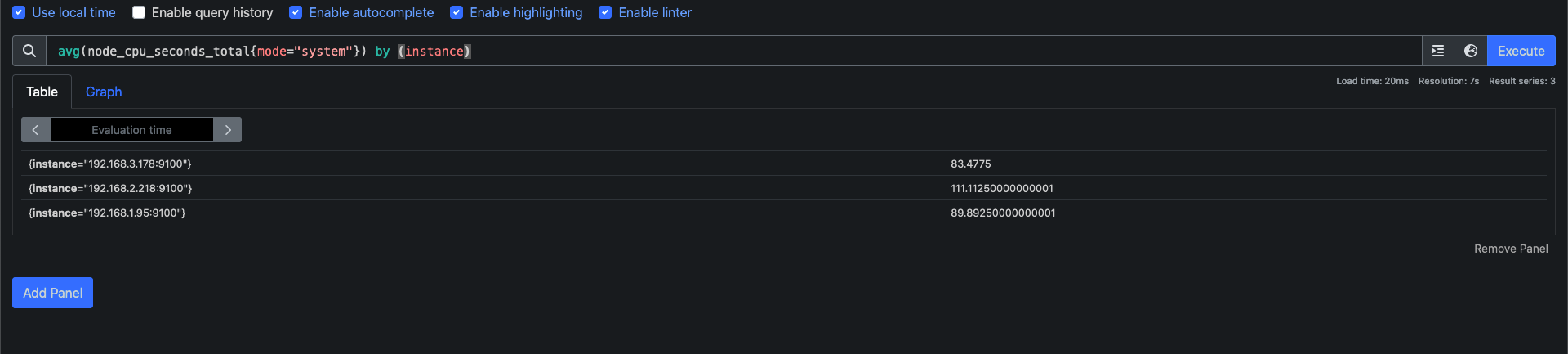

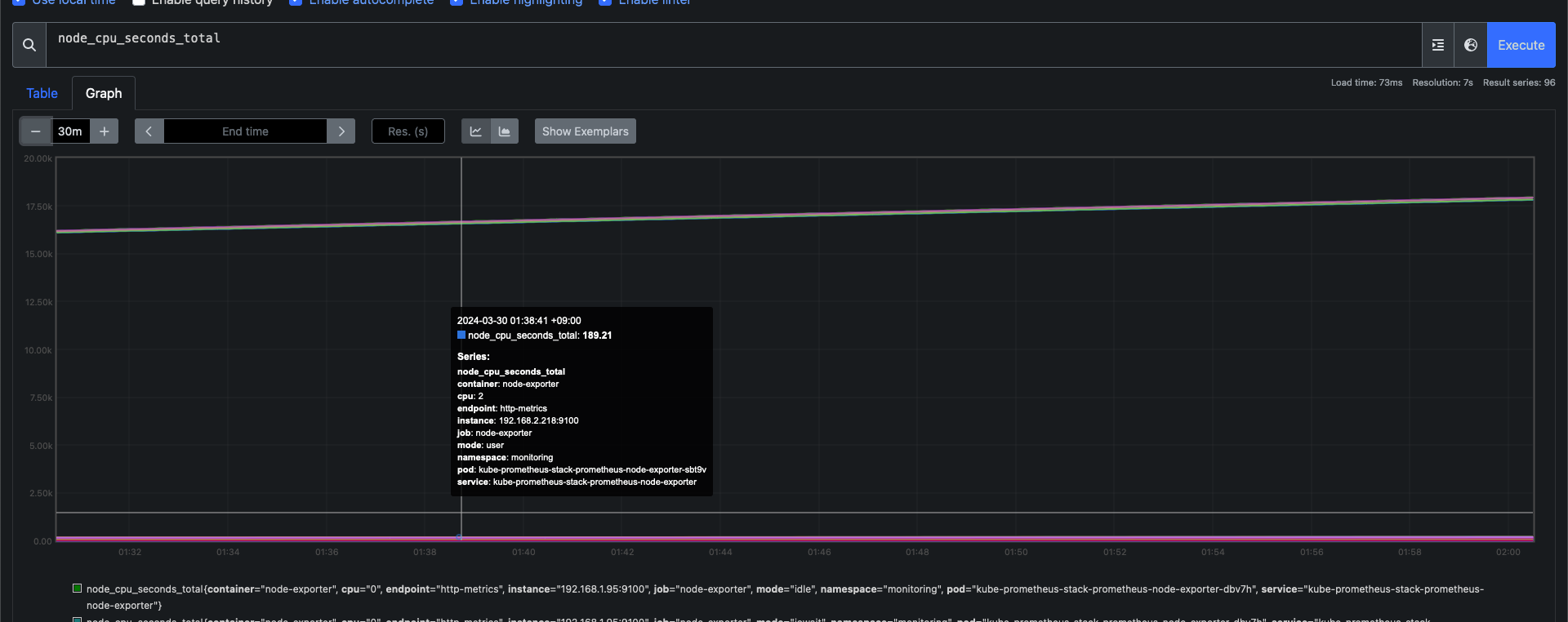

- prometheus-0 : monitored pods expose their metrics through a separate sidecar-style pod called an 'exporter'; Prometheus pulls those metrics and stores them in its internal time-series database

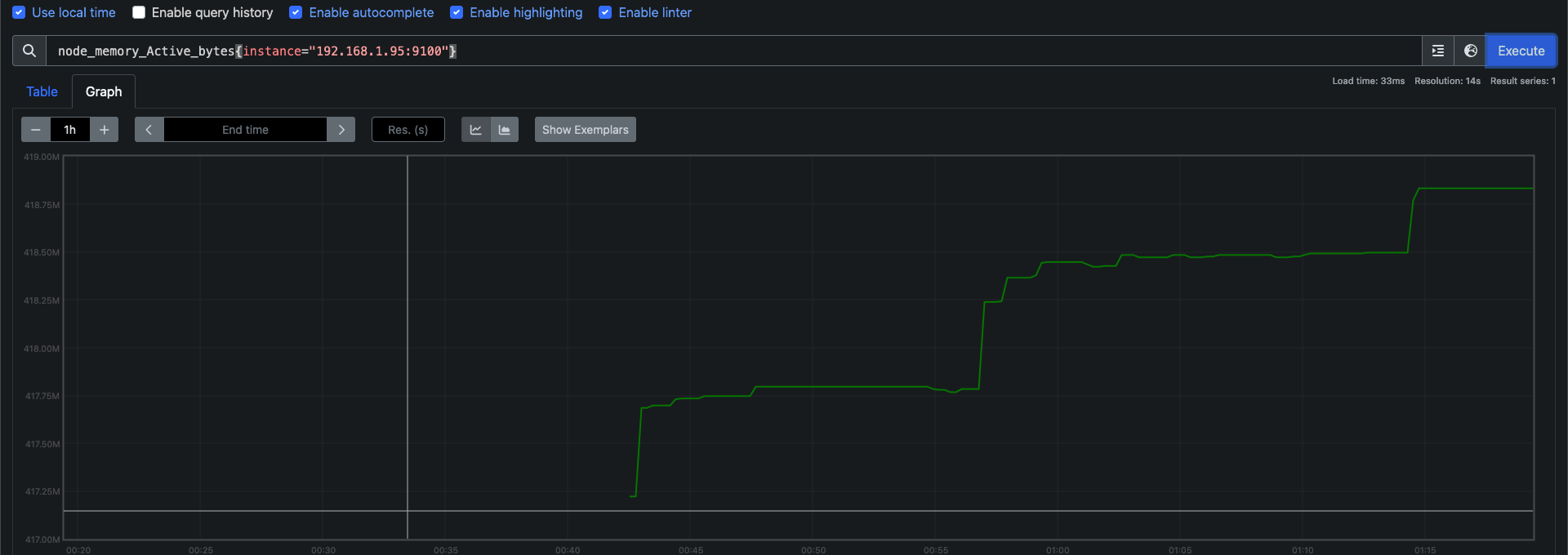

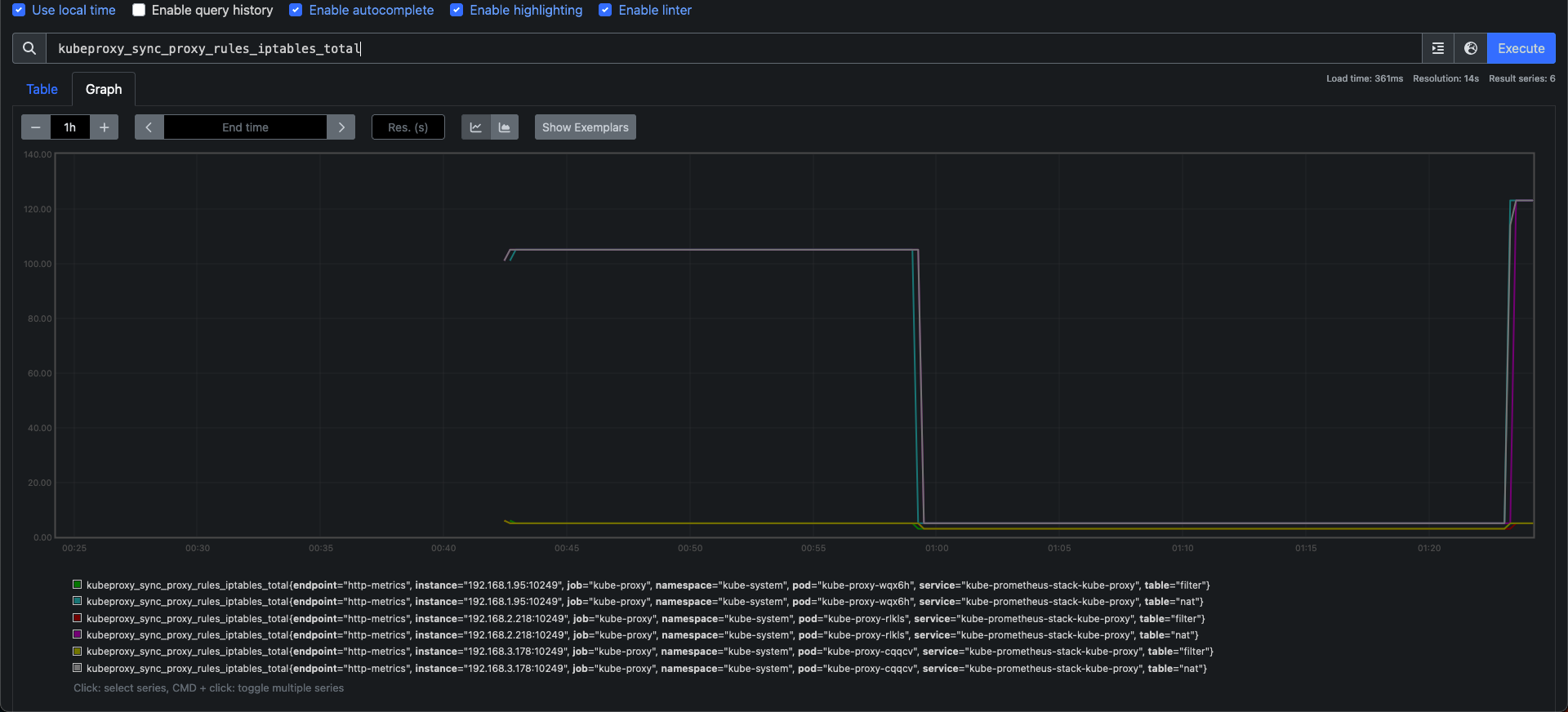

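- node-exporter : converts the resource usage of the physical node (network, storage, and everything else) into metrics and exposes them

- operator : provides CRDs that make it easy to manage system alert policies (prometheus rule), add application monitoring targets, and so on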

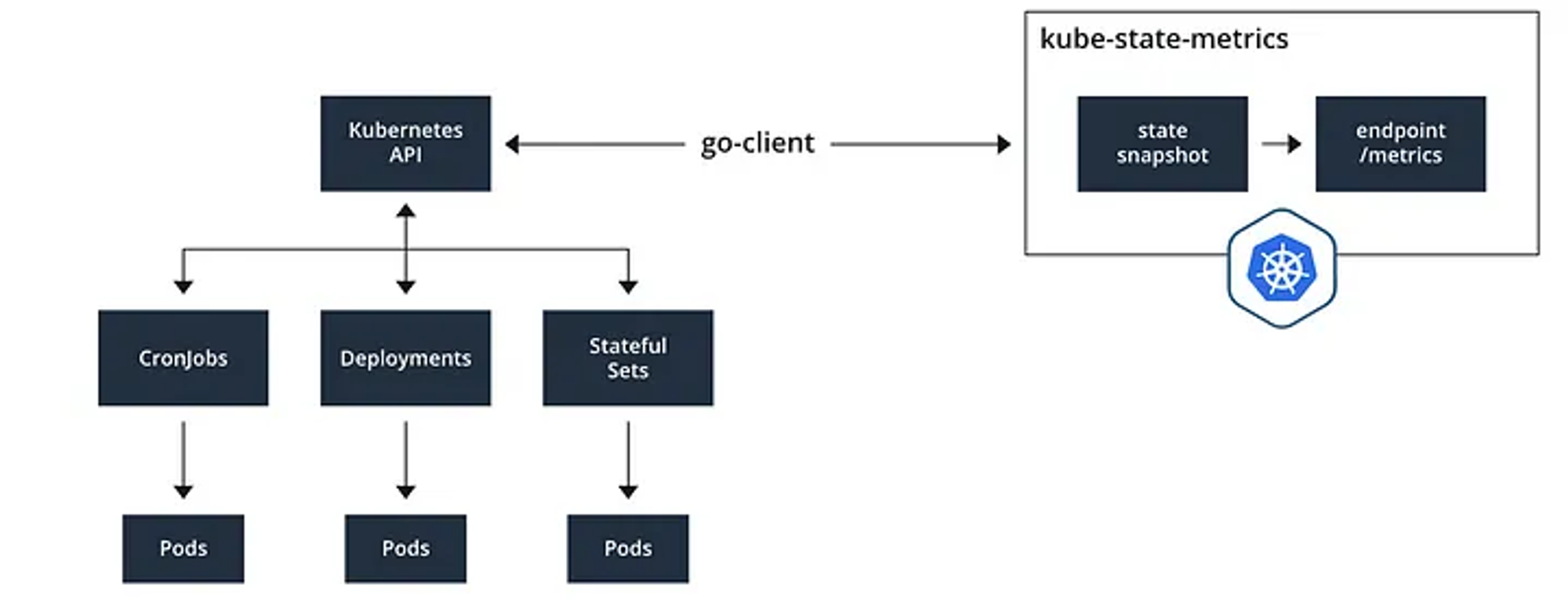

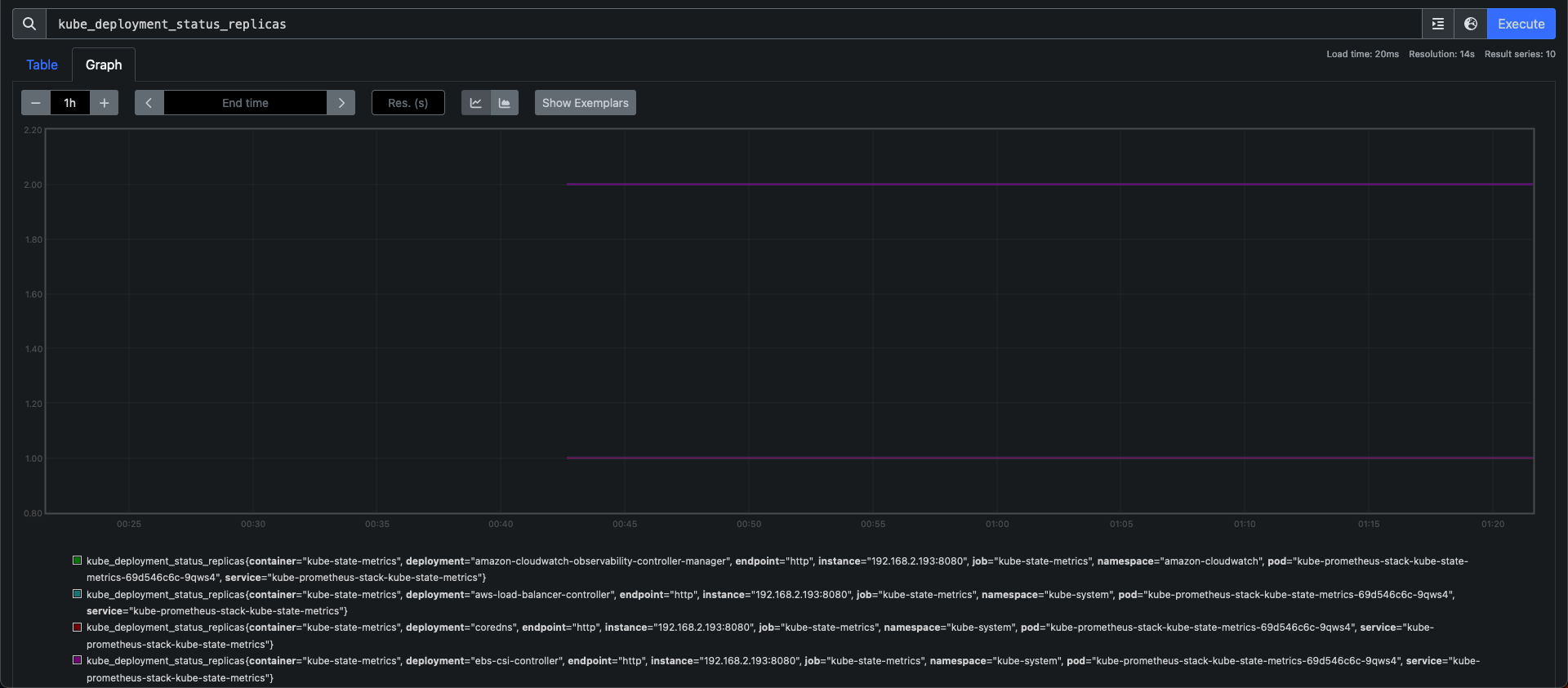

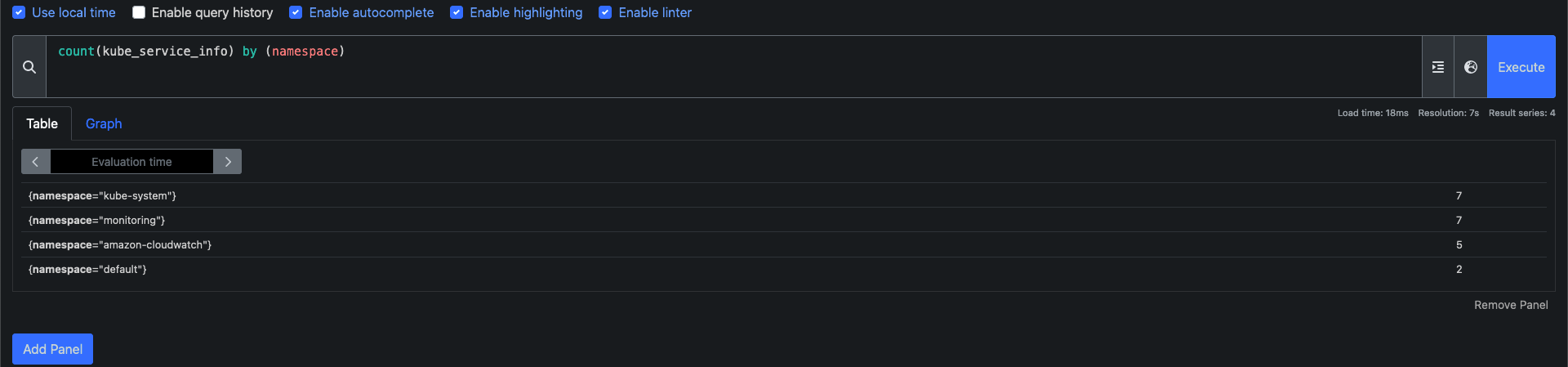

- kube-state-metrics : a pod that converts the state of the Kubernetes cluster (kube-state) into metrics

# Create the namespace

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k create ns monitoring

namespace/monitoring created

# Monitoring

watch kubectl get pod,pvc,svc,ingress -n monitoring

# Look up the certificate ARN in the current region

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo $CERT_ARN

arn:aws:acm:ap-northeast-2:236747833953:certificate/1244562e-aaa2-48df-a178-07ad03ef921d

# Add the helm repo

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

"prometheus-community" has been added to your repositories
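The parameter file created next is written through an unquoted heredoc, so `$MyDomain` and `$CERT_ARN` are substituted at that moment, and an empty variable would silently produce a host like `prometheus.` or an empty certificate annotation. A small guard such as the sketch below could catch that earlier. `require_vars` is a hypothetical helper, not part of the walkthrough, and the values assigned here are placeholders for illustration; `MyDomain` is assumed to have been exported in an earlier step.

```shell
# Hypothetical guard: fail and name the first variable that is unset or empty.
require_vars() {
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      return 1
    fi
  done
}

# Placeholder values for illustration only (not the real domain or ARN).
MyDomain=example.com
CERT_ARN=arn:aws:acm:ap-northeast-2:111122223333:certificate/example

require_vars MyDomain CERT_ARN && echo "ok: variables are set"
```

In the real session the two assignments would be dropped and only the `require_vars MyDomain CERT_ARN` call kept before running `cat <<EOT > monitor-values.yaml`.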

# 파라미터 파일 생성

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat <<EOT > monitor-values.yaml

> prometheus:

> prometheusSpec:

> podMonitorSelectorNilUsesHelmValues: false

> serviceMonitorSelectorNilUsesHelmValues: false

> retention: 5d

> retentionSize: "10GiB"

> storageSpec:

> volumeClaimTemplate:

> spec:

> storageClassName: gp3

> accessModes: ["ReadWriteOnce"]

> resources:

> requests:

> storage: 30Gi

>

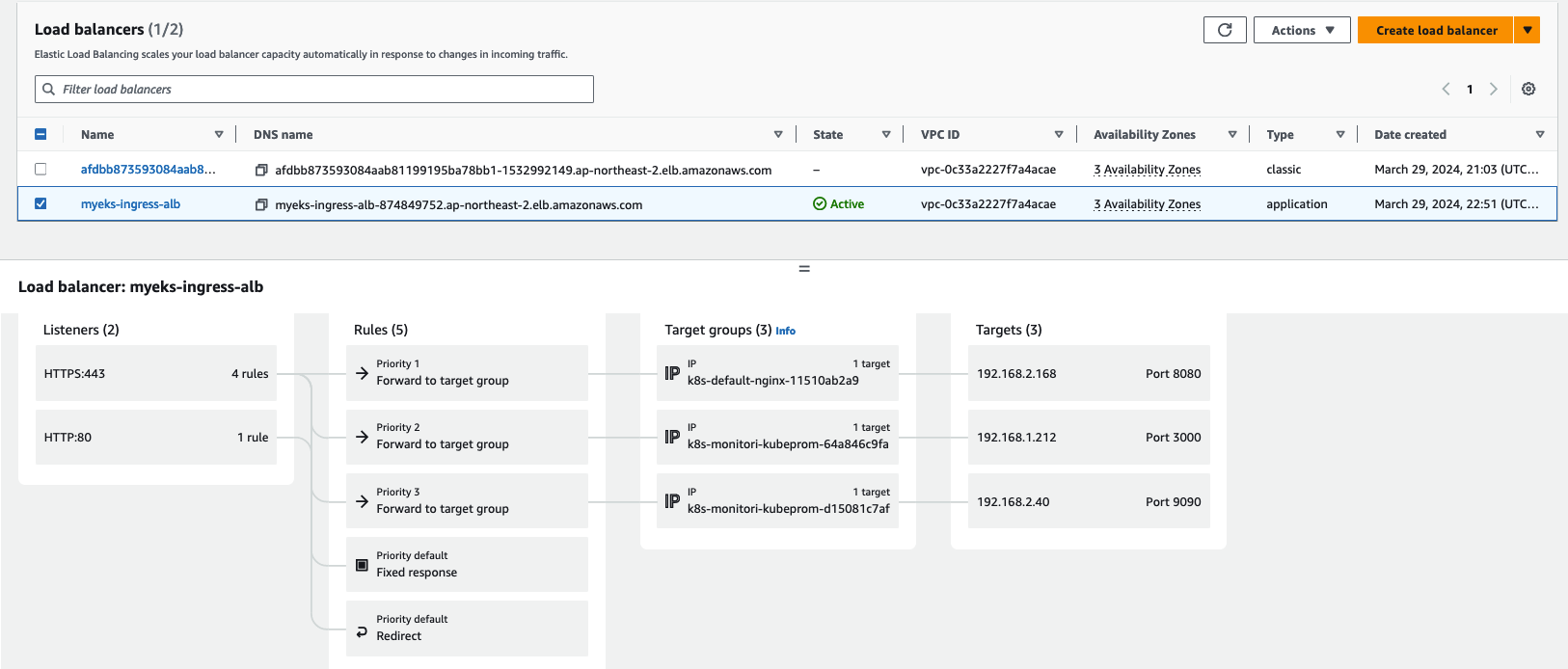

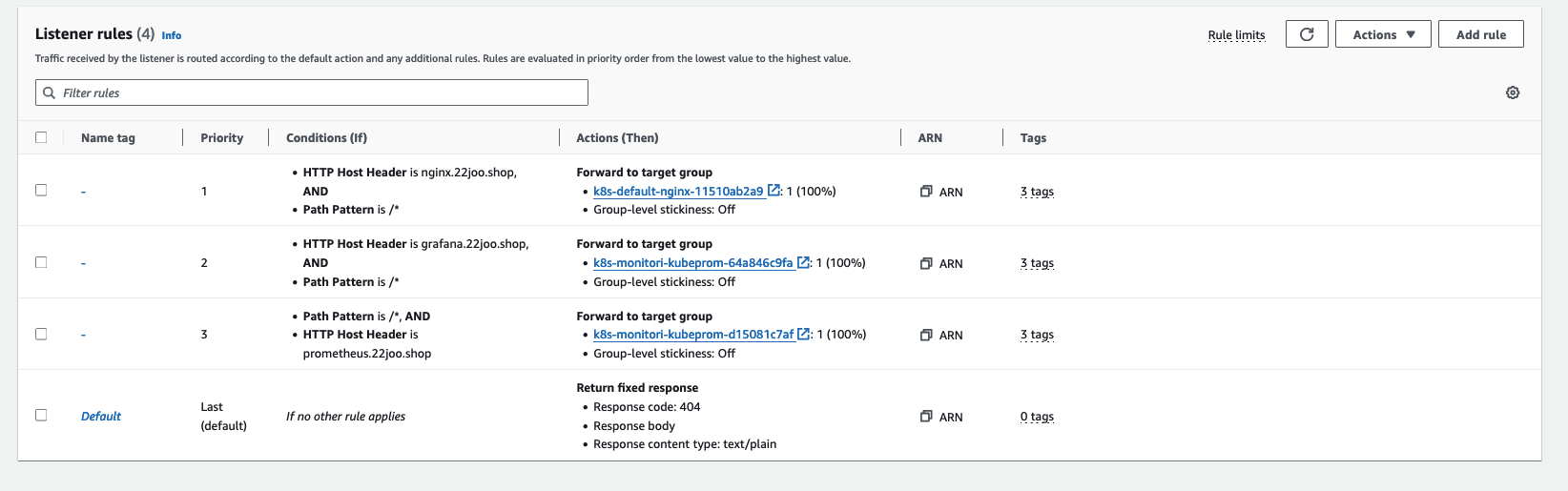

> ingress:

> enabled: true

> ingressClassName: alb

> hosts:

> - prometheus.$MyDomain

> paths:

> - /*

> annotations:

> alb.ingress.kubernetes.io/scheme: internet-facing

> alb.ingress.kubernetes.io/target-type: ip

> alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

> alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

> alb.ingress.kubernetes.io/success-codes: 200-399

> alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

> alb.ingress.kubernetes.io/group.name: study

> alb.ingress.kubernetes.io/ssl-redirect: '443'

>

> grafana:

> defaultDashboardsTimezone: Asia/Seoul

> adminPassword: prom-operator

>

> ingress:

> enabled: true

> ingressClassName: alb

> hosts:

> - grafana.$MyDomain

> paths:

> - /*

> annotations:

> alb.ingress.kubernetes.io/scheme: internet-facing

> alb.ingress.kubernetes.io/target-type: ip

> alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'

> alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN

> alb.ingress.kubernetes.io/success-codes: 200-399

> alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb

> alb.ingress.kubernetes.io/group.name: study

> alb.ingress.kubernetes.io/ssl-redirect: '443'

>

> persistence:

> enabled: true

> type: sts

> storageClassName: "gp3"

> accessModes:

> - ReadWriteOnce

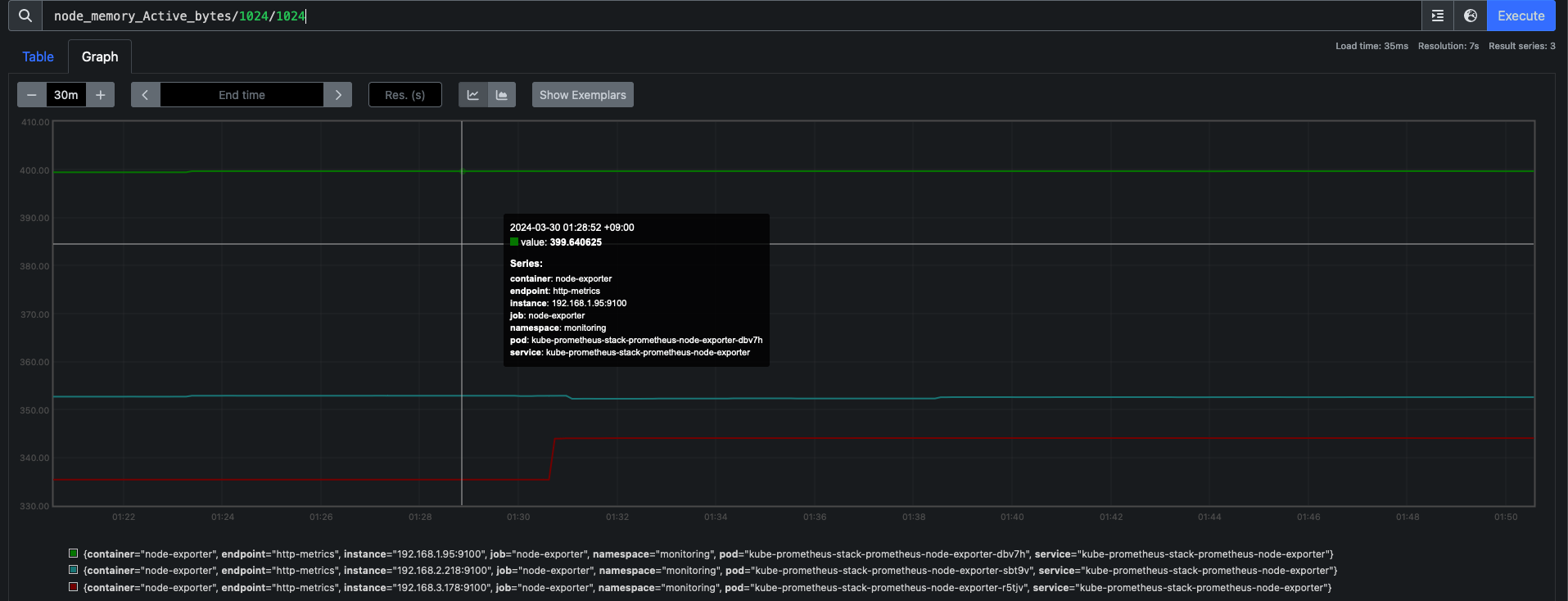

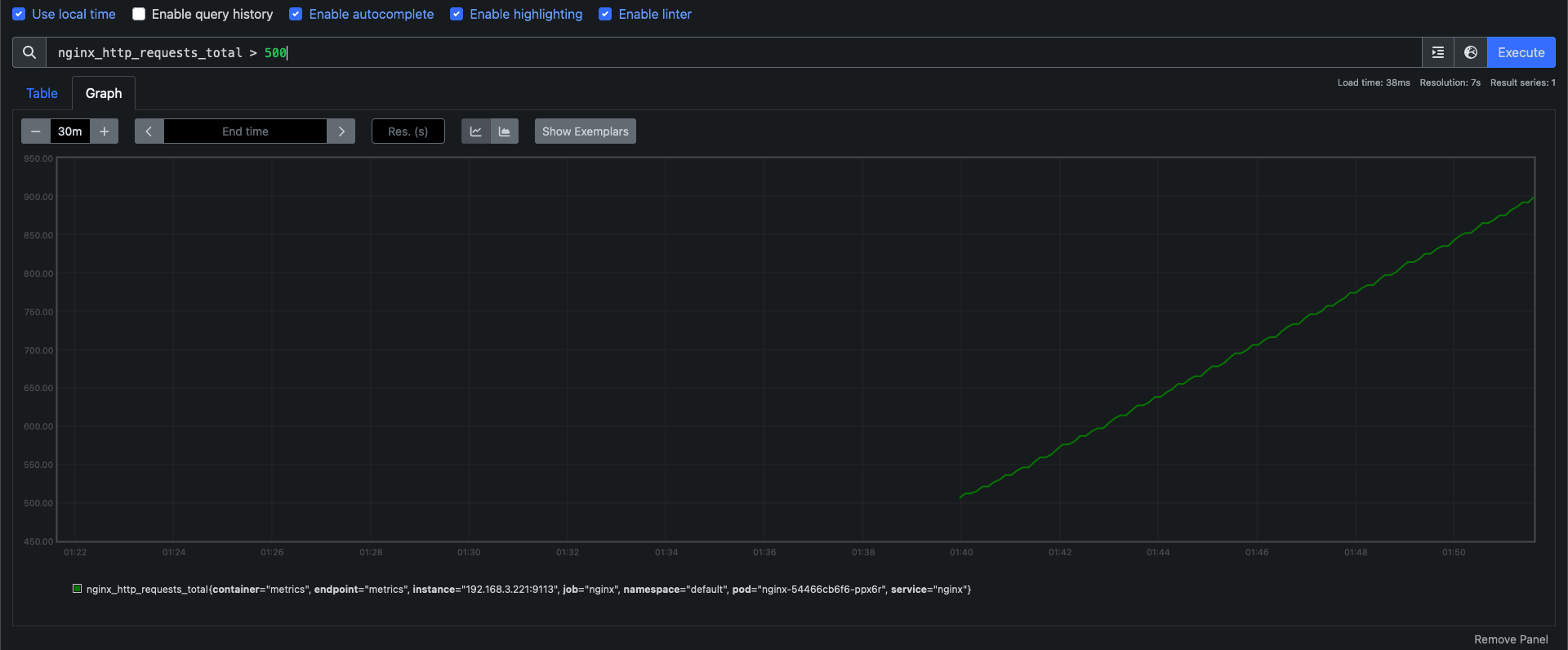

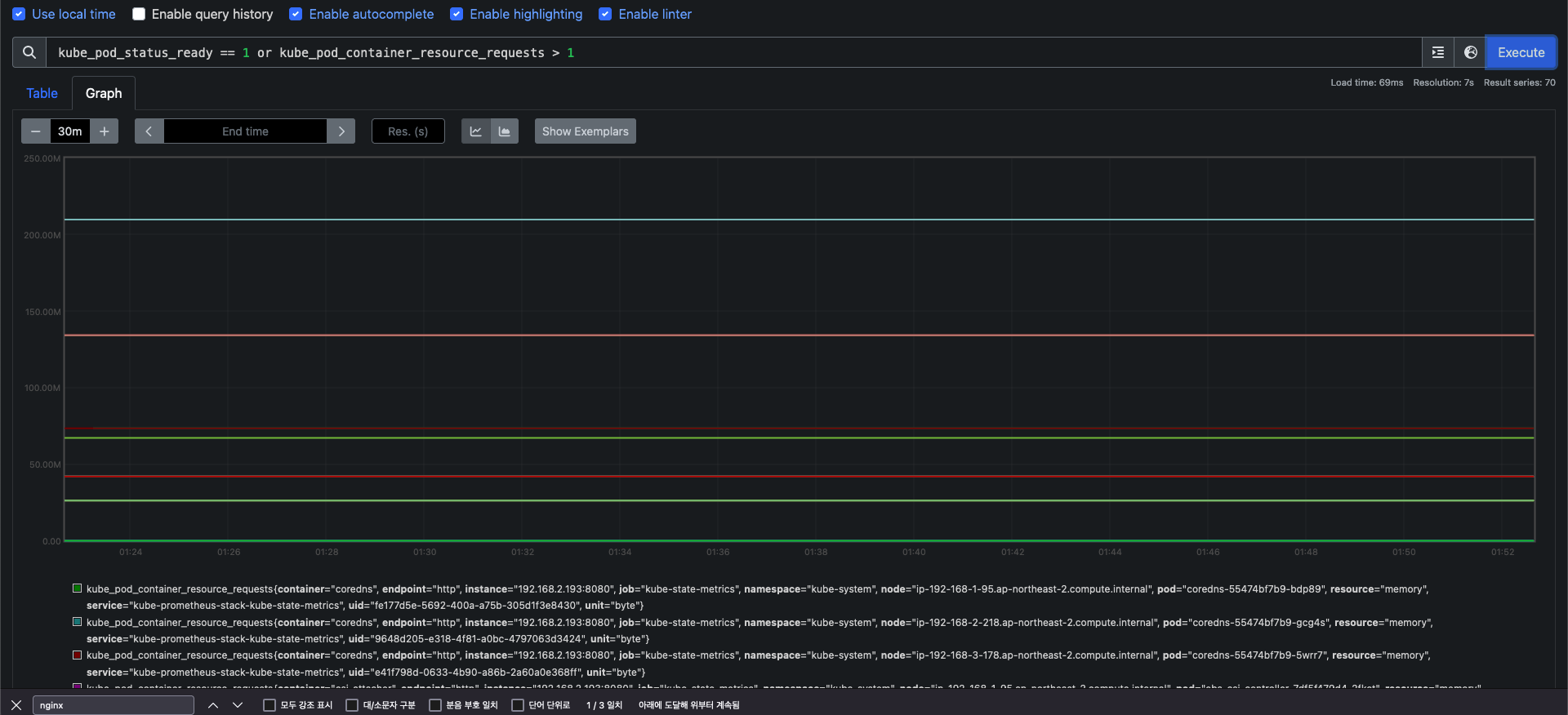

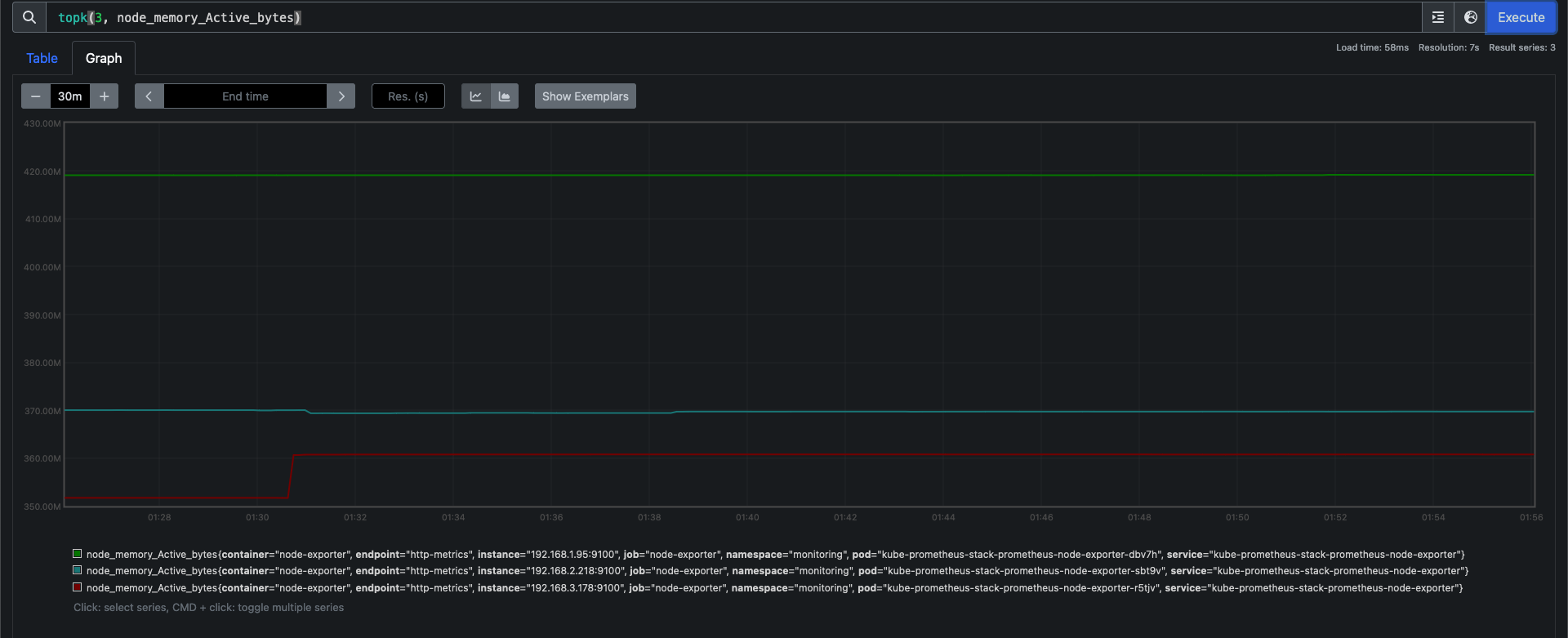

> size: 20Gi