0. Deploying the lab environment

- One-click deployment

# Download the YAML file

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/eks-oneclick4.yaml

# Deploy the CloudFormation stack

Example) aws cloudformation deploy --template-file eks-oneclick4.yaml --stack-name myeks --parameter-overrides KeyName=kp-gasida SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 MyIamUserAccessKeyID=AKIA5... MyIamUserSecretAccessKey='CVNa2...' ClusterBaseName=myeks --region ap-northeast-2

# After the stack deployment completes, print the IP of the working EC2 instance

aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text

# SSH into the working EC2 instance

ssh -i ~/.ssh/kp-gasida.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

or

ssh -i ~/.ssh/kp-gasida.pem root@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

~ password: qwe123

- Basic setup

# Switch to the default namespace

kubectl ns default

# Check node information

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get node --label-columns=node.kubernetes.io/instance-type,eks.amazonaws.com/capacityType,topology.kubernetes.io/zone

NAME STATUS ROLES AGE VERSION INSTANCE-TYPE CAPACITYTYPE ZONE

ip-192-168-1-146.ap-northeast-2.compute.internal Ready <none> 3m4s v1.28.5-eks-5e0fdde t3.medium ON_DEMAND ap-northeast-2a

ip-192-168-2-60.ap-northeast-2.compute.internal Ready <none> 3m9s v1.28.5-eks-5e0fdde t3.medium ON_DEMAND ap-northeast-2b

ip-192-168-3-134.ap-northeast-2.compute.internal Ready <none> 3m5s v1.28.5-eks-5e0fdde t3.medium ON_DEMAND ap-northeast-2c

# Install ExternalDNS

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# MyDomain=22joo.shop

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo "export MyDomain=22joo.shop" >> /etc/profile

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo $MyDomain, $MyDnzHostedZoneId

22joo.shop, /hostedzone/Z07798463AFECYTX1ODP4

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

serviceaccount/external-dns created

clusterrole.rbac.authorization.k8s.io/external-dns created

clusterrolebinding.rbac.authorization.k8s.io/external-dns-viewer created

deployment.apps/external-dns created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get pods -n kube-system | grep external

external-dns-7fd77dcbc-7tx9b 1/1 Running 0 31s

# Install kube-ops-view

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

"geek-cookbook" has been added to your repositories

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system

NAME: kube-ops-view

LAST DEPLOYED: Thu Apr 4 18:30:57 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace kube-system -l "app.kubernetes.io/name=kube-ops-view,app.kubernetes.io/instance=kube-ops-view" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl port-forward $POD_NAME 8080:8080

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'

service/kube-ops-view patched

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"

service/kube-ops-view annotated

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"

Kube Ops View URL = http://kubeopsview.22joo.shop:8080/#scale=1.5

# Install the AWS Load Balancer Controller

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add eks https://aws.github.io/eks-charts

"eks" has been added to your repositories

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "eks" chart repository

...Successfully got an update from the "geek-cookbook" chart repository

Update Complete. ⎈Happy Helming!⎈

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \

> --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

NAME: aws-load-balancer-controller

LAST DEPLOYED: Thu Apr 4 18:31:47 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

AWS Load Balancer controller installed!

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get pods -n kube-system | grep load

aws-load-balancer-controller-5f7b66cdd5-p9tld 1/1 Running 0 37s

aws-load-balancer-controller-5f7b66cdd5-qdmbx 1/1 Running 0 37s

# Create the gp3 storage class

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f https://raw.githubusercontent.com/gasida/PKOS/main/aews/gp3-sc.yaml

storageclass.storage.k8s.io/gp3 created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 18m

gp3 ebs.csi.aws.com Delete WaitForFirstConsumer true 5s

# Look up the node security group ID, then allow all traffic from 192.168.1.100/32

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values=*ng1* --query "SecurityGroups[*].[GroupId]" --output text)

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.100/32

{

"Return": true,

"SecurityGroupRules": [

{

"SecurityGroupRuleId": "sgr-0a7cf7d5ab4ea1b7f",

"GroupId": "sg-0b63afee06b7cd84e",

"GroupOwnerId": "236747833953",

"IsEgress": false,

"IpProtocol": "-1",

"FromPort": -1,

"ToPort": -1,

"CidrIpv4": "192.168.1.100/32"

}

]

}

- Install Prometheus & Grafana (admin/prom-operator)

- Recommended Grafana dashboards: 15757 / 17900 / 15172

# Look up the ACM certificate ARN in the current region

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# CERT_ARN=`aws acm list-certificates --query 'CertificateSummaryList[].CertificateArn[]' --output text`

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo $CERT_ARN

arn:aws:acm:ap-northeast-2:236747833953:certificate/1244562e-aaa2-48df-a178-07ad03ef921d

# Add the Helm repo

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

"prometheus-community" has been added to your repositories

# Create the values file: PV/PVC (AWS EBS) are left out because deleting them afterwards is a hassle

cat <<EOT > monitor-values.yaml
prometheus:
  prometheusSpec:
    podMonitorSelectorNilUsesHelmValues: false
    serviceMonitorSelectorNilUsesHelmValues: false
    retention: 5d
    retentionSize: "10GiB"

  verticalPodAutoscaler:
    enabled: true

  ingress:
    enabled: true
    ingressClassName: alb
    hosts:
      - prometheus.$MyDomain
    paths:
      - /*
    annotations:
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
      alb.ingress.kubernetes.io/group.name: study
      alb.ingress.kubernetes.io/ssl-redirect: '443'

grafana:
  defaultDashboardsTimezone: Asia/Seoul
  adminPassword: prom-operator
  defaultDashboardsEnabled: false

  ingress:
    enabled: true
    ingressClassName: alb
    hosts:
      - grafana.$MyDomain
    paths:
      - /*
    annotations:
      alb.ingress.kubernetes.io/scheme: internet-facing
      alb.ingress.kubernetes.io/target-type: ip
      alb.ingress.kubernetes.io/listen-ports: '[{"HTTPS":443}, {"HTTP":80}]'
      alb.ingress.kubernetes.io/certificate-arn: $CERT_ARN
      alb.ingress.kubernetes.io/success-codes: 200-399
      alb.ingress.kubernetes.io/load-balancer-name: myeks-ingress-alb
      alb.ingress.kubernetes.io/group.name: study
      alb.ingress.kubernetes.io/ssl-redirect: '443'

kube-state-metrics:
  rbac:
    extraRules:
      - apiGroups: ["autoscaling.k8s.io"]
        resources: ["verticalpodautoscalers"]
        verbs: ["list", "watch"]
  prometheus:
    monitor:
      enabled: true
  customResourceState:
    enabled: true
    config:
      kind: CustomResourceStateMetrics
      spec:
        resources:
          - groupVersionKind:
              group: autoscaling.k8s.io
              kind: "VerticalPodAutoscaler"
              version: "v1"
            labelsFromPath:
              verticalpodautoscaler: [metadata, name]
              namespace: [metadata, namespace]
              target_api_version: [apiVersion]
              target_kind: [spec, targetRef, kind]
              target_name: [spec, targetRef, name]
            metrics:
              - name: "vpa_containerrecommendations_target"
                help: "VPA container recommendations for memory."
                each:
                  type: Gauge
                  gauge:
                    path: [status, recommendation, containerRecommendations]
                    valueFrom: [target, memory]
                    labelsFromPath:
                      container: [containerName]
                commonLabels:
                  resource: "memory"
                  unit: "byte"
              - name: "vpa_containerrecommendations_target"
                help: "VPA container recommendations for cpu."
                each:
                  type: Gauge
                  gauge:
                    path: [status, recommendation, containerRecommendations]
                    valueFrom: [target, cpu]
                    labelsFromPath:
                      container: [containerName]
                commonLabels:
                  resource: "cpu"
                  unit: "core"
  selfMonitor:
    enabled: true

alertmanager:
  enabled: false
EOT
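The values file above depends on the shell expanding $MyDomain and $CERT_ARN inside the heredoc, which only happens because the EOT delimiter is unquoted. The following is a minimal sketch of that difference; the domain and ARN values are hypothetical placeholders, not values from this lab.

```shell
# Hypothetical placeholder values; in the lab they are set by the earlier steps.
MyDomain=example.com
CERT_ARN=arn:aws:acm:ap-northeast-2:111122223333:certificate/example

# Unquoted delimiter (EOT): the shell substitutes variables before emitting the text.
render_expanded() {
cat <<EOT
hosts:
  - grafana.$MyDomain
certificate-arn: $CERT_ARN
EOT
}

# Quoted delimiter ('EOT'): the text is emitted literally, variables are NOT expanded.
render_literal() {
cat <<'EOT'
hosts:
  - grafana.$MyDomain
EOT
}

render_expanded
render_literal
```

If either variable is unset when the heredoc runs, the generated file silently contains an empty host or ARN, so running echo $MyDomain $CERT_ARN before generating the file is a cheap sanity check.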

# Deploy

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl create ns monitoring

namespace/monitoring created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm install kube-prometheus-stack prometheus-community/kube-prometheus-stack --version 57.2.0 \

> --set prometheus.prometheusSpec.scrapeInterval='15s' --set prometheus.prometheusSpec.evaluationInterval='15s' \

> -f monitor-values.yaml --namespace monitoring

NAME: kube-prometheus-stack

LAST DEPLOYED: Thu Apr 4 18:37:27 2024

NAMESPACE: monitoring

STATUS: deployed

REVISION: 1

NOTES:

kube-prometheus-stack has been installed. Check its status by running:

kubectl --namespace monitoring get pods -l "release=kube-prometheus-stack"

Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.

# Deploy metrics-server

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get ingress -n monitoring

NAME CLASS HOSTS ADDRESS PORTS AGE

kube-prometheus-stack-grafana alb grafana.22joo.shop myeks-ingress-alb-1771502456.ap-northeast-2.elb.amazonaws.com 80 44s

kube-prometheus-stack-prometheus alb prometheus.22joo.shop myeks-ingress-alb-1771502456.ap-northeast-2.elb.amazonaws.com 80 44s

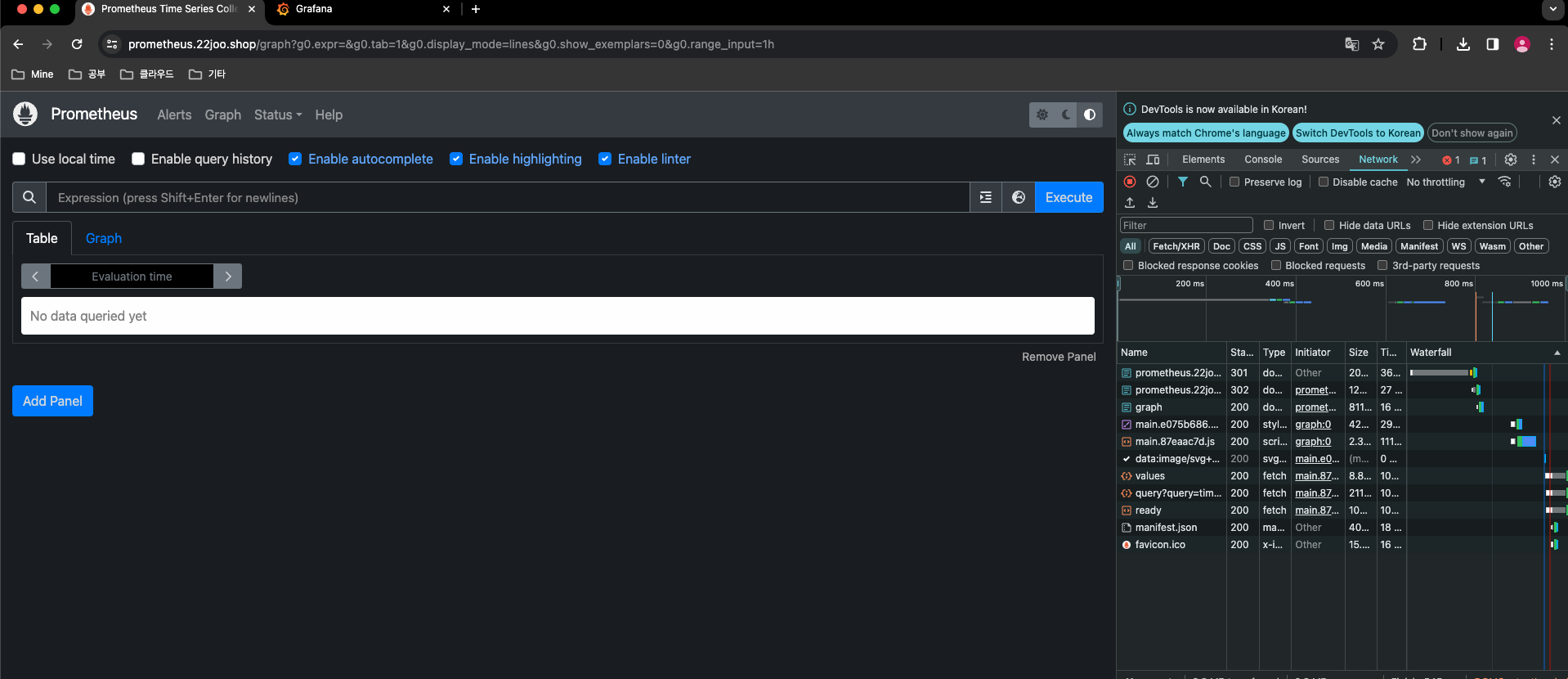

# Open Prometheus in a browser via the ingress domain

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo -e "Prometheus Web URL = https://prometheus.$MyDomain"

Prometheus Web URL = https://prometheus.22joo.shop

# Open Grafana in a browser: default account is admin / prom-operator

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo -e "Grafana Web URL = https://grafana.$MyDomain"

Grafana Web URL = https://grafana.22joo.shop

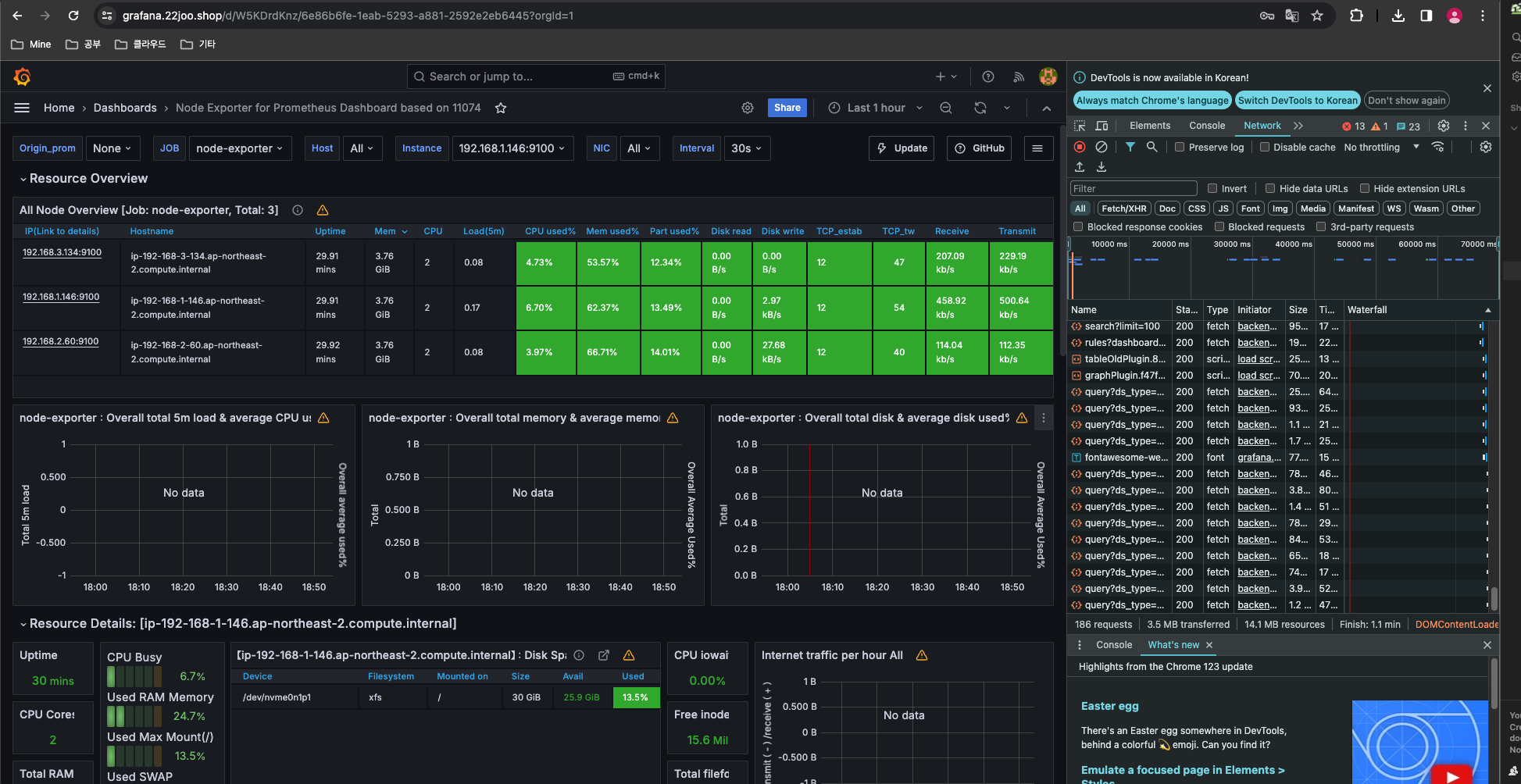

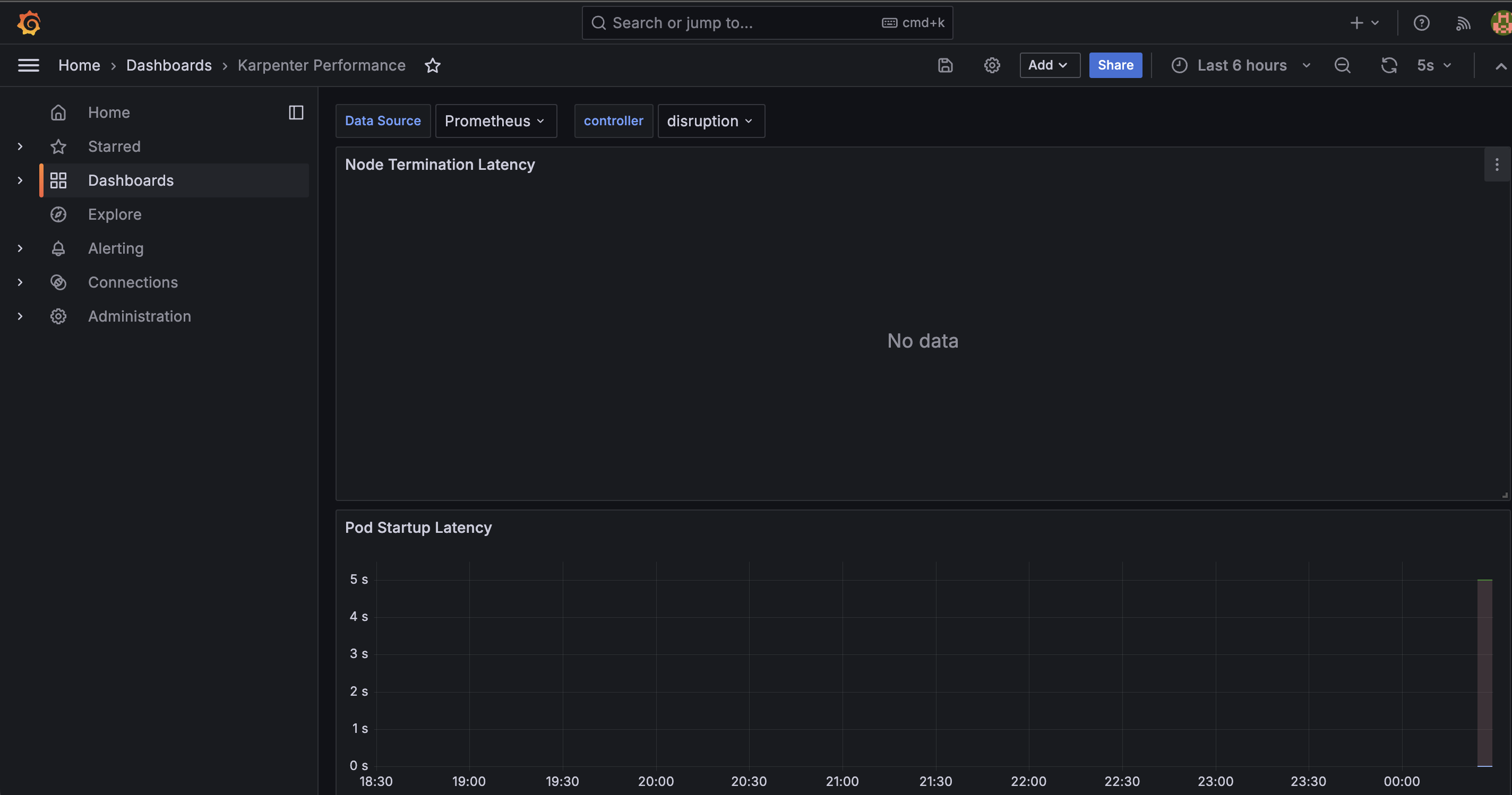

- Add the recommended Grafana dashboards

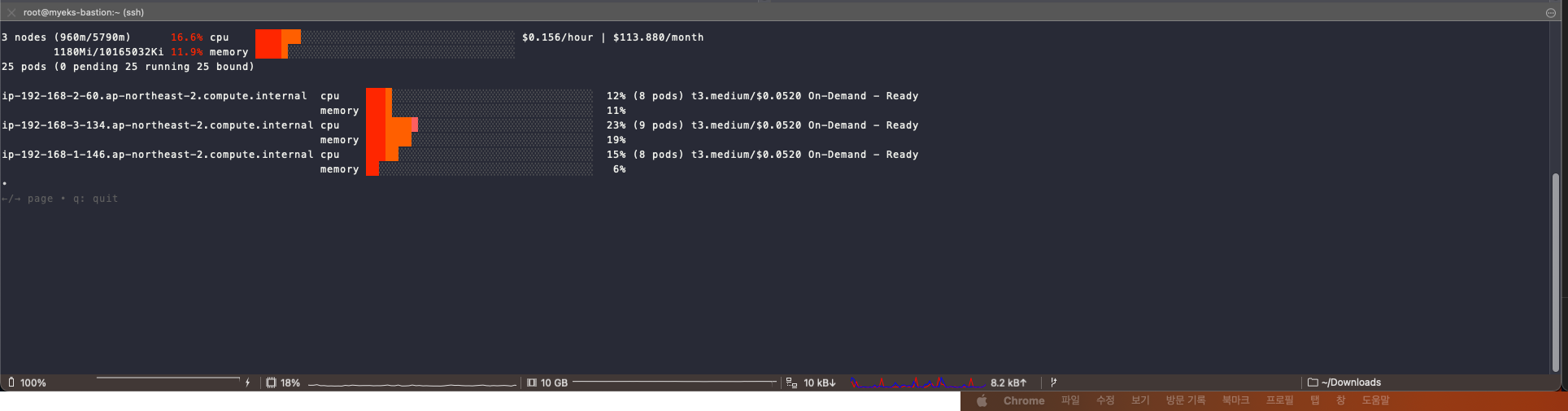

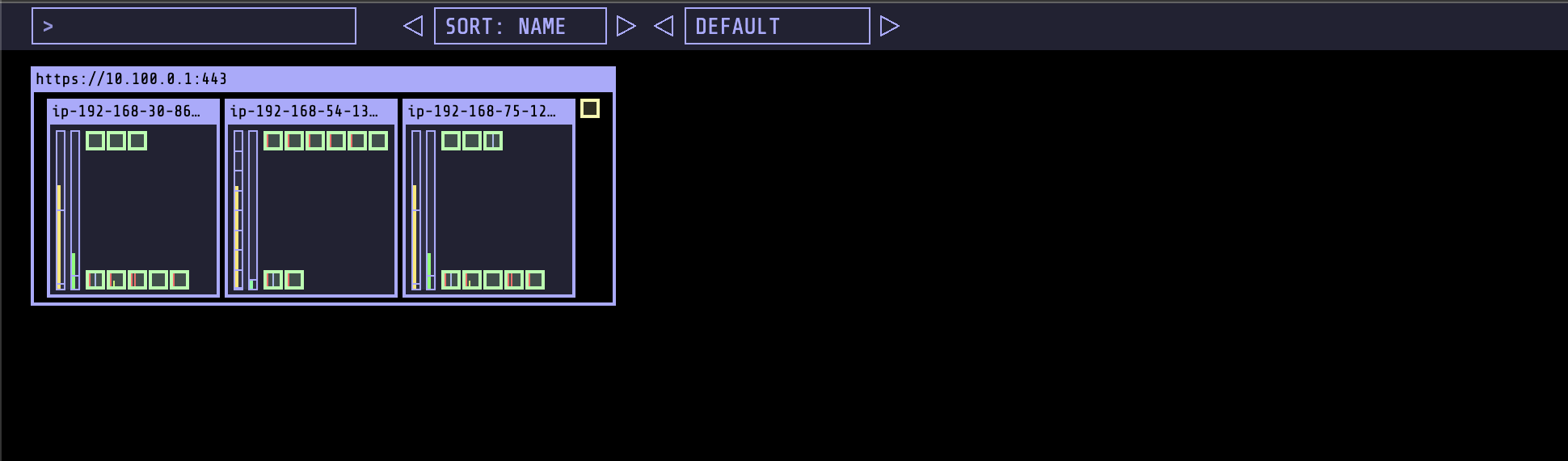

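- Install EKS Node Viewer

- It displays each node's allocatable capacity alongside the resources requested by the pods scheduled on it.

- It does not show the actual usage of the pods.

- Reference: https://github.com/awslabs/eks-node-viewer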
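Since the viewer compares requests with allocatable capacity, the number to read off per node is essentially a ratio of the two. The sketch below shows only that arithmetic with made-up millicore values; it is an illustration, not the tool's actual implementation.

```shell
# request_pct REQUESTED_MILLICORES ALLOCATABLE_MILLICORES
# Prints the requested share of allocatable CPU as an integer percentage (rounded down).
request_pct() {
  echo $(( $1 * 100 / $2 ))
}

# Hypothetical node: 1930m allocatable CPU, pods requesting 350m in total.
echo "cpu requested: $(request_pct 350 1930)%"
```

A node can therefore look nearly full in the viewer while its real CPU usage is low, because only the requests are counted.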

# Install Go

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# wget https://go.dev/dl/go1.22.1.linux-amd64.tar.gz

--2024-04-04 18:41:47-- https://go.dev/dl/go1.22.1.linux-amd64.tar.gz

Resolving go.dev (go.dev)... 216.239.34.21, 216.239.32.21, 216.239.36.21, ...

Connecting to go.dev (go.dev)|216.239.34.21|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://dl.google.com/go/go1.22.1.linux-amd64.tar.gz [following]

--2024-04-04 18:41:48-- https://dl.google.com/go/go1.22.1.linux-amd64.tar.gz

Resolving dl.google.com (dl.google.com)... 142.251.42.206, 2404:6800:4004:827::200e

Connecting to dl.google.com (dl.google.com)|142.251.42.206|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 68965341 (66M) [application/x-gzip]

Saving to: ‘go1.22.1.linux-amd64.tar.gz’

100%[==========================================================================>] 68,965,341 66.2MB/s in 1.0s

2024-04-04 18:41:49 (66.2 MB/s) - ‘go1.22.1.linux-amd64.tar.gz’ saved [68965341/68965341]

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# tar -C /usr/local -xzf go1.22.1.linux-amd64.tar.gz

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# export PATH=$PATH:/usr/local/go/bin

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# go version

go version go1.22.1 linux/amd64

# Install EKS Node Viewer: this takes a little while.

go install github.com/awslabs/eks-node-viewer/cmd/eks-node-viewer@latest

# Verify

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cd ~/go/bin/

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# ls

eks-node-viewer

# [New terminal] Run EKS Node Viewer

## Sample commands

# Standard usage

./eks-node-viewer

# Display both CPU and Memory Usage

./eks-node-viewer --resources cpu,memory

# Karpenter nodes only

./eks-node-viewer --node-selector "karpenter.sh/provisioner-name"

# Display extra labels, i.e. AZ

./eks-node-viewer --extra-labels topology.kubernetes.io/zone

# Specify a particular AWS profile and region

AWS_PROFILE=myprofile AWS_REGION=us-west-2 ./eks-node-viewer

Default options (can be set in the ~/.eks-node-viewer configuration file)

# select only Karpenter managed nodes

node-selector=karpenter.sh/provisioner-name

# display both CPU and memory

resources=cpu,memory

- Open it in a new terminal and check

Overview

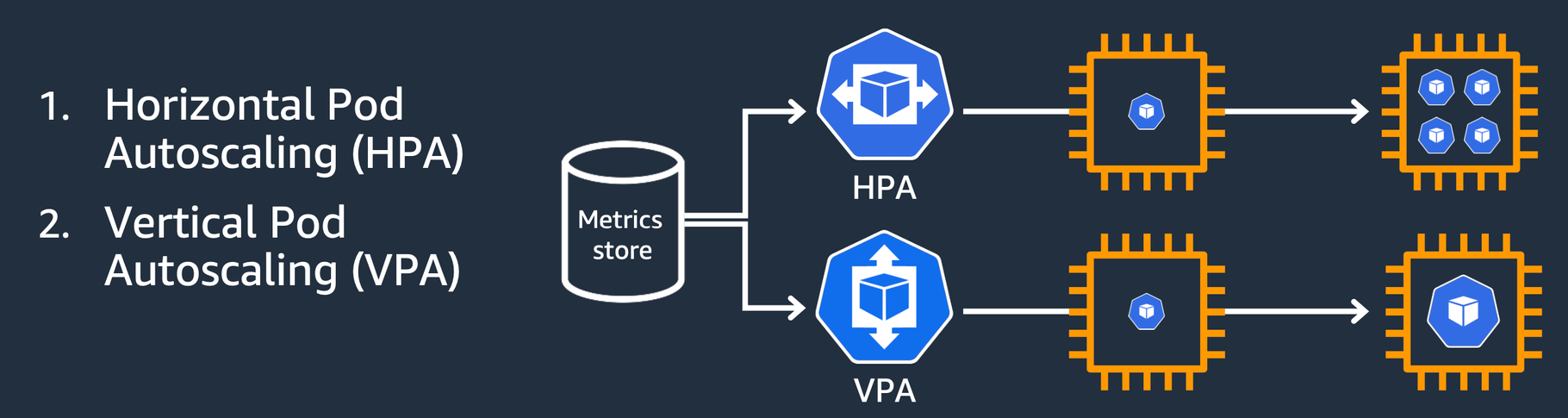

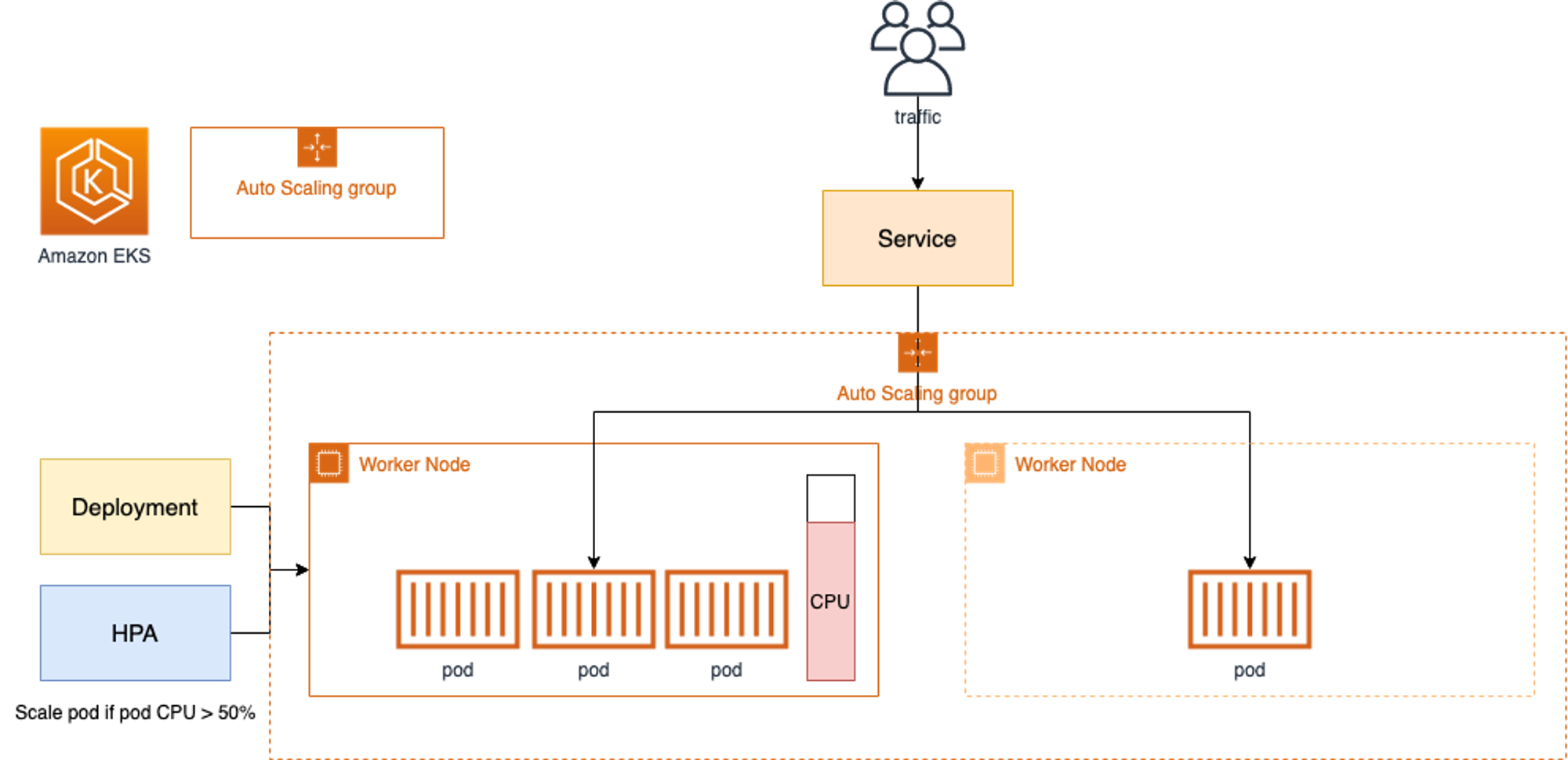

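- HPA & VPA

- HPA: put simply, it increases the number of pods.

- VPA: it raises the resource spec of an individual pod.

- Cluster Autoscaler - advantageous in on-prem environments

- It launches one more EC2 instance (node) so that pods stuck in Pending can be scheduled and run.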

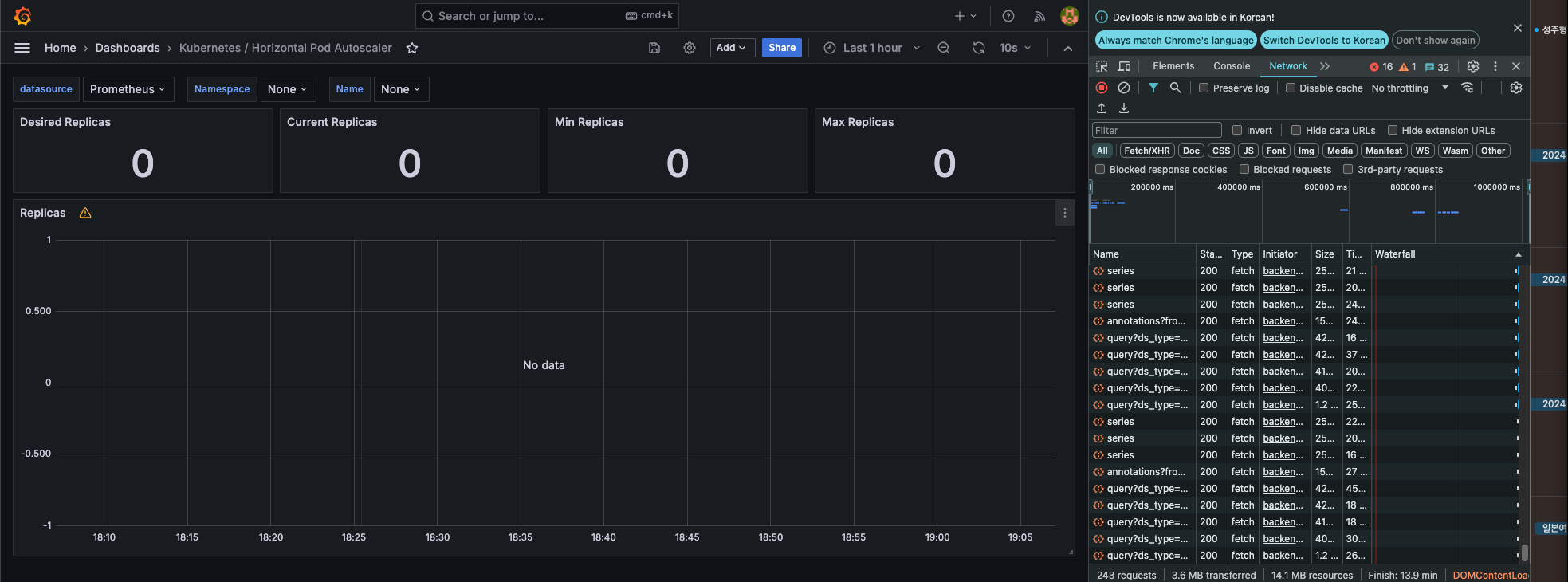

1. HPA - Horizontal Pod Autoscaler

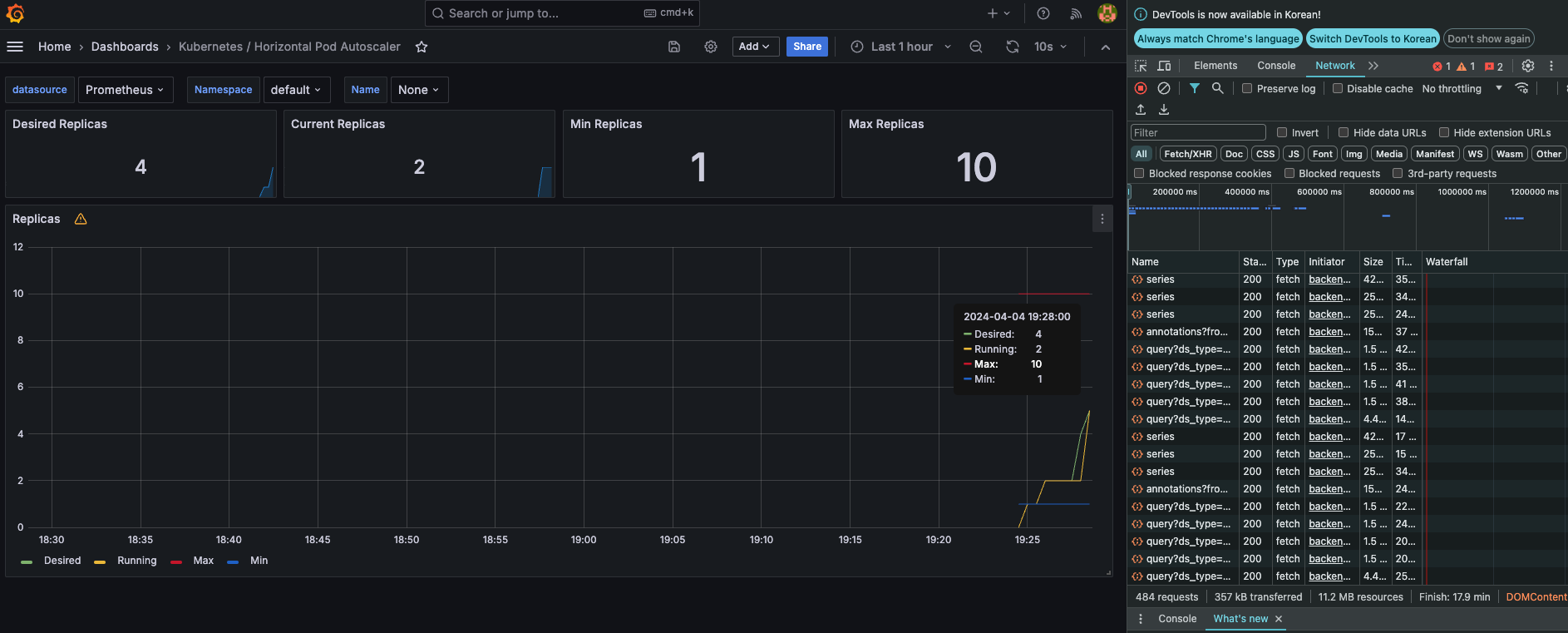

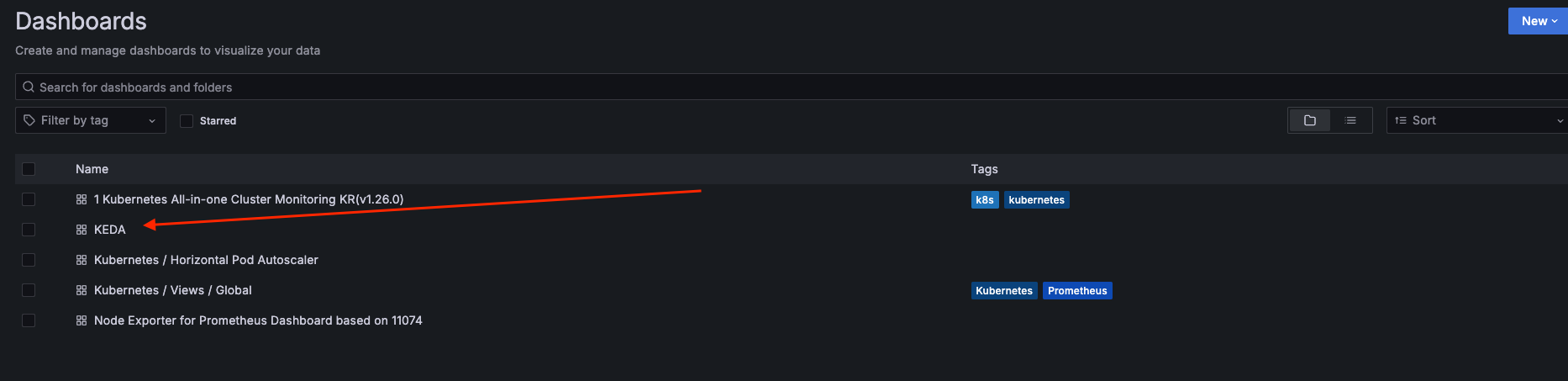

- Add the Grafana dashboard

- Import it from a JSON file.

JSON URL: https://file.notion.so/f/f/a6af158e-5b0f-4e31-9d12-0d0b2805956a/93cfa58d-5c66-4d80-9c4d-50da0563b6ad/17125_rev1.json?id=3db24c0f-8843-4ac7-9edc-91b5a6895dd3&table=block&spaceId=a6af158e-5b0f-4e31-9d12-0d0b2805956a&expirationTimestamp=1712361600000&signature=_zotBai9md1wOQ1GzSsyeWFPmFzPxbujFFccCP7DL3Q&downloadName=17125_rev1.json

- Deploy the sample Apache application

- PHP-Apache

- It performs a CPU-intensive computation.

- PHP-Apache

curl -s -O https://raw.githubusercontent.com/kubernetes/website/main/content/en/examples/application/php-apache.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
spec:
  selector:
    matchLabels:
      run: php-apache
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
      - name: php-apache
        image: registry.k8s.io/hpa-example
        ports:
        - containerPort: 80
        resources:
          limits:
            cpu: 500m
          requests:
            cpu: 200m
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  labels:
    run: php-apache
spec:
  ports:
  - port: 80
  selector:
    run: php-apache

# Deploy

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# kubectl apply -f php-apache.yaml

deployment.apps/php-apache created

service/php-apache created

# Verify

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# kubectl exec -it deploy/php-apache -- cat /var/www/html/index.php

<?php

$x = 0.0001;

for ($i = 0; $i <= 1000000; $i++) {

$x += sqrt($x);

}

echo "OK!";

?>

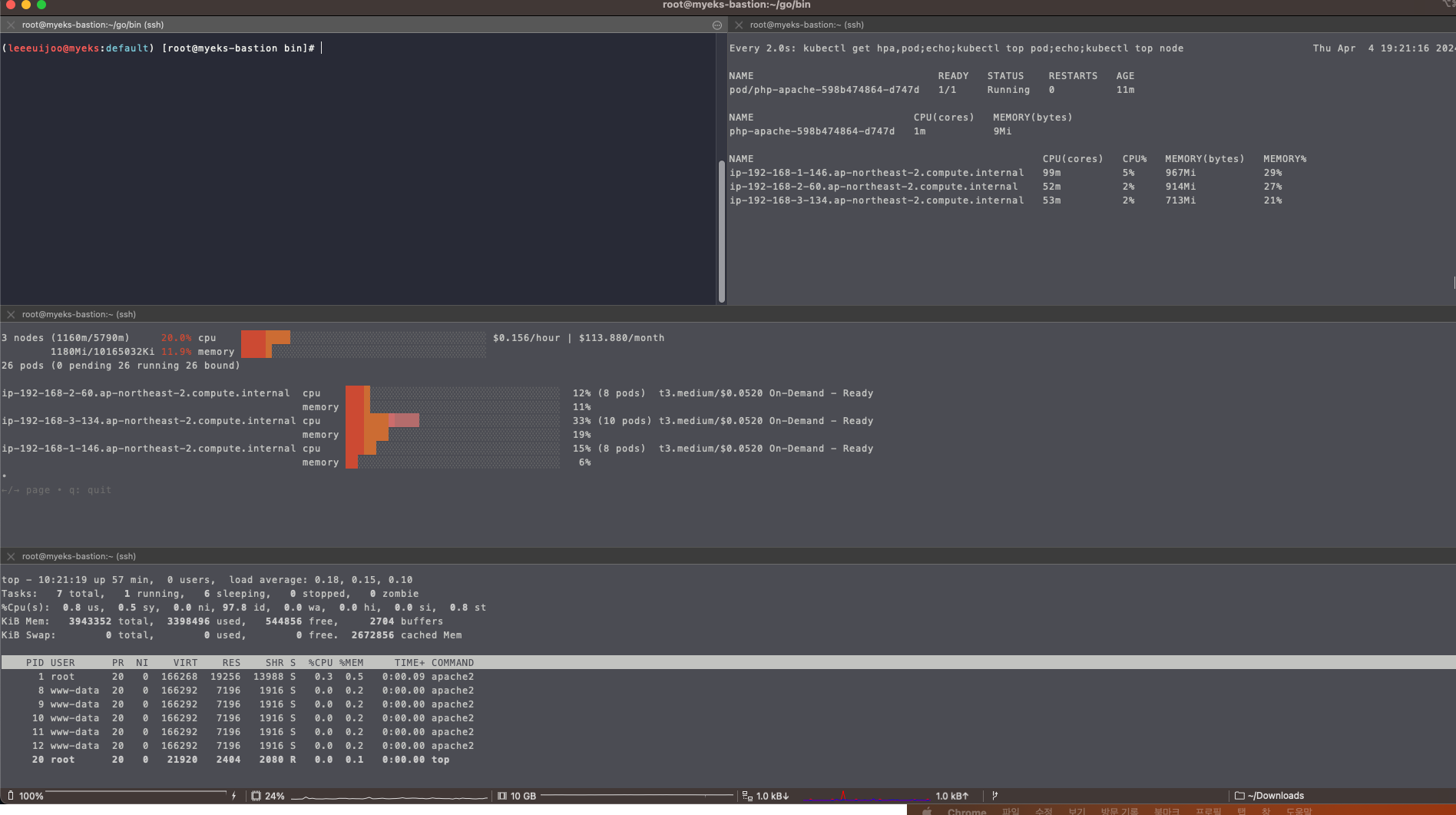

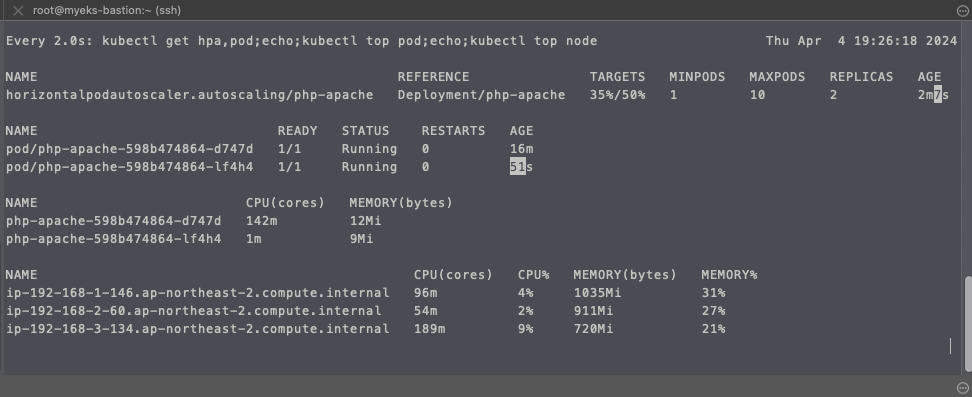

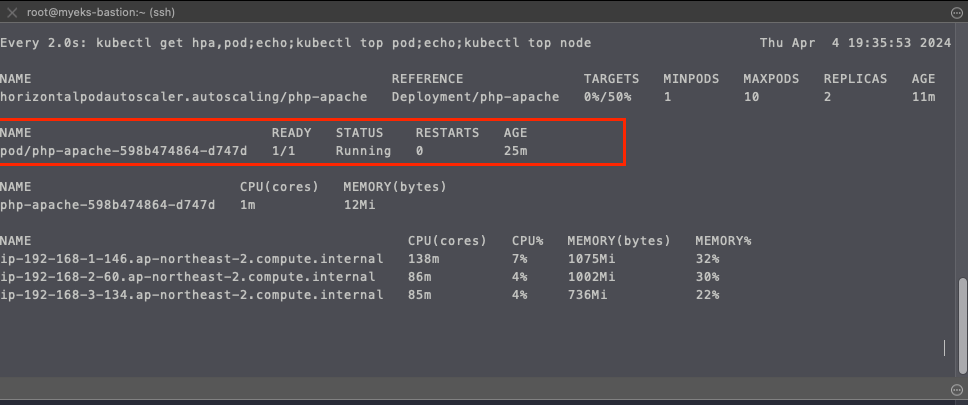

# Monitoring: use two terminals

watch -d 'kubectl get hpa,pod;echo;kubectl top pod;echo;kubectl top node'

kubectl exec -it deploy/php-apache -- top

# Send a request to the pod

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# PODIP=$(kubectl get pod -l run=php-apache -o jsonpath={.items[0].status.podIP})

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# curl -s $PODIP; echo

OK!

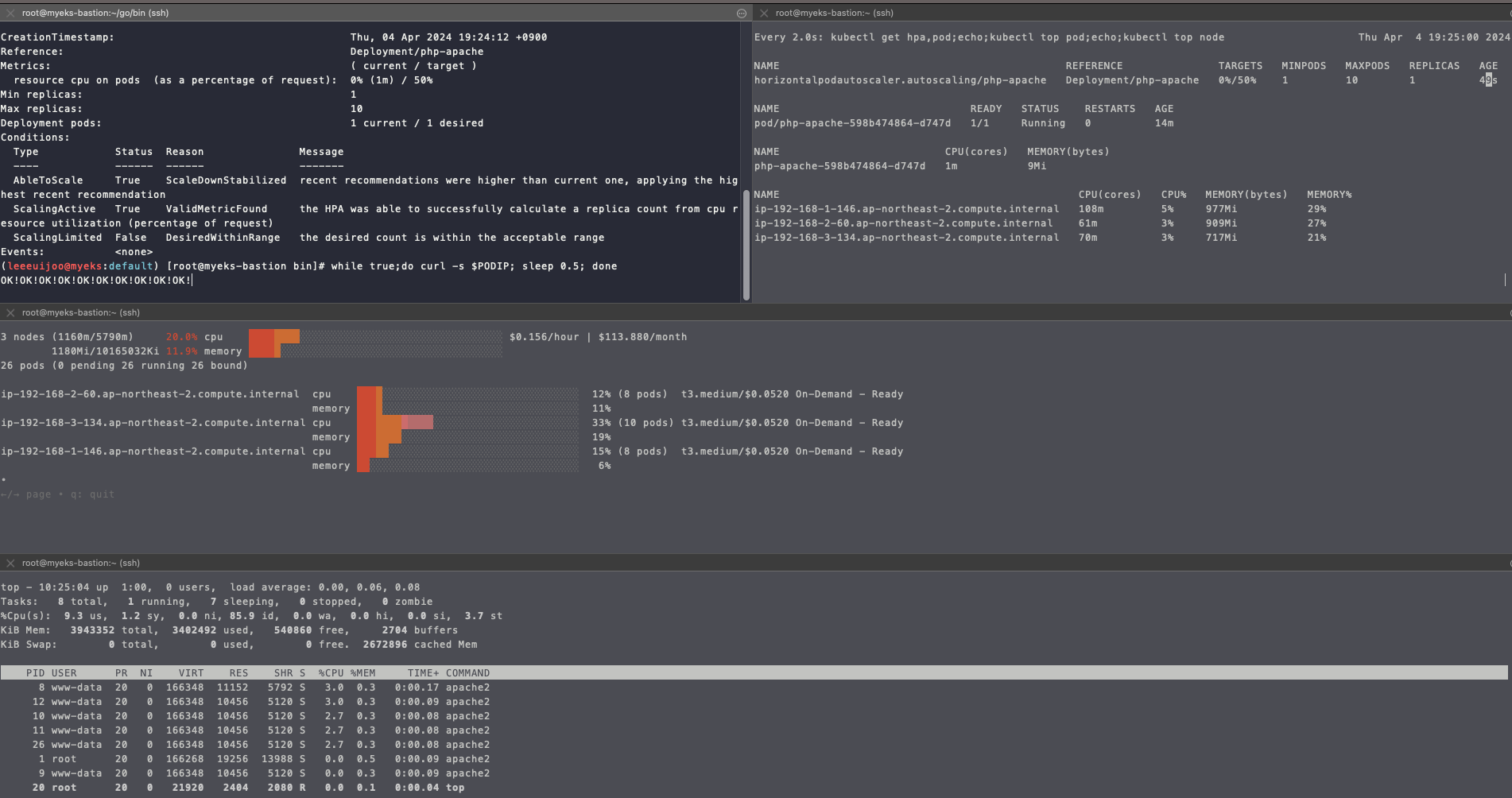

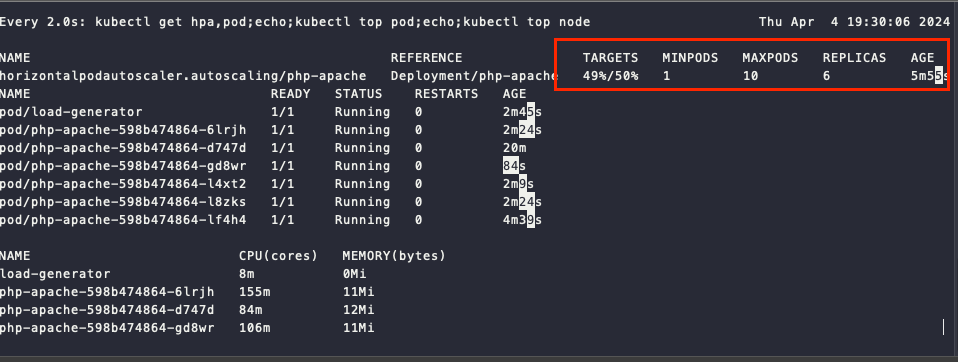

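- Create an HPA, generate load, and test autoscaling: default wait before scaling out (30 seconds), default wait before scaling in (5 minutes) → both adjustable

- The pods autoscale between 1 and 10 replicas.

- To inspect the HPA's policy: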

kubectl describe hpa

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=10

horizontalpodautoscaler.autoscaling/php-apache autoscaled
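The wait times mentioned above can be tuned through the behavior field of an autoscaling/v2 HPA. The sketch below prints a manifest roughly equivalent to the kubectl autoscale command, with an explicit behavior section added; the window values are illustrative assumptions, not settings used in this lab.

```shell
# Prints an autoscaling/v2 HPA manifest for php-apache with explicit scaling behavior.
# The stabilization windows below are example values chosen for illustration.
hpa_manifest() {
cat <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: php-apache
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50
  behavior:
    scaleUp:
      stabilizationWindowSeconds: 0    # react to rising load immediately
    scaleDown:
      stabilizationWindowSeconds: 60   # wait 1 minute instead of the default 300 seconds
EOF
}

hpa_manifest
# To apply it to a cluster: hpa_manifest | kubectl apply -f -
```

A shorter scale-down window makes the test finish faster, at the cost of more replica churn when load fluctuates.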

# Repeated requests 1 (to the IP of pod 1) >> stop once the increase is confirmed

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# while true;do curl -s $PODIP; sleep 0.5; done

# Check the HPA configuration

kubectl get hpa php-apache -o yaml | kubectl neat | yh

spec:
  minReplicas: 1               # [4] or scale in to as few as 1 pod
  maxReplicas: 10              # [3] scale out to at most 10 pods
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache           # [1] based on the resource usage of php-apache
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50  # [2] when CPU utilization is 50% or higher
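The replica count the HPA aims for follows the formula from the Kubernetes documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), clamped to the min/max range. A minimal sketch of that arithmetic follows; the function name and the sample utilization values are hypothetical, and the real controller additionally applies a tolerance and ignores not-ready pods, which this sketch omits.

```shell
# desired_replicas CURRENT_REPLICAS CURRENT_UTIL_PCT TARGET_UTIL_PCT MIN MAX
# Computes ceil(current * currentUtil / targetUtil) and clamps it to [MIN, MAX].
desired_replicas() {
  d=$(( ($1 * $2 + $3 - 1) / $3 ))   # integer ceiling division
  if [ "$d" -lt "$4" ]; then d=$4; fi
  if [ "$d" -gt "$5" ]; then d=$5; fi
  echo "$d"
}

desired_replicas 1 250 50 1 10    # prints 5
desired_replicas 5 130 50 1 10    # prints 10 (13 clamped to maxReplicas)
desired_replicas 10 5 50 1 10     # prints 1
```

Utilization is measured against the container's CPU request (200m here), so with a 500m limit a single pod tops out at roughly 250%, which is why the first step up from one replica is at most about 5.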

- Pods are created

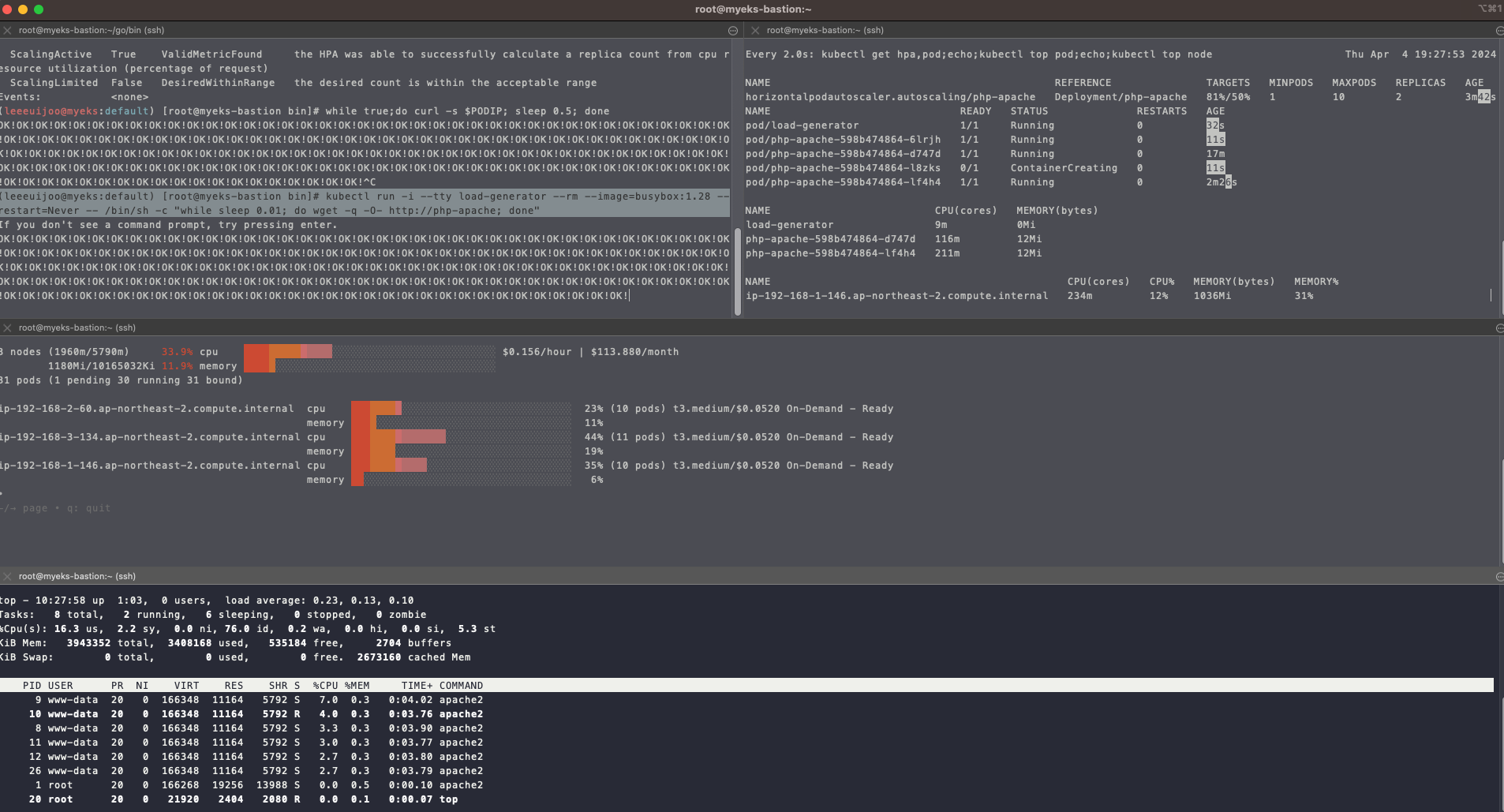

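- Repeated requests 2 (to the service name) >> confirm the increase (how many pods does it reach, and why?), then stop >> about 5 minutes after stopping, confirm that the pod count decreases

- Generate load faster by using a shorter sleep interval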

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# kubectl run -i --tty load-generator --rm --image=busybox:1.28 --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"

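- The target average utilization is set to 50%; once enough pods are running that the average falls back to that target, no further scaling takes place.

- After stopping the load, watch the graph: scale-in takes about 5 minutes.

- The deployment scales back in to 1 pod.

- Delete the objects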

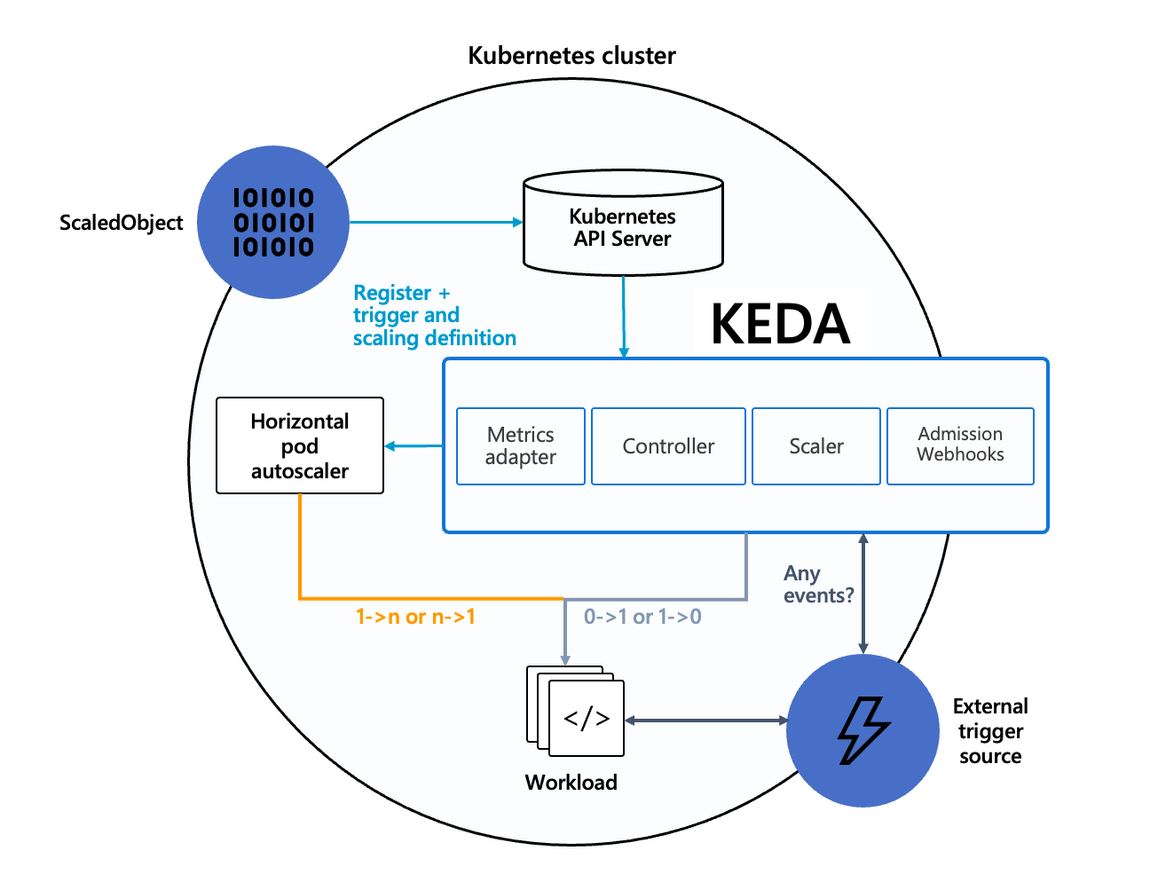

kubectl delete deploy,svc,hpa,pod --all

2. KEDA - Kubernetes based Event Driven Autoscaler

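- A limitation of HPA: out of the box it autoscales only on CPU and memory.

- That limits its use cases, which is why KEDA emerged.

- The conventional HPA (Horizontal Pod Autoscaler) decides whether to scale based on resource (CPU, memory) metrics.

- KEDA, by contrast, can decide whether to scale based on specific events.

- For example, Airflow's metadata DB reveals how many tasks are currently running or queued.

- Using such events to size the workers lets them scale out faster when many tasks are added to the queue.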
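In KEDA, the scaling rule is declared as a ScaledObject that points at a workload and lists one or more triggers. The sketch below prints a minimal ScaledObject using the cron trigger; the object name, the target Deployment name (airflow-worker), the schedule, and the replica counts are all hypothetical, and KEDA must already be installed in the cluster for the manifest to be accepted.

```shell
# Prints a minimal KEDA ScaledObject with a cron trigger.
# Names, schedule, and replica counts are illustrative assumptions.
keda_scaledobject() {
cat <<'EOF'
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: worker-cron-scaled        # hypothetical name
spec:
  scaleTargetRef:
    name: airflow-worker          # hypothetical Deployment to scale
  minReplicaCount: 0
  maxReplicaCount: 3
  triggers:
  - type: cron
    metadata:
      timezone: Asia/Seoul
      start: 0 9 * * *            # scale out at 09:00
      end: 0 18 * * *             # scale back at 18:00
      desiredReplicas: "3"
EOF
}

keda_scaledobject
# To apply it to a cluster: keda_scaledobject | kubectl apply -f -
```

KEDA creates and manages an HPA named keda-hpa-<ScaledObject name> behind the scenes, which is the object the dashboard queries below filter on.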

- KEDA dashboard JSON

{

"annotations": {

"list": [

{

"builtIn": 1,

"datasource": {

"type": "grafana",

"uid": "-- Grafana --"

},

"enable": true,

"hide": true,

"iconColor": "rgba(0, 211, 255, 1)",

"name": "Annotations & Alerts",

"target": {

"limit": 100,

"matchAny": false,

"tags": [],

"type": "dashboard"

},

"type": "dashboard"

}

]

},

"description": "Visualize metrics provided by KEDA",

"editable": true,

"fiscalYearStartMonth": 0,

"graphTooltip": 0,

"id": 1653,

"links": [],

"liveNow": false,

"panels": [

{

"collapsed": false,

"gridPos": {

"h": 1,

"w": 24,

"x": 0,

"y": 0

},

"id": 8,

"panels": [],

"title": "Metric Server",

"type": "row"

},

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"description": "The total number of errors encountered for all scalers.",

"fieldConfig": {

"defaults": {

"color": {

"mode": "palette-classic"

},

"custom": {

"axisCenteredZero": false,

"axisColorMode": "text",

"axisLabel": "",

"axisPlacement": "auto",

"barAlignment": 0,

"drawStyle": "line",

"fillOpacity": 25,

"gradientMode": "opacity",

"hideFrom": {

"legend": false,

"tooltip": false,

"viz": false

},

"lineInterpolation": "linear",

"lineWidth": 2,

"pointSize": 5,

"scaleDistribution": {

"type": "linear"

},

"showPoints": "never",

"spanNulls": true,

"stacking": {

"group": "A",

"mode": "none"

},

"thresholdsStyle": {

"mode": "off"

}

},

"mappings": [],

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

},

{

"color": "red",

"value": 80

}

]

},

"unit": "Errors/sec"

},

"overrides": [

{

"matcher": {

"id": "byName",

"options": "http-demo"

},

"properties": [

{

"id": "color",

"value": {

"fixedColor": "red",

"mode": "fixed"

}

}

]

},

{

"matcher": {

"id": "byName",

"options": "scaledObject"

},

"properties": [

{

"id": "color",

"value": {

"fixedColor": "red",

"mode": "fixed"

}

}

]

},

{

"matcher": {

"id": "byName",

"options": "keda-system/keda-operator-metrics-apiserver"

},

"properties": [

{

"id": "color",

"value": {

"fixedColor": "red",

"mode": "fixed"

}

}

]

}

]

},

"gridPos": {

"h": 9,

"w": 8,

"x": 0,

"y": 1

},

"id": 4,

"options": {

"legend": {

"calcs": [],

"displayMode": "list",

"placement": "bottom",

"showLegend": true

},

"tooltip": {

"mode": "single",

"sort": "none"

}

},

"targets": [

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"editorMode": "code",

"expr": "sum by(job) (rate(keda_scaler_errors{}[5m]))",

"legendFormat": "{{ job }}",

"range": true,

"refId": "A"

}

],

"title": "Scaler Total Errors",

"type": "timeseries"

},

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"description": "The number of errors that have occurred for each scaler.",

"fieldConfig": {

"defaults": {

"color": {

"mode": "palette-classic"

},

"custom": {

"axisCenteredZero": false,

"axisColorMode": "text",

"axisLabel": "",

"axisPlacement": "auto",

"barAlignment": 0,

"drawStyle": "line",

"fillOpacity": 25,

"gradientMode": "opacity",

"hideFrom": {

"legend": false,

"tooltip": false,

"viz": false

},

"lineInterpolation": "linear",

"lineWidth": 2,

"pointSize": 5,

"scaleDistribution": {

"type": "linear"

},

"showPoints": "never",

"spanNulls": true,

"stacking": {

"group": "A",

"mode": "none"

},

"thresholdsStyle": {

"mode": "off"

}

},

"mappings": [],

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

},

{

"color": "red",

"value": 80

}

]

},

"unit": "Errors/sec"

},

"overrides": [

{

"matcher": {

"id": "byName",

"options": "http-demo"

},

"properties": [

{

"id": "color",

"value": {

"fixedColor": "red",

"mode": "fixed"

}

}

]

},

{

"matcher": {

"id": "byName",

"options": "scaler"

},

"properties": [

{

"id": "color",

"value": {

"fixedColor": "red",

"mode": "fixed"

}

}

]

},

{

"matcher": {

"id": "byName",

"options": "prometheusScaler"

},

"properties": [

{

"id": "color",

"value": {

"fixedColor": "red",

"mode": "fixed"

}

}

]

}

]

},

"gridPos": {

"h": 9,

"w": 8,

"x": 8,

"y": 1

},

"id": 3,

"options": {

"legend": {

"calcs": [],

"displayMode": "list",

"placement": "bottom",

"showLegend": true

},

"tooltip": {

"mode": "single",

"sort": "none"

}

},

"targets": [

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"editorMode": "code",

"expr": "sum by(scaler) (rate(keda_scaler_errors{exported_namespace=~\"$namespace\", scaledObject=~\"$scaledObject\", scaler=~\"$scaler\"}[5m]))",

"legendFormat": "{{ scaler }}",

"range": true,

"refId": "A"

}

],

"title": "Scaler Errors",

"type": "timeseries"

},

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"description": "The number of errors that have occurred for each scaled object.",

"fieldConfig": {

"defaults": {

"color": {

"mode": "palette-classic"

},

"custom": {

"axisCenteredZero": false,

"axisColorMode": "text",

"axisLabel": "",

"axisPlacement": "auto",

"barAlignment": 0,

"drawStyle": "line",

"fillOpacity": 25,

"gradientMode": "opacity",

"hideFrom": {

"legend": false,

"tooltip": false,

"viz": false

},

"lineInterpolation": "linear",

"lineWidth": 2,

"pointSize": 5,

"scaleDistribution": {

"type": "linear"

},

"showPoints": "never",

"spanNulls": true,

"stacking": {

"group": "A",

"mode": "none"

},

"thresholdsStyle": {

"mode": "off"

}

},

"mappings": [],

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

},

{

"color": "red",

"value": 80

}

]

},

"unit": "Errors/sec"

},

"overrides": [

{

"matcher": {

"id": "byName",

"options": "http-demo"

},

"properties": [

{

"id": "color",

"value": {

"fixedColor": "red",

"mode": "fixed"

}

}

]

}

]

},

"gridPos": {

"h": 9,

"w": 8,

"x": 16,

"y": 1

},

"id": 2,

"options": {

"legend": {

"calcs": [],

"displayMode": "list",

"placement": "bottom",

"showLegend": true

},

"tooltip": {

"mode": "single",

"sort": "none"

}

},

"targets": [

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"editorMode": "code",

"expr": "sum by(scaledObject) (rate(keda_scaled_object_errors{exported_namespace=~\"$namespace\", scaledObject=~\"$scaledObject\"}[5m]))",

"legendFormat": "{{ scaledObject }}",

"range": true,

"refId": "A"

}

],

"title": "Scaled Object Errors",

"type": "timeseries"

},

{

"collapsed": false,

"gridPos": {

"h": 1,

"w": 24,

"x": 0,

"y": 10

},

"id": 10,

"panels": [],

"title": "Scale Target",

"type": "row"

},

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"description": "The current value for each scaler’s metric that would be used by the HPA in computing the target average.",

"fieldConfig": {

"defaults": {

"color": {

"mode": "palette-classic"

},

"custom": {

"axisCenteredZero": false,

"axisColorMode": "text",

"axisLabel": "",

"axisPlacement": "auto",

"barAlignment": 0,

"drawStyle": "line",

"fillOpacity": 25,

"gradientMode": "opacity",

"hideFrom": {

"legend": false,

"tooltip": false,

"viz": false

},

"lineInterpolation": "linear",

"lineWidth": 2,

"pointSize": 5,

"scaleDistribution": {

"type": "linear"

},

"showPoints": "never",

"spanNulls": true,

"stacking": {

"group": "A",

"mode": "none"

},

"thresholdsStyle": {

"mode": "off"

}

},

"mappings": [],

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

},

{

"color": "red",

"value": 80

}

]

},

"unit": "none"

},

"overrides": [

{

"matcher": {

"id": "byName",

"options": "http-demo"

},

"properties": [

{

"id": "color",

"value": {

"fixedColor": "blue",

"mode": "fixed"

}

}

]

}

]

},

"gridPos": {

"h": 9,

"w": 24,

"x": 0,

"y": 11

},

"id": 5,

"options": {

"legend": {

"calcs": [],

"displayMode": "list",

"placement": "bottom",

"showLegend": true

},

"tooltip": {

"mode": "single",

"sort": "none"

}

},

"targets": [

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"editorMode": "code",

"expr": "sum by(metric) (keda_scaler_metrics_value{exported_namespace=~\"$namespace\", metric=~\"$metric\", scaledObject=\"$scaledObject\"})",

"legendFormat": "{{ metric }}",

"range": true,

"refId": "A"

}

],

"title": "Scaler Metric Value",

"type": "timeseries"

},

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"description": "shows current replicas against max ones based on time difference",

"fieldConfig": {

"defaults": {

"color": {

"mode": "palette-classic"

},

"custom": {

"axisCenteredZero": false,

"axisColorMode": "text",

"axisLabel": "",

"axisPlacement": "auto",

"barAlignment": 0,

"drawStyle": "line",

"fillOpacity": 21,

"gradientMode": "opacity",

"hideFrom": {

"legend": false,

"tooltip": false,

"viz": false

},

"lineInterpolation": "linear",

"lineStyle": {

"fill": "solid"

},

"lineWidth": 1,

"pointSize": 5,

"scaleDistribution": {

"type": "linear"

},

"showPoints": "auto",

"spanNulls": false,

"stacking": {

"group": "A",

"mode": "none"

},

"thresholdsStyle": {

"mode": "off"

}

},

"mappings": [],

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

}

]

},

"unit": "short"

},

"overrides": []

},

"gridPos": {

"h": 8,

"w": 24,

"x": 0,

"y": 20

},

"id": 13,

"options": {

"legend": {

"calcs": [],

"displayMode": "list",

"placement": "bottom",

"showLegend": true

},

"tooltip": {

"mode": "single",

"sort": "none"

}

},

"targets": [

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"editorMode": "code",

"exemplar": false,

"expr": "kube_horizontalpodautoscaler_status_current_replicas{namespace=\"$namespace\",horizontalpodautoscaler=\"keda-hpa-$scaledObject\"}",

"format": "time_series",

"instant": false,

"interval": "",

"legendFormat": "current_replicas",

"range": true,

"refId": "A"

},

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"editorMode": "code",

"exemplar": false,

"expr": "kube_horizontalpodautoscaler_spec_max_replicas{namespace=\"$namespace\",horizontalpodautoscaler=\"keda-hpa-$scaledObject\"}",

"format": "time_series",

"hide": false,

"instant": false,

"legendFormat": "max_replicas",

"range": true,

"refId": "B"

}

],

"title": "Current/max replicas (time based)",

"type": "timeseries"

},

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"description": "shows current replicas against max ones based on time difference",

"fieldConfig": {

"defaults": {

"color": {

"mode": "continuous-GrYlRd"

},

"custom": {

"fillOpacity": 70,

"lineWidth": 0,

"spanNulls": false

},

"mappings": [

{

"options": {

"0": {

"color": "green",

"index": 0,

"text": "No scaling"

}

},

"type": "value"

},

{

"options": {

"from": -200,

"result": {

"color": "light-red",

"index": 1,

"text": "Scaling down"

},

"to": 0

},

"type": "range"

},

{

"options": {

"from": 0,

"result": {

"color": "semi-dark-red",

"index": 2,

"text": "Scaling up"

},

"to": 200

},

"type": "range"

}

],

"thresholds": {

"mode": "absolute",

"steps": [

{

"color": "green",

"value": null

}

]

},

"unit": "none"

},

"overrides": []

},

"gridPos": {

"h": 8,

"w": 24,

"x": 0,

"y": 28

},

"id": 16,

"options": {

"alignValue": "left",

"legend": {

"displayMode": "list",

"placement": "bottom",

"showLegend": false,

"width": 0

},

"mergeValues": true,

"rowHeight": 1,

"showValue": "never",

"tooltip": {

"mode": "single",

"sort": "none"

}

},

"targets": [

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"editorMode": "code",

"exemplar": false,

"expr": "delta(kube_horizontalpodautoscaler_status_current_replicas{namespace=\"$namespace\",horizontalpodautoscaler=\"keda-hpa-$scaledObject\"}[1m])",

"format": "time_series",

"instant": false,

"interval": "",

"legendFormat": ".",

"range": true,

"refId": "A"

}

],

"title": "Changes in replicas",

"type": "state-timeline"

},

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"description": "shows current replicas against max ones",

"fieldConfig": {

"defaults": {

"color": {

"mode": "thresholds"

},

"mappings": [],

"min": 0,

"thresholds": {

"mode": "percentage",

"steps": [

{

"color": "green"

},

{

"color": "red",

"value": 80

}

]

},

"unit": "short"

},

"overrides": []

},

"gridPos": {

"h": 8,

"w": 12,

"x": 0,

"y": 36

},

"id": 15,

"options": {

"orientation": "auto",

"reduceOptions": {

"calcs": [

"lastNotNull"

],

"fields": "/^current_replicas$/",

"values": false

},

"showThresholdLabels": false,

"showThresholdMarkers": true

},

"pluginVersion": "9.5.2",

"targets": [

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"editorMode": "code",

"exemplar": false,

"expr": "kube_horizontalpodautoscaler_status_current_replicas{namespace=\"$namespace\",horizontalpodautoscaler=\"keda-hpa-$scaledObject\"}",

"instant": true,

"legendFormat": "current_replicas",

"range": false,

"refId": "A"

},

{

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"editorMode": "code",

"exemplar": false,

"expr": "kube_horizontalpodautoscaler_spec_max_replicas{namespace=\"$namespace\",horizontalpodautoscaler=\"keda-hpa-$scaledObject\"}",

"hide": false,

"instant": true,

"legendFormat": "max_replicas",

"range": false,

"refId": "B"

}

],

"title": "Current/max replicas",

"type": "gauge"

}

],

"refresh": "1m",

"schemaVersion": 38,

"style": "dark",

"tags": [],

"templating": {

"list": [

{

"current": {

"selected": false,

"text": "Prometheus",

"value": "Prometheus"

},

"hide": 0,

"includeAll": false,

"multi": false,

"name": "datasource",

"options": [],

"query": "prometheus",

"queryValue": "",

"refresh": 1,

"regex": "",

"skipUrlSync": false,

"type": "datasource"

},

{

"current": {

"selected": false,

"text": "bhe-test",

"value": "bhe-test"

},

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"definition": "label_values(keda_scaler_active,exported_namespace)",

"hide": 0,

"includeAll": false,

"multi": false,

"name": "namespace",

"options": [],

"query": {

"query": "label_values(keda_scaler_active,exported_namespace)",

"refId": "PrometheusVariableQueryEditor-VariableQuery"

},

"refresh": 1,

"regex": "",

"skipUrlSync": false,

"sort": 1,

"type": "query"

},

{

"current": {

"selected": false,

"text": "All",

"value": "$__all"

},

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"definition": "label_values(keda_scaler_active{exported_namespace=\"$namespace\"},scaledObject)",

"hide": 0,

"includeAll": true,

"multi": true,

"name": "scaledObject",

"options": [],

"query": {

"query": "label_values(keda_scaler_active{exported_namespace=\"$namespace\"},scaledObject)",

"refId": "PrometheusVariableQueryEditor-VariableQuery"

},

"refresh": 2,

"regex": "",

"skipUrlSync": false,

"sort": 0,

"type": "query"

},

{

"current": {

"selected": false,

"text": "cronScaler",

"value": "cronScaler"

},

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"definition": "label_values(keda_scaler_active{exported_namespace=\"$namespace\"},scaler)",

"hide": 0,

"includeAll": false,

"multi": false,

"name": "scaler",

"options": [],

"query": {

"query": "label_values(keda_scaler_active{exported_namespace=\"$namespace\"},scaler)",

"refId": "PrometheusVariableQueryEditor-VariableQuery"

},

"refresh": 2,

"regex": "",

"skipUrlSync": false,

"sort": 0,

"type": "query"

},

{

"current": {

"selected": false,

"text": "s0-cron-Etc-UTC-40xxxx-55xxxx",

"value": "s0-cron-Etc-UTC-40xxxx-55xxxx"

},

"datasource": {

"type": "prometheus",

"uid": "${datasource}"

},

"definition": "label_values(keda_scaler_active{exported_namespace=\"$namespace\"},metric)",

"hide": 0,

"includeAll": false,

"multi": false,

"name": "metric",

"options": [],

"query": {

"query": "label_values(keda_scaler_active{exported_namespace=\"$namespace\"},metric)",

"refId": "PrometheusVariableQueryEditor-VariableQuery"

},

"refresh": 2,

"regex": "",

"skipUrlSync": false,

"sort": 0,

"type": "query"

}

]

},

"time": {

"from": "now-24h",

"to": "now"

},

"timepicker": {},

"timezone": "",

"title": "KEDA",

"uid": "asdasd8rvmMxdVk",

"version": 8,

"weekStart": ""

}

- Install KEDA

cat <<EOT > keda-values.yaml
metricsServer:
  useHostNetwork: true

prometheus:
  metricServer:
    enabled: true
    port: 9022
    portName: metrics
    path: /metrics
    serviceMonitor:
      # Enables ServiceMonitor creation for the Prometheus Operator
      enabled: true
    podMonitor:
      # Enables PodMonitor creation for the Prometheus Operator
      enabled: true
  operator:
    enabled: true
    port: 8080
    serviceMonitor:
      # Enables ServiceMonitor creation for the Prometheus Operator
      enabled: true
    podMonitor:
      # Enables PodMonitor creation for the Prometheus Operator
      enabled: true
  webhooks:
    enabled: true
    port: 8080
    serviceMonitor:
      # Enables ServiceMonitor creation for the Prometheus webhooks
      enabled: true
EOT

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# kubectl create namespace keda

namespace/keda created

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# helm repo add kedacore https://kedacore.github.io/charts

"kedacore" has been added to your repositories

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# helm install keda kedacore/keda --version 2.13.0 --namespace keda -f keda-values.yaml

NAME: keda

LAST DEPLOYED: Thu Apr 4 19:40:38 2024

NAMESPACE: keda

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

...

Get started by deploying Scaled Objects to your cluster:

- Information about Scaled Objects : https://keda.sh/docs/latest/concepts/

- Samples: https://github.com/kedacore/samples

Get information about the deployed ScaledObjects:

kubectl get scaledobject [--namespace <namespace>]

Get details about a deployed ScaledObject:

kubectl describe scaledobject <scaled-object-name> [--namespace <namespace>]

Get information about the deployed ScaledObjects:

kubectl get triggerauthentication [--namespace <namespace>]

Get details about a deployed ScaledObject:

kubectl describe triggerauthentication <trigger-authentication-name> [--namespace <namespace>]

Get an overview of the Horizontal Pod Autoscalers (HPA) that KEDA is using behind the scenes:

kubectl get hpa [--all-namespaces] [--namespace <namespace>]

Learn more about KEDA:

- Documentation: https://keda.sh/

- Support: https://keda.sh/support/

- File an issue: https://github.com/kedacore/keda/issues/new/choose

# Verify the KEDA installation

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# kubectl get all -n keda

NAME READY STATUS RESTARTS AGE

pod/keda-admission-webhooks-5bffd88dcf-s6kng 1/1 Running 0 2m7s

pod/keda-operator-856b546d-k7whb 1/1 Running 1 (115s ago) 2m7s

pod/keda-operator-metrics-apiserver-5666945c65-kp85f 1/1 Running 0 2m7s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/keda-admission-webhooks ClusterIP 10.100.142.96 <none> 443/TCP,8080/TCP 2m7s

service/keda-operator ClusterIP 10.100.132.136 <none> 9666/TCP,8080/TCP 2m7s

service/keda-operator-metrics-apiserver ClusterIP 10.100.116.210 <none> 443/TCP,9022/TCP 2m7s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/keda-admission-webhooks 1/1 1 1 2m7s

deployment.apps/keda-operator 1/1 1 1 2m7s

deployment.apps/keda-operator-metrics-apiserver 1/1 1 1 2m7s

NAME DESIRED CURRENT READY AGE

replicaset.apps/keda-admission-webhooks-5bffd88dcf 1 1 1 2m7s

replicaset.apps/keda-operator-856b546d 1 1 1 2m7s

replicaset.apps/keda-operator-metrics-apiserver-5666945c65 1 1 1 2m7s

kubectl get validatingwebhookconfigurations keda-admission

kubectl get validatingwebhookconfigurations keda-admission | kubectl neat | yh

kubectl get crd | grep keda

# Create a deployment in the keda namespace

## The php-apache deployment used earlier

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# kubectl apply -f php-apache.yaml -n keda

deployment.apps/php-apache created

service/php-apache created

(leeeuijoo@myeks:default) [root@myeks-bastion bin]#

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# kubectl get pod -n keda

NAME READY STATUS RESTARTS AGE

keda-admission-webhooks-5bffd88dcf-s6kng 1/1 Running 0 3m7s

keda-operator-856b546d-k7whb 1/1 Running 1 (2m55s ago) 3m7s

keda-operator-metrics-apiserver-5666945c65-kp85f 1/1 Running 0 3m7s

php-apache-598b474864-4g8nr 1/1 Running 0 3s

- Create a ScaledObject policy: cron

cat <<EOT > keda-cron.yaml
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: php-apache-cron-scaled
spec:
  minReplicaCount: 0
  maxReplicaCount: 2
  pollingInterval: 30
  cooldownPeriod: 300
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: php-apache
  triggers:
  - type: cron
    metadata:
      timezone: Asia/Seoul
      start: 00,15,30,45 * * * *
      end: 05,20,35,50 * * * *
      desiredReplicas: "1"
EOT

kubectl apply -f keda-cron.yaml -n keda
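The cron trigger above is active for five minutes out of every fifteen. As a rough sketch of that schedule (not KEDA's actual implementation), the helper below checks whether a minute-of-hour falls inside an active window. The function name `in_cron_window` is hypothetical, and treating the end minute as already outside the window is an assumption.

```shell
# Sketch: is a given minute-of-hour inside the cron trigger's active window?
# Assumes the schedule from keda-cron.yaml: start at minutes 0,15,30,45 and
# end at minutes 5,20,35,50, i.e. a 5-minute window every 15 minutes.
# in_cron_window is a hypothetical helper, not part of KEDA.
in_cron_window() (
  minute=${1#0}          # drop one leading zero so "05" is not read as octal
  minute=${minute:-0}    # "0" becomes empty after stripping; restore it
  offset=$(( minute % 15 ))
  [ "$offset" -lt 5 ]    # assumed window [start, end): minute 5 is outside
)

for m in 00 04 05 14 15 47 50; do
  if in_cron_window "$m"; then
    echo "minute $m: active (desiredReplicas=1)"
  else
    echo "minute $m: inactive (heading back to minReplicaCount=0)"
  fi
done
```

Because minReplicaCount is 0, the deployment does not drop to zero the instant a window ends; cooldownPeriod (300 seconds here) is waited out first.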

# Monitoring

watch -d 'kubectl get ScaledObject,hpa,pod -n keda'

kubectl get ScaledObject -n keda -w

# Verify

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# kubectl get ScaledObject,hpa,pod -n keda

NAME SCALETARGETKIND SCALETARGETNAME MIN MAX TRIGGERS AUTHENTICATION READY ACTIVE FALLBACK PAUSED AGE

scaledobject.keda.sh/php-apache-cron-scaled apps/v1.Deployment php-apache 0 2 cron True True Unknown Unknown 2m18s

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

horizontalpodautoscaler.autoscaling/keda-hpa-php-apache-cron-scaled Deployment/php-apache <unknown>/1 (avg) 1 2 1 2m17s

NAME READY STATUS RESTARTS AGE

pod/keda-admission-webhooks-5bffd88dcf-s6kng 1/1 Running 0 6m12s

pod/keda-operator-856b546d-k7whb 1/1 Running 1 (6m ago) 6m12s

pod/keda-operator-metrics-apiserver-5666945c65-kp85f 1/1 Running 0 6m12s

pod/php-apache-598b474864-bm88x 1/1 Running 0 107s

kubectl get hpa -o jsonpath='{.items[0].spec}' -n keda | jq

...

"metrics": [

{

"external": {

"metric": {

"name": "s0-cron-Asia-Seoul-00,15,30,45xxxx-05,20,35,50xxxx",

"selector": {

"matchLabels": {

"scaledobject.keda.sh/name": "php-apache-cron-scaled"

}

}

},

"target": {

"averageValue": "1",

"type": "AverageValue"

}

},

"type": "External"

}

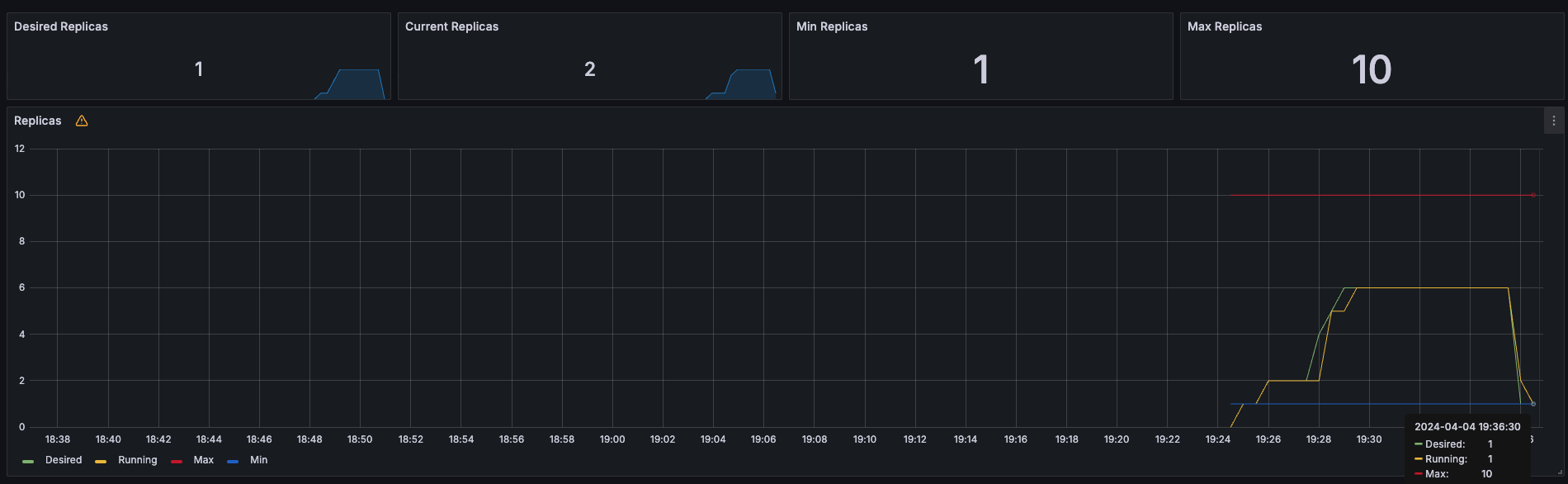

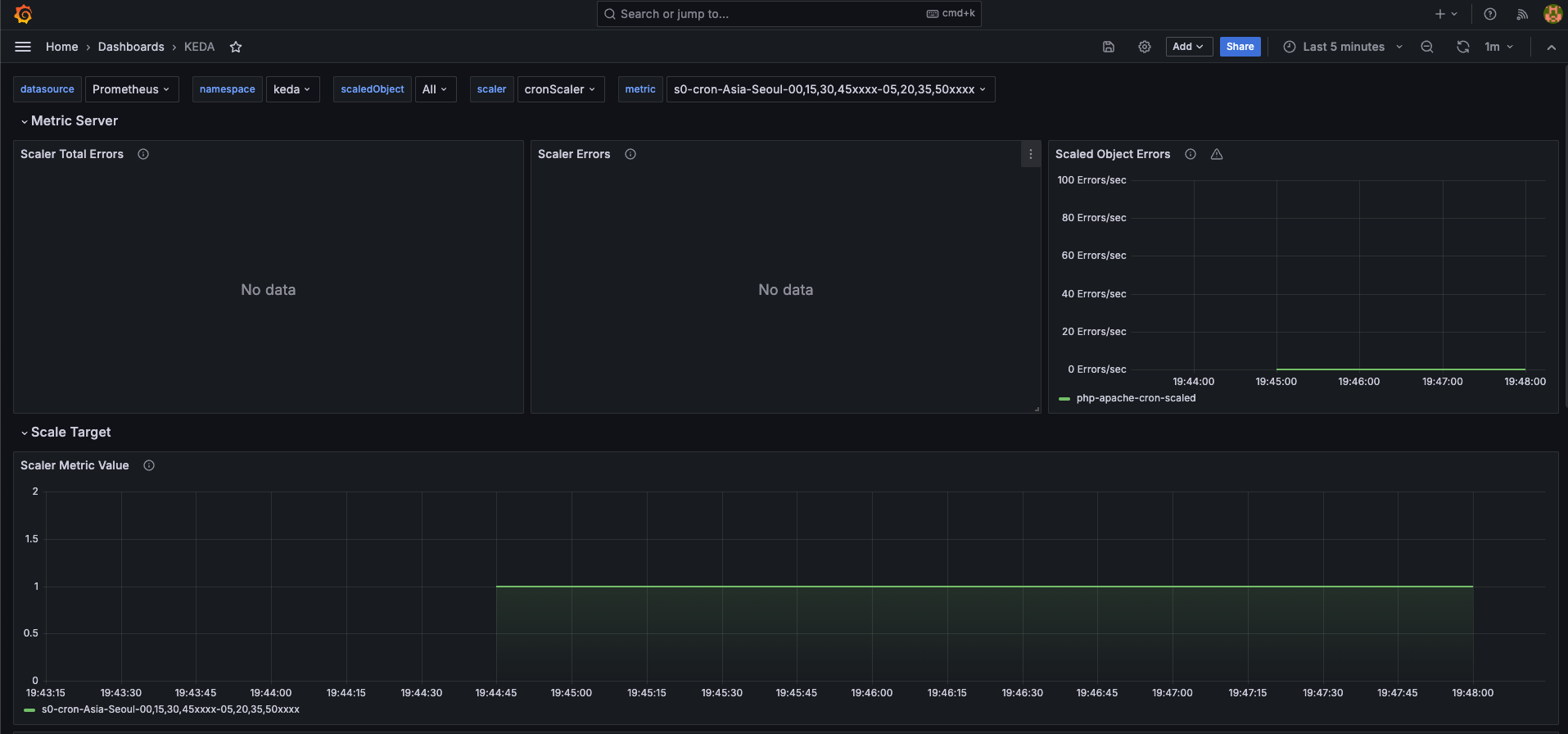

- Give it some time, then check the Grafana dashboard.

- Scale-up events are shown in red

- Scale-down events are shown in green

- Delete resources

kubectl delete -f keda-cron.yaml -n keda && kubectl delete deploy php-apache -n keda && helm uninstall keda -n keda

kubectl delete namespace keda3. VPA - Vertical Pod Autoscaler

-

pod resources.request을 최대한 최적값으로 수정합니다.

-

단, HPA와 같이 사용이 불가능하며, 수정 시 파드가 재실행 됩니다.

-

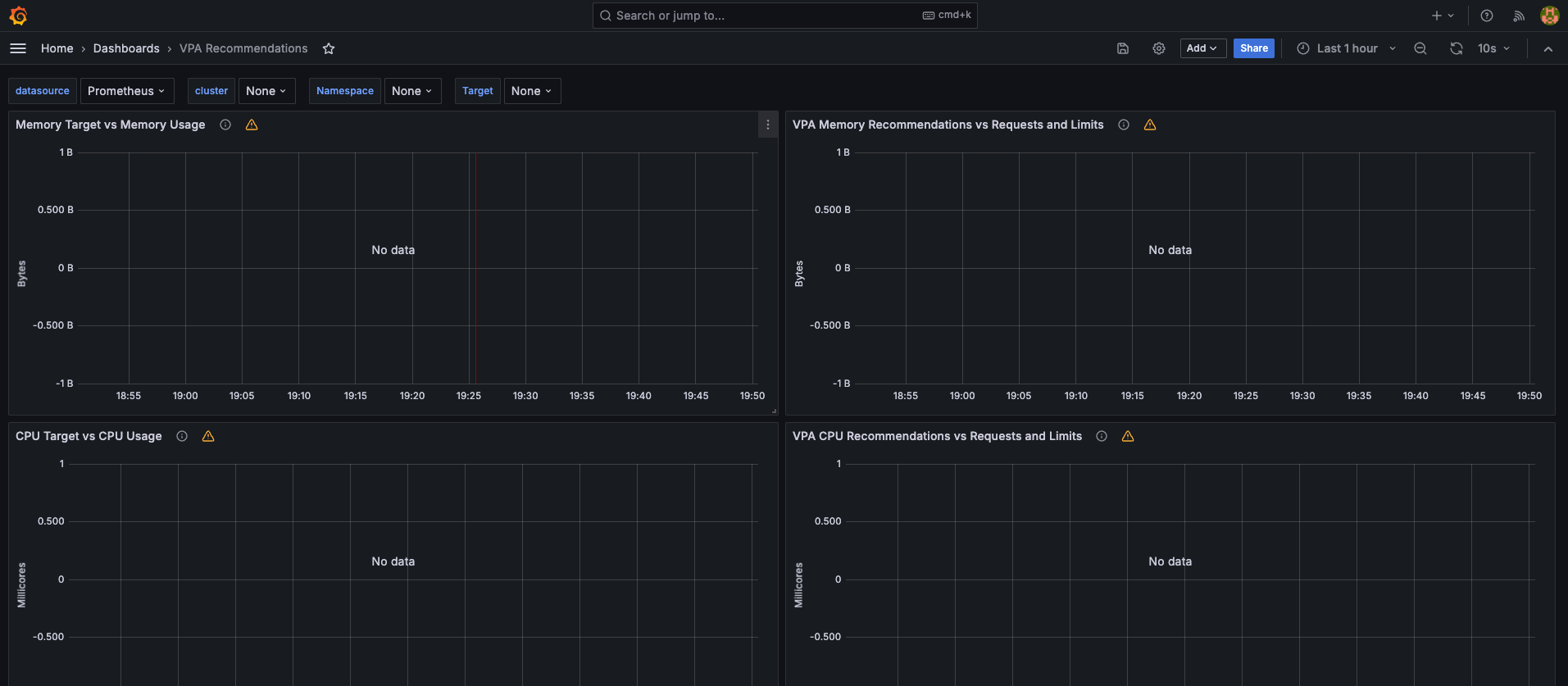

대쉬보드 생성 - 14588 공식 대쉬보드 사용

- 설치

# Download the code

## Git Clone

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# git clone https://github.com/kubernetes/autoscaler.git

Cloning into 'autoscaler'...

remote: Enumerating objects: 195289, done.

remote: Counting objects: 100% (2544/2544), done.

remote: Compressing objects: 100% (1788/1788), done.

remote: Total 195289 (delta 1383), reused 1033 (delta 741), pack-reused 192745

Receiving objects: 100% (195289/195289), 228.81 MiB | 14.95 MiB/s, done.

Resolving deltas: 100% (124157/124157), done.

Updating files: 100% (30209/30209), done.

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cd ~/autoscaler/vertical-pod-autoscaler/

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# tree hack

hack

├── boilerplate.go.txt

├── convert-alpha-objects.sh

├── deploy-for-e2e-locally.sh

├── deploy-for-e2e.sh

├── e2e

│ ├── Dockerfile.externalmetrics-writer

│ ├── k8s-metrics-server.yaml

│ ├── kind-with-registry.sh

│ ├── metrics-pump.yaml

│ ├── prometheus-adapter.yaml

│ ├── prometheus.yaml

│ ├── recommender-externalmetrics-deployment.yaml

│ └── vpa-rbac.diff

├── emit-metrics.py

├── generate-crd-yaml.sh

├── local-cluster.md

├── run-e2e-locally.sh

├── run-e2e.sh

├── run-e2e-tests.sh

├── update-codegen.sh

├── update-kubernetes-deps-in-e2e.sh

├── update-kubernetes-deps.sh

├── verify-codegen.sh

├── vpa-apply-upgrade.sh

├── vpa-down.sh

├── vpa-process-yaml.sh

├── vpa-process-yamls.sh

├── vpa-up.sh

└── warn-obsolete-vpa-objects.sh

1 directory, 28 files

# Check the openssl version

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# openssl version

OpenSSL 1.0.2k-fips 26 Jan 2017

# Install openssl 1.1.1 or later and check its version

yum install openssl11 -y

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# openssl11 version

OpenSSL 1.1.1g FIPS 21 Apr 2020

# Switch the script to use openssl11

(leeeuijoo@myeks:default) [root@myeks-bastion bin]# sed -i 's/openssl/openssl11/g' ~/autoscaler/vertical-pod-autoscaler/pkg/admission-controller/gencerts.sh

# Monitoring

watch -d kubectl get pod -n kube-system

# Run the install script

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# ./hack/vpa-up.sh

# Verify

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl get crd | grep autoscaling

verticalpodautoscalercheckpoints.autoscaling.k8s.io 2024-04-04T11:02:30Z

verticalpodautoscalers.autoscaling.k8s.io 2024-04-04T11:02:30Z

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl get mutatingwebhookconfigurations vpa-webhook-config

NAME WEBHOOKS AGE

vpa-webhook-config 1 93s

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl get mutatingwebhookconfigurations vpa-webhook-config -o json | jq

- Run the official example

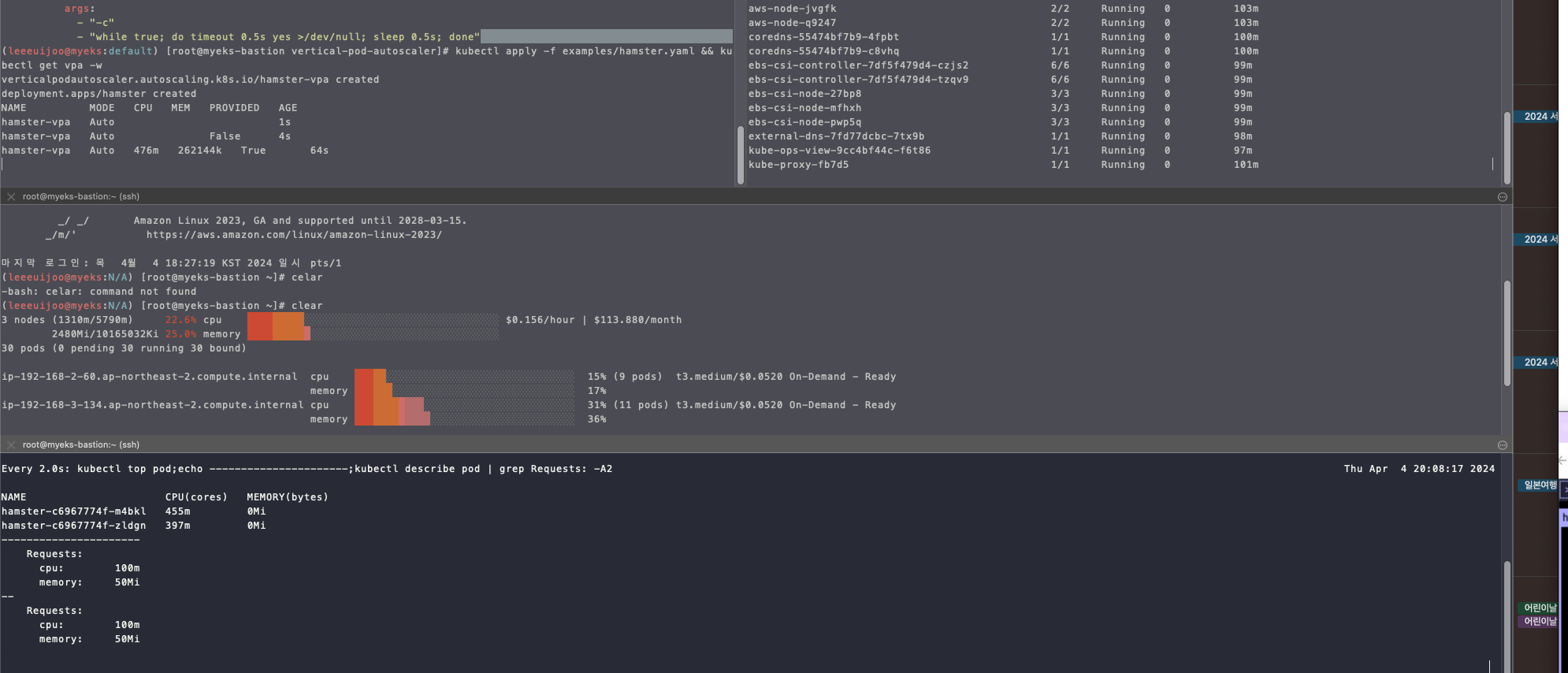

- About 2-3 minutes after a pod starts, VPA modifies the pod's resources.requests.

- Setting spec.updatePolicy.updateMode to Off on the VPA stops it from automatically changing the pod spec and restarting the pod. The default is Auto.
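To see recommendations without any evictions, the update mode can be set to Off. The manifest below is a minimal sketch for the hamster example; the file name `hamster-vpa-off.yaml` is hypothetical, and the `kubectl` lines are left commented out because they need a cluster with VPA installed.

```shell
# Write a recommendation-only VPA manifest for the hamster Deployment.
# The file name is an illustrative choice, not part of the official example.
cat <<'EOT' > hamster-vpa-off.yaml
apiVersion: autoscaling.k8s.io/v1
kind: VerticalPodAutoscaler
metadata:
  name: hamster-vpa
spec:
  targetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: hamster
  updatePolicy:
    updateMode: "Off"   # compute recommendations only; never evict pods
EOT

# With a cluster available, apply it and read the recommendation:
# kubectl apply -f hamster-vpa-off.yaml
# kubectl describe vpa hamster-vpa
```

In this mode the recommended values are still visible on the VPA object, but the running pods keep their original requests until you change them yourself.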

# Monitoring

watch -d "kubectl top pod;echo "----------------------";kubectl describe pod | grep Requests: -A2"

# Deploy the official example

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# cd ~/autoscaler/vertical-pod-autoscaler/

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# cat examples/hamster.yaml | yh

---
apiVersion: "autoscaling.k8s.io/v1"
kind: VerticalPodAutoscaler
metadata:
  name: hamster-vpa
spec:
  # recommenders
  #   - name 'alternative'
  targetRef:
    apiVersion: "apps/v1"
    kind: Deployment
    name: hamster
  resourcePolicy:
    containerPolicies:
      - containerName: '*'
        minAllowed:
          cpu: 100m
          memory: 50Mi
        maxAllowed:
          cpu: 1
          memory: 500Mi
        controlledResources: ["cpu", "memory"]
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hamster
spec:
  selector:
    matchLabels:
      app: hamster
  replicas: 2
  template:
    metadata:
      labels:
        app: hamster
    spec:
      securityContext:
        runAsNonRoot: true
        runAsUser: 65534 # nobody
      containers:
        - name: hamster
          image: registry.k8s.io/ubuntu-slim:0.1
          resources:
            requests:
              cpu: 100m
              memory: 50Mi
          command: ["/bin/sh"]
          args:
            - "-c"
            - "while true; do timeout 0.5s yes >/dev/null; sleep 0.5s; done"

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl apply -f examples/hamster.yaml && kubectl get vpa -w

verticalpodautoscaler.autoscaling.k8s.io/hamster-vpa created

deployment.apps/hamster created

NAME MODE CPU MEM PROVIDED AGE

hamster-vpa Auto 1s

hamster-vpa Auto False 4s

- You can see the values VPA recommends.

- Memory: 262144k

- CPU: 476m

- A pod was then actually created with the recommended resources.

NAME MODE CPU MEM PROVIDED AGE

hamster-vpa Auto 1s

hamster-vpa Auto False 4s

hamster-vpa Auto 476m 262144k True 64s

hamster-vpa Auto 511m 262144k True 3m4s

----------------

Every 2.0s: kubectl top pod;echo ----------------------;kubectl describe pod | grep Requests: -A2 Thu Apr 4 20:11:38 2024

NAME CPU(cores) MEMORY(bytes)

hamster-c6967774f-hv69t 440m 0Mi

hamster-c6967774f-qqsbg 405m 0Mi

----------------------

Requests:

cpu: 476m

memory: 262144k

--

Requests:

cpu: 476m

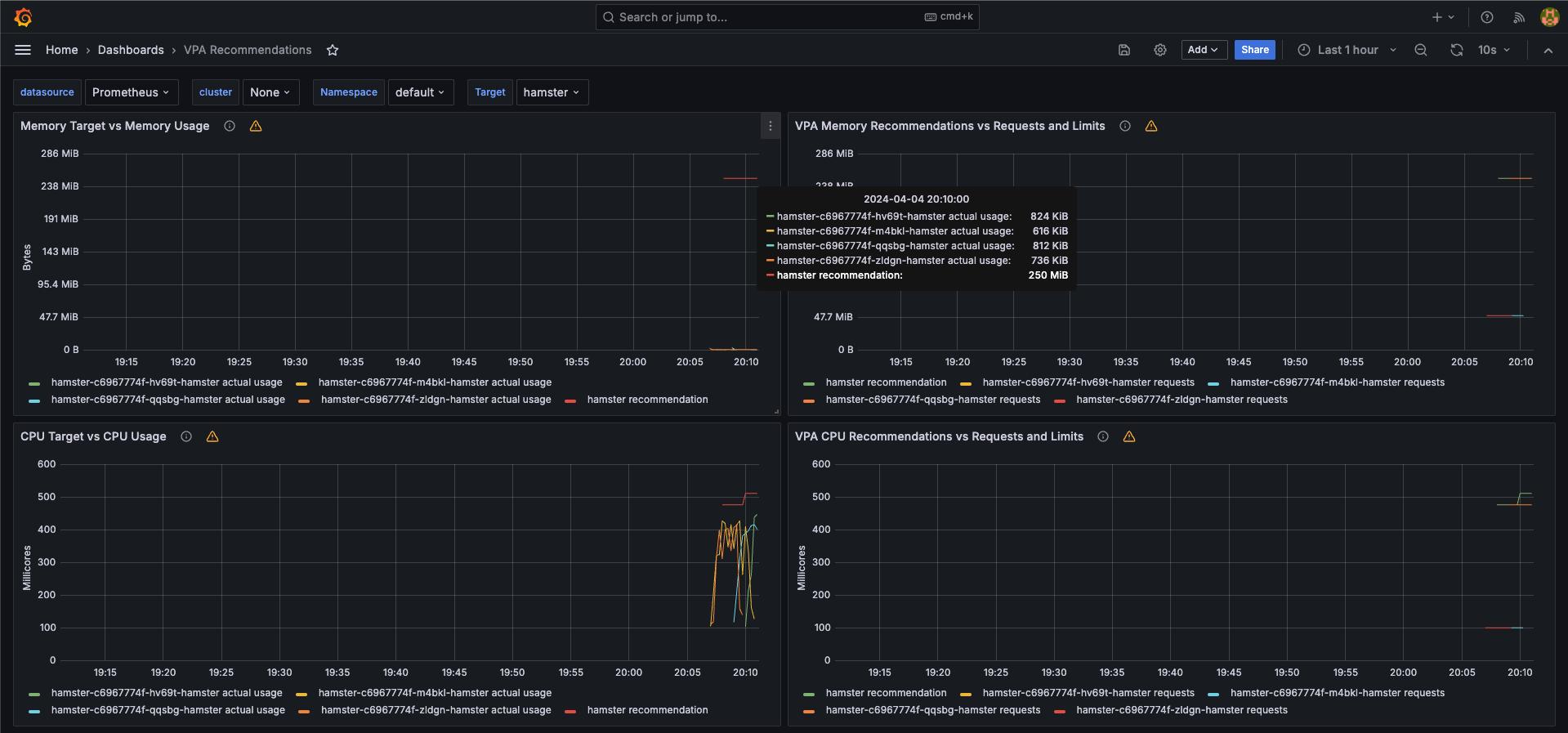

memory: 262144k- Grafana 대쉬보드에서 확인

- VPA에 의해 기존 파드 삭제되고 신규 파드가 생성됨

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl get events --sort-by=".metadata.creationTimestamp" | grep VPA

4m9s Normal EvictedByVPA pod/hamster-c6967774f-zldgn Pod was evicted by VPA Updater to apply resource recommendation.

3m9s Normal EvictedByVPA pod/hamster-c6967774f-m4bkl Pod was evicted by VPA Updater to apply resource recommendation.- 리소스 삭제

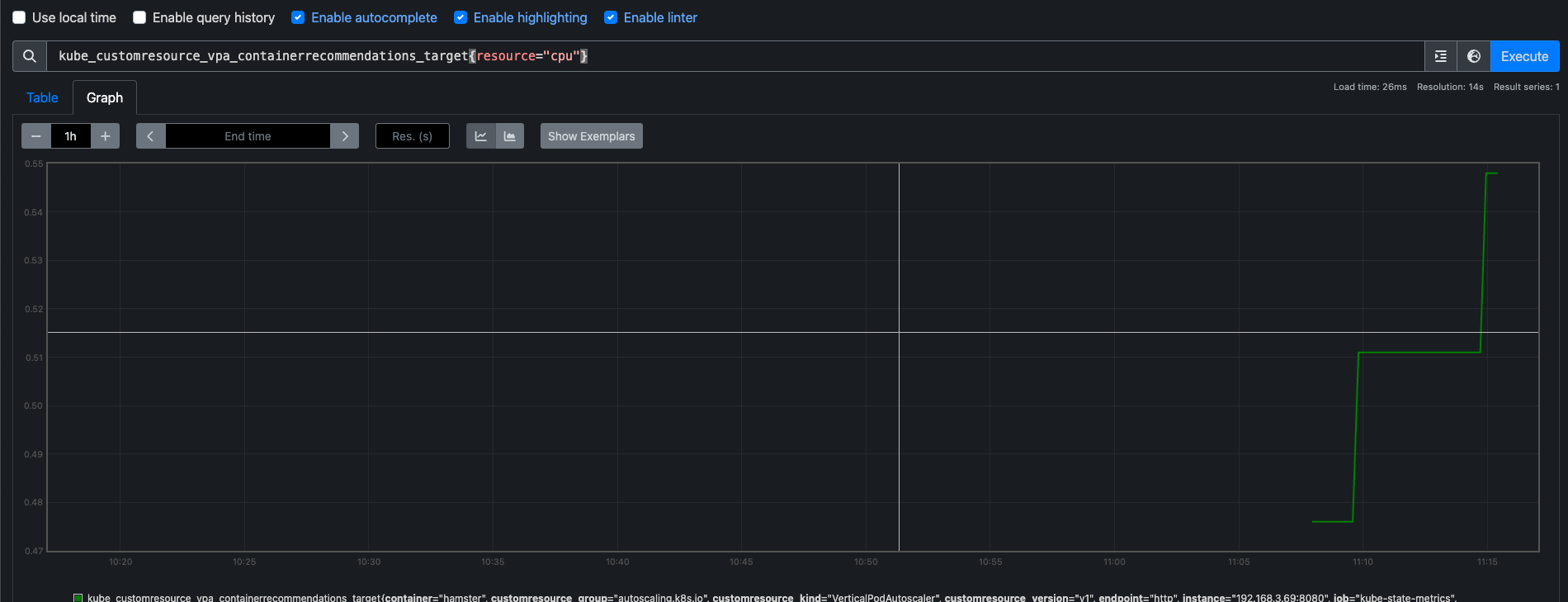

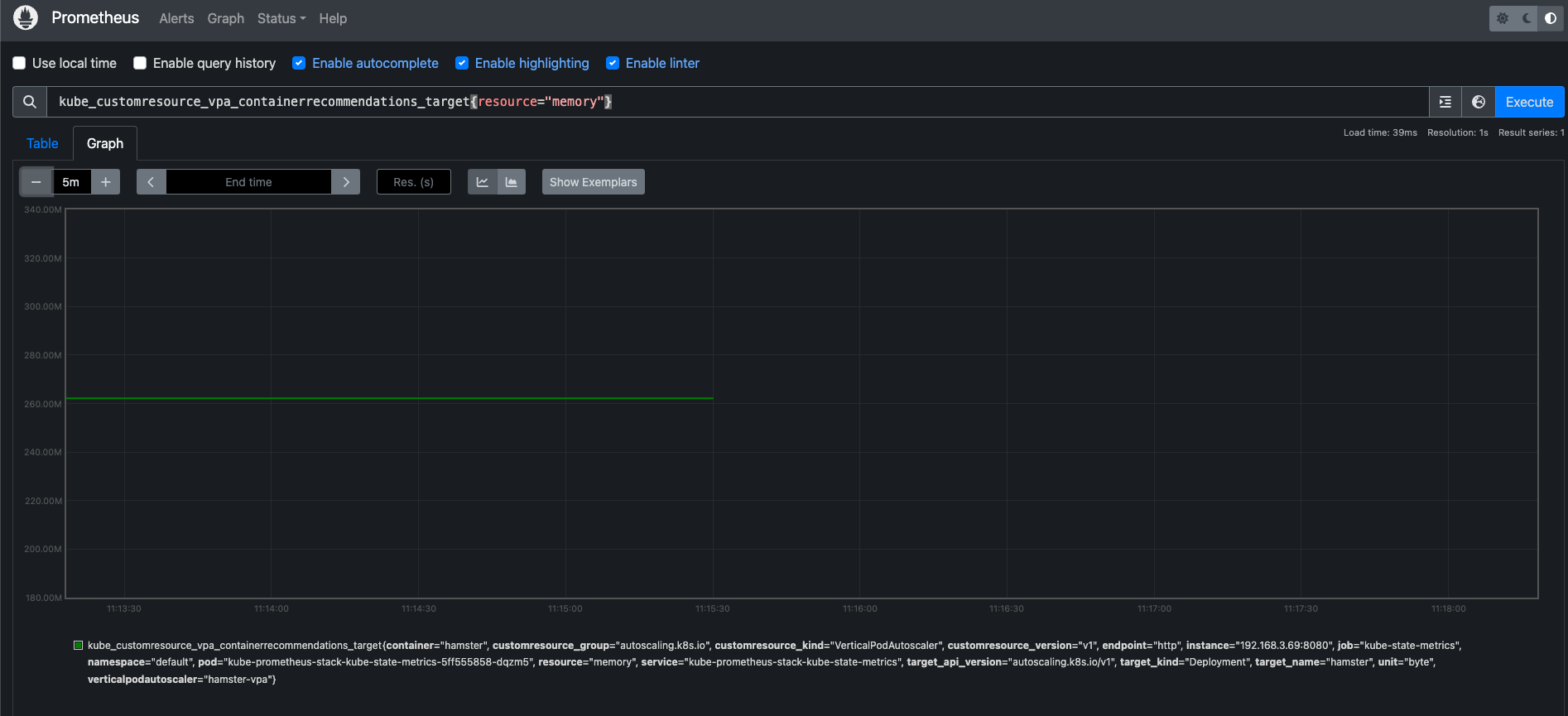

kubectl delete -f examples/hamster.yaml && cd ~/autoscaler/vertical-pod-autoscaler/ && ./hack/vpa-down.sh- VPA 를 Prometheus 쿼리를 이용하여 확인할 수 있음

kube_customresource_vpa_containerrecommendations_target{resource="cpu"}

kube_customresource_vpa_containerrecommendations_target{resource="memory"}- VPA 에 의해 CPU 올라간 것 확인

- 메모리 확인

- KRR : Prometheus-based Kubernetes Resource Recommendations

- 이제, VPA 가 필요없어지고, k8s 1.27 인플레이스로 Pod 의 리소스를 리사이즈 할 수 있다고 합니다.

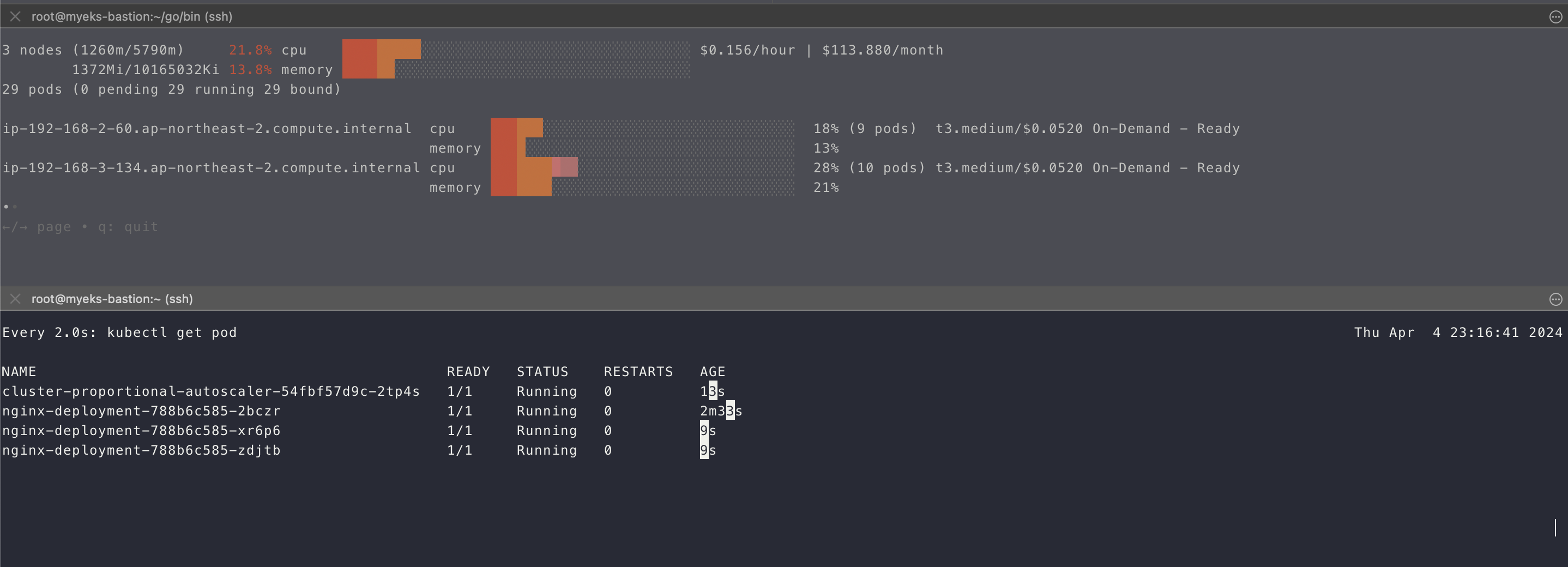

4. CA - Cluster AutoScaler

- Everything so far dealt with pod-level scaling; CA is autoscaling at the node group level.

- Deploy the cluster-autoscaler pod (Deployment) that performs cluster autoscaling.

- Cluster Autoscaler (CA) scales out worker nodes when pods are stuck in the Pending state.

- It checks utilization at a fixed interval to scale in or out. On AWS, Cluster Autoscaler works through Auto Scaling Groups (ASG).

# The EKS nodes already carry the tags below.

# k8s.io/cluster-autoscaler/enabled : true

# k8s.io/cluster-autoscaler/myeks : owned

---------------------

- Key: k8s.io/cluster-autoscaler/myeks

Value: owned

- Key: k8s.io/cluster-autoscaler/enabled

Value: 'true'

---------------------

- Check the Auto Scaling Group

- This step only changes the size values

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# aws autoscaling describe-auto-scaling-groups \

> --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" \

> --output table

-----------------------------------------------------------------

| DescribeAutoScalingGroups |

+------------------------------------------------+----+----+----+

| eks-ng1-f0c75435-4623-c223-5024-ce7ffb6535e8 | 3 | 3 | 3 |

+------------------------------------------------+----+----+----+

# Change MaxSize to 6 (only the numbers change)

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# export ASG_NAME=$(aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].AutoScalingGroupName" --output text)

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 3 --desired-capacity 3 --max-size 6

# Verify

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table

-----------------------------------------------------------------

| DescribeAutoScalingGroups |

+------------------------------------------------+----+----+----+

| eks-ng1-f0c75435-4623-c223-5024-ce7ffb6535e8 | 3 | 6 | 3 |

+------------------------------------------------+----+----+----+

- Deployment: Deploy the Cluster Autoscaler (CA)

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# curl -s -O https://raw.githubusercontent.com/kubernetes/autoscaler/master/cluster-autoscaler/cloudprovider/aws/examples/cluster-autoscaler-autodiscover.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# sed -i "s/<YOUR CLUSTER NAME>/$CLUSTER_NAME/g" cluster-autoscaler-autodiscover.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl apply -f cluster-autoscaler-autodiscover.yaml

# Verify

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl get pod -n kube-system | grep cluster-autoscaler

cluster-autoscaler-857b945c88-vjwtq 1/1 Running 0 14s

kubectl describe deployments.apps -n kube-system cluster-autoscaler

kubectl describe deployments.apps -n kube-system cluster-autoscaler | grep node-group-auto-discovery

--node-group-auto-discovery=asg:tag=k8s.io/cluster-autoscaler/enabled,k8s.io/cluster-autoscaler/myeks

# (Optional) Prevent the worker node running the cluster-autoscaler pod from being evicted

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl -n kube-system annotate deployment.apps/cluster-autoscaler cluster-autoscaler.kubernetes.io/safe-to-evict="false"

deployment.apps/cluster-autoscaler annotated

- Apply CA

# Monitoring

kubectl get nodes -w

while true; do kubectl get node; echo "------------------------------" ; date ; sleep 1; done

# Deploy the deployment

# 1 pod

cat <<EoF> nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-to-scaleout
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        service: nginx
        app: nginx
    spec:
      containers:
      - image: nginx
        name: nginx-to-scaleout
        resources:
          limits:
            cpu: 500m
            memory: 512Mi
          requests:
            cpu: 500m
            memory: 512Mi
EoF

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl apply -f nginx.yaml

deployment.apps/nginx-to-scaleout created

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl get deployment/nginx-to-scaleout

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-to-scaleout 1/1 1 1 12s

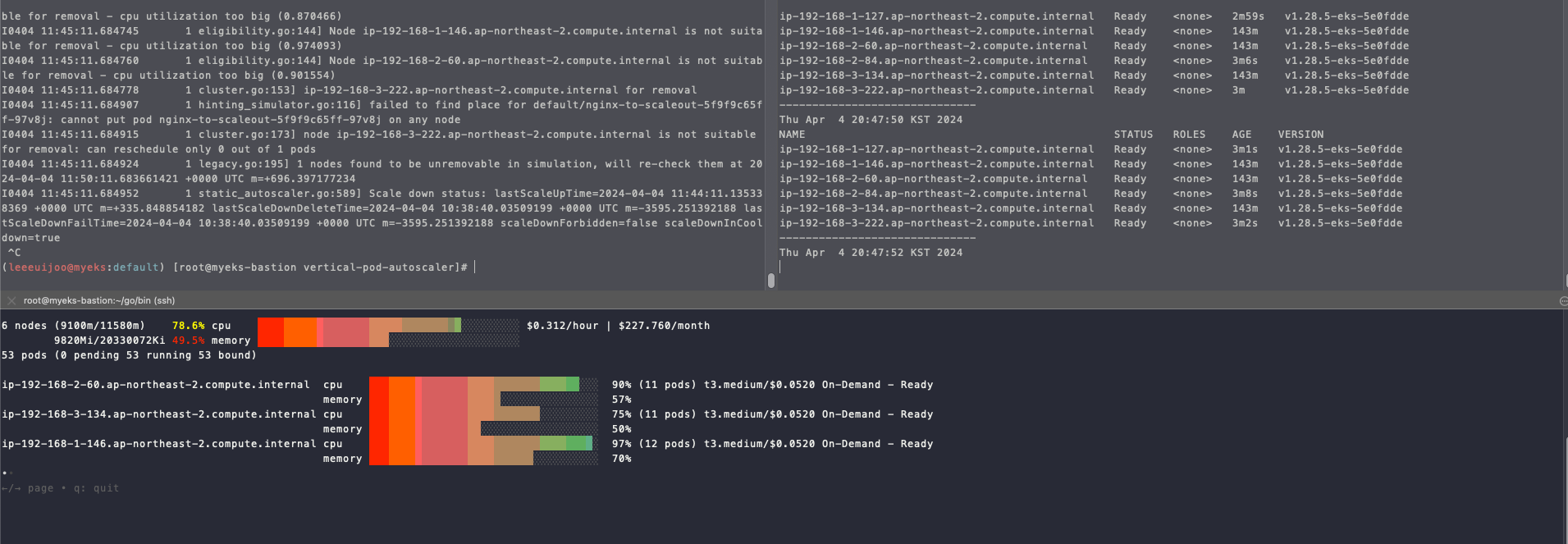

# Scale out

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl scale --replicas=15 deployment/nginx-to-scaleout && date

deployment.apps/nginx-to-scaleout scaled

Thu Apr 4 20:44:05 KST 2024

# Verify

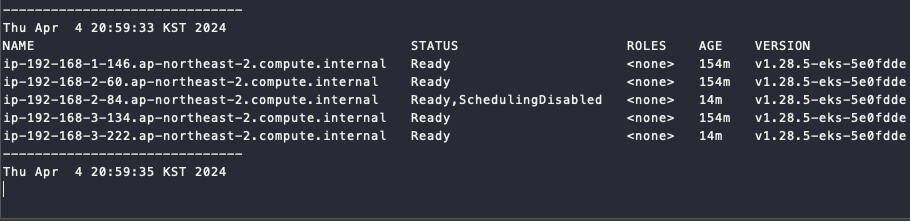

Thu Apr 4 20:44:58 KST 2024

NAME STATUS ROLES AGE VERSION

ip-192-168-1-127.ap-northeast-2.compute.internal NotReady <none> 9s v1.28.5-eks-5e0fdde

ip-192-168-1-146.ap-northeast-2.compute.internal Ready <none> 140m v1.28.5-eks-5e0fdde

ip-192-168-2-60.ap-northeast-2.compute.internal Ready <none> 140m v1.28.5-eks-5e0fdde

ip-192-168-2-84.ap-northeast-2.compute.internal Ready <none> 16s v1.28.5-eks-5e0fdde

ip-192-168-3-134.ap-northeast-2.compute.internal Ready <none> 140m v1.28.5-eks-5e0fdde

ip-192-168-3-222.ap-northeast-2.compute.internal NotReady <none> 10s v1.28.5-eks-5e0fdde

# Several pods are in the Pending state

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-to-scaleout-5f9f9c65ff-2vj5c 1/1 Running 0 46s 192.168.2.198 ip-192-168-2-60.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-68nl9 0/1 Pending 0 46s <none> <none> <none> <none>

nginx-to-scaleout-5f9f9c65ff-97v8j 0/1 Pending 0 46s <none> <none> <none> <none>

nginx-to-scaleout-5f9f9c65ff-g2ddk 1/1 Running 0 46s 192.168.1.235 ip-192-168-1-146.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-jxzzb 0/1 Pending 0 46s <none> <none> <none> <none>

nginx-to-scaleout-5f9f9c65ff-kl8b6 0/1 Pending 0 46s <none> <none> <none> <none>

nginx-to-scaleout-5f9f9c65ff-mdqqm 1/1 Running 0 2m 192.168.3.36 ip-192-168-3-134.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-p5c8g 0/1 Pending 0 46s <none> <none> <none> <none>

nginx-to-scaleout-5f9f9c65ff-q72xf 1/1 Running 0 46s 192.168.2.47 ip-192-168-2-60.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-rl9p8 1/1 Running 0 46s 192.168.2.135 ip-192-168-2-60.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-vcm9q 1/1 Running 0 46s 192.168.3.243 ip-192-168-3-134.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-wgljw 1/1 Running 0 46s 192.168.1.20 ip-192-168-1-146.ap-northeast-2.compute.internal <none> <none>

# Confirm that the number of nodes increased

Thu Apr 4 20:46:37 KST 2024

NAME STATUS ROLES AGE VERSION

ip-192-168-1-127.ap-northeast-2.compute.internal Ready <none> 108s v1.28.5-eks-5e0fdde

ip-192-168-1-146.ap-northeast-2.compute.internal Ready <none> 141m v1.28.5-eks-5e0fdde

ip-192-168-2-60.ap-northeast-2.compute.internal Ready <none> 141m v1.28.5-eks-5e0fdde

ip-192-168-2-84.ap-northeast-2.compute.internal Ready <none> 115s v1.28.5-eks-5e0fdde

ip-192-168-3-134.ap-northeast-2.compute.internal Ready <none> 141m v1.28.5-eks-5e0fdde

ip-192-168-3-222.ap-northeast-2.compute.internal Ready <none> 109s v1.28.5-eks-5e0fdde

- Confirm that all pods were created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pods -l app=nginx -o wide --watch

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-to-scaleout-5f9f9c65ff-2vj5c 1/1 Running 0 3m58s 192.168.2.198 ip-192-168-2-60.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-68nl9 1/1 Running 0 3m58s 192.168.1.126 ip-192-168-1-127.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-97v8j 1/1 Running 0 3m58s 192.168.3.113 ip-192-168-3-222.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-g2ddk 1/1 Running 0 3m58s 192.168.1.235 ip-192-168-1-146.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-jxzzb 1/1 Running 0 3m58s 192.168.1.217 ip-192-168-1-127.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-kl8b6 1/1 Running 0 3m58s 192.168.1.240 ip-192-168-1-127.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-mdqqm 1/1 Running 0 5m12s 192.168.3.36 ip-192-168-3-134.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-p5c8g 1/1 Running 0 3m58s 192.168.2.175 ip-192-168-2-84.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-q72xf 1/1 Running 0 3m58s 192.168.2.47 ip-192-168-2-60.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-rl9p8 1/1 Running 0 3m58s 192.168.2.135 ip-192-168-2-60.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-vcm9q 1/1 Running 0 3m58s 192.168.3.243 ip-192-168-3-134.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-wgljw 1/1 Running 0 3m58s 192.168.1.20 ip-192-168-1-146.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-wns67 1/1 Running 0 3m58s 192.168.2.28 ip-192-168-2-84.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-xnn82 1/1 Running 0 3m58s 192.168.2.90 ip-192-168-2-84.ap-northeast-2.compute.internal <none> <none>

nginx-to-scaleout-5f9f9c65ff-zp7dg 1/1 Running 0 3m58s 192.168.1.210 ip-192-168-1-146.ap-northeast-2.compute.internal <none> <none>

# 디플로이먼트 삭제

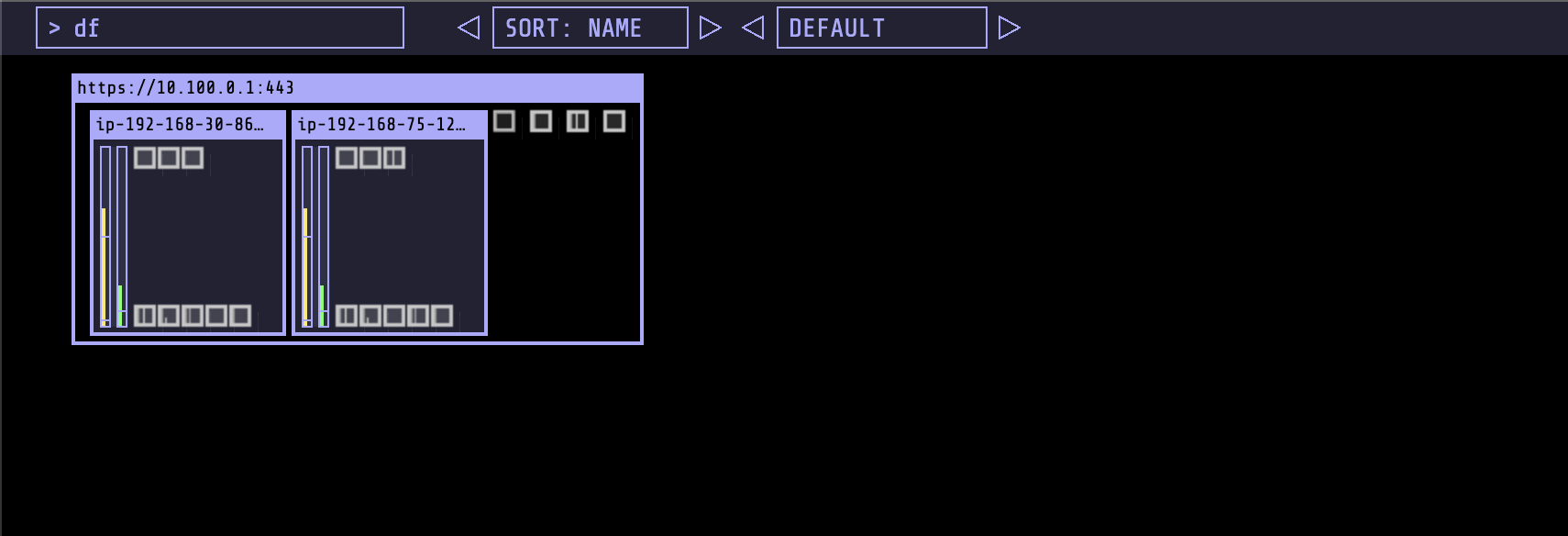

kubectl delete -f nginx.yaml && date- 노드 개수 축소 : 기본은 10분 후 scale down 됩니다.

- 물론 아래 flag 로 시간 수정 가능하기 때문에 디플로이먼트 삭제 후 10분 기다리고 나서 봅시다.

- 확인 :

watch -d kubectl get node- 점점 node 의 개수가 줄어듭니다.

- 3개의 노드 확인
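The 10-minute wait comes from Cluster Autoscaler's scale-down timing flags, `--scale-down-unneeded-time` and `--scale-down-delay-after-add`. As a hedged sketch, the helper below only builds a JSON patch that appends those flags. It assumes the flags live in the first container's `command` list, as in the autodiscover example manifest. `ca_scale_down_patch` is a hypothetical name, and the patch is not applied here.

```shell
# Sketch: build a JSON patch that appends scale-down timing flags to the
# cluster-autoscaler container. Assumes the flags are listed under
# containers[0].command; adjust the path if your manifest uses args instead.
ca_scale_down_patch() (
  unneeded=$1     # value for --scale-down-unneeded-time, e.g. 5m
  after_add=$2    # value for --scale-down-delay-after-add, e.g. 5m
  path='/spec/template/spec/containers/0/command/-'
  printf '[{"op":"add","path":"%s","value":"--scale-down-unneeded-time=%s"},' "$path" "$unneeded"
  printf '{"op":"add","path":"%s","value":"--scale-down-delay-after-add=%s"}]\n' "$path" "$after_add"
)

# Print the patch for a 5-minute scale-down window.
ca_scale_down_patch 5m 5m

# With a cluster available, it could be applied like this:
# kubectl -n kube-system patch deployment cluster-autoscaler --type=json -p "$(ca_scale_down_patch 5m 5m)"
```

Shorter delays make the lab faster to observe, at the cost of more node churn in a real cluster.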

- Delete resources

# Restore the sizes

aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 3 --desired-capacity 3 --max-size 3

aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table

# Cluster Autoscaler 삭제

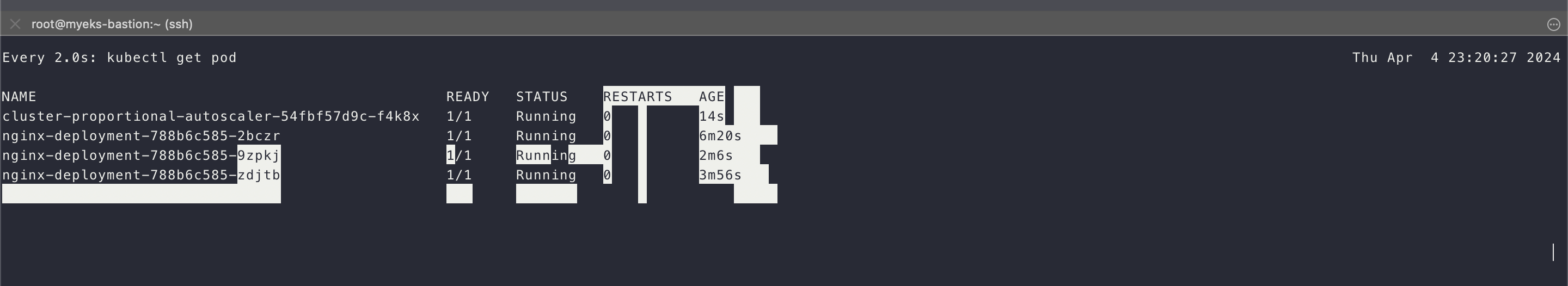

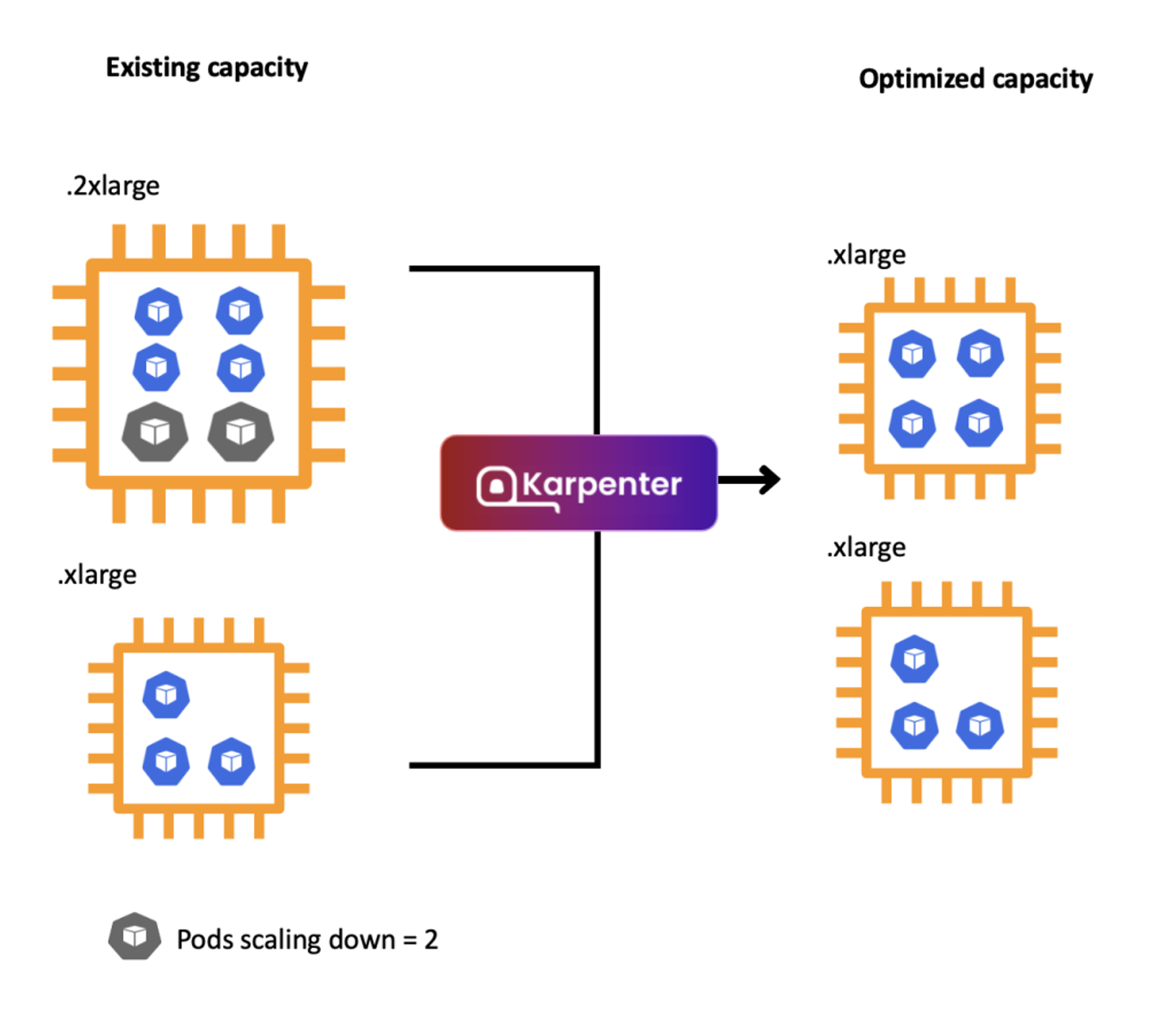

kubectl delete -f cluster-autoscaler-autodiscover.yamlCA 의 문제점 - 이것을 보완한 것이 Karpenter : 쿠버네티스가 직접 ASG 에 관여하는 것처럼!

하나의 자원에 대해 두군데 (AWS ASG vs AWS EKS)에서 각자의 방식으로 관리하기 때문에 관리 정보가 서로 동기화되지 않아 다양한 문제 발생합니다.

- ASG에만 의존하고 노드 생성/삭제 등에 직접 관여 안함

- EKS에서 노드를 삭제 해도 인스턴스는 삭제 안됨

- 노드 축소 될 때 특정 노드가 축소 되도록 하기 매우 어려움 : pod이 적은 노드 먼저 축소, 이미 드레인 된 노드 먼저 축소

- 특정 노드를 삭제 하면서 동시에 노드 개수를 줄이기 어려움 : 줄일때 삭제 정책 옵션이 다양하지 않음

- 정책 미지원 시 삭제 방식(예시) : 100대 중 미삭제 EC2 보호 설정 후 삭제 될 ec2의 파드를 이주 후 scaling 조절로 삭제 후 원복

- 특정 노드를 삭제하면서 동시에 노드 개수를 줄이기 어려움

- 폴링 방식이기에 너무 자주 확장 여유를 확인 하면 API 제한에 도달할 수 있음

- 스케일링 속도가 매우 느림

- Cluster Autoscaler 는 쿠버네티스 클러스터 자체의 오토 스케일링을 의미하며, 수요에 따라 워커 노드를 자동으로 추가하는 기능

- 언뜻 보기에 클러스터 전체나 각 노드의 부하 평균이 높아졌을 때 확장으로 보인다 → 함정! 🚧

- Pending 상태의 파드가 생기는 타이밍에 처음으로 Cluster Autoscaler 이 동작한다

- 즉, Request 와 Limits 를 적절하게 설정하지 않은 상태에서는 실제 노드의 부하 평균이 낮은 상황에서도 스케일 아웃이 되거나,

부하 평균이 높은 상황임에도 스케일 아웃이 되지 않는다!

- 즉, Request 와 Limits 를 적절하게 설정하지 않은 상태에서는 실제 노드의 부하 평균이 낮은 상황에서도 스케일 아웃이 되거나,

- 기본적으로 리소스에 의한 스케줄링은 Requests(최소)를 기준으로 이루어진다. 다시 말해 Requests 를 초과하여 할당한 경우에는 최소 리소스 요청만으로 리소스가 꽉 차 버려서 신규 노드를 추가해야만 한다. 이때 실제 컨테이너 프로세스가 사용하는 리소스 사용량은 고려되지 않는다.

- 반대로 Request 를 낮게 설정한 상태에서 Limit 차이가 나는 상황을 생각해보자. 각 컨테이너는 Limits 로 할당된 리소스를 최대로 사용한다. 그래서 실제 리소스 사용량이 높아졌더라도 Requests 합계로 보면 아직 스케줄링이 가능하기 때문에 클러스터가 스케일 아웃하지 않는 상황이 발생한다.

- 여기서는 CPU 리소스 할당을 예로 설명했지만 메모리의 경우도 마찬가지다.
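The arithmetic behind this can be sketched with the numbers from the nginx-to-scaleout test. The helper below counts nodes purely from CPU Requests. The name `nodes_needed` is hypothetical, the 1930m allocatable CPU per t3.medium node is an assumption, and system pods are ignored, so a real cluster may need more nodes than this estimate.

```shell
# Sketch: how many nodes are needed when placement only looks at Requests.
# nodes_needed is a hypothetical helper; all CPU values are in millicores.
nodes_needed() (
  replicas=$1        # number of pods to place
  request_m=$2       # CPU request per pod
  allocatable_m=$3   # allocatable CPU per node (assumed value)
  per_node=$(( allocatable_m / request_m ))   # pods that fit on one node
  if [ "$per_node" -lt 1 ]; then
    echo "a single pod does not fit on a node" >&2
    exit 1           # leaves only this function's subshell, i.e. returns 1
  fi
  echo $(( (replicas + per_node - 1) / per_node ))   # ceiling division
)

# 15 replicas requesting 500m each, on nodes assumed to offer 1930m:
nodes_needed 15 500 1930

# The same 15 replicas with a 100m request pack far more densely:
nodes_needed 15 100 1930
```

Under these assumptions the first call reports 5 nodes and the second reports 1, even if the containers use exactly the same amount of CPU at runtime. That gap is why Requests, not real load, decide when CA scales out.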

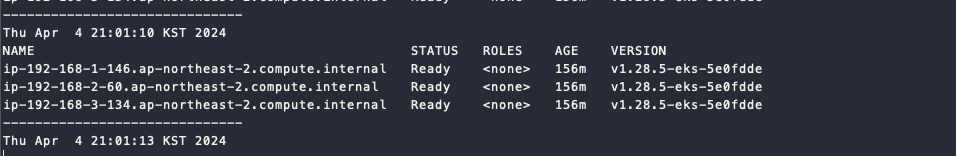

5. CPA - Cluster Proportional Autoscaler

- Horizontally autoscales applications (containers/pods) whose capacity needs to grow in proportion to the number of nodes

- CPA Install

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add cluster-proportional-autoscaler https://kubernetes-sigs.github.io/cluster-proportional-autoscaler

"cluster-proportional-autoscaler" has been added to your repositories

# We will configure the CPA rules and then release the helm chart.

# Deploy the Deployment

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# cat <<EOT > cpa-nginx.yaml
> apiVersion: apps/v1
> kind: Deployment
> metadata:
>   name: nginx-deployment
> spec:
>   replicas: 1
>   selector:
>     matchLabels:
>       app: nginx
>   template:
>     metadata:
>       labels:
>         app: nginx
>     spec:
>       containers:
>       - name: nginx
>         image: nginx:latest
>         resources:
>           limits:
>             cpu: "100m"
>             memory: "64Mi"
>           requests:
>             cpu: "100m"
>             memory: "64Mi"
>         ports:
>         - containerPort: 80
> EOT

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# kubectl apply -f cpa-nginx.yaml

deployment.apps/nginx-deployment created

# Configure the CPA rules

cat <<EOF > cpa-values.yaml
config:
  ladder:
    nodesToReplicas:
      - [1, 1]
      - [2, 2]
      - [3, 3]
      - [4, 3]
      - [5, 5]
options:
  namespace: default
  target: "deployment/nginx-deployment"
EOF
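The ladder is a step function from node count to replica count. The sketch below reproduces that lookup for the rules above; it is not CPA's code, and `ladder_replicas` is a hypothetical helper. Falling back to 1 replica for fewer nodes than the first step is an assumption.

```shell
# Sketch: look up the replica count for a node count using the ladder
# [1,1] [2,2] [3,3] [4,3] [5,5] from cpa-values.yaml.
ladder_replicas() (
  nodes=$1
  replicas=1                 # assumed fallback below the first step
  for step in "1 1" "2 2" "3 3" "4 3" "5 5"; do
    set -- $step             # $1 = node threshold, $2 = replicas for that step
    if [ "$nodes" -ge "$1" ]; then
      replicas=$2            # keep the highest step that the node count reaches
    fi
  done
  echo "$replicas"
)

for n in 3 4 5 6; do
  echo "nodes=$n -> replicas=$(ladder_replicas "$n")"
done
```

With these rules, 4 nodes map to 3 replicas and 5 nodes map to 5, which is what the node-count changes in this section are meant to demonstrate.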

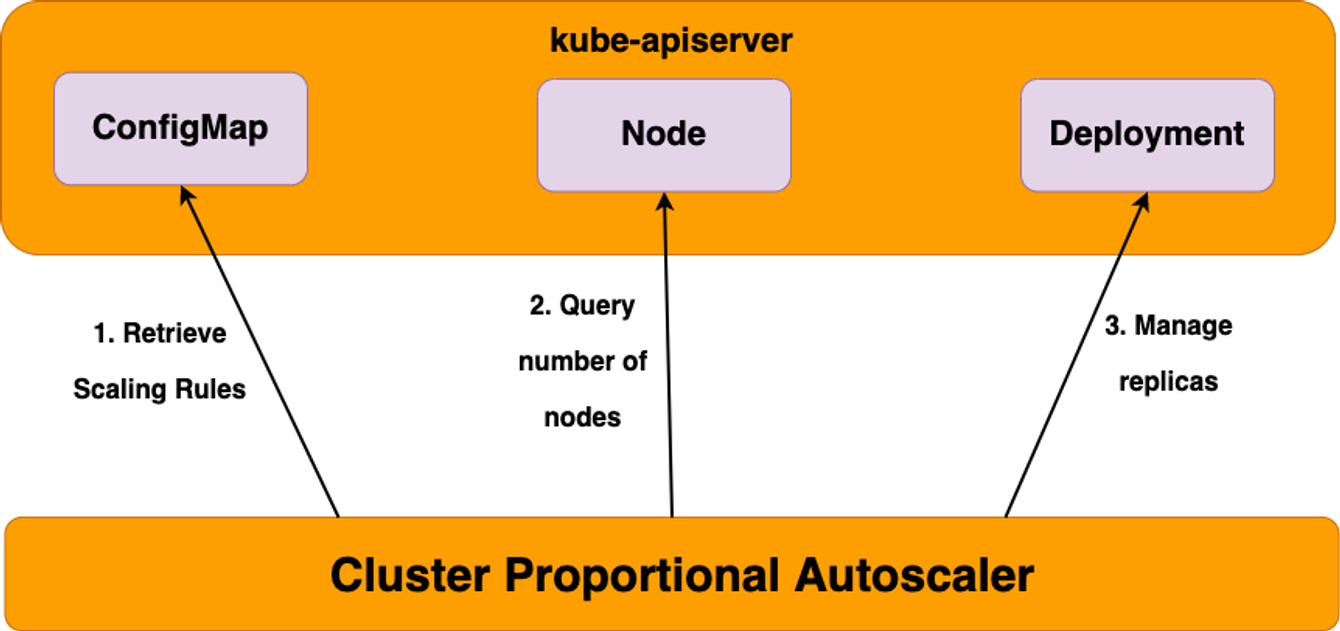

# Monitoring

watch -d kubectl get pod

# helm upgrade

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# helm upgrade --install cluster-proportional-autoscaler -f cpa-values.yaml cluster-proportional-autoscaler/cluster-proportional-autoscaler

Release "cluster-proportional-autoscaler" does not exist. Installing it now.

NAME: cluster-proportional-autoscaler

LAST DEPLOYED: Thu Apr 4 23:16:28 2024

NAMESPACE: default

STATUS: deployed

REVISION: 1

TEST SUITE: None

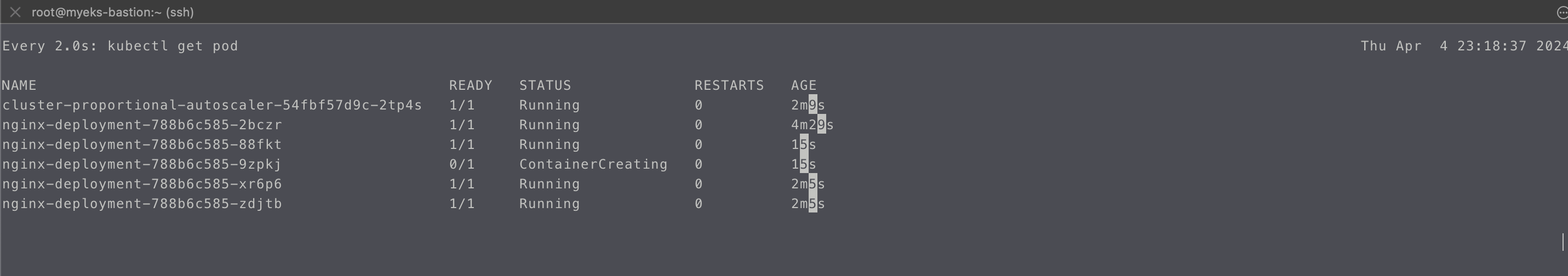

- Increase to 5 nodes

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# export ASG_NAME=$(aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].AutoScalingGroupName" --output text)

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 5 --desired-capacity 5 --max-size 5

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table

-----------------------------------------------------------------

| DescribeAutoScalingGroups |

+------------------------------------------------+----+----+----+

| eks-ng1-f0c75435-4623-c223-5024-ce7ffb6535e8 | 5 | 5 | 5 |

+------------------------------------------------+----+----+----+

- Node count increased -> confirm that pods are created according to the CPA rules

- Reduce to 4 nodes: per the rules, the replicas should drop to 3.

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# aws autoscaling update-auto-scaling-group --auto-scaling-group-name ${ASG_NAME} --min-size 4 --desired-capacity 4 --max-size 4

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# aws autoscaling describe-auto-scaling-groups --query "AutoScalingGroups[? Tags[? (Key=='eks:cluster-name') && Value=='myeks']].[AutoScalingGroupName, MinSize, MaxSize,DesiredCapacity]" --output table

-----------------------------------------------------------------

| DescribeAutoScalingGroups |

+------------------------------------------------+----+----+----+

| eks-ng1-f0c75435-4623-c223-5024-ce7ffb6535e8 | 4 | 4 | 4 |

+------------------------------------------------+----+----+----+

- Confirm that the pods were reduced to 3

- Delete resources

(leeeuijoo@myeks:default) [root@myeks-bastion vertical-pod-autoscaler]# helm uninstall cluster-proportional-autoscaler && kubectl delete -f cpa-nginx.yaml

release "cluster-proportional-autoscaler" uninstalled

deployment.apps "nginx-deployment" deleted

- Delete the entire current EKS lab environment to prepare for the Karpenter lab

eksctl delete cluster --name $CLUSTER_NAME && aws cloudformation delete-stack --stack-name $CLUSTER_NAME

6.Karpenter : K8S Native AutoScaler

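- An open-source node lifecycle management solution that provisions compute resources within seconds

- How it works

  - Monitor → (unschedulable Pod found) → evaluate specs → create ⇒ Provisioning

  - Monitor → (empty node found) → remove ⇒ Deprovisioning

- Provisioner CRD : no launch template needed! ← it takes over most of the settings a launch template would hold

  - Required : security groups, subnets

  - Resource discovery : automatic via tags, or by specifying resource IDs directly

  - Instance types can be declared as guardrails! : Spot (preferred) vs On-Demand, with a variety of instance types allowed

- Scales out with the cheapest instance among those that fit the Pods

- No need to create a node group in a single subnet for PVs → nodes are created automatically in the subnet where the PV exists

- Unused nodes are cleaned up automatically, and nodes can be set to expire automatically after a given period

  - ttlSecondsAfterEmpty : when no Pods other than DaemonSet Pods remain on a node, the node is cleaned up automatically after this many seconds

  - ttlSecondsUntilExpired : a node older than the configured period is automatically cordoned and drained, then removed

    - Because nodes are recycled periodically, their Pods are naturally rescheduled onto existing nodes with spare capacity, which makes resource use more efficient and helps keep nodes on the latest AMI

  - If nodes are not drained in time, the cluster can end up running inefficiently

  - If the other nodes have enough spare capacity even after a node is removed, that node is cleaned up automatically!

  - If one large node is cheaper than several small nodes, they are consolidated automatically! → this removes the need to operate conservatively because scale-out used to be slow

- Over-provisioning is still needed : even with Karpenter, it takes at least 1-2 minutes for an EC2 instance to boot and for all DaemonSets to be installed → create empty placeholder Pods to force spare capacity in advance!

  - Over-provisioning Pods x KEDA : prepare in advance when a large scale-out is expected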
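The Provisioner settings listed above (tag-based subnet and security group discovery, Spot/On-Demand guardrails, and the two TTL fields) can be sketched as a manifest. This is only a sketch, assuming the legacy karpenter.sh/v1alpha5 Provisioner and karpenter.k8s.aws/v1alpha1 AWSNodeTemplate APIs that these notes describe; newer Karpenter releases replace them with NodePool and EC2NodeClass, so check the API served by your installed version first. The resource name default, the TTL and CPU limit values, the file name provisioner-sketch.yaml, and the my-cluster fallback are all illustrative assumptions.

```shell
#!/usr/bin/env bash
# Sketch only: writes a legacy v1alpha5 Provisioner and its AWSNodeTemplate to a file.
# CLUSTER_NAME falls back to a placeholder; every value below is an illustrative assumption.
CLUSTER_NAME="${CLUSTER_NAME:-my-cluster}"

cat > provisioner-sketch.yaml <<EOF
apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: default
spec:
  # Guardrails: allow both Spot and On-Demand capacity
  requirements:
    - key: karpenter.sh/capacity-type
      operator: In
      values: ["spot", "on-demand"]
  limits:
    resources:
      cpu: "100"
  providerRef:
    name: default
  # Remove a node 30 seconds after only DaemonSet Pods remain on it
  ttlSecondsAfterEmpty: 30
  # Expire (cordon, drain, remove) nodes after 30 days
  ttlSecondsUntilExpired: 2592000
---
apiVersion: karpenter.k8s.aws/v1alpha1
kind: AWSNodeTemplate
metadata:
  name: default
spec:
  # Tag-based discovery of the required subnets and security groups
  subnetSelector:
    karpenter.sh/discovery: ${CLUSTER_NAME}
  securityGroupSelector:
    karpenter.sh/discovery: ${CLUSTER_NAME}
EOF

# Apply only against a cluster whose Karpenter release serves v1alpha5:
# kubectl apply -f provisioner-sketch.yaml
```

The script only writes the file, so it can be reviewed before anything reaches the cluster.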

Lab environment setup

# Download the YAML file

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/karpenter-preconfig.yaml

# Deploy the CloudFormation stack

Example) aws cloudformation deploy --template-file karpenter-preconfig.yaml --stack-name myeks2 --parameter-overrides KeyName=kp-gasida SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 MyIamUserAccessKeyID=AKIA5... MyIamUserSecretAccessKey='CVNa2...' ClusterBaseName=myeks2 --region ap-northeast-2

# Print the working EC2 instance IP once the CloudFormation stack deployment completes

aws cloudformation describe-stacks --stack-name myeks2 --query 'Stacks[*].Outputs[0].OutputValue' --output text

# SSH into the working EC2 instance

ssh -i ~/.ssh/kp-gasida.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks2 --query 'Stacks[*].Outputs[0].OutputValue' --output text)

- Pre-checks & eks-node-viewer installation

# Check the IP address : the 172.30.1.0/24 range is in use within the 172.30.0.0/16 VPC range

[root@myeks2-bastion ~]# ip -br -c addr

lo UNKNOWN 127.0.0.1/8 ::1/128

eth0 UP 172.30.1.100/24 fe80::8:beff:fec1:560b/64

docker0 DOWN 172.17.0.1/16

[root@myeks2-bastion ~]#

# Install EKS Node Viewer : on the current EC2 spec the installation takes a while (2 minutes or more)

[root@myeks2-bastion ~]# wget https://go.dev/dl/go1.22.1.linux-amd64.tar.gz

--2024-04-04 23:48:13-- https://go.dev/dl/go1.22.1.linux-amd64.tar.gz

Resolving go.dev (go.dev)... 216.239.38.21, 216.239.32.21, 216.239.34.21, ...

Connecting to go.dev (go.dev)|216.239.38.21|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://dl.google.com/go/go1.22.1.linux-amd64.tar.gz [following]

--2024-04-04 23:48:14-- https://dl.google.com/go/go1.22.1.linux-amd64.tar.gz

Resolving dl.google.com (dl.google.com)... 172.217.161.78, 2404:6800:4004:81f::200e

Connecting to dl.google.com (dl.google.com)|172.217.161.78|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 68965341 (66M) [application/x-gzip]

Saving to: ‘go1.22.1.linux-amd64.tar.gz’

100%[=========================================================================================================================================>] 68,965,341 66.9MB/s in 1.0s

2024-04-04 23:48:15 (66.9 MB/s) - ‘go1.22.1.linux-amd64.tar.gz’ saved [68965341/68965341]

[root@myeks2-bastion ~]# tar -C /usr/local -xzf go1.22.1.linux-amd64.tar.gz

[root@myeks2-bastion ~]# export PATH=$PATH:/usr/local/go/bin

[root@myeks2-bastion ~]# go install github.com/awslabs/eks-node-viewer/cmd/eks-node-viewer@latest

# Run after the EKS deployment completes

cd ~/go/bin && ./eks-node-viewer --resources cpu,memory

- Deploy EKS

# Check the variables

[root@myeks2-bastion ~]# export | egrep 'ACCOUNT|AWS_' | egrep -v 'SECRET|KEY'

declare -x ACCOUNT_ID="236747833953"

declare -x AWS_ACCOUNT_ID="236747833953"

declare -x AWS_DEFAULT_REGION="ap-northeast-2"

declare -x AWS_PAGER=""

declare -x AWS_REGION="ap-northeast-2"

# Set the variables

export KARPENTER_NAMESPACE="kube-system"

export K8S_VERSION="1.29"

export KARPENTER_VERSION="0.35.2"

export TEMPOUT=$(mktemp)

export ARM_AMI_ID="$(aws ssm get-parameter --name /aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2-arm64/recommended/image_id --query Parameter.Value --output text)"

export AMD_AMI_ID="$(aws ssm get-parameter --name /aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2/recommended/image_id --query Parameter.Value --output text)"

export GPU_AMI_ID="$(aws ssm get-parameter --name /aws/service/eks/optimized-ami/${K8S_VERSION}/amazon-linux-2-gpu/recommended/image_id --query Parameter.Value --output text)"

export AWS_PARTITION="aws"

export CLUSTER_NAME="${USER}-karpenter-demo"

echo "export CLUSTER_NAME=$CLUSTER_NAME" >> /etc/profile

echo $KARPENTER_VERSION $CLUSTER_NAME $AWS_DEFAULT_REGION $AWS_ACCOUNT_ID $TEMPOUT $ARM_AMI_ID $AMD_AMI_ID $GPU_AMI_ID

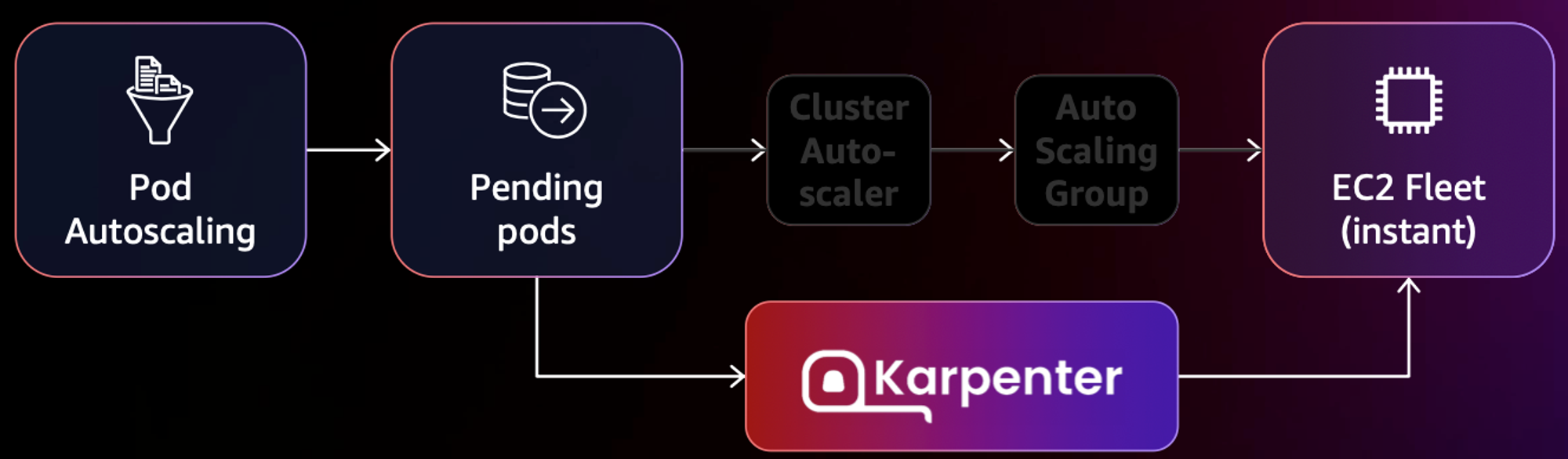
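The eksctl config further down is rendered from these variables, so an empty one (for example an AMI lookup that failed) produces a broken config without any error. A minimal sketch of a pre-flight check follows; check_vars is a hypothetical helper name, not part of any tool used here, and it relies on printenv, so it only sees exported variables.

```shell
#!/usr/bin/env bash
# Report every named variable that is not exported or is empty.
# Returns 0 when all are set, 1 otherwise.
check_vars() {
  cv_missing=0
  for cv_name in "$@"; do
    if [ -z "$(printenv "$cv_name")" ]; then
      echo "missing: $cv_name" >&2
      cv_missing=1
    fi
  done
  return "$cv_missing"
}

# Usage with the variables exported above:
# check_vars KARPENTER_NAMESPACE K8S_VERSION KARPENTER_VERSION CLUSTER_NAME \
#   AWS_DEFAULT_REGION AWS_ACCOUNT_ID AWS_PARTITION AMD_AMI_ID || echo "fix the variables first"
```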

# Create the IAM Policy and Role (KarpenterNodeRole-${CLUSTER_NAME}) with a CloudFormation stack : takes about 3 minutes

curl -fsSL https://raw.githubusercontent.com/aws/karpenter-provider-aws/v"${KARPENTER_VERSION}"/website/content/en/preview/getting-started/getting-started-with-karpenter/cloudformation.yaml > "${TEMPOUT}" \
&& aws cloudformation deploy \
  --stack-name "Karpenter-${CLUSTER_NAME}" \
  --template-file "${TEMPOUT}" \
  --capabilities CAPABILITY_NAMED_IAM \
  --parameter-overrides "ClusterName=${CLUSTER_NAME}"
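The cluster config that follows attaches a policy and a role created by this stack, so it can help to confirm the stack finished cleanly before moving on, especially when returning to the session later. The sketch below is only an illustration: is_stack_ready is a hypothetical helper, and treating CREATE_COMPLETE and UPDATE_COMPLETE as the only acceptable states is an assumption for this lab.

```shell
#!/usr/bin/env bash
# Succeed only for the stack states that indicate a finished, healthy deployment.
is_stack_ready() {
  case "$1" in
    CREATE_COMPLETE|UPDATE_COMPLETE) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage (requires AWS credentials and the stack deployed above):
# status=$(aws cloudformation describe-stacks --stack-name "Karpenter-${CLUSTER_NAME}" \
#   --query 'Stacks[0].StackStatus' --output text)
# if is_stack_ready "$status"; then echo "stack ready: $status"; else echo "stack not ready: $status"; fi
```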

- Create the cluster

eksctl create cluster -f - <<EOF
---
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: ${CLUSTER_NAME}
  region: ${AWS_DEFAULT_REGION}
  version: "${K8S_VERSION}"
  tags:
    karpenter.sh/discovery: ${CLUSTER_NAME}

iam:
  withOIDC: true
  serviceAccounts:
  - metadata:
      name: karpenter
      namespace: "${KARPENTER_NAMESPACE}"
    roleName: ${CLUSTER_NAME}-karpenter
    attachPolicyARNs:
    - arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:policy/KarpenterControllerPolicy-${CLUSTER_NAME}
    roleOnly: true

iamIdentityMappings:
- arn: "arn:${AWS_PARTITION}:iam::${AWS_ACCOUNT_ID}:role/KarpenterNodeRole-${CLUSTER_NAME}"
  username: system:node:{{EC2PrivateDNSName}}
  groups:
  - system:bootstrappers
  - system:nodes

managedNodeGroups:
- instanceType: m5.large
  amiFamily: AmazonLinux2
  name: ${CLUSTER_NAME}-ng
  desiredCapacity: 2
  minSize: 1
  maxSize: 10
  iam:
    withAddonPolicies:
      externalDNS: true
EOF

# Verify the EKS deployment