0. Deploying the CloudFormation Stack

- If you deploy the CloudFormation stack with an AWS account (profile) other than the default one, add the --profile <profile Name> option to the commands.

# Download the YAML file

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/eks-oneclick2.yaml

# Deploy the CloudFormation stack

Example) aws cloudformation deploy --template-file eks-oneclick2.yaml --stack-name myeks --parameter-overrides KeyName=kp-gasida SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 MyIamUserAccessKeyID='~' MyIamUserSecretAccessKey='~' ClusterBaseName=myeks --region ap-northeast-2

# After the stack deployment completes, print the IP of the bastion EC2 instance used for the lab

aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text

# SSH into the bastion EC2 instance

ssh -i ~/.ssh/kp-gasida.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text)

Example) ssh -i ejl-eks.pem ec2-user@$(aws cloudformation describe-stacks --stack-name myeks --query 'Stacks[*].Outputs[0].OutputValue' --output text --profile ejl-personal)

The authenticity of host '3.38.115.229 (3.38.115.229)' can't be established.

ED25519 key fingerprint is SHA256:tQwPk327qdcRbXZ1vtHT4d1W09OhFyDZR/ULkgGPMLA.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '3.38.115.229' (ED25519) to the list of known hosts.

Amazon Linux 2

AL2 End of Life is 2025-06-30.

A newer version of Amazon Linux is available!

Amazon Linux 2023, GA and supported until 2028-03-15.

https://aws.amazon.com/linux/amazon-linux-2023/

7 package(s) needed for security, out of 12 available

Run "sudo yum update" to apply all updates.

- Basic setup and EFS check

- Because this lab is about storage, an AWS EFS file system is also provisioned, unlike in the previous post.

# Check the EFS file system ID

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo $EfsFsId

fs-024b43fbf2d8c3b93

# Mount the EFS file system on /mnt/myefs

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# mount -t efs -o tls $EfsFsId:/ /mnt/myefs

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# df -hT --type nfs4

Filesystem Type Size Used Avail Use% Mounted on

127.0.0.1:/ nfs4 8.0E 0 8.0E 0% /mnt/myefs

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo "efs file test" > /mnt/myefs/memo.txt

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat /mnt/myefs/memo.txt

efs file test

# Check the StorageClasses

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 23m


(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get sc gp2 -o yaml | yh

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{"storageclass.kubernetes.io/is-default-class":"true"},"name":"gp2"},"parameters":{"fsType":"ext4","type":"gp2"},"provisioner":"kubernetes.io/aws-ebs","volumeBindingMode":"WaitForFirstConsumer"}
    storageclass.kubernetes.io/is-default-class: "true"
  creationTimestamp: "2024-03-21T05:28:41Z"
  name: gp2
  resourceVersion: "281"
  uid: 19c4debb-c6bf-4135-8f69-0da412605537
parameters:
  fsType: ext4
  type: gp2
provisioner: kubernetes.io/aws-ebs
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get csinodes

NAME DRIVERS AGE

ip-192-168-1-166.ap-northeast-2.compute.internal 0 18m

ip-192-168-2-138.ap-northeast-2.compute.internal 0 18m

ip-192-168-3-184.ap-northeast-2.compute.internal 0 18m

# Check node information

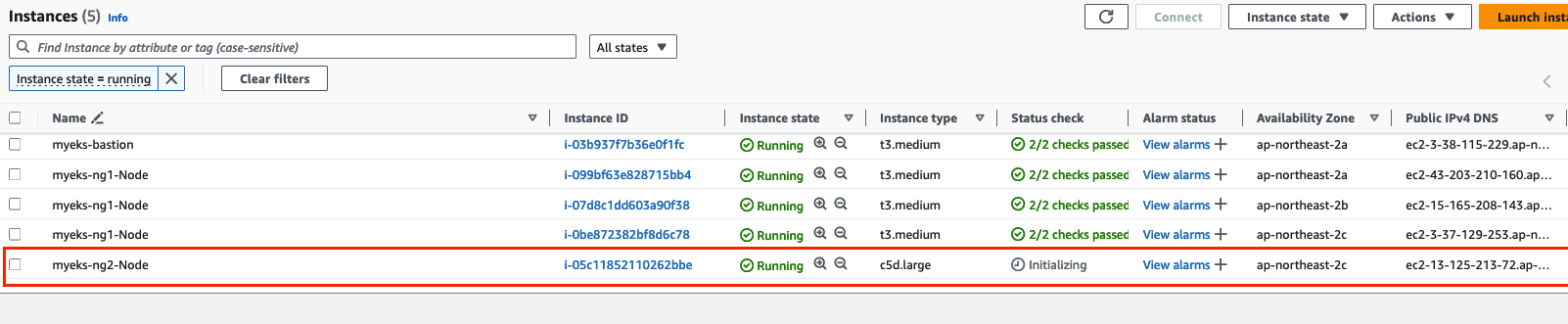

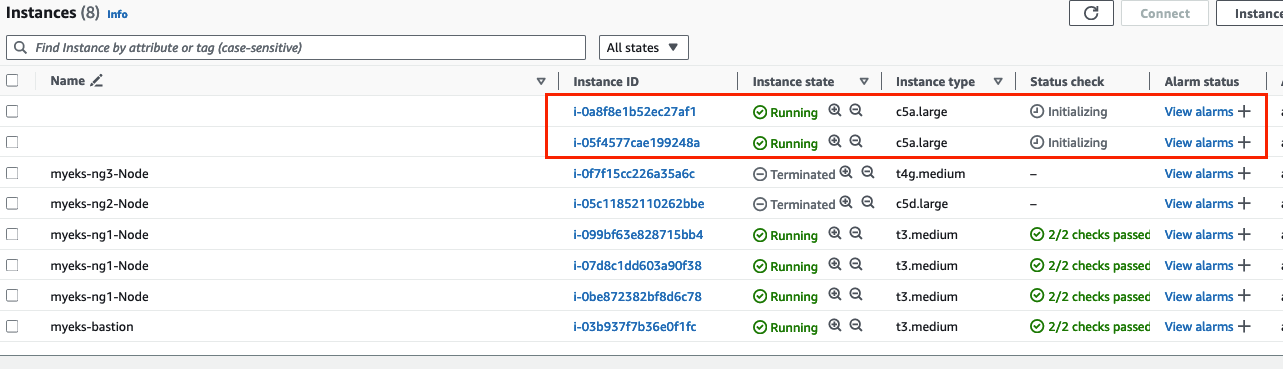

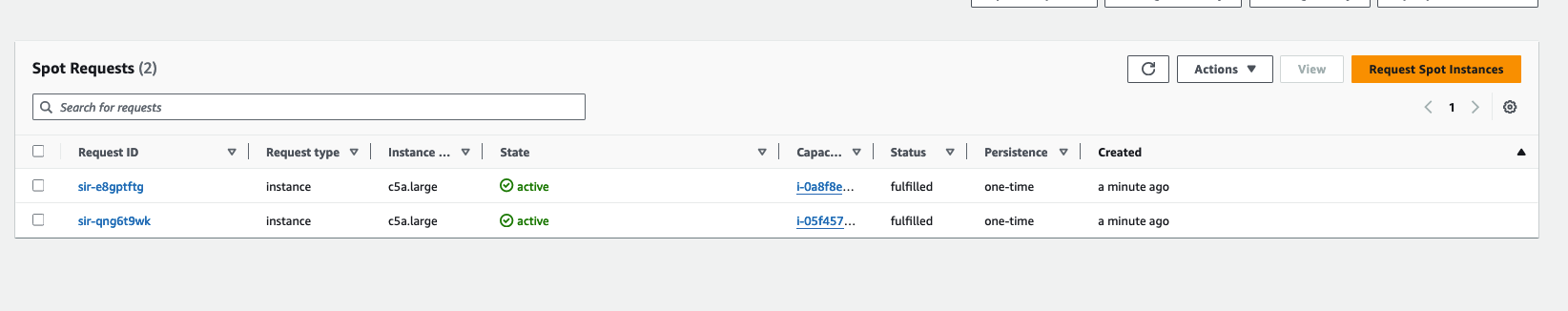

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get node --label-columns=node.kubernetes.io/instance-type,eks.amazonaws.com/capacityType,topology.kubernetes.io/zone

NAME STATUS ROLES AGE VERSION INSTANCE-TYPE CAPACITYTYPE ZONE

ip-192-168-1-166.ap-northeast-2.compute.internal Ready <none> 19m v1.28.5-eks-5e0fdde t3.medium ON_DEMAND ap-northeast-2a

ip-192-168-2-138.ap-northeast-2.compute.internal Ready <none> 19m v1.28.5-eks-5e0fdde t3.medium ON_DEMAND ap-northeast-2b

ip-192-168-3-184.ap-northeast-2.compute.internal Ready <none> 19m v1.28.5-eks-5e0fdde t3.medium ON_DEMAND ap-northeast-2c

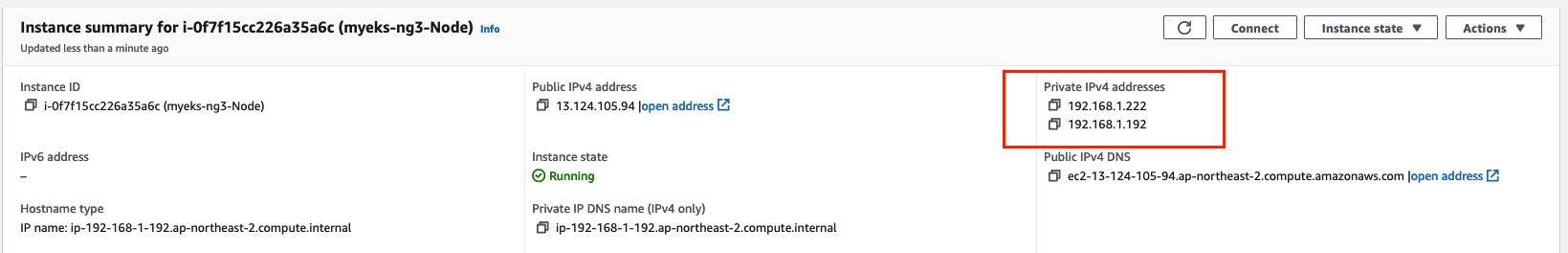

# Store each node's private IP in a variable for the lab

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# N1=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2a -o jsonpath={.items[0].status.addresses[0].address})

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# N2=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2b -o jsonpath={.items[0].status.addresses[0].address})

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# N3=$(kubectl get node --label-columns=topology.kubernetes.io/zone --selector=topology.kubernetes.io/zone=ap-northeast-2c -o jsonpath={.items[0].status.addresses[0].address})

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo "export N1=$N1" >> /etc/profile

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo "export N2=$N2" >> /etc/profile

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo "export N3=$N3" >> /etc/profile

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo $N1, $N2, $N3

192.168.1.166, 192.168.2.138, 192.168.3.184
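Since the nodes use EC2 IP-based hostnames (compare `ip-192-168-1-166...` with `192.168.1.166` above), the private IP can also be read straight from the node name. A minimal sketch, assuming IP-based naming; `node_ip_from_name` is a hypothetical helper, and it prints nothing for other hostname formats.

```shell
# Hypothetical helper: turn an EC2 IP-based hostname such as
# ip-192-168-1-166.ap-northeast-2.compute.internal into 192.168.1.166.
# Prints nothing when the name does not follow the ip-a-b-c-d pattern.
node_ip_from_name() {
  printf '%s\n' "$1" |
    sed -n -E 's/^ip-([0-9]+)-([0-9]+)-([0-9]+)-([0-9]+)(\..*)?$/\1.\2.\3.\4/p'
}

# Usage with cluster access: print "<zone> <private ip>" for every node.
# kubectl get node -L topology.kubernetes.io/zone --no-headers |
#   awk '{print $1, $NF}' |
#   while read -r name zone; do echo "$zone $(node_ip_from_name "$name")"; done
```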

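# Look up the node security group ID, then allow all inbound traffic from the bastion's private IP (192.168.1.100/32)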

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# NGSGID=$(aws ec2 describe-security-groups --filters Name=group-name,Values=*ng1* --query "SecurityGroups[*].[GroupId]" --output text)

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 authorize-security-group-ingress --group-id $NGSGID --protocol '-1' --cidr 192.168.1.100/32

{

"Return": true,

"SecurityGroupRules": [

{

"SecurityGroupRuleId": "sgr-07f45e0d4a0d62cc5",

"GroupId": "sg-0d1dcd192d8d6bcf6",

"GroupOwnerId": "236747833953",

"IsEgress": false,

"IpProtocol": "-1",

"FromPort": -1,

"ToPort": -1,

"CidrIpv4": "192.168.1.100/32"

}

]

}

# Verify SSH access to the worker nodes (answer yes to each host-key prompt)

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# for node in $N1 $N2 $N3; do ssh ec2-user@$node hostname; done

yes

yes

yes

- Install the AWS Load Balancer Controller, ExternalDNS, and kube-ops-view

# LB Controller Install

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add eks https://aws.github.io/eks-charts

"eks" has been added to your repositories

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "eks" chart repository

Update Complete. ⎈Happy Helming!⎈

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=$CLUSTER_NAME \

> --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

NAME: aws-load-balancer-controller

LAST DEPLOYED: Thu Mar 21 15:02:28 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

AWS Load Balancer controller installed!

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get pod -n kube-system | grep load

aws-load-balancer-controller-75c55f7bd-b9gxv 1/1 Running 0 58s

aws-load-balancer-controller-75c55f7bd-zpq4n 1/1 Running 0 58s

# ExternalDNS Install

# Set the domain and look up its Route 53 hosted zone ID

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# MyDomain=22joo.shop

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# MyDnzHostedZoneId=$(aws route53 list-hosted-zones-by-name --dns-name "${MyDomain}." --query "HostedZones[0].Id" --output text)

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo $MyDomain, $MyDnzHostedZoneId

22joo.shop, /hostedzone/Z07798463AFECYTX1ODP4

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/aews/externaldns.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# sed -i "s/0.13.4/0.14.0/g" externaldns.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# MyDomain=$MyDomain MyDnzHostedZoneId=$MyDnzHostedZoneId envsubst < externaldns.yaml | kubectl apply -f -

serviceaccount/external-dns created

clusterrole.rbac.authorization.k8s.io/external-dns created

clusterrolebinding.rbac.authorization.k8s.io/external-dns-viewer created

deployment.apps/external-dns created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get pod -n kube-system | grep external

external-dns-7fd77dcbc-9gpll 1/1 Running 0 26s

# Install kube-ops-view

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

"geek-cookbook" has been added to your repositories

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set env.TZ="Asia/Seoul" --namespace kube-system

NAME: kube-ops-view

LAST DEPLOYED: Thu Mar 21 15:28:31 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods --namespace kube-system -l "app.kubernetes.io/name=kube-ops-view,app.kubernetes.io/instance=kube-ops-view" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:8080 to use your application"

kubectl port-forward $POD_NAME 8080:8080

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl patch svc -n kube-system kube-ops-view -p '{"spec":{"type":"LoadBalancer"}}'

service/kube-ops-view patched

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl annotate service kube-ops-view -n kube-system "external-dns.alpha.kubernetes.io/hostname=kubeopsview.$MyDomain"

service/kube-ops-view annotated

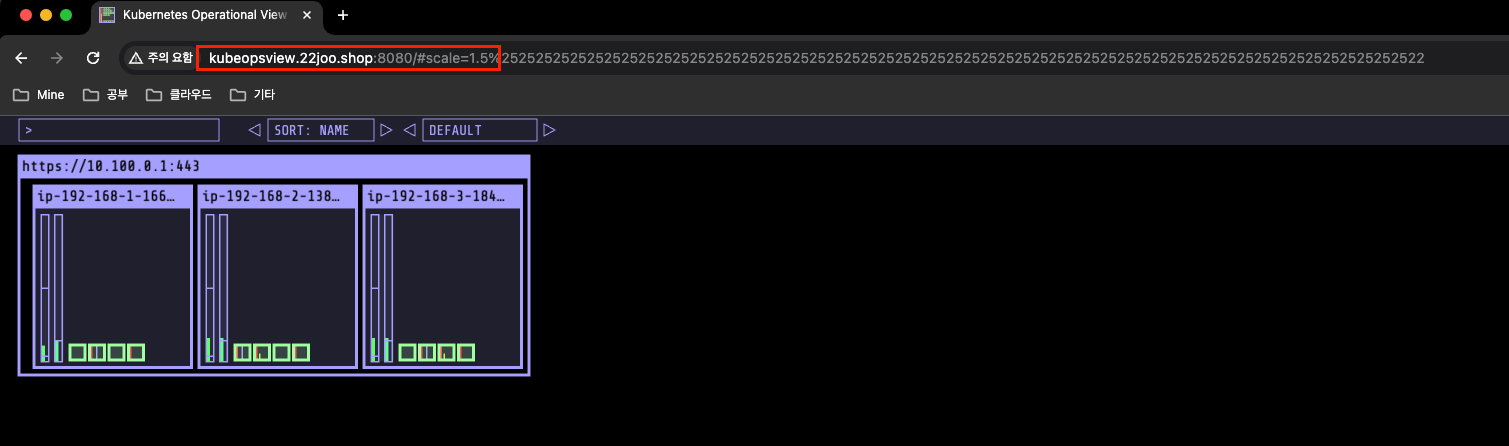

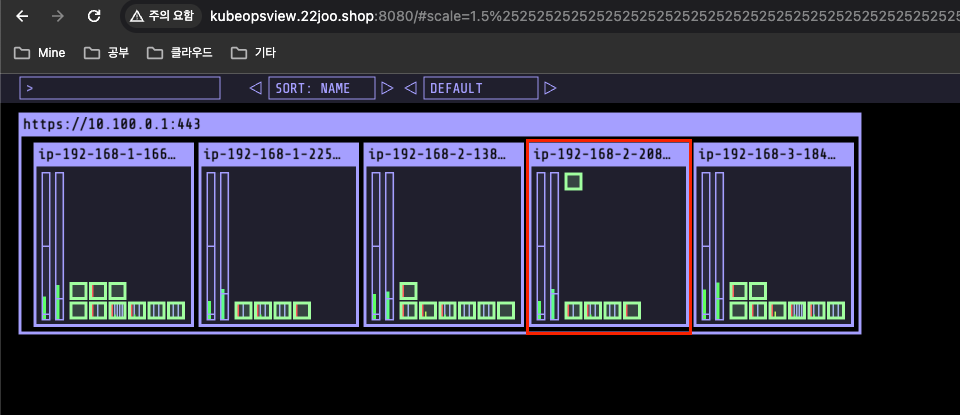

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# echo -e "Kube Ops View URL = http://kubeopsview.$MyDomain:8080/#scale=1.5"

Kube Ops View URL = http://kubeopsview.22joo.shop:8080/#scale=1.5

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get svc -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

aws-load-balancer-webhook-service ClusterIP 10.100.128.136 <none> 443/TCP 28m

kube-dns ClusterIP 10.100.0.10 <none> 53/UDP,53/TCP,9153/TCP 60m

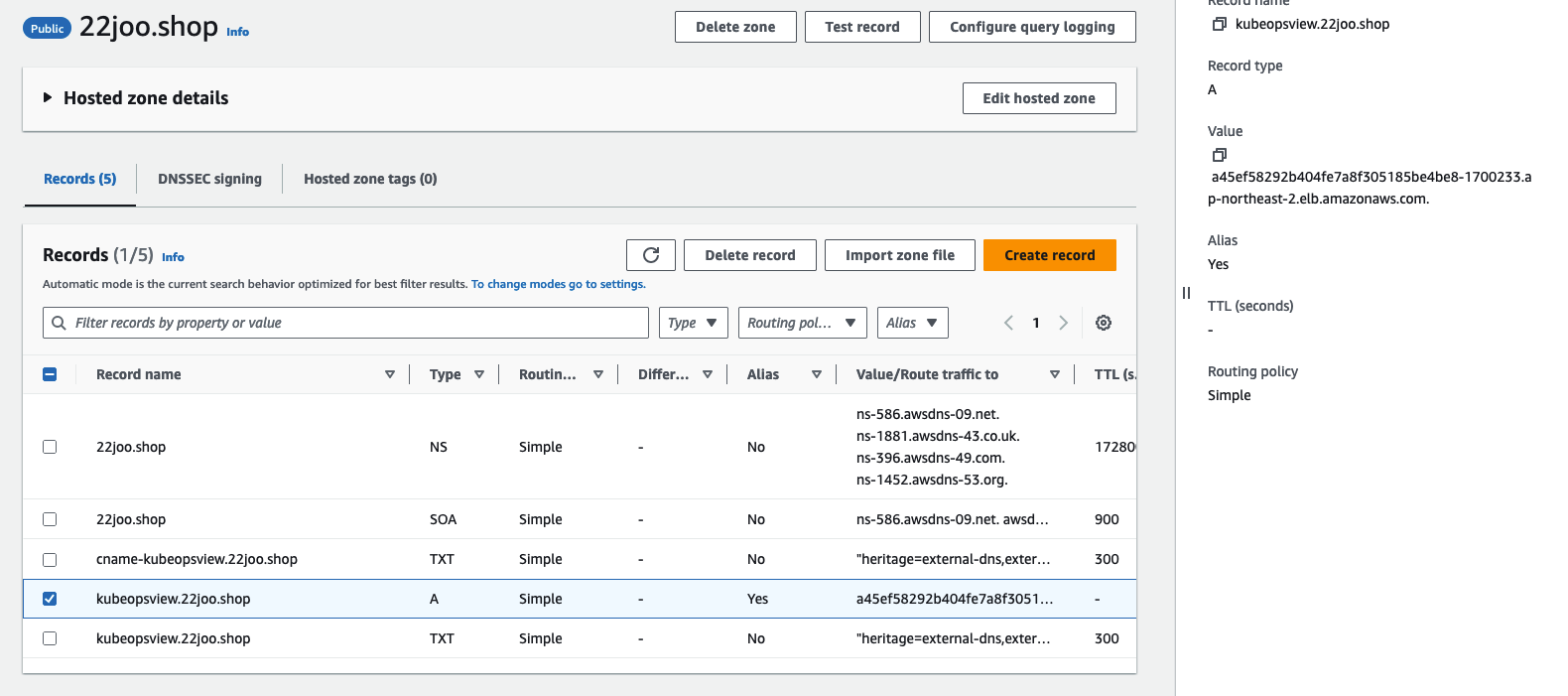

kube-ops-view LoadBalancer 10.100.13.209 a45ef58292b404fe7a8f305185be4be8-1700233.ap-northeast-2.elb.amazonaws.com 8080:31678/TCP 2m3s- A Record 로 Loadbalancer type으로 설정한 kube-ops-view 서비스를 먹고있음을 확인 할 수 있습니다.

- Access the service through the domain

- Check the installation details

# List the container images in use, with counts

kubectl get pods --all-namespaces -o jsonpath="{.items[*].spec.containers[*].image}" | tr -s '[[:space:]]' '\n' | sort | uniq -c

# Check the add-ons installed/updated by eksctl

eksctl get addon --cluster $CLUSTER_NAME

# Check IRSA (IAM Roles for Service Accounts)

eksctl get iamserviceaccount --cluster $CLUSTER_NAME

1. Understanding Storage

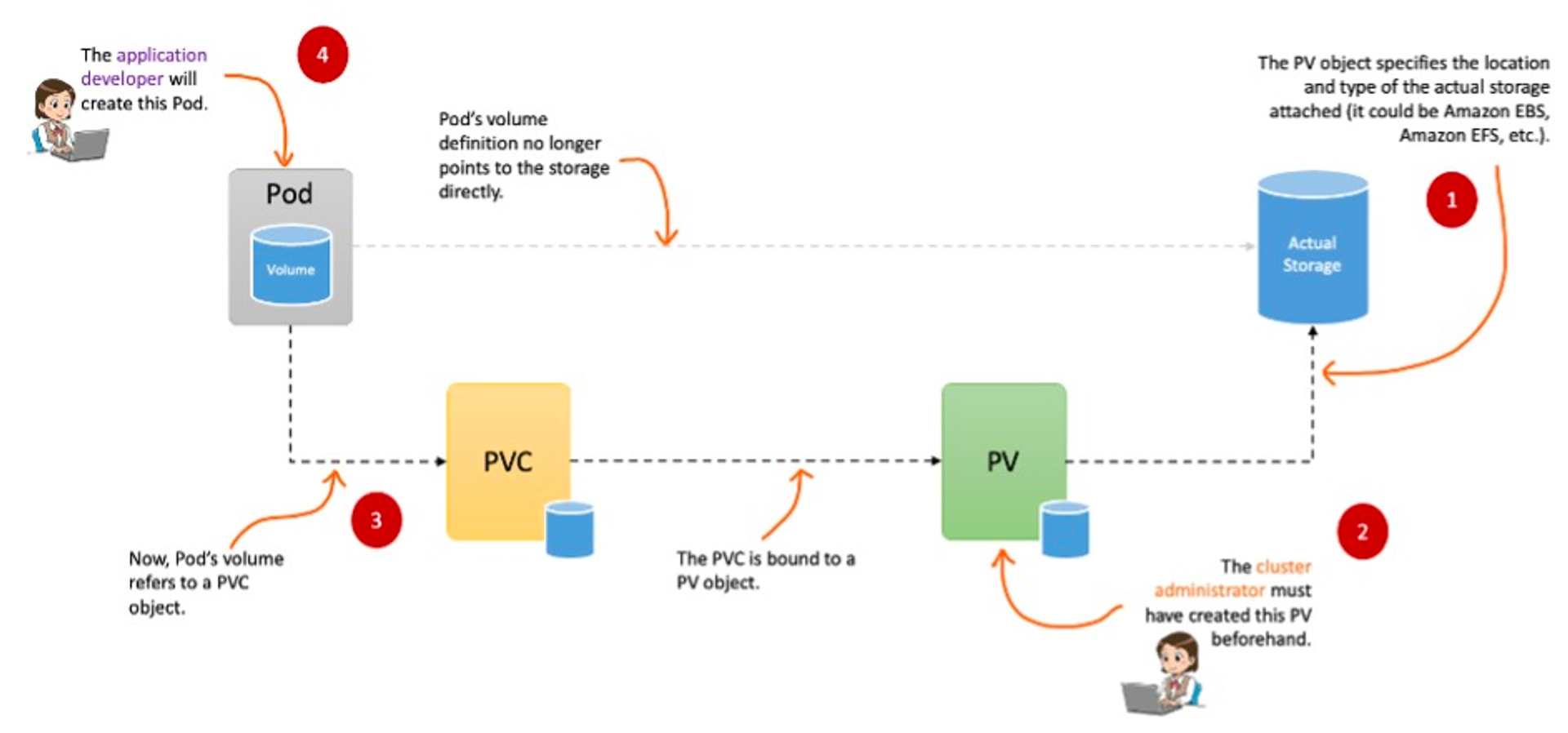

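- When a running Pod is deleted, all data written inside that Pod is lost. In other words, the container uses an ephemeral filesystem.

- To keep data beyond a Pod's lifetime, Kubernetes introduces the PersistentVolume (PV) and PersistentVolumeClaim (PVC) concepts.

- For example, you define a PV (PersistentVolume) backed by a local volume (hostPath), then bind it through a PVC (PersistentVolumeClaim) and use it.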
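To make the static PV/PVC pairing concrete, here is a minimal sketch that prints a hostPath-backed PV and a PVC that binds to it. The names (`demo-pv`, `demo-pvc`), the `/tmp/demo-pv` path, and the 1Gi size are illustrative assumptions; the empty `storageClassName` keeps the default StorageClass (gp2 here) from dynamically provisioning a different volume instead.

```shell
# Print a statically defined hostPath PV and a PVC pinned to it.
# All names, the host path and the size are illustrative assumptions.
static_pv_pvc_manifest() {
  local pv_name=${1:-demo-pv} pvc_name=${2:-demo-pvc} host_dir=${3:-/tmp/demo-pv}
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${pv_name}
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""
  hostPath:
    path: ${host_dir}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${pvc_name}
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ""      # empty: bind to an existing PV, no dynamic provisioning
  volumeName: ${pv_name}    # pin the claim to the PV above
  resources:
    requests:
      storage: 1Gi
EOF
}

# Usage on a test cluster: static_pv_pvc_manifest | kubectl apply -f -
```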

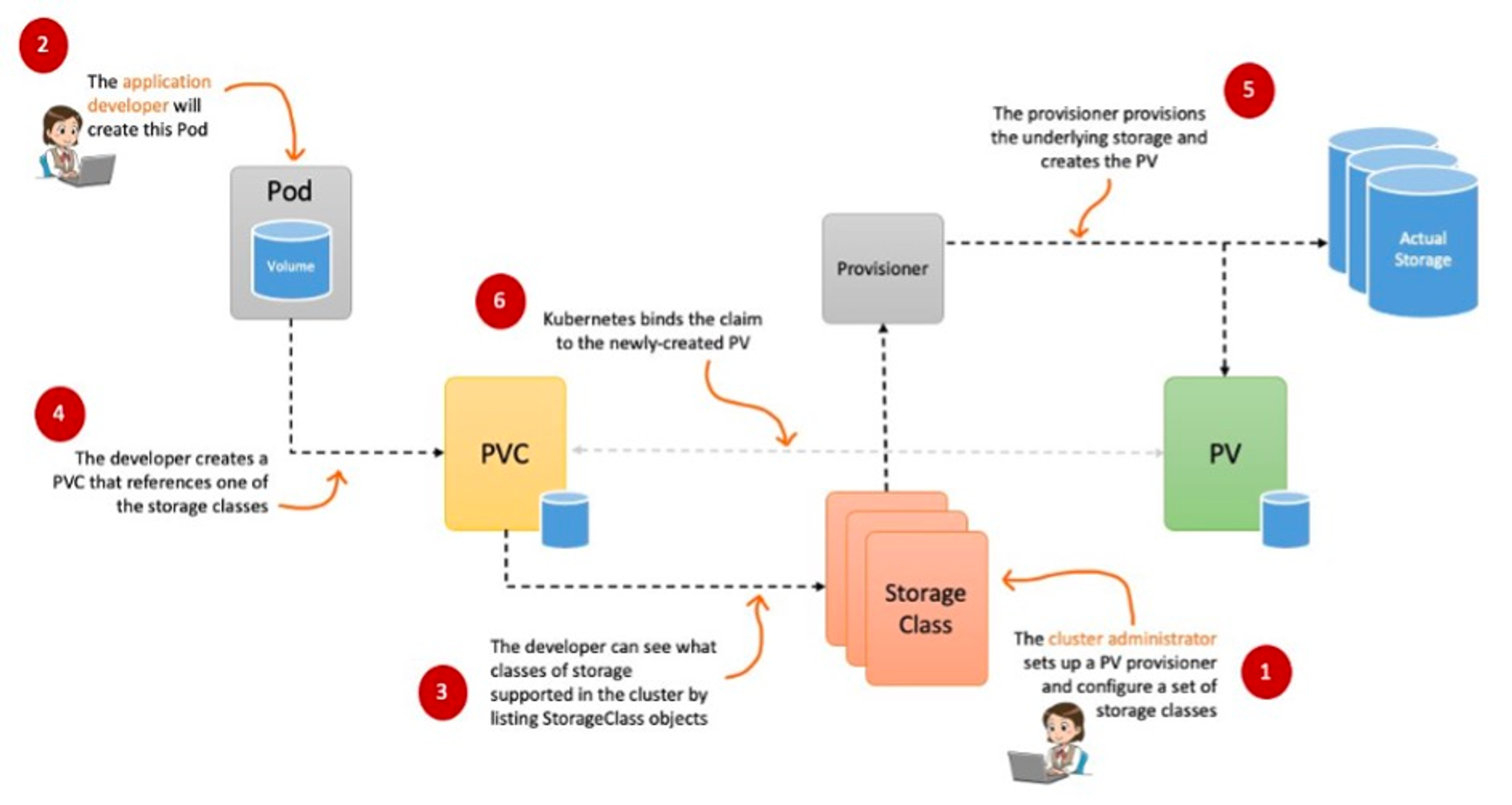

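- Creating a volume automatically when a PVC requests storage, instead of having an administrator create the PV in advance, is called Dynamic Provisioning. The provisioned volume is then mounted into the Pod that uses the claim.

- You can also configure what happens to the volume once its PV is no longer in use (its PVC has been deleted); Kubernetes calls this the Reclaim Policy. The available policies are:

- Retain (the PV and its data are kept)

- Delete (the PV is deleted together with its backing storage, e.g. the EBS volume)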
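With dynamic provisioning no PV is written by hand: a StorageClass names the provisioner and the reclaim policy, and the PVC only references the class. A minimal sketch that prints such a pair; the class name pattern `demo-<policy>`, the claim name `demo-claim`, and the use of the EBS CSI provisioner `ebs.csi.aws.com` (installed later in this post) are assumptions for illustration.

```shell
# Print a StorageClass with the chosen reclaim policy plus a PVC that uses it.
# The provisioner creates the PV on demand once the claim is consumed.
dynamic_pvc_manifest() {
  local policy class
  policy=${1:-Retain}
  case $policy in
    Retain|Delete) ;;
    *) echo "unsupported reclaim policy: $policy" >&2; return 1 ;;
  esac
  class=demo-$(printf '%s' "$policy" | tr '[:upper:]' '[:lower:]')
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${class}
provisioner: ebs.csi.aws.com
reclaimPolicy: ${policy}
volumeBindingMode: WaitForFirstConsumer
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim
spec:
  storageClassName: ${class}
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF
}

# Usage on a test cluster: dynamic_pvc_manifest Retain | kubectl apply -f -
```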

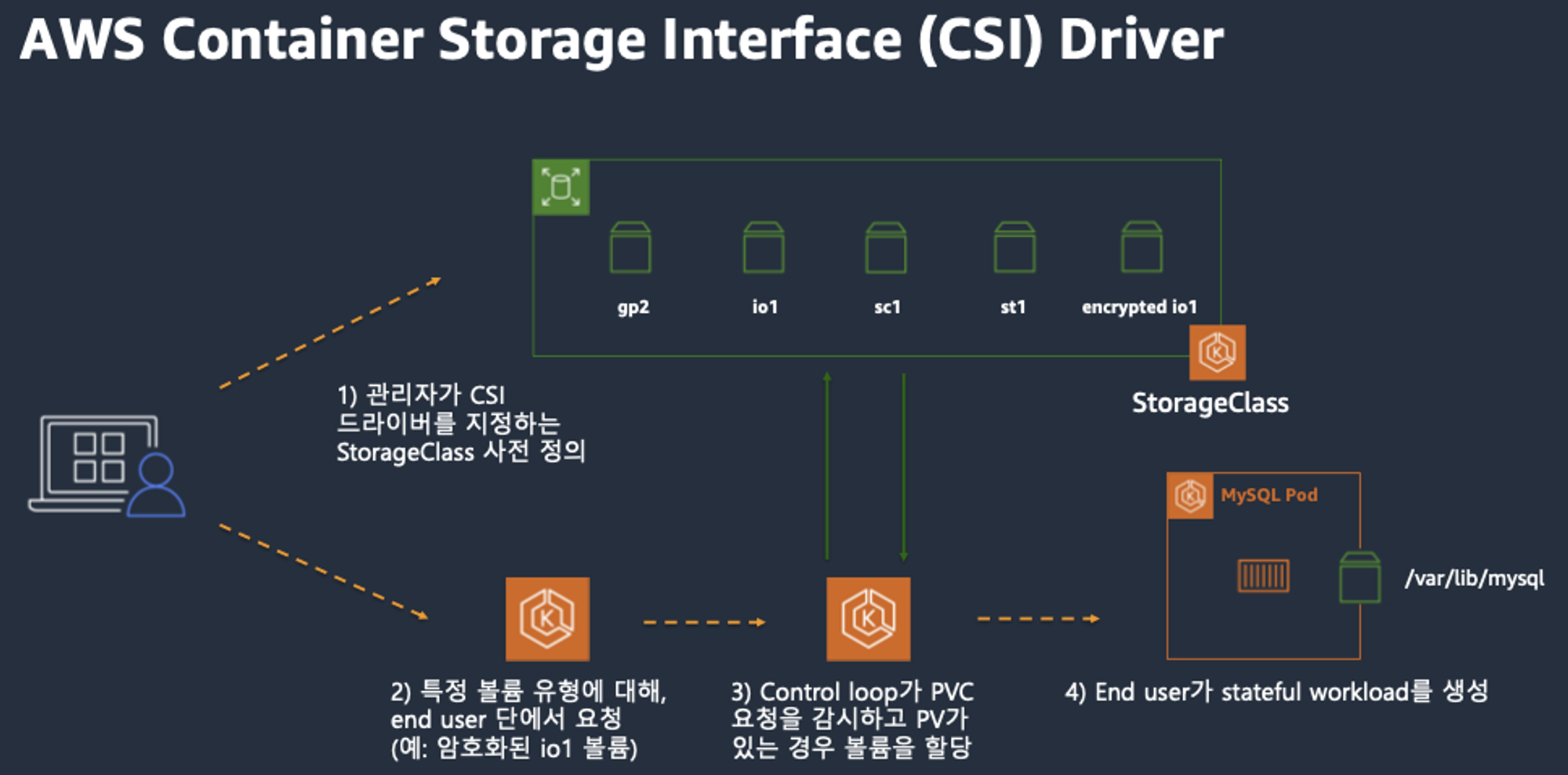

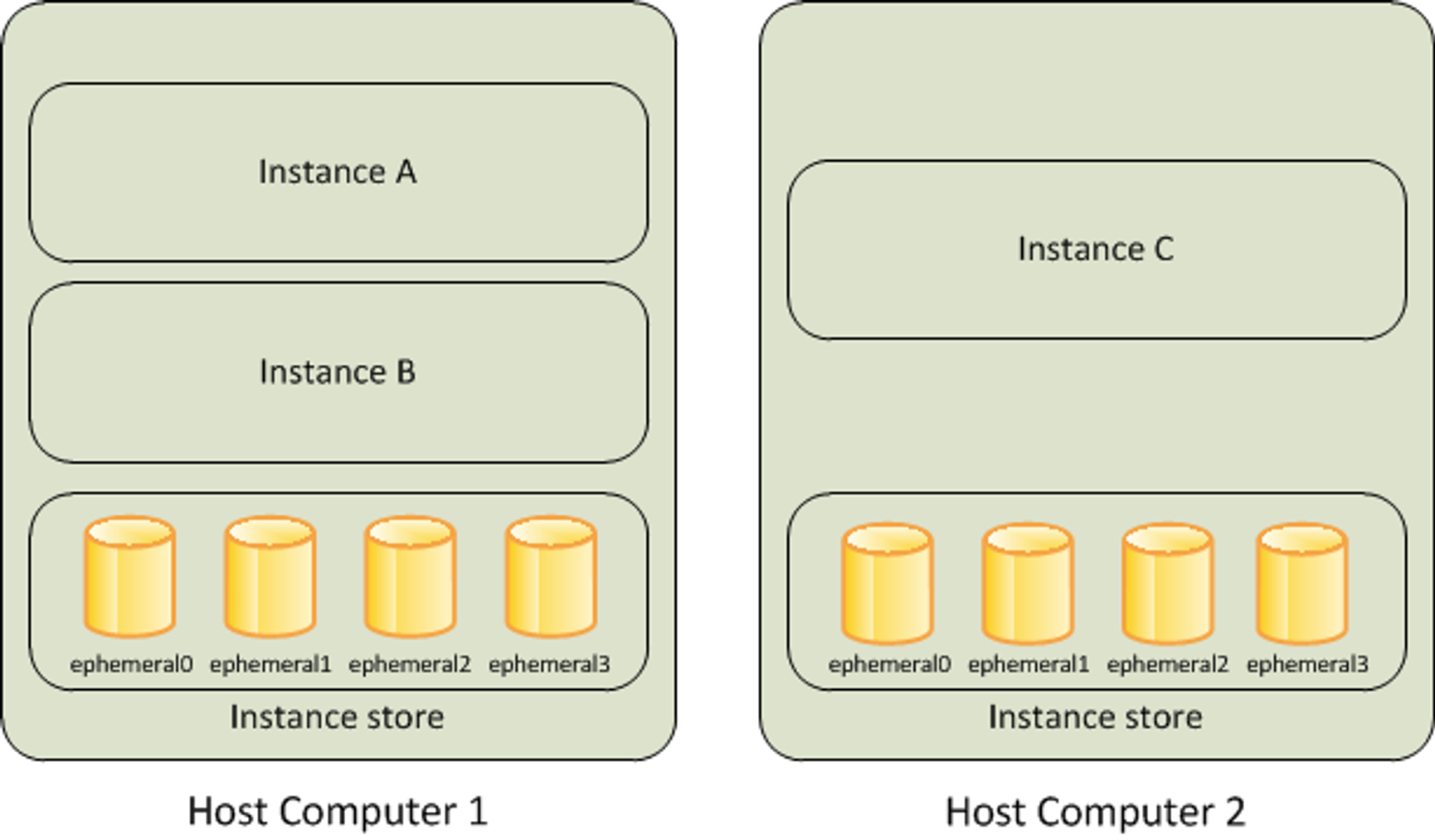

CSI Driver

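- In short, CSI (Container Storage Interface) moves the storage provisioners that used to be built into Kubernetes out into separate drivers, and a dedicated controller Pod handles Dynamic Provisioning.

- The controller Pod on the right, deployed as a StatefulSet or Deployment, uses the AWS API to create the actual EBS volume and attach it to the Kubernetes node (EC2 instance).

- The node Pod on the left, deployed as a DaemonSet, runs on every node and formats and mounts the attached EBS volume so that the Pod can use it.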
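This split maps onto the RPCs defined by the CSI specification. The lookup below is a simplified, illustrative sketch (not code from the EBS CSI driver) of which component serves each call for EBS: volume creation and attach on the controller side, formatting and mounting on the node side.

```shell
# Simplified map of CSI RPCs to the EBS CSI component that serves them.
# Illustrative only; the real driver implements these as gRPC services.
csi_component_for() {
  case $1 in
    CreateVolume|DeleteVolume|ControllerPublishVolume|ControllerUnpublishVolume)
      echo "ebs-csi-controller (Deployment): calls the EC2 API" ;;
    NodeStageVolume|NodeUnstageVolume|NodePublishVolume|NodeUnpublishVolume)
      echo "ebs-csi-node (DaemonSet): formats/mounts on the node" ;;
    *)
      echo "unknown RPC: $1" >&2; return 1 ;;
  esac
}

# Typical order of calls when a Pod first uses a new volume:
# create -> attach to the EC2 instance -> format/stage -> mount into the Pod.
for rpc in CreateVolume ControllerPublishVolume NodeStageVolume NodePublishVolume; do
  printf '%-26s -> %s\n' "$rpc" "$(csi_component_for "$rpc")"
done
```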

Now let's see how the ephemeral filesystem behaves in a default container environment.

- We'll check it with a scenario that deletes a Pod and starts it again.

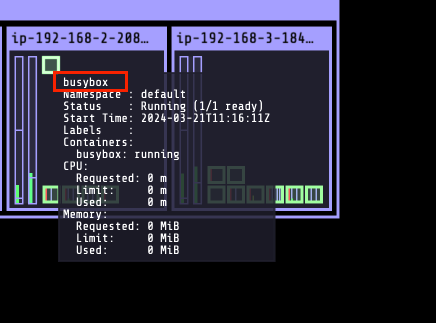

# Deploy a Pod that appends the current time (from the date command) to /home/pod-out.txt every 10 seconds

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/date-busybox-pod.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat date-busybox-pod.yaml | yh

apiVersion: v1
kind: Pod
metadata:
  name: busybox
spec:
  terminationGracePeriodSeconds: 3
  containers:
  - name: busybox
    image: busybox
    command:
    - "/bin/sh"
    - "-c"
    - "while true; do date >> /home/pod-out.txt; cd /home; sync; sync; sleep 10; done"

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f date-busybox-pod.yaml

pod/busybox created

# Check the file

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

busybox 1/1 Running 0 27s

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl exec busybox -- tail -f /home/pod-out.txt

Thu Mar 21 07:28:26 UTC 2024

Thu Mar 21 07:28:36 UTC 2024

Thu Mar 21 07:28:46 UTC 2024

# Delete the Pod, recreate it, and check the file again

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl delete pod busybox

pod "busybox" deleted

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f date-busybox-pod.yaml

pod/busybox created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl exec busybox -- tail -f /home/pod-out.txt

Thu Mar 21 07:29:35 UTC 2024

- Result

The entries written by the previous Pod (Thu Mar 21 07:28:26, 07:28:36 and 07:28:46 UTC 2024) are gone: the file lived in the container's ephemeral filesystem and was deleted together with the Pod.

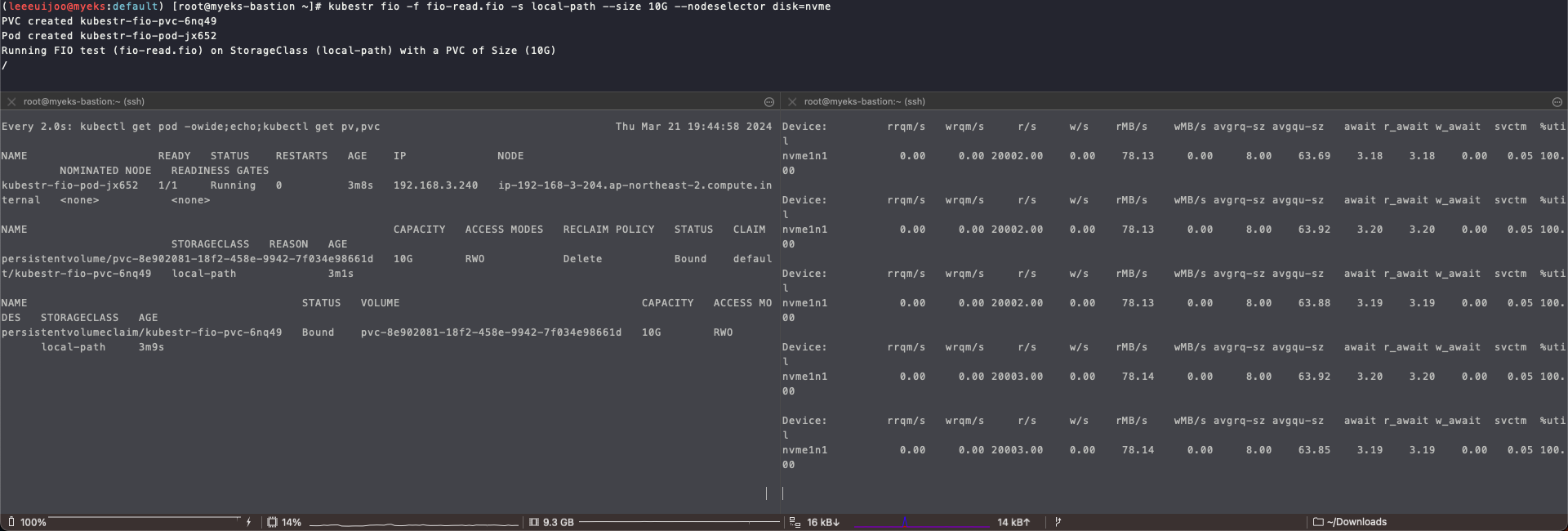

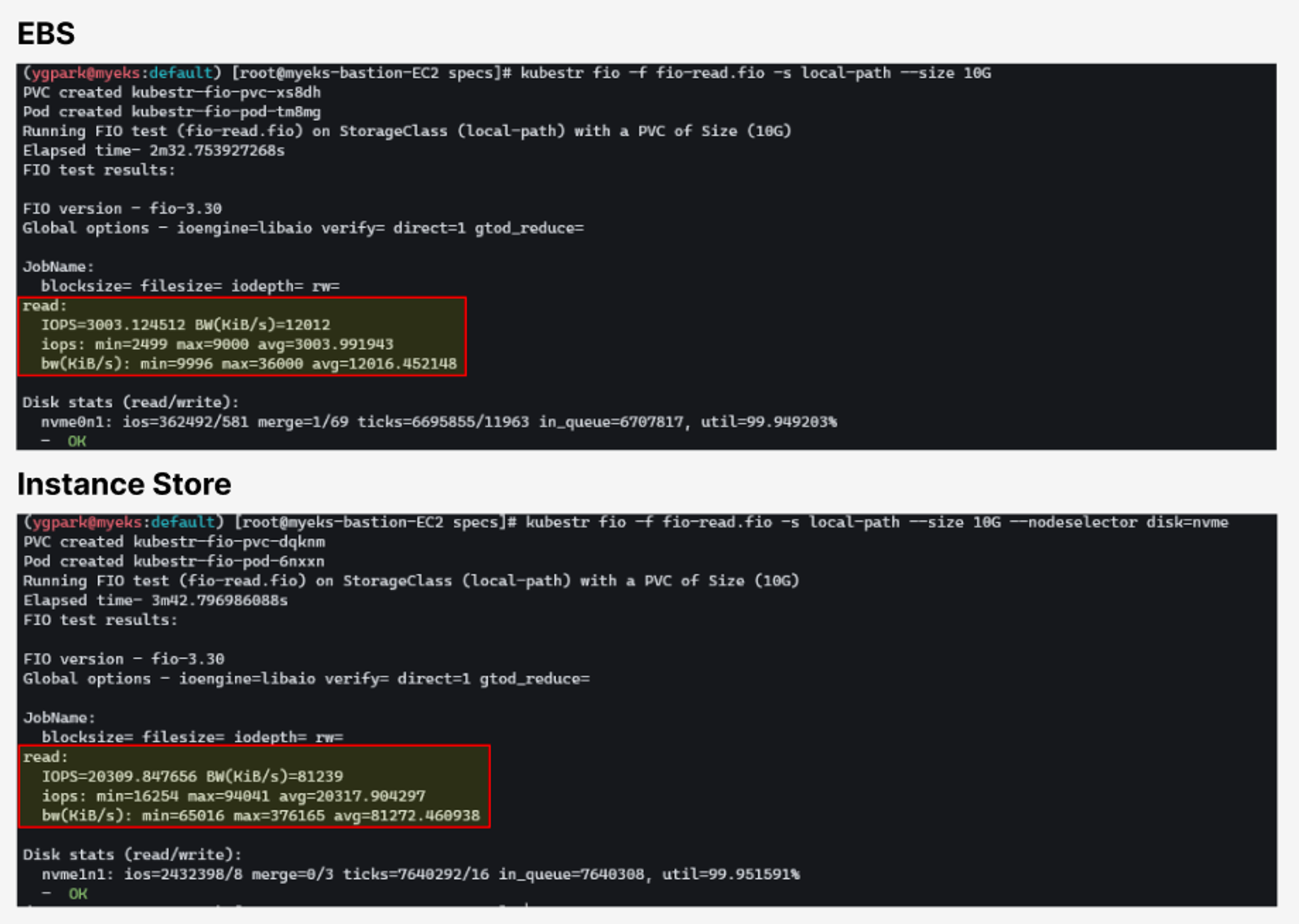

Next, let's deploy local-path-provisioner, a StorageClass that provisions PVs/PVCs backed by a host path.

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/rancher/local-path-provisioner/master/deploy/local-path-storage.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat local-path-storage.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: local-path-storage
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: local-path-provisioner-service-account
  namespace: local-path-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: local-path-provisioner-role
  namespace: local-path-storage
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch", "create", "patch", "update", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: local-path-provisioner-role
rules:
  - apiGroups: [""]
    resources: ["nodes", "persistentvolumeclaims", "configmaps", "pods", "pods/log"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "patch", "update", "delete"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "patch"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: local-path-provisioner-bind
  namespace: local-path-storage
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: local-path-provisioner-role
subjects:
  - kind: ServiceAccount
    name: local-path-provisioner-service-account
    namespace: local-path-storage
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: local-path-provisioner-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: local-path-provisioner-role
subjects:
  - kind: ServiceAccount
    name: local-path-provisioner-service-account
    namespace: local-path-storage
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: local-path-provisioner
  namespace: local-path-storage
spec:
  replicas: 1
  selector:
    matchLabels:
      app: local-path-provisioner
  template:
    metadata:
      labels:
        app: local-path-provisioner
    spec:
      serviceAccountName: local-path-provisioner-service-account
      containers:
        - name: local-path-provisioner
          image: rancher/local-path-provisioner:master-head
          imagePullPolicy: IfNotPresent
          command:
            - local-path-provisioner
            - --debug
            - start
            - --config
            - /etc/config/config.json
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config/
          env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
      volumes:
        - name: config-volume
          configMap:
            name: local-path-config
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local-path
provisioner: rancher.io/local-path
volumeBindingMode: WaitForFirstConsumer
reclaimPolicy: Delete
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: local-path-config
  namespace: local-path-storage
data:
  config.json: |-
    {
      "nodePathMap":[
        {
          "node":"DEFAULT_PATH_FOR_NON_LISTED_NODES",
          "paths":["/opt/local-path-provisioner"]
        }
      ]
    }
  setup: |-
    #!/bin/sh
    set -eu
    mkdir -m 0777 -p "$VOL_DIR"
  teardown: |-
    #!/bin/sh
    set -eu
    rm -rf "$VOL_DIR"
  helperPod.yaml: |-
    apiVersion: v1
    kind: Pod
    metadata:
      name: helper-pod
    spec:
      priorityClassName: system-node-critical
      tolerations:
        - key: node.kubernetes.io/disk-pressure
          operator: Exists
          effect: NoSchedule
      containers:
      - name: helper-pod
        image: busybox
        imagePullPolicy: IfNotPresent

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f local-path-storage.yaml

namespace/local-path-storage created

serviceaccount/local-path-provisioner-service-account created

role.rbac.authorization.k8s.io/local-path-provisioner-role created

clusterrole.rbac.authorization.k8s.io/local-path-provisioner-role created

rolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind created

clusterrolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind created

deployment.apps/local-path-provisioner created

storageclass.storage.k8s.io/local-path created

configmap/local-path-config created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get-all -n local-path-storage

NAME NAMESPACE AGE

configmap/kube-root-ca.crt local-path-storage 5s

configmap/local-path-config local-path-storage 5s

pod/local-path-provisioner-5d854bc5c4-bj55s local-path-storage 5s

serviceaccount/default local-path-storage 5s

serviceaccount/local-path-provisioner-service-account local-path-storage 5s

deployment.apps/local-path-provisioner local-path-storage 5s

replicaset.apps/local-path-provisioner-5d854bc5c4 local-path-storage 5s

rolebinding.rbac.authorization.k8s.io/local-path-provisioner-bind local-path-storage 5s

role.rbac.authorization.k8s.io/local-path-provisioner-role local-path-storage 5s

# Confirm that the StorageClass was created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 125m

local-path rancher.io/local-path Delete WaitForFirstConsumer false 34s

- Now let's create a Pod that uses a PV/PVC.

# Create the PVC

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/localpath1.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat localpath1.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: localpath-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: "local-path"

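# The PVC stays Pending for now: local-path uses WaitForFirstConsumer, so the volume is only provisioned once a Pod uses the claim.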

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f localpath1.yaml

persistentvolumeclaim/localpath-claim created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

localpath-claim Pending local-path 7s
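Because binding waits for a consumer, a script that creates the Pod and then needs the volume should wait until the claim reports Bound. A minimal polling sketch; `wait_for_value` is a hypothetical helper and the 2-second interval is an arbitrary choice. Recent kubectl versions also offer `kubectl wait --for=jsonpath=...` for the same purpose.

```shell
# Hypothetical helper: run a command until it prints the wanted value
# (e.g. a PVC phase). Returns 0 on success, 1 after <tries> attempts.
wait_for_value() {
  local want=$1 tries=$2 i=1 got=
  shift 2
  while [ "$i" -le "$tries" ]; do
    got=$("$@")
    [ "$got" = "$want" ] && return 0
    [ "$i" -lt "$tries" ] && sleep 2   # no sleep after the last attempt
    i=$((i + 1))
  done
  echo "gave up after $tries tries (last value: '$got')" >&2
  return 1
}

# Usage after creating the Pod that mounts the claim:
# wait_for_value Bound 30 kubectl get pvc localpath-claim -o jsonpath='{.status.phase}'
```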

# Create the Pod

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/localpath2.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat localpath2.yaml | yh

apiVersion: v1
kind: Pod
metadata:
  name: app
spec:
  terminationGracePeriodSeconds: 3
  containers:
  - name: app
    image: centos
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo $(date -u) >> /data/out.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: localpath-claim

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f localpath2.yaml

pod/app created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

localpath-claim Bound pvc-47595f15-4808-4084-98e8-067c9d15284e 1Gi RWO local-path 86s

- Verify data persistence

- Check what changes after the Pod is deleted

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl exec -it app -- tail -f /data/out.txt

Thu Mar 21 07:37:41 UTC 2024

Thu Mar 21 07:37:46 UTC 2024

Thu Mar 21 07:37:51 UTC 2024

Thu Mar 21 07:37:56 UTC 2024

Thu Mar 21 07:38:01 UTC 2024

Thu Mar 21 07:38:06 UTC 2024

Thu Mar 21 07:38:11 UTC 2024

Thu Mar 21 07:38:16 UTC 2024

Thu Mar 21 07:38:21 UTC 2024

Thu Mar 21 07:38:26 UTC 2024

Thu Mar 21 07:38:31 UTC 2024

# On each node, check the files stored in the provisioned volume directory

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# for node in $N1 $N2 $N3; do ssh ec2-user@$node tree /opt/local-path-provisioner; done

/opt/local-path-provisioner [error opening dir]

0 directories, 0 files

/opt/local-path-provisioner [error opening dir]

0 directories, 0 files

/opt/local-path-provisioner

└── pvc-47595f15-4808-4084-98e8-067c9d15284e_default_localpath-claim

└── out.txt

1 directory, 1 file
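The volume directory exists only on the third node (N3), where the Pod was scheduled: a local-path volume lives on a single node's disk. Its name follows the `<PV name>_<namespace>_<PVC name>` pattern seen above. A small sketch that builds that path; `local_path_dir` is a hypothetical helper, and its default base path is the one set in the `local-path-config` ConfigMap.

```shell
# Build the host directory local-path-provisioner uses for a volume,
# following the <pv-name>_<namespace>_<pvc-name> pattern in the tree output.
local_path_dir() {
  local pv=$1 ns=$2 pvc=$3 base=${4:-/opt/local-path-provisioner}
  printf '%s/%s_%s_%s\n' "$base" "$pv" "$ns" "$pvc"
}

# Usage (needs cluster access and SSH to the nodes, as set up earlier):
# PV=$(kubectl get pvc localpath-claim -o jsonpath='{.spec.volumeName}')
# dir=$(local_path_dir "$PV" default localpath-claim)
# for node in $N1 $N2 $N3; do ssh ec2-user@$node "test -d $dir" && echo "volume is on $node"; done
```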

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl delete pod app

pod "app" deleted

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-47595f15-4808-4084-98e8-067c9d15284e 1Gi RWO Delete Bound default/localpath-claim local-path 3m56s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/localpath-claim Bound pvc-47595f15-4808-4084-98e8-067c9d15284e 1Gi RWO local-path 4m55s

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# for node in $N1 $N2 $N3; do ssh ec2-user@$node tree /opt/local-path-provisioner; done

/opt/local-path-provisioner [error opening dir]

0 directories, 0 files

/opt/local-path-provisioner [error opening dir]

0 directories, 0 files

/opt/local-path-provisioner

└── pvc-47595f15-4808-4084-98e8-067c9d15284e_default_localpath-claim

└── out.txt

1 directory, 1 file

# Recreate the Pod (kubectl apply -f localpath2.yaml): the entries written before the Pod was deleted are still there.

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl exec -it app -- head /data/out.txt

Thu Mar 21 07:37:06 UTC 2024

Thu Mar 21 07:37:11 UTC 2024

Thu Mar 21 07:37:16 UTC 2024

Thu Mar 21 07:37:21 UTC 2024

Thu Mar 21 07:37:26 UTC 2024

Thu Mar 21 07:37:31 UTC 2024

Thu Mar 21 07:37:36 UTC 2024

Thu Mar 21 07:37:41 UTC 2024

Thu Mar 21 07:37:46 UTC 2024

Thu Mar 21 07:37:51 UTC 2024

# Clean up

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl delete pod app

pod "app" deleted

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-47595f15-4808-4084-98e8-067c9d15284e 1Gi RWO Delete Bound default/localpath-claim local-path 7m49s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/localpath-claim Bound pvc-47595f15-4808-4084-98e8-067c9d15284e 1Gi RWO local-path 8m48s

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl delete pvc localpath-claim

persistentvolumeclaim "localpath-claim" deleted

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-47595f15-4808-4084-98e8-067c9d15284e 1Gi RWO Delete Released default/localpath-claim local-path 7m51s
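Note that the PV shows Released even though its policy is Delete: once the claim is deleted the PV is released first, and the provisioner then removes the PV and its data (the directory is gone in the next check). The sketch below summarizes the outcome per policy; it is an illustrative model of the documented behaviour, not a call into Kubernetes.

```shell
# Illustrative model: what happens to a bound PV after its PVC is deleted.
after_pvc_delete() {
  case $1 in
    Retain) echo "PV stays (Released); data kept; admin must clean up or reuse" ;;
    Delete) echo "PV goes Released, then PV and backing storage are deleted" ;;
    *)      echo "unknown policy: $1" >&2; return 1 ;;
  esac
}

for policy in Retain Delete; do
  printf '%-7s %s\n' "$policy:" "$(after_pvc_delete "$policy")"
done

# To keep the data of an existing dynamically provisioned PV, switch its policy
# before deleting the claim (<pv-name> is a placeholder):
# kubectl patch pv <pv-name> -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'
```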

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# for node in $N1 $N2 $N3; do ssh ec2-user@$node tree /opt/local-path-provisioner; done

/opt/local-path-provisioner [error opening dir]

0 directories, 0 files

/opt/local-path-provisioner [error opening dir]

0 directories, 0 files

/opt/local-path-provisioner

0 directories, 0 files

2. AWS EBS Controller

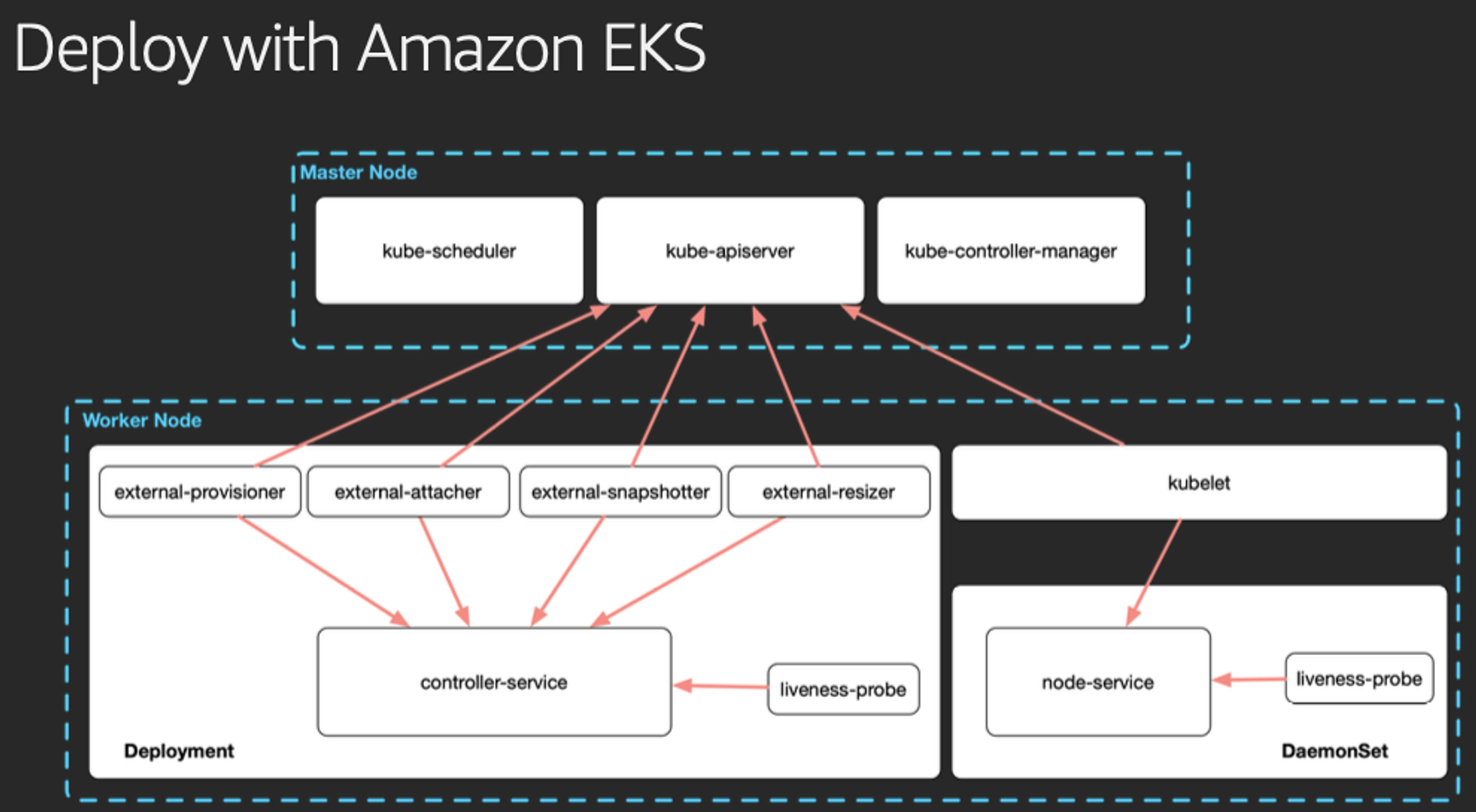

- The EBS CSI driver works in a simple way.

- The csi-controller watches the Kubernetes API server; when an EBS volume is requested, it calls the AWS API to create the volume and attach it to the node.

- Next, kubelet on that node receives the mount request and passes it to csi-node, which mounts the EBS volume on the node and into the Pod.

- The EBS CSI driver is provided separately as an EKS add-on, so let's install it.

# List all aws-ebs-csi-driver versions and which one is the default (True)

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws eks describe-addon-versions \

> --addon-name aws-ebs-csi-driver \

> --kubernetes-version 1.28 \

> --query "addons[].addonVersions[].[addonVersion, compatibilities[].defaultVersion]" \

> --output text

v1.28.0-eksbuild.1

True # default version

v1.27.0-eksbuild.1

False

v1.26.1-eksbuild.1

False

v1.26.0-eksbuild.1

False

v1.25.0-eksbuild.1

False

v1.24.1-eksbuild.1

False

v1.24.0-eksbuild.1

False
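With --output text, the command above prints each version on one line and its default flag on the next. A minimal sketch that pairs those lines and prints only the default version; `default_addon_version` is a hypothetical helper, and it assumes exactly one flag per version (true here because the query is limited to one Kubernetes version).

```shell
# Pair the alternating "<version>" / "<True|False>" lines and print the
# version whose flag is True.
default_addon_version() {
  paste - - | awk '$2 == "True" { print $1 }'
}

# Usage:
# aws eks describe-addon-versions --addon-name aws-ebs-csi-driver --kubernetes-version 1.28 \
#   --query "addons[].addonVersions[].[addonVersion, compatibilities[].defaultVersion]" \
#   --output text | default_addon_version
```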

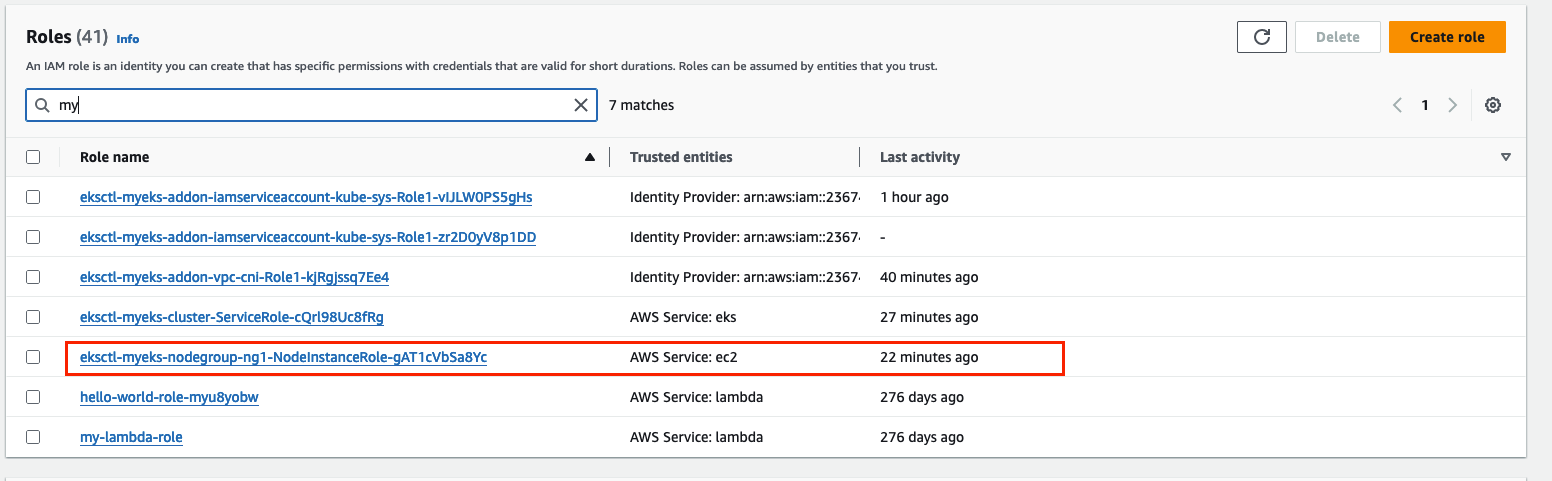

# Configure IRSA using the AWS managed policy AmazonEBSCSIDriverPolicy

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# eksctl create iamserviceaccount \

> --name ebs-csi-controller-sa \

> --namespace kube-system \

> --cluster ${CLUSTER_NAME} \

> --attach-policy-arn arn:aws:iam::aws:policy/service-role/AmazonEBSCSIDriverPolicy \

> --approve \

> --role-only \

> --role-name AmazonEKS_EBS_CSI_DriverRole

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# eksctl get iamserviceaccount --cluster myeks

NAMESPACE NAME ROLE ARN

kube-system aws-load-balancer-controller arn:aws:iam::236747833953:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-zr2D0yV8p1DD

kube-system ebs-csi-controller-sa arn:aws:iam::236747833953:role/AmazonEKS_EBS_CSI_DriverRole
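The add-on command that follows passes arn:aws:iam::${ACCOUNT_ID}:role/AmazonEKS_EBS_CSI_DriverRole, so ACCOUNT_ID must already be set in the shell (the lab environment appears to export it; that is an assumption here). A minimal sketch that builds the ARN and rejects malformed account IDs; `role_arn` is a hypothetical helper, and the commented usage derives the ID with `aws sts get-caller-identity`.

```shell
# Build an IAM role ARN from a 12-digit account ID and a role name.
role_arn() {
  if ! printf '%s' "$1" | grep -Eq '^[0-9]{12}$'; then
    echo "invalid account id: $1" >&2
    return 1
  fi
  printf 'arn:aws:iam::%s:role/%s\n' "$1" "$2"
}

# Usage with live credentials:
# ACCOUNT_ID=$(aws sts get-caller-identity --query Account --output text)
# role_arn "$ACCOUNT_ID" AmazonEKS_EBS_CSI_DriverRole
```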

# Install the add-on

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# eksctl create addon --name aws-ebs-csi-driver --cluster ${CLUSTER_NAME} --service-account-role-arn arn:aws:iam::${ACCOUNT_ID}:role/AmazonEKS_EBS_CSI_DriverRole --force

2024-03-21 17:03:24 [ℹ] Kubernetes version "1.28" in use by cluster "myeks"

2024-03-21 17:03:24 [ℹ] using provided ServiceAccountRoleARN "arn:aws:iam::236747833953:role/AmazonEKS_EBS_CSI_DriverRole"

2024-03-21 17:03:24 [ℹ] creating addon

# Verify that the add-on was added

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# eksctl get addon --cluster ${CLUSTER_NAME}

2024-03-21 17:03:55 [ℹ] Kubernetes version "1.28" in use by cluster "myeks"

2024-03-21 17:03:55 [ℹ] getting all addons

2024-03-21 17:03:57 [ℹ] to see issues for an addon run `eksctl get addon --name <addon-name> --cluster <cluster-name>`

NAME VERSION STATUS ISSUES IAMROLE UPDATE AVAILABLE CONFIGURATION VALUES

aws-ebs-csi-driver v1.28.0-eksbuild.1 CREATING 0 arn:aws:iam::236747833953:role/AmazonEKS_EBS_CSI_DriverRole

coredns v1.10.1-eksbuild.7 ACTIVE 0

kube-proxy v1.28.6-eksbuild.2 ACTIVE 0

vpc-cni v1.17.1-eksbuild.1 ACTIVE 0 arn:aws:iam::236747833953:role/eksctl-myeks-addon-vpc-cni-Role1-kjRgjssq7Ee4 enableNetworkPolicy: "true"

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get deploy,ds -l=app.kubernetes.io/name=aws-ebs-csi-driver -n kube-system

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/ebs-csi-controller 2/2 2 2 30s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/ebs-csi-node 3 3 3 3 3 kubernetes.io/os=linux 30s

daemonset.apps/ebs-csi-node-windows 0 0 0 0 0 kubernetes.io/os=windows 30s

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pod -n kube-system -l 'app in (ebs-csi-controller,ebs-csi-node)'

NAME READY STATUS RESTARTS AGE

ebs-csi-controller-765cf7cf9-8pk4z 6/6 Running 0 31s

ebs-csi-controller-765cf7cf9-9ljpn 6/6 Running 0 31s

ebs-csi-node-45t6q 3/3 Running 0 31s

ebs-csi-node-6zk7p 3/3 Running 0 31s

ebs-csi-node-tdbqz 3/3 Running 0 31s
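All controller (6/6) and node (3/3) Pods report every container ready. To check that automatically, here is a small sketch that reads `kubectl get pod` output (header included) and lists Pods whose READY count is incomplete or whose STATUS is not Running; `not_ready_pods` is a hypothetical helper. `kubectl wait --for=condition=Ready` is a built-in alternative.

```shell
# Read `kubectl get pod` output on stdin (first line is the header) and print
# pods whose READY column is not n/n or whose STATUS is not Running.
not_ready_pods() {
  awk 'NR > 1 {
    split($2, r, "/")
    if (r[1] != r[2] || $3 != "Running") print $1, $2, $3
  }'
}

# Usage:
# kubectl get pod -n kube-system -l 'app in (ebs-csi-controller,ebs-csi-node)' | not_ready_pods
```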

- Create a gp3 StorageClass

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 157m

local-path rancher.io/local-path Delete WaitForFirstConsumer false 32m

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat <<EOT > gp3-sc.yaml
> kind: StorageClass
> apiVersion: storage.k8s.io/v1
> metadata:
>   name: gp3
> allowVolumeExpansion: true
> provisioner: ebs.csi.aws.com
> volumeBindingMode: WaitForFirstConsumer
> parameters:
>   type: gp3
>   #iops: "5000"
>   #throughput: "250"
>   allowAutoIOPSPerGBIncrease: 'true'
>   encrypted: 'true'
>   fsType: xfs # the default is ext4
> EOT

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f gp3-sc.yaml

storageclass.storage.k8s.io/gp3 created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

gp2 (default) kubernetes.io/aws-ebs Delete WaitForFirstConsumer false 158m

gp3 ebs.csi.aws.com Delete WaitForFirstConsumer true 2s

local-path rancher.io/local-path Delete WaitForFirstConsumer false 33m
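With the gp3 class in place, a workload only needs a PVC that names it. A minimal sketch that prints such a claim; the name `ebs-claim` and the 4Gi size are illustrative assumptions. Because of WaitForFirstConsumer the EBS volume is created only after a Pod mounts the claim, and because allowVolumeExpansion is true the request can later be raised, for example with kubectl patch pvc ebs-claim -p '{"spec":{"resources":{"requests":{"storage":"10Gi"}}}}'.

```shell
# Print a PVC that requests storage from the gp3 StorageClass created above.
# Name and size are illustrative assumptions.
gp3_pvc_manifest() {
  local name=${1:-ebs-claim} size=${2:-4Gi}
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: gp3
  resources:
    requests:
      storage: ${size}
EOF
}

# Usage: gp3_pvc_manifest | kubectl apply -f -
```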

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl describe sc gp3 | grep Parameters

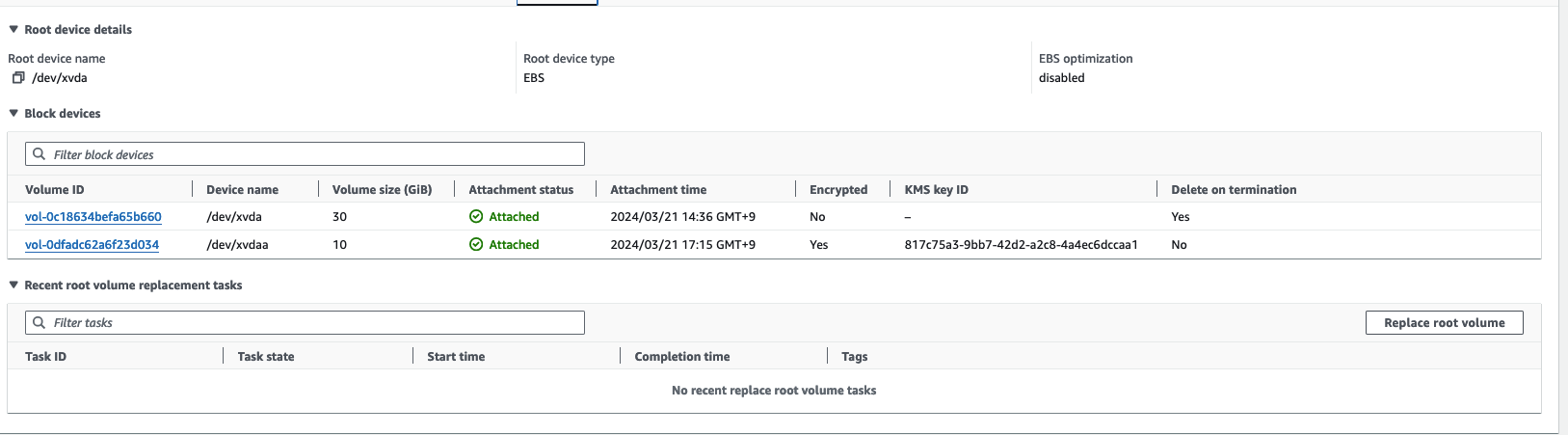

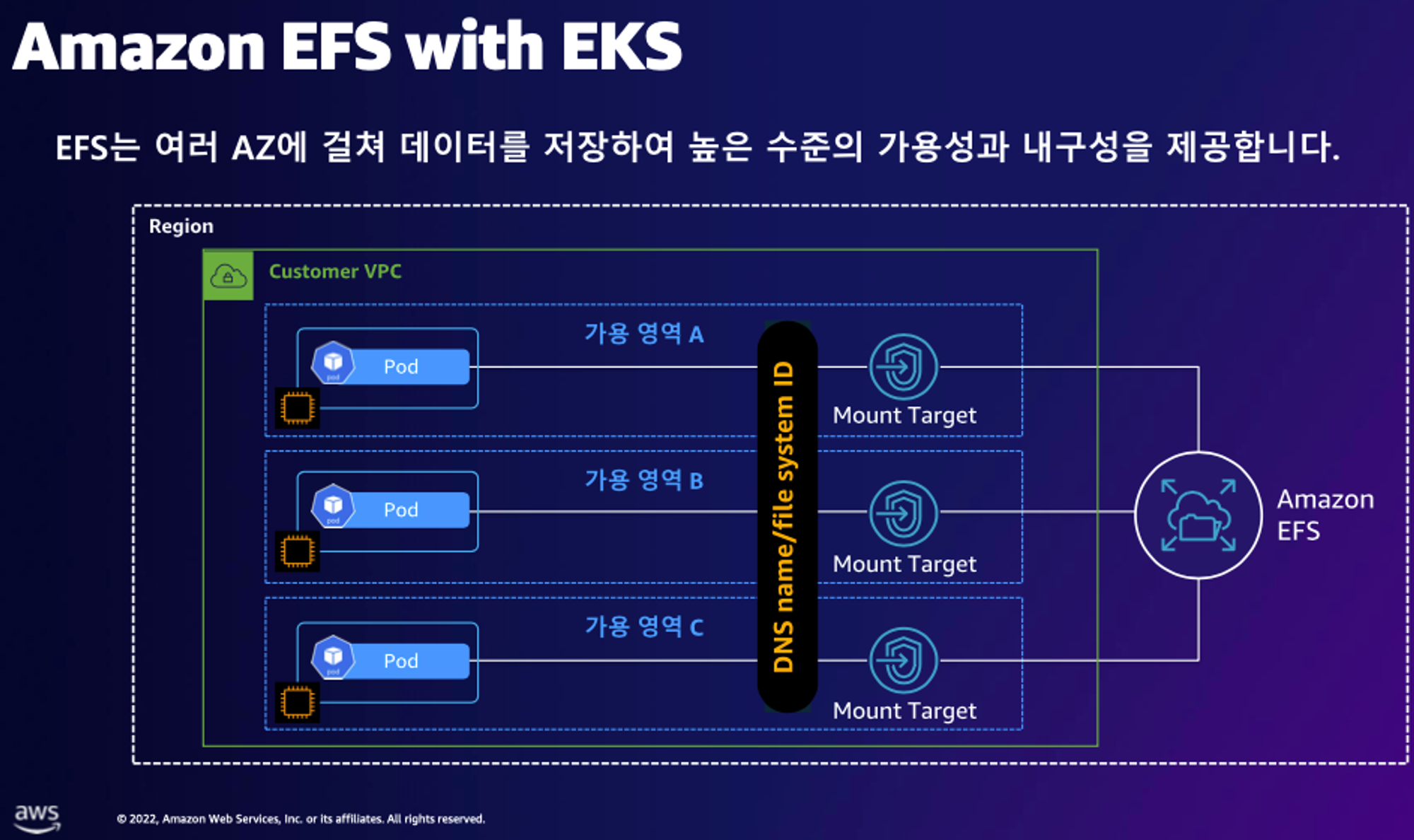

Parameters: allowAutoIOPSPerGBIncrease=true,encrypted=true,fsType=xfs,type=gp3- Worker Node 의 EBS 볼륨 확인

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --output table

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

| DescribeVolumes |

+------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

|| Volumes ||

|+------------------+-----------------------------------+------------+-------+---------------------+-------+-------------------------+---------+-------------+-------------------------+--------------+|

|| AvailabilityZone | CreateTime | Encrypted | Iops | MultiAttachEnabled | Size | SnapshotId | State | Throughput | VolumeId | VolumeType ||

|+------------------+-----------------------------------+------------+-------+---------------------+-------+-------------------------+---------+-------------+-------------------------+--------------+|

|| ap-northeast-2a | 2024-03-21T05:36:54.520000+00:00 | False | 3000 | False | 30 | snap-0143f910a7ab68752 | in-use | 125 | vol-0ad80fc82498caf7a | gp3 ||

|+------------------+-----------------------------------+------------+-------+---------------------+-------+-------------------------+---------+-------------+-------------------------+--------------+|

||| Attachments |||

||+-------------------------------------------+-----------------------------------+-------------------+-----------------------------------+------------------+---------------------------------------+||

||| AttachTime | DeleteOnTermination | Device | InstanceId | State | VolumeId |||

||+-------------------------------------------+-----------------------------------+-------------------+-----------------------------------+------------------+---------------------------------------+||

||| 2024-03-21T05:36:54+00:00 | True | /dev/xvda | i-099bf63e828715bb4 | attached | vol-0ad80fc82498caf7a |||

||+-------------------------------------------+-----------------------------------+-------------------+-----------------------------------+------------------+---------------------------------------+||

||| Tags |||

||+------------------------------------------------------------------------------------------------------------------------------+-------------------------------------------------------------------+||

||| Key | Value |||

||+------------------------------------------------------------------------------------------------------------------------------+-------------------------------------------------------------------+||

||| eks:cluster-name | myeks |||

||| Name | myeks-ng1-Node |||

||| eks:nodegroup-name | ng1 |||

||| alpha.eksctl.io/nodegroup-type | managed |||

||| alpha.eksctl.io/nodegroup-name | ng1 |||

||+------------------------------------------------------------------------------------------------------------------------------+-------------------------------------------------------------------+||

|| Volumes ||

|+------------------+-----------------------------------+------------+-------+---------------------+-------+-------------------------+---------+-------------+-------------------------+--------------+|

|| AvailabilityZone | CreateTime | Encrypted | Iops | MultiAttachEnabled | Size | SnapshotId | State | Throughput | VolumeId | VolumeType ||

|+------------------+-----------------------------------+------------+-------+---------------------+-------+-------------------------+---------+-------------+-------------------------+--------------+|

|| ap-northeast-2b | 2024-03-21T05:36:54.510000+00:00 | False | 3000 | False | 30 | snap-0143f910a7ab68752 | in-use | 125 | vol-04d0478c63af9f20f | gp3 ||

|+------------------+-----------------------------------+------------+-------+---------------------+-------+-------------------------+---------+-------------+-------------------------+--------------+|

||| Attachments |||

||+-------------------------------------------+-----------------------------------+-------------------+-----------------------------------+------------------+---------------------------------------+||

||| AttachTime | DeleteOnTermination | Device | InstanceId | State | VolumeId |||

||+-------------------------------------------+-----------------------------------+-------------------+-----------------------------------+------------------+---------------------------------------+||

||| 2024-03-21T05:36:54+00:00 | True | /dev/xvda | i-07d8c1dd603a90f38 | attached | vol-04d0478c63af9f20f |||

||+-------------------------------------------+-----------------------------------+-------------------+-----------------------------------+------------------+---------------------------------------+||

||| Tags |||

||+------------------------------------------------------------------------------------------------------------------------------+-------------------------------------------------------------------+||

||| Key | Value |||

||+------------------------------------------------------------------------------------------------------------------------------+-------------------------------------------------------------------+||

||| Name | myeks-ng1-Node |||

||| eks:nodegroup-name | ng1 |||

||| alpha.eksctl.io/nodegroup-type | managed |||

||| eks:cluster-name | myeks |||

||| alpha.eksctl.io/nodegroup-name | ng1 |||

||+------------------------------------------------------------------------------------------------------------------------------+-------------------------------------------------------------------+||

|| Volumes ||

|+------------------+-----------------------------------+------------+-------+---------------------+-------+-------------------------+---------+-------------+-------------------------+--------------+|

|| AvailabilityZone | CreateTime | Encrypted | Iops | MultiAttachEnabled | Size | SnapshotId | State | Throughput | VolumeId | VolumeType ||

|+------------------+-----------------------------------+------------+-------+---------------------+-------+-------------------------+---------+-------------+-------------------------+--------------+|

|| ap-northeast-2c | 2024-03-21T05:36:54.597000+00:00 | False | 3000 | False | 30 | snap-0143f910a7ab68752 | in-use | 125 | vol-0c18634befa65b660 | gp3 ||

|+------------------+-----------------------------------+------------+-------+---------------------+-------+-------------------------+---------+-------------+-------------------------+--------------+|

||| Attachments |||

||+-------------------------------------------+-----------------------------------+-------------------+-----------------------------------+------------------+---------------------------------------+||

||| AttachTime | DeleteOnTermination | Device | InstanceId | State | VolumeId |||

||+-------------------------------------------+-----------------------------------+-------------------+-----------------------------------+------------------+---------------------------------------+||

||| 2024-03-21T05:36:54+00:00 | True | /dev/xvda | i-0be872382bf8d6c78 | attached | vol-0c18634befa65b660 |||

||+-------------------------------------------+-----------------------------------+-------------------+-----------------------------------+------------------+---------------------------------------+||

||| Tags |||

||+------------------------------------------------------------------------------------------------------------------------------+-------------------------------------------------------------------+||

||| Key | Value |||

||+------------------------------------------------------------------------------------------------------------------------------+-------------------------------------------------------------------+||

||| alpha.eksctl.io/nodegroup-name | ng1 |||

||| eks:cluster-name | myeks |||

||| eks:nodegroup-name | ng1 |||

||| Name | myeks-ng1-Node |||

||| alpha.eksctl.io/nodegroup-type | managed |||

||+------------------------------------------------------------------------------------------------------------------------------+-------------------------------------------------------------------+||

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[*].Attachments" | jq

[

[

{

"AttachTime": "2024-03-21T05:36:54+00:00",

"Device": "/dev/xvda",

"InstanceId": "i-099bf63e828715bb4",

"State": "attached",

"VolumeId": "vol-0ad80fc82498caf7a",

"DeleteOnTermination": true

}

],

[

{

"AttachTime": "2024-03-21T05:36:54+00:00",

"Device": "/dev/xvda",

"InstanceId": "i-07d8c1dd603a90f38",

"State": "attached",

"VolumeId": "vol-04d0478c63af9f20f",

"DeleteOnTermination": true

}

],

[

{

"AttachTime": "2024-03-21T05:36:54+00:00",

"Device": "/dev/xvda",

"InstanceId": "i-0be872382bf8d6c78",

"State": "attached",

"VolumeId": "vol-0c18634befa65b660",

"DeleteOnTermination": true

}

]

]

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[*].{ID:VolumeId,Tag:Tags}" | jq

[

{

"ID": "vol-0ad80fc82498caf7a",

"Tag": [

{

"Key": "eks:cluster-name",

"Value": "myeks"

},

{

"Key": "Name",

"Value": "myeks-ng1-Node"

},

{

"Key": "eks:nodegroup-name",

"Value": "ng1"

},

{

"Key": "alpha.eksctl.io/nodegroup-type",

"Value": "managed"

},

{

"Key": "alpha.eksctl.io/nodegroup-name",

"Value": "ng1"

}

]

},

{

"ID": "vol-04d0478c63af9f20f",

"Tag": [

{

"Key": "Name",

"Value": "myeks-ng1-Node"

},

{

"Key": "eks:nodegroup-name",

"Value": "ng1"

},

{

"Key": "alpha.eksctl.io/nodegroup-type",

"Value": "managed"

},

{

"Key": "eks:cluster-name",

"Value": "myeks"

},

{

"Key": "alpha.eksctl.io/nodegroup-name",

"Value": "ng1"

}

]

},

{

"ID": "vol-0c18634befa65b660",

"Tag": [

{

"Key": "alpha.eksctl.io/nodegroup-name",

"Value": "ng1"

},

{

"Key": "eks:cluster-name",

"Value": "myeks"

},

{

"Key": "eks:nodegroup-name",

"Value": "ng1"

},

{

"Key": "Name",

"Value": "myeks-ng1-Node"

},

{

"Key": "alpha.eksctl.io/nodegroup-type",

"Value": "managed"

}

]

}

]

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[].[VolumeId, VolumeType, Attachments[].[InstanceId, State][]][]" | jq

[

"vol-0ad80fc82498caf7a",

"gp3",

[

"i-099bf63e828715bb4",

"attached"

],

"vol-04d0478c63af9f20f",

"gp3",

[

"i-07d8c1dd603a90f38",

"attached"

],

"vol-0c18634befa65b660",

"gp3",

[

"i-0be872382bf8d6c78",

"attached"

]

]

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 describe-volumes --filters Name=tag:Name,Values=$CLUSTER_NAME-ng1-Node --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

[

{

"VolumeId": "vol-0ad80fc82498caf7a",

"VolumeType": "gp3",

"InstanceId": "i-099bf63e828715bb4",

"State": "attached"

},

{

"VolumeId": "vol-04d0478c63af9f20f",

"VolumeType": "gp3",

"InstanceId": "i-07d8c1dd603a90f38",

"State": "attached"

},

{

"VolumeId": "vol-0c18634befa65b660",

"VolumeType": "gp3",

"InstanceId": "i-0be872382bf8d6c78",

"State": "attached"

}

]

- Check the EBS volumes added to Pods on the worker nodes

- No PersistentVolumeClaim has been created yet, so the results are empty.

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --output table

-----------------

|DescribeVolumes|

+---------------+

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[*].{ID:VolumeId,Tag:Tags}" | jq

[]

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" | jq

[]
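The queries above pipe JSON through `jq`. For a quick health check in plain bash, a list-style projection with `--output text` keeps the columns in the order you write them. With a hash projection such as `{VolumeId: ..., InstanceId: ...}`, the monitoring output later in this transcript comes out ordered by key name (InstanceId, State, VolumeId, VolumeType). The hypothetical helper below is a sketch that assumes exactly three tab-separated columns (volume ID, instance ID, attachment state) and flags any volume that is not attached:

```shell
#!/usr/bin/env bash
# report_attachments (hypothetical helper): reads "VolumeId<TAB>InstanceId<TAB>State"
# rows, as printed by the --query in the example with --output text, and returns
# non-zero if any volume is not in the "attached" state.
report_attachments() {
  local vol inst state bad=0
  while IFS=$'\t' read -r vol inst state; do
    [[ -z "$vol" ]] && continue          # skip blank lines
    if [[ "$state" == "attached" ]]; then
      printf '%s -> %s\n' "$vol" "$inst"
    else
      # AWS CLI text output prints null fields as "None"
      printf '%s -> not attached (%s)\n' "$vol" "${state:-None}"
      bad=1
    fi
  done
  return "$bad"
}

# Example (requires AWS credentials):
# aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true \
#   --query "Volumes[].[VolumeId, Attachments[0].InstanceId, Attachments[0].State]" \
#   --output text | report_attachments
```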

# Monitor the EBS volumes added to Pods on the worker nodes (nothing shows up yet)

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# while true; do aws ec2 describe-volumes --filters Name=tag:ebs.csi.aws.com/cluster,Values=true --query "Volumes[].{VolumeId: VolumeId, VolumeType: VolumeType, InstanceId: Attachments[0].InstanceId, State: Attachments[0].State}" --output text; date; sleep 1; done

Thu Mar 21 17:14:05 KST 2024

Thu Mar 21 17:14:07 KST 2024

Thu Mar 21 17:14:09 KST 2024

Thu Mar 21 17:14:11 KST 2024

Thu Mar 21 17:14:13 KST 2024

Thu Mar 21 17:14:15 KST 2024

- Create the PVC and Pod

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat <<EOT > awsebs-pvc.yaml

> apiVersion: v1

> kind: PersistentVolumeClaim

> metadata:

> name: ebs-claim

> spec:

> accessModes:

> - ReadWriteOnce

> resources:

> requests:

> storage: 4Gi

> storageClassName: gp3

> EOT

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f awsebs-pvc.yaml

persistentvolumeclaim/ebs-claim created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pvc,pv

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/ebs-claim Pending gp3 4s
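The PVC stays `Pending` until the Pod that uses it is created. The PV appears only after the Pod is applied in the next step. This behaviour matches a StorageClass with `volumeBindingMode: WaitForFirstConsumer`, which delays provisioning until a Pod is scheduled so that the EBS volume is created in that Pod's AZ. The lab's `gp3` StorageClass is not shown in this section. The generator below is therefore a hypothetical bash sketch of what such a class could look like, not the lab's actual definition:

```shell
#!/usr/bin/env bash
# make_gp3_sc (hypothetical): print a gp3 StorageClass manifest for the EBS CSI driver.
# $1 = class name, $2 = filesystem type (defaults to ext4)
make_gp3_sc() {
  local name="${1:?class name required}" fstype="${2:-ext4}"
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${name}
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
parameters:
  type: gp3
  csi.storage.k8s.io/fstype: ${fstype}
EOF
}

# Usage: pick a new name (e.g. gp3-test), because fields such as provisioner and
# parameters cannot be changed on an existing StorageClass.
# make_gp3_sc gp3-test | kubectl apply -f -
```

`allowVolumeExpansion: true` is included because the PVC resize performed later only works when the StorageClass allows expansion.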

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat <<EOT > awsebs-pod.yaml

> apiVersion: v1

> kind: Pod

> metadata:

> name: app

> spec:

> terminationGracePeriodSeconds: 3

> containers:

> - name: app

> image: centos

> command: ["/bin/sh"]

> args: ["-c", "while true; do echo \$(date -u) >> /data/out.txt; sleep 5; done"]

> volumeMounts:

> - name: persistent-storage

> mountPath: /data

> volumes:

> - name: persistent-storage

> persistentVolumeClaim:

> claimName: ebs-claim

> EOT

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f awsebs-pod.yaml

pod/app created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pvc,pv,pod

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/ebs-claim Bound pvc-88219b6e-b07a-4e4c-9c44-3beb085ac338 4Gi RWO gp3 43s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-88219b6e-b07a-4e4c-9c44-3beb085ac338 4Gi RWO Delete Bound default/ebs-claim gp3 3s

NAME READY STATUS RESTARTS AGE

pod/app 0/1 ContainerCreating 0 7s

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get VolumeAttachment

NAME ATTACHER PV NODE ATTACHED AGE

csi-601ea645c59ddf481c1194dc41417b87955f266107106b73a87c1755a59c4d16 ebs.csi.aws.com pvc-88219b6e-b07a-4e4c-9c44-3beb085ac338 ip-192-168-3-184.ap-northeast-2.compute.internal true 23s

# Monitoring (output of the loop above)

Thu Mar 21 17:15:10 KST 2024

Thu Mar 21 17:15:12 KST 2024

Thu Mar 21 17:15:14 KST 2024

Thu Mar 21 17:15:17 KST 2024

Thu Mar 21 17:15:19 KST 2024

Thu Mar 21 17:15:21 KST 2024

None None vol-0dfadc62a6f23d034 gp3

Thu Mar 21 17:15:24 KST 2024

None None vol-0dfadc62a6f23d034 gp3

Thu Mar 21 17:15:26 KST 2024

i-0be872382bf8d6c78 attaching vol-0dfadc62a6f23d034 gp3 ## The volume starts attaching.

Thu Mar 21 17:15:28 KST 2024

i-0be872382bf8d6c78 attached vol-0dfadc62a6f23d034 gp3

Thu Mar 21 17:15:30 KST 2024

i-0be872382bf8d6c78 attached vol-0dfadc62a6f23d034 gp3

Thu Mar 21 17:15:32 KST 2024

i-0be872382bf8d6c78 attached vol-0dfadc62a6f23d034 gp3

Thu Mar 21 17:15:35 KST 2024

i-0be872382bf8d6c78 attached vol-0dfadc62a6f23d034 gp3

Thu Mar 21 17:15:37 KST 2024

i-0be872382bf8d6c78 attached vol-0dfadc62a6f23d034 gp3
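The `while true` loop above never ends on its own. The hypothetical `wait_for_state` helper below sketches a bounded variant in bash. It re-runs any command until the output contains the wanted string or a retry limit is reached. The command is passed in as arguments, which also lets the helper be exercised without AWS access:

```shell
#!/usr/bin/env bash
# wait_for_state (hypothetical): $1 = string to wait for, $2 = max attempts,
# $3 = seconds between attempts, remaining args = command to run each time.
# Prints the first matching output and returns 0, or returns 1 after max attempts.
wait_for_state() {
  local want="$1" max_tries="$2" interval="$3" i out
  shift 3
  for (( i = 1; i <= max_tries; i++ )); do
    out="$("$@" 2>/dev/null)"
    if [[ "$out" == *"$want"* ]]; then
      printf '%s\n' "$out"
      return 0
    fi
    (( i < max_tries )) && sleep "$interval"   # no sleep after the last attempt
  done
  return 1
}

# Example (requires AWS credentials): wait up to ~2 minutes for the CSI volume to attach.
# wait_for_state attached 60 2 aws ec2 describe-volumes \
#   --filters Name=tag:ebs.csi.aws.com/cluster,Values=true \
#   --query "Volumes[].Attachments[0].State" --output text
```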

# Check the details of the newly added EBS volume

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 describe-volumes --volume-ids $(kubectl get pv -o jsonpath="{.items[0].spec.csi.volumeHandle}") | jq

{

"Volumes": [

{

"Attachments": [

{

"AttachTime": "2024-03-21T08:15:27+00:00",

"Device": "/dev/xvdaa",

"InstanceId": "i-0be872382bf8d6c78",

"State": "attached",

"VolumeId": "vol-0dfadc62a6f23d034",

"DeleteOnTermination": false

}

],

"AvailabilityZone": "ap-northeast-2c",

"CreateTime": "2024-03-21T08:15:22.720000+00:00",

"Encrypted": true,

"KmsKeyId": "arn:aws:kms:ap-northeast-2:236747833953:key/817c75a3-9bb7-42d2-a2c8-4a4ec6dccaa1",

"Size": 4,

"SnapshotId": "",

"State": "in-use",

"VolumeId": "vol-0dfadc62a6f23d034",

"Iops": 3000,

"Tags": [

{

"Key": "KubernetesCluster",

"Value": "myeks"

},

{

"Key": "CSIVolumeName",

"Value": "pvc-88219b6e-b07a-4e4c-9c44-3beb085ac338"

},

{

"Key": "ebs.csi.aws.com/cluster",

"Value": "true"

},

{

"Key": "kubernetes.io/created-for/pvc/name",

"Value": "ebs-claim"

},

{

"Key": "Name",

"Value": "myeks-dynamic-pvc-88219b6e-b07a-4e4c-9c44-3beb085ac338"

},

{

"Key": "kubernetes.io/cluster/myeks",

"Value": "owned"

},

{

"Key": "kubernetes.io/created-for/pvc/namespace",

"Value": "default"

},

{

"Key": "kubernetes.io/created-for/pv/name",

"Value": "pvc-88219b6e-b07a-4e4c-9c44-3beb085ac338"

}

],

"VolumeType": "gp3",

"MultiAttachEnabled": false,

"Throughput": 125

}

]

}

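- What is nodeAffinity in a PV?

- It defines constraints that restrict which nodes can access the volume.

- A Pod that uses the PV is scheduled only onto nodes selected by that node affinity.

- In other words, in the example below, only nodes provisioned in ap-northeast-2c can access the volume.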
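The filtering these bullets describe can be illustrated with a small, hypothetical bash function named `eligible_nodes`. Given `node zone` pairs, it keeps only the nodes whose `topology.ebs.csi.aws.com/zone` value matches the single zone in the PV's nodeAffinity. This is a deliberate simplification: the real scheduler also weighs every other constraint, and this sketch ignores them.

```shell
#!/usr/bin/env bash
# eligible_nodes (hypothetical): read "NODE ZONE" pairs on stdin and print the
# nodes whose zone equals $1, mimicking a nodeAffinity "In" match with one value.
eligible_nodes() {
  local zone="${1:?zone required}"
  awk -v z="$zone" 'NF >= 2 && $2 == z { print $1 }'
}

# Example: -L appends the label as a column after NAME STATUS ROLES AGE VERSION,
# so the zone is the 6th field (empty if a node lacks the label).
# kubectl get nodes -L topology.ebs.csi.aws.com/zone --no-headers \
#   | awk '{ print $1, $6 }' | eligible_nodes ap-northeast-2c
```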

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pv -o yaml | yh

apiVersion: v1

items:

- apiVersion: v1

kind: PersistentVolume

metadata:

annotations:

pv.kubernetes.io/provisioned-by: ebs.csi.aws.com

volume.kubernetes.io/provisioner-deletion-secret-name: ""

volume.kubernetes.io/provisioner-deletion-secret-namespace: ""

creationTimestamp: "2024-03-21T08:15:26Z"

finalizers:

- kubernetes.io/pv-protection

- external-attacher/ebs-csi-aws-com

name: pvc-88219b6e-b07a-4e4c-9c44-3beb085ac338

resourceVersion: "40040"

uid: b83c3fdc-2750-4435-a6ec-364ebfdf2f97

spec:

... ## (fields omitted) the relevant part is below

nodeAffinity:

required:

nodeSelectorTerms:

- matchExpressions:

- key: topology.ebs.csi.aws.com/zone

operator: In

values:

- ap-northeast-2c

persistentVolumeReclaimPolicy: Delete

storageClassName: gp3

volumeMode: Filesystem

status:

phase: Bound

kind: List

metadata:

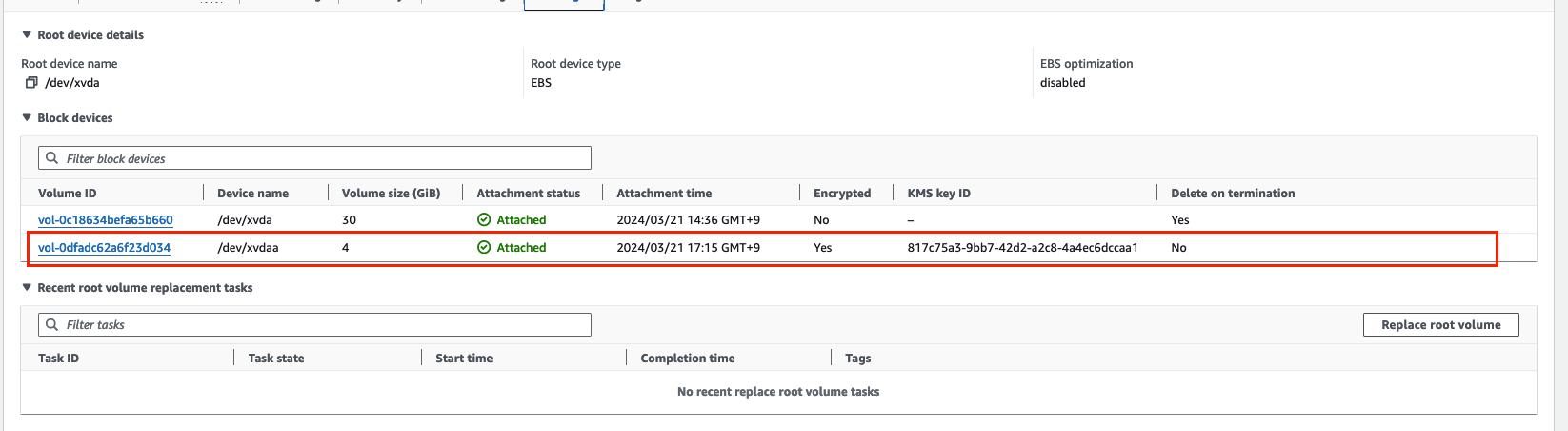

resourceVersion: ""- 아래는 ap-northeast-2c 의 Node 의 EBS 탑재 상태입니다.

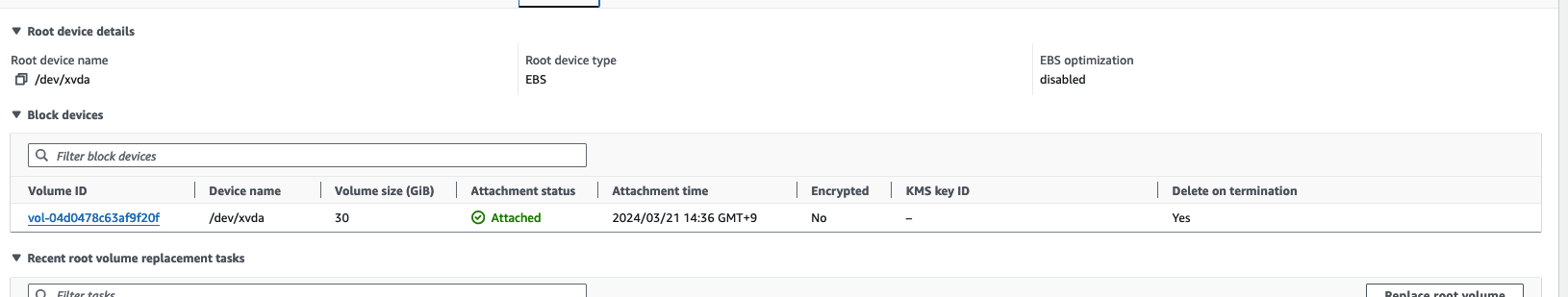

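- Let's check from a node in a different AZ.

- Below is the EBS volume information for the node in AZ b. You can see that placement follows the nodeAffinity.

- Next, let's grow the EBS volume we just attached from 4 GiB to 10 GiB.

- Shrinking the size is not possible. A PVC can only be expanded.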

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pvc ebs-claim -o jsonpath={.status.capacity.storage} ; echo

4Gi

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl patch pvc ebs-claim -p '{"spec":{"resources":{"requests":{"storage":"10Gi"}}}}'

persistentvolumeclaim/ebs-claim patched

- Resizing takes a while to complete.
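Because a PVC can only grow, a small guard before patching avoids a rejected request. The hypothetical bash helper below assumes both sizes are whole numbers with the `Gi` suffix. It prints the same merge patch used above and refuses to shrink:

```shell
#!/usr/bin/env bash
# pvc_resize_patch (hypothetical): $1 = current size, $2 = requested size, both "<n>Gi".
# Prints a merge patch for spec.resources.requests.storage, or fails if not growing.
pvc_resize_patch() {
  local cur="${1%Gi}" new="${2%Gi}"
  if [[ "$1" != *Gi || "$2" != *Gi || ! "$cur" =~ ^[1-9][0-9]*$ || ! "$new" =~ ^[1-9][0-9]*$ ]]; then
    echo "sizes must be whole numbers with the Gi suffix" >&2
    return 2
  fi
  if (( new <= cur )); then
    echo "refusing: $2 is not larger than $1 (PVCs cannot shrink)" >&2
    return 1
  fi
  printf '{"spec":{"resources":{"requests":{"storage":"%sGi"}}}}\n' "$new"
}

# Usage:
# cur=$(kubectl get pvc ebs-claim -o jsonpath='{.status.capacity.storage}')
# patch=$(pvc_resize_patch "$cur" 10Gi) && kubectl patch pvc ebs-claim -p "$patch"
```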

- Cleanup

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl delete pod app && kubectl delete pvc ebs-claim

pod "app" deleted

persistentvolumeclaim "ebs-claim" deleted

3. AWS Volume Snapshots Controller

- Let's install the AWS volume snapshot controller and restore a Pod's data from a snapshot.

- Install the controller

# Install the snapshot CRDs

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshots.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotclasses.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/client/config/crd/snapshot.storage.k8s.io_volumesnapshotcontents.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f snapshot.storage.k8s.io_volumesnapshots.yaml,snapshot.storage.k8s.io_volumesnapshotclasses.yaml,snapshot.storage.k8s.io_volumesnapshotcontents.yaml

customresourcedefinition.apiextensions.k8s.io/volumesnapshots.snapshot.storage.k8s.io created

customresourcedefinition.apiextensions.k8s.io/volumesnapshotclasses.snapshot.storage.k8s.io created

customresourcedefinition.apiextensions.k8s.io/volumesnapshotcontents.snapshot.storage.k8s.io created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get crd | grep snapshot

volumesnapshotclasses.snapshot.storage.k8s.io 2024-03-21T08:37:39Z

volumesnapshotcontents.snapshot.storage.k8s.io 2024-03-21T08:37:39Z

volumesnapshots.snapshot.storage.k8s.io 2024-03-21T08:37:39Z

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl api-resources | grep snapshot

volumesnapshotclasses vsclass,vsclasses snapshot.storage.k8s.io/v1 false VolumeSnapshotClass

volumesnapshotcontents vsc,vscs snapshot.storage.k8s.io/v1 false VolumeSnapshotContent

volumesnapshots vs snapshot.storage.k8s.io/v1 true VolumeSnapshot
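Before you install the controller, it can help to fail fast if any of the three CRDs is missing. The hypothetical bash check below reads the output of `kubectl get crd -o name` and reports which snapshot CRDs are absent:

```shell
#!/usr/bin/env bash
# check_snapshot_crds (hypothetical): reads `kubectl get crd -o name` output on stdin
# and returns non-zero, listing what is missing, unless all three snapshot CRDs exist.
check_snapshot_crds() {
  local have crd missing=0
  have="$(cat)"
  for crd in volumesnapshots volumesnapshotclasses volumesnapshotcontents; do
    # -x: whole-line match, -F: treat the dots in the name literally
    if ! grep -qxF "customresourcedefinition.apiextensions.k8s.io/${crd}.snapshot.storage.k8s.io" <<<"$have"; then
      echo "missing: ${crd}.snapshot.storage.k8s.io" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: kubectl get crd -o name | check_snapshot_crds
```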

# Install the snapshot controller

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/rbac-snapshot-controller.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/master/deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f rbac-snapshot-controller.yaml,setup-snapshot-controller.yaml

serviceaccount/snapshot-controller created

clusterrole.rbac.authorization.k8s.io/snapshot-controller-runner created

clusterrolebinding.rbac.authorization.k8s.io/snapshot-controller-role created

role.rbac.authorization.k8s.io/snapshot-controller-leaderelection created

rolebinding.rbac.authorization.k8s.io/snapshot-controller-leaderelection created

deployment.apps/snapshot-controller created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get deploy -n kube-system snapshot-controller

NAME READY UP-TO-DATE AVAILABLE AGE

snapshot-controller 2/2 2 0 11s

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pod -n kube-system | grep snapshot

snapshot-controller-749cb47b55-jsrpp 1/1 Running 0 55s

snapshot-controller-749cb47b55-zkhd6 1/1 Running 0 55s

# Install the VolumeSnapshotClass

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-ebs-csi-driver/master/examples/kubernetes/snapshot/manifests/classes/snapshotclass.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat snapshotclass.yaml

apiVersion: snapshot.storage.k8s.io/v1

kind: VolumeSnapshotClass

metadata:

name: csi-aws-vsc

driver: ebs.csi.aws.com

deletionPolicy: Delete
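With `deletionPolicy: Delete`, deleting a VolumeSnapshot also deletes its VolumeSnapshotContent and the underlying EBS snapshot. With `Retain`, both are kept. A hypothetical bash generator that takes the policy as a parameter and rejects any other value:

```shell
#!/usr/bin/env bash
# make_vsclass (hypothetical): $1 = class name, $2 = Delete | Retain (default Delete)
make_vsclass() {
  local name="${1:?class name required}" policy="${2:-Delete}"
  case "$policy" in
    Delete|Retain) ;;
    *) echo "deletionPolicy must be Delete or Retain" >&2; return 1 ;;
  esac
  cat <<EOF
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: ${name}
driver: ebs.csi.aws.com
deletionPolicy: ${policy}
EOF
}

# Usage: make_vsclass csi-aws-vsc-retain Retain | kubectl apply -f -
```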

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f snapshotclass.yaml

volumesnapshotclass.snapshot.storage.k8s.io/csi-aws-vsc created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get vsclass

NAME DRIVER DELETIONPOLICY AGE

csi-aws-vsc ebs.csi.aws.com Delete 8s

- Create the PVC and Pod

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat awsebs-pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: ebs-claim

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 4Gi

storageClassName: gp3

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f awsebs-pvc.yaml

persistentvolumeclaim/ebs-claim created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f awsebs-pod.yaml

pod/app created

# Check the file contents

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl exec app -- tail -f /data/out.txt

Thu Mar 21 08:43:44 UTC 2024

Thu Mar 21 08:43:49 UTC 2024

Thu Mar 21 08:43:54 UTC 2024

Thu Mar 21 08:43:59 UTC 2024

# Create a VolumeSnapshot


(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ebs-volume-snapshot.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat ebs-volume-snapshot.yaml | yh

apiVersion: snapshot.storage.k8s.io/v1

kind: VolumeSnapshot

metadata:

name: ebs-volume-snapshot

spec:

volumeSnapshotClassName: csi-aws-vsc

source:

persistentVolumeClaimName: ebs-claim

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f ebs-volume-snapshot.yaml

volumesnapshot.snapshot.storage.k8s.io/ebs-volume-snapshot created

# Check the snapshot

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

ebs-volume-snapshot true ebs-claim 4Gi csi-aws-vsc snapcontent-e2dc48b5-db52-4d9f-972b-7dd2bf42f814 19s 20s

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get volumesnapshot ebs-volume-snapshot -o jsonpath={.status.boundVolumeSnapshotContentName} ; echo

snapcontent-e2dc48b5-db52-4d9f-972b-7dd2bf42f814

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl describe volumesnapshot.snapshot.storage.k8s.io ebs-volume-snapshot

Name: ebs-volume-snapshot

Namespace: default

Labels: <none>

Annotations: <none>

API Version: snapshot.storage.k8s.io/v1

Kind: VolumeSnapshot

Metadata:

Creation Timestamp: 2024-03-21T08:44:38Z

Finalizers:

snapshot.storage.kubernetes.io/volumesnapshot-as-source-protection

snapshot.storage.kubernetes.io/volumesnapshot-bound-protection

Generation: 1

Resource Version: 49020

UID: e2dc48b5-db52-4d9f-972b-7dd2bf42f814

Spec:

Source:

Persistent Volume Claim Name: ebs-claim

Volume Snapshot Class Name: csi-aws-vsc

Status:

Bound Volume Snapshot Content Name: snapcontent-e2dc48b5-db52-4d9f-972b-7dd2bf42f814

Creation Time: 2024-03-21T08:44:39Z

Ready To Use: true

Restore Size: 4Gi

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal CreatingSnapshot 23s snapshot-controller Waiting for a snapshot default/ebs-volume-snapshot to be created by the CSI driver.

Normal SnapshotCreated 22s snapshot-controller Snapshot default/ebs-volume-snapshot was successfully created by the CSI driver.

Normal SnapshotReady 12s snapshot-controller Snapshot default/ebs-volume-snapshot is ready to use.

# Check the snapshot ID (snapshotHandle) of the VolumeSnapshotContent

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get volumesnapshotcontents -o jsonpath='{.items[*].status.snapshotHandle}' ; echo

snap-066b8006c377cf428

# Check the AWS EBS snapshot and verify the snapshot ID

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws ec2 describe-snapshots --owner-ids self --query 'Snapshots[]' --output table

-----------------------------------------------------------------------------------------------------

| DescribeSnapshots |

+-------------+-------------------------------------------------------------------------------------+

| Description| Created by AWS EBS CSI driver for volume vol-099cac72524c097fd |

| Encrypted | True |

| KmsKeyId | arn:aws:kms:ap-northeast-2:236747833953:key/817c75a3-9bb7-42d2-a2c8-4a4ec6dccaa1 |

| OwnerId | 236747833953 |

| Progress | 100% |

| SnapshotId | snap-066b8006c377cf428 |

| StartTime | 2024-03-21T08:44:39.180000+00:00 |

| State | completed |

| StorageTier| standard |

| VolumeId | vol-099cac72524c097fd |

| VolumeSize | 4 |

+-------------+-------------------------------------------------------------------------------------+

|| Tags ||

|+-------------------------------+-----------------------------------------------------------------+|

|| Key | Value ||

|+-------------------------------+-----------------------------------------------------------------+|

|| ebs.csi.aws.com/cluster | true ||

|| kubernetes.io/cluster/myeks | owned ||

|| Name | myeks-dynamic-snapshot-e2dc48b5-db52-4d9f-972b-7dd2bf42f814 ||

|| CSIVolumeSnapshotName | snapshot-e2dc48b5-db52-4d9f-972b-7dd2bf42f814 ||

|+-------------------------------+-----------------------------------------------------------------+|

- To verify the restore, let's forcibly delete the Pod and PVC.

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl delete pod app && kubectl delete pvc ebs-claim

pod "app" deleted

persistentvolumeclaim "ebs-claim" deleted

# Restore a PVC from the snapshot

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat <<EOT > ebs-snapshot-restored-claim.yaml

> apiVersion: v1

> kind: PersistentVolumeClaim

> metadata:

> name: ebs-snapshot-restored-claim

> spec:

> storageClassName: gp3

> accessModes:

> - ReadWriteOnce

> resources:

> requests:

> storage: 4Gi

> dataSource:

> name: ebs-volume-snapshot

> kind: VolumeSnapshot

> apiGroup: snapshot.storage.k8s.io

> EOT

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat ebs-snapshot-restored-claim.yaml | yh

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: ebs-snapshot-restored-claim

spec:

storageClassName: gp3

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 4Gi

dataSource:

name: ebs-volume-snapshot

kind: VolumeSnapshot

apiGroup: snapshot.storage.k8s.io

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f ebs-snapshot-restored-claim.yaml

persistentvolumeclaim/ebs-snapshot-restored-claim created

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# k get pv,pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/ebs-snapshot-restored-claim Pending gp3 20s
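Like the first PVC, the restored claim stays `Pending` until a Pod consumes it. It must also request at least the snapshot's restore size, which is 4Gi here (the RESTORESIZE column shown earlier). The hypothetical bash generator below builds the manifest used above from a snapshot name and refuses a smaller request. It assumes whole-number Gi sizes only:

```shell
#!/usr/bin/env bash
# make_restore_pvc (hypothetical): $1 = PVC name, $2 = VolumeSnapshot name,
# $3 = requested size (Gi), $4 = snapshot restore size (Gi), $5 = StorageClass (default gp3)
make_restore_pvc() {
  local name="$1" snap="$2" size="${3%Gi}" restore="${4%Gi}" sc="${5:-gp3}"
  if [[ ! "$size" =~ ^[1-9][0-9]*$ || ! "$restore" =~ ^[1-9][0-9]*$ ]]; then
    echo "sizes must be whole numbers of Gi" >&2; return 2
  fi
  if (( size < restore )); then
    echo "requested ${size}Gi is smaller than restore size ${restore}Gi" >&2; return 1
  fi
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  storageClassName: ${sc}
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: ${size}Gi
  dataSource:
    name: ${snap}
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
EOF
}

# Usage: the restore size can be read from the snapshot status.
# rs=$(kubectl get volumesnapshot ebs-volume-snapshot -o jsonpath='{.status.restoreSize}')
# make_restore_pvc ebs-snapshot-restored-claim ebs-volume-snapshot 4Gi "$rs" | kubectl apply -f -
```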

# Create the Pod

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/gasida/PKOS/main/3/ebs-snapshot-restored-pod.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cat ebs-snapshot-restored-pod.yaml | yh

apiVersion: v1

kind: Pod

metadata:

name: app

spec:

containers:

- name: app

image: centos

command: ["/bin/sh"]

args: ["-c", "while true; do echo $(date -u) >> /data/out.txt; sleep 5; done"]

volumeMounts:

- name: persistent-storage

mountPath: /data

volumes:

- name: persistent-storage

persistentVolumeClaim:

claimName: ebs-snapshot-restored-claim

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl apply -f ebs-snapshot-restored-pod.yaml

pod/app created

# Check the file contents

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl exec app -- cat /data/out.txt

Thu Mar 21 08:43:44 UTC 2024

Thu Mar 21 08:43:49 UTC 2024

Thu Mar 21 08:43:54 UTC 2024

Thu Mar 21 08:43:59 UTC 2024

Thu Mar 21 08:44:04 UTC 2024

Thu Mar 21 08:44:09 UTC 2024

Thu Mar 21 08:44:14 UTC 2024 # about a 5-minute gap from the work in between; the data written earlier has been preserved

Thu Mar 21 08:49:34 UTC 2024

Thu Mar 21 08:49:39 UTC 2024

Thu Mar 21 08:49:44 UTC 2024

Thu Mar 21 08:49:49 UTC 2024
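To find that gap programmatically, a hypothetical bash function named `find_gaps` can scan `out.txt` and report consecutive entries that are more than N seconds apart. It assumes the `date -u` line format shown here and that all entries fall on the same UTC day. Day rollover is not handled.

```shell
#!/usr/bin/env bash
# find_gaps (hypothetical): $1 = max allowed gap in seconds.
# Reads lines like "Thu Mar 21 08:44:14 UTC 2024" and prints each line that
# follows a gap larger than $1. Assumes all lines are from the same UTC day.
find_gaps() {
  local max_gap="${1:?max gap required}" line t h m s ts prev=""
  while IFS= read -r line; do
    read -r _ _ _ t _ <<<"$line"                   # 4th field is HH:MM:SS
    [[ "$t" =~ ^[0-9]{2}:[0-9]{2}:[0-9]{2}$ ]] || continue
    IFS=: read -r h m s <<<"$t"
    ts=$(( 10#$h * 3600 + 10#$m * 60 + 10#$s ))   # 10# avoids octal parsing of "08"
    if [[ -n "$prev" ]] && (( ts - prev > max_gap )); then
      echo "gap of $(( ts - prev ))s before: $line"
    fi
    prev=$ts
  done
}

# Usage: kubectl exec app -- cat /data/out.txt | find_gaps 10
```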

# Delete the resources

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl delete pod app && kubectl delete pvc ebs-snapshot-restored-claim && kubectl delete volumesnapshots ebs-volume-snapshot

pod "app" deleted

persistentvolumeclaim "ebs-snapshot-restored-claim" deleted

volumesnapshot.snapshot.storage.k8s.io "ebs-volume-snapshot" deleted

- For reference, a Volume Snapshot Scheduler that takes snapshots on a regular schedule is also available.

Reference: https://popappend.tistory.com/113

Reference: https://jerryljh.tistory.com/42

Reference: https://backube.github.io/snapscheduler/

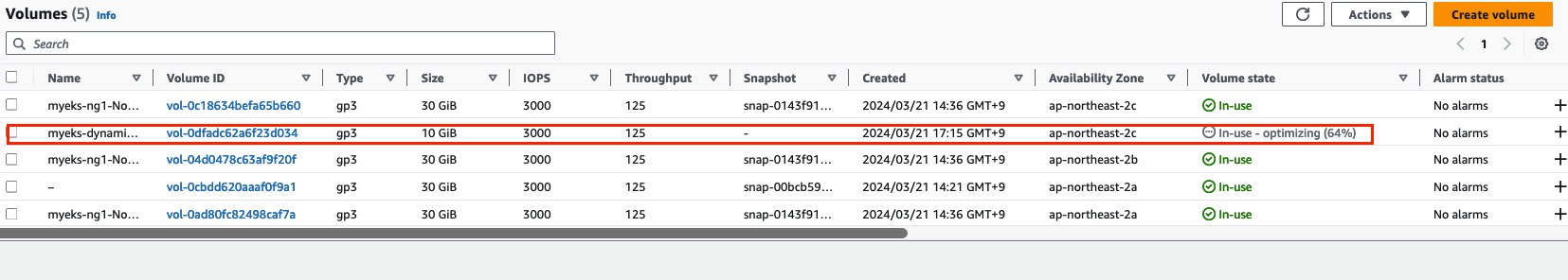

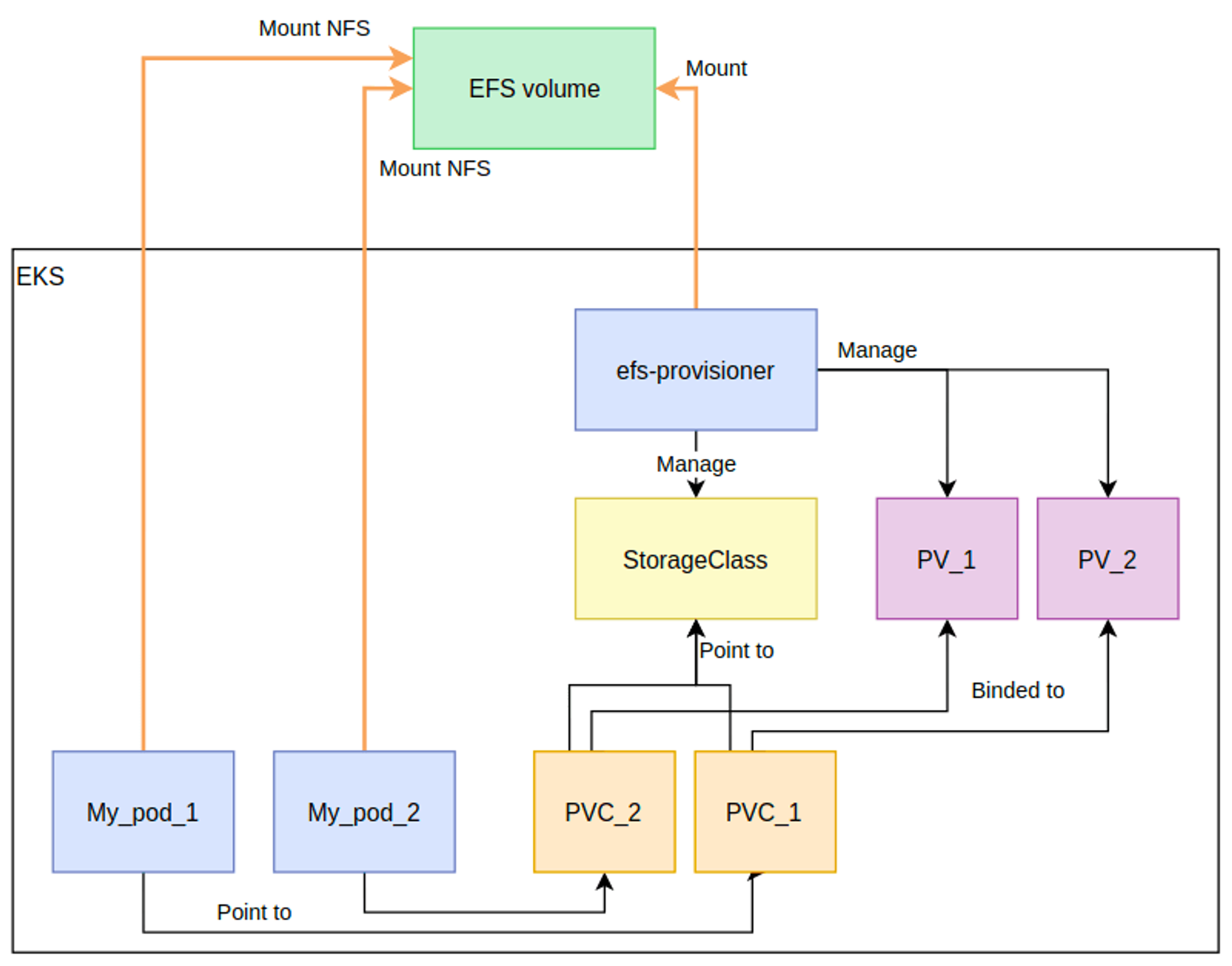
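A scheduler such as SnapScheduler creates snapshots periodically and expires old ones. The retention half of that idea can be sketched in a few lines of bash. The hypothetical helper below assumes snapshot names end in a zero-padded UTC timestamp, for example `ebs-claim-20240321-0844` (a made-up naming scheme). It prints every name beyond the newest N, which would be candidates for `kubectl delete volumesnapshot`. This is an illustration of the idea only, not how SnapScheduler itself works.

```shell
#!/usr/bin/env bash
# snapshots_to_prune (hypothetical): $1 = how many snapshots to keep.
# Reads VolumeSnapshot names on stdin; names must end in a zero-padded timestamp
# so that plain lexical sorting is chronological.
snapshots_to_prune() {
  local keep="${1:?keep count required}"
  [[ "$keep" =~ ^[0-9]+$ ]] || { echo "keep must be a non-negative integer" >&2; return 2; }
  sort -r | tail -n +"$(( keep + 1 ))"   # newest first; skip the newest $keep
}

# Usage:
# kubectl get volumesnapshot -o name | sed 's|.*/||' | grep '^ebs-claim-' \
#   | snapshots_to_prune 3 | xargs -r kubectl delete volumesnapshot
```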

4. AWS EFS Controller

- AWS EFS Controller overview and architecture

- We will install the EFS CSI driver as an add-on. The steps below use Helm.

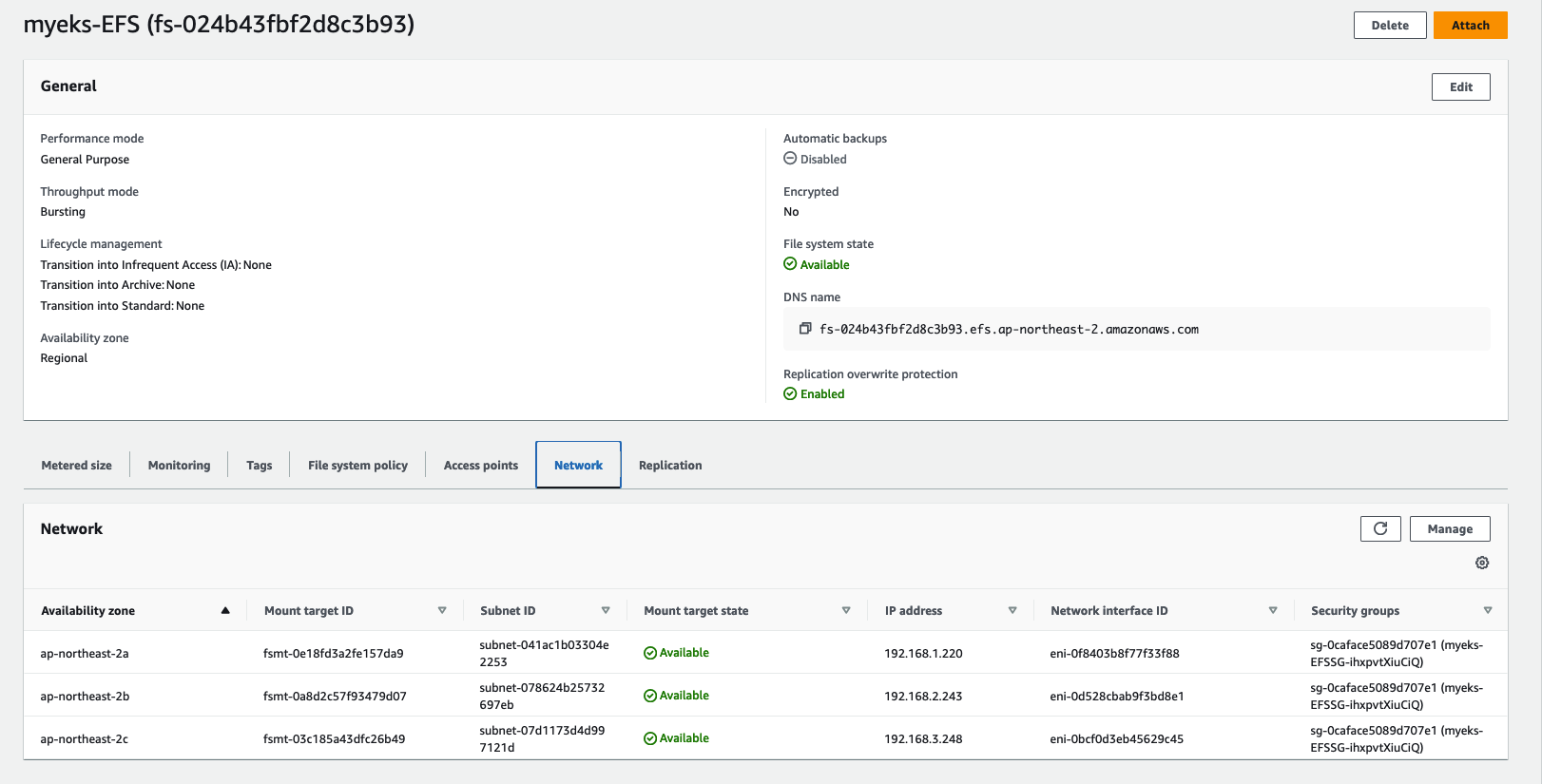

# Check the EFS information

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text

fs-024b43fbf2d8c3b93

# Create the IAM policy

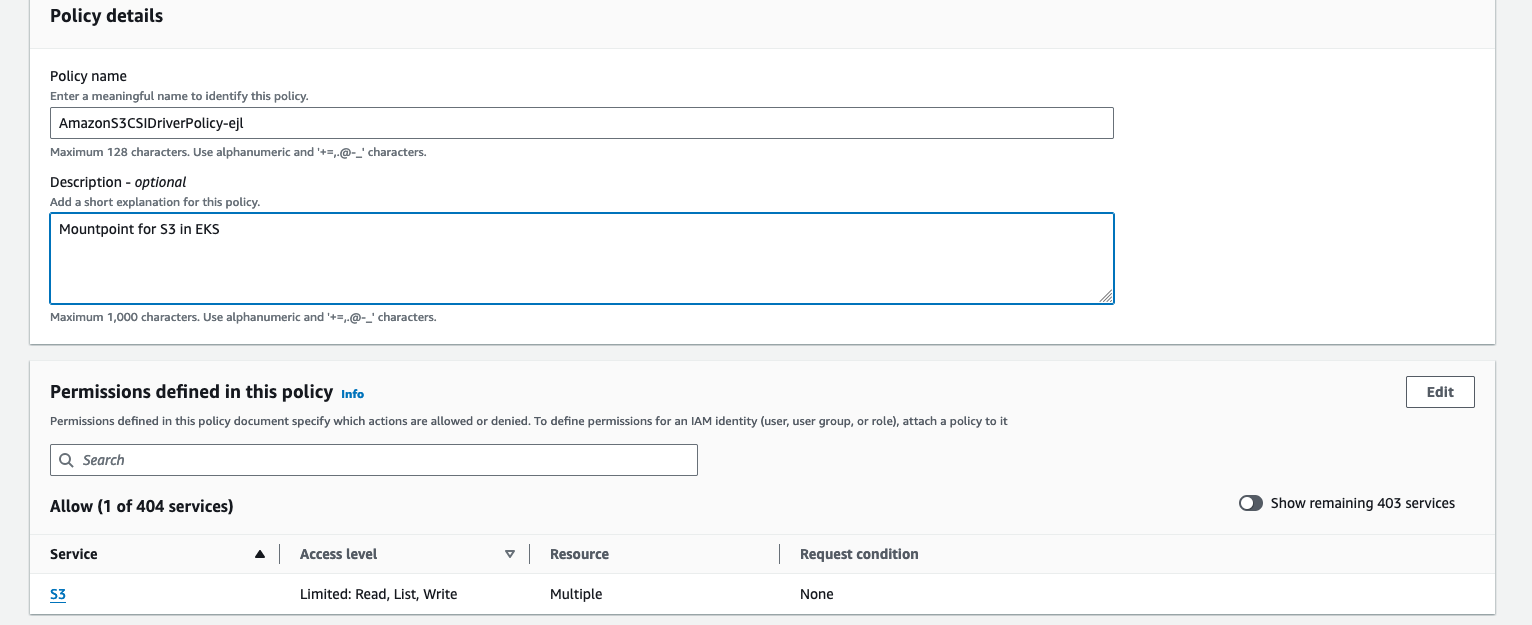

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/docs/iam-policy-example.json

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# aws iam create-policy --policy-name AmazonEKS_EFS_CSI_Driver_Policy --policy-document file://iam-policy-example.json

{

"Policy": {

"PolicyName": "AmazonEKS_EFS_CSI_Driver_Policy",

"PolicyId": "ANPATOH2FIJQ2OICO52VI",

"Arn": "arn:aws:iam::236747833953:policy/AmazonEKS_EFS_CSI_Driver_Policy",

"Path": "/",

"DefaultVersionId": "v1",

"AttachmentCount": 0,

"PermissionsBoundaryUsageCount": 0,

"IsAttachable": true,

"CreateDate": "2024-03-21T09:01:10+00:00",

"UpdateDate": "2024-03-21T09:01:10+00:00"

}

}
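The `eksctl` command below builds the policy ARN from `${ACCOUNT_ID}`, which this lab environment appears to preset. If it is not set, `aws sts get-caller-identity` can supply the account ID. The hypothetical bash helper below validates the 12-digit account ID before it composes the ARN. It assumes the standard `aws` partition, so ARNs for China or GovCloud regions would differ.

```shell
#!/usr/bin/env bash
# efs_policy_arn (hypothetical): $1 = 12-digit AWS account ID,
# $2 = policy name (defaults to the customer-managed policy created above).
efs_policy_arn() {
  local account="${1:?account id required}" name="${2:-AmazonEKS_EFS_CSI_Driver_Policy}"
  if [[ ! "$account" =~ ^[0-9]{12}$ ]]; then
    echo "not a 12-digit account ID: $account" >&2
    return 1
  fi
  printf 'arn:aws:iam::%s:policy/%s\n' "$account" "$name"
}

# Usage (requires AWS credentials):
# ACCOUNT_ID=${ACCOUNT_ID:-$(aws sts get-caller-identity --query Account --output text)}
# eksctl create iamserviceaccount --name efs-csi-controller-sa --namespace kube-system \
#   --cluster "${CLUSTER_NAME}" --attach-policy-arn "$(efs_policy_arn "$ACCOUNT_ID")" --approve
```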

# Configure IRSA: use the customer-managed policy AmazonEKS_EFS_CSI_Driver_Policy

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# eksctl create iamserviceaccount \

> --name efs-csi-controller-sa \

> --namespace kube-system \

> --cluster ${CLUSTER_NAME} \

> --attach-policy-arn arn:aws:iam::${ACCOUNT_ID}:policy/AmazonEKS_EFS_CSI_Driver_Policy \

> --approve

# Verify IRSA

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get sa -n kube-system efs-csi-controller-sa -o yaml | head -5

apiVersion: v1

kind: ServiceAccount

metadata:

annotations:

eks.amazonaws.com/role-arn: arn:aws:iam::236747833953:role/eksctl-myeks-addon-iamserviceaccount-kube-sys-Role1-vIJLW0PS5gHs

# Install the add-on using Helm

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo add aws-efs-csi-driver https://kubernetes-sigs.github.io/aws-efs-csi-driver/

"aws-efs-csi-driver" has been added to your repositories

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "aws-efs-csi-driver" chart repository

...Successfully got an update from the "eks" chart repository

...Successfully got an update from the "geek-cookbook" chart repository

Update Complete. ⎈Happy Helming!⎈

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# helm upgrade -i aws-efs-csi-driver aws-efs-csi-driver/aws-efs-csi-driver \

> --namespace kube-system \

> --set image.repository=602401143452.dkr.ecr.${AWS_DEFAULT_REGION}.amazonaws.com/eks/aws-efs-csi-driver \

> --set controller.serviceAccount.create=false \

> --set controller.serviceAccount.name=efs-csi-controller-sa

Release "aws-efs-csi-driver" does not exist. Installing it now.

NAME: aws-efs-csi-driver

LAST DEPLOYED: Thu Mar 21 18:03:08 2024

NAMESPACE: kube-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

To verify that aws-efs-csi-driver has started, run:

kubectl get pod -n kube-system -l "app.kubernetes.io/name=aws-efs-csi-driver,app.kubernetes.io/instance=aws-efs-csi-driver"

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# kubectl get pod -n kube-system -l "app.kubernetes.io/name=aws-efs-csi-driver,app.kubernetes.io/instance=aws-efs-csi-driver"

NAME READY STATUS RESTARTS AGE

efs-csi-controller-789c8bf7bf-5c8tg 3/3 Running 0 51s

efs-csi-controller-789c8bf7bf-dqdfw 3/3 Running 0 51s

efs-csi-node-ht75x 3/3 Running 0 51s

efs-csi-node-rhwj2 3/3 Running 0 51s

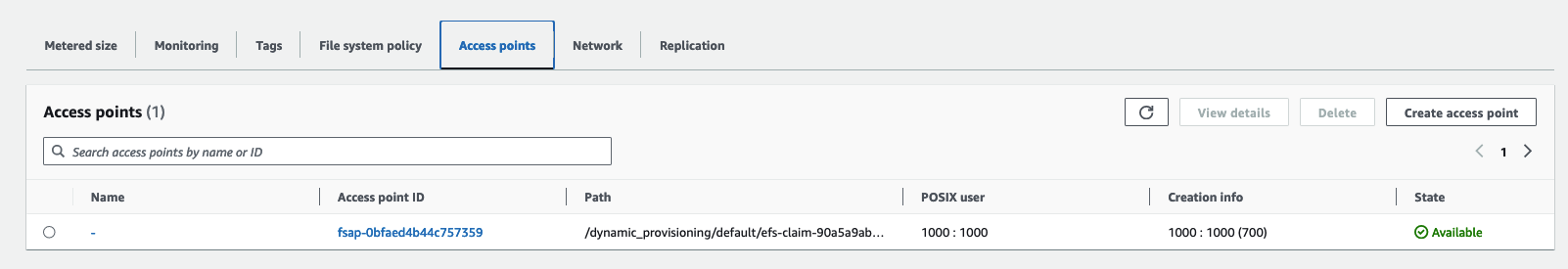

efs-csi-node-w4ftz 3/3 Running 0 51s- AWS EFS 파일 시스템의 네트워크 탭에서 탑재 대상의 ID 를 확인 합니다.
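The `efs-csi-node` pods in the listing above come from a DaemonSet, so you should see one per worker node (three here). `efs-csi-controller` runs two replicas. The hypothetical bash check below reads that `kubectl get pod` output. It counts node pods that are Running with every container ready and compares the count with an expected number:

```shell
#!/usr/bin/env bash
# count_ready_node_pods (hypothetical): $1 = expected count.
# Reads `kubectl get pod` output (NAME READY STATUS ...) on stdin, prints the number
# of efs-csi-node pods that are Running with all containers ready (e.g. 3/3), and
# returns 0 only if that number equals $1.
count_ready_node_pods() {
  local expected="${1:?expected count required}" n
  n=$(awk '$1 ~ /^efs-csi-node-/ && $3 == "Running" {
             split($2, r, "/"); if (r[1] == r[2]) c++
           } END { print c + 0 }')
  echo "$n"
  [[ "$n" -eq "$expected" ]]
}

# Usage:
# kubectl get pod -n kube-system -l app.kubernetes.io/name=aws-efs-csi-driver \
#   | count_ready_node_pods "$(kubectl get nodes --no-headers | wc -l)"
```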

Scenario 1: Configure the EFS file system to be shared by multiple Pods (Add empty StorageClasses from static provisioning)

# Start monitoring

watch 'kubectl get sc efs-sc; echo; kubectl get pv,pvc,pod'

Every 2.0s: kubectl get sc efs-sc; echo; kubectl get pv,pvc,pod Thu Mar 21 18:06:06 2024

Error from server (NotFound): storageclasses.storage.k8s.io "efs-sc" not found

No resources found

# Clone the lab code

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# git clone https://github.com/kubernetes-sigs/aws-efs-csi-driver.git /root/efs-csi

Cloning into '/root/efs-csi'...

remote: Enumerating objects: 22887, done.

remote: Counting objects: 100% (4784/4784), done.

remote: Compressing objects: 100% (1191/1191), done.

remote: Total 22887 (delta 3911), reused 3713 (delta 3549), pack-reused 18103

Receiving objects: 100% (22887/22887), 20.08 MiB | 18.40 MiB/s, done.

Resolving deltas: 100% (12597/12597), done.

(leeeuijoo@myeks:default) [root@myeks-bastion ~]# cd /root/efs-csi/examples/kubernetes/multiple_pods/specs && tree

.

├── claim.yaml

├── pod1.yaml

├── pod2.yaml

├── pv.yaml

└── storageclass.yaml

0 directories, 5 files

# Create and verify the EFS StorageClass

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# cat storageclass.yaml | yh

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: efs-sc
provisioner: efs.csi.aws.com

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl apply -f storageclass.yaml

storageclass.storage.k8s.io/efs-sc created

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl get sc efs-sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

efs-sc efs.csi.aws.com Delete Immediate false 2s

# Monitoring

Every 2.0s: kubectl get sc efs-sc; echo; kubectl get pv,pvc,pod Thu Mar 21 18:07:20 2024

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

efs-sc efs.csi.aws.com Delete Immediate false 18s

No resources found

# Create and verify the PV: change volumeHandle to your own EFS file system ID

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# EfsFsId=$(aws efs describe-file-systems --query "FileSystems[*].FileSystemId" --output text)

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# sed -i "s/fs-4af69aab/$EfsFsId/g" pv.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# cat pv.yaml | yh

apiVersion: v1
kind: PersistentVolume
metadata:
  name: efs-pv
spec:
  capacity:
    storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  storageClassName: efs-sc
  csi:
    driver: efs.csi.aws.com
    volumeHandle: fs-024b43fbf2d8c3b93

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl apply -f pv.yaml

persistentvolume/efs-pv created
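The `sed` step above has one weakness. `aws efs describe-file-systems --query "FileSystems[*].FileSystemId"` returns every file system in the region. In an account with more than one EFS, several tab-separated IDs would end up in `volumeHandle`. A small guard is sketched below. It assumes a valid ID is `fs-` followed by lowercase hex digits, and the helper name `replace_fs_id` is hypothetical. It refuses to substitute anything else, and it fails if the placeholder is already gone.

```bash
# Sketch: replace a placeholder EFS ID in a manifest, but only with a single
# well-formed ID. Assumes EFS IDs look like fs- followed by lowercase hex.
replace_fs_id() {
  local file=$1 old=$2 new=$3
  if [[ ! $new =~ ^fs-[0-9a-f]+$ ]]; then
    echo "refusing to substitute: '$new' is not a single EFS file system ID" >&2
    return 1
  fi
  # Catch re-runs: after a successful substitution the placeholder is gone.
  if ! grep -qF -- "$old" "$file"; then
    echo "placeholder $old not found in $file (already replaced?)" >&2
    return 1
  fi
  sed -i "s/${old}/${new}/g" "$file"
}
```

Usage: `replace_fs_id pv.yaml fs-4af69aab "$EfsFsId"`. The same call works for the dynamic-provisioning `storageclass.yaml`, whose placeholder is `fs-92107410`.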

# Create and verify the PVC

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# cat claim.yaml | yh

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-claim
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl apply -f claim.yaml

persistentvolumeclaim/efs-claim created

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# k get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

efs-claim Pending efs-sc 34s
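The claim is still `Pending` at this point. In the monitoring output a few minutes later it is `Bound` to `efs-pv`. A script can block until that happens with a generic polling helper. This is a sketch: `wait_until` and `pvc_bound` are hypothetical names, and `pvc_bound` relies on `kubectl get pvc -o jsonpath='{.status.phase}'`.

```bash
# Sketch: run a check command every $interval seconds until it succeeds,
# giving up after $timeout seconds. Usage: wait_until TIMEOUT INTERVAL CMD...
wait_until() {
  local timeout=$1 interval=$2 waited=0
  shift 2
  until "$@"; do
    if (( waited >= timeout )); then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
}

# Hypothetical check: true once the named PVC reports phase Bound.
pvc_bound() {
  [[ $(kubectl get pvc "$1" -o jsonpath='{.status.phase}') == Bound ]]
}
```

Usage: `wait_until 300 5 pvc_bound efs-claim`. Recent kubectl releases can do the same in one command with `kubectl wait --for=jsonpath='{.status.phase}'=Bound pvc/efs-claim`.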

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# cat pod1.yaml pod2.yaml | yh

apiVersion: v1
kind: Pod
metadata:
  name: app1
spec:
  containers:
  - name: app1
    image: busybox
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo $(date -u) >> /data/out1.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: efs-claim
apiVersion: v1
kind: Pod
metadata:
  name: app2
spec:
  containers:
  - name: app2
    image: busybox
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo $(date -u) >> /data/out2.txt; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: efs-claim

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl apply -f pod1.yaml,pod2.yaml

pod/app1 created

pod/app2 created
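The claim is `ReadWriteMany`, so any number of pods can mount it. `pod1.yaml` and `pod2.yaml` differ only in the pod name and the output file. If you want more writers running the same busybox loop, a generator is sketched below. The function `make_writer_pod` and the names `app3`/`out3.txt` are illustrative and do not come from the repository.

```bash
# Sketch: print a Pod manifest shaped like pod1.yaml/pod2.yaml.
# Args: pod name, output file name under /data, claim name (default efs-claim).
make_writer_pod() {
  local name=$1 outfile=$2 claim=${3:-efs-claim}
  # Unquoted heredoc so ${name} etc. expand; \$(date -u) stays literal for the pod.
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  containers:
  - name: ${name}
    image: busybox
    command: ["/bin/sh"]
    args: ["-c", "while true; do echo \$(date -u) >> /data/${outfile}; sleep 5; done"]
    volumeMounts:
    - name: persistent-storage
      mountPath: /data
  volumes:
  - name: persistent-storage
    persistentVolumeClaim:
      claimName: ${claim}
EOF
}
```

Usage: `make_writer_pod app3 out3.txt | kubectl apply -f -`. A third file, `out3.txt`, should then appear next to `out1.txt` and `out2.txt` on the shared file system.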

# Monitoring

Every 2.0s: kubectl get sc efs-sc; echo; kubectl get pv,pvc,pod Thu Mar 21 18:12:37 2024

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

efs-sc efs.csi.aws.com Delete Immediate false 5m35s

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/efs-pv 5Gi RWX Retain Bound default/efs-claim efs-sc 87s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/efs-claim Bound efs-pv 5Gi RWX efs-sc 3m54s

NAME READY STATUS RESTARTS AGE

pod/app1 1/1 Running 0 2m59s

pod/app2 1/1 Running 0 2m59s

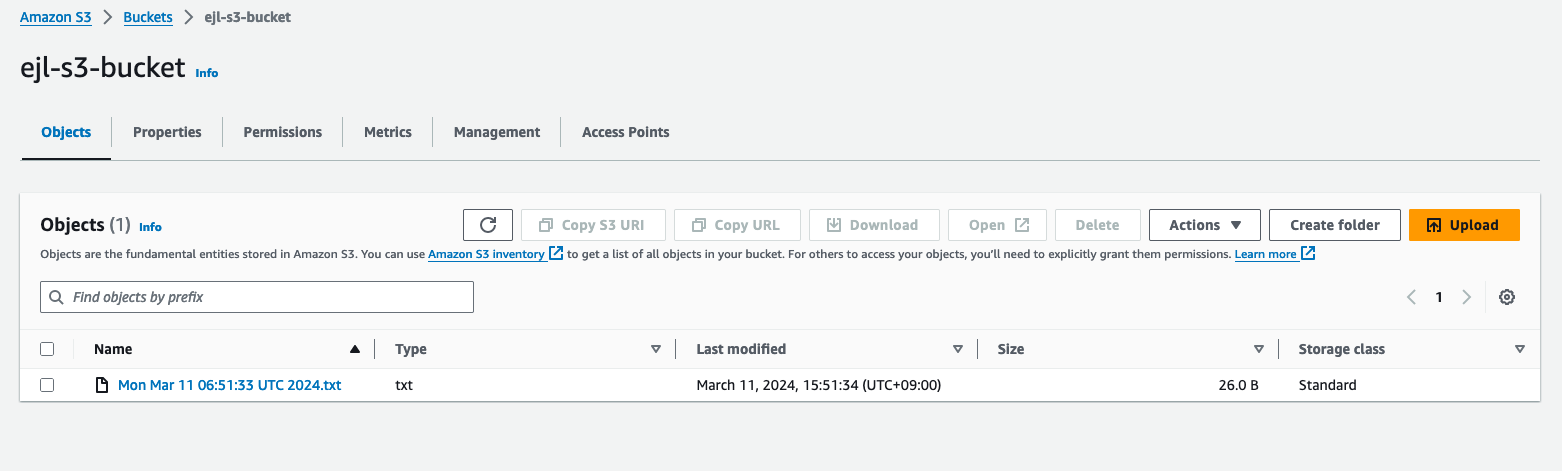

# Pod creation and integration: data under /data inside the pods is stored on EFS

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl exec -ti app2 -- sh -c "df -hT -t nfs4"

Filesystem Type Size Used Available Use% Mounted on

127.0.0.1:/ nfs4 8.0E 0 8.0E 0% /data
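A script can assert the mount type instead of reading the table, by filtering the `df -hT` output by mount point. A minimal sketch follows. It assumes each mount fits on one line and the mount point is the last field, which holds for the busybox output above. `fs_type_at` is a hypothetical helper.

```bash
# Sketch: read `df -hT` output on stdin and print the filesystem type mounted
# at the given path. Assumes one line per mount with the mount point last.
fs_type_at() {
  awk -v mp="$1" '
    NR > 1 && $NF == mp { print $2; found = 1 }
    END { exit (found ? 0 : 1) }
  '
}
```

Usage: `kubectl exec app2 -- df -hT -t nfs4 | fs_type_at /data` should print `nfs4` here. Leave out `-ti` when piping: a TTY adds carriage returns, and then the last-field comparison no longer matches.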

# Verify that writes land on the shared storage (checked from the bastion host)

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# tree /mnt/myefs

/mnt/myefs

├── memo.txt

├── out1.txt

└── out2.txt

0 directories, 3 files

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# tail -f /mnt/myefs/out1.txt

Thu Mar 21 09:14:24 UTC 2024

Thu Mar 21 09:14:29 UTC 2024

Thu Mar 21 09:14:34 UTC 2024

Thu Mar 21 09:14:39 UTC 2024

Thu Mar 21 09:14:45 UTC 2024

Thu Mar 21 09:14:50 UTC 2024

Thu Mar 21 09:14:55 UTC 2024

Thu Mar 21 09:15:00 UTC 2024

Thu Mar 21 09:15:05 UTC 2024

Thu Mar 21 09:15:10 UTC 2024

# Check the data from inside the pods

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl exec -ti app1 -- tail -f /data/out1.txt

Thu Mar 21 09:14:55 UTC 2024

Thu Mar 21 09:15:00 UTC 2024

Thu Mar 21 09:15:05 UTC 2024

Thu Mar 21 09:15:10 UTC 2024

Thu Mar 21 09:15:15 UTC 2024

Thu Mar 21 09:15:20 UTC 2024

Thu Mar 21 09:15:25 UTC 2024

Thu Mar 21 09:15:30 UTC 2024

Thu Mar 21 09:15:35 UTC 2024

Thu Mar 21 09:15:40 UTC 2024

^Ccommand terminated with exit code 130

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl exec -ti app2 -- tail -f /data/out2.txt

Thu Mar 21 09:15:04 UTC 2024

Thu Mar 21 09:15:09 UTC 2024

Thu Mar 21 09:15:14 UTC 2024

Thu Mar 21 09:15:19 UTC 2024

Thu Mar 21 09:15:24 UTC 2024

Thu Mar 21 09:15:29 UTC 2024

Thu Mar 21 09:15:34 UTC 2024

Thu Mar 21 09:15:39 UTC 2024

Thu Mar 21 09:15:44 UTC 2024

Thu Mar 21 09:15:49 UTC 2024
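Each writer appends one `date -u` line per loop. The bastion's view of `out1.txt` matches what `app1` sees, which confirms the volume is shared. The spacing is not always exactly 5 s (for example 09:14:39 → 09:14:45 above). This is most likely because `sleep 5` does not include the time spent running `date` and appending to the NFS-backed file. The sketch below prints the gaps. It assumes GNU coreutils `date -d` (as on the Amazon Linux 2 bastion), which parses this format; macOS/BSD `date` does not accept `-d` this way. `timestamp_gaps` is a hypothetical name.

```bash
# Sketch: print the gap in seconds between consecutive `date -u` lines on stdin.
# Requires GNU date for -d parsing.
timestamp_gaps() {
  local prev="" line now
  while IFS= read -r line; do
    [[ -z $line ]] && continue
    now=$(date -u -d "$line" +%s) || return 1
    [[ -n $prev ]] && echo $(( now - prev ))
    prev=$now
  done
}
```

Usage: `tail -n 20 /mnt/myefs/out1.txt | timestamp_gaps`. Mostly 5s and the occasional 6 are expected. A large gap would suggest the writer stalled.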

# Delete resources
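The deletion commands below run pods → PVC → PV → StorageClass, the reverse of creation. `efs-pv` uses `persistentVolumeReclaimPolicy: Retain`, so deleting it does not remove `out1.txt`/`out2.txt` from the EFS file system. They stay visible under `/mnt/myefs` on the bastion. A re-runnable version is sketched below. `--ignore-not-found` is a real `kubectl delete` flag. The function name and the `KUBECTL` override, which exists only to allow a dry run with `KUBECTL=echo`, are my own assumptions.

```bash
# Sketch: tear down scenario 1 in dependency order; safe to re-run.
# Set KUBECTL=echo to print the commands instead of executing them.
cleanup_static_efs() {
  local k=${KUBECTL:-kubectl}
  $k delete pod app1 app2 --ignore-not-found &&
  $k delete pvc efs-claim --ignore-not-found &&
  $k delete pv efs-pv --ignore-not-found &&
  $k delete sc efs-sc --ignore-not-found
}
```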

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl delete pod app1 app2

pod "app1" deleted

pod "app2" deleted

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl delete pvc efs-claim && kubectl delete pv efs-pv && kubectl delete sc efs-sc

persistentvolumeclaim "efs-claim" deleted

persistentvolume "efs-pv" deleted

storageclass.storage.k8s.io "efs-sc" deleted

Scenario 2: Multiple pods sharing one EFS file system: Dynamic provisioning

# Start monitoring

watch 'kubectl get sc efs-sc; echo; kubectl get pv,pvc,pod'

Every 2.0s: kubectl get sc efs-sc; echo; kubectl get pv,pvc,pod Thu Mar 21 18:24:48 2024

Error from server (NotFound): storageclasses.storage.k8s.io "efs-sc" not found

No resources found

# Create and verify the EFS StorageClass

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/examples/kubernetes/dynamic_provisioning/specs/storageclass.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# cat storageclass.yaml | yh

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: efs-sc
provisioner: efs.csi.aws.com
parameters:
  provisioningMode: efs-ap
  fileSystemId: fs-92107410
  directoryPerms: "700"
  gidRangeStart: "1000" # optional
  gidRangeEnd: "2000" # optional
  basePath: "/dynamic_provisioning" # optional
  subPathPattern: "${.PVC.namespace}/${.PVC.name}" # optional
  ensureUniqueDirectory: "true" # optional
  reuseAccessPoint: "false" # optional
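With `provisioningMode: efs-ap`, each PVC gets its own EFS access point. As I read the driver's README, `basePath` plus `subPathPattern` determine the directory that access point is rooted at. The sketch below only illustrates the string substitution for the two variables used in this StorageClass. It is not the driver's code. It also ignores `ensureUniqueDirectory`, which per the README adds a unique suffix to the directory when set to `"true"`. `expand_sub_path` is a hypothetical name.

```bash
# Illustration only: substitute the two variables used in this StorageClass's
# subPathPattern and prefix basePath. Not the driver's implementation; the
# ensureUniqueDirectory suffix is deliberately not modeled.
expand_sub_path() {
  local pattern=$1 namespace=$2 name=$3 base=${4:-}
  local ns_tok='${.PVC.namespace}' name_tok='${.PVC.name}'
  local path=${pattern//"$ns_tok"/"$namespace"}
  path=${path//"$name_tok"/"$name"}
  printf '%s/%s\n' "${base%/}" "$path"
}
```

For the `efs-claim` PVC in `default`, `expand_sub_path '${.PVC.namespace}/${.PVC.name}' default efs-claim /dynamic_provisioning` prints `/dynamic_provisioning/default/efs-claim`. With `ensureUniqueDirectory: "true"`, the real directory would also carry the unique suffix.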

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# sed -i "s/fs-92107410/$EfsFsId/g" storageclass.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl apply -f storageclass.yaml

storageclass.storage.k8s.io/efs-sc created

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# kubectl get sc efs-sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

efs-sc efs.csi.aws.com Delete Immediate false

# Create and verify the PVC and pod

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# curl -s -O https://raw.githubusercontent.com/kubernetes-sigs/aws-efs-csi-driver/master/examples/kubernetes/dynamic_provisioning/specs/pod.yaml

(leeeuijoo@myeks:default) [root@myeks-bastion specs]# cat pod.yaml | yh

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: efs-claim

spec:

accessModes:

- ReadWriteMany