Node 10. Weight Initialization and Batch Normalization


☺️ AIFFEL Data Scientist, Cohort 3


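10-1. Introduction

Learning objectives

- Weight initialization
- Train a deep learning model for multi-class classification on the Reuters dataset
- Batch normalization: faster training of deep learning models and mitigation of overfitting

Contents

- Weight initialization
  - Weight initialization for linear activation functions
  - Weight initialization for nonlinear activation functions
- Reuters deep learning model example
- Batch normalization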

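10-2. Weight Initialization

Weight initialization

- Has a large impact on neural network performance
- If the weights are skewed, the values that come out of the activation function are skewed as well, which limits what the network can represent
- Even when the initial weights are random values close to 0, model performance still varies with the particular initial values
- What matters is that the activation values are spread evenly!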
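The idea that activations should be spread evenly can be checked without Keras. The NumPy sketch below (an illustration, not the notebook's code) pushes random inputs through 5 sigmoid layers and compares a large weight standard deviation (1.0) with a very small one (0.01). The layer width of 100 nodes, the 1,000 samples, and the absence of biases are assumptions chosen only to make the effect visible.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward_activations(weight_std, n_layers=5, n_nodes=100, n_samples=1000, seed=0):
    """Push random inputs through n_layers sigmoid layers whose weights are drawn
    from N(0, weight_std**2) and return the activations of every layer."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_samples, n_nodes))
    activations = []
    for _ in range(n_layers):
        w = rng.normal(0.0, weight_std, size=(n_nodes, n_nodes))
        x = sigmoid(x @ w)
        activations.append(x)
    return activations

for std in (1.0, 0.01):
    last = forward_activations(std)[-1]
    # Fraction of activations stuck in the flat tails of the sigmoid
    saturated = np.mean((last < 0.05) | (last > 0.95))
    print(f"std={std}: spread={last.std():.4f}, saturated={saturated:.2f}")
```

With std=1.0 most activations pile up near 0 and 1, where the sigmoid's gradient is almost zero; with std=0.01 they all collapse around 0.5, so the units behave almost identically. Both cases are the kind of skew the bullets above warn about.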

- build_model: takes an activation function (`activation`) and a weight initializer (`initializer`)

```python
from tensorflow.keras import models, layers, optimizers

def build_model(activation, initializer):
    # Five 20-unit hidden layers that all share the same activation and initializer
    model = models.Sequential()
    model.add(layers.Input(shape=(20,), name='input'))  # each sample is a 20-dim vector
    for i in range(1, 6):
        model.add(layers.Dense(20, activation=activation, name=f'hidden{i}',
                               kernel_initializer=initializer))
    model.compile(loss='sparse_categorical_crossentropy',
                  optimizer=optimizers.SGD(),
                  metrics=['accuracy'])
    return model
```

- show_layer: plots a histogram of each hidden layer's output (activation) values

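```python
import numpy as np
import matplotlib.pyplot as plt

# 'seaborn-white' was renamed 'seaborn-v0_8-white' in Matplotlib 3.6
plt.style.use('seaborn-v0_8-white')

def show_layer(model):
    # Random standard-normal input: 400 samples with 20 features each
    x = np.random.randn(400, 20).astype('float32')

    plt.figure(figsize=(12, 6))
    for i in range(1, 6):
        name = 'hidden' + str(i)
        layer = model.get_layer(name)
        x = layer(x)  # feed the previous layer's output into the next layer
        plt.subplot(1, 5, i)
        plt.title(name)
        # Flatten the (400, 20) activations into one 20-bin histogram
        plt.hist(np.ravel(x), 20, range=(-1, 1))
    plt.subplots_adjust(wspace=0.5, hspace=0.5)
    plt.show()
```

Weight initialization with a linear activation function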

- The initialization methods are compared using `activations.sigmoid`
- Within a limited range around 0, roughly (-1, 1), the sigmoid behaves almost linearly!

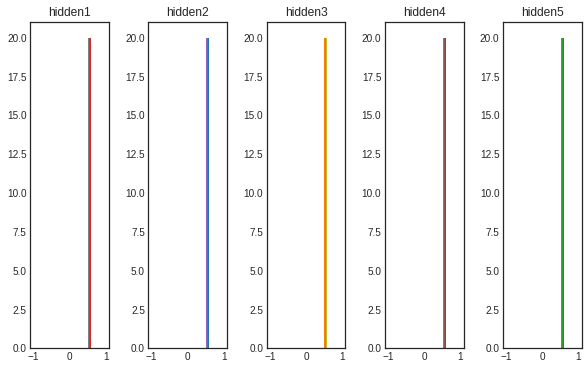

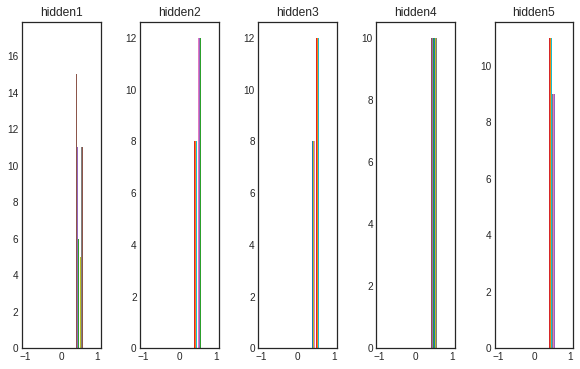

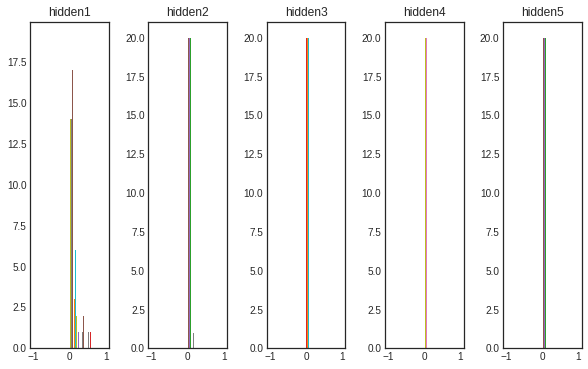

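Zero initialization

- If all weights are initialized to 0, the activations of every layer collapse onto a single central value (sigmoid(0) = 0.5)
- With every weight equal to 0, backpropagation updates all hidden units of a layer identically, so the units never become different from each other and learning cannot progress

Using `initializers.Zeros()`: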

```python
from tensorflow.keras import initializers, activations

model = build_model(activations.sigmoid, initializers.Zeros())
show_layer(model)
```
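To see why zero initialization stalls learning, here is a small NumPy sketch that is not part of the original notebook: a 20-8-1 sigmoid network with every weight set to 0 and no biases, trained with plain gradient descent on random toy data. The network size, the hypothetical labels, the learning rate, and the number of steps are arbitrary assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
X = rng.standard_normal((64, 20))   # 64 toy samples with 20 features
y = np.zeros((64, 1))
y[:48] = 1.0                        # hypothetical labels: 48 positives, 16 negatives

W1 = np.zeros((20, 8))              # input -> 8 hidden units, all zero
W2 = np.zeros((8, 1))               # hidden -> output, all zero
lr = 0.5

for _ in range(100):
    h = sigmoid(X @ W1)             # hidden activations (all 0.5 while W1 is zero)
    p = sigmoid(h @ W2)             # predicted probability
    d_out = (p - y) / len(X)        # gradient of mean binary cross-entropy w.r.t. the output logit
    dW2 = h.T @ d_out
    d_h = (d_out @ W2.T) * h * (1 - h)
    dW1 = X.T @ d_h
    W1 -= lr * dW1
    W2 -= lr * dW2

# The weights did change, but every hidden unit received identical updates
print(np.abs(W1).max() > 0)          # True
print(np.allclose(W1, W1[:, :1]))    # True: all 8 columns of W1 are the same
print(np.allclose(W2, W2[0]))        # True: all 8 rows of W2 are the same
```

Because all eight hidden units start identical and see identical gradients, they stay copies of each other, so the layer effectively has a single unit no matter how long it trains.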

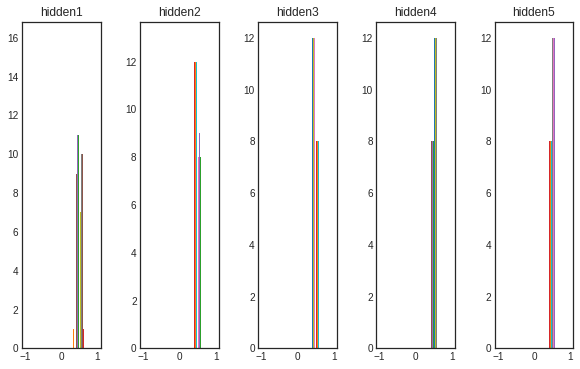

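Normal distribution initialization

- The weights are initialized with random values drawn from a normal distribution, so the activations are more spread out than with zero initialization
- The distribution is still skewed, so the network's expressiveness remains limited

Using `initializers.RandomNormal()` (Keras default: mean 0, standard deviation 0.05):

```python
model = build_model(activations.sigmoid, initializers.RandomNormal())
show_layer(model)
```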

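Uniform distribution initialization

- The weights are initialized with random values drawn from a uniform distribution, so the activations are more spread out than with zero initialization
- The activation values are not evenly distributed, so the gradients passed back during backpropagation may vanish

Using `initializers.RandomUniform()` (Keras default range: -0.05 to 0.05):

```python
model = build_model(activations.sigmoid, initializers.RandomUniform())
show_layer(model)
```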

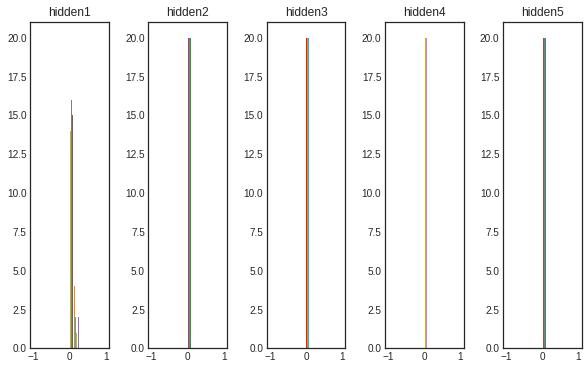

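Xavier (Glorot) normal initialization

- If the previous layer has $n$ nodes, the weights are drawn from a distribution with standard deviation $\frac{1}{\sqrt{n}}$ (Keras's `GlorotNormal` uses the closely related $\sqrt{\frac{2}{n_{in} + n_{out}}}$ with a truncated normal)
- The activations are spread evenly and every layer keeps a rich representation, so gradients can propagate back to more of the weights
- Very well suited when the activation function is linear (sigmoid and tanh are approximately linear near 0)

Using `initializers.GlorotNormal()`:

```python
model = build_model(activations.sigmoid, initializers.GlorotNormal())
show_layer(model)
```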
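The $\frac{1}{\sqrt{n}}$ rule can also be reproduced without Keras. This NumPy sketch (an illustration, not the notebook's code) pushes random inputs through 5 sigmoid layers of 100 nodes with weights drawn from $N(0, 1/n)$; the width, depth, sample count, and the lack of biases are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def last_layer_activations(weight_std, n_layers=5, n_nodes=100, n_samples=1000, seed=0):
    """Return the final-layer activations of a bias-free sigmoid MLP whose
    weights are drawn from N(0, weight_std**2)."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_samples, n_nodes))
    for _ in range(n_layers):
        w = rng.normal(0.0, weight_std, size=(n_nodes, n_nodes))
        x = sigmoid(x @ w)
    return x

n = 100
a = last_layer_activations(1.0 / np.sqrt(n))   # Xavier-style std = 1/sqrt(n)
saturated = np.mean((a < 0.05) | (a > 0.95))
# Expect a moderate spread and almost no activations in the flat tails
print(f"spread (std) = {a.std():.3f}, saturated fraction = {saturated:.3f}")
```

Scaling by $1/\sqrt{n}$ keeps the variance of each pre-activation close to the variance of a single input, so the values stay in the sigmoid's near-linear region instead of saturating or collapsing.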

Xavier (Glorot) uniform initialization

Using `initializers.GlorotUniform()`:

```python
model = build_model(activations.sigmoid, initializers.GlorotUniform())
show_layer(model)
```
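Keras documents `GlorotUniform` as sampling from $U(-\text{limit}, \text{limit})$ with $\text{limit} = \sqrt{6/(n_{in}+n_{out})}$, and `GlorotNormal` as a truncated normal with standard deviation $\sqrt{2/(n_{in}+n_{out})}$. The NumPy sketch below recomputes these numbers rather than calling Keras, and checks that both schemes target the same variance, which helps explain why the normal and uniform histograms look alike. The 20-to-20 layer size matches `build_model`; the sample count is an arbitrary choice.

```python
import numpy as np

def glorot_normal_std(fan_in, fan_out):
    # Standard deviation targeted by Glorot (Xavier) normal initialization
    return np.sqrt(2.0 / (fan_in + fan_out))

def glorot_uniform_limit(fan_in, fan_out):
    # Glorot uniform samples from U(-limit, limit)
    return np.sqrt(6.0 / (fan_in + fan_out))

fan_in, fan_out = 20, 20            # the 20 -> 20 Dense layers used by build_model
limit = glorot_uniform_limit(fan_in, fan_out)
rng = np.random.default_rng(0)
w = rng.uniform(-limit, limit, size=1_000_000)

# Variance of U(-a, a) is a**2 / 3, so both schemes aim at 2 / (fan_in + fan_out)
print(glorot_normal_std(fan_in, fan_out) ** 2)   # about 0.05
print(limit ** 2 / 3)                            # about 0.05
print(w.var())                                   # empirical, close to 0.05
```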

- Changing the activation function from sigmoid to tanh
- The distribution becomes even more uniform

```python
model = build_model(activations.tanh, initializers.GlorotUniform())
show_layer(model)
```

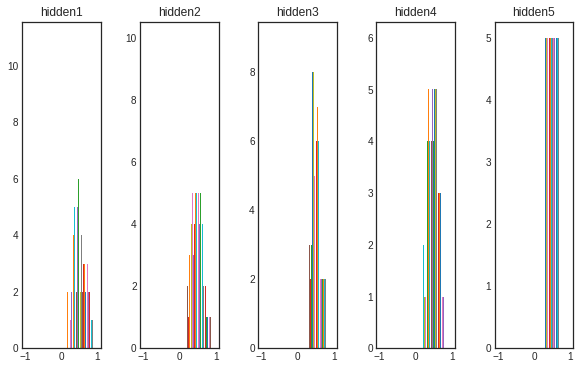

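He normal initialization

- Initializes the weights from a distribution with standard deviation $\sqrt{\frac{2}{n}}$, where $n$ is the number of nodes in the previous layer
- The activation values are distributed evenly
- Better suited to nonlinear activation functions (ReLU, etc.)

```python
model = build_model(activations.sigmoid, initializers.HeNormal())
show_layer(model)
```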

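He uniform initialization

- The activation values are well distributed

```python
model = build_model(activations.sigmoid, initializers.HeUniform())
show_layer(model)
```

Weight initialization with a nonlinear activation function

Using `activations.relu`: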

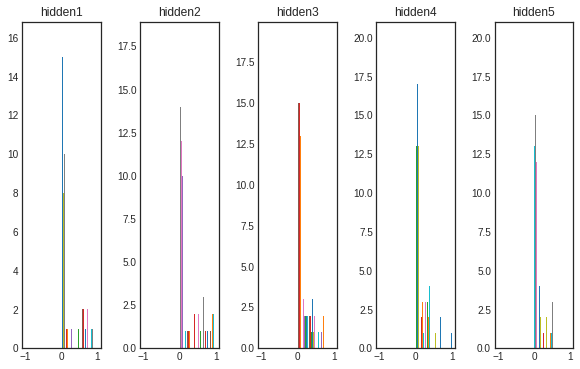

Zero initialization

- With ReLU as the activation, an input of 0 stays 0 (ReLU(0) = 0), so every activation is 0

```python
model = build_model(activations.relu, initializers.Zeros())
show_layer(model)
```

Normal distribution initialization

- Except for the hidden1 layer, the activations are concentrated near 0

```python
model = build_model(activations.relu, initializers.RandomNormal())
show_layer(model)
```

Uniform distribution initialization

- Except for the first layer, the activation values are concentrated near 0

```python
model = build_model(activations.relu, initializers.RandomUniform())
show_layer(model)
```

Xavier (Glorot) normal initialization

- Because of how ReLU works, many values are 0, but across all layers the distribution is reasonably well spread

```python
model = build_model(activations.relu, initializers.GlorotNormal())
show_layer(model)
```

Xavier (Glorot) uniform initialization

- Similar to the normal-distribution version

```python
model = build_model(activations.relu, initializers.GlorotUniform())
show_layer(model)
```

He normal initialization

- Better suited to nonlinear activation functions
- The distribution is evenly formed across the layers

```python
model = build_model(activations.relu, initializers.HeNormal())
show_layer(model)
```
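Why He initialization suits ReLU can be checked numerically. ReLU zeroes out about half of a zero-centered input, so with standard deviation $1/\sqrt{n}$ the mean squared activation roughly halves at every layer, while $\sqrt{2/n}$ compensates for that factor of 2. A NumPy sketch, assuming 100-node layers, 5 layers, 1,000 random samples, and no biases:

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def rms_per_layer(weight_std, n_layers=5, n_nodes=100, n_samples=1000, seed=0):
    """Root-mean-square of the ReLU activations in each layer when the weights
    are drawn from N(0, weight_std**2)."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_samples, n_nodes))
    rms = []
    for _ in range(n_layers):
        w = rng.normal(0.0, weight_std, size=(n_nodes, n_nodes))
        x = relu(x @ w)
        rms.append(np.sqrt(np.mean(x ** 2)))
    return rms

n = 100
xavier = rms_per_layer(1.0 / np.sqrt(n))   # std = 1/sqrt(n)
he = rms_per_layer(np.sqrt(2.0 / n))       # std = sqrt(2/n)
print("Xavier:", np.round(xavier, 3))      # shrinks by about 1/sqrt(2) per layer
print("He:    ", np.round(he, 3))          # stays roughly constant
```

In a deeper ReLU network the Xavier-style activations keep shrinking toward 0, which matches the "concentrated near 0" histograms above, while the He-style ones keep a stable scale.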

He uniform initialization

- Similar in shape to the normal-distribution version

```python
model = build_model(activations.relu, initializers.HeUniform())
show_layer(model)
```

Exercise

```python
from tensorflow.keras import models, layers

def build_model(activation='selu', initializer='lecun_normal'):
    # Binary classifier on 10,000-dim inputs with two 16-unit hidden layers
    model = models.Sequential()
    model.add(layers.Input(shape=(10000,)))
    model.add(layers.Dense(16, activation=activation, kernel_initializer=initializer))
    model.add(layers.Dense(16, activation=activation, kernel_initializer=initializer))
    model.add(layers.Dense(1, activation='sigmoid'))
    model.compile(optimizer='rmsprop',
                  loss='binary_crossentropy',
                  metrics=['accuracy'])
    return model

def show_layer(model):
    # This version only prints the architecture (it replaces the histogram version above)
    model.summary()

model = build_model(activation='selu', initializer='lecun_normal')
show_layer(model)
```
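The exercise pairs `selu` with `lecun_normal`. In Keras, `lecun_normal` is a truncated normal with standard deviation $\sqrt{1/n_{in}}$, and SELU's two constants are chosen so that activations drift toward mean 0 and variance 1 (the "self-normalizing" property). A rough NumPy check of that behavior, assuming a plain (untruncated) normal, 100-node layers, 10 layers, and no biases:

```python
import numpy as np

# SELU constants (the values Keras's selu activation uses)
ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805

def selu(z):
    return SCALE * np.where(z > 0, z, ALPHA * (np.exp(z) - 1.0))

rng = np.random.default_rng(0)
n = 100
x = rng.standard_normal((1000, n))
for _ in range(10):
    # LeCun-style std = sqrt(1 / fan_in), drawn from an untruncated normal here
    w = rng.normal(0.0, np.sqrt(1.0 / n), size=(n, n))
    x = selu(x @ w)

print(f"mean = {x.mean():.3f}, var = {x.var():.3f}")   # stays close to 0 and 1
```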

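10-3. Reuters Deep Learning Model Example

Reuters dataset

- A collection of newswire articles, frequently used as topic-classification data
- Composition: news article text labeled with 46 topics

Weight initialization exercise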

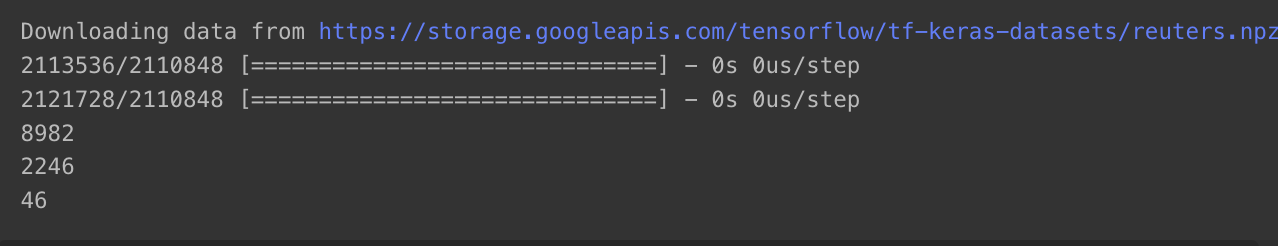

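Loading and preprocessing the data

The dataset can be downloaded with `reuters.load_data()`
- `num_words=10000` keeps only the 10,000 most frequent words
- Training data: 8,982 samples
- Test data: 2,246 samples

```python
from tensorflow.keras.datasets import reuters
import numpy as np

(train_data, train_labels), (test_data, test_labels) = reuters.load_data(num_words=10000)

print(len(train_data))          # 8982
print(len(test_data))           # 2246
print(len(set(train_labels)))   # 46 topics
```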
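By default `reuters.load_data()` shifts every word index by 3 (`index_from=3`), reserving 0 for padding, 1 for the start token, and 2 for out-of-vocabulary words. So when these sequences are turned back into words with the dictionary from `reuters.get_word_index()`, 3 has to be subtracted first. A minimal sketch; `decode_newswire` and the three-word vocabulary are hypothetical names for illustration:

```python
def decode_newswire(sequence, index_word, index_from=3):
    """Turn an integer sequence from reuters.load_data() back into text.

    Assumes load_data's defaults: 0 = padding, 1 = start token,
    2 = out-of-vocabulary, and every real word index shifted up by index_from.
    Reserved or unknown indices are shown as '?'.
    """
    return ' '.join(index_word.get(i - index_from, '?') for i in sequence)


# Hypothetical toy vocabulary; in practice build it from reuters.get_word_index()
toy_index_word = {95: 'quarter', 43: 'loss', 61: 'had'}

print(decode_newswire([1, 98, 46], toy_index_word))  # ? quarter loss
```

With the real inverted dictionary, a call such as `decode_newswire(train_data[0], index_word)` would print the first training article, with the start token shown as `?`.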

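- `reuters.get_word_index()`: returns the word-to-index dictionary, which is used to convert index sequences back into words

```python
word_index = reuters.get_word_index()
word_index
```

Output (truncated):

{'mdbl': 10996,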

'fawc': 16260,

'degussa': 12089,

'woods': 8803,

'hanging': 13796,


...}

- Inverting the word-index dictionary (index -> word)

```python
index_word = {index: word for word, index in word_index.items()}
index_word
```

Output (truncated):

{10996: 'mdbl',

16260: 'fawc',

12089: 'degussa',

8803: 'woods',

13796: 'hanging',


442: '300',

20821: 'cassa',

20822: 'casse',

4081: '695',

2979: 'ground',

839: 'boost',

16334: 'azusa',

9395: 'drafted',

4823: '303',

13848: 'climbs',

7601: 'honour',

20823: 'vanderbilt',

3968: '305',

3031: 'address',

8821: 'dwindling',

7252: 'benson',

12115: 'enroll',

501: 'revenues',

12116: 'impacted',

20826: 'queue',

10079: 'accomplished',

7602: 'throughput',

9396: 'influx',

10080: 'stockbuilding',

20827: 'aproximates',

13849: 'petroleo',

16335: 'sistemas',

14053: 'feretti',

5943: 'opposes',

882: 'working',

20829: 'perished',

13850: 'oldham',

20830: '27000',

19245: 'optimize',

20832: 'vigour',

1580: 'opposed',

16336: 'liberalizing',

20833: 'wvz',

20834: 'dampness',

13851: 'approving',

13496: 'sierra',

20835: 'entrepot',

224: 'currency',

1499: 'originally',

20837: 'tindemans',

16337: 'valorem',

477: 'following',

20838: 'fossen',

11016: 'locke',

20839: 'employess',

12117: 'rotberg',

16338: 'parachute',

11017: 'locks',

12255: 'incremental',

16339: 'woolowrth',

20841: 'listens',

7253: 'litre',

3554: 'edouard',

1377: 'ounce',

20843: 'nicanor',

20844: 'sucocitrico',

16340: 'minicomputers',

16341: "silva's",

11018: 'restitutions',

16342: 'custer',

2590: '3rd',

10081: 'fueled',

20845: 'trydahl',

11019: 'aice',

12118: 'harmon',

10082: 'conscious',

20846: 'herbicidesand',

20847: 'subdivisions',

20848: "veslefrikk's",

11020: 'swollen',

7978: 'pulled',

20849: 'tilney',

203: 'years',

20850: 'structuring',

20851: 'episodes',

16343: 'sportscene',

16344: "northair's",

20852: 'jig',

20853: 'jin',

3403: 'jim',

8359: 'troubles',

13852: 'workforces',

2362: 'suspension',

3892: 'troubled',

16345: 'fondiaria',

6697: 'modestly',

12119: 'recipients',

7979: 'civilian',

13853: 'indigenous',

20854: 'overpowering',

1051: 'drilling',

16346: 'sorted',

16347: 'lichtenstein',

20855: 'bedevil',

20856: 'dispite',

16843: 'battleships',

4824: 'instability',

95: 'quarter',

20857: 'salado',

5692: 'honduras',

13855: "chevron's",

12273: "lazere's",

2660: 'receipt',

8360: 'sponsor',

4825: 'entering',

16349: "kcbt's",

19987: 'nowicki',

13856: 'salads',

16351: 'augar',

7980: '797',

7254: '796',

8361: '795',

5295: '794',

5118: '793',

6170: '792',

5296: '791',

4826: '790',

20858: "nikko's",

20859: 'unsaleable',

5720: '799',

5693: '798',

2143: 'seriously',

16352: 'trauma',

20860: 'tvbh',

20861: 'macedon',

21906: 'disintegrated',

21909: 'adddition',

2244: 'incentives',

5944: 'complicated',

20864: 'reevaluating',

21921: 'thatching',

7981: 'brasil',

20865: '79p',

4951: 'wrong',

8822: 'initiate',

16353: 'aboard',

7255: 'saving',

8823: 'spoken',

16364: 'parkinson',

65: 'one',

20867: 'ont',

7256: 'concert',

16354: "boston's",

13859: 'stifled',

4622: 'types',

20868: 'lingering',

16356: 'surges',

20869: 'hurdman',

16357: 'herds',

14114: 'absorbs',

4681: 'surged',

14211: 'dalkon',

13860: 'crossroads',

20870: 'shakeup',

20871: 'disasterous',

11021: 'illness',

3242: 'turned',

3801: 'locations',

12120: 'tyranite',

13861: 'minesweepers',

7257: 'turner',

20872: 'borough',

12358: 'underlines',

20873: "bancorporation's",

20874: 'fashionable',

20875: "ae's",

16358: 'dilutions',

9472: 'goodman',

10510: 'unlawfully',

16359: 'mayer',

16360: 'printer',

20877: 'offload',

13862: 'opposite',

738: 'buffer',

9398: 'printed',

16361: 'pequiven',

13863: 'panoche',

20878: 'knowingly',

16362: 'ecusta',

20879: 'thsl',

8825: 'phil',

13864: 'jitters',

16363: 'touche',

20881: 'jittery',

3291: 'friction',

16365: 'fecal',

22068: 'resurgance',

20882: 'heeding',

2363: 'soviets',

16366: 'imagined',

16367: 'transact',

20883: 'califoirnia',

9399: "chrysler's",

16368: 'respecitvely',

16369: 'presse',

10084: 'euromarket',

12121: 'guarded',

16371: 'satisfacotry',

20884: 'authroization',

20885: 'simplistic',

20886: 'monde',

4102: 'awaiting',

13865: 'recombinant',

20887: 'refinancement',

20888: 'comserv',

20889: 'kitakyushu',

16372: 'pima',

11022: 'basle',

20891: '6250',

16373: 'choudhury',

8826: 'vision',

20892: 'interruptible',

13866: 'weatherford',

7982: '832',

5694: '833',

4420: '830',

5119: '831',

5297: '836',

4553: '837',

6172: '834',

4952: '835',

22144: 'alarming',

5695: '838',

6173: '839',

20893: '524p',

20894: 'sponsorship',

12122: 'vendex',

20895: "amsouth's",

20896: 'kilometer',

10086: 'enjoys',

20897: 'illiberal',

6174: 'punta',

20898: 'punte',

10087: 'girozentrale',

20899: 'missstatements',

10088: 'marietta',

6175: 'awards',

3635: 'concentrated',

20900: '83p',

13867: 'developpement',

13868: 'rhodes',

5696: 'matheson',

20901: '1720',

20902: 'paring',

35: 's',

4953: 'concentrates',

16374: "can's",

22183: 'polysaturated',

20903: 'parini',

13869: 'baden',

20904: 'bader',

12123: 'buoyancy',

20905: 'erdem',

16375: 'properites',

20906: 'comparitive',

12124: 'practises',

20907: 'collides',

189: 'west',

20908: 'wess',

13870: 'collided',

20909: 'practised',

20910: "amalgamated's",

20911: 'motives',

1378: 'wants',

1273: 'formed',

20912: 'readings',

12125: 'geothermal',

7315: 'tightened',

11023: "d'or",

1109: 'former',

20913: 'venezulean',

19935: 'curd',

12126: 'squeezes',

1019: 'newspaper',

817: 'situation',

13871: 'ivey',

3636: 'engaged',

13872: 'dubious',

17061: 'cayacq',

20916: 'cobol',

20917: 'limping',

883: 'technology',

20919: 'koerner',

16376: 'debilitating',

7983: 'verified',

4010: 'otto',

20920: '7770',

16377: 'emulsions',

16378: "onic's",

9075: 'slate',

20921: 'wires',

5506: 'edged',

20922: 'assigns',

1341: 'singapore',

20923: 'deflate',

20924: "strategy's",

16379: 'walesa',

4554: 'advertisement',

20925: 'luyten',

20926: 'shrortly',

20927: 'corpoartion',

22290: 'preferance',

16380: 'tracking',

13874: 'sunnyvale',

20928: 'colorants',

16381: 'persistently',

16382: "officers'",

20929: "his's",

367: 'being',

7259: 'divestitures',

20930: 'steamer',

20931: 'rover',

8362: 'grounded',

16383: "businessmen's",

16384: 'cyanidation',

20932: 'overthrow',

20933: 'partnerhip',

16385: 'sumt',

8827: 'sums',

16386: 'oelmuehle',

16387: 'unveil',

13875: 'gestures',

20934: 'penta',

2544: 'traffic',

2428: 'preference',

20935: 'sumi',

166: 'world',

9400: 'postal',

16388: 'bced',

12128: 'dornbush',

14215: 'confine',

20936: '2555',

5945: "zambia's",

20937: 'superiority',

20938: 'militate',

2395: 'satisfactory',

20939: 'superintendent',

5946: 'tvx',

16389: 'tvt',

6698: 'magma',

20940: 'diving',

15548: 'tvb',

13876: 'seaman',

11025: 'matsunaga',

4827: '919',

5298: '918',

17070: 'refundable',

5947: '914',

7260: '917',

6699: '916',

5507: '911',

4828: '910',

10213: 'restoring',

4555: '912',

20942: 'squabble',

7261: 'retains',

20943: "partner's",

5300: 'leadership',

11026: 'graaf',

20944: 'spacelab',

1800: 'thailand',

9402: 'graan',

20945: 'exasperating',

12129: 'hartmarx',

16390: 'frights',

20946: 'niall',

11027: 'johnston',

16391: '91p',

16392: 'sensitively',

6016: 'porsche',

15494: 'prepares',

12130: 'lively',

10686: 'stoppages',

16394: "associated's",

12131: 'pivot',

1037: 'series',

24050: 'sese',

7604: 'bubble',

16395: 'trusses',

20949: 'interestate',

20950: 'continents',

20951: 'societal',

28: 'with',

6176: 'pull',

6700: 'rush',

6222: 'monopoly',

20953: 'operationally',

20954: 'dirty',

10090: 'abuses',

7262: 'prudhoe',

5949: 'pulp',

16396: 'rust',

20955: 'hellman',

20956: 'amdec',

16397: 'australasian',

13878: 'watches',

20957: 'hypertension',

20958: "hemdale's",

16398: 'formulation',

7605: 'watched',

20959: 'jargon',

13879: 'cream',

9404: 'ideally',

11028: 'ryavec',

20960: 'microoganisms',

13880: 'indemnify',

20961: 'wincenty',

20962: 'waving',

20963: "multifood's",

20964: 'midges',

11029: 'natalie',

13881: 'crosbie',

20965: 'posible',

13882: 'omnibus',

20966: 'assetsof',

13883: 'tricks',

16399: 'rs',

20967: 'kilogram',

25363: 'pruning',

13884: 'dyer',

20968: 'dyes',

20969: 'legislatures',

16400: 'scm',

9405: 'sci',

20970: 'riedel',

16401: 'ceramic',

6701: 'unitholders',

13885: 'scb',

20971: 'dn11',

20972: 'conditionality',

13807: "stock's",

20973: 'masland',

7606: 'causes',

10091: 'riots',

20974: 'norf',

9406: 'nord',

3893: 'midwest',

13886: 'tamils',

16402: 'ofthe',

3421: "colombia's",

11030: '24th',

20975: 'sant',

10092: 'moines',

22577: 'electrotechnical',

24534: 'proceeded',

20976: 'sanz',

13887: 'insufficiently',

20977: 'sang',

5950: 'sand',

16404: 'bracho',

805: 'small',

20978: 'workloads',

6702: 'sank',

20979: 'kemper',

16405: 'abbreviated',

13888: 'quicker',

3802: '199',

3243: '198',

2661: '195',

3080: '194',

4310: '197',

3894: '196',

2850: '191',

2199: '190',

3481: '193',

3350: '192',

582: 'past',

20980: 'fractionation',

20981: 'displays',

3081: 'pass',

202: 'investment',

27062: 'quals',

16406: 'quicken',

20983: "centronic's",

20984: 'menswear',

16407: 'clock',

20985: 'teape',

20986: 'teapa',

10093: 'prevailed',

9407: 'hebei',

...}

- Join the indices in train_data[0] into one string to view a single news article

news = ' '.join([str(i) for i in train_data[0]])

news

'1 2 2 8 43 10 447 5 25 207 270 5 3095 111 16 369 186 90 67 7 89 5 19 102 6 19 124 15 90 67 84 22 482 26 7 48 4 49 8 864 39 209 154 6 151 6 83 11 15 22 155 11 15 7 48 9 4579 1005 504 6 258 6 272 11 15 22 134 44 11 15 16 8 197 1245 90 67 52 29 209 30 32 132 6 109 15 17 12'

- What the word indices mean

- 0: padding token (pad)

- 1: start-of-sequence token (sos)

- 2: OOV (Out Of Vocabulary) token (unk)

- Word indices are therefore shifted by 3: look each index up as i-3, and replace the special tokens above with '?'
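The offset logic can be checked on a toy example. This is a minimal sketch: `toy_index_word` and `decode` are hypothetical stand-ins for the real `index_word` dictionary and the list comprehension used in the next step, assuming the data was loaded with the Keras default word-index offset of 3.

```python
# Hypothetical miniature of index_word: word rank -> word
toy_index_word = {1: 'the', 2: 'said', 3: 'company'}

def decode(sequence, index_word, offset=3):
    # Stored indices are shifted by `offset`; 0 (pad), 1 (sos) and 2 (unk)
    # have no entry after the shift, so they come back as '?'.
    return ' '.join(index_word.get(i - offset, '?') for i in sequence)

encoded = [1, 2, 6, 5, 4]   # sos, unk, then the shifted indices of 'company', 'said', 'the'
print(decode(encoded, toy_index_word))
```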

news = ' '.join([index_word.get(i-3, '?') for i in train_data[0]])

news

'? ? ? said as a result of its december acquisition of space co it expects earnings per share in 1987 of 1 15 to 1 30 dlrs per share up from 70 cts in 1986 the company said pretax net should rise to nine to 10 mln dlrs from six mln dlrs in 1986 and rental operation revenues to 19 to 22 mln dlrs from 12 5 mln dlrs it said cash flow per share this year should be 2 50 to three dlrs reuter 3'

- Convert the text data into vectors so it can be used to train a deep learning model

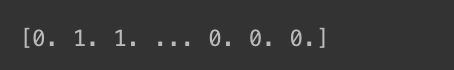

- Convert each article into a 10,000-dimensional 0/1 vector with one-hot encoding

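import numpy as np

def one_hot_encoding(data, dim=10000):
    # one row per article; column d becomes 1 if word index d appears in it
    # (every index must be < dim, e.g. data loaded with num_words=10000)
    results = np.zeros((len(data), dim))
    for i, d in enumerate(data):
        results[i, d] = 1.  # d is a list of indices, so all its columns are set at once
    return results

x_train = one_hot_encoding(train_data)
x_test = one_hot_encoding(test_data)

print(x_train[0])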
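Strictly speaking, `one_hot_encoding` produces a multi-hot (bag-of-words) vector: every word index in an article sets its column to 1, so word order and repeat counts are lost. A small self-contained check of the same function, using a hypothetical `dim=8` and toy data instead of the real 10,000-word vocabulary:

```python
import numpy as np

def one_hot_encoding(data, dim=10000):
    # same logic as above: column d is 1 if index d occurs in the sample
    results = np.zeros((len(data), dim))
    for i, d in enumerate(data):
        results[i, d] = 1.
    return results

toy_data = [[1, 3, 3, 5], [2, 7]]   # two short "articles" of word indices
encoded = one_hot_encoding(toy_data, dim=8)
print(encoded)
```

The repeated index 3 in the first article still produces a single 1, which is why this representation only records which words occur.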

- Inspect a few labels

print(train_labels[5])

print(train_labels[15])

print(train_labels[25])

print(train_labels[35])

print(train_labels[45])

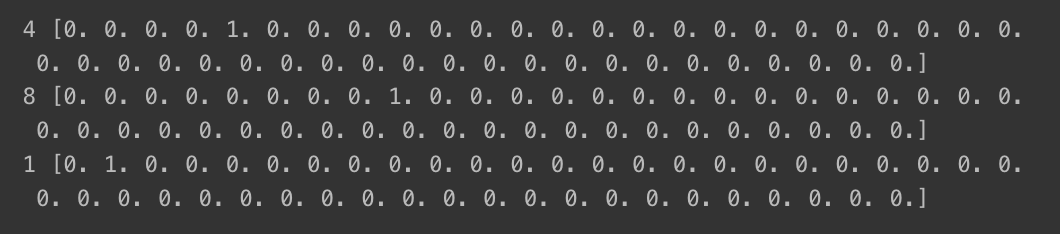

- One-hot encode the news topic labels as well

utils.to_categorical()

from tensorflow.keras import utils

y_train = utils.to_categorical(train_labels)

y_test = utils.to_categorical(test_labels)

print(train_labels[5], y_train[5])

print(train_labels[15], y_train[15])

print(train_labels[25], y_train[25])

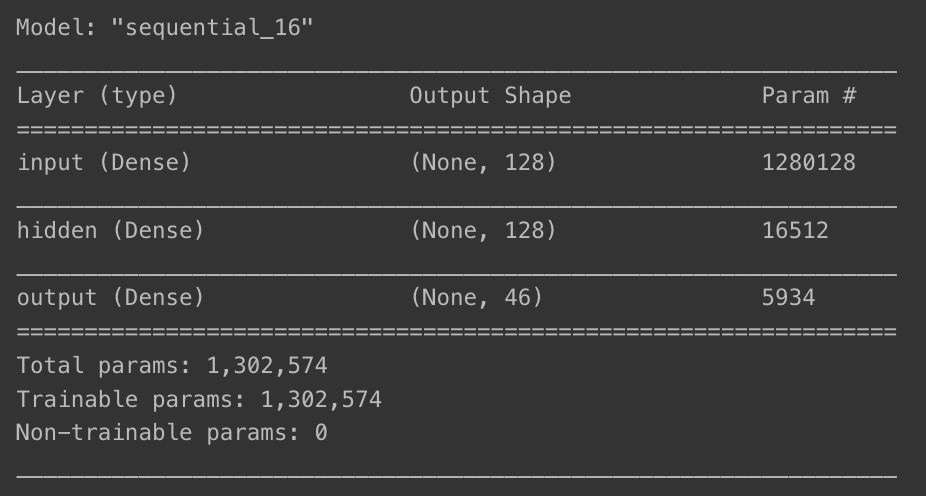

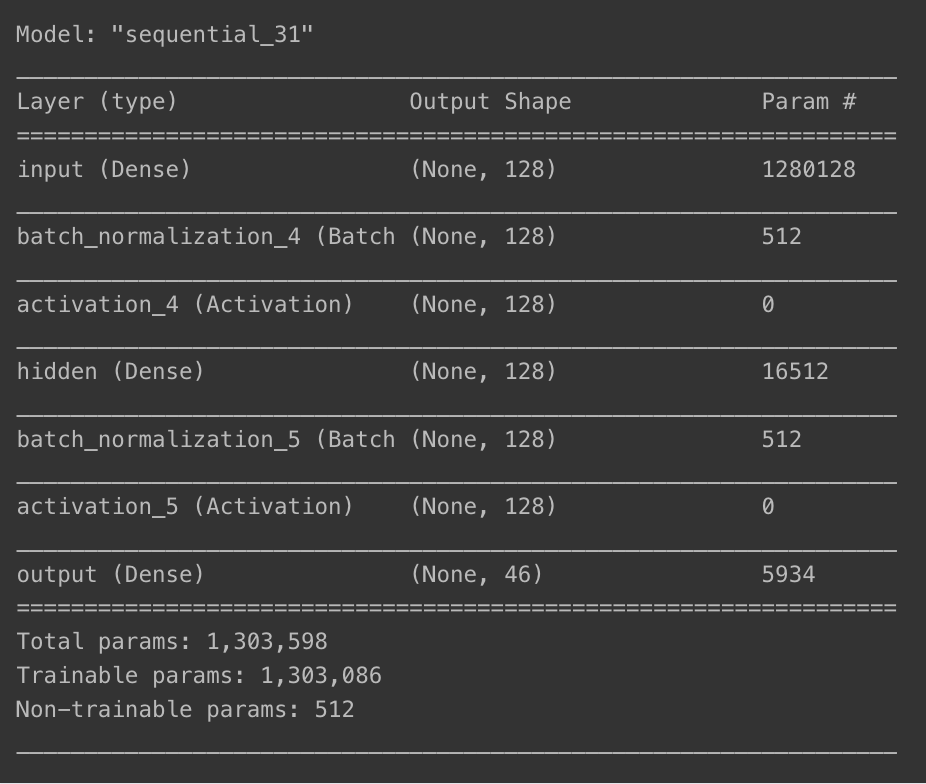
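`utils.to_categorical` turns each integer label into a row with a single 1 at the label's position. For integer labels starting at 0, the same idea can be sketched in NumPy by indexing an identity matrix; `to_one_hot` is a hypothetical helper, and `num_classes=46` assumes the 46 Reuters topics used by the output layer (the dtype may differ from what Keras returns).

```python
import numpy as np

def to_one_hot(labels, num_classes=46):
    # row k of the identity matrix is the one-hot vector for class k
    return np.eye(num_classes)[np.asarray(labels)]

y = to_one_hot([3, 4, 19])
print(y.shape)        # (3, 46)
print(y[0].argmax())  # 3
```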

Model construction

Sequential()

import tensorflow as tf

from tensorflow.keras import models, layers

model = models.Sequential()

model.add(layers.Dense(128, activation='relu', input_shape=(10000, ), name='input'))

model.add(layers.Dense(128, activation='relu', name='hidden'))

model.add(layers.Dense(46, activation='softmax', name='output'))  # one output per news topic

Model compilation and training

- Optimizer: rmsprop

- Loss function: categorical_crossentropy (multi-class classification)

- Metric: accuracy

model.compile(optimizer='rmsprop',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

model.summary()
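The parameter counts that `model.summary()` reports can be checked by hand: a Dense layer with n inputs and m units has n*m weights plus m biases. A small sketch of that arithmetic for the 10000 → 128 → 128 → 46 model above (`dense_params` is a hypothetical helper, not a Keras function):

```python
def dense_params(n_in, n_units):
    # weight matrix (n_in x n_units) plus one bias per unit
    return n_in * n_units + n_units

layer_sizes = [10000, 128, 128, 46]
per_layer = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]
print(per_layer)       # [1280128, 16512, 5934]
print(sum(per_layer))  # 1302574
```

About 98% of the parameters sit in the first layer, because of the 10,000-dimensional input.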

- Training

history = model.fit(x_train, y_train,
                    epochs=40,
                    batch_size=512,
                    validation_data=(x_test, y_test))

Epoch 1/40

18/18 [==============================] - 1s 40ms/step - loss: 2.1501 - accuracy: 0.5777 - val_loss: 1.4131 - val_accuracy: 0.6950

Epoch 2/40

18/18 [==============================] - 0s 15ms/step - loss: 1.0742 - accuracy: 0.7719 - val_loss: 1.1408 - val_accuracy: 0.7431

Epoch 3/40

18/18 [==============================] - 0s 14ms/step - loss: 0.7373 - accuracy: 0.8500 - val_loss: 0.9869 - val_accuracy: 0.7814

Epoch 4/40

18/18 [==============================] - 0s 14ms/step - loss: 0.5251 - accuracy: 0.8899 - val_loss: 0.9763 - val_accuracy: 0.7872

Epoch 5/40

18/18 [==============================] - 0s 14ms/step - loss: 0.3829 - accuracy: 0.9212 - val_loss: 0.9058 - val_accuracy: 0.8010

Epoch 6/40

18/18 [==============================] - 0s 14ms/step - loss: 0.3009 - accuracy: 0.9340 - val_loss: 0.9412 - val_accuracy: 0.7996

Epoch 7/40

18/18 [==============================] - 0s 13ms/step - loss: 0.2361 - accuracy: 0.9441 - val_loss: 0.9665 - val_accuracy: 0.7956

Epoch 8/40

18/18 [==============================] - 0s 15ms/step - loss: 0.2121 - accuracy: 0.9473 - val_loss: 0.9604 - val_accuracy: 0.8019

Epoch 9/40

18/18 [==============================] - 0s 14ms/step - loss: 0.1808 - accuracy: 0.9501 - val_loss: 0.9918 - val_accuracy: 0.8041

Epoch 10/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1646 - accuracy: 0.9527 - val_loss: 1.0448 - val_accuracy: 0.7956

Epoch 11/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1504 - accuracy: 0.9530 - val_loss: 1.1152 - val_accuracy: 0.7952

Epoch 12/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1494 - accuracy: 0.9551 - val_loss: 1.0423 - val_accuracy: 0.8001

Epoch 13/40

18/18 [==============================] - 0s 14ms/step - loss: 0.1367 - accuracy: 0.9541 - val_loss: 1.0708 - val_accuracy: 0.7983

Epoch 14/40

18/18 [==============================] - 0s 12ms/step - loss: 0.1354 - accuracy: 0.9532 - val_loss: 1.1132 - val_accuracy: 0.7988

Epoch 15/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1271 - accuracy: 0.9549 - val_loss: 1.1707 - val_accuracy: 0.7872

Epoch 16/40

18/18 [==============================] - 0s 14ms/step - loss: 0.1200 - accuracy: 0.9555 - val_loss: 1.2099 - val_accuracy: 0.7858

Epoch 17/40

18/18 [==============================] - 0s 12ms/step - loss: 0.1251 - accuracy: 0.9547 - val_loss: 1.1861 - val_accuracy: 0.7921

Epoch 18/40

18/18 [==============================] - 0s 12ms/step - loss: 0.1190 - accuracy: 0.9534 - val_loss: 1.2401 - val_accuracy: 0.7898

Epoch 19/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1128 - accuracy: 0.9560 - val_loss: 1.1930 - val_accuracy: 0.7952

Epoch 20/40

18/18 [==============================] - 0s 12ms/step - loss: 0.1182 - accuracy: 0.9529 - val_loss: 1.1770 - val_accuracy: 0.7947

Epoch 21/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1076 - accuracy: 0.9552 - val_loss: 1.2215 - val_accuracy: 0.7903

Epoch 22/40

18/18 [==============================] - 0s 12ms/step - loss: 0.1054 - accuracy: 0.9554 - val_loss: 1.2284 - val_accuracy: 0.7983

Epoch 23/40

18/18 [==============================] - 0s 12ms/step - loss: 0.1031 - accuracy: 0.9560 - val_loss: 1.2456 - val_accuracy: 0.7970

Epoch 24/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1050 - accuracy: 0.9540 - val_loss: 1.2541 - val_accuracy: 0.7916

Epoch 25/40

18/18 [==============================] - 0s 12ms/step - loss: 0.1024 - accuracy: 0.9536 - val_loss: 1.2160 - val_accuracy: 0.7961

Epoch 26/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0996 - accuracy: 0.9547 - val_loss: 1.3172 - val_accuracy: 0.7841

Epoch 27/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0974 - accuracy: 0.9554 - val_loss: 1.3242 - val_accuracy: 0.7885

Epoch 28/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0945 - accuracy: 0.9558 - val_loss: 1.4148 - val_accuracy: 0.7752

Epoch 29/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0955 - accuracy: 0.9544 - val_loss: 1.3871 - val_accuracy: 0.7836

Epoch 30/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0917 - accuracy: 0.9562 - val_loss: 1.3063 - val_accuracy: 0.8014

Epoch 31/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0910 - accuracy: 0.9557 - val_loss: 1.3421 - val_accuracy: 0.7916

Epoch 32/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0903 - accuracy: 0.9551 - val_loss: 1.4495 - val_accuracy: 0.7805

Epoch 33/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0907 - accuracy: 0.9548 - val_loss: 1.4393 - val_accuracy: 0.7858

Epoch 34/40

18/18 [==============================] - 0s 13ms/step - loss: 0.0879 - accuracy: 0.9555 - val_loss: 1.4314 - val_accuracy: 0.7841

Epoch 35/40

18/18 [==============================] - 0s 13ms/step - loss: 0.0878 - accuracy: 0.9552 - val_loss: 1.4626 - val_accuracy: 0.7872

Epoch 36/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0855 - accuracy: 0.9554 - val_loss: 1.4567 - val_accuracy: 0.7872

Epoch 37/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0859 - accuracy: 0.9550 - val_loss: 1.4738 - val_accuracy: 0.7832

Epoch 38/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0835 - accuracy: 0.9547 - val_loss: 1.5629 - val_accuracy: 0.7809

Epoch 39/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0821 - accuracy: 0.9585 - val_loss: 1.6183 - val_accuracy: 0.7792

Epoch 40/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0817 - accuracy: 0.9572 - val_loss: 1.5548 - val_accuracy: 0.7845

- Visualization

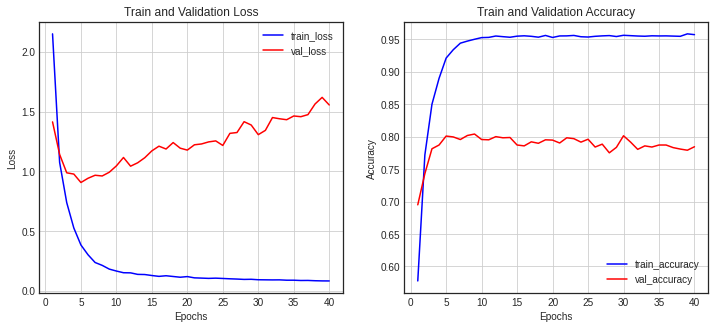

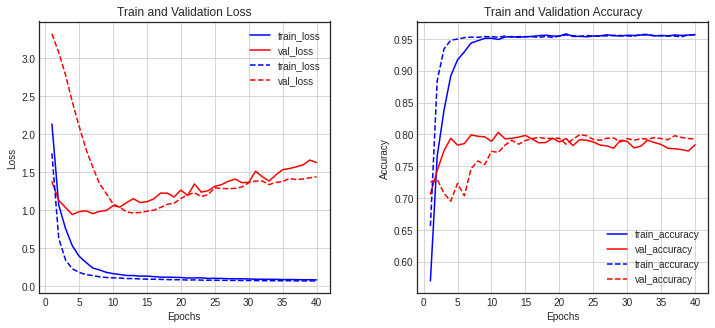

import matplotlib.pyplot as plt

history_dict = history.history

loss = history_dict['loss']

val_loss = history_dict['val_loss']

epochs = range(1, len(loss) + 1)

fig = plt.figure(figsize=(12, 5))

ax1 = fig.add_subplot(1, 2, 1)

ax1.plot(epochs, loss, color='blue', label='train_loss')

ax1.plot(epochs, val_loss, color='red', label='val_loss')

ax1.set_title('Train and Validation Loss')

ax1.set_xlabel('Epochs')

ax1.set_ylabel('Loss')

ax1.grid()

ax1.legend()

accuracy = history_dict['accuracy']

val_accuracy = history_dict['val_accuracy']

ax2 = fig.add_subplot(1, 2, 2)

ax2.plot(epochs, accuracy, color='blue', label='train_accuracy')

ax2.plot(epochs, val_accuracy, color='red', label='val_accuracy')

ax2.set_title('Train and Validation Accuracy')

ax2.set_xlabel('Epochs')

ax2.set_ylabel('Accuracy')

ax2.grid()

ax2.legend()

plt.show()

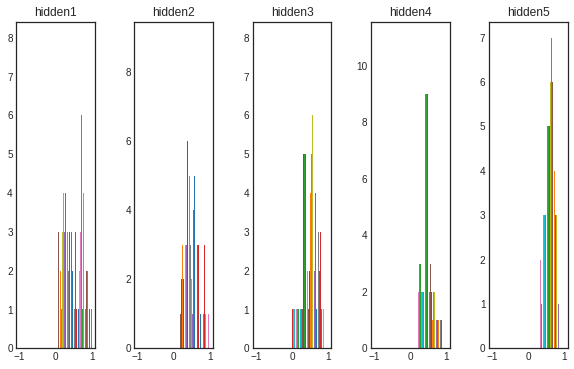

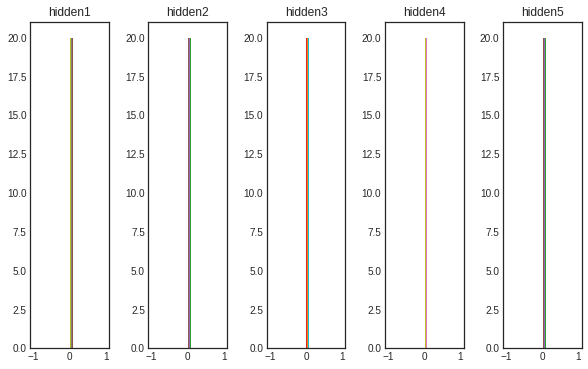

Analysis

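- As training proceeds, val_loss bottoms out around epoch 5 (0.9058) and then keeps rising to about 1.55, while val_accuracy stops improving and hovers between 0.78 and 0.80; training accuracy meanwhile stays around 0.95

- Overfitting!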

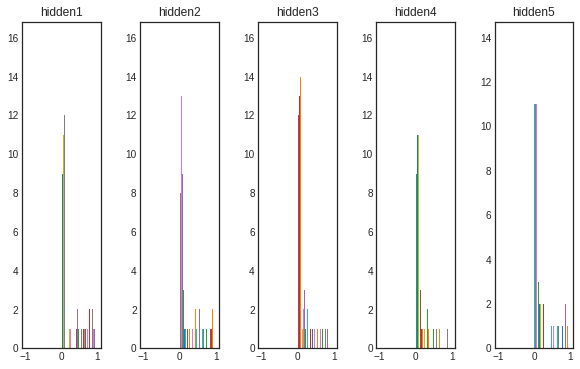

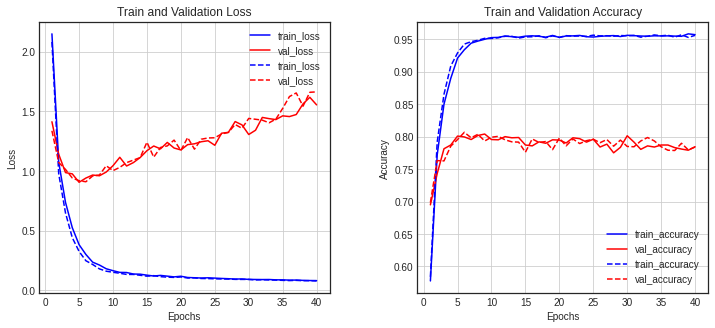
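The point where overfitting begins can also be read off numerically instead of from the plot. A minimal sketch: `best_epoch` is a hypothetical helper, and the lists hold the first 12 `val_loss` and `val_accuracy` values copied from the training log above (with a real run you would pass `history.history['val_loss']` instead).

```python
def best_epoch(values, mode='min'):
    # 1-based epoch at which the metric is best (lowest loss or highest accuracy)
    pick = min if mode == 'min' else max
    return values.index(pick(values)) + 1

# val_loss for epochs 1-12, copied from the log above
val_loss = [1.4131, 1.1408, 0.9869, 0.9763, 0.9058, 0.9412,
            0.9665, 0.9604, 0.9918, 1.0448, 1.1152, 1.0423]
# val_accuracy for the same epochs
val_accuracy = [0.6950, 0.7431, 0.7814, 0.7872, 0.8010, 0.7996,
                0.7956, 0.8019, 0.8041, 0.7956, 0.7952, 0.8001]

print(best_epoch(val_loss))                   # 5
print(best_epoch(val_accuracy, mode='max'))   # 9
```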

Weight initialization

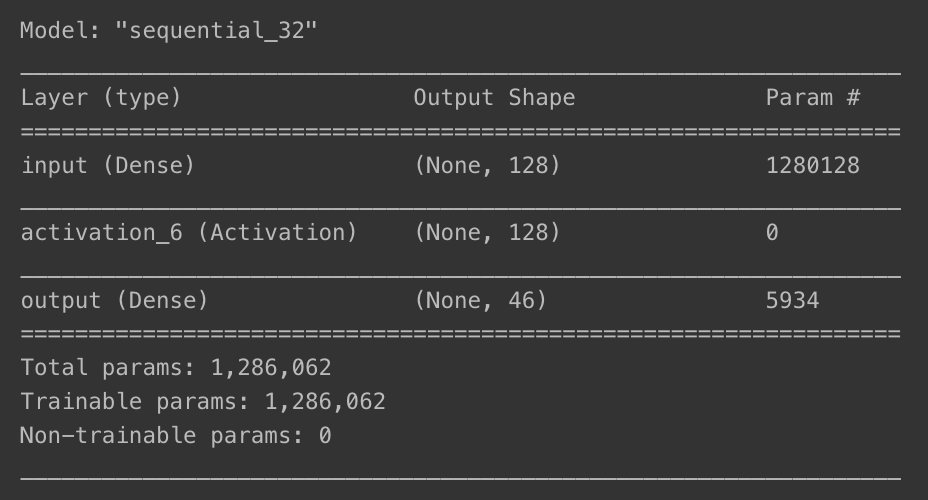

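Defining the build_model function

- By default, Keras Dense layers initialize their weights from a uniform distribution (glorot_uniform)

- Compare this default against other initialization methods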
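The default `glorot_uniform` draws each weight from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out)), which gives a variance of 2 / (fan_in + fan_out). A NumPy sketch of the same rule for the layer shapes in this model; it illustrates the formula and is not Keras' own implementation.

```python
import numpy as np

def glorot_uniform(fan_in, fan_out, rng):
    # Glorot/Xavier uniform: limit chosen so the variance is 2 / (fan_in + fan_out)
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out)), limit

rng = np.random.default_rng(0)
for fan_in, fan_out in [(10000, 128), (128, 128), (128, 46)]:
    w, limit = glorot_uniform(fan_in, fan_out, rng)
    print(f'{fan_in:>5} -> {fan_out:<3} limit={limit:.4f} std={w.std():.4f}')
```

The wide first layer gets the smallest limit (about 0.024), so activations do not blow up when 10,000 inputs are summed.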

def build_model(initializer):

model = models.Sequential()

model.add(layers.Dense(128,

activation='relu',

kernel_initializer=initializer,

input_shape=(10000, ),

name='input'))

model.add(layers.Dense(128,

activation='relu',

kernel_initializer=initializer,

name='hidden'))

model.add(layers.Dense(46,

activation='softmax',

name='output'))

model.compile(optimizer='rmsprop',

loss='categorical_crossentropy',

metrics=['accuracy'])

history = model.fit(x_train, y_train,

epochs=40,

batch_size=512,

validation_data=(x_test, y_test))

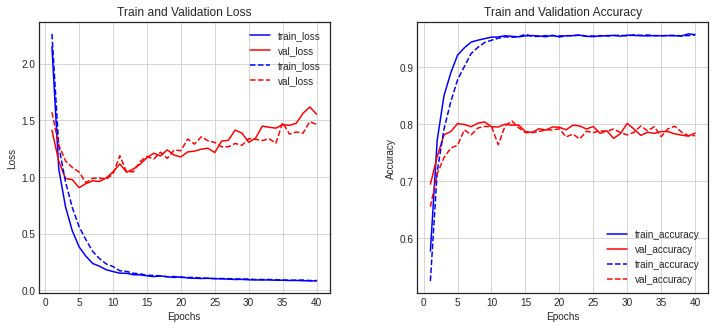

return historydiff_history- 모델 학습 히스토리 결과 비교를 위한 시각화

def diff_history(history1, history2):
    # solid lines: history1, dashed lines: history2
    history1_dict = history1.history
    h1_loss = history1_dict['loss']
    h1_val_loss = history1_dict['val_loss']

    history2_dict = history2.history
    h2_loss = history2_dict['loss']
    h2_val_loss = history2_dict['val_loss']

    epochs = range(1, len(h1_loss) + 1)
    fig = plt.figure(figsize=(12, 5))
    plt.subplots_adjust(wspace=0.3, hspace=0.3)

    # left panel: loss
    ax1 = fig.add_subplot(1, 2, 1)
    ax1.plot(epochs, h1_loss, 'b-', label='train_loss (1)')
    ax1.plot(epochs, h1_val_loss, 'r-', label='val_loss (1)')
    ax1.plot(epochs, h2_loss, 'b--', label='train_loss (2)')
    ax1.plot(epochs, h2_val_loss, 'r--', label='val_loss (2)')
    ax1.set_title('Train and Validation Loss')
    ax1.set_xlabel('Epochs')
    ax1.set_ylabel('Loss')
    ax1.grid()
    ax1.legend()

    # right panel: accuracy
    h1_accuracy = history1_dict['accuracy']
    h1_val_accuracy = history1_dict['val_accuracy']
    h2_accuracy = history2_dict['accuracy']
    h2_val_accuracy = history2_dict['val_accuracy']

    ax2 = fig.add_subplot(1, 2, 2)
    ax2.plot(epochs, h1_accuracy, 'b-', label='train_accuracy (1)')
    ax2.plot(epochs, h1_val_accuracy, 'r-', label='val_accuracy (1)')
    ax2.plot(epochs, h2_accuracy, 'b--', label='train_accuracy (2)')
    ax2.plot(epochs, h2_val_accuracy, 'r--', label='val_accuracy (2)')
    ax2.set_title('Train and Validation Accuracy')
    ax2.set_xlabel('Epochs')
    ax2.set_ylabel('Accuracy')
    ax2.grid()
    ax2.legend()

    plt.show()

Zero initialization

- Build and train a model whose hidden-layer weights are all initialized to 0

zero_history = build_model(initializers.Zeros())

Epoch 1/40

18/18 [==============================] - 1s 34ms/step - loss: 3.8105 - accuracy: 0.1207 - val_loss: 3.7958 - val_accuracy: 0.2110

Epoch 2/40

18/18 [==============================] - 0s 13ms/step - loss: 3.7843 - accuracy: 0.2069 - val_loss: 3.7728 - val_accuracy: 0.2110

Epoch 3/40

18/18 [==============================] - 0s 13ms/step - loss: 3.7616 - accuracy: 0.2170 - val_loss: 3.7507 - val_accuracy: 0.3620

Epoch 4/40

18/18 [==============================] - 0s 14ms/step - loss: 3.7393 - accuracy: 0.2504 - val_loss: 3.7289 - val_accuracy: 0.3620

Epoch 5/40

18/18 [==============================] - 0s 13ms/step - loss: 3.7173 - accuracy: 0.3164 - val_loss: 3.7072 - val_accuracy: 0.2110

Epoch 6/40

18/18 [==============================] - 0s 14ms/step - loss: 3.6953 - accuracy: 0.2440 - val_loss: 3.6857 - val_accuracy: 0.3620

Epoch 7/40

18/18 [==============================] - 0s 14ms/step - loss: 3.6735 - accuracy: 0.3322 - val_loss: 3.6644 - val_accuracy: 0.2110

Epoch 8/40

18/18 [==============================] - 0s 14ms/step - loss: 3.6521 - accuracy: 0.2541 - val_loss: 3.6434 - val_accuracy: 0.3620

Epoch 9/40

18/18 [==============================] - 0s 13ms/step - loss: 3.6308 - accuracy: 0.3378 - val_loss: 3.6225 - val_accuracy: 0.3620

Epoch 10/40

18/18 [==============================] - 0s 13ms/step - loss: 3.6096 - accuracy: 0.3118 - val_loss: 3.6017 - val_accuracy: 0.3620

Epoch 11/40

18/18 [==============================] - 0s 12ms/step - loss: 3.5886 - accuracy: 0.3416 - val_loss: 3.5811 - val_accuracy: 0.3620

Epoch 12/40

18/18 [==============================] - 0s 12ms/step - loss: 3.5678 - accuracy: 0.3517 - val_loss: 3.5608 - val_accuracy: 0.3620

Epoch 13/40

18/18 [==============================] - 0s 12ms/step - loss: 3.5471 - accuracy: 0.3517 - val_loss: 3.5406 - val_accuracy: 0.3620

Epoch 14/40

18/18 [==============================] - 0s 12ms/step - loss: 3.5267 - accuracy: 0.3517 - val_loss: 3.5206 - val_accuracy: 0.3620

Epoch 15/40

18/18 [==============================] - 0s 13ms/step - loss: 3.5065 - accuracy: 0.3517 - val_loss: 3.5009 - val_accuracy: 0.3620

Epoch 16/40

18/18 [==============================] - 0s 12ms/step - loss: 3.4866 - accuracy: 0.3517 - val_loss: 3.4813 - val_accuracy: 0.3620

Epoch 17/40

18/18 [==============================] - 0s 13ms/step - loss: 3.4668 - accuracy: 0.3517 - val_loss: 3.4619 - val_accuracy: 0.3620

Epoch 18/40

18/18 [==============================] - 0s 13ms/step - loss: 3.4472 - accuracy: 0.3517 - val_loss: 3.4428 - val_accuracy: 0.3620

Epoch 19/40

18/18 [==============================] - 0s 13ms/step - loss: 3.4278 - accuracy: 0.3517 - val_loss: 3.4237 - val_accuracy: 0.3620

Epoch 20/40

18/18 [==============================] - 0s 12ms/step - loss: 3.4086 - accuracy: 0.3517 - val_loss: 3.4050 - val_accuracy: 0.3620

Epoch 21/40

18/18 [==============================] - 0s 13ms/step - loss: 3.3897 - accuracy: 0.3517 - val_loss: 3.3865 - val_accuracy: 0.3620

Epoch 22/40

18/18 [==============================] - 0s 13ms/step - loss: 3.3709 - accuracy: 0.3517 - val_loss: 3.3682 - val_accuracy: 0.3620

Epoch 23/40

18/18 [==============================] - 0s 13ms/step - loss: 3.3525 - accuracy: 0.3517 - val_loss: 3.3500 - val_accuracy: 0.3620

Epoch 24/40

18/18 [==============================] - 0s 12ms/step - loss: 3.3341 - accuracy: 0.3517 - val_loss: 3.3321 - val_accuracy: 0.3620

Epoch 25/40

18/18 [==============================] - 0s 13ms/step - loss: 3.3159 - accuracy: 0.3517 - val_loss: 3.3142 - val_accuracy: 0.3620

Epoch 26/40

18/18 [==============================] - 0s 13ms/step - loss: 3.2980 - accuracy: 0.3517 - val_loss: 3.2967 - val_accuracy: 0.3620

Epoch 27/40

18/18 [==============================] - 0s 12ms/step - loss: 3.2803 - accuracy: 0.3517 - val_loss: 3.2793 - val_accuracy: 0.3620

Epoch 28/40

18/18 [==============================] - 0s 13ms/step - loss: 3.2627 - accuracy: 0.3517 - val_loss: 3.2620 - val_accuracy: 0.3620

Epoch 29/40

18/18 [==============================] - 0s 13ms/step - loss: 3.2454 - accuracy: 0.3517 - val_loss: 3.2450 - val_accuracy: 0.3620

Epoch 30/40

18/18 [==============================] - 0s 12ms/step - loss: 3.2282 - accuracy: 0.3517 - val_loss: 3.2281 - val_accuracy: 0.3620

Epoch 31/40

18/18 [==============================] - 0s 12ms/step - loss: 3.2112 - accuracy: 0.3517 - val_loss: 3.2114 - val_accuracy: 0.3620

Epoch 32/40

18/18 [==============================] - 0s 12ms/step - loss: 3.1945 - accuracy: 0.3517 - val_loss: 3.1949 - val_accuracy: 0.3620

Epoch 33/40

18/18 [==============================] - 0s 12ms/step - loss: 3.1779 - accuracy: 0.3517 - val_loss: 3.1786 - val_accuracy: 0.3620

Epoch 34/40

18/18 [==============================] - 0s 13ms/step - loss: 3.1614 - accuracy: 0.3517 - val_loss: 3.1623 - val_accuracy: 0.3620

Epoch 35/40

18/18 [==============================] - 0s 13ms/step - loss: 3.1452 - accuracy: 0.3517 - val_loss: 3.1463 - val_accuracy: 0.3620

Epoch 36/40

18/18 [==============================] - 0s 13ms/step - loss: 3.1291 - accuracy: 0.3517 - val_loss: 3.1305 - val_accuracy: 0.3620

Epoch 37/40

18/18 [==============================] - 0s 12ms/step - loss: 3.1133 - accuracy: 0.3517 - val_loss: 3.1148 - val_accuracy: 0.3620

Epoch 38/40

18/18 [==============================] - 0s 12ms/step - loss: 3.0976 - accuracy: 0.3517 - val_loss: 3.0993 - val_accuracy: 0.3620

Epoch 39/40

18/18 [==============================] - 0s 13ms/step - loss: 3.0820 - accuracy: 0.3517 - val_loss: 3.0839 - val_accuracy: 0.3620

Epoch 40/40

18/18 [==============================] - 0s 12ms/step - loss: 3.0666 - accuracy: 0.3517 - val_loss: 3.0687 - val_accuracy: 0.3620

- Training results: baseline model vs. zero-initialized model

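- The zero-initialized model does not learn properly: the loss falls only slowly from about 3.81, and accuracy freezes at 0.3517 (train) and 0.3620 (validation), consistent with the model predicting the same topic for every article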

diff_history(history, zero_history)

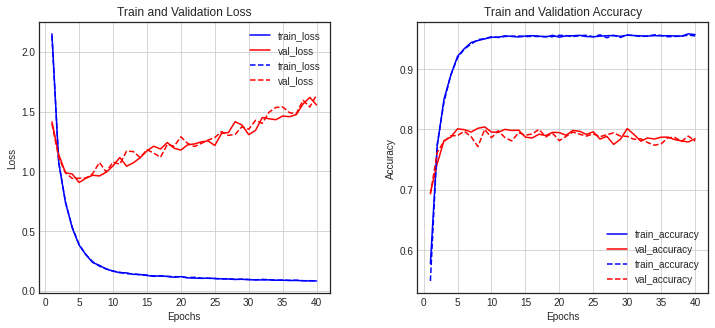
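Why the zero-initialized model stalls can be reproduced with a toy network in NumPy. This is a minimal sketch under simplifying assumptions (one hidden ReLU layer, no biases, a hypothetical `toy_grad_w1` helper), not the Keras model itself. With an all-zero first layer every pre-activation is 0, and because the ReLU derivative at 0 is taken as 0 (as TensorFlow does), no gradient reaches those weights. If the hidden units instead all start with identical weights, they receive identical gradients and remain copies of each other. The last line checks the starting loss: equal logits give a uniform softmax over 46 classes, so the cross-entropy is ln(46), close to the 3.81 in the first epoch of the zero-init log.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def toy_grad_w1(W1, W2, x, y_onehot):
    # forward: z = x @ W1, h = ReLU(z), p = softmax(h @ W2), mean cross-entropy loss
    z = x @ W1
    h = np.maximum(z, 0.0)
    p = softmax(h @ W2)
    d_logits = (p - y_onehot) / x.shape[0]
    d_h = d_logits @ W2.T
    d_z = d_h * (z > 0)          # ReLU derivative, 0 at z == 0
    return x.T @ d_z             # gradient w.r.t. W1, shape (n_features, n_hidden)

rng = np.random.default_rng(0)
x = rng.random((8, 5))                       # 8 samples, 5 non-negative features
y = np.eye(3)[rng.integers(0, 3, size=8)]    # 3 classes, one-hot labels

# 1) all-zero first layer (random output layer): the gradient is exactly zero
g_zero = toy_grad_w1(np.zeros((5, 4)), rng.normal(size=(4, 3)), x, y)
print(np.abs(g_zero).max())   # 0.0

# 2) identical hidden units (same incoming and outgoing weights): identical gradient columns
w_out = rng.normal(size=(1, 3))
g_same = toy_grad_w1(np.full((5, 4), 0.1), np.repeat(w_out, 4, axis=0), x, y)
print(np.allclose(g_same, g_same[:, :1]))   # True

# 3) starting loss when all 46 logits are equal
print(np.log(46))   # about 3.8286
```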

Normal distribution initialization
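Called with no arguments, `initializers.RandomNormal()` samples from a normal distribution with mean 0.0 and stddev 0.05 (its Keras defaults). For the 10000 → 128 first layer that spread is several times wider than the default Glorot uniform, whose standard deviation is sqrt(2 / (fan_in + fan_out)). A NumPy sketch of the comparison; the sampling here is done with NumPy, not with Keras itself.

```python
import numpy as np

fan_in, fan_out = 10000, 128
rng = np.random.default_rng(0)

# RandomNormal() defaults: mean 0.0, stddev 0.05
w_normal = rng.normal(loc=0.0, scale=0.05, size=(fan_in, fan_out))

# standard deviation of the default glorot_uniform for the same shape
glorot_std = np.sqrt(2.0 / (fan_in + fan_out))

print(f'RandomNormal std:   {w_normal.std():.4f}')
print(f'glorot_uniform std: {glorot_std:.4f}')
print(f'ratio: {w_normal.std() / glorot_std:.2f}')
```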

normal_history = build_model(initializers.RandomNormal())

Epoch 1/40

18/18 [==============================] - 1s 33ms/step - loss: 2.2645 - accuracy: 0.5253 - val_loss: 1.5718 - val_accuracy: 0.6554

Epoch 2/40

18/18 [==============================] - 0s 15ms/step - loss: 1.2771 - accuracy: 0.7167 - val_loss: 1.2849 - val_accuracy: 0.7133

Epoch 3/40

18/18 [==============================] - 0s 15ms/step - loss: 0.9558 - accuracy: 0.7914 - val_loss: 1.1424 - val_accuracy: 0.7413

Epoch 4/40

18/18 [==============================] - 0s 14ms/step - loss: 0.7299 - accuracy: 0.8392 - val_loss: 1.0844 - val_accuracy: 0.7582

Epoch 5/40

18/18 [==============================] - 0s 13ms/step - loss: 0.5612 - accuracy: 0.8779 - val_loss: 1.0442 - val_accuracy: 0.7631

Epoch 6/40

18/18 [==============================] - 0s 13ms/step - loss: 0.4467 - accuracy: 0.9011 - val_loss: 0.9503 - val_accuracy: 0.7903

Epoch 7/40

18/18 [==============================] - 0s 14ms/step - loss: 0.3424 - accuracy: 0.9238 - val_loss: 0.9890 - val_accuracy: 0.7814

Epoch 8/40

18/18 [==============================] - 0s 13ms/step - loss: 0.2796 - accuracy: 0.9349 - val_loss: 0.9906 - val_accuracy: 0.7934

Epoch 9/40

18/18 [==============================] - 0s 13ms/step - loss: 0.2319 - accuracy: 0.9433 - val_loss: 0.9811 - val_accuracy: 0.7961

Epoch 10/40

18/18 [==============================] - 0s 13ms/step - loss: 0.2091 - accuracy: 0.9472 - val_loss: 1.0250 - val_accuracy: 0.7961

Epoch 11/40

18/18 [==============================] - 0s 14ms/step - loss: 0.1720 - accuracy: 0.9508 - val_loss: 1.1883 - val_accuracy: 0.7640

Epoch 12/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1659 - accuracy: 0.9532 - val_loss: 1.0460 - val_accuracy: 0.7970

Epoch 13/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1497 - accuracy: 0.9526 - val_loss: 1.0462 - val_accuracy: 0.8059

Epoch 14/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1439 - accuracy: 0.9534 - val_loss: 1.1367 - val_accuracy: 0.7939

Epoch 15/40

18/18 [==============================] - 0s 12ms/step - loss: 0.1316 - accuracy: 0.9578 - val_loss: 1.1815 - val_accuracy: 0.7854

Epoch 16/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1306 - accuracy: 0.9540 - val_loss: 1.1586 - val_accuracy: 0.7854

Epoch 17/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1271 - accuracy: 0.9540 - val_loss: 1.2187 - val_accuracy: 0.7872

Epoch 18/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1186 - accuracy: 0.9555 - val_loss: 1.1646 - val_accuracy: 0.7903

Epoch 19/40

18/18 [==============================] - 0s 12ms/step - loss: 0.1206 - accuracy: 0.9549 - val_loss: 1.2377 - val_accuracy: 0.7898

Epoch 20/40

18/18 [==============================] - 0s 14ms/step - loss: 0.1124 - accuracy: 0.9549 - val_loss: 1.2325 - val_accuracy: 0.7921

Epoch 21/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1125 - accuracy: 0.9550 - val_loss: 1.3360 - val_accuracy: 0.7778

Epoch 22/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1130 - accuracy: 0.9552 - val_loss: 1.2904 - val_accuracy: 0.7827

Epoch 23/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1071 - accuracy: 0.9568 - val_loss: 1.3552 - val_accuracy: 0.7743

Epoch 24/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1059 - accuracy: 0.9545 - val_loss: 1.3197 - val_accuracy: 0.7876

Epoch 25/40

18/18 [==============================] - 0s 14ms/step - loss: 0.1018 - accuracy: 0.9548 - val_loss: 1.3054 - val_accuracy: 0.7854

Epoch 26/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1033 - accuracy: 0.9554 - val_loss: 1.2666 - val_accuracy: 0.7885

Epoch 27/40

18/18 [==============================] - 0s 14ms/step - loss: 0.1017 - accuracy: 0.9547 - val_loss: 1.2670 - val_accuracy: 0.7876

Epoch 28/40

18/18 [==============================] - 0s 13ms/step - loss: 0.1002 - accuracy: 0.9555 - val_loss: 1.2960 - val_accuracy: 0.7916

Epoch 29/40

18/18 [==============================] - 0s 15ms/step - loss: 0.0976 - accuracy: 0.9560 - val_loss: 1.2800 - val_accuracy: 0.7854

Epoch 30/40

18/18 [==============================] - 0s 13ms/step - loss: 0.0997 - accuracy: 0.9548 - val_loss: 1.3389 - val_accuracy: 0.7814

Epoch 31/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0937 - accuracy: 0.9568 - val_loss: 1.3335 - val_accuracy: 0.7858

Epoch 32/40

18/18 [==============================] - 0s 13ms/step - loss: 0.0945 - accuracy: 0.9558 - val_loss: 1.3215 - val_accuracy: 0.7970

Epoch 33/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0946 - accuracy: 0.9566 - val_loss: 1.3381 - val_accuracy: 0.7885

Epoch 34/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0918 - accuracy: 0.9552 - val_loss: 1.2981 - val_accuracy: 0.7956

Epoch 35/40

18/18 [==============================] - 0s 13ms/step - loss: 0.0908 - accuracy: 0.9548 - val_loss: 1.4795 - val_accuracy: 0.7783

Epoch 36/40

18/18 [==============================] - 0s 13ms/step - loss: 0.0882 - accuracy: 0.9557 - val_loss: 1.3786 - val_accuracy: 0.7921

Epoch 37/40

18/18 [==============================] - 0s 13ms/step - loss: 0.0883 - accuracy: 0.9559 - val_loss: 1.3973 - val_accuracy: 0.7965

Epoch 38/40

18/18 [==============================] - 0s 14ms/step - loss: 0.0900 - accuracy: 0.9549 - val_loss: 1.3873 - val_accuracy: 0.7863

Epoch 39/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0853 - accuracy: 0.9555 - val_loss: 1.4894 - val_accuracy: 0.7801

Epoch 40/40

18/18 [==============================] - 0s 12ms/step - loss: 0.0858 - accuracy: 0.9564 - val_loss: 1.4638 - val_accuracy: 0.7801

- Results are similar to the baseline model, though learning is slightly slower.

diff_history(history, normal_history)  # compare this run's training curves with the baseline model's (normal_history)
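`diff_history` itself is not shown in this section. The sketch below is a minimal, hedged illustration of the same kind of comparison done numerically instead of visually. It assumes only the plain dict that Keras stores in `History.history` after `model.fit` (keys such as `loss`, `accuracy`, `val_loss`, `val_accuracy` when `metrics=['accuracy']`). The helper names `best_epoch`, `diff_metric`, and `report` are hypothetical and are not the original `diff_history`.

```python
from typing import Dict, List, Tuple

# Hypothetical helpers (NOT the original diff_history). They operate on the
# plain dict stored in a Keras History object, e.g. history.history.
HistoryDict = Dict[str, List[float]]


def best_epoch(hist: HistoryDict, metric: str = "val_loss") -> Tuple[int, float]:
    """Return (epoch, value) of the best value of `metric`.

    Epochs are 1-indexed to match the "Epoch N/40" lines in the Keras log.
    Metrics whose name contains "loss" are minimized; all others
    (e.g. accuracy) are maximized.
    """
    values = hist[metric]
    pick = min if "loss" in metric else max
    best_idx = pick(range(len(values)), key=values.__getitem__)
    return best_idx + 1, values[best_idx]


def diff_metric(hist_a: HistoryDict, hist_b: HistoryDict,
                metric: str = "val_loss") -> List[float]:
    """Per-epoch difference hist_a[metric] - hist_b[metric].

    zip() stops at the shorter run, so only shared epochs are compared.
    """
    return [a - b for a, b in zip(hist_a[metric], hist_b[metric])]


def report(hist_a: HistoryDict, hist_b: HistoryDict,
           metric: str = "val_loss") -> str:
    """One-line text summary comparing the best epoch of two runs."""
    ea, va = best_epoch(hist_a, metric)
    eb, vb = best_epoch(hist_b, metric)
    return (f"{metric}: run A best {va:.4f} @ epoch {ea}, "
            f"run B best {vb:.4f} @ epoch {eb}")
```

Assuming `history` and `normal_history` are the `History` objects returned by the two `model.fit` calls, `print(report(history.history, normal_history.history))` would summarize where each run reached its lowest `val_loss`. Passing `metric="val_accuracy"` compares the best validation accuracy instead. Working on the dict rather than on plots keeps the comparison usable without matplotlib.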