2-1. Introduction

1. Session-Based Recommendation

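- Recommends the products a customer is most likely to want right now

- Uses session data to predict "the user's next click" or "the item the user will buy" and recommends accordingly

- Session

- Data holding the important information generated while a user uses a service

- Stored on the server

- User behavior data ➡️ passes through the user's browser ➡️ and is stored as a cookie

- The cookie and the session interact, exchanging information!

- Session: sequence data of the user's actions until the browser is closed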

Reference: [WEB] 쿠키, 세션이란? (What are cookies and sessions?)


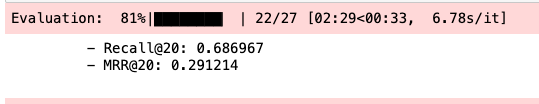

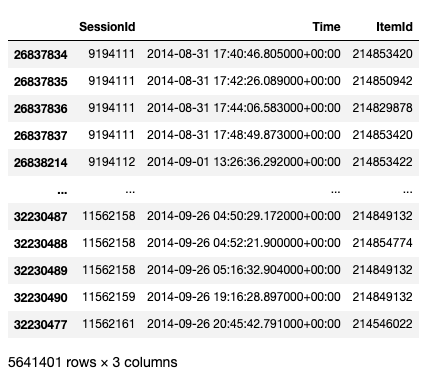

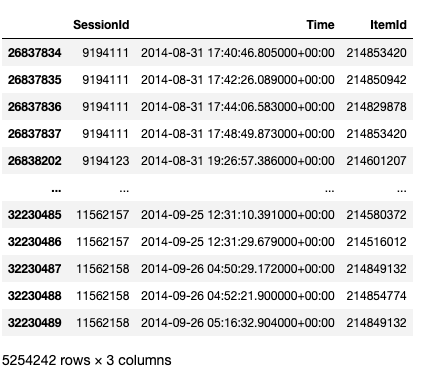

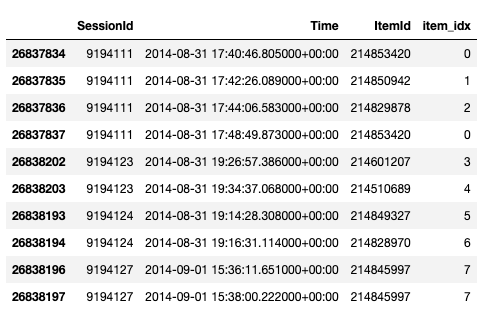

Data example

- A user with session 9194111 viewed 4 items over 8 minutes

- Items: 214853420, 214850942, 214829878, 214853420

Task

- When the user views 214853420 -> recommend 214850942

- Then, when the user views 214850942 -> recommend 214829878
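The Task above is a next-item prediction problem: every pair of consecutive clicks in a session becomes one (input, target) example. A minimal pandas sketch, assuming the clicks are already sorted by time within each session; `next_item_pairs` is a hypothetical helper, not part of the course code:

```python
import pandas as pd

# The example session from above: four item views, assumed sorted by time
clicks = pd.DataFrame({
    'SessionId': [9194111] * 4,
    'ItemId': [214853420, 214850942, 214829878, 214853420],
})


def next_item_pairs(df):
    """Return (current item, next item) pairs built from consecutive clicks in each session."""
    pairs = []
    for _, items in df.groupby('SessionId', sort=False)['ItemId']:
        seq = items.tolist()
        pairs.extend(zip(seq[:-1], seq[1:]))  # click t is the input, click t+1 the target
    return pairs


for current, nxt in next_item_pairs(clicks):
    print(f'{current} -> recommend {nxt}')
```

The repeated view of 214853420 at the end simply becomes another target; nothing here removes duplicate items.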

Dataset: E-Commerce

- Create the project folder & symlink the data into it

```shell
$ mkdir -p ~/aiffel/yoochoose/data
$ ln -s ~/data/* ~/aiffel/yoochoose/data
```

- Print the dataset README

```python
import os

readme_path = os.path.join(os.getenv('HOME'), 'aiffel/yoochoose/data/dataset-README.txt')

# Print the README line by line
with open(readme_path, 'r') as f:
    for line in f:
        print(line, end='')
```

SUMMARY

================================================================================

This dataset was constructed by YOOCHOOSE GmbH to support participants in the RecSys Challenge 2015.

See http://recsys.yoochoose.net for details about the challenge.

The YOOCHOOSE dataset contain a collection of sessions from a retailer, where each session

is encapsulating the click events that the user performed in the session.

For some of the sessions, there are also buy events; means that the session ended

with the user bought something from the web shop. The data was collected during several

months in the year of 2014, reflecting the clicks and purchases performed by the users

of an on-line retailer in Europe. To protect end users privacy, as well as the retailer,

all numbers have been modified. Do not try to reveal the identity of the retailer.

LICENSE

================================================================================

This dataset is licensed under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0

International License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

YOOCHOOSE cannot guarantee the completeness and correctness of the data or the validity

of results based on the use of the dataset as it was collected by implicit tracking of a website.

If you have any further questions or comments, please contact YooChoose <support@YooChoose.com>.

The data is provided "as it is" and there is no obligation of YOOCHOOSE to correct it,

improve it or to provide additional information about it.

CLICKS DATASET FILE DESCRIPTION

================================================================================

The file yoochoose-clicks.dat comprising the clicks of the users over the items.

Each record/line in the file has the following fields/format: Session ID, Timestamp, Item ID, Category

-Session ID – the id of the session. In one session there are one or many clicks. Could be represented as an integer number.

-Timestamp – the time when the click occurred. Format of YYYY-MM-DDThh:mm:ss.SSSZ

-Item ID – the unique identifier of the item that has been clicked. Could be represented as an integer number.

-Category – the context of the click. The value "S" indicates a special offer, "0" indicates a missing value, a number between 1 to 12 indicates a real category identifier,

any other number indicates a brand. E.g. if an item has been clicked in the context of a promotion or special offer then the value will be "S", if the context was a brand i.e BOSCH,

then the value will be an 8-10 digits number. If the item has been clicked under regular category, i.e. sport, then the value will be a number between 1 to 12.

BUYS DATSET FILE DESCRIPTION

================================================================================

The file yoochoose-buys.dat comprising the buy events of the users over the items.

Each record/line in the file has the following fields: Session ID, Timestamp, Item ID, Price, Quantity

-Session ID - the id of the session. In one session there are one or many buying events. Could be represented as an integer number.

-Timestamp - the time when the buy occurred. Format of YYYY-MM-DDThh:mm:ss.SSSZ

-Item ID – the unique identifier of item that has been bought. Could be represented as an integer number.

-Price – the price of the item. Could be represented as an integer number.

-Quantity – the quantity in this buying. Could be represented as an integer number.

TEST DATASET FILE DESCRIPTION

================================================================================

The file yoochoose-test.dat comprising only clicks of users over items.

This file served as a test file in the RecSys challenge 2015.

The structure is identical to the file yoochoose-clicks.dat but you will not find the

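corresponding buying events to these sessions in the yoochoose-buys.dat file.

- What the dataset description tells us

- No information about the users (gender, age, location, last access date, purchase history, ...)

- No information about the items either (what the product actually is, photos, description, price, ...)

- When user information is available: Sequential Recommendation

- This is researched as Context-Aware recommendation (applied to Sequential Recommendation models)

- Characteristics of E-Commerce data

- Many users are not logged in

- Even logged-in users tend to browse with very different intents on each visit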

2-2. Data Preprocessing

1. Data Load

import datetime as dt

from pathlib import Path

import os

import numpy as np

import pandas as pd

import warnings

warnings.filterwarnings('ignore')data_path = Path(os.getenv('HOME')+'/aiffel/yoochoose/data')

train_path = data_path / 'yoochoose-clicks.dat'

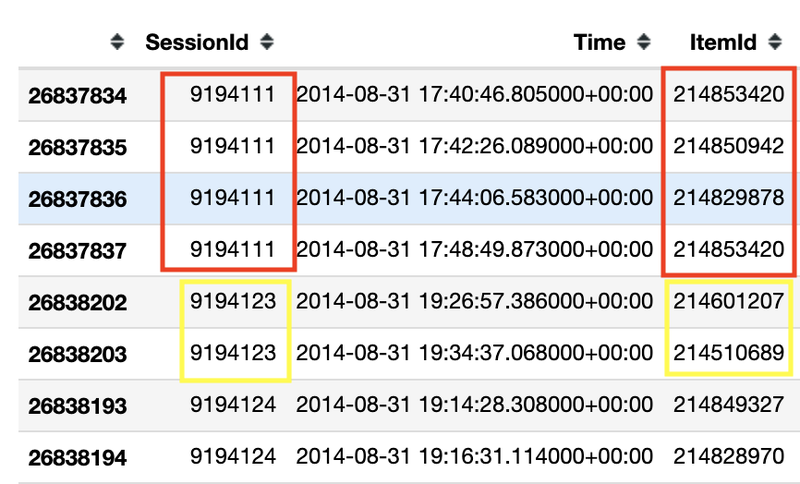

train_path

- Click data: use the Session ID, Timestamp, and Item ID columns

def load_data(data_path: Path, nrows=None):

data = pd.read_csv(data_path, sep=',', header=None, usecols=[0, 1, 2],

parse_dates=[1], dtype={0: np.int32, 2: np.int32}, nrows=nrows)

data.columns = ['SessionId', 'Time', 'ItemId']

return datadata = load_data(train_path, None)

data.sort_values(['SessionId', 'Time'], inplace=True) # data를 id와 시간 순서로 정렬

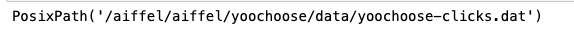

data

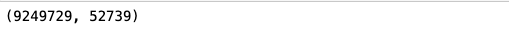

- Check the number of sessions and items

- Sessions: about 9 million (this does not mean 9 million users, because one user can create several sessions)

- Items: about 50,000

```python
data['SessionId'].nunique(), data['ItemId'].nunique()
```

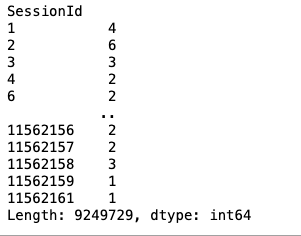

2. Session Length

- Check how many clicks each session contains

- session_length = the number of rows sharing a SessionId, i.e., how many actions the user took in that session

```python
session_length = data.groupby('SessionId').size()
session_length
```

- Check the distribution of session lengths

- Typically 2-3

- 99.9% of sessions: length 41 or less

```python
session_length.median(), session_length.mean()
session_length.min(), session_length.max()
session_length.quantile(0.999)
```

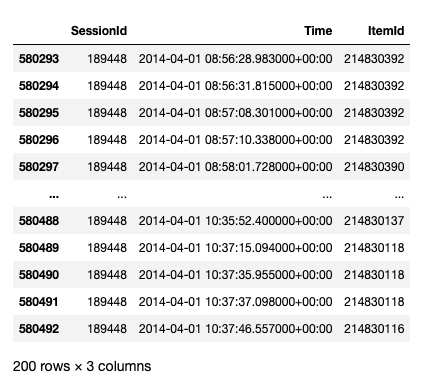

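- Anomaly detected: a session of length 200

- Clicks at short intervals continue for about 1 hour 30 minutes

- We need to decide whether to remove such anomalous data or keep it

```python
long_session = session_length[session_length == 200].index[0]
data[data['SessionId'] == long_session]
```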

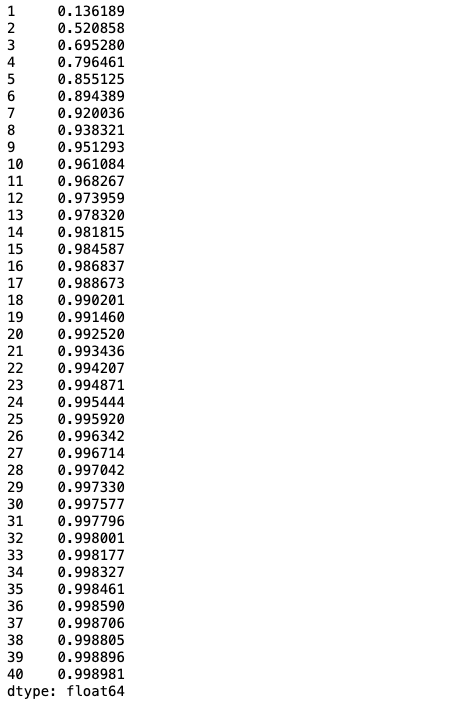

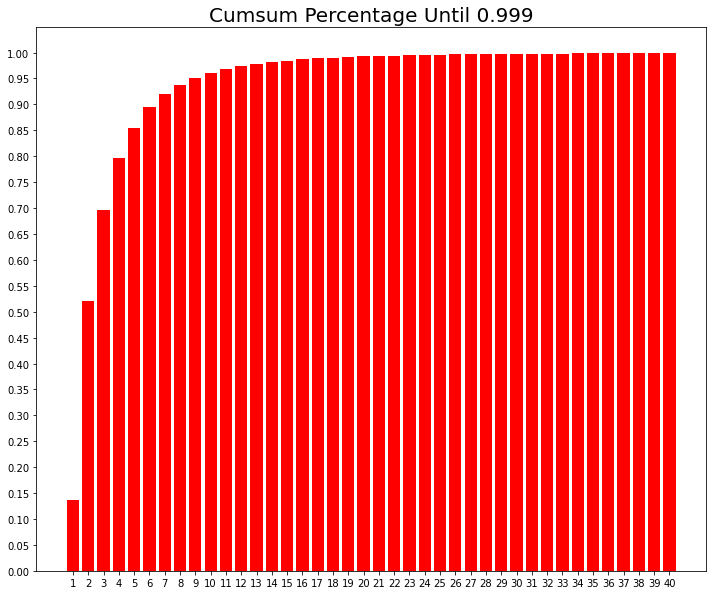

- Cumulative distribution of session lengths, up to the 99.9th percentile

```python
length_count = session_length.groupby(session_length).size()  # number of sessions per length
length_percent_cumsum = length_count.cumsum() / length_count.sum()
length_percent_cumsum_999 = length_percent_cumsum[length_percent_cumsum < 0.999]
length_percent_cumsum_999
```

```python
import matplotlib.pyplot as plt

plt.figure(figsize=(12, 10))
plt.bar(x=length_percent_cumsum_999.index,
        height=length_percent_cumsum_999, color='red')
plt.xticks(length_percent_cumsum_999.index)
plt.yticks(np.arange(0, 1.01, 0.05))
plt.title('Cumsum Percentage Until 0.999', size=20)
plt.show()
```

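Recommender systems often use Matrix Factorization models, which represent the user-item relation matrix as the product of a user matrix and an item matrix.

- What if we applied this to the user - clicked-item relation?

- The matrix would be sparse ➡️ the model is likely not to train well

- Session data does not let us organize interactions by user ID (users are not logged in)

- Treating each session as one user makes the matrix far sparser than a usual user-item matrix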
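One way to quantify this sparsity is the density of the session × item matrix: the number of distinct (session, item) pairs divided by the number of cells. A small sketch on toy data; the function name `interaction_density` is hypothetical:

```python
import pandas as pd


def interaction_density(df, row_key='SessionId', col_key='ItemId'):
    """Fraction of non-zero cells in the row_key x col_key interaction matrix."""
    n_rows = df[row_key].nunique()
    n_cols = df[col_key].nunique()
    n_filled = len(df[[row_key, col_key]].drop_duplicates())  # distinct (row, col) pairs
    return n_filled / (n_rows * n_cols)


# Toy click log: 3 sessions, 4 items, 5 distinct (session, item) pairs
toy = pd.DataFrame({'SessionId': [1, 1, 2, 2, 3],
                    'ItemId':    [10, 20, 10, 30, 40]})
print(f'density = {interaction_density(toy):.3f}')  # 5 / 12 -> 0.417
```

With roughly 9 million sessions, about 50,000 items, and only 2-3 clicks in a typical session, each row of the real matrix would hold only a handful of non-zero entries out of about 50,000 columns.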

3. Session Time

- When building a recommender system, learning the most recent consumption trends is important!

- Time data handled in recommender systems

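- When the data was created

- Time of a specific user action (click, purchase, etc.)

- Time of day of the access (morning, afternoon, dawn)

- Session duration

- Day of the week of the access

- Season

- Last access time

- Time elapsed since an item was added to the cart

- Changes in item popularity

- ...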
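Several of the time features listed above can be derived directly from a `Time` column with pandas' `.dt` accessor. A sketch on a toy click log; the hour cut-offs in `time_of_day` (and the extra 'evening' bucket) are assumptions for illustration:

```python
import pandas as pd

clicks = pd.DataFrame({
    'SessionId': [1, 1, 1, 2, 2],
    'Time': pd.to_datetime(['2014-09-28 23:50', '2014-09-28 23:55',
                            '2014-09-29 00:02', '2014-09-29 14:00',
                            '2014-09-29 14:30']),
})


def time_of_day(hour):
    """Bucket an hour (0-23) into a coarse label; the cut-offs are assumptions."""
    if hour < 6:
        return 'dawn'
    if hour < 12:
        return 'morning'
    if hour < 18:
        return 'afternoon'
    return 'evening'


clicks['hour'] = clicks['Time'].dt.hour
clicks['weekday'] = clicks['Time'].dt.day_name()          # day of the week of the access
clicks['time_of_day'] = clicks['hour'].map(time_of_day)  # time-of-day bucket

# Session duration: time between the first and the last click of each session
by_session = clicks.groupby('SessionId')['Time']
dwell = by_session.max() - by_session.min()

print(clicks)
print(dwell)
```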


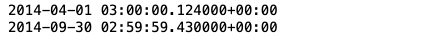

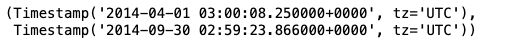

Checking the time-related information in the data

- About 6 months of data

```python
oldest, latest = data['Time'].min(), data['Time'].max()
print(oldest)
print(latest)
```

- Use only the most recent 1 month of data

- Date differences are computed with `timedelta` from the datetime library

```python
month_ago = latest - dt.timedelta(30)  # 30 days before the latest timestamp
data = data[data['Time'] > month_ago]  # keep only the data after that date
data
```

4. Data Cleansing

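- Remove sessions of length 1

- The model predicts the next click from the clicks a user has already made, so a session needs at least 2 clicks

- Also remove items with too few clicks

- Removing unpopular items after removing short sessions can create new length-1 sessions

- So repeat both removals in a loop until nothing changes!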

```python
def cleanse_recursive(data: pd.DataFrame, shortest, least_click) -> pd.DataFrame:
    # Alternate both filters until a full pass removes nothing
    while True:
        before_len = len(data)
        data = cleanse_short_session(data, shortest)
        data = cleanse_unpopular_item(data, least_click)
        after_len = len(data)
        if before_len == after_len:
            break
    return data


def cleanse_short_session(data: pd.DataFrame, shortest):
    # Keep only sessions with at least `shortest` clicks
    session_len = data.groupby('SessionId').size()
    session_use = session_len[session_len >= shortest].index
    data = data[data['SessionId'].isin(session_use)]
    return data


def cleanse_unpopular_item(data: pd.DataFrame, least_click):
    # Keep only items clicked at least `least_click` times
    item_popular = data.groupby('ItemId').size()
    item_use = item_popular[item_popular >= least_click].index
    data = data[data['ItemId'].isin(item_use)]
    return data


data = cleanse_recursive(data, shortest=2, least_click=5)
data
```

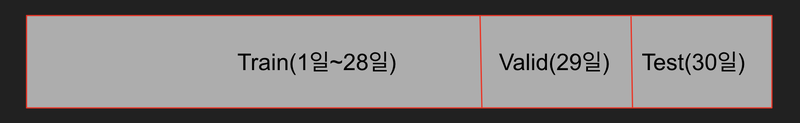
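Why a single pass is not enough can be seen on a tiny example. The helpers below are hypothetical stand-ins for `cleanse_short_session` and `cleanse_unpopular_item`, written with `value_counts` for brevity, and both thresholds are set to 2 so the effect shows up on six rows:

```python
import pandas as pd


def drop_short_sessions(df, shortest):
    sizes = df['SessionId'].map(df['SessionId'].value_counts())  # clicks per row's session
    return df[sizes >= shortest]


def drop_rare_items(df, least_click):
    counts = df['ItemId'].map(df['ItemId'].value_counts())  # clicks per row's item
    return df[counts >= least_click]


def session_sizes(df):
    return {int(s): int(n) for s, n in df.groupby('SessionId').size().items()}


toy = pd.DataFrame({'SessionId': [1, 1, 2, 2, 3, 3],
                    'ItemId':    ['A', 'B', 'A', 'C', 'B', 'A']})

# One pass: item C (1 click) is dropped, which leaves session 2 with a single click
one_pass = drop_rare_items(drop_short_sessions(toy, 2), 2)
print(session_sizes(one_pass))  # {1: 2, 2: 1, 3: 2}

# Repeat until a pass changes nothing, as cleanse_recursive does
cleaned = toy
while True:
    before = len(cleaned)
    cleaned = drop_rare_items(drop_short_sessions(cleaned, 2), 2)
    if len(cleaned) == before:
        break
print(session_sizes(cleaned))  # {1: 2, 3: 2}
```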

5. Train / Valid / Test split

- 테스트셋 확인

- Training set 및 기간이 겹치고 있음

- "지금"을 잘 예측해야 하기 때문에 1달 전에 성능이 좋았던 모델이 지금 성능이 좋다고 말할 수 없음

test_path = data_path / 'yoochoose-test.dat'

test= load_data(test_path)

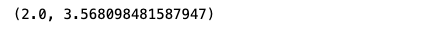

test['Time'].min(), test['Time'].max()

- In Session-Based Recommendation...

- The data is split into Train / Valid / Test sets by time period

- The last 1 day is used as Test, and the day before it (from 2 days to 1 day before the end) as Valid
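Because the split assigns each session by its last click, a session that starts before the cut-off but ends after it goes entirely to the later split, and items never seen in the earlier split are dropped from the later one. A toy reproduction of that rule; `split_by_last_click` is a hypothetical name that follows the same steps as `split_by_date` below, and the timestamps are made up:

```python
import datetime as dt

import pandas as pd


def split_by_last_click(df, n_days):
    final_time = df['Time'].max()
    cutoff = final_time - dt.timedelta(days=n_days)
    last_click = df.groupby('SessionId')['Time'].max()
    before = df[df['SessionId'].isin(last_click[last_click < cutoff].index)]
    after = df[df['SessionId'].isin(last_click[last_click >= cutoff].index)]
    after = after[after['ItemId'].isin(before['ItemId'])]  # drop items unseen earlier
    return before, after


clicks = pd.DataFrame({
    'SessionId': [1, 1, 2, 2, 3, 3],
    'ItemId':    [1, 2, 1, 2, 1, 99],
    'Time': pd.to_datetime(['2014-09-28 10:00', '2014-09-28 10:05',
                            '2014-09-29 11:50', '2014-09-29 12:10',   # spans the cut-off
                            '2014-09-30 11:00', '2014-09-30 12:00']),
})
train, test = split_by_last_click(clicks, n_days=1)  # cut-off: 2014-09-29 12:00
print(train)
print(test)
```

Session 2 lands in `test` with both clicks, and session 3 keeps only one click after item 99 is dropped, so the later split can contain length-1 sessions.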

def split_by_date(data: pd.DataFrame, n_days: int):

final_time = data['Time'].max()

session_last_time = data.groupby('SessionId')['Time'].max()

session_in_train = session_last_time[session_last_time < final_time - dt.timedelta(n_days)].index

session_in_test = session_last_time[session_last_time >= final_time - dt.timedelta(n_days)].index

before_date = data[data['SessionId'].isin(session_in_train)]

after_date = data[data['SessionId'].isin(session_in_test)]

after_date = after_date[after_date['ItemId'].isin(before_date['ItemId'])]

return before_date, after_datetr, test = split_by_date(data, n_days=1)

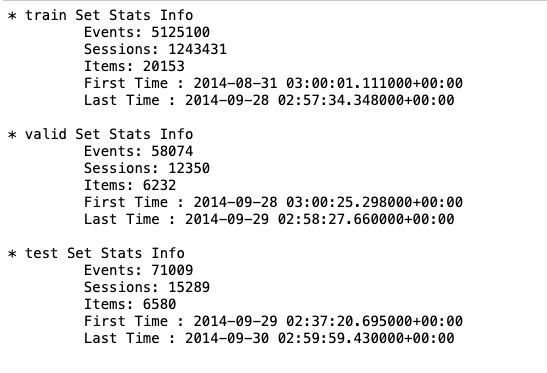

tr, val = split_by_date(tr, n_days=1)- data 확인

```python
def stats_info(data: pd.DataFrame, status: str):
    print(f'* {status} Set Stats Info\n'
          f'\t Events: {len(data)}\n'
          f'\t Sessions: {data["SessionId"].nunique()}\n'
          f'\t Items: {data["ItemId"].nunique()}\n'
          f'\t First Time : {data["Time"].min()}\n'
          f'\t Last Time : {data["Time"].max()}\n')


stats_info(tr, 'train')
stats_info(val, 'valid')
stats_info(test, 'test')
```

- Index the items based on the train data

- Items that are not in the train set may appear in the val and test periods (they are mapped to -1)
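A toy illustration of the indexing rule: ids are numbered in order of first appearance in the train data, and anything not seen there falls back to -1 through `dict.get`. The item ids are hypothetical:

```python
import pandas as pd

train = pd.DataFrame({'ItemId': [501, 502, 501, 503]})
valid = pd.DataFrame({'ItemId': [502, 504]})  # 504 never appears in train

# Number items in order of first appearance in the train data
id2idx = {int(item_id): idx for idx, item_id in enumerate(train['ItemId'].unique())}
valid['item_idx'] = valid['ItemId'].map(lambda x: id2idx.get(x, -1))  # unseen -> -1

print(id2idx)                       # {501: 0, 502: 1, 503: 2}
print(valid['item_idx'].tolist())   # [1, -1]
```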

```python
id2idx = {item_id: index for index, item_id in enumerate(tr['ItemId'].unique())}


def indexing(df, id2idx):
    df = df.copy()  # work on a copy instead of a slice of the original DataFrame
    df['item_idx'] = df['ItemId'].map(lambda x: id2idx.get(x, -1))  # unseen items -> -1
    return df


tr = indexing(tr, id2idx)
val = indexing(val, id2idx)
test = indexing(test, id2idx)
```

- Save the data

```python
save_path = data_path / 'processed'
save_path.mkdir(parents=True, exist_ok=True)

tr.to_pickle(save_path / 'train.pkl')
val.to_pickle(save_path / 'valid.pkl')
test.to_pickle(save_path / 'test.pkl')
```

2-3. Paper Introduction (GRU4REC)

SESSION-BASED RECOMMENDATIONS WITH RECURRENT NEURAL NETWORKS

- Presented at ICLR 2016

- The first work to apply an RNN-family model to session data

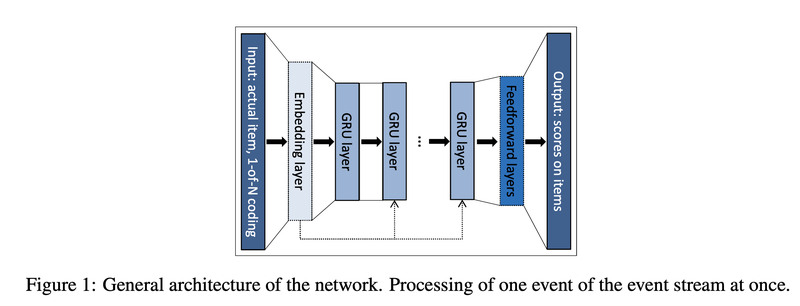

Model architecture

- In the experiments, GRU performed best

- It performs well even without an Embedding layer

- Only one-hot encoding is used
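Since GRU4REC skips the Embedding layer, each input item is fed as a one-hot vector. A numpy sketch of how a batch of item indices becomes the `(batch, 1, num_items)` tensor expected by the `Input(batch_shape=(args.batch_size, 1, args.num_items))` layer in the Modeling section; the sizes are toy values:

```python
import numpy as np

num_items = 6                          # toy catalogue size
batch_item_idx = np.array([2, 0, 5])   # current item index in each of 3 parallel sessions

# One row per session with a single 1 at the item's index
one_hot = np.eye(num_items, dtype=np.float32)[batch_item_idx]

# Add a length-1 time axis: (batch, 1, num_items)
gru_input = np.expand_dims(one_hot, axis=1)
print(gru_input.shape)  # (3, 1, 6)
```

For valid indices, `np.eye(num_items)[idx]` yields the same rows as Keras' `to_categorical(idx, num_classes=num_items)`, which the training loop uses.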

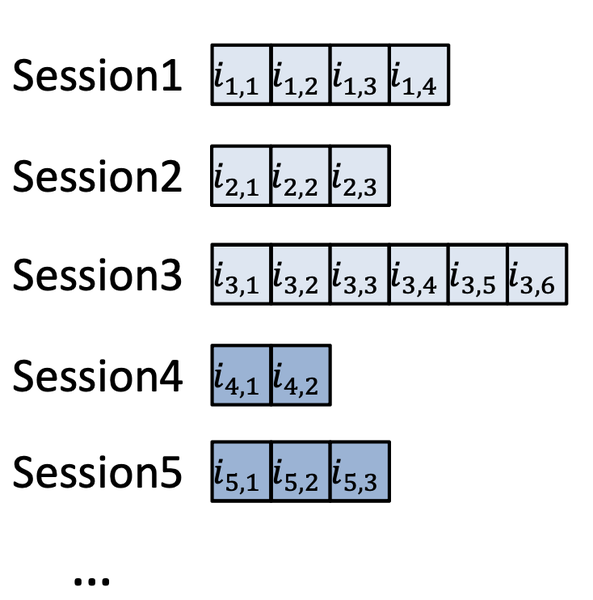

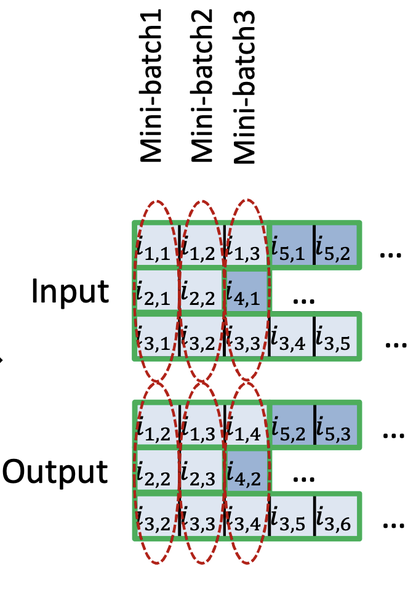

Session-Parallel Mini-Batches

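- Instead of waiting for each session to finish, sessions are processed in parallel!

- When Session2 ends ➡️ Session4 starts in its place

- The mini-batch shape is (3, 1, 1): each step feeds one item per session, and the RNN cell keeps a single state per session

- In TensorFlow, the RNN is built with stateful=True so its state carries over between batches, and the state of a session is reset to 0 when that session ends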
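The slot-filling rule can be simulated without any model. This sketch tracks which session (0-based id) occupies each batch slot and which of its clicks is the input at every step. It stops as soon as a finished slot cannot be refilled, which mirrors the `finished` flag of the `SessionDataLoader` in the Data Pipeline section; the function name and session lengths are hypothetical:

```python
def session_parallel_schedule(session_lengths, batch_size):
    """For each step, return the (session id, click position) used as input in every slot.

    A session with L clicks supplies L-1 inputs (its last click is only a target).
    Assumes batch_size <= len(session_lengths).
    """
    slots = list(range(batch_size))   # session id currently held by each slot
    pos = [0] * batch_size            # position of the current input click in that session
    next_session = batch_size         # next waiting session id
    steps = []
    while True:
        steps.append([(slots[s], pos[s]) for s in range(batch_size)])
        for s in range(batch_size):
            pos[s] += 1
            if pos[s] >= session_lengths[slots[s]] - 1:   # session in slot s is exhausted
                if next_session == len(session_lengths):
                    return steps                          # nothing left to refill the slot
                slots[s], pos[s] = next_session, 0        # the next session takes over
                next_session += 1


schedule = session_parallel_schedule([4, 2, 6, 3, 3], batch_size=3)
for t, step in enumerate(schedule):
    print(t, step)
```

With 0-based ids, session 1 (the figure's Session2) is used up after one step, and session 3 (Session4) takes over its slot at step 1.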

SAMPLING ON THE OUTPUT

- Same idea as Negative Sampling

- Because there are so many items, the loss is computed on a sample of items drawn according to their popularity
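In the paper, the other items in the same mini-batch serve as negative samples, which amounts to sampling by popularity (popular items show up in more mini-batches). The sketch below instead draws negatives explicitly, with probability proportional to click count; the `alpha` exponent is a hypothetical knob added for illustration. Note that the Keras model in this post does not sample: it applies a full softmax with `categorical_crossentropy` over every item.

```python
import numpy as np


def sample_negatives(item_counts, n_samples, alpha=1.0, rng=None):
    """Draw item indices with probability proportional to count ** alpha.

    alpha=1 gives plain popularity sampling; alpha=0 gives uniform sampling.
    """
    rng = np.random.default_rng() if rng is None else rng
    weights = np.asarray(item_counts, dtype=np.float64) ** alpha
    probs = weights / weights.sum()
    return rng.choice(len(probs), size=n_samples, replace=True, p=probs)


counts = np.array([500, 300, 150, 40, 10])  # toy click counts per item index
negatives = sample_negatives(counts, n_samples=10_000, rng=np.random.default_rng(0))
freq = np.bincount(negatives, minlength=len(counts)) / len(negatives)
print(freq)  # close to counts / counts.sum() = [0.5, 0.3, 0.15, 0.04, 0.01]
```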

Ranking Loss

- A task that classifies which of many items comes next ✔️ (the approach used in this material)

- A task that ranks many items by relevance and recommends the top-ranked ones
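For the ranking framing, the GRU4REC paper proposes two pairwise losses computed from the score r_i of the target item and the scores r_j of N_S sampled negatives: BPR, -(1/N_S) Σ_j log σ(r_i - r_j), and TOP1, (1/N_S) Σ_j [σ(r_j - r_i) + σ(r_j²)]. A numpy sketch on toy scores (this post itself uses the classification framing with cross-entropy):

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def bpr_loss(pos_score, neg_scores):
    """BPR: -mean_j log sigmoid(r_i - r_j)."""
    return -np.mean(np.log(sigmoid(pos_score - neg_scores)))


def top1_loss(pos_score, neg_scores):
    """TOP1: mean_j [sigmoid(r_j - r_i) + sigmoid(r_j ** 2)]."""
    return np.mean(sigmoid(neg_scores - pos_score) + sigmoid(neg_scores ** 2))


neg = np.array([0.5, -1.0, 0.2])  # toy scores of sampled negative items
for pos in (2.0, 0.0):            # target item scored high vs. low
    print(f'pos={pos}: BPR={bpr_loss(pos, neg):.4f}, TOP1={top1_loss(pos, neg):.4f}')
```

Both losses shrink as the target item's score rises above the negatives' scores; the σ(r_j²) term in TOP1 additionally pushes negative scores toward zero.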

2-4. Data Pipeline

- Implementing session-parallel mini-batches

1. SessionDataset

- Create a class that holds the index where each session starts and a new index for each session

```python
class SessionDataset:
    """Credit to yhs-968/pyGRU4REC."""

    def __init__(self, data):
        self.df = data
        self.click_offsets = self.get_click_offsets()
        self.session_idx = np.arange(self.df['SessionId'].nunique())  # indexing to SessionId

    def get_click_offsets(self):
        """
        Return the indexes of the first click of each session ID.
        Assumes self.df is sorted by SessionId (then Time).
        """
        offsets = np.zeros(self.df['SessionId'].nunique() + 1, dtype=np.int32)
        offsets[1:] = self.df.groupby('SessionId').size().cumsum()
        return offsets
```

SessionDataset object

- Create a SessionDataset object from the train data

- click_offsets: the index where each session starts

- session_idx: np.array of the re-indexed sessions
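What `click_offsets` holds is easiest to see on a toy log. The sketch repeats the recipe from `get_click_offsets` on hypothetical data that is already sorted by `SessionId`:

```python
import numpy as np
import pandas as pd

# Toy click log, already sorted by SessionId (and Time)
df = pd.DataFrame({'SessionId': [7, 7, 7, 9, 9, 12, 12, 12, 12],
                   'item_idx':  [0, 1, 2, 1, 3, 0, 4, 2, 1]})

n_sessions = df['SessionId'].nunique()
click_offsets = np.zeros(n_sessions + 1, dtype=np.int32)
click_offsets[1:] = df.groupby('SessionId').size().cumsum()  # same recipe as get_click_offsets
session_idx = np.arange(n_sessions)

print(click_offsets)  # [0 3 5 9]
# Session k occupies rows click_offsets[k] : click_offsets[k + 1]
for k in session_idx:
    start, end = click_offsets[k], click_offsets[k + 1]
    print(k, df['item_idx'].values[start:end])
```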

tr_dataset = SessionDataset(tr)

tr_dataset.df.head(10)

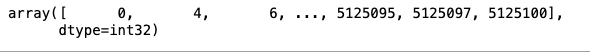

tr_dataset.click_offsets

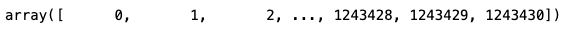

tr_dataset.session_idx

2. SessionDataLoader

- SessionDataLoader: builds session-parallel mini-batches from a SessionDataset

- __iter__: yields the model input, the label, and where sessions end

- mask: used to reset the RNN cell states

```python
class SessionDataLoader:
    """Credit to yhs-968/pyGRU4REC."""

    def __init__(self, dataset: SessionDataset, batch_size=50):
        self.dataset = dataset
        self.batch_size = batch_size

    def __iter__(self):
        """ Returns the iterator for producing session-parallel training mini-batches.

        Yields:
            input (B,): Item indices that will be encoded as one-hot vectors later.
            target (B,): a Variable that stores the target item indices
            masks: Numpy array indicating the positions of the sessions to be terminated
        """
        # start : index where each session starts
        # end   : index right after each session ends
        # mask  : slots whose session was replaced (their state must be reset)
        start, end, mask, last_session, finished = self.initialize()

        while not finished:
            min_len = (end - start).min() - 1  # shortest remaining length among sessions
            for i in range(min_len):
                # Build inputs & targets
                inp = self.dataset.df['item_idx'].values[start + i]
                target = self.dataset.df['item_idx'].values[start + i + 1]
                yield inp, target, mask
                # A replaced slot needs its state reset only before its first input
                mask = np.array([], dtype=np.int64)
            start, end, mask, last_session, finished = self.update_status(start, end, min_len, last_session, finished)

    def initialize(self):
        first_iters = np.arange(self.batch_size)  # session indices used in the first batch
        last_session = self.batch_size - 1  # index of the last session currently in use
        start = self.dataset.click_offsets[self.dataset.session_idx[first_iters]]  # where each session starts in the data
        end = self.dataset.click_offsets[self.dataset.session_idx[first_iters] + 1]  # position right after each session ends
        mask = np.array([], dtype=np.int64)
        finished = False  # whether all the data has been traversed
        return start, end, mask, last_session, finished

    def update_status(self, start: np.ndarray, end: np.ndarray, min_len: int, last_session: int, finished: bool):
        # Update the state to build the next batch
        start += min_len  # __iter__ looped min_len times, so advance start by min_len
        mask = np.arange(self.batch_size)[(end - start) == 1]  # slots whose session has ended

        for i, idx in enumerate(mask, start=1):  # bring in one new session per ended slot
            new_session = last_session + i
            if new_session > self.dataset.session_idx[-1]:  # past the last session index: all training data consumed
                finished = True
                break
            # update the next starting/ending point
            start[idx] = self.dataset.click_offsets[self.dataset.session_idx[new_session]]
            end[idx] = self.dataset.click_offsets[self.dataset.session_idx[new_session] + 1]

        last_session += len(mask)  # record the position of the last session
        return start, end, mask, last_session, finished
```

```python
tr_data_loader = SessionDataLoader(tr_dataset, batch_size=4)
tr_dataset.df.head(15)
```

```python
iter_ex = iter(tr_data_loader)
```

- next: keeps producing the next batch

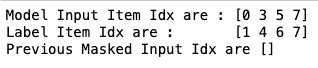

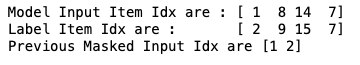

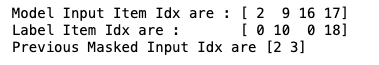

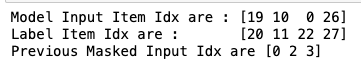

```python
inputs, labels, mask = next(iter_ex)
print(f'Model Input Item Idx are : {inputs}')
print(f'Label Item Idx are : {"":5} {labels}')
print(f'Previous Masked Input Idx are {mask}')
```

2-5. Modeling

1. Evaluation Metric

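- Performance metrics

- recall@k (an extension of recall) and Mean Average Precision@k (an extension of precision)

- In a Session-Based Recommendation task, when the model presents k items, the more of the user's n clicked/purchased items they contain, the better

- MRR, NDCG, and similar metrics

- The position at which the model hits the correct item also matters

- Order-sensitive metrics are commonly used as well

Metrics used here

- MRR: the reciprocal of the rank at which the correct item appears

- Recall@k

If the correct item appears near the top of the recommendations ➡️ the metric goes up!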
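A worked example of both metrics on three toy queries, written as standalone helpers (`reciprocal_rank` and `hit_at_k` are hypothetical names that follow the same rule as `mrr_k` and `recall_k` below). Averaging the per-query values gives MRR@k and Recall@k:

```python
import numpy as np


def reciprocal_rank(ranked_items, truth, k):
    # 1 / (1-based rank of `truth` in the top-k list), or 0 if it is absent
    hits = np.where(np.asarray(ranked_items)[:k] == truth)[0]
    return 1.0 / (hits[0] + 1) if len(hits) > 0 else 0.0


def hit_at_k(ranked_items, truth, k):
    # 1 if `truth` is in the top-k list, else 0
    return int(truth in list(ranked_items)[:k])


# (ranked recommendations, true next item)
queries = [([7, 3, 9, 1], 7),   # rank 1 -> RR = 1
           ([7, 3, 9, 1], 9),   # rank 3 -> RR = 1/3
           ([7, 3, 9, 1], 5)]   # miss   -> RR = 0
k = 3
mrr = np.mean([reciprocal_rank(r, t, k) for r, t in queries])
recall = np.mean([hit_at_k(r, t, k) for r, t in queries])
print(f'MRR@{k} = {mrr:.4f}, Recall@{k} = {recall:.4f}')  # MRR@3 = 0.4444, Recall@3 = 0.6667
```

Recall@k only asks whether the item made the list, while MRR also rewards placing it near the top, which is why a hit at rank 3 counts fully for recall but only 1/3 for MRR.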

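```python
def mrr_k(pred, truth: int, k: int):
    # Reciprocal of the 1-based rank of `truth` within the top-k predictions, else 0
    indexing = np.where(pred[:k] == truth)[0]
    if len(indexing) > 0:
        return 1 / (indexing[0] + 1)
    else:
        return 0


def recall_k(pred, truth: int, k: int) -> int:
    # 1 if `truth` is among the top-k predictions, else 0
    answer = truth in pred[:k]
    return int(answer)
```

2. Model Architecture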

```python
import numpy as np
import tensorflow as tf
from tensorflow.keras.layers import Input, Dense, Dropout, GRU
from tensorflow.keras.losses import categorical_crossentropy
from tensorflow.keras.models import Model
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.utils import to_categorical
from tqdm import tqdm
```

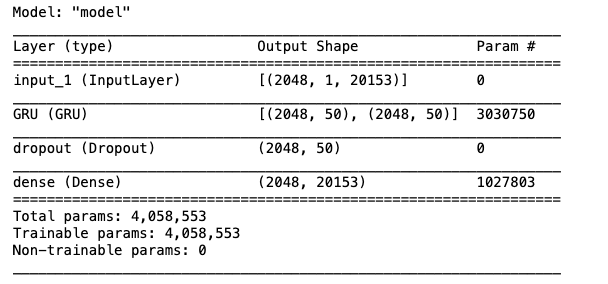

```python
def create_model(args):
    # One one-hot item vector per step: (batch, 1 time step, num_items)
    inputs = Input(batch_shape=(args.batch_size, 1, args.num_items))
    # stateful=True keeps each batch slot's hidden state across batches
    gru, _ = GRU(args.hsz, stateful=True, return_state=True, name='GRU')(inputs)
    dropout = Dropout(args.drop_rate)(gru)
    # Softmax over all items: classify which item comes next
    predictions = Dense(args.num_items, activation='softmax')(dropout)
    model = Model(inputs=inputs, outputs=[predictions])
    model.compile(loss=categorical_crossentropy, optimizer=Adam(args.lr), metrics=['accuracy'])
    model.summary()
    return model
```

- Manage the hyperparameters used by the model in a class

```python
class Args:
    # Bundles the data splits and hyperparameters used by create_model / train_model
    def __init__(self, tr, val, test, batch_size, hsz, drop_rate, lr, epochs, k):
        self.tr = tr
        self.val = val
        self.test = test
        self.num_items = tr['ItemId'].nunique()  # size of the one-hot input and softmax output
        self.num_sessions = tr['SessionId'].nunique()
        self.batch_size = batch_size
        self.hsz = hsz  # GRU hidden size
        self.drop_rate = drop_rate
        self.lr = lr
        self.epochs = epochs
        self.k = k  # cut-off for Recall@k / MRR@k


args = Args(tr, val, test, batch_size=2048, hsz=50, drop_rate=0.1, lr=0.001, epochs=3, k=20)
model = create_model(args)
```

3. Model Training

- Each epoch takes more than 30 minutes, so the code here stops just short of actually running the training

# train셋으로 학습하면서 valid셋으로 검증

def train_model(model, args):

train_dataset = SessionDataset(args.tr)

train_loader = SessionDataLoader(train_dataset, batch_size=args.batch_size)

for epoch in range(1, args.epochs + 1):

total_step = len(args.tr) - args.tr['SessionId'].nunique()

tr_loader = tqdm(train_loader, total=total_step // args.batch_size, desc='Train', mininterval=1)

for feat, target, mask in tr_loader:

reset_hidden_states(model, mask)

input_ohe = to_categorical(feat, num_classes=args.num_items)

input_ohe = np.expand_dims(input_ohe, axis=1)

target_ohe = to_categorical(target, num_classes=args.num_items)

result = model.train_on_batch(input_ohe, target_ohe)

tr_loader.set_postfix(train_loss=result[0], accuracy = result[1])

val_recall, val_mrr = get_metrics(args.val, model, args, args.k) # valid set에 대해 검증합니다.

print(f"\t - Recall@{args.k} epoch {epoch}: {val_recall:3f}")

print(f"\t - MRR@{args.k} epoch {epoch}: {val_mrr:3f}\n")

def reset_hidden_states(model, mask):

gru_layer = model.get_layer(name='GRU')

hidden_states = gru_layer.states[0].numpy()

for elt in mask:

hidden_states[elt, :] = 0

gru_layer.reset_states(states=hidden_states)

def get_metrics(data, model, args, k: int):

dataset = SessionDataset(data)

loader = SessionDataLoader(dataset, batch_size=args.batch_size)

recall_list, mrr_list = [], []

total_step = len(data) - data['SessionId'].nunique()

for inputs, label, mask in tqdm(loader, total=total_step // args.batch_size, desc='Evaluation', mininterval=1):

reset_hidden_states(model, mask)

input_ohe = to_categorical(inputs, num_classes=args.num_items)

input_ohe = np.expand_dims(input_ohe, axis=1)

pred = model.predict(input_ohe, batch_size=args.batch_size)

pred_arg = tf.argsort(pred, direction='DESCENDING')

length = len(inputs)

recall_list.extend([recall_k(pred_arg[i], label[i], k) for i in range(length)])

mrr_list.extend([mrr_k(pred_arg[i], label[i], k) for i in range(length)])

recall, mrr = np.mean(recall_list), np.mean(mrr_list)

return recall, mrr# 학습 시간이 다소 오래 소요됩니다. 아래 주석을 풀지 마세요.

# train_model(model, args)

# 학습된 모델을 불러옵니다.

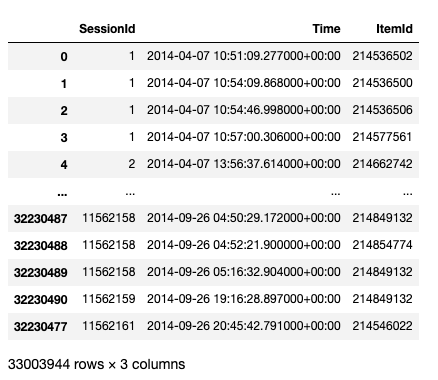

model = tf.keras.models.load_model(data_path / 'trained_model')4. Inference

- Evaluate the model on the test set

```python
def test_model(model, args, test):
    test_recall, test_mrr = get_metrics(test, model, args, args.k)
    print(f"\t - Recall@{args.k}: {test_recall:.3f}")
    print(f"\t - MRR@{args.k}: {test_mrr:.3f}\n")


test_model(model, args, test)
```