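📌 Goals

This week we again use kops to build a Kubernetes cluster on AWS, this time with the VPC CNI.

We verify pod-to-pod communication with packet dumps,

and check the limit on the number of pods that can be created under the VPC CNI.

We work with PVs and PVCs backed by AWS EBS,

and also look at volume snapshots.

In addition, let's take a look at AWS EFS, FSx, and File Cache.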

Analyzing the kops-oneclick script

Before building the lab environment, let's analyze the one-shot install script (kops-oneclick-f1.yaml) provided by Gasida. (This kind of thing is fun.)

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/kops-oneclick-f1.yaml

# This is an AWS CloudFormation template; being familiar with OpenStack Heat templates, I can follow it roughly. (Reference: https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/Welcome.html )

AWSTemplateFormatVersion: '2010-09-09'

...

# The parameters cover the key pair, IAM credentials, S3 bucket, node count, VPC CIDR block, and the target region for deployment.

...

## Resources is the section that creates each AWS resource; the Ref function can be used to reference other resources.

Resources:

## Create the VPC and declare its network range.

MyVPC:

Type: AWS::EC2::VPC

Properties:

EnableDnsSupport: true

EnableDnsHostnames: true

CidrBlock: 10.0.0.0/16

Tags:

- Key: Name

Value: My-VPC

## Create the internet gateway.

MyIGW:

Type: AWS::EC2::InternetGateway

Properties:

Tags:

- Key: Name

Value: My-IGW

## Attach the internet gateway created above to my VPC.

MyIGWAttachment:

Type: AWS::EC2::VPCGatewayAttachment

Properties:

InternetGatewayId: !Ref MyIGW

VpcId: !Ref MyVPC

## Create a route table in "MyVPC".

MyPublicRT:

Type: AWS::EC2::RouteTable

Properties:

VpcId: !Ref MyVPC

Tags:

- Key: Name

Value: My-Public-RT

## Set the default route for 0.0.0.0/0.

## The internet gateway created above is used as the default gateway.

DefaultPublicRoute:

Type: AWS::EC2::Route

DependsOn: MyIGWAttachment

Properties:

RouteTableId: !Ref MyPublicRT

DestinationCidrBlock: 0.0.0.0/0

GatewayId: !Ref MyIGW

## Declare a subnet in "MyVPC"; the AZ is set to the first element of the array returned by the GetAZs function.

MyPublicSN:

Type: AWS::EC2::Subnet

Properties:

VpcId: !Ref MyVPC

AvailabilityZone: !Select [ 0, !GetAZs '' ]

CidrBlock: 10.0.0.0/24

Tags:

- Key: Name

Value: My-Public-SN

## Associate the route table created above with the MyPublicSN subnet.

MyPublicSNRouteTableAssociation:

Type: AWS::EC2::SubnetRouteTableAssociation

Properties:

RouteTableId: !Ref MyPublicRT

SubnetId: !Ref MyPublicSN

## EC2 security group: allow ports 22 and 80 only from the SgIngressSshCidr range passed in as a parameter (SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32).

KOPSEC2SG:

Type: AWS::EC2::SecurityGroup

Properties:

GroupDescription: kops ec2 Security Group

VpcId: !Ref MyVPC

Tags:

- Key: Name

Value: KOPS-EC2-SG

SecurityGroupIngress:

- IpProtocol: tcp

FromPort: '22'

ToPort: '22'

CidrIp: !Ref SgIngressSshCidr

- IpProtocol: tcp

FromPort: '80'

ToPort: '80'

CidrIp: !Ref SgIngressSshCidr

# EC2 instance: a t3.small instance using the AMI and key pair passed in as parameters, with a network interface created in the subnet defined above.

KOPSEC2:

Type: AWS::EC2::Instance

Properties:

InstanceType: t3.small

ImageId: !Ref LatestAmiId

KeyName: !Ref KeyName

Tags:

- Key: Name

Value: kops-ec2

NetworkInterfaces:

- DeviceIndex: 0

SubnetId: !Ref MyPublicSN

GroupSet:

- !Ref KOPSEC2SG

AssociatePublicIpAddress: true

PrivateIpAddress: 10.0.0.10

## Script that runs inside the guest OS after the instance boots; it is executed by cloud-init.

UserData:

## Encode the user data string as Base64.

Fn::Base64:

!Sub |

#!/bin/bash

## Change the hostname

hostnamectl --static set-hostname kops-ec2

# Change Timezone

ln -sf /usr/share/zoneinfo/Asia/Seoul /etc/localtime

# Install Packages

cd /root

yum -y install tree jq git htop

## Download and install the latest stable kubectl.

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

## Download and install the latest kops release.

curl -Lo kops https://github.com/kubernetes/kops/releases/download/$(curl -s https://api.github.com/repos/kubernetes/kops/releases/latest | grep tag_name | cut -d '"' -f 4)/kops-linux-amd64

chmod +x kops

mv kops /usr/local/bin/kops

## Download and install the AWS CLI.

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

unzip awscliv2.zip >/dev/null 2>&1

sudo ./aws/install

export PATH=/usr/local/bin:$PATH

source ~/.bash_profile

## Enable bash auto-completion for the AWS CLI

complete -C '/usr/local/bin/aws_completer' aws

## Generate an SSH RSA key (no passphrase)

ssh-keygen -t rsa -N "" -f /root/.ssh/id_rsa

echo 'alias vi=vim' >> /etc/profile

## Switch to the root user immediately when logging in as ec2-user

echo 'sudo su -' >> /home/ec2-user/.bashrc

## Download and install helm3 and yh (YAML highlighter)

curl -s https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

wget https://github.com/andreazorzetto/yh/releases/download/v0.4.0/yh-linux-amd64.zip

unzip yh-linux-amd64.zip

mv yh /usr/local/bin/

## Use the KubernetesVersion parameter for the K8S version.

export KUBERNETES_VERSION=${KubernetesVersion}

echo "export KUBERNETES_VERSION=${KubernetesVersion}" >> /etc/profile

## Configure IAM using the IAM user credential parameters.

export AWS_ACCESS_KEY_ID=${MyIamUserAccessKeyID}

export AWS_SECRET_ACCESS_KEY=${MyIamUserSecretAccessKey}

export AWS_DEFAULT_REGION=${AWS::Region}

export ACCOUNT_ID=$(aws sts get-caller-identity --query 'Account' --output text)

echo "export AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID" >> /etc/profile

echo "export AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY" >> /etc/profile

echo "export AWS_DEFAULT_REGION=$AWS_DEFAULT_REGION" >> /etc/profile

echo 'export AWS_PAGER=""' >>/etc/profile

echo "export ACCOUNT_ID=$(aws sts get-caller-identity --query 'Account' --output text)" >> /etc/profile

## Use the ClusterBaseName parameter for CLUSTER_NAME.

export KOPS_CLUSTER_NAME=${ClusterBaseName}

echo "export KOPS_CLUSTER_NAME=$KOPS_CLUSTER_NAME" >> /etc/profile

## Set the S3 state store bucket name

export KOPS_STATE_STORE=s3://${S3StateStore}

echo "export KOPS_STATE_STORE=s3://${S3StateStore}" >> /etc/profile

## Clone Gasida's PKOS GitHub repository

git clone https://github.com/gasida/PKOS.git /root/pkos

## Download and install "krew", the kubectl plugin manager

curl -LO https://github.com/kubernetes-sigs/krew/releases/download/v0.4.3/krew-linux_amd64.tar.gz

tar zxvf krew-linux_amd64.tar.gz

./krew-linux_amd64 install krew

export PATH="$PATH:/root/.krew/bin"

echo 'export PATH="$PATH:/root/.krew/bin"' >> /etc/profile

## Set up kubectl auto-completion and install kube-ps1 (a tool that shows the context and namespace)

echo 'source <(kubectl completion bash)' >> /etc/profile

echo 'alias k=kubectl' >> /etc/profile

echo 'complete -F __start_kubectl k' >> /etc/profile

git clone https://github.com/jonmosco/kube-ps1.git /root/kube-ps1

cat <<"EOT" >> /root/.bash_profile

source /root/kube-ps1/kube-ps1.sh

KUBE_PS1_SYMBOL_ENABLE=false

function get_cluster_short() {

echo "$1" | cut -d . -f1

}

KUBE_PS1_CLUSTER_FUNCTION=get_cluster_short

KUBE_PS1_SUFFIX=') '

PS1='$(kube_ps1)'$PS1

EOT

## Install krew plugin

## ctx (switch context), ns (switch namespace)

## get-all (shows a wider range of objects than "get all")

## ktop (a visual management tool, like k9s)

## df-pv (usage per PV)

## mtail (tail the logs of multiple pods)

## tree (show objects as a tree)

kubectl krew install ctx ns get-all ktop # df-pv mtail tree

## Install Docker

amazon-linux-extras install docker -y

systemctl start docker && systemctl enable docker

## Use kops to create the EC2 instances and deploy the k8s cluster;

## first generate kops.yaml with a dry run.

kops create cluster --zones=${AvailabilityZone1},${AvailabilityZone2} --networking amazonvpc --cloud aws \

--master-size ${MasterNodeInstanceType} --node-size ${WorkerNodeInstanceType} --node-count=${WorkerNodeCount} \

--network-cidr ${VpcBlock} --ssh-public-key ~/.ssh/id_rsa.pub --kubernetes-version "${KubernetesVersion}" --dry-run \

--output yaml > kops.yaml

## Append the settings below.

cat <<EOT > addon.yaml

certManager:

enabled: true

awsLoadBalancerController:

enabled: true

externalDns:

provider: external-dns

metricsServer:

enabled: true

kubeProxy:

metricsBindAddress: 0.0.0.0

kubeDNS:

provider: CoreDNS

nodeLocalDNS:

enabled: true

memoryRequest: 5Mi

cpuRequest: 25m

EOT

sed -i -n -e '/aws$/r addon.yaml' -e '1,$p' kops.yaml

## Also add the max pods per node setting

cat <<EOT > maxpod.yaml

maxPods: 100

EOT

sed -i -n -e '/anonymousAuth/r maxpod.yaml' -e '1,$p' kops.yaml

## Add the VPC CNI ENABLE_PREFIX_DELEGATION setting

sed -i 's/amazonvpc: {}/amazonvpc:/g' kops.yaml

cat <<EOT > awsvpc.yaml

env:

- name: ENABLE_PREFIX_DELEGATION

value: "true"

EOT

sed -i -n -e '/amazonvpc/r awsvpc.yaml' -e '1,$p' kops.yaml

## Create the cluster from the prepared kops.yaml.

cat kops.yaml | kops create -f -

kops update cluster --name $KOPS_CLUSTER_NAME --ssh-public-key ~/.ssh/id_rsa.pub --yes

## Use the kubeconfig of the k8s cluster created by kops

echo "kops export kubeconfig --admin" >> /etc/profile

## Output usable from CloudFormation: prints the public IP of the EC2 instance being created.

Outputs:

KOPSEC2IP:

Value: !GetAtt KOPSEC2.PublicIp

Deploying the lab environment

Now let's deploy the lab environment. This lab uses high-spec c5d instances, which means it is expensive. Deploy when you practice, delete when you are not using it, and redeploy whenever you want to check something again. (Thrift pays off.)

## Download the kops YAML file

curl -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/K8S/kops-oneclick-f1.yaml

## Deploy the CloudFormation stack: change the node instance types - MasterNodeInstanceType=t3.medium WorkerNodeInstanceType=c5d.large

# Save the command below as "kops-oneclick-f1.sh" (for reuse when needed).

aws cloudformation deploy --template-file kops-oneclick-f1.yaml --stack-name mykops --parameter-overrides \

KeyName=spark SgIngressSshCidr=$(curl -s ipinfo.io/ip)/32 \

MyIamUserAccessKeyID=AKI..57W MyIamUserSecretAccessKey='F4KdY..og2d' \

ClusterBaseName='sparkandassociates.net' S3StateStore='pkos2' \

MasterNodeInstanceType=t3.medium WorkerNodeInstanceType=c5d.large \

--region ap-northeast-2

# After the CloudFormation stack is deployed, print the kOps EC2 IP (uses the key/value defined under Outputs in the YAML)

aws cloudformation describe-stacks --stack-name mykops --query 'Stacks[*].Outputs[0].OutputValue' --output text

# Check that SSH access works.

ssh -i ./spark.pem ec2-user@$(aws cloudformation describe-stacks --stack-name mykops --query 'Stacks[*].Outputs[0].OutputValue' --output text)
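The two commands above pick the stack output by position (`Outputs[0]`), which works because the template defines a single output. A slightly more defensive sketch selects it by key instead, using the `KOPSEC2IP` output name from the template. The helper name `stack_output_query` is illustrative (not part of the original script), and the final `aws` call is left commented out because it needs real credentials and the `mykops` stack.

```shell
#!/usr/bin/env bash
# Build a JMESPath query that selects one CloudFormation stack output by its key.
# stack_output_query is a hypothetical helper name used only for this sketch.
stack_output_query() {
  local key=$1
  printf "Stacks[0].Outputs[?OutputKey=='%s'].OutputValue" "$key"
}

# Show the query string that would be passed to --query
stack_output_query KOPSEC2IP; echo

# Usage against the real stack:
# aws cloudformation describe-stacks --stack-name mykops \
#   --query "$(stack_output_query KOPSEC2IP)" --output text
```

Selecting by key keeps the command working if more outputs are ever added to the template.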

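## The progress of the post-install script can be followed in cloud-init-output.log (on the kops-ec2 node).

## If the k8s nodes (one master, two workers) are still not deployed after 15 minutes, check the error log.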

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

I0314 14:42:18.254922 3105 create_cluster.go:878] Using SSH public key: /root/.ssh/id_rsa.pub

Error: cluster "sparkandassociates.net" already exists; use 'kops update cluster' to apply changes

Error: error parsing file "-": Object 'Kind' is missing in 'null'

--ssh-public-key on update is deprecated - please use `kops create secret --name sparkandassociates.net sshpublickey admin -i ~/.ssh/id_rsa.pub` instead

I0314 14:42:18.661378 3125 update_cluster.go:238] Using SSH public key: /root/.ssh/id_rsa.pub

Error: exactly one 'admin' SSH public key can be specified when running with AWS; please delete a key using `kops delete secret`

Cloud-init v. 19.3-46.amzn2 finished at Tue, 14 Mar 2023 05:42:25 +0000. Datasource DataSourceEc2. Up 695.61 seconds
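When the log is long, it can help to pull out only the failure lines. The sketch below is a minimal example of that, assuming the standard log location `/var/log/cloud-init-output.log`; the function name `scan_cloud_init_errors` and the two search patterns are illustrative choices, not something the original script provides.

```shell
#!/usr/bin/env bash
# Print numbered lines that look like failures in a cloud-init output log.
# The patterns are an assumption based on the failures seen in this post.
scan_cloud_init_errors() {
  local logfile=${1:-/var/log/cloud-init-output.log}
  grep -nE 'Error:|Segmentation fault' "$logfile"
}

# Demo against a temporary file holding two lines from the log above.
sample=$(mktemp)
cat > "$sample" <<'EOF'
I0314 14:42:18.254922 3105 create_cluster.go:878] Using SSH public key: /root/.ssh/id_rsa.pub
Error: cluster "sparkandassociates.net" already exists; use 'kops update cluster' to apply changes
EOF
scan_cloud_init_errors "$sample"   # prints the numbered Error line
rm -f "$sample"
```

On the kops-ec2 node, calling `scan_cloud_init_errors` without arguments reads the real log.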

sparkandassociates.net 클러스터가 이미 있다고 나오는데, 몇번 재설치 과정에서 찌꺼기가 남아서 그런듯하다. 확인해보자.

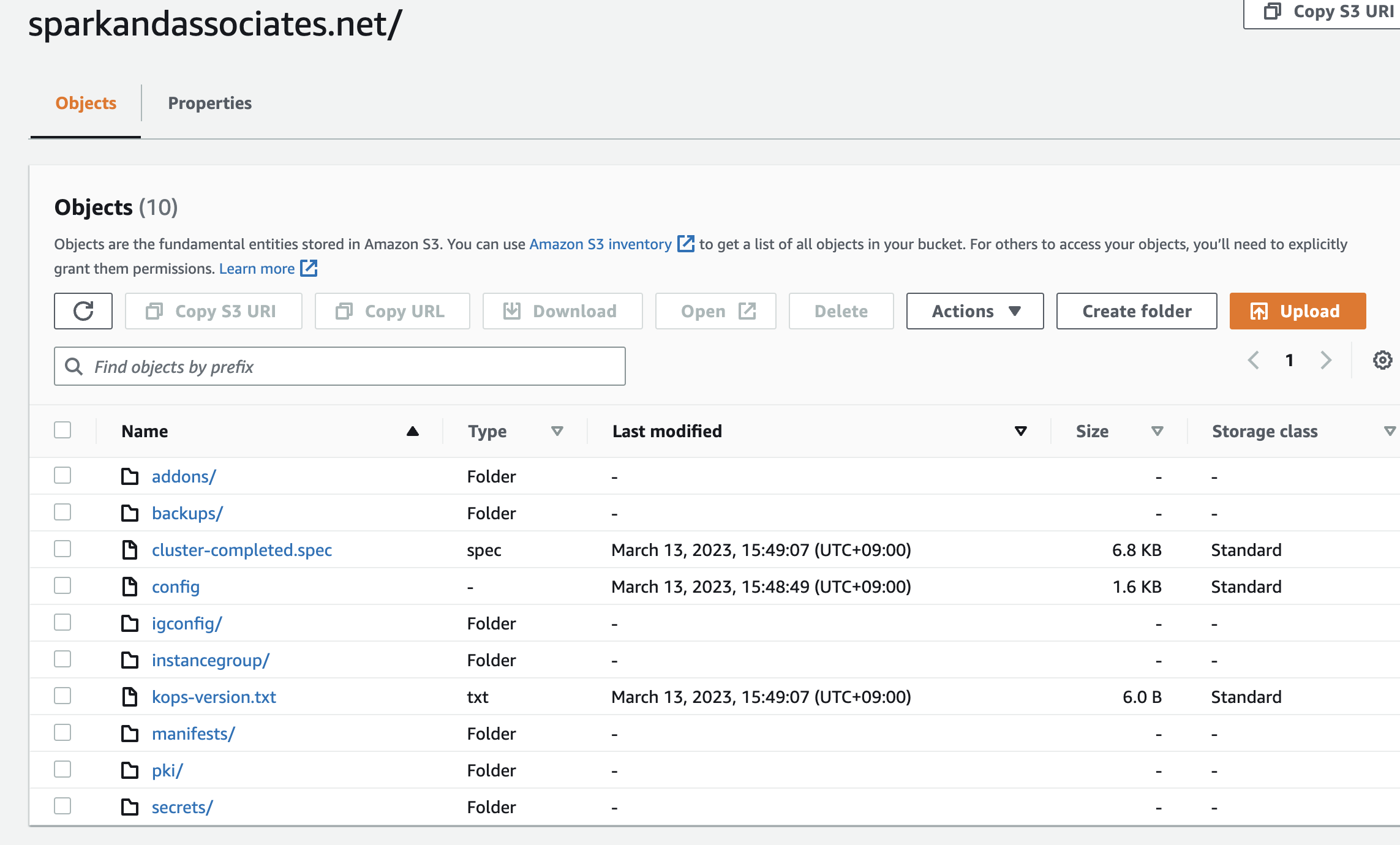

s3 pkos2 버킷내 클러스터 정보가 남아있음.

# s3 bucket 삭제후 다시 생성.

[root@san-1 pkos]# aws s3 mb s3://pkos2 --region ap-northeast-2

make_bucket: pkos2

[root@san-1 pkos]# aws s3 ls

2023-03-14 06:23:54 pkos2

Second problem

Created symlink from /etc/systemd/system/multi-user.target.wants/docker.service to /usr/lib/systemd/system/docker.service.

/var/lib/cloud/instance/scripts/part-001: line 90: 3104 Segmentation fault kops create cluster --zones=ap-northeast-2a,ap-northeast-2c --networking amazonvpc --cloud aws --master-size t3.medium --node-size c5d.large --node-count=2 --network-cidr 172.30.0.0/16 --ssh-public-key ~/.ssh/id_rsa.pub --kubernetes-version "1.24.11" --dry-run --output yaml > kops.yaml

/var/lib/cloud/instance/scripts/part-001: line 127: 3115 Done cat kops.yaml

3116 Segmentation fault | kops create -f -

/var/lib/cloud/instance/scripts/part-001: line 128: 3117 Segmentation fault kops update cluster --name $KOPS_CLUSTER_NAME --ssh-public-key ~/.ssh/id_rsa.pub --yes

Cloud-init v. 19.3-46.amzn2 finished at Tue, 14 Mar 2023 06:41:53 +0000. Datasource DataSourceEc2. Up 999.85 seconds

The dry run failed.

## Check the script that cloud-init generated after substituting the variables.

/var/lib/cloud/instance/scripts/part-001

[root@kops-ec2 ~]# cat kops.yaml

[root@kops-ec2 ~]#

After deleting the A records that had been created in Route 53, the cluster was created normally.

Check the cloud-init-output.log file

kOps has set your kubectl context to sparkandassociates.net

W0315 13:37:59.908065 3042 update_cluster.go:347] Exported kubeconfig with no user authentication; use --admin, --user or --auth-plugin flags with `kops export kubeconfig`

Cluster is starting. It should be ready in a few minutes.

Suggestions:

* validate cluster: kops validate cluster --wait 10m

* list nodes: kubectl get nodes --show-labels

* ssh to a control-plane node: ssh -i ~/.ssh/id_rsa ubuntu@api.sparkandassociates.net

* the ubuntu user is specific to Ubuntu. If not using Ubuntu please use the appropriate user based on your OS.

* read about installing addons at: https://kops.sigs.k8s.io/addons.

Cloud-init v. 19.3-46.amzn2 finished at Wed, 15 Mar 2023 04:37:59 +0000. Datasource DataSourceEc2. Up 125.37 seconds

Progress can be checked with kops validate cluster.

(sparkandassociates:N/A) [root@kops-ec2 ~]# kops validate cluster --wait 10m

Validating cluster sparkandassociates.net

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

control-plane-ap-northeast-2a ControlPlane t3.medium 1 1 ap-northeast-2a

nodes-ap-northeast-2a Node c5d.large 1 1 ap-northeast-2a

nodes-ap-northeast-2c Node c5d.large 1 1 ap-northeast-2c

NODE STATUS

NAME ROLE READY

VALIDATION ERRORS

KIND NAME MESSAGE

dns apiserver Validation Failed

The external-dns Kubernetes deployment has not updated the Kubernetes cluster's API DNS entry to the correct IP address. The API DNS IP address is the placeholder address that kops creates: 203.0.113.123. Please wait about 5-10 minutes for a control plane node to start, external-dns to launch, and DNS to propagate. The protokube container and external-dns deployment logs may contain more diagnostic information. Etcd and the API DNS entries must be updated for a kops Kubernetes cluster to start.

Validation Failed

W0315 13:44:52.957150 4265 validate_cluster.go:232] (will retry): cluster not yet healthy

## Confirm that creation is complete.

(sparkandassociates:N/A) [root@kops-ec2 ~]# kops validate cluster --wait 10m

Validating cluster sparkandassociates.net

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

control-plane-ap-northeast-2a ControlPlane t3.medium 1 1 ap-northeast-2a

nodes-ap-northeast-2a Node c5d.large 1 1 ap-northeast-2a

nodes-ap-northeast-2c Node c5d.large 1 1 ap-northeast-2c

NODE STATUS

NAME ROLE READY

i-05538a0cedc2ceac8 node True

i-08a4f488be204357c control-plane True

i-0f94a3e2d9abe939f node True

Your cluster sparkandassociates.net is ready

# Check the metrics server: metrics are collected through cAdvisor every 15 seconds

kubectl top node

(sparkandassociates:N/A) [root@kops-ec2 ~]# k top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

i-05538a0cedc2ceac8 32m 1% 1009Mi 28%

i-08a4f488be204357c 192m 9% 2027Mi 53%

i-0f94a3e2d9abe939f 24m 1% 965Mi 26%

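# The default LimitRange policy sets a 100m CPU request, i.e. it guarantees at least 0.1 CPU per container,

# so starting 100 test pods on a single worker node would hit this limit; delete it for the test.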

(sparkandassociates:N/A) [root@kops-ec2 ~]# kubectl describe limitranges

Name: limits

Namespace: default

Type Resource Min Max Default Request Default Limit Max Limit/Request Ratio

---- -------- --- --- --------------- ------------- -----------------------

Container cpu - - 100m - -

(sparkandassociates:N/A) [root@kops-ec2 ~]# kubectl delete limitranges limits

limitrange "limits" deleted

(sparkandassociates:N/A) [root@kops-ec2 ~]# kubectl get limitranges

No resources found in default namespace.

(sparkandassociates:N/A) [root@kops-ec2 ~]#
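A rough sketch of why the default LimitRange gets in the way: with a default CPU request of 100m per container and 2 allocatable CPUs per worker (the value `kubectl describe node` reports later in this post), CPU requests alone cap a node at about 20 single-container pods, far short of the 100 we want to test. The function name is illustrative, and the sketch ignores CPU already requested by system pods.

```shell
#!/usr/bin/env bash
# Rough upper bound on pods per node when every pod requests the same CPU.
# Usage: max_pods_by_cpu <allocatable_millicores> <request_millicores_per_pod>
max_pods_by_cpu() {
  local allocatable_m=$1 request_m=$2
  echo $(( allocatable_m / request_m ))
}

# 2 allocatable CPUs = 2000m, LimitRange default request = 100m
max_pods_by_cpu 2000 100   # prints 20
```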

Now, let's get started.

Next up is networking.

Kubernetes networking

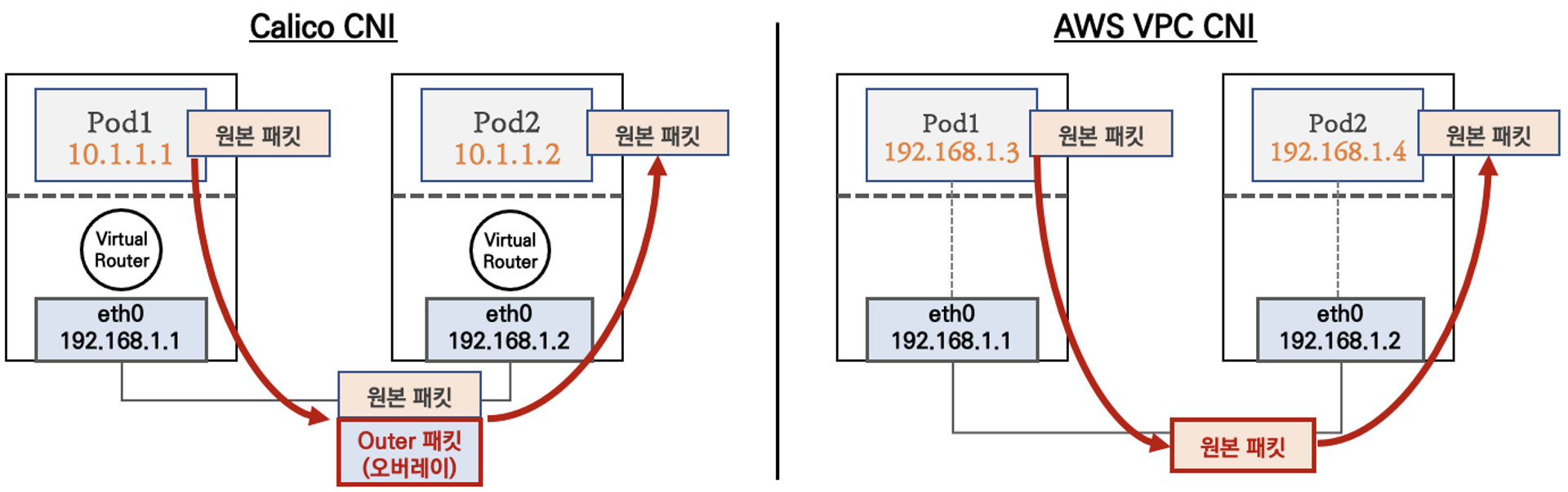

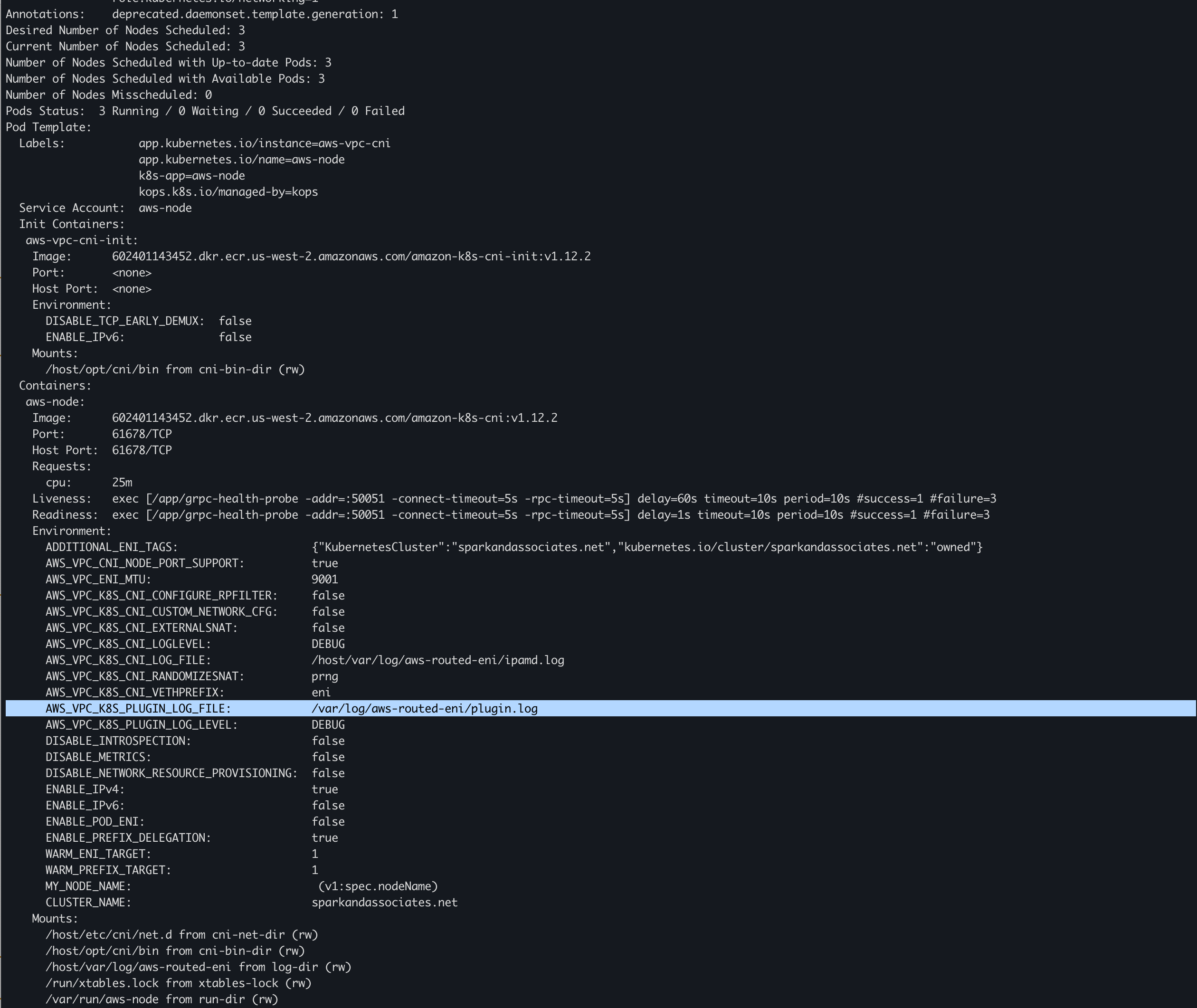

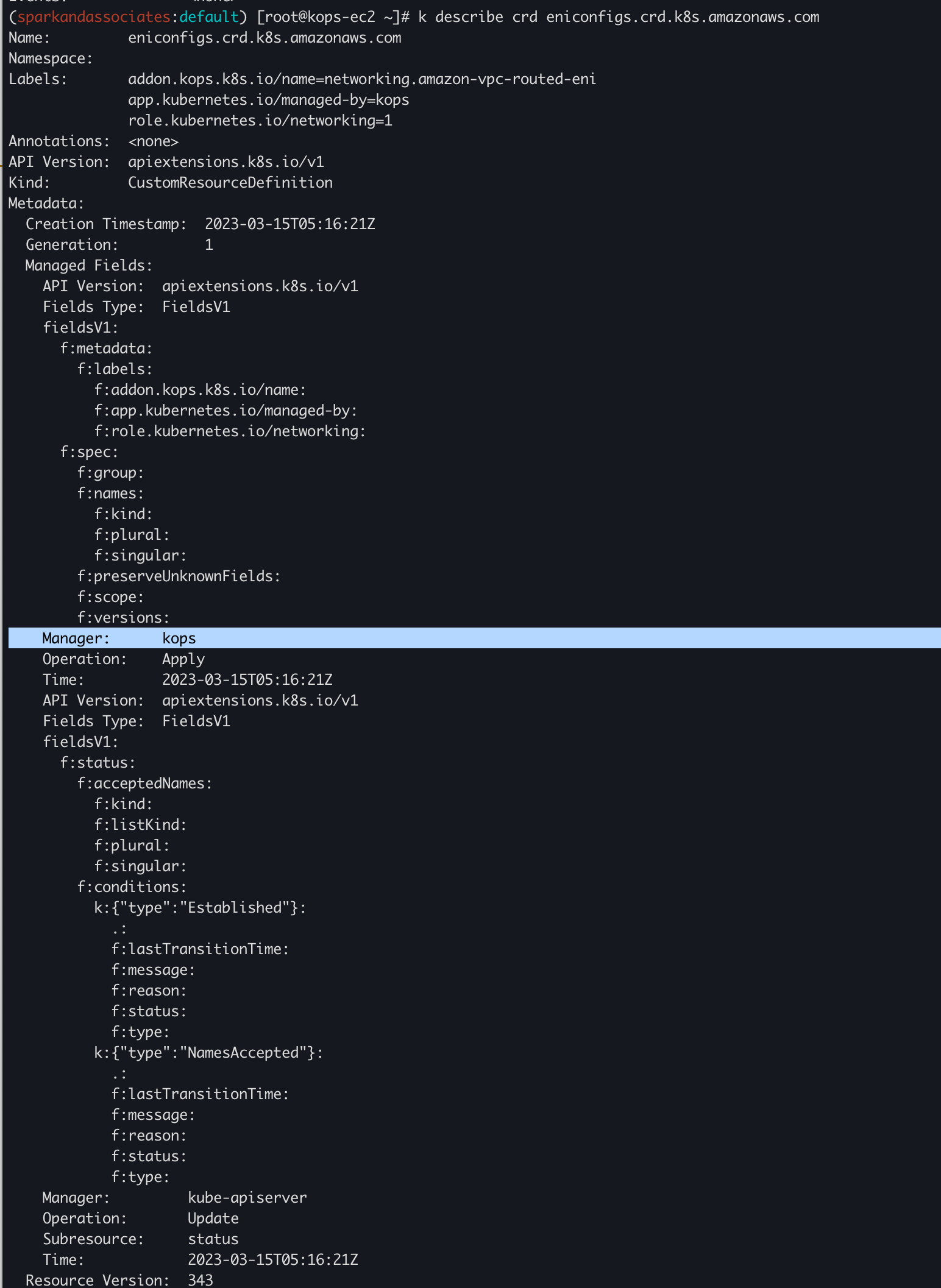

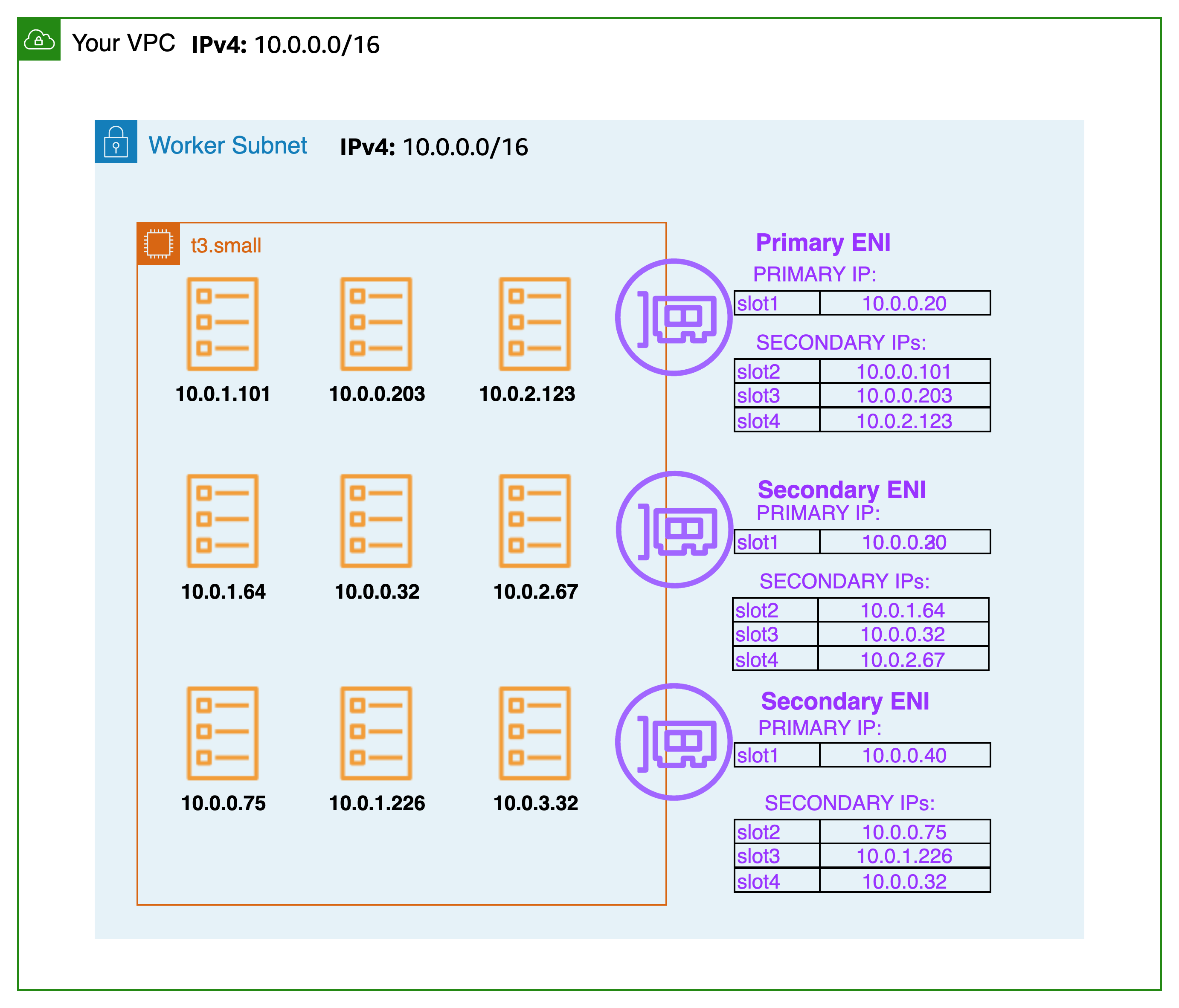

1. Understanding the AWS VPC CNI

The single figure below is enough to grasp what the VPC CNI is.

Source: PKOS study materials

Let's check it ourselves.

# Check CNI information

(sparkandassociates:N/A) [root@kops-ec2 ~]# kubectl describe daemonset aws-node --namespace kube-system | grep Image | cut -d "/" -f 2

amazon-k8s-cni-init:v1.12.2

amazon-k8s-cni:v1.12.2

# Check node IPs

aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table

(sparkandassociates:N/A) [root@kops-ec2 ~]# aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,PrivateIPAdd:PrivateIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value,Status:State.Name}" --filters Name=instance-state-name,Values=running --output table | grep -v 172.31.

----------------------------------------------------------------------------------------------------------------

| DescribeInstances |

+---------------------------------------------------------------+----------------+-----------------+-----------+

| InstanceName | PrivateIPAdd | PublicIPAdd | Status |

+---------------------------------------------------------------+----------------+-----------------+-----------+

| nodes-ap-northeast-2c.sparkandassociates.net | 172.30.66.26 | 15.164.221.63 | running |

| kops-ec2 | 10.0.0.10 | 13.124.35.72 | running |

| control-plane-ap-northeast-2a.masters.sparkandassociates.net | 172.30.63.222 | 13.125.181.109 | running |

| nodes-ap-northeast-2a.sparkandassociates.net | 172.30.32.225 | 3.35.141.26 | running |

+---------------------------------------------------------------+----------------+-----------------+-----------+

# Check pod IPs

kubectl get pod -n kube-system -o=custom-columns=NAME:.metadata.name,IP:.status.podIP,STATUS:.status.phase

# Check pod names

kubectl get pod -A -o name

# Check the number of pods

kubectl get pod -A -o name | wc -l

kubectl ktop # pod information takes a while to appear

Connect to the master node and check

# Install tools

sudo apt install -y tree jq net-tools

# Check CNI information

ls /var/log/aws-routed-eni

cat /var/log/aws-routed-eni/plugin.log | jq

cat /var/log/aws-routed-eni/ipamd.log | jq

# Check network information: eniY is the host end of a veth pair into the pod's network namespace

ip -br -c addr

ip -c addr

ip -c route

sudo iptables -t nat -S

sudo iptables -t nat -L -n -v

ubuntu@i-08a4f488be204357c:~$ ip -br -c addr

lo UNKNOWN 127.0.0.1/8 ::1/128

ens5 UP 172.30.63.222/19 fe80::d1:a1ff:febf:2b56/64

nodelocaldns DOWN 169.254.20.10/32

eni6d8cdfa2db1@if3 UP fe80::70f8:9fff:fe74:618d/64

eni95ee4851614@if3 UP fe80::e0fe:b9ff:fe1f:f5aa/64

enib6e94747ace@if3 UP fe80::5017:fdff:fe5a:24f6/64

enibafb7cbc19f@if3 UP fe80::b45f:96ff:febf:bd42/64

ubuntu@i-08a4f488be204357c:~$ ip -c route

default via 172.30.32.1 dev ens5 proto dhcp src 172.30.63.222 metric 100

172.30.32.0/19 dev ens5 proto kernel scope link src 172.30.63.222

172.30.32.1 dev ens5 proto dhcp scope link src 172.30.63.222 metric 100

172.30.56.192 dev eni6d8cdfa2db1 scope link

172.30.56.193 dev eni95ee4851614 scope link

172.30.56.194 dev enib6e94747ace scope link

172.30.56.195 dev enibafb7cbc19f scope link

Let's connect to the worker nodes and check them the same way.

# Check the worker nodes' public IPs

aws ec2 describe-instances --query "Reservations[*].Instances[*].{PublicIPAdd:PublicIpAddress,InstanceName:Tags[?Key=='Name']|[0].Value}" --filters Name=instance-state-name,Values=running --output table

# Set the worker node public IPs as variables

W1PIP=15.164.221.63

W2PIP=3.35.141.26

# [Worker nodes 1-2] SSH in: after connecting, install the tools below and check the information on each node

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP

--------------------------------------------------

# Install tools

sudo apt install -y tree jq net-tools

# Check CNI information

ls /var/log/aws-routed-eni

cat /var/log/aws-routed-eni/plugin.log | jq

cat /var/log/aws-routed-eni/ipamd.log | jq

# Check network information

ip -br -c addr

ip -c addr

ip -c route

sudo iptables -t nat -S

sudo iptables -t nat -L -n -v

2. Checking basic network information

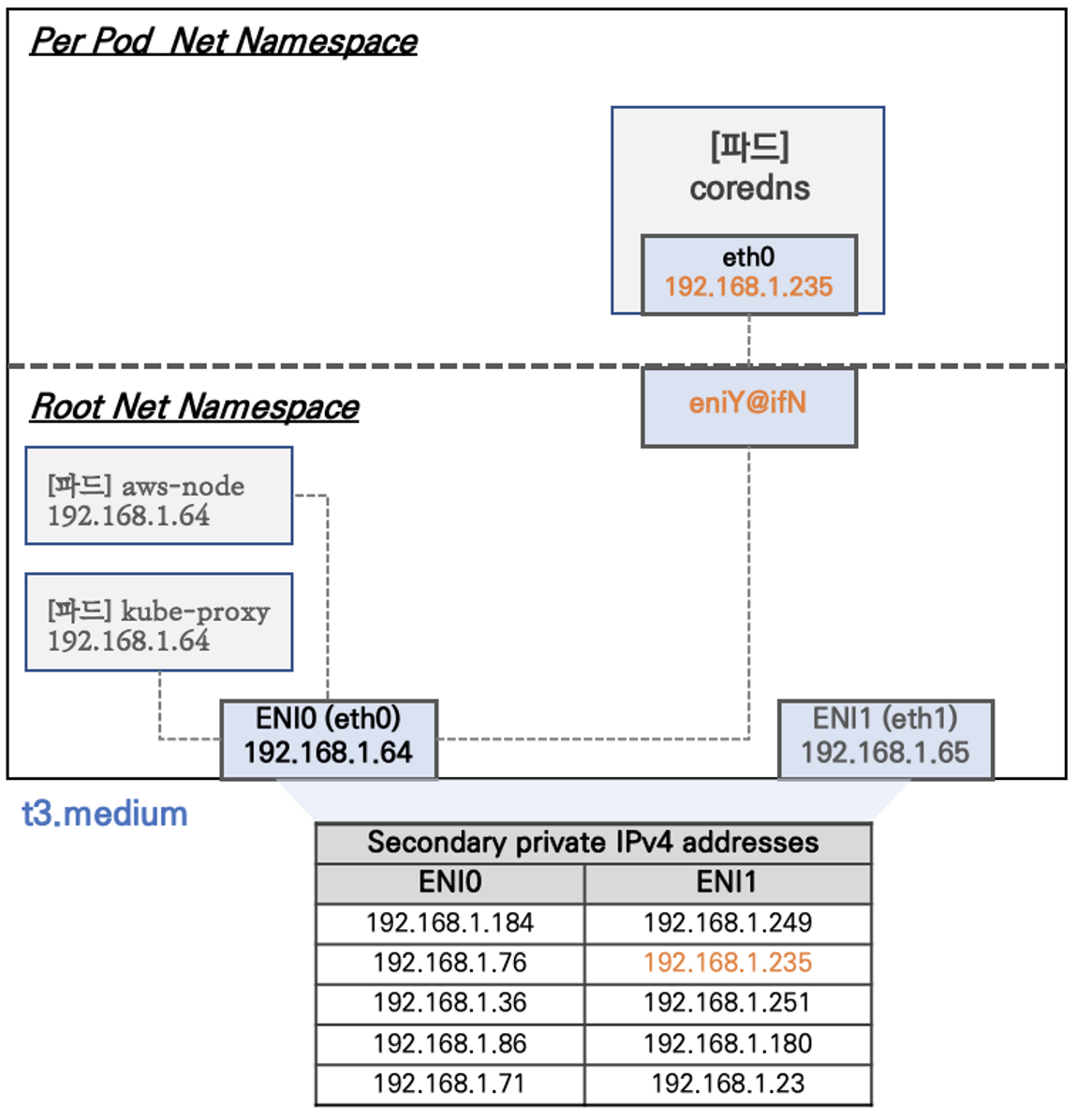

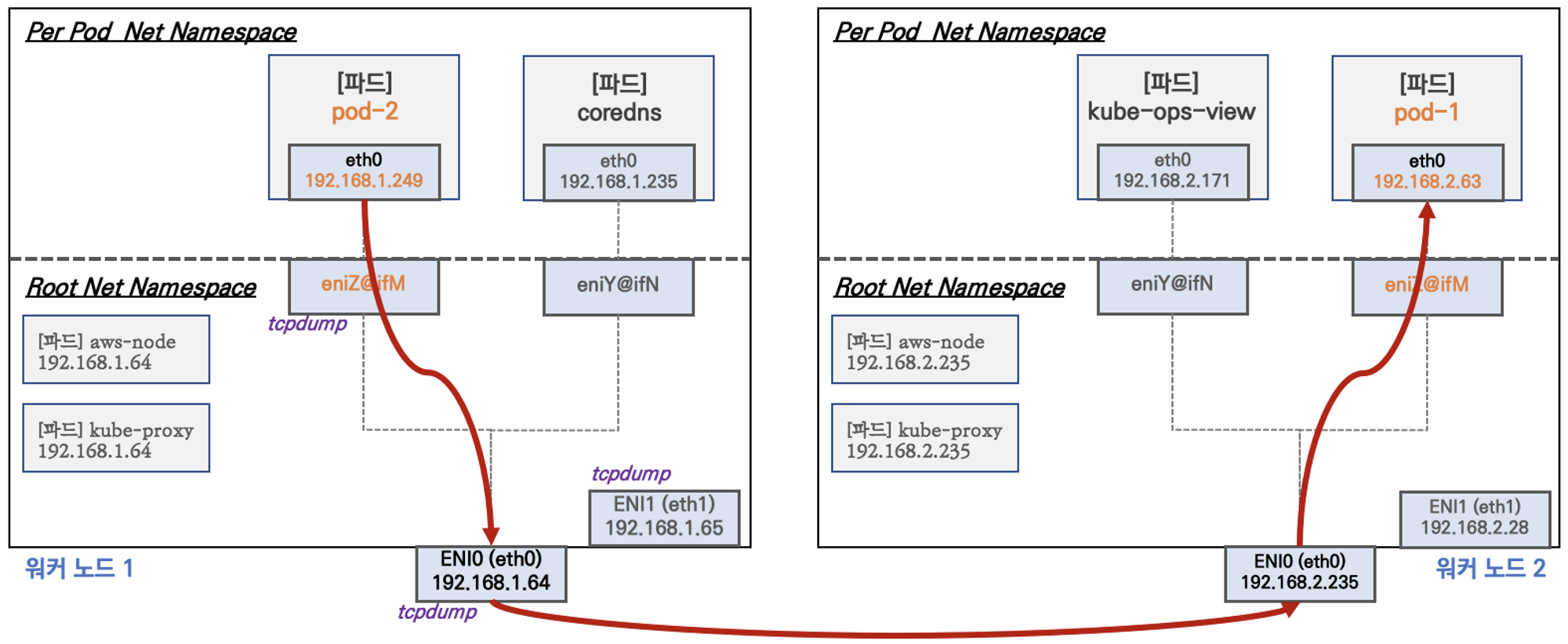

- Network namespaces are split into the host (root) namespace and one per pod

- Certain pods (kube-proxy, aws-node) use the host (root) IP as is

- On t3.medium, each ENI can hold up to 6 IPs

- The two ENIs, ENI0 and ENI1, can each hold 5 secondary private IPs in addition to their own IP

- The coredns pod is connected by a veth pair: the eniY@ifN interface on the host and eth0 in the pod

Checking IP addresses

(sparkandassociates:N/A) [root@kops-ec2 ~]# kubectl get pod -n kube-system -l app=ebs-csi-node -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ebs-csi-node-7gd5l 3/3 Running 0 19h 172.30.56.192 i-08a4f488be204357c <none> <none>

ebs-csi-node-jghkn 3/3 Running 0 19h 172.30.94.160 i-05538a0cedc2ceac8 <none> <none>

ebs-csi-node-rz2x7 3/3 Running 0 19h 172.30.60.112 i-0f94a3e2d9abe939f <none> <none>

(sparkandassociates:N/A) [root@kops-ec2 ~]#

ubuntu@i-05538a0cedc2ceac8:~$ route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.30.64.1 0.0.0.0 UG 100 0 0 ens5

172.30.64.0 0.0.0.0 255.255.224.0 U 0 0 0 ens5

172.30.64.1 0.0.0.0 255.255.255.255 UH 100 0 0 ens5

172.30.88.64 0.0.0.0 255.255.255.255 UH 0 0 0 eniff5a9530b8d

172.30.94.160 0.0.0.0 255.255.255.255 UH 0 0 0 enif8808f94e33

172.30.94.161 0.0.0.0 255.255.255.255 UH 0 0 0 eni4122c9c8c4f

172.30.94.162 0.0.0.0 255.255.255.255 UH 0 0 0 eni942832c30bf

172.30.94.163 0.0.0.0 255.255.255.255 UH 0 0 0 eni3e424b82595

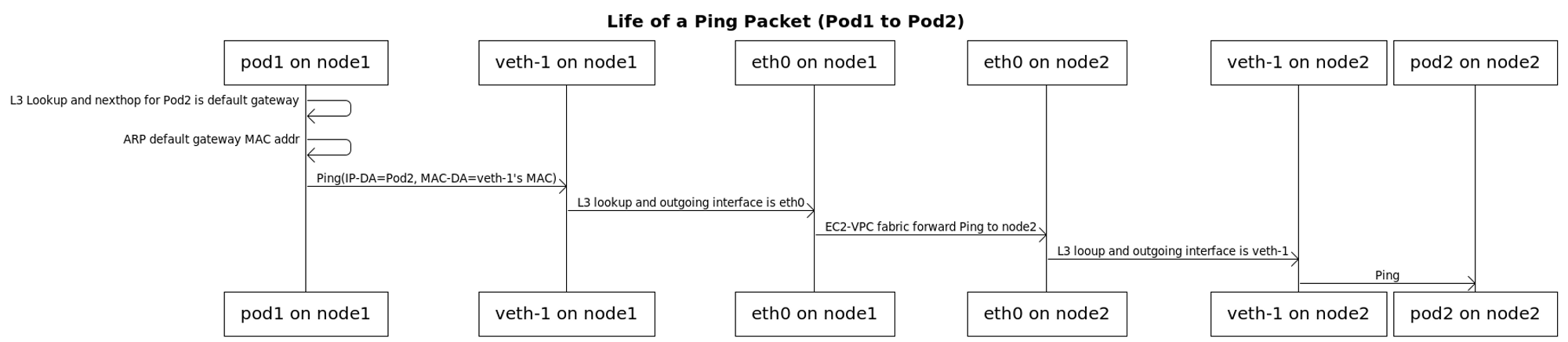

3. 노드 간 파드 통신

( 출처 : https://github.com/aws/amazon-vpc-cni-k8s/blob/master/docs/cni-proposal.md)

VPC CNI 는 VPC 내부에서 POD 네트워크 대역과 워커 노드 대역이 같으므로 별도 오버레이 통신 없이 직접 통신 가능하다.

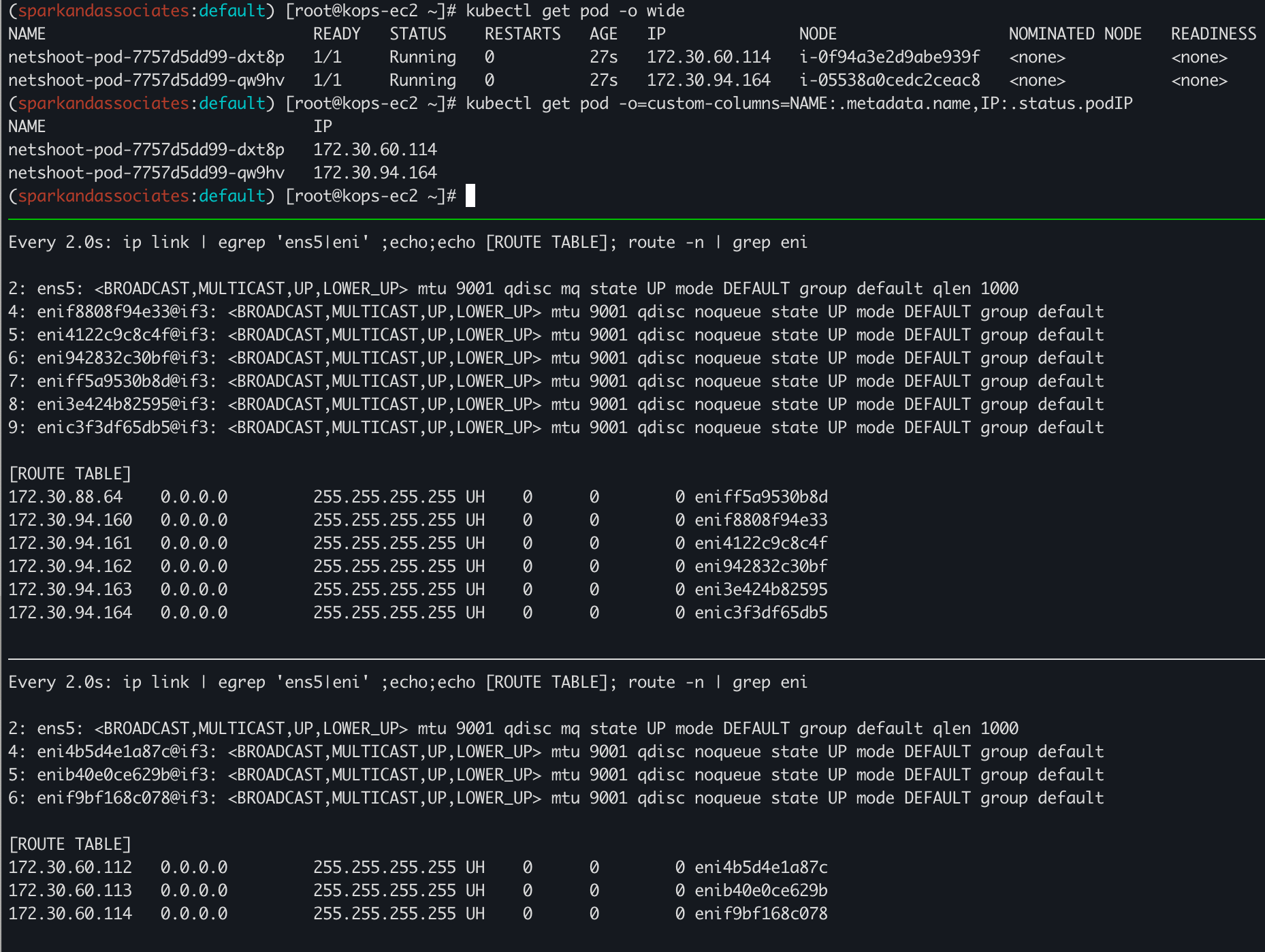

눈으로 확인해보자. 테스트 파드 생성 - nicolaka/netshoot

# [터미널1~2] 워커 노드 1~2 모니터링

ssh -i ~/.ssh/id_rsa ubuntu@$W1PIP

watch -d "ip link | egrep 'ens5|eni'; echo; echo '[ROUTE TABLE]'; route -n | grep eni"

ssh -i ~/.ssh/id_rsa ubuntu@$W2PIP

watch -d "ip link | egrep 'ens5|eni'; echo; echo '[ROUTE TABLE]'; route -n | grep eni"

# Create the netshoot-pod test pods

cat <<EOF | kubectl create -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: netshoot-pod
spec:
  replicas: 2
  selector:
    matchLabels:
      app: netshoot-pod
  template:
    metadata:
      labels:
        app: netshoot-pod
    spec:
      containers:
      - name: netshoot-pod
        image: nicolaka/netshoot
        command: ["tail"]
        args: ["-f", "/dev/null"]
      terminationGracePeriodSeconds: 0
EOF

# Set pod names as variables

PODNAME1=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[0].metadata.name}')

PODNAME2=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[1].metadata.name}')

# Check the pods

kubectl get pod -o wide

kubectl get pod -o=custom-columns=NAME:.metadata.name,IP:.status.podIP

When a pod is created, an eniY@ifN interface is added and an entry is added to the routing table.

The MTU is set to 9001 (jumbo frames).

Checking the AWS MTU

You can see that an eniY@ifN interface has been added.

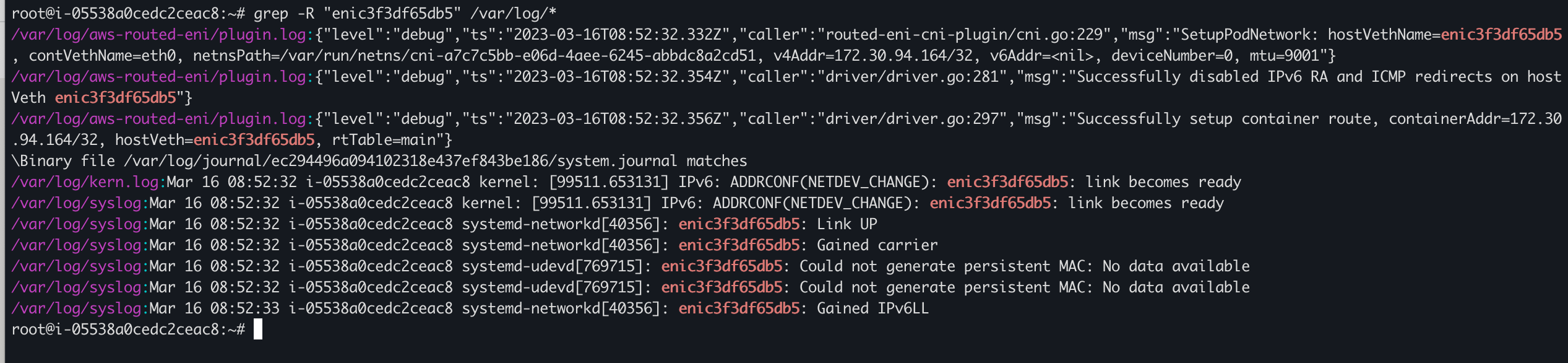

Let's find the process that writes the aws-routed-eni logs. That process creates the CNI interfaces and updates the routing table.

The k8s cluster has several AWS-related pods; let's take a look at the aws-node-* pods.

(sparkandassociates:default) [root@kops-ec2 ~]# k get pod -A -o wide | grep aws

kube-system aws-cloud-controller-manager-hr2qz 1/1 Running 0 27h 172.30.63.222 i-08a4f488be204357c <none> <none>

kube-system aws-load-balancer-controller-55bd49cfc7-5kq7q 1/1 Running 0 27h 172.30.63.222 i-08a4f488be204357c <none> <none>

kube-system aws-node-9d9h7 1/1 Running 0 27h 172.30.63.222 i-08a4f488be204357c <none> <none>

kube-system aws-node-lv8gm 1/1 Running 0 27h 172.30.66.26 i-05538a0cedc2ceac8 <none> <none>

kube-system aws-node-r5g6c 1/1 Running 0 27h 172.30.32.225 i-0f94a3e2d9abe939f <none> <none>

(sparkandassociates:default) [root@kops-ec2 ~]# kubectl get ds -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

aws-cloud-controller-manager 1 1 1 1 1 <none> 2d

aws-node 3 3 3 3 3 <none> 2d

ebs-csi-node 3 3 3 3 3 kubernetes.io/os=linux 2d

kops-controller 1 1 1 1 1 <none> 2d

node-local-dns 3 3 3 3 3 <none> 2d

You can find it by inspecting the aws-node daemonset.

The grpc-health-probe code used for liveness and readiness checks

https://github.com/aws/amazon-vpc-cni-k8s/blob/master/cmd/grpc-health-probe/main.go

The aws-vpc-cni code

https://github.com/aws/amazon-vpc-cni-k8s/blob/master/cmd/aws-vpc-cni/main.go

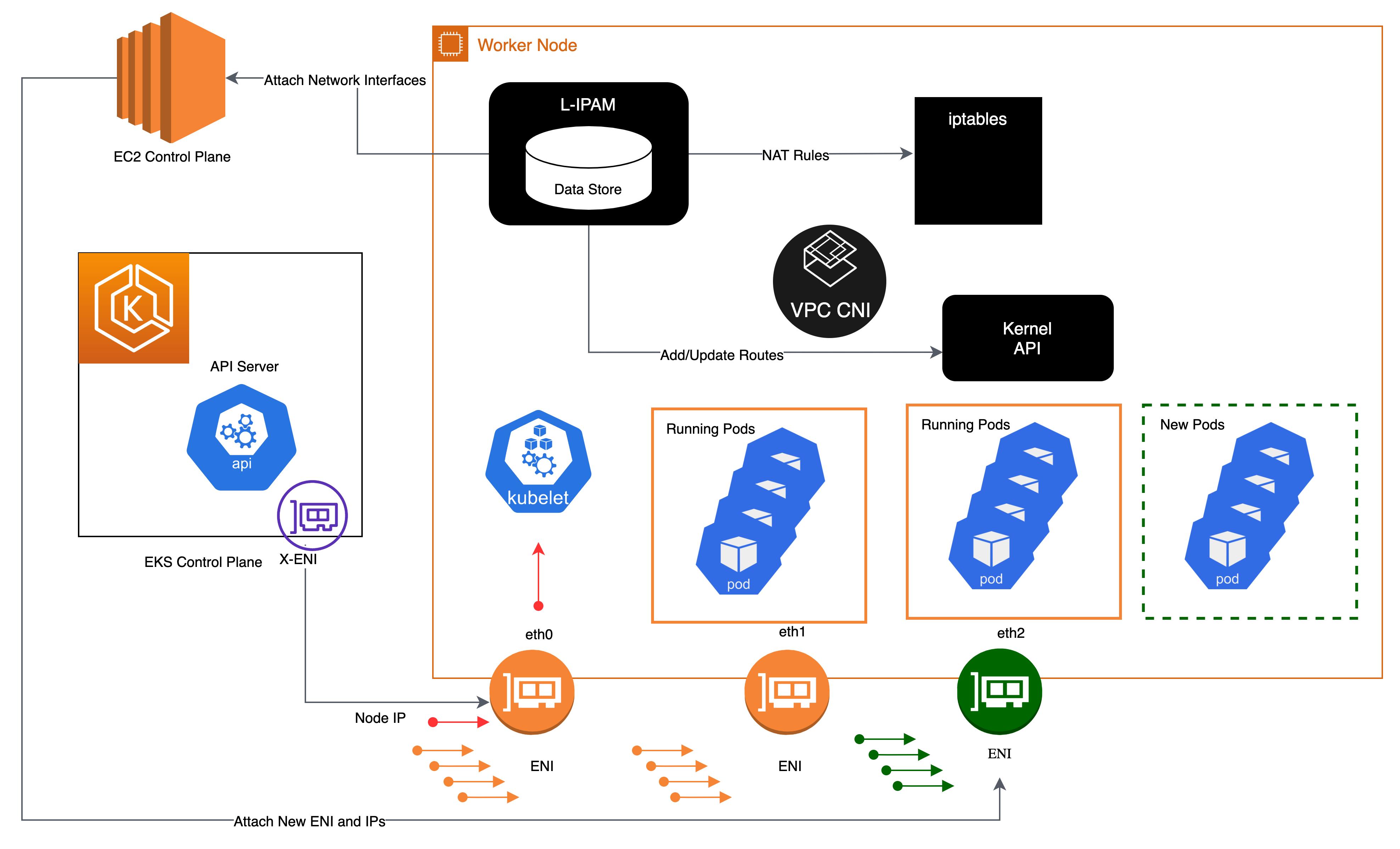

The VPC ENIs appear to be controlled through the eniconfigs.crd.k8s.amazonaws.com CRD (CustomResourceDefinition).

About the VPC CNI

https://aws.github.io/aws-eks-best-practices/networking/vpc-cni/

In any case, we now have a rough idea of the characteristics of a k8s cluster deployed with kops.

Pod-to-pod communication test

# Set pod IPs as variables

PODIP1=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[0].status.podIP}')

PODIP2=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[1].status.podIP}')

PODNAME1=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[0].metadata.name}')

PODNAME2=$(kubectl get pod -l app=netshoot-pod -o jsonpath='{.items[1].metadata.name}')

# Ping pod 2 from the pod 1 shell

kubectl exec -it $PODNAME1 -- ping -c 2 $PODIP2

# Ping pod 1 from the pod 2 shell

kubectl exec -it $PODNAME2 -- ping -c 2 $PODIP1

(sparkandassociates:default) [root@kops-ec2 ~]# kubectl exec -it $PODNAME1 -- ping -c 2 $PODIP2

PING 172.30.94.164 (172.30.94.164) 56(84) bytes of data.

64 bytes from 172.30.94.164: icmp_seq=1 ttl=62 time=1.12 ms

64 bytes from 172.30.94.164: icmp_seq=2 ttl=62 time=1.04 ms

--- 172.30.94.164 ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 1.035/1.076/1.117/0.041 ms

(sparkandassociates:default) [root@kops-ec2 ~]# ^C

(sparkandassociates:default) [root@kops-ec2 ~]# kubectl exec -it $PODNAME2 -- ping -c 2 $PODIP1

PING 172.30.60.114 (172.30.60.114) 56(84) bytes of data.

64 bytes from 172.30.60.114: icmp_seq=1 ttl=62 time=1.03 ms

64 bytes from 172.30.60.114: icmp_seq=2 ttl=62 time=1.02 ms

# Worker node EC2: check with tcpdump

sudo tcpdump -i any -nn icmp

sudo tcpdump -i ens5 -nn icmp

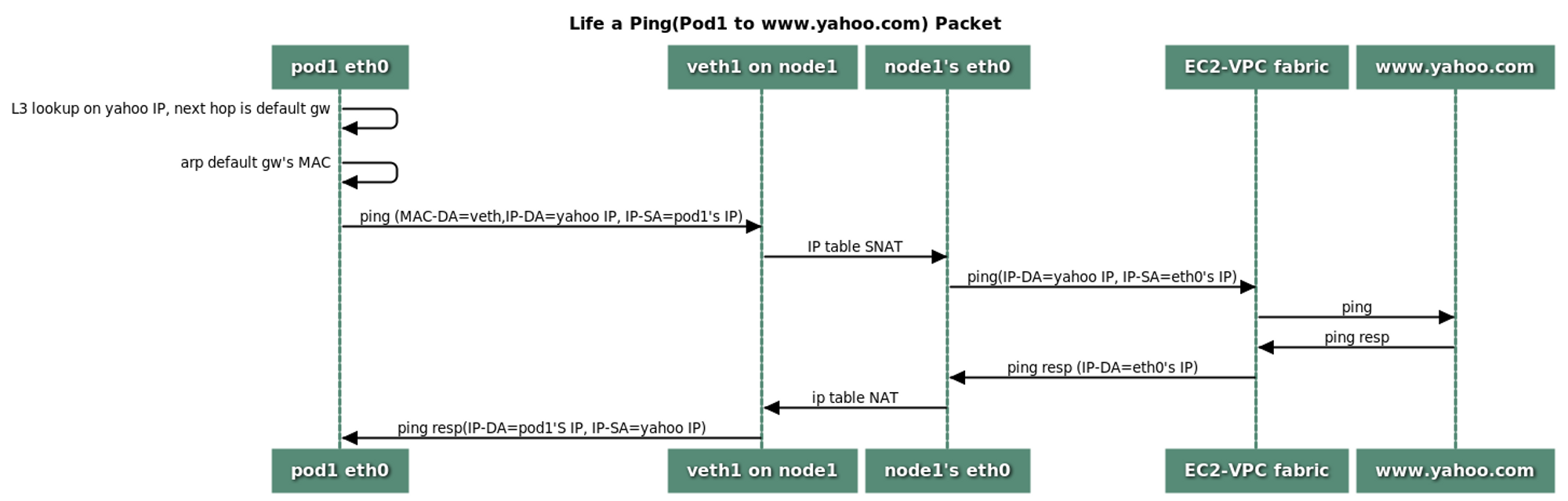

4. Pod communication with the outside

Traffic flow: iptables SNAT rewrites the source to the node interface IP before the traffic leaves

(Reference: https://github.com/aws/amazon-vpc-cni-k8s/blob/master/docs/cni-proposal.md )

# Working EC2: ping the outside from the pod-1 shell

kubectl exec -it $PODNAME1 -- ping -c 1 www.google.com

kubectl exec -it $PODNAME1 -- ping -i 0.1 www.google.com

# Worker node EC2: check the public IP and check with tcpdump

curl -s ipinfo.io/ip ; echo

sudo tcpdump -i any -nn icmp

sudo tcpdump -i ens5 -nn icmp

(sparkandassociates:default) [root@kops-ec2 ~]# kubectl exec -it $PODNAME2 -- ping -c 1 www.google.com

PING www.google.com (142.250.196.100) 56(84) bytes of data.

64 bytes from nrt12s35-in-f4.1e100.net (142.250.196.100): icmp_seq=1 ttl=45 time=26.4 ms

--- www.google.com ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 26.374/26.374/26.374/0.000 ms

(sparkandassociates:default) [root@kops-ec2 ~]#

───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

root@i-05538a0cedc2ceac8:~#

root@i-05538a0cedc2ceac8:~#

root@i-05538a0cedc2ceac8:~# sudo tcpdump -i any -nn icmp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on any, link-type LINUX_SLL (Linux cooked v1), capture size 262144 bytes

06:35:55.774315 IP 172.30.94.164 > 142.250.196.100: ICMP echo request, id 63106, seq 1, length 64

06:35:55.774339 IP 172.30.66.26 > 142.250.196.100: ICMP echo request, id 21114, seq 1, length 64

06:35:55.800666 IP 142.250.196.100 > 172.30.66.26: ICMP echo reply, id 21114, seq 1, length 64

06:35:55.800682 IP 142.250.196.100 > 172.30.94.164: ICMP echo reply, id 63106, seq 1, length 64

# The pod IP (172.30.94.164) is SNATed to the node IP (172.30.66.26) for this traffic.

# When a pod talks to the outside, it is SNATed by the 'AWS-SNAT-CHAIN-0, AWS-SNAT-CHAIN-1' rules as shown below.

root@i-05538a0cedc2ceac8:~# sudo iptables -t nat -S | grep 'A AWS-SNAT-CHAIN'

-A AWS-SNAT-CHAIN-0 ! -d 172.30.0.0/16 -m comment --comment "AWS SNAT CHAIN" -j AWS-SNAT-CHAIN-1

-A AWS-SNAT-CHAIN-1 ! -o vlan+ -m comment --comment "AWS, SNAT" -m addrtype ! --dst-type LOCAL -j SNAT --to-source 172.30.66.26 --random-fully

root@i-05538a0cedc2ceac8:~#

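# Note that the trailing IP is the address of the node's primary interface (eth0, the first ENI; it shows up as ens5 on these nodes)

# How --random-fully works - link 1, link 2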

sudo iptables -t nat -S | grep 'A AWS-SNAT-CHAIN'

-A AWS-SNAT-CHAIN-0 ! -d 172.30.0.0/16 -m comment --comment "AWS SNAT CHAIN" -j AWS-SNAT-CHAIN-1

-A AWS-SNAT-CHAIN-1 ! -o vlan+ -m comment --comment "AWS, SNAT" -m addrtype ! --dst-type LOCAL -j SNAT --to-source 172.30.85.242 --random-fully

## Below, the packet does not carry mark 0x4000/0x4000, so it hits RETURN!

-A KUBE-POSTROUTING -m mark ! --mark 0x4000/0x4000 -j RETURN

-A KUBE-POSTROUTING -j MARK --set-xmark 0x4000/0x0

-A KUBE-POSTROUTING -m comment --comment "kubernetes service traffic requiring SNAT" -j MASQUERADE --random-fully

...

# Check the counters

Every 2.0s: sudo iptables -v --numeric --table nat --list AWS-SNAT-CHAIN-0; echo ; sudo iptables -v --numeric --table nat --list ... i-05538a0cedc2ceac8: Fri Mar 17 06:40:43 2023

Chain AWS-SNAT-CHAIN-0 (1 references)

pkts bytes target prot opt in out source destination

264K 16M AWS-SNAT-CHAIN-1 all -- * * 0.0.0.0/0 !172.30.0.0/16 /* AWS SNAT CHAIN */

Chain AWS-SNAT-CHAIN-1 (1 references)

pkts bytes target prot opt in out source destination

33030 1983K SNAT all -- * !vlan+ 0.0.0.0/0 0.0.0.0/0 /* AWS, SNAT */ ADDRTYPE match dst-type !LOCAL to:172.30.66.26 random-fully

Chain KUBE-POSTROUTING (1 references)

pkts bytes target prot opt in out source destination

2973 185K RETURN all -- * * 0.0.0.0/0 0.0.0.0/0 mark match ! 0x4000/0x4000

0 0 MARK all -- * * 0.0.0.0/0 0.0.0.0/0 MARK xor 0x4000

0 0 MASQUERADE all -- * * 0.0.0.0/0 0.0.0.0/0 /* kubernetes service traffic requiring SNAT */ random-fully

# Check conntrack

sudo conntrack -L -n |grep -v '169.254.169'

conntrack v1.4.5 (conntrack-tools): 24 flow entries have been shown.

icmp 1 24 src=172.30.94.164 dst=142.251.42.132 type=8 code=0 id=33110 src=142.251.42.132 dst=172.30.66.26 type=0 code=0 id=29865 mark=128 use=1

tcp 6 102 TIME_WAIT src=172.30.94.164 dst=142.251.42.132 sport=60882 dport=80 src=142.251.42.132 dst=172.30.66.26 sport=80 dport=18054 [ASSURED] mark=128 use=1
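The decision made by `AWS-SNAT-CHAIN-0` (`! -d 172.30.0.0/16`) boils down to a CIDR membership test on the destination address. The sketch below reproduces only that test in plain bash; the function names are illustrative, and it deliberately ignores the other match conditions in the real rules (`! -o vlan+` and `! --dst-type LOCAL`).

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 if <ip> is inside <cidr>, 1 otherwise.
in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Simplified version of "! -d <vpc cidr> ... -j SNAT":
# only traffic whose destination is outside the VPC gets SNATed.
needs_snat() {
  local dst=$1 vpc_cidr=$2
  ! in_cidr "$dst" "$vpc_cidr"
}

# Destination addresses taken from the ping tests in this post
needs_snat 142.250.196.100 172.30.0.0/16 && echo "SNAT to node IP"
needs_snat 172.30.60.114 172.30.0.0/16 || echo "no SNAT (stays inside the VPC)"
```

This matches what tcpdump showed: the ping to www.google.com left with the node IP as source, while pod-to-pod pings kept the pod IPs.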

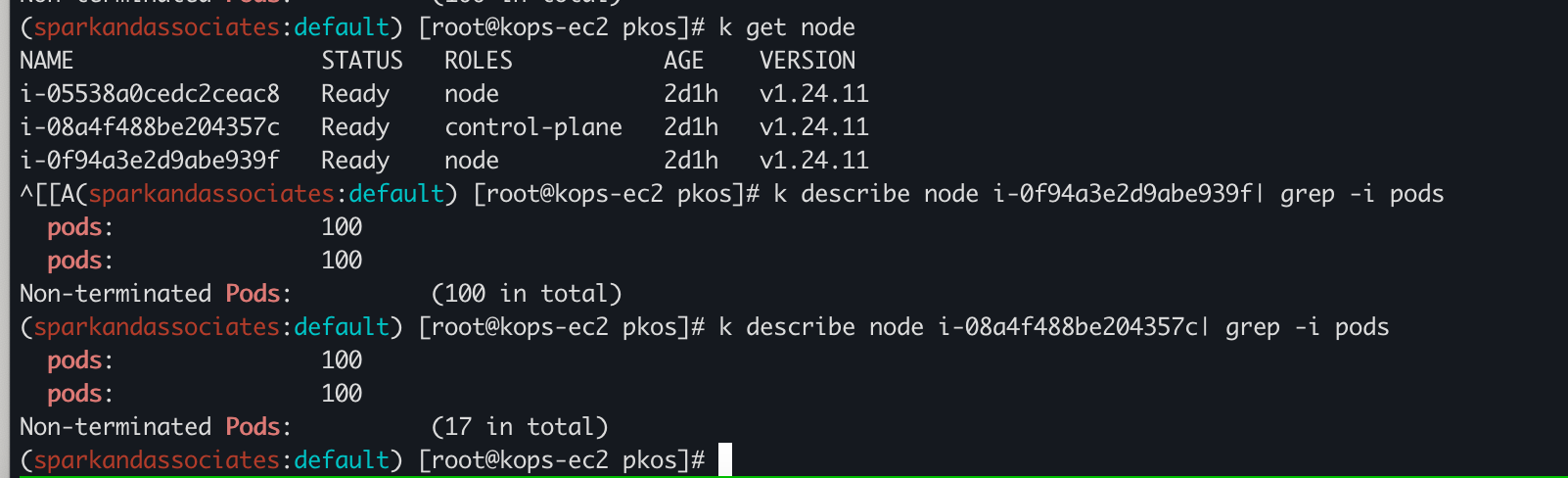

5. Limit on the number of pods per node

# Check t3 instance type information (filtered)

[root@san-1 yaml]# aws ec2 describe-instance-types --filters Name=instance-type,Values=t3.* \

> --query "InstanceTypes[].{Type: InstanceType, MaxENI: NetworkInfo.MaximumNetworkInterfaces, IPv4addr: NetworkInfo.Ipv4AddressesPerInterface}" \

> --output table

--------------------------------------

| DescribeInstanceTypes |

+----------+----------+--------------+

| IPv4addr | MaxENI | Type |

+----------+----------+--------------+

| 15 | 4 | t3.2xlarge |

| 15 | 4 | t3.xlarge |

| 12 | 3 | t3.large |

| 6 | 3 | t3.medium |

| 2 | 2 | t3.nano |

| 2 | 2 | t3.micro |

| 4 | 3 | t3.small |

+----------+----------+--------------+

# Example of calculating usable pods: aws-node and kube-proxy use host networking, so they do not consume pod IPs (hence the + 2)

((MaxENI * (IPv4addr-1)) + 2)

For t3.medium: ((3 * (6 - 1)) + 2) = 17 >> 15 once aws-node and kube-proxy are excluded
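The formula above is easy to check against the `describe-instance-types` table. A minimal sketch follows; the function name is illustrative, and the inputs are the MaxENI and IPv4addr columns from that table.

```shell
#!/usr/bin/env bash
# Max pods per node in secondary-IP mode:
#   MaxENI * (IPv4addr - 1) + 2
# The first address of each ENI is the ENI's own primary IP; the +2 accounts
# for aws-node and kube-proxy, which use host networking.
max_pods_secondary_ip() {
  local max_eni=$1 ipv4_per_eni=$2
  echo $(( max_eni * (ipv4_per_eni - 1) + 2 ))
}

max_pods_secondary_ip 3 6    # t3.medium -> 17
max_pods_secondary_ip 3 4    # t3.small  -> 11
max_pods_secondary_ip 4 15   # t3.xlarge -> 58
```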

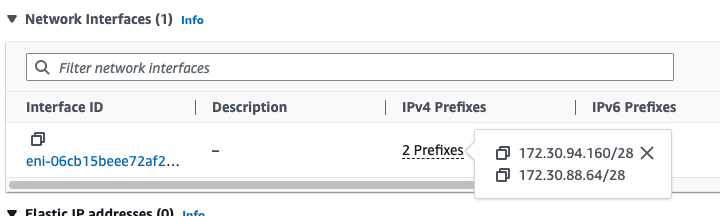

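However, IP prefix delegation has already been enabled, so each worker node is set up to run up to 100 pods.

(IPv4 prefix delegation: /28 IPv4 prefixes are delegated to the node, and the limit is chosen from the number of assignable IPs and the maximum recommended for the instance type)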
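To make the effect of prefix delegation concrete: each secondary-IP slot on an ENI carries a /28 prefix (16 addresses) instead of a single address, so the IP capacity grows by a factor of 16 and the kubelet `maxPods` value becomes the real ceiling. The sketch below is illustrative only; the function names are made up, and the 3 ENIs x 10 addresses used for the worker type is an assumption that should be confirmed with `aws ec2 describe-instance-types`.

```shell
#!/usr/bin/env bash
# Addresses available to pods with prefix delegation:
# every secondary-IP slot carries a /28 prefix (16 addresses).
prefix_delegation_ips() {
  local max_eni=$1 ipv4_per_eni=$2
  echo $(( max_eni * (ipv4_per_eni - 1) * 16 ))
}

# Effective pod limit: the smaller of the IP capacity and kubelet maxPods.
effective_pod_limit() {
  local ip_capacity=$1 max_pods=$2
  echo $(( ip_capacity < max_pods ? ip_capacity : max_pods ))
}

# ASSUMPTION: 3 ENIs x 10 IPv4 addresses per ENI for the worker instance type
capacity=$(prefix_delegation_ips 3 10)
echo "$capacity"                        # 432
effective_pod_limit "$capacity" 100     # 100 (maxPods from kops.yaml wins)
```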

(sparkandassociates:default) [root@kops-ec2 pkos]# kubectl describe daemonsets.apps -n kube-system aws-node | egrep 'ENABLE_PREFIX_DELEGATION|WARM_PREFIX_TARGET'

ENABLE_PREFIX_DELEGATION: true

WARM_PREFIX_TARGET: 1

(sparkandassociates:default) [root@kops-ec2 pkos]# kubectl describe node | grep Allocatable: -A6

Allocatable:

cpu: 2

ephemeral-storage: 119703055367

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3670004Ki

pods: 100

--

Allocatable:

cpu: 2

ephemeral-storage: 59763732382

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3854320Ki

pods: 100

--

Allocatable:

cpu: 2

ephemeral-storage: 119703055367

hugepages-1Gi: 0

hugepages-2Mi: 0

memory: 3698676Ki

pods: 100

# Create 200 pods

(sparkandassociates:default) [root@kops-ec2 ~]# kubectl apply -f ~/pkos/2/nginx-dp.yaml

deployment.apps/nginx-deployment created

(sparkandassociates:default) [root@kops-ec2 ~]# kubectl scale deployment nginx-deployment --replicas=200

deployment.apps/nginx-deployment scaled

(sparkandassociates:default) [root@kops-ec2 pkos]# k get pod | grep Pend| wc -l

13

Each worker node is full at 100 pods, and 13 pods are Pending. (* The worker nodes already had pods running, so the 200-pod ceiling was reached.)

Check the maxPods setting
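The Pending count follows directly from the per-node limit. As a small sketch of the arithmetic (the function name is illustrative, and the figure of 13 pods already running on the two workers is an assumption inferred from the Pending count, not something measured separately):

```shell
#!/usr/bin/env bash
# Number of requested pods that cannot be scheduled once every worker is full.
# Usage: pending_pods <workers> <max_pods_per_node> <pods_already_running> <requested>
pending_pods() {
  local workers=$1 max_pods=$2 existing=$3 requested=$4
  local free=$(( workers * max_pods - existing ))
  echo $(( requested > free ? requested - free : 0 ))
}

# ASSUMPTION: 13 pods were already running on the two workers
pending_pods 2 100 13 200   # prints 13
```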

(sparkandassociates:default) [root@kops-ec2 pkos]# kops edit cluster

...

kubelet:
  anonymousAuth: false
  maxPods: 100

...

Check the instance types

(sparkandassociates:default) [root@kops-ec2 pkos]# kubectl describe nodes | grep "node.kubernetes.io/instance-type"

node.kubernetes.io/instance-type=c5d.large

node.kubernetes.io/instance-type=t3.medium

node.kubernetes.io/instance-type=c5d.large

# List the Nitro instance types

aws ec2 describe-instance-types --filters Name=hypervisor,Values=nitro --query "InstanceTypes[*].[InstanceType]" --output text | sort | egrep 't3\.|c5\.|c5d\.'

Ingress

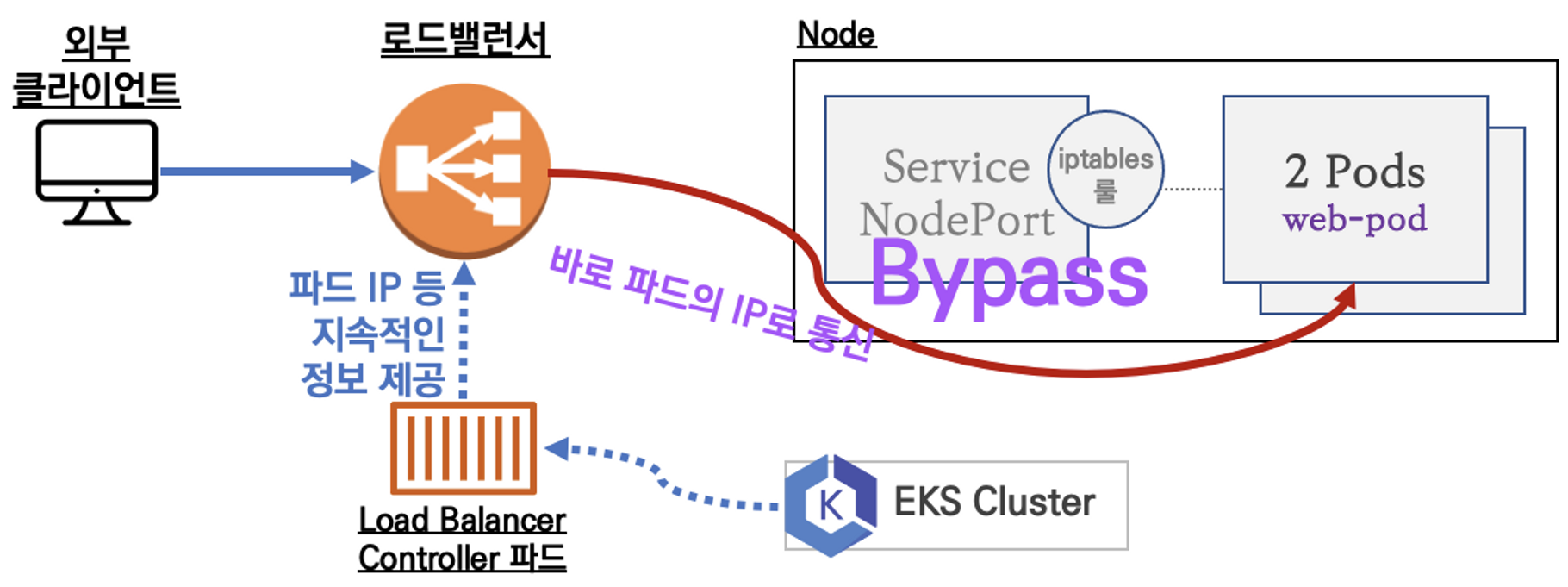

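Service LoadBalancer Controller: AWS Load Balancer Controller + NLB in IP mode, with AWS VPC CNI

(Source: study group materials by 가시다)

Configure the EC2 instance profiles, deploy the AWS Load Balancer Controller, and install and deploy ExternalDNS (already configured in this environment, so this is for reference only)

# Add the policy (AWSLoadBalancerControllerIAMPolicy) to the EC2 IAM roles of the master and worker nodes

## Create the IAM policy: it was already created in week 2, so skip this step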

curl -o iam_policy.json https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.4.5/docs/install/iam_policy.json

aws iam create-policy --policy-name AWSLoadBalancerControllerIAMPolicy --policy-document file://iam_policy.json

# Attach the IAM policy to the EC2 instance profiles

aws iam attach-role-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --role-name masters.$KOPS_CLUSTER_NAME

aws iam attach-role-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AWSLoadBalancerControllerIAMPolicy --role-name nodes.$KOPS_CLUSTER_NAME

# Create the IAM policy for ExternalDNS: it was already created in week 2, so skip this step

curl -s -O https://s3.ap-northeast-2.amazonaws.com/cloudformation.cloudneta.net/AKOS/externaldns/externaldns-aws-r53-policy.json

aws iam create-policy --policy-name AllowExternalDNSUpdates --policy-document file://externaldns-aws-r53-policy.json

# Attach the IAM policy to the EC2 instance profiles

aws iam attach-role-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AllowExternalDNSUpdates --role-name masters.$KOPS_CLUSTER_NAME

aws iam attach-role-policy --policy-arn arn:aws:iam::$ACCOUNT_ID:policy/AllowExternalDNSUpdates --role-name nodes.$KOPS_CLUSTER_NAME
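The attach commands above follow one pattern: the same policy ARN attached to both the `masters.` and `nodes.` roles. A dry-run sketch that only prints the commands is shown below. `attach_cmds` is a hypothetical helper, and the account ID and cluster name in the usage line are placeholders, not values from this lab.

```shell
# Hypothetical helper: print (do not execute) the attach-role-policy commands
# for both kOps roles. $1 = account ID, $2 = cluster name, $3 = policy name.
attach_cmds() (
  for role in masters nodes; do
    echo "aws iam attach-role-policy --policy-arn arn:aws:iam::$1:policy/$3 --role-name ${role}.$2"
  done
)

# Placeholder values for illustration only.
attach_cmds 111122223333 example.cluster.local AllowExternalDNSUpdates
```

In a real session `ACCOUNT_ID` is commonly obtained with `aws sts get-caller-identity --query Account --output text`.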

# Edit the kOps cluster: add the following

kops edit cluster

-----

spec:
  certManager:
    enabled: true
  awsLoadBalancerController:
    enabled: true
  externalDns:
    provider: external-dns
-----

# Apply the update

kops update cluster --yes && echo && sleep 3 && kops rolling-update cluster

Deployment test of a service and pods with Ingress (ALB)

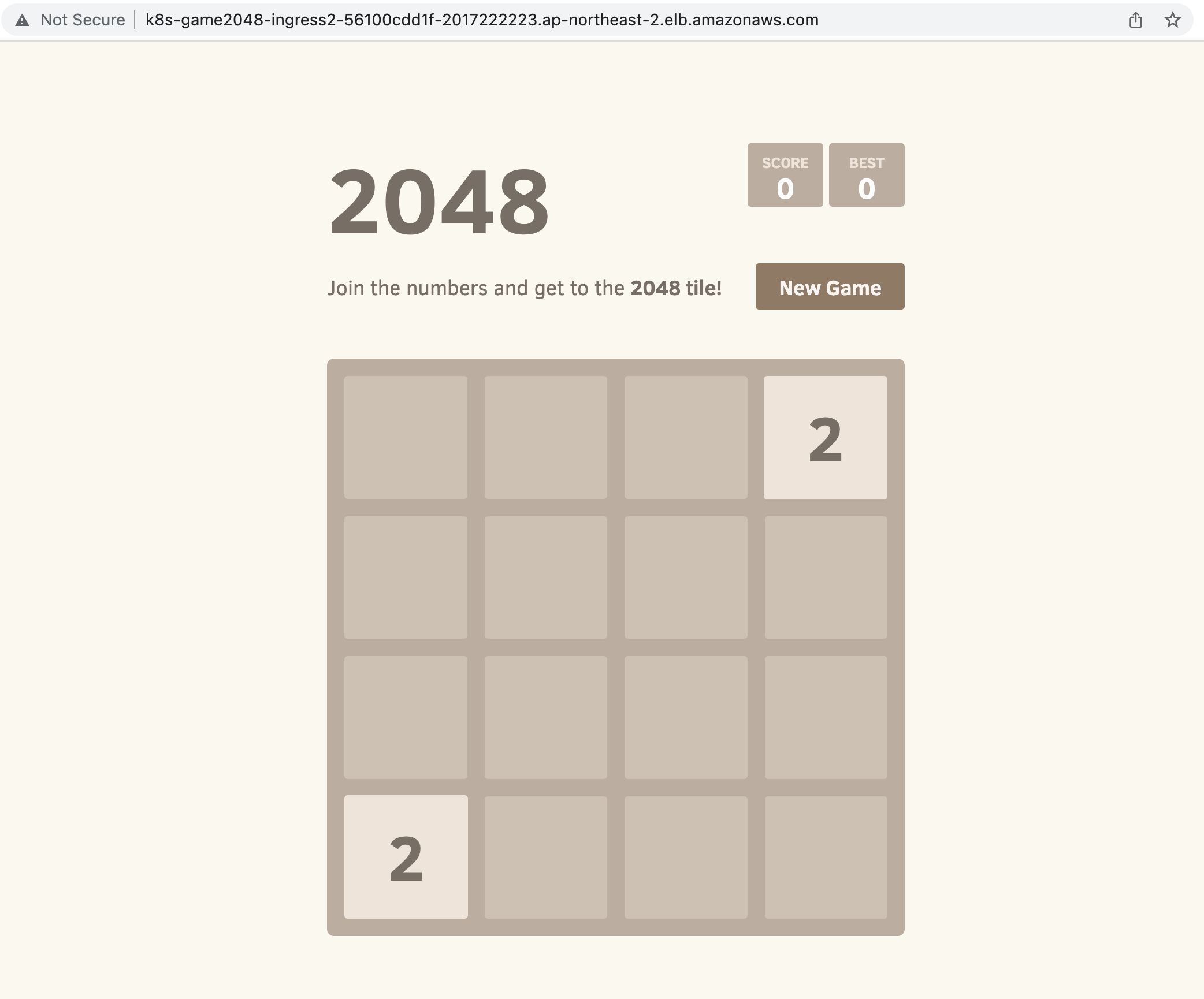

Review the contents of ingress1.yaml.

It creates the "2048" game and its ingress.

apiVersion: v1
kind: Namespace
metadata:
  name: game-2048
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: game-2048
  name: deployment-2048
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: app-2048
  replicas: 2
  template:
    metadata:
      labels:
        app.kubernetes.io/name: app-2048
    spec:
      containers:
      - image: public.ecr.aws/l6m2t8p7/docker-2048:latest
        imagePullPolicy: Always
        name: app-2048
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  namespace: game-2048
  name: service-2048
spec:
  ports:
    - port: 80
      targetPort: 80
      protocol: TCP
  type: NodePort
  selector:
    app.kubernetes.io/name: app-2048
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  namespace: game-2048
  name: ingress-2048
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
    - http:
        paths:
        - path: /
          pathType: Prefix
          backend:
            service:
              name: service-2048
              port:
                number: 80

# Verify that the resources were created

kubectl get-all -n game-2048

kubectl get ingress,svc,ep,pod -n game-2048

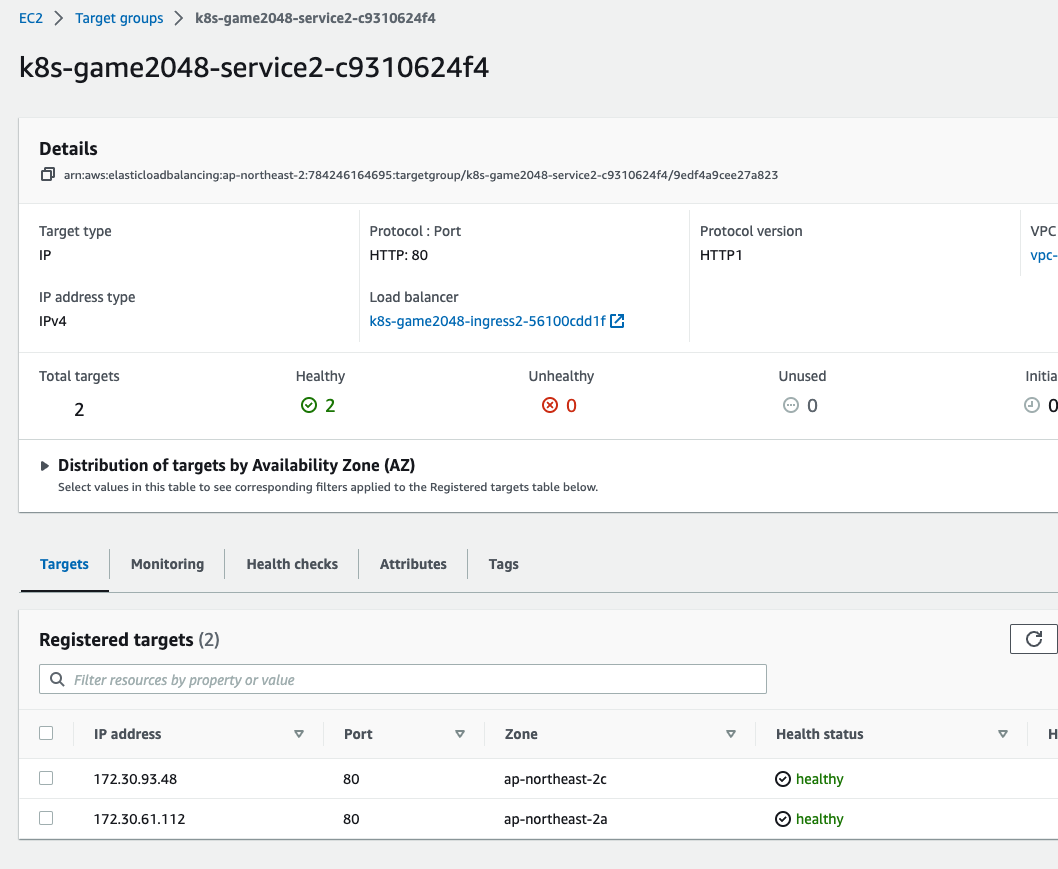

(sparkandassociates:default) [root@kops-ec2 pkos]# kubectl get targetgroupbindings -n game-2048

NAME SERVICE-NAME SERVICE-PORT TARGET-TYPE AGE

k8s-game2048-service2-c9310624f4 service-2048 80 ip 49s

# Check the Ingress

kubectl describe ingress -n game-2048 ingress-2048

(sparkandassociates:default) [root@kops-ec2 pkos]# kubectl describe ingress -n game-2048 ingress-2048

Name: ingress-2048

Labels: <none>

Namespace: game-2048

Address: k8s-game2048-ingress2-56100cdd1f-2017222223.ap-northeast-2.elb.amazonaws.com

Ingress Class: alb

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

*

/ service-2048:80 (172.30.61.112:80,172.30.93.48:80)

Annotations: alb.ingress.kubernetes.io/scheme: internet-facing

alb.ingress.kubernetes.io/target-type: ip

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfullyReconciled 67s ingress Successfully reconciled

# Access the game: open the ALB address in a web browser

kubectl get ingress -n game-2048 ingress-2048 -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' | awk '{ print "Game URL = http://"$1 }'

kubectl logs -n game-2048 -l app.kubernetes.io/name=app-2048

(sparkandassociates:default) [root@kops-ec2 pkos]# kubectl get ingress -n game-2048 ingress-2048 -o jsonpath={.status.loadBalancer.ingress[0].hostname} | awk '{ print "Game URL = http://"$1 }'

Game URL = http://k8s-game2048-ingress2-56100cdd1f-2017222223.ap-northeast-2.elb.amazonaws.com

# Check the pod IPs

(sparkandassociates:default) [root@kops-ec2 pkos]# kubectl get pod -n game-2048 -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deployment-2048-6bc9fd6bf5-9q9rj 1/1 Running 0 3m24s 172.30.61.112 i-0f94a3e2d9abe939f <none> <none>

deployment-2048-6bc9fd6bf5-q79sp 1/1 Running 0 3m24s 172.30.93.48 i-05538a0cedc2ceac8 <none> <none>

The same pod IPs can be seen under EC2 > Target Groups in the console.

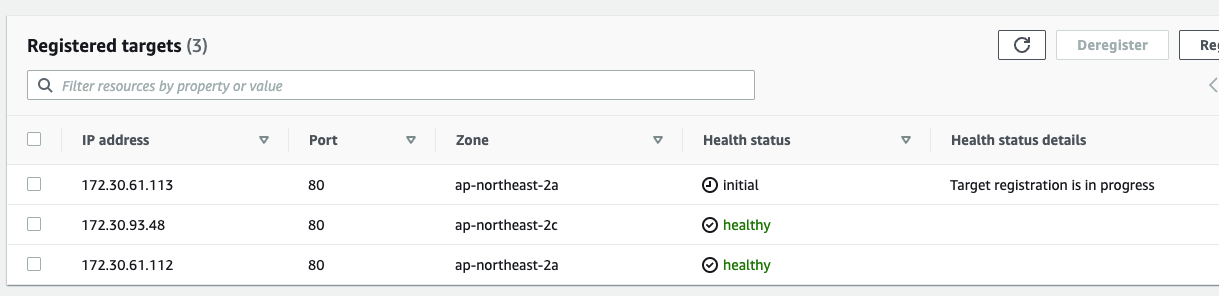

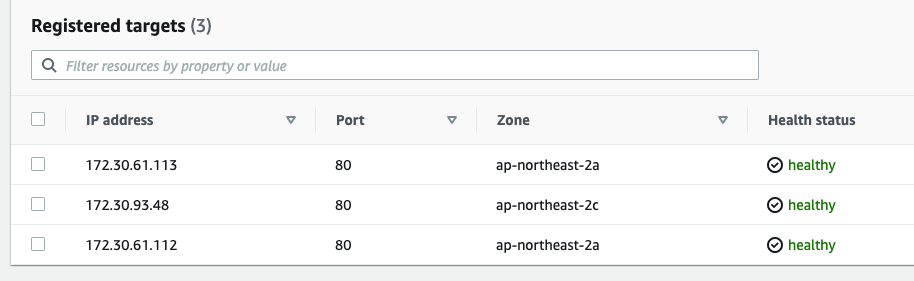

(sparkandassociates:default) [root@kops-ec2 pkos]# kubectl scale deployment -n game-2048 deployment-2048 --replicas 3

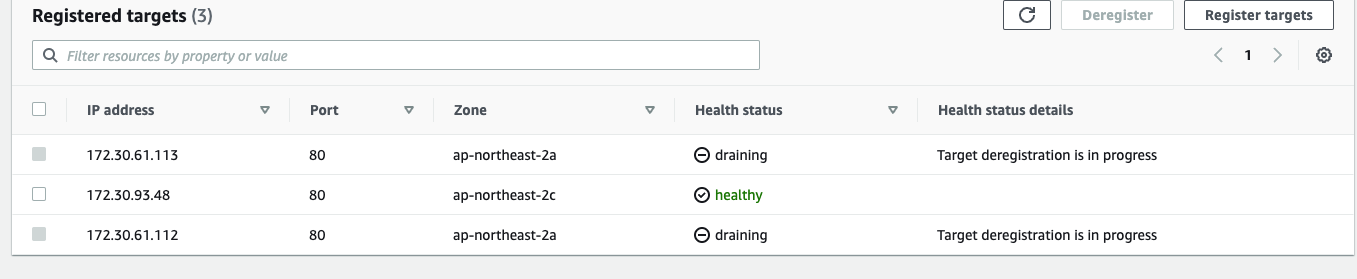

deployment.apps/deployment-2048 scaledpod 개수를 3개로 늘리면 수초내 ALB에 반영됨. (So cool...)

반대로 pod을 1개로 줄이면 그 즉시 타겟 그룹에서 draining 됨. (soooooo cool)

ExternalDNS configuration

# No DNS record is registered yet, so the address is the ELB hostname.

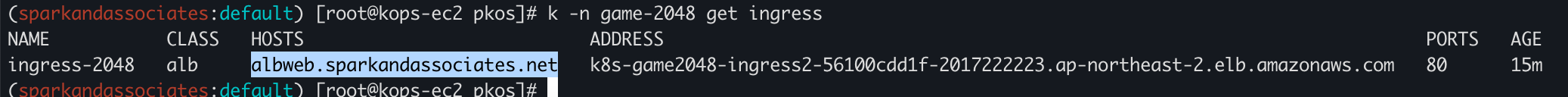

(sparkandassociates:default) [root@kops-ec2 pkos]# k -n game-2048 get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-2048 alb * k8s-game2048-ingress2-56100cdd1f-2017222223.ap-northeast-2.elb.amazonaws.com 80 11m

# Add a host to the rule.

(sparkandassociates:default) [root@kops-ec2 pkos]# k -n game-2048 edit ingress ingress-2048

...

  ingressClassName: alb
  rules:
  - host: albweb.sparkandassociates.net
    http:
      paths:
      - backend:

...

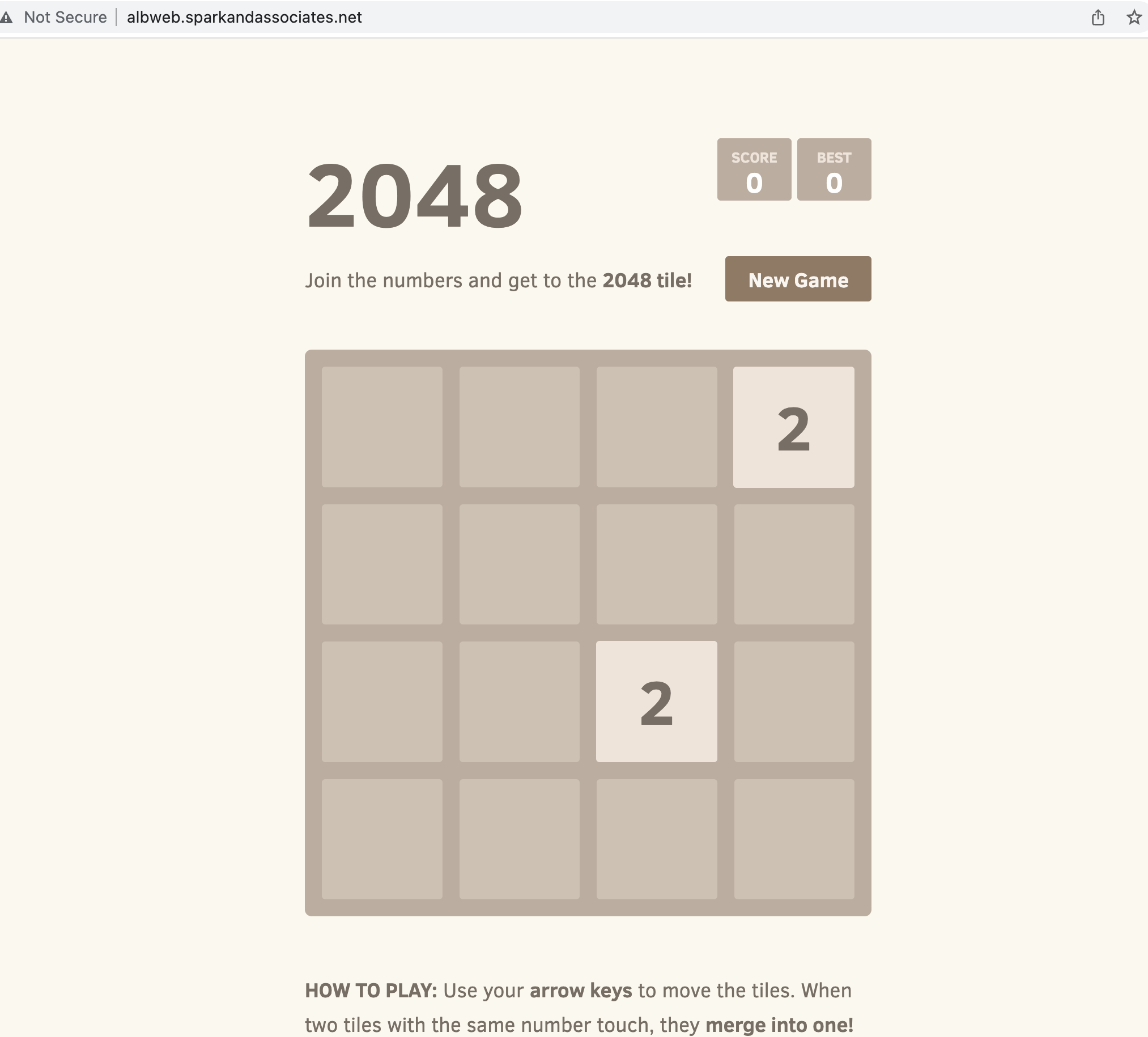

Confirm access through the configured domain.

Checking path-based (URI) routing

Deploy two more games, Tetris and Mario.

cat <<EOF | kubectl create -f -

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tetris
  labels:
    app: tetris
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tetris
  template:
    metadata:
      labels:
        app: tetris
    spec:
      containers:
      - name: tetris
        image: bsord/tetris
---
apiVersion: v1
kind: Service
metadata:
  name: tetris
spec:
  selector:
    app: tetris
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  type: NodePort

EOF

cat <<EOF | kubectl create -f -

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mario
  labels:
    app: mario
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mario
  template:
    metadata:
      labels:
        app: mario
    spec:
      containers:
      - name: mario
        image: pengbai/docker-supermario
---
apiVersion: v1
kind: Service
metadata:
  name: mario
spec:
  selector:
    app: mario
  ports:
  - port: 80
    protocol: TCP
    targetPort: 8080
  type: NodePort
  externalTrafficPolicy: Local
EOF

Create the Ingress

cat <<EOF | kubectl create -f -

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-ps5
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
spec:
  ingressClassName: alb
  rules:
  - host: albps5.sparkandassociates.net
    http:
      paths:
      - path: /mario
        pathType: Prefix
        backend:
          service:
            name: mario
            port:
              number: 80
      - path: /tetris
        pathType: Prefix
        backend:
          service:
            name: tetris
            port:
              number: 80

EOF

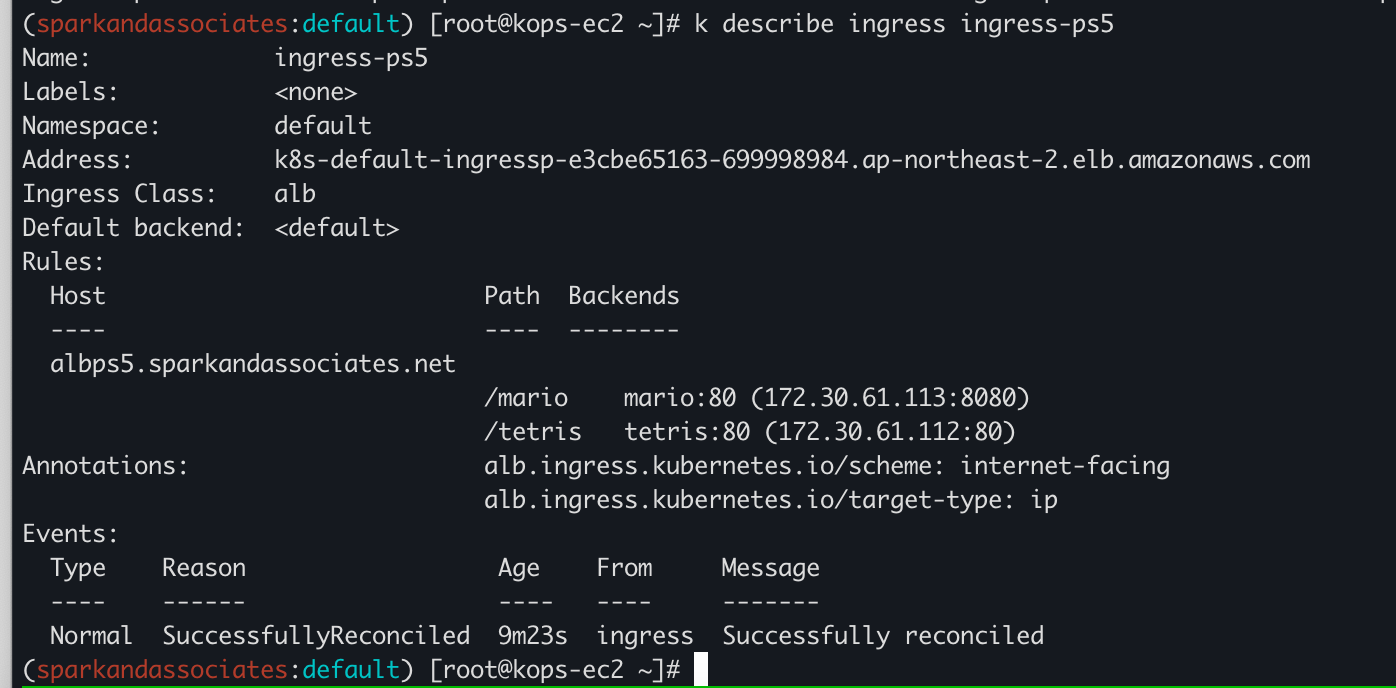

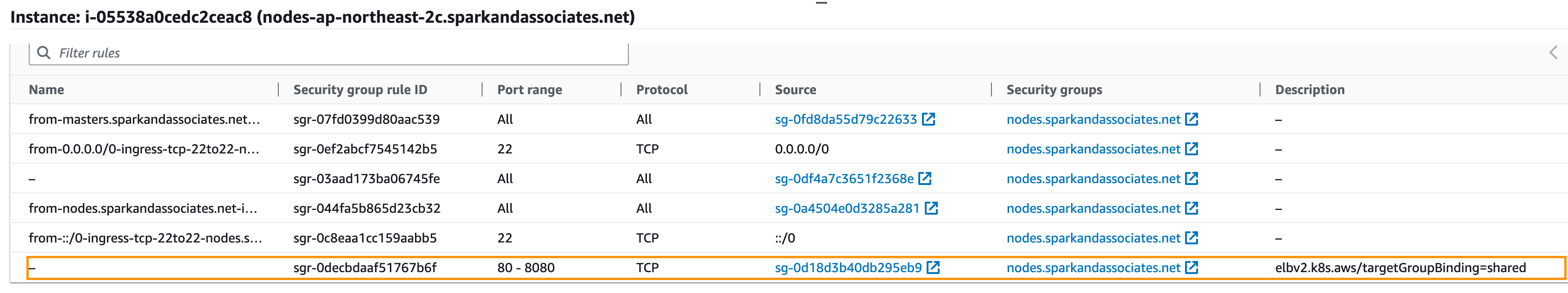

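A rule for ports 80-8080 is added to the EC2 security group, with a description that mentions the ELB target group binding.

Issue an SSL certificate and add the CNAME record to validate the domain; once validated, the certificate can be used.

Attaching the certificate to the ingress is simple.

Put the certificate ARN in the annotations as shown below,

and add the listen ports to the annotations as well.

(Reference: https://guide.ncloud-docs.com/docs/k8s-k8suse-albingress)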

kind: Ingress

metadata:

annotations:

alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:ap-northeast-2:7842695:certificate/57533b9f3

alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'

alb.ingress.kubernetes.io/scheme: internet-facing

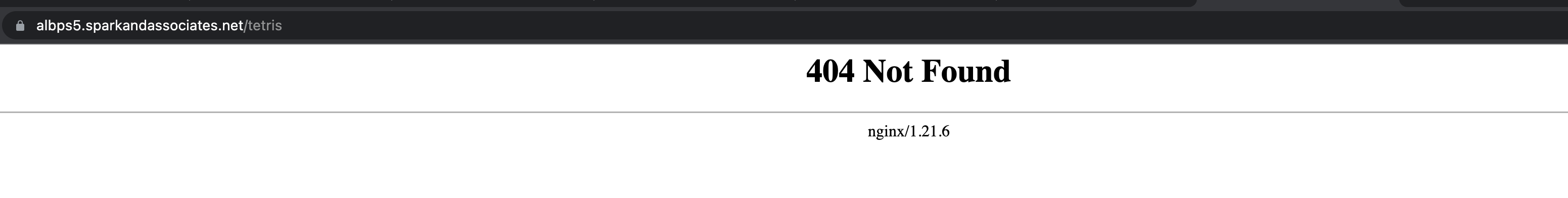

alb.ingress.kubernetes.io/target-type: ip인증서까지 올렸지만 여전히

404에러가 난다.

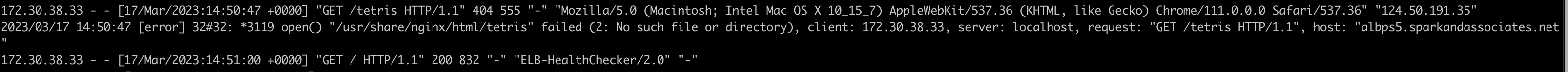

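Check the tetris pod logs.

It is looking for the path /usr/share/nginx/html/tetris?

The intent was for a request to /tetris to reach the / path on port 80 of the tetris endpoint, but the request is forwarded to the /tetris path on the tetris pod instead.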
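To make the 404 concrete, the sketch below contrasts the path the backend receives when the request is forwarded unchanged with the path a prefix-stripping rewrite would have produced. Both function names are hypothetical, and the web root is the nginx path seen in the pod log.

```shell
# Forwarding as observed here: the request path reaches the pod unchanged.
forwarded_path() { printf '%s\n' "$1"; }

# What a prefix-stripping rewrite would produce (not what happened in this setup).
# $1 = request path, $2 = prefix to strip.
rewritten_path() (
  rest=${1#"$2"}
  case $rest in
    /*) ;;
    *)  rest="/$rest" ;;
  esac
  printf '%s\n' "$rest"
)

webroot=/usr/share/nginx/html
echo "forwarded: ${webroot}$(forwarded_path /tetris)"          # path that shows up in the pod log
echo "rewritten: ${webroot}$(rewritten_path /tetris /tetris)"  # path the author intended
```

Since the forwarded path is looked up under the web root as-is, the content has to exist under a `tetris` subdirectory, or the path has to be changed before it reaches the pod.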

alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:ap-northeast-2:784246164695:certificate/57533136-de19-4485-b686-1f85e604b9f3
alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'
alb.ingress.kubernetes.io/scheme: internet-facing
alb.ingress.kubernetes.io/target-type: ip
# custom annotations (redirects, header versioning) (if any):
alb.ingress.kubernetes.io/actions.viewer-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "Path":"/", "Query": "#{query}", "StatusCode": "HTTP_301"}}'

annotations:
  alb.ingress.kubernetes.io/actions.mario: '{"Type":"redirect","RedirectConfig":{"Host":"mario.sparkandassociates.net","Port":"443","Protocol":"HTTPS","Query":"#{query}","path":"/","StatusCode":"HTTP_301"}}'
  alb.ingress.kubernetes.io/actions.tetris: '{"Type":"redirect","RedirectConfig":{"Host":"tetris.sparkandassociates.net","Port":"443","Protocol":"HTTPS","Query":"#{query}","path":"/","StatusCode":"HTTP_301"}}'
  alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:ap-northeast-2:784246164695:certificate/57533136-de19-4485-b686-1f85e604b9f3
  alb.ingress.kubernetes.io/conditions.mario: |
    [{"field":"host-header","hostHeaderConfig":{"values":["mario.sparkandassociates.net"]}}]
  alb.ingress.kubernetes.io/conditions.tetris: |
    [{"field":"host-header","hostHeaderConfig":{"values":["tetris.sparkandassociates.net"]}}]
  alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'
  alb.ingress.kubernetes.io/scheme: internet-facing
  alb.ingress.kubernetes.io/target-type: ip
  external-dns.alpha.kubernetes.io/hostname: mario.sparkandassociates.net,tetris.sparkandassociates.net
spec:
  ingressClassName: alb
  rules:
  - host: albps5.sparkandassociates.net
    http:
      paths:
      - backend:
          service:
            name: mario
            port:
              name: use-annotation
        path: /mario
        pathType: Prefix
      - backend:
          service:
            name: tetris
            port:
              name: use-annotation
        path: /tetris
        pathType: Prefix

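(*Updated March 24)

The ALB ingress does not offer every feature one might expect from an L7 load balancer.

It has no rewrite feature, so I tried a redirect instead, but that did not work properly and I changed approach. (If anyone knows how to do this, please let me know.)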

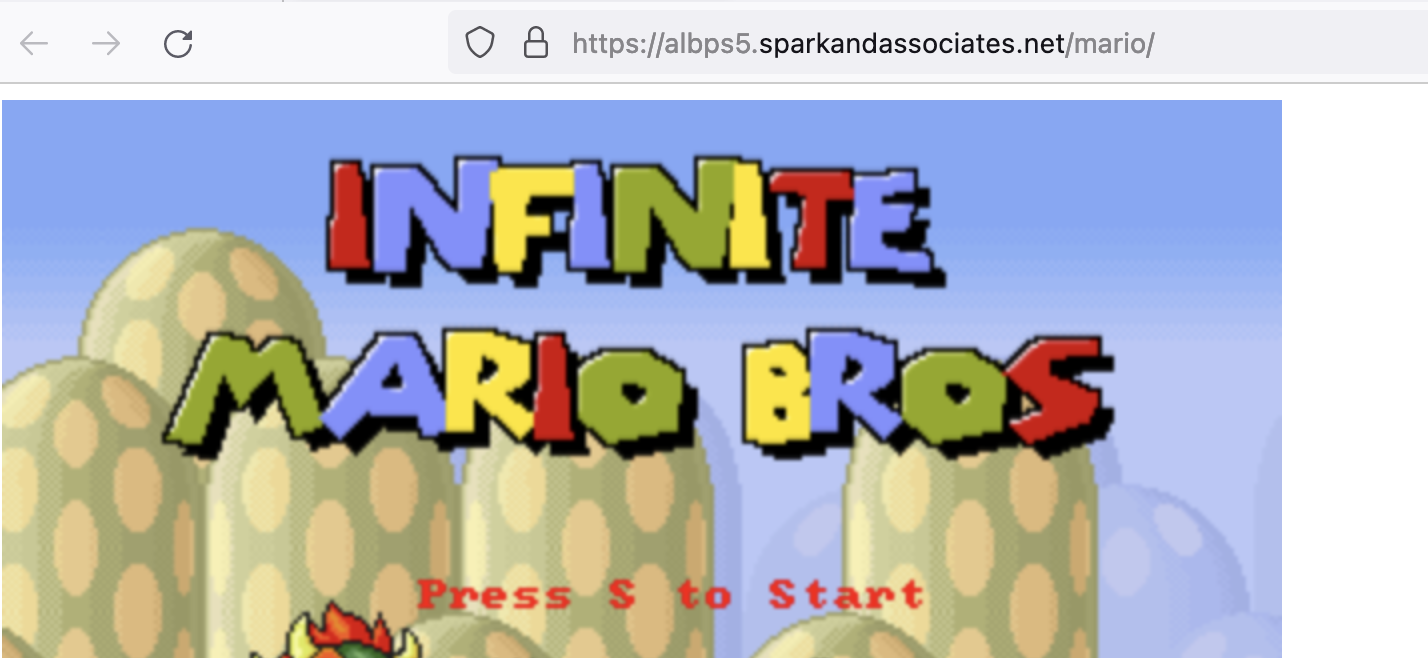

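Make the content available under /mario and /tetris inside each container's web root.

Rather than rebuilding the container images, I decided to run a script through a lifecycle hook once the pod has started.

(Lifecycle reference: https://kubernetes.io/docs/tasks/configure-pod-container/attach-handler-lifecycle-event/ )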
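The idea behind the hook is to copy the web root into a subdirectory named after the path prefix, so that a request for `/tetris` finds content. The sketch below replays that copy in a scratch directory rather than in a real container. The `webroot` directory stands in for `/usr/share/nginx/html`, and the single `index.html` is a made-up placeholder.

```shell
# Scratch directory standing in for the container filesystem (assumption for illustration).
base=$(mktemp -d)
webroot="$base/html"
mkdir -p "$webroot"
echo '<h1>tetris</h1>' > "$webroot/index.html"

# Same three steps as the postStart command: stage a copy next to the web root,
# then move it inside the web root under the path prefix.
prefix=tetris
mkdir -p "$base/$prefix" && cp -R "$webroot"/* "$base/$prefix"
mv "$base/$prefix" "$webroot/$prefix"

ls "$webroot" "$webroot/$prefix"
```

After the move, the original files are still served at `/` and a copy is served at `/tetris`. Staging the copy outside the web root first avoids copying the target directory into itself.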

Modify the mario deployment as follows.

spec:
  containers:
  - image: pengbai/docker-supermario
    imagePullPolicy: Always
    name: mario
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    lifecycle:
      postStart:
        exec:
          command: ["/bin/sh", "-c", "mkdir -p /usr/local/tomcat/webapps/mario && cp -R /usr/local/tomcat/webapps/ROOT/* /usr/local/tomcat/webapps/mario; mv /usr/local/tomcat/webapps/mario /usr/local/tomcat/webapps/ROOT/mario"]

Modify the tetris deployment in the same way.

spec:
  containers:
  - image: bsord/tetris
    imagePullPolicy: Always
    name: tetris
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    lifecycle:
      postStart:
        exec:
          command: ["/bin/sh", "-c", "mkdir -p /usr/share/nginx/tetris && cp -R /usr/share/nginx/html/* /usr/share/nginx/tetris; mv /usr/share/nginx/tetris /usr/share/nginx/html/tetris"]

Verify the result.

Deleting the lab resources

Delete the kOps cluster and the AWS CloudFormation stack

kops delete cluster --yes && aws cloudformation delete-stack --stack-name mykops

DNS records are not removed automatically,

so delete the records that ExternalDNS created in Route 53 by hand.