RMSprop is another algorithm for speeding up gradient descent.

RMSprop stands for Root Mean Square propagation.

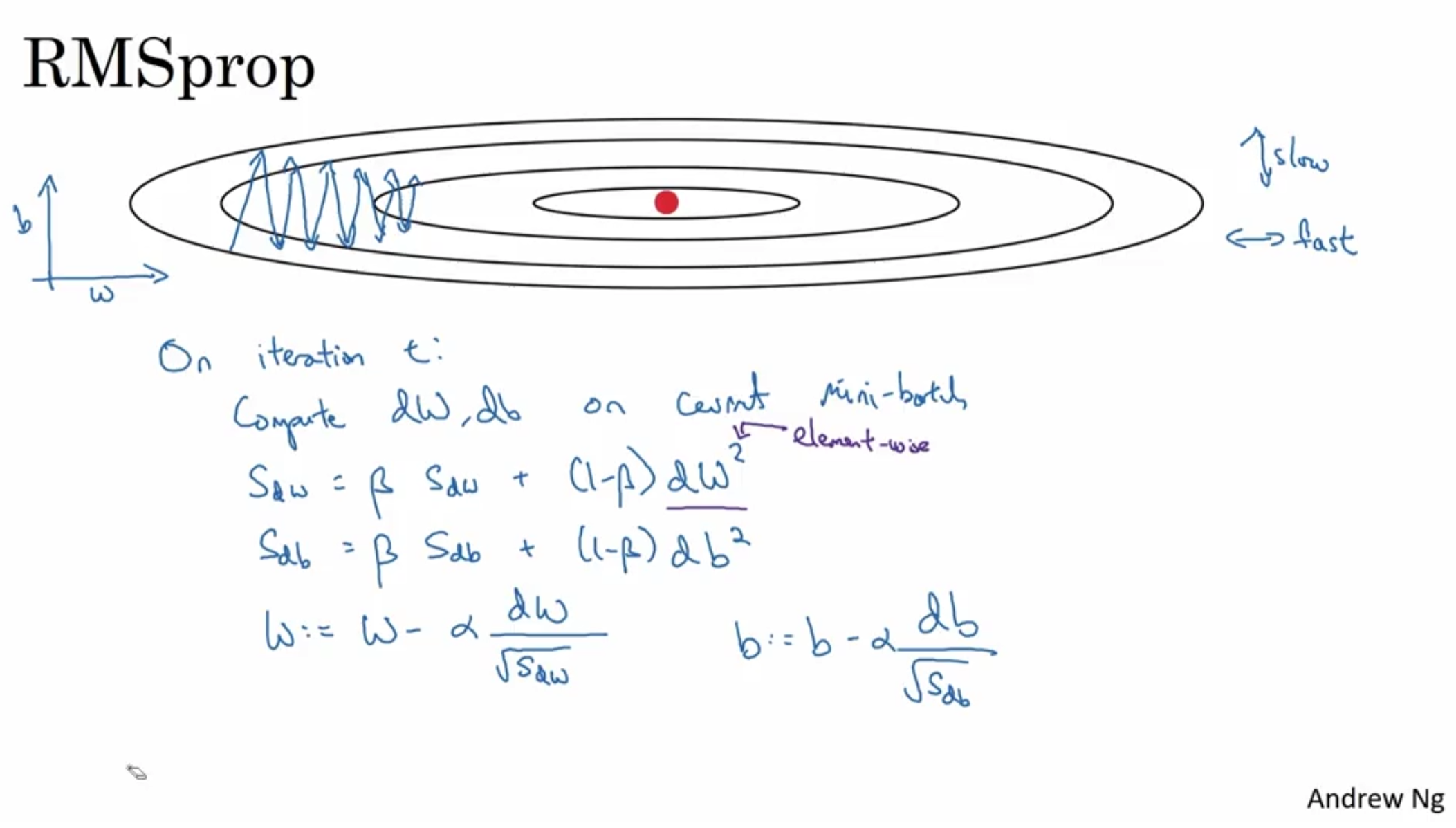

- In this example, suppose w controls movement in the horizontal direction and b controls movement in the vertical direction, which is where gradient descent oscillates.

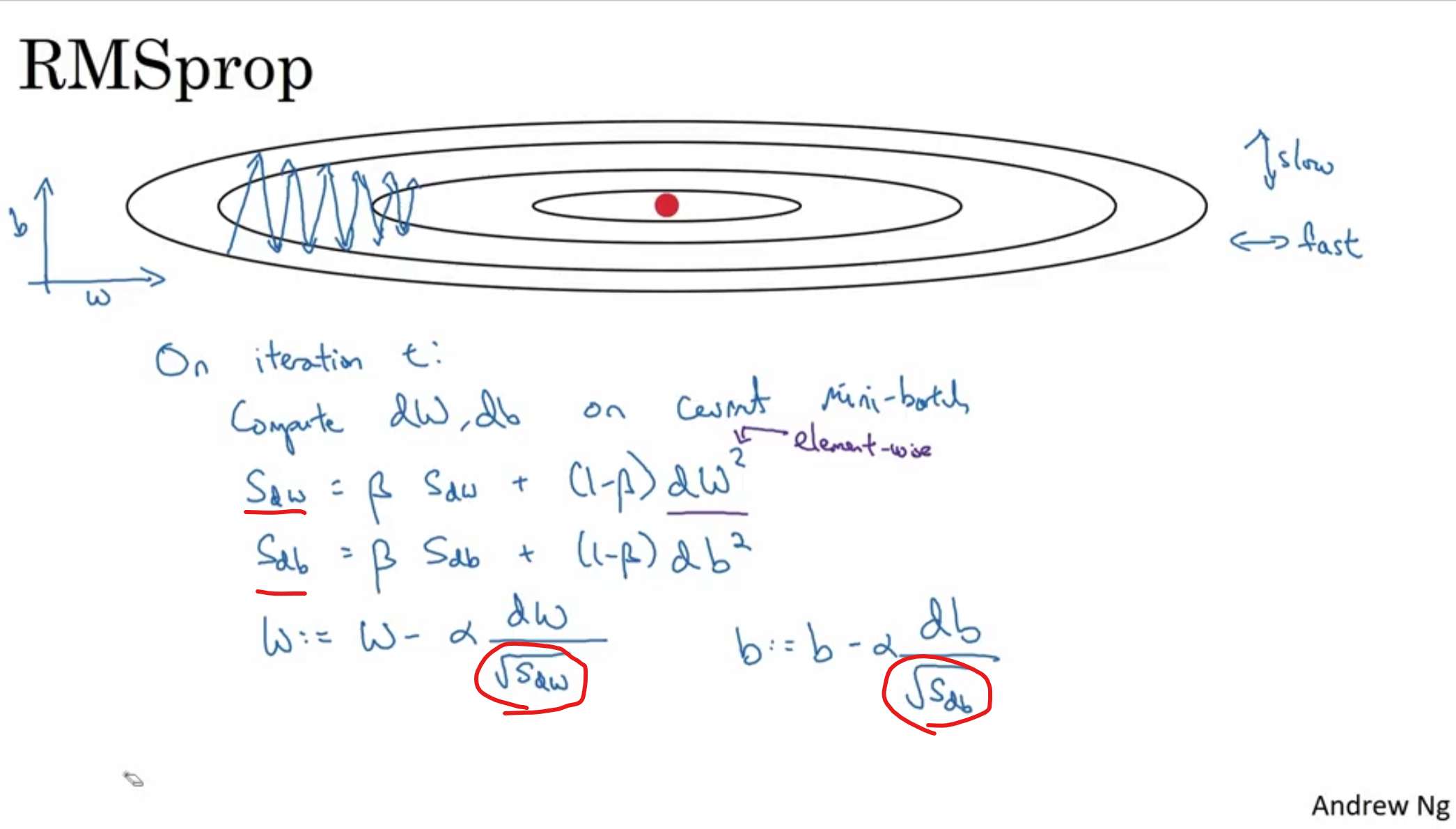

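- On each iteration, compute dw and db on the current mini-batch, then update exponentially weighted averages of their squares (squaring is element-wise): S_dw = β·S_dw + (1 − β)·dw² and S_db = β·S_db + (1 − β)·db².

- Divide each gradient by the square root of its average in the update: w := w − α·dw / (√S_dw + ε) and b := b − α·db / (√S_db + ε), where a small ε (e.g. 10⁻⁸) avoids division by zero. Because the gradients are large in the vertical direction, S_db is relatively large and S_dw is relatively small, so the b update is divided by a large number and the w update by a small one.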

- As a result, the oscillations in b (vertical movement) are damped and progress in w (horizontal movement) speeds up.

- With RMSprop, we can often use a larger learning rate α to speed up learning even more, without diverging in the vertical direction.

※ In practice, the parameters are high-dimensional: the "horizontal" directions might be w1, w2, w3 and the "vertical" ones might be w4, w5, w6 and b.
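The update rule can be sketched in plain Python as a minimal illustration, not a reference implementation. The loss f(w, b) = 0.5·(w² + 25·b²), the helper names `grad`, `gd_step` and `rmsprop`, and the hyperparameter values (β = 0.9, ε = 10⁻⁸) are all assumptions chosen to mimic the elongated contours, with b as the steep, oscillating direction.

```python
import math


def grad(w, b):
    """Gradient of a hypothetical elongated bowl f(w, b) = 0.5 * (w**2 + 25 * b**2).

    The surface is 25x steeper along b than along w, standing in for the
    "vertical" direction where plain gradient descent oscillates.
    """
    return w, 25.0 * b


def gd_step(w, b, lr):
    """One plain gradient descent step, for comparison."""
    dw, db = grad(w, b)
    return w - lr * dw, b - lr * db


def rmsprop(w, b, lr=0.01, beta=0.9, eps=1e-8, steps=100):
    """Run RMSprop for `steps` iterations and return the final (w, b)."""
    s_dw, s_db = 0.0, 0.0  # running averages of the squared gradients
    for _ in range(steps):
        dw, db = grad(w, b)
        # Exponentially weighted averages of the squared gradients.
        s_dw = beta * s_dw + (1 - beta) * dw ** 2
        s_db = beta * s_db + (1 - beta) * db ** 2
        # A large S_db shrinks the b step; a small S_dw leaves the w step
        # relatively larger. eps guards against division by zero.
        w -= lr * dw / (math.sqrt(s_dw) + eps)
        b -= lr * db / (math.sqrt(s_db) + eps)
    return w, b


# Usage: one step from (1, 1) with the same learning rate.
print(gd_step(1.0, 1.0, lr=0.1))           # b overshoots from 1 to -1.5
print(rmsprop(1.0, 1.0, lr=0.1, steps=1))  # w and b both move by about 0.316
```

With lr = 0.1, plain gradient descent moves b by 2.5 in a single step, and since |1 − 0.1·25| = 1.5 > 1 it diverges along b. RMSprop's first step moves w and b by the same amount, about 0.1/√0.1 ≈ 0.316, because dividing by √S rescales each parameter's gradient by its own typical magnitude.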