`cexrm`: you can check what it expands to with `alias`.

🐳 Docker networking, built on Linux networking

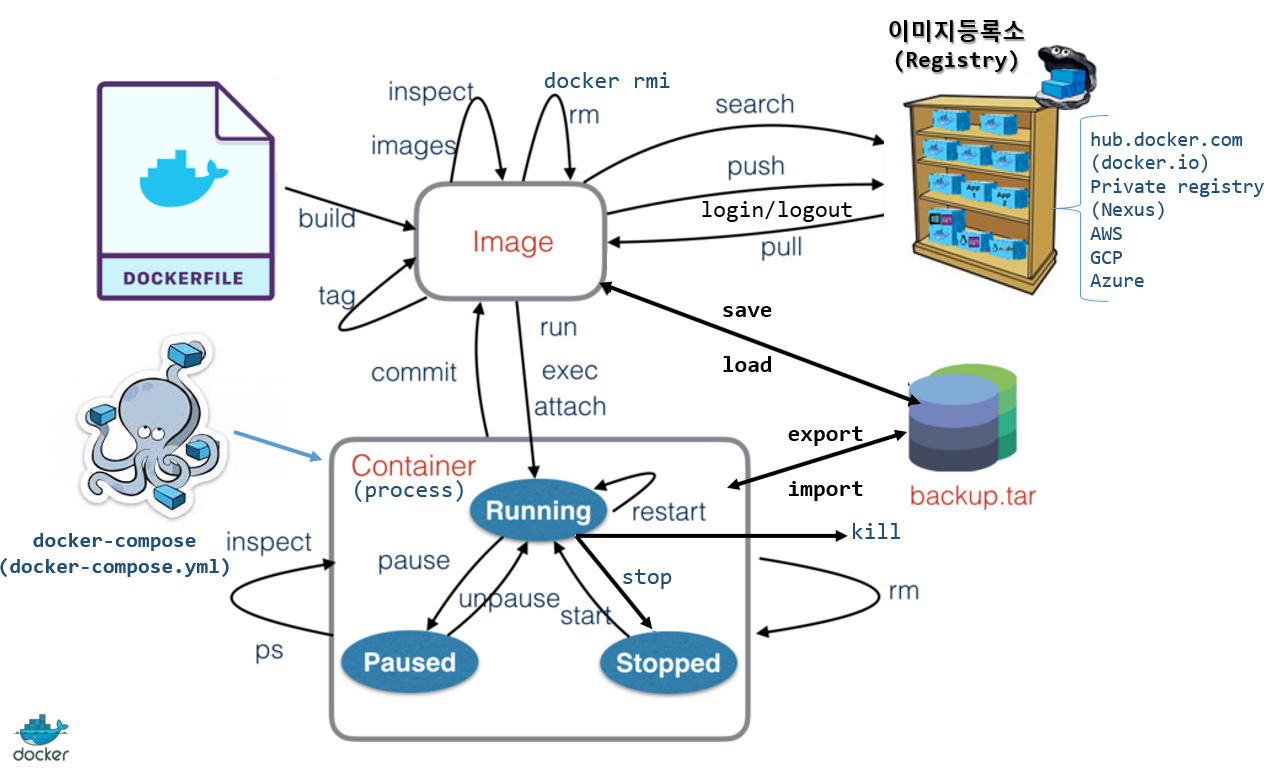

📕 Docker commands

📔 kill

linux kill -> kill -9 [PID]: force-kills a process (session kill)

docker kill -> force shutdown -> 9) SIGKILL

docker stop -> graceful shutdown -> 15) SIGTERM (followed by SIGKILL if the container hasn't stopped after a grace period, 10 seconds by default)

yji@hostos1:~$ kill -l

1) SIGHUP 2) SIGINT 3) SIGQUIT 4) SIGILL 5) SIGTRAP

6) SIGABRT 7) SIGBUS 8) SIGFPE 9) SIGKILL 10) SIGUSR1

11) SIGSEGV 12) SIGUSR2 13) SIGPIPE 14) SIGALRM 15) SIGTERM

16) SIGSTKFLT 17) SIGCHLD 18) SIGCONT 19) SIGSTOP 20) SIGTSTP

21) SIGTTIN 22) SIGTTOU 23) SIGURG 24) SIGXCPU 25) SIGXFSZ

26) SIGVTALRM 27) SIGPROF 28) SIGWINCH 29) SIGIO 30) SIGPWR

31) SIGSYS 34) SIGRTMIN 35) SIGRTMIN+1 36) SIGRTMIN+2 37) SIGRTMIN+3

38) SIGRTMIN+4 39) SIGRTMIN+5 40) SIGRTMIN+6 41) SIGRTMIN+7 42) SIGRTMIN+8

43) SIGRTMIN+9 44) SIGRTMIN+10 45) SIGRTMIN+11 46) SIGRTMIN+12 47) SIGRTMIN+13

48) SIGRTMIN+14 49) SIGRTMIN+15 50) SIGRTMAX-14 51) SIGRTMAX-13 52) SIGRTMAX-12

53) SIGRTMAX-11 54) SIGRTMAX-10 55) SIGRTMAX-9 56) SIGRTMAX-8 57) SIGRTMAX-7

58) SIGRTMAX-6 59) SIGRTMAX-5 60) SIGRTMAX-4 61) SIGRTMAX-3 62) SIGRTMAX-2

63) SIGRTMAX-1 64) SIGRTMAX

yji@hostos1:~$ docker kill mycent2

mycent2

yji@hostos1:~$ docker ps -a | grep mycent2

2f85be67d74d centos:7 "bash" 17 hours ago Exited (137) 12 seconds ago
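The `Exited (137)` status above follows the usual Unix convention: a process terminated by signal N reports exit status 128 + N. The minimal Python sketch below shows that arithmetic using the standard `signal` module. It assumes Linux signal numbers.

```python
import signal

def exit_status_for_signal(sig: signal.Signals) -> int:
    """Exit status a shell (and `docker ps`) reports for a process killed by `sig`."""
    return 128 + int(sig)

# docker kill sends SIGKILL (9): 128 + 9
print("docker kill:", exit_status_for_signal(signal.SIGKILL))
# docker stop sends SIGTERM (15) first; if the process dies from it: 128 + 15
print("docker stop:", exit_status_for_signal(signal.SIGTERM))
```

On Linux this prints 137 and 143. A main process that handles SIGTERM and exits cleanly usually shows `Exited (0)` after `docker stop` instead. One that ignores SIGTERM is killed by the follow-up SIGKILL and shows 137 again.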

---

yji@hostos1:~$ docker run -it --name myos8 ubuntu:14.04 bash

# If we have the PID, couldn't we kill it from Linux too?

yji@hostos1:~$ ps -ef | grep myos8 | grep -v grep

yji 32696 31543 0 09:21 pts/1 00:00:00 docker run -it --name myos8 ubuntu:14.04 bash

---

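# Killing that PID drops the session attached to the Ubuntu container, but

docker ps shows the container is still alive.

That PID belongs to the `docker run` client process, not to the container's own process. So the Linux "Killed" here really means "(session) killed".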

- Images are layered, so containers are layered too.

-> tar (bundling only) vs. tar.gz (bundling + compression)

- To move an image as a file, use a backup.tar. On a closed network without internet access, turn the image into a file and hand it to others.

- Should you back up with save or with export? -> save. Why? An image is static and a container is dynamic, and for migration or backup you want the static image.

Use save!

backup.tar

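A container backed up with `export` also comes back as an image (via `import`).

-> But you can't `docker run` it right away!

-> `export` saves only the container's flattened filesystem and drops the image metadata (`CMD`, `ENTRYPOINT`, `ENV`, exposed ports). You have to supply that again, e.g. with a Dockerfile, before the imported image is usable.

-> An image saved with `save` and restored with `load` can be started with `docker run` immediately.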

# Save the webserver container to webserver.tar

yji@hostos1:~$ docker run --name=webserver -d -p 9999:80 nginx:1.23.1

5022203ddcc6ac0a2c0890d49dae33b767b5d0b6ff44b965dfcfc9094cde771b

yji@hostos1:~$ docker export webserver > webserver.tar

# List the contents of the generated tar file in detail

yji@hostos1:~$ tar -tvf webserver.tar

yji@hostos1:~$ sudo scp webserver.tar yji@hostos2:/home/yji/backup/webserver.tar

[sudo] password for yji:

The authenticity of host 'hostos2 (192.168.56.102)' can't be established.

ECDSA key fingerprint is SHA256:SFPECj98u4CYVw/Y9OHblSpm9+0MhnRKlvD0+H+xcs0.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'hostos2,192.168.56.102' (ECDSA) to the list of known hosts.

yji@hostos2's password:

webserver.tar 100% 138MB 27.2MB/s 00:05

# On server 2, create the image myweb:3.0 from webserver.tar

yji@hostos2:~/backup$ cat webserver.tar | docker import - myweb:3.0

sha256:4f4e9a0a2fa21746d8d672ded1a1f281200915f08207cf97eea859c6f9c7b081

yji@hostos2:~/backup$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

myweb 3.0 4f4e9a0a2fa2 8 seconds ago 140MB

2214yj/myweb 1.0 17f9611c585e 5 days ago 23.6MB

yji@hostos2:~/backup$ docker run -d --name myweb3 -p 8002:80 myweb:3.0

docker: Error response from daemon: No command specified.

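Why this error? The imported image has no `CMD`, because export dropped it, so Docker doesn't know what to run. You have three options: append a command to `docker run`, restore the metadata while importing with `docker import --change 'CMD ...'`, or build on top of the image with a Dockerfile.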

Docker container management

save & load !

# docker save

# tar (bundling only)

yji@hostos1:~/LABs/save_lab$ docker image save phpserver:1.0 > phpserver1.tar

# gzip (compression)

yji@hostos1:~/LABs/save_lab$ docker image save phpserver:1.0 | gzip > phpserver1.tar.gz

# bzip2

yji@hostos1:~/LABs/save_lab$ docker image save phpserver:1.0 | bzip2 > phpserver1.tar.bz2

# Compare compression ratios

yji@hostos1:~/LABs/save_lab$ ls -lh

total 663M

-rw-rw-r-- 1 yji yji 400M 9월 14 09:45 phpserver1.tar

-rw-rw-r-- 1 yji yji 125M 9월 14 09:48 phpserver1.tar.bz2

-rw-rw-r-- 1 yji yji 139M 9월 14 09:46 phpserver1.tar.gz
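The sizes above (400M raw, 139M gzip, 125M bzip2) are specific to this image. The minimal Python sketch below runs the same comparison on any byte string using the standard `gzip` and `bz2` modules. The sample payload is a made-up stand-in for `docker image save` output, so its ratios will not match the numbers above.

```python
import bz2
import gzip

def compression_report(data: bytes) -> dict:
    """Return {format: (size_in_bytes, size_relative_to_raw_tar)} for one payload."""
    sizes = {
        "tar": len(data),
        "tar.gz": len(gzip.compress(data)),   # like: docker image save ... | gzip
        "tar.bz2": len(bz2.compress(data)),   # like: docker image save ... | bzip2
    }
    return {fmt: (size, size / sizes["tar"]) for fmt, size in sizes.items()}

# Hypothetical, highly repetitive payload standing in for an image archive
sample = b"layer data: /usr/lib/x86_64-linux-gnu/libc.so.6\n" * 20_000
for fmt, (size, ratio) in compression_report(sample).items():
    print(f"{fmt:8} {size:>9} bytes  {ratio:6.1%}")
```

For the real archive above, bzip2 ends at about 125/400 ≈ 31% of the raw size and gzip at about 139/400 ≈ 35%. bzip2 compresses a little better but is generally slower.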

# Send the file to server 2

yji@hostos1:~/LABs/save_lab$ scp phpserver1.tar.gz yji@hostos2:/home/yji/backup/phpserver1.tar.gz

# Server 2) docker load

# Feed the archive to docker image load with < (it accepts the gzip-compressed .tar.gz directly)

yji@hostos2:~/backup$ docker image load < phpserver1.tar.gz

87c8a1d8f54f: Loading layer 72.5MB/72.5MB

ddcd8d2fcf7e: Loading layer 3.584kB/3.584kB

e45a78df7536: Loading layer 231.4MB/231.4MB

02eef72b445f: Loading layer 5.12kB/5.12kB

bc0429138e0d: Loading layer 46.65MB/46.65MB

d666585087a1: Loading layer 9.728kB/9.728kB

0ff9183bd099: Loading layer 7.68kB/7.68kB

914a1eddd57a: Loading layer 13.62MB/13.62MB

e1cd0107ea85: Loading layer 4.096kB/4.096kB

ce60a0c97d4a: Loading layer 54.61MB/54.61MB

9a60d912a14f: Loading layer 12.29kB/12.29kB

6ec4d4ce53cc: Loading layer 4.608kB/4.608kB

cc45506c4447: Loading layer 3.584kB/3.584kB

5dc980197467: Loading layer 4.608kB/4.608kB

51d64a1b8e7d: Loading layer 3.584kB/3.584kB

Loaded image: phpserver:1.0

yji@hostos2:~/backup$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

myweb 3.0 4f4e9a0a2fa2 6 minutes ago 140MB

phpserver 1.0 1385adda691e 24 hours ago 410MB

2214yj/myweb 1.0 17f9611c585e 5 days ago 23.6MB

yji@hostos2:~/backup$ docker run -itd -p 8200:80 phpserver:1.0

df1c4cf078473bb4e36dd082a3659a3c15781e79b95ef92048d47e3fe18db19a

yji@hostos2:~/backup$ curl localhost:8200

<html>

<body>

<div style="font-size:25px">

Container Name : df1c4cf07847<p> Welcome to the Docker world~! </p>

</div>

</body>

</html>

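📕 Docker networking

Installing the Docker engine brings up a default bridge, docker0.

docker0 is basically a sample/default bridge and is rarely used as-is in practice. You can create custom bridges instead.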

docker network ls

yji@hostos1:~$ sudo apt install bridge-utils

# docker0: the bridge name

# Two interfaces (veth) are attached to docker0 = two containers are running.

# ifconfig also shows the veth interfaces.

yji@hostos1:~$ brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242ed0c4d4a no veth1daf760

veth2857015

# docker ps confirms there are two containers.

yji@hostos1:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

26509e9c2ba0 ubuntu:14.04 "bash" 2 minutes ago Up 2 minutes myos8

1592f89474bc google/cadvisor:latest "/usr/bin/cadvisor -…" 5 days ago Up 26 hours 0.0.0.0:9559->8080/tcp, :::9559->8080/tcp cadvisor

Let's look at how a container gets attached to the network when it starts.

# Start a container

yji@hostos1:~$ docker run -itd --name myos5 ubuntu:14.04

97f3a838333fe1a49fc9c8d21b503b57e8c57411d107be8570c2ce152429e60d

# One more veth has been created

# ifconfig shows the new one as well.

yji@hostos1:~$ brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242ed0c4d4a no veth1daf760

veth2627069

veth2857015

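# lo = loopback: you can ignore it; it's created automatically inside the container.

eth0: this is the one to look at.

inet 172.17.0.4/16: the container uses address .4 in the 172.17.0.0/16 range.

69, 70: link values. 69 is eth0's own interface index inside the container; @if70 is the index of its veth peer on the host.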

yji@hostos1:~$ docker exec -it myos5 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

69: eth0@if70: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:04 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.4/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever
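The `69: eth0@if70` header above packs two numbers: the interface's own index inside the container (69) and, after `@if`, the index of its peer in the host's namespace (70). The small Python sketch below pulls both out of an `ip addr` header line. Its regular expression is an assumption fitted only to the output format shown in these notes.

```python
import re

# Matches headers like "69: eth0@if70: <BROADCAST,...>" as printed by `ip addr` above
HEADER = re.compile(r"^(?P<index>\d+): (?P<name>[^@:\s]+)@if(?P<peer>\d+):")

def parse_link_header(line: str):
    """Return (ifindex, name, peer_ifindex) for a veth-style header, or None."""
    m = HEADER.match(line.strip())
    if m is None:
        return None  # e.g. "1: lo: ..." has no @ifN peer
    return int(m["index"]), m["name"], int(m["peer"])

print(parse_link_header("69: eth0@if70: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500"))
print(parse_link_header("1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536"))
```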

# Routing table: it lives in the container's own sandbox (network namespace).

yji@hostos1:~$ docker exec -it myos5 route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default 172.17.0.1 0.0.0.0 UG 0 0 0 eth0

172.17.0.0 * 255.255.0.0 U 0 0 0 eth0

# Check the container's IP address

yji@hostos1:~$ docker inspect myos5 | grep IPA

"SecondaryIPAddresses": null,

"IPAddress": "172.17.0.4",

"IPAMConfig": null,

"IPAddress": "172.17.0.4",

# Check the container's MAC address

yji@hostos1:~$ docker inspect myos5 | grep Mac

"MacAddress": "02:42:ac:11:00:04",

"MacAddress": "02:42:ac:11:00:04",

Containers and interfaces

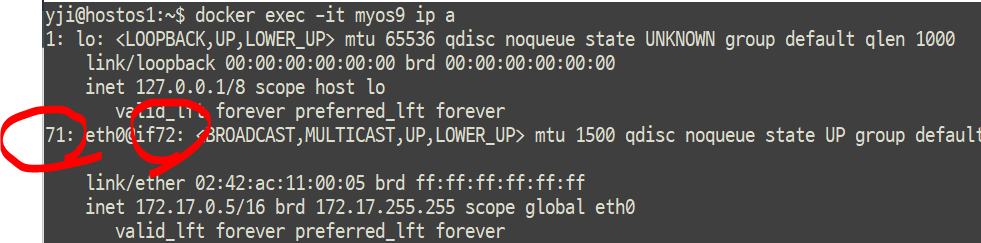

Problem: you can't tell which veth is connected to which container.

That's where the link value comes in!

docker exec -it myos5 ip addr

# Check

## The interfaces aren't even listed in the same order as the containers.

yji@hostos1:~$ brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.0242ed0c4d4a no veth1daf760

veth2627069

veth2857015

yji@hostos1:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

97f3a838333f ubuntu:14.04 "/bin/bash" 5 minutes ago Up 5 minutes myos5

26509e9c2ba0 ubuntu:14.04 "bash" 8 minutes ago Up 8 minutes myos8

1592f89474bc google/cadvisor:latest "/usr/bin/cadvisor -…" 5 days ago Up 26 hours 0.0.0.0:9559->8080/tcp, :::9559->8080/tcp cadvisor

- Link value

yji@hostos1:~$ docker exec -it myos9 ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

71: eth0@if72: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:11:00:05 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.5/16 brd 172.17.255.255 scope global eth0

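valid_lft forever preferred_lft forever

# In these examples the host-side veth index is one higher than the container's eth0 (71 -> 72), since both ends of the pair are usually created back to back. This isn't guaranteed, so match iflink against ifindex instead.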

yji@hostos1:~$ sudo cat /sys/class/net/vetha852b6d/ifindex

72

# The link value of eth0 (the ifindex of its host-side peer)

yji@hostos1:~$ docker exec -it myos9 cat /sys/class/net/eth0/iflink

72
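The rule behind this check: the container's `/sys/class/net/eth0/iflink` holds the ifindex of its peer. The host veth whose `/sys/class/net/<veth>/ifindex` has that value is the one wired to the container.

The Python sketch below does the lookup. `host_ifindexes` reads the host's sysfs, so run it on the host. The sample numbers are the ones recorded in these notes.

```python
import os

def host_ifindexes(sys_net: str = "/sys/class/net") -> dict:
    """Map each host veth interface name to its ifindex by reading sysfs."""
    result = {}
    for name in os.listdir(sys_net):
        if name.startswith("veth"):
            with open(os.path.join(sys_net, name, "ifindex")) as f:
                result[name] = int(f.read())
    return result

def veth_for_container(iflink: int, ifindexes: dict):
    """Return the host veth whose ifindex equals the container's eth0 iflink, or None."""
    for name, index in ifindexes.items():
        if index == iflink:
            return name
    return None

# ifindex values recorded on hostos1 in these notes
recorded = {"veth1daf760": 68, "veth2627069": 70, "veth2857015": 8,
            "vethc5099c2": 74, "vetha852b6d": 72}
print(veth_for_container(72, recorded))  # myos9: eth0 iflink 72
print(veth_for_container(70, recorded))  # myos5: eth0@if70
```

The iflink value itself comes from `docker exec <container> cat /sys/class/net/eth0/iflink`, as above.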

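📕 Finding cadvisor's interface

Not found yet!! A likely reason, assuming cadvisor was started with the host's /sys bind-mounted as its usual run command does: /sys/class/net inside it shows the host's interfaces rather than the container's own. That would explain the host-style listing (enp0s3, docker0, br-...) below.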

yji@hostos1:~$ docker exec -it cadvisor cat /sys/class/net/enp0s3/iflink

2

yji@hostos1:~$ docker exec -it cadvisor cat /sys/class/net/enp0s8/iflink

3

yji@hostos1:~$ sudo cat /sys/class/net/veth1daf760/ifindex

68

yji@hostos1:~$ sudo cat /sys/class/net/veth2627069/ifindex

70

yji@hostos1:~$ sudo cat /sys/class/net/veth2857015/ifindex

8

yji@hostos1:~$ sudo cat /sys/class/net/vethc5099c2/ifindex

74

yji@hostos1:~$ sudo cat /sys/class/net/vetha852b6d/ifindex

72

yji@hostos1:~/LABs/docker-phpserver$ docker exec -it cadvisor ls /sys/class/net

br-43bba2c1decb br-fad17e6f022b lo vethb82fbec

br-b38113e7f7f2 docker0 veth1be7373 vethd138c25

br-d79507ea5285 enp0s3 veth4c8c3e8

br-d846ee1f1175 enp0s8 vethaef7e5d

📔 --add-host: adds an entry to the container's /etc/hosts

yji@hostos1:~$ docker run -it --name=myos11 --add-host=hostos1:192.168.56.101 centos:7

[root@0eb35edbb658 /]# cat /etc/hosts

127.0.0.1 localhost

::1 localhost ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

192.168.56.101 hostos1

📔 --dns: changes the container's DNS server (/etc/resolv.conf)

yji@hostos1:~$ docker run -it --name=myos12 --dns=8.8.8.8 centos:7 cat /etc/resolv.conf

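nameserver 8.8.8.8

📔 -P option

- -p, --publish: connects a host port to a container port (port forwarding).

  `<host port>:<container port>`, e.g. -p 80:80

- -P (--publish-all): finds the ports EXPOSEd in the image and publishes each one on a host port that Docker picks automatically.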

# The image exposes the container's port 80

yji@hostos1:~$ docker run -d --name=webserver2 -P nginx:1.23.1-alpine

f000a8762ec4a12721d4afdd9bc90256f8f60690c32de329bdad71d5e833d0c7

yji@hostos1:~$ docker port webserver2

80/tcp -> 0.0.0.0:49153

80/tcp -> :::49153
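With `-P`, Docker chooses the host ports, so scripts usually read them back from `docker port`. The minimal Python sketch below turns lines like the ones above into a mapping. Its parsing rules are assumptions based only on this output, covering the IPv4 `0.0.0.0:PORT` and IPv6 `:::PORT` forms.

```python
def parse_docker_port(output: str) -> dict:
    """Map "80/tcp" -> sorted list of published host ports, from `docker port` text."""
    mapping = {}
    for line in output.splitlines():
        if "->" not in line:
            continue
        container_port, host_addr = (part.strip() for part in line.split("->", 1))
        host_port = int(host_addr.rsplit(":", 1)[1])  # works for 0.0.0.0:49153 and :::49153
        ports = mapping.setdefault(container_port, [])
        if host_port not in ports:  # IPv4 and IPv6 lines repeat the same port
            ports.append(host_port)
    return {k: sorted(v) for k, v in mapping.items()}

webserver2 = """80/tcp -> 0.0.0.0:49153
80/tcp -> :::49153"""
print(parse_docker_port(webserver2))
```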

# Also expose port 10000 (e.g. an admin port).

# With -P, port 10000 gets published as well (opened in the firewall rules).

yji@hostos1:~$ docker run -d --name=webserver3 --expose=10000 -P nginx:1.23.1-alpine

8162813fe0ace2e0b569c8c788abbe136cc01fce16dc3d5b569e1213992e57f9

yji@hostos1:~$ docker port webserver3

10000/tcp -> 0.0.0.0:49154

10000/tcp -> :::49154

80/tcp -> 0.0.0.0:49155

80/tcp -> :::49155

📔 Checking packet traffic - iptraf-ng

Why use it?

1. To verify round robin

2. To check container interfaces

yji@hostos1:~$ sudo apt -y install iptraf-ng

- pps: incoming packets per second

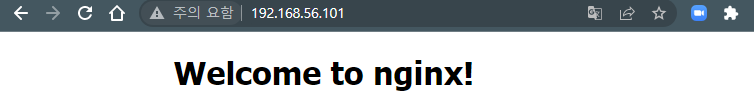

📔 --net, --network=host

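Traffic reaches the container without a port-forwarding step.

The container is effectively attached to the host: it shares the host's network stack.

Since no port forwarding is involved, packets are delivered a bit faster.

However, too many --net=host containers drive up the host's traffic, and they all compete for the host's ports, so this isn't recommended.

# No -p option was given, so no ports are shown!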

yji@hostos1:~$ docker run -d --name=hostnet --network=host nginx:1.23.1-alpine

0cebbf019459d27cecf92b0f108b06f76edf013391a0d4d5f12552b3117ef829

yji@hostos1:~$ docker ps | grep host

0cebbf019459 nginx:1.23.1-alpine "/docker-entrypoint.…" 7 seconds ago Up 6 seconds hostnet

# Yet http://192.168.56.101 (port 80 omitted) answers: port 80 is open on the host.

That's because of --net=host.

yji@hostos1:~$ sudo netstat -nlp | grep 80

tcp 0 0 0.0.0.0:9559 0.0.0.0:* LISTEN 2880/docker-proxy

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 109930/nginx: maste

tcp6 0 0 :::80 :::* LISTEN 109930/nginx: maste

unix 2 [ ACC ] STREAM LISTENING 1180322 105716/containerd-s /run/containerd/s/23c7591d44412f4dd7a951b6efd2bcf108df4f0470b8008d7b59844a4ba54dd6

unix 2 [ ACC ] STREAM LISTENING 1199814 108450/containerd-s /run/containerd/s/ef8079d3307713e34b98738f5c67ad48703fd09198b8f89c5aba57fe31527e4e

unix 2 [ ACC ] STREAM LISTENING 1193316 106877/containerd-s /run/containerd/s/2a5ad8455f935809c9b263088c5b4573774dab18dbaeb57dfe1fc7bd7a25f853

yji@hostos1:~$ ps -ef | grep 109930

root 109930 109910 0 11:42 ? 00:00:00 nginx: master process nginx -g daemon off;

systemd+ 109972 109930 0 11:42 ? 00:00:00 nginx: worker process

yji 110092 31483 0 11:45 pts/0 00:00:00 grep --color=auto 109930

# The hostnet container has no IP address of its own; it sits directly on the host and uses the host's.

yji@hostos1:~$ docker inspect hostnet | grep IPA

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAMConfig": null,

"IPAddress": "",

📔 Checking ports on Linux

sudo netstat -nlp | grep [port number]

yji@hostos1:~$ sudo netstat -nlp | grep 80

[sudo] password for yji:

tcp 0 0 0.0.0.0:9559 0.0.0.0:* LISTEN 2880/docker-proxy

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 109930/nginx: maste

tcp6 0 0 :::80 :::* LISTEN 109930/nginx: maste

unix 2 [ ACC ] STREAM LISTENING 1180322 105716/containerd-s /run/containerd/s/23c7591d44412f4dd7a951b6efd2bcf108df4f0470b8008d7b59844a4ba54dd6

unix 2 [ ACC ] STREAM LISTENING 1199814 108450/containerd-s /run/containerd/s/ef8079d3307713e34b98738f5c67ad48703fd09198b8f89c5aba57fe31527e4e

unix 2 [ ACC ] STREAM LISTENING 1193316 106877/containerd-s /run/containerd/s/2a5ad8455f935809c9b263088c5b4573774dab18dbaeb57dfe1fc7bd7a25f853

📔 Creating a network

Usually you don't use docker0 as-is!

1. Create a network

2. Connect containers to it

docker network create -d (--driver) bridge web-net: `-d bridge` can be omitted, since bridge is the default driver.

yji@hostos1:~$ docker network create web-net

43bba2c1decb4406915fd7dda15c9db1332d73767e33c5b7f657133c8c46f8c2

Check with: route / ifconfig / docker network ls / brctl show

yji@hostos1:~$ docker run --net=web-net -it --name=net-check1 ubuntu:14.04 bash

root@700f743be3f8:/# ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:ac:12:00:02

inet addr:172.18.0.2 Bcast:172.18.255.255 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:33 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:4532 (4.5 KB) TX bytes:0 (0.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

root@700f743be3f8:/# route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default hostos1 0.0.0.0 UG 0 0 0 eth0

172.18.0.0 * 255.255.0.0 U 0 0 0 eth0

# In another terminal

yji@hostos1:~$ brctl show

bridge name bridge id STP enabled interfaces

br-43bba2c1decb 8000.0242f1d515ef no veth36b80e7

veth3e5a5e8

# Inspect the newly created bridge

# "Subnet": "172.18.0.0/16" -> 65,536 addresses

yji@hostos1:~$ docker network inspect web-net | grep Subnet

"Subnet": "172.18.0.0/16",

yji@hostos1:~$ docker network inspect web-net

[

{

"Name": "web-net",

"Id": "43bba2c1decb4406915fd7dda15c9db1332d73767e33c5b7f657133c8c46f8c2",

"Created": "2022-09-14T12:07:45.195282262+09:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"13736004b9cf03fea09ae83b3dcf3ea0ec29400adf446e76d8fbab307a50362a": {

"Name": "net-check2",

"EndpointID": "965ab971dd0dfe224e834f956fef242bbdb8701528e137613279683528f76c39",

"MacAddress": "02:42:ac:12:00:03",

"IPv4Address": "172.18.0.3/16",

"IPv6Address": ""

},

"700f743be3f89c12ce62b9e25fafa46f44adf77909f93f592aa8d71c491a035f": {

"Name": "net-check1",

"EndpointID": "3ef2b12eb48a3cb61b40b5b34aa318780a9c662abf2b67a900474b1044679c69",

"MacAddress": "02:42:ac:12:00:02",

"IPv4Address": "172.18.0.2/16",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]

Checking after creating the bridge

# route

yji@hostos1:~$ route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.18.0.0 0.0.0.0 255.255.0.0 U 0 0 0 br-43bba2c1decb

# ifconfig

yji@hostos1:~$ ifconfig

br-43bba2c1decb: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.18.0.1 netmask 255.255.0.0 broadcast 172.18.255.255

inet6 fe80::42:f1ff:fed5:15ef prefixlen 64 scopeid 0x20<link>

ether 02:42:f1:d5:15:ef txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 34 bytes 4623 (4.6 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

inet6 fe80::42:edff:fe0c:4d4a prefixlen 64 scopeid 0x20<link>

ether 02:42:ed:0c:4d:4a txqueuelen 0 (Ethernet)

RX packets 95743 bytes 2721272612 (2.7 GB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 941356 bytes 53203655 (53.2 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

# docker network ls

yji@hostos1:~$ docker network ls

NETWORK ID NAME DRIVER SCOPE

85b12075b75c bridge bridge local

cd1e0d6188a3 host host local

6e2bc71954be none null local

43bba2c1decb web-net bridge local

# brctl show

yji@hostos1:~$ brctl show

bridge name bridge id STP enabled interfaces

br-43bba2c1decb 8000.0242f1d515ef no veth36b80e7

veth3e5a5e8

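# About the docker network inspect web-net output above

- Clouds and containers specify IP ranges with CIDR notation: you assign a private network.

- Networks used in the cloud rely on private address space to avoid collisions, following ⭐RFC 1918⭐ (an IETF standard). It's only a recommendation ~ ⭐you may step outside it⭐. Company networks use private IPs, so they don't collide with the internet.

1) 10.0.0.0 ~ 10.255.255.255 -> 10.0.0.0/8: about 16.7 million addresses (2^24)

2) 172.16.0.0 ~ 172.31.255.255 -> 172.16.0.0/12 (e.g. AWS): about 1 million addresses (2^20)

3) 192.168.0.0 ~ 192.168.255.255 -> 192.168.0.0/16: 65,536 addresses (2^16)

- The smaller the prefix number, the bigger the block; /32 = a single IP.

- In each block, the first address (network address) and the last address (broadcast address) can't be assigned. The gateway (by default the .1 address) takes one more.

- Which is bigger, the subnet or the ip-range? The subnet. Narrowing --ip-range only shrinks the pool containers get addresses from. Setting subnet = ip-range means using the whole block.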

- About 200 IPs are enough for us, so /24 is fine: 32 - 24 = 8 host bits -> 2^8 = 256 addresses, 254 usable.
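The block sizes and the /24 choice are plain powers of two: a /p prefix leaves 32 - p host bits, i.e. 2^(32-p) addresses. The Python sketch below uses the standard `ipaddress` module to check the three RFC 1918 blocks and to find the smallest prefix with room for 200 hosts. `smallest_prefix_for` is a hypothetical helper, and it assumes the network and broadcast addresses are not usable.

```python
import ipaddress

RFC1918 = ["10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16"]

for block in RFC1918:
    net = ipaddress.ip_network(block)
    # first and last address of the block, total count, and whether Python treats it as private
    print(block, net[0], net[-1], net.num_addresses, net.is_private)

def smallest_prefix_for(hosts: int) -> int:
    """Longest prefix (smallest block) whose usable hosts, 2**(32-p) - 2, cover `hosts`."""
    for prefix in range(30, -1, -1):
        if 2 ** (32 - prefix) - 2 >= hosts:
            return prefix
    raise ValueError("too many hosts for IPv4")

print(smallest_prefix_for(200))  # /25 gives 126 usable, /24 gives 254 -> 24
```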

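📔 Creating a custom Docker bridge

Create a bridge, and the first new container gets 172.30.1.2. Why? .0 is the network address and .1 is the gateway, so assignment starts at .2.

Only CIDR notation is accepted.

--subnet: 172.30.1.0/24, i.e. netmask 255.255.255.0

--ip-range: must lie within the subnet; it narrows the range container IPs are assigned from (e.g. 172.30.1.100/26)

Of the 256 IPs in the /24, 254 are usable (network and broadcast addresses excluded).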
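The constraints on `--subnet`, `--ip-range` and `--gateway` can be checked with the same `ipaddress` module. The sketch below validates a configuration like the one that follows and predicts the first address a container would get.

The prediction assumes Docker hands out the lowest free address in the range, skipping the network address and the gateway. That matches what these notes observe (172.30.1.2, 172.18.0.2). `check_bridge_config` is a hypothetical helper, not part of Docker.

```python
import ipaddress

def check_bridge_config(subnet: str, ip_range: str, gateway: str):
    """Validate a bridge config and return (usable_host_count, first_container_ip)."""
    net = ipaddress.ip_network(subnet)                   # must be a network address, e.g. .0/24
    pool = ipaddress.ip_network(ip_range, strict=False)  # 172.30.1.100/26 -> 172.30.1.64/26
    gw = ipaddress.ip_address(gateway)
    if not pool.subnet_of(net):
        raise ValueError(f"ip-range {pool} is not inside subnet {net}")
    if gw not in net:
        raise ValueError(f"gateway {gw} is not inside subnet {net}")
    usable = net.num_addresses - 2                       # minus network and broadcast
    taken = {net.network_address, net.broadcast_address, gw}
    first = next(ip for ip in pool if ip not in taken)   # assumed "lowest free address" rule
    return usable, first

print(check_bridge_config("172.30.1.0/24", "172.30.1.0/24", "172.30.1.1"))
print(ipaddress.ip_network("172.30.1.100/26", strict=False))
```

The second line shows that a range written as 172.30.1.100/26 actually denotes the aligned block 172.30.1.64/26.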

yji@hostos1:~$ docker network create \

> --driver bridge \

> --subnet 172.30.1.0/24 \

> --ip-range 172.30.1.0/24 \

> --gateway 172.30.1.1 \

> vswitch-net

yji@hostos1:~$ docker network create --driver bridge --subnet 172.30.1.0/24 --ip-range 172.30.1.0/24 --gateway 172.30.1.1 vswithch-net

d79507ea5285b7bb6c9452cee797038cda814c496dffdf20ade25d7f1508df4f

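# Check the created bridge with inspect. The command above actually created it as vswithch-net (a typo), which is why inspecting vswitch-net fails.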

yji@hostos1:~$ docker network inspect vswitch-net

[]

Error: No such network: vswitch-net

yji@hostos1:~$ docker network inspect vswithch-net

[

{

"Name": "vswithch-net",

"Id": "d79507ea5285b7bb6c9452cee797038cda814c496dffdf20ade25d7f1508df4f",

"Created": "2022-09-14T12:26:55.599518307+09:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.30.1.0/24",

"IPRange": "172.30.1.0/24",

"Gateway": "172.30.1.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {},

"Options": {},

"Labels": {}

}

]

yji@hostos1:~$ docker run --net=vswithch-net -itd --name=net1 ubuntu:14.04

ea46b4f629d65ecf8d55cbba2696adb3ae16f75ae6655045455ce3e51ac68012

# --ip option: assign a specific IP (only works on user-defined networks)

yji@hostos1:~$ docker run --net=vswithch-net -itd --name=net2 --ip=172.30.1.100 ubuntu:14.04

ffaa099dd4a0e32e097e289ede4c38f2eea31d39f6a48d4c735f581e9604df3d

yji@hostos1:~$ docker network inspect vswithch-net

[

{

"Name": "vswithch-net",

"Id": "d79507ea5285b7bb6c9452cee797038cda814c496dffdf20ade25d7f1508df4f",

"Created": "2022-09-14T12:26:55.599518307+09:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "172.30.1.0/24",

"IPRange": "172.30.1.0/24",

"Gateway": "172.30.1.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"ea46b4f629d65ecf8d55cbba2696adb3ae16f75ae6655045455ce3e51ac68012": {

"Name": "net1",

"EndpointID": "333caf0cf5e97feda4485aa7e36bf5b09bae8044e79512dbd971fd00ec85e8b8",

"MacAddress": "02:42:ac:1e:01:02",

"IPv4Address": "172.30.1.2/24",

"IPv6Address": ""

},

"ffaa099dd4a0e32e097e289ede4c38f2eea31d39f6a48d4c735f581e9604df3d": {

"Name": "net2",

"EndpointID": "611529339ee320994f1d24967adc47ec14a93ede3186e9e65e391f6d0c448206",

"MacAddress": "02:42:ac:1e:01:64",

"IPv4Address": "172.30.1.100/24",

"IPv6Address": ""

}

},

"Options": {},

"Labels": {}

}

]
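In this output, and in the earlier myos5 and web-net examples, each MAC address is `02:42` followed by the container's IPv4 address in hex: 172.30.1.100 -> `ac:1e:01:64`. That is what the Docker version used here does on bridge networks. Treat it as an observation rather than a guarantee: a user-supplied `--mac-address` or a different Docker version may behave differently. The Python sketch below reproduces the pattern.

```python
import ipaddress

def docker_style_mac(ip: str) -> str:
    """02:42 prefix + the four IPv4 octets in hex, as observed in these notes."""
    octets = ipaddress.IPv4Address(ip).packed  # 4 bytes, e.g. b'\xac\x1e\x01\x64'
    return ":".join(f"{b:02x}" for b in b"\x02\x42" + octets)

for ip in ["172.17.0.4", "172.18.0.3", "172.30.1.2", "172.30.1.100"]:
    print(ip, docker_style_mac(ip))
```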

yji@hostos1:~$ docker inspect net1 | grep IPA

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAMConfig": null,

"IPAddress": "172.30.1.2",

yji@hostos1:~$ docker inspect net1 | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.30.1.2",

yji@hostos1:~$ docker inspect net2 | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.30.1.100",

yji@hostos1:~$ docker network ls

NETWORK ID NAME DRIVER SCOPE

d79507ea5285 vswithch-net bridge local

yji@hostos1:~$ brctl show

bridge name bridge id STP enabled interfaces

br-43bba2c1decb 8000.0242f1d515ef no veth36b80e7

veth3e5a5e8

br-d79507ea5285 8000.024266026f38 no veth38f4718

veth3c55ca6

yji@hostos1:~$ docker exec net1 ip a

...

93: eth0@if94: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:1e:01:02 brd ff:ff:ff:ff:ff:ff

inet 172.30.1.2/24 brd 172.30.1.255 scope global eth0

valid_lft forever preferred_lft forever

yji@hostos1:~$ docker exec net2 ip a

...

95: eth0@if96: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:ac:1e:01:64 brd ff:ff:ff:ff:ff:ff

inet 172.30.1.100/24 brd 172.30.1.255 scope global eth0

valid_lft forever preferred_lft forever

📔 Pinging between containers ~ an extra feature of DNS

- DNS

1) Resolves domain names to IPs, or the reverse

2)

yji@hostos1:~$ docker network create app-service

b38113e7f7f2db8f33ce7f59084c3b3e9b48010b30e3e798cb483b9f48afde9b

yji@hostos1:~$ route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default _gateway 0.0.0.0 UG 100 0 0 enp0s3

default _gateway 0.0.0.0 UG 20101 0 0 enp0s8

10.0.2.0 0.0.0.0 255.255.255.0 U 100 0 0 enp0s3

link-local 0.0.0.0 255.255.0.0 U 1000 0 0 enp0s8

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.18.0.0 0.0.0.0 255.255.0.0 U 0 0 0 br-43bba2c1decb

172.19.0.0 0.0.0.0 255.255.0.0 U 0 0 0 br-b38113e7f7f2

172.30.1.0 0.0.0.0 255.255.255.0 U 0 0 0 br-d79507ea5285

192.168.56.0 0.0.0.0 255.255.255.0 U 101 0 0 enp0s8

yji@hostos1:~$ docker run -it --name=appsrv1 --net=app-service ubuntu:14.04 bash

root@f2081dbb82de:/#

## In another terminal

yji@hostos1:~$ docker run -it --name=appsrv2 --net=app-service ubuntu:14.04 bash

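# ⭐⭐ From inside a container you can ping another container by name, as long as both are on the same user-defined network. The reply even tells you which network it's on and which IP it has. => Why? DNS!

Docker has a built-in DNS server (127.0.0.11 inside containers on user-defined networks).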

⭐⭐

root@f2081dbb82de:/# ping -c 2 appsrv2

PING appsrv2 (172.19.0.3) 56(84) bytes of data.

64 bytes from appsrv2.app-service (172.19.0.3): icmp_seq=1 ttl=64 time=0.135 ms

64 bytes from appsrv2.app-service (172.19.0.3): icmp_seq=2 ttl=64 time=0.105 ms

📔 Container load balancer

- Container load balancer = plays the same role as a switch appliance -> proxy -> HAProxy, Nginx, Apache LB -> RR (round robin)

1) Docker container self LB: implemented with the built-in DNS server (service)

- how?

- 1) Create a user-defined bridge network (like the --ip-range exercise above)

- 2) --net-alias: defines the target group, i.e. the group of servers (or containers) that receive the workload (traffic)

- This enables the built-in DNS feature:

/etc/resolv.conf: nameserver 127.0.0.11: Docker DNS = service discovery

Docker DNS translates container names (and aliases) into IPs.

2) Turn an nginx container into a proxy and use it as the LB

--- c1

outside ---- [nginx LB] --- c2

--- c3

📔 LB lab 1) Docker container self LB

⭐ Possible thanks to DNS. ⭐

1. Create three containers

2. Group them into a target group with the --net-alias option

3. Check the registered service with dig (from the dnsutils package)

Watch the instructor's demo first.

- Create a user-defined Docker network, name: netlb

- Start three containers:

docker run -itd --name=lb-test1 --net=netlb --net-alias=tg-net ubuntu:14.04

- Check each IP with docker inspect lb-test1 | grep IPA.

- The three containers grouped under tg-net are served by Docker DNS on their own.

- Create a container named frontend on the same netlb network, but not in the target group.

- Attach to the frontend container and run ping -c 2 tg-net. DNS knows the three containers in the target group on that network, so the pings get through. Repeat the ping and different containers answer, which shows the LB at work.

- apt -y install dnsutils: the dnsutils package provides the dig command.

dig tg-net

docker network create \

> --driver bridge \

> --subnet 172.200.1.0/24 \

> --ip-range 172.200.1.0/24 \

> --gateway 172.200.1.1 \

> netlb

yji@hostos1:~$ route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

172.200.1.0 0.0.0.0 255.255.255.0 U 0 0 0 br-fad17e6f022b

yji@hostos1:~$ docker run -itd --name lb-test1 --net=netlb --net-alias=tg-net ubuntu:14.04

9bfb84e020bf2bda4653db46d3f8c82b2e5b9fecb628251fe2aeb6881f6cb77b

yji@hostos1:~$ docker run -itd --name lb-test2 --net=netlb --net-alias=tg-net ubuntu:14.04

3b0e72f1ed1209a16e6a9c377965a55e60ed5f3fd416dec968738c628f85b488

yji@hostos1:~$ docker run -itd --name lb-test3 --net=netlb --net-alias=tg-net ubuntu:14.04

560421df1cb652e759d5e9e2645b4c43c4a3ff9b9d071a0f3cbbb46e4d104270

yji@hostos1:~$ docker inspect lb-test1 | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.200.1.2",

yji@hostos1:~$ docker inspect lb-test2 | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.200.1.3",

yji@hostos1:~$ docker inspect lb-test3 | grep IPAddress

"SecondaryIPAddresses": null,

"IPAddress": "",

"IPAddress": "172.200.1.4",

# After creating the containers, ping between containers on the same network

## The responding container looks random rather than strict round robin

yji@hostos1:~$ docker run -it --name=frontend --net=netlb ubuntu:14.04 bash

root@1ee5ea21622f:/# ping -c 2 tg-net

PING tg-net (172.200.1.3⭐) 56(84) bytes of data.

64 bytes from lb-test2.netlb (172.200.1.3): icmp_seq=1 ttl=64 time=0.197 ms

64 bytes from lb-test2.netlb (172.200.1.3): icmp_seq=2 ttl=64 time=0.115 ms

--- tg-net ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1003ms

rtt min/avg/max/mdev = 0.115/0.156/0.197/0.041 ms

root@1ee5ea21622f:/# ping -c 2 tg-net

PING tg-net (172.200.1.2⭐) 56(84) bytes of data.

64 bytes from lb-test1.netlb (172.200.1.2): icmp_seq=1 ttl=64 time=4.65 ms

64 bytes from lb-test1.netlb (172.200.1.2): icmp_seq=2 ttl=64 time=0.108 ms

--- tg-net ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.108/2.381/4.655/2.274 ms

root@1ee5ea21622f:/# ping -c 2 tg-net

PING tg-net (172.200.1.4⭐) 56(84) bytes of data.

64 bytes from lb-test3.netlb (172.200.1.4): icmp_seq=1 ttl=64 time=0.254 ms

64 bytes from lb-test3.netlb (172.200.1.4): icmp_seq=2 ttl=64 time=0.105 ms

--- tg-net ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1002ms

rtt min/avg/max/mdev = 0.105/0.179/0.254/0.075 ms

# Look at the DNS records with dig

apt -y install dnsutils

root@1ee5ea21622f:/# dig tg-net

; <<>> DiG 9.9.5-3-Ubuntu <<>> tg-net

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 45741

;; flags: qr rd ra; QUERY: 1, ANSWER: 3, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;tg-net. IN A

;; ANSWER SECTION:

tg-net. 600 IN A 172.200.1.2

tg-net. 600 IN A 172.200.1.4

tg-net. 600 IN A 172.200.1.3

;; Query time: 6 msec

;; SERVER: 127.0.0.11#53(127.0.0.11)

;; WHEN: Wed Sep 14 05:45:16 UTC 2022

;; MSG SIZE rcvd: 90

# Add a container from terminal 2

docker run -itd --name lb-test4 --net=netlb --net-alias=tg-net ubuntu:14.04

root@1ee5ea21622f:/# dig tg-net

# ⭐⭐ The added container is registered in DNS automatically ⭐⭐

; <<>> DiG 9.9.5-3-Ubuntu <<>> tg-net

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 733

;; flags: qr rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;tg-net. IN A

;; ANSWER SECTION:

tg-net. 600 IN A 172.200.1.3

tg-net. 600 IN A 172.200.1.2

tg-net. 600 IN A 172.200.1.6

tg-net. 600 IN A 172.200.1.4

;; Query time: 6 msec

;; SERVER: 127.0.0.11#53(127.0.0.11)

;; WHEN: Wed Sep 14 05:46:36 UTC 2022

;; MSG SIZE rcvd: 112

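The built-in DNS server takes a request for tg-net (QUESTION SECTION)

and distributes it randomly over the IPs registered under tg-net (ANSWER SECTION)!

Being on the same Docker network isn't enough: a container has to join the same target group (alias) for DNS to manage it.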
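Below is a rough Python model of what the lab shows. The embedded DNS returns every A record registered under the alias, in varying order (compare the two `dig` answers above), and a simple client like `ping` uses the first one. The shuffling model is an assumption inferred from the observed output, not Docker's documented algorithm. The IPs are the ones from the lab.

```python
import random
from collections import Counter

# A records for the alias tg-net, as returned by dig in the lab above
TG_NET = ["172.200.1.2", "172.200.1.3", "172.200.1.4"]

def resolve_shuffled(records, rng):
    """Model of the embedded DNS (assumption): all A records, in shuffled order."""
    answer = list(records)
    rng.shuffle(answer)
    return answer

def simulate_clients(records, lookups, seed=0):
    """Each lookup is a client (e.g. ping) that connects to the first address returned."""
    rng = random.Random(seed)
    return Counter(resolve_shuffled(records, rng)[0] for _ in range(lookups))

print(simulate_clients(TG_NET, 3000))
```

Over many lookups each container receives roughly a third of the clients. Any single lookup, though, can land anywhere, which is why the lab looks random rather than strictly round robin.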

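📔 LB lab 2) nginx LB

- Lab overview

We start from the Ubuntu host (hostos1).

Install nginx directly on this Ubuntu machine (not an nginx container!).

Then turn this nginx into a proxy.

After that, do the same with an nginx container acting as the proxy.

- Install nginx

sudo apt -y install nginx

- Verify the nginx installation

But if another container is already holding port 80, the two conflict and nginx won't reach the running state ~

So clean up those containers first.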

yji@hostos1:~$ sudo netstat -nlp | grep 80

tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 123072/nginx: maste

tcp6 0 0 :::80 :::* LISTEN 123072/nginx: maste

yji@hostos1:~$ sudo nginx -v

nginx version: nginx/1.18.0 (Ubuntu)

yji@hostos1:~$ sudo systemctl status nginx.service

● nginx.service - A high performance web server and a reverse proxy server

Loaded: loaded (/lib/systemd/system/nginx.service; enabled; vendor preset: enabled)

⭐ Active: active (running) ⭐

- Start three containers

5001: an arbitrary port

yji@hostos1:~$ docker run -itd -e SERVER_PORT=5001 -p 5001:5001 -h alb-node01 \

> -u root --name=alb-node01 dbgurum/nginxlb:1.0

54b991d4957d18466dc85ee70ce5f076c092fbefa5e16f37b2f0a9c476e4128e

yji@hostos1:~$ docker run -itd -e SERVER_PORT=5002 -p 5002:5002 -h alb-node02 -u root --name=alb-node02 dbgurum/nginxlb:1.0

ba26b6deedc88eb52fa2b6cfced4f5c25ca347c4e0106702c23b587dcf1129e8

yji@hostos1:~$ docker run -itd -e SERVER_PORT=5003 -p 5003:5003 -h alb-node03 -u root --name=alb-node03 dbgurum/nginxlb:1.0

f5799db4a1cc0440fe16c781c4fc132bcb227fd7abcab92cb163e3aff274b67a

- Check

yji@hostos1:~$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f5799db4a1cc dbgurum/nginxlb:1.0 "/cnb/lifecycle/laun…" 27 seconds ago Up 26 seconds 0.0.0.0:5003->5003/tcp, :::5003->5003/tcp alb-node03

ba26b6deedc8 dbgurum/nginxlb:1.0 "/cnb/lifecycle/laun…" 43 seconds ago Up 42 seconds 0.0.0.0:5002->5002/tcp, :::5002->5002/tcp alb-node02

54b991d4957d dbgurum/nginxlb:1.0 "/cnb/lifecycle/laun…" About a minute ago Up About a minute 0.0.0.0:5001->5001/tcp, :::5001->5001/tcp alb-node01

1592f89474bc google/cadvisor:latest "/usr/bin/cadvisor -…" 6 days ago Up 7 minutes 0.0.0.0:9559->8080/tcp, :::9559->8080/tcp cadvisor

# Check the ports too ~

yji@hostos1:~$ sudo netstat -nlp | grep 500*

tcp 0 0 0.0.0.0:5001 0.0.0.0:* LISTEN 123734/docker-proxy

tcp 0 0 0.0.0.0:5002 0.0.0.0:* LISTEN 123893/docker-proxy

tcp 0 0 0.0.0.0:5003 0.0.0.0:* LISTEN 124053/docker-proxy

tcp6 0 0 :::5001 :::* LISTEN 123739/docker-proxy

tcp6 0 0 :::5002 :::* LISTEN 123898/docker-proxy

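tcp6 0 0 :::5003 :::* LISTEN 124058/docker-proxy

- This one is the nginx installed on Ubuntu (port 80, so it's omitted from the URL)

This one is a container

- Right now nginx is acting as a web server

Let's edit nginx.conf:

- location /: requests arriving on port 80 for / are handled here.

- proxy_pass: who does it pass them to? -> http://backend-alb

- backend-alb is the upstream group: it forwards each request to one of the server entries below.

- server 127.0.0.1:5001 (and 5002, 5003): the upstream targets requests are forwarded to.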
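nginx's default upstream method is round robin: with equal weights, successive requests go to the listed servers in turn. The Python sketch below models that rotation with `itertools.cycle` for the three `backend-alb` servers. It ignores weights, failed servers and per-worker state, all of which real nginx takes into account.

```python
from itertools import cycle, islice

BACKEND_ALB = ["127.0.0.1:5001", "127.0.0.1:5002", "127.0.0.1:5003"]

def round_robin(servers, requests: int):
    """Return the upstream server chosen for each of `requests` consecutive requests."""
    return list(islice(cycle(servers), requests))

for n, server in enumerate(round_robin(BACKEND_ALB, 5), start=1):
    print(f"request {n} -> {server}")
```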

# nginx.conf

events { worker_connections 1024; }

http{

upstream backend-alb {

server 127.0.0.1:5001;

server 127.0.0.1:5002;

server 127.0.0.1:5003;

}

server {

listen 80 default_server;

location / {

proxy_pass http://backend-alb;

}

}

}

- Restart the daemon

yji@hostos1:/etc/nginx$ sudo systemctl restart nginx.service

yji@hostos1:/etc/nginx$ sudo systemctl status nginx.service

- Open http://192.168.56.101/ and keep refreshing: you can see it acting as a load balancer.

- Remove it

yji@hostos1:/etc/nginx$ sudo systemctl stop nginx.service

[sudo] password for yji:

yji@hostos1:/etc/nginx$ sudo apt -y autoremove nginx

# If nothing shows up, nginx was removed cleanly.

yji@hostos1:/etc/nginx$ sudo netstat -nlp | grep 80

yji@hostos1:/etc/nginx$

HAProxy -> L4 (TCP; NLB, network LB) / L7 (HTTP)

📔 LB lab 3) Assignment ~

Build an ALB on an nginx container, using an image made of Apache + PHP

# Dockerfile

FROM php:7.2-apache

MAINTAINER datastory Hub <hylee@dshub.cloud>

ADD index.php3 /var/www/html/index.php

EXPOSE 80

CMD ["/usr/sbin/apache2ctl", "-D", "FOREGROUND"]


# nginx.conf

# Check the gateway!!

events { worker_connections 1024; }

http{

upstream backend-alb {

# gateway IP : host port published by each container (⭐ check both ⭐)

server 172.20.0.1:10001;

server 172.20.0.1:10002;

server 172.20.0.1:10003;

}

server {

listen 80 default_server;

location / {

proxy_pass http://backend-alb;

}

}

}

# Create three containers

# The container-side port must be 80 (Apache inside the image listens on 80)

docker run -itd -e SERVER_PORT=10001 -p 10001:80 -h alb-php01 --net=apache-net -u root --name alb-php01 phpserver:2.0

docker run -itd -e SERVER_PORT=10002 -p 10002:80 -h alb-php02 --net=apache-net -u root --name alb-php02 phpserver:2.0

docker run -itd -e SERVER_PORT=10003 -p 10003:80 -h alb-php03 --net=apache-net -u root --name alb-php03 phpserver:2.0
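Each `server` line in the upstream block mirrors one `-p <host port>:80` mapping reached through the bridge gateway, so the config can be generated rather than typed. The hypothetical Python helper below renders the same `nginx.conf` from a gateway IP and a list of published ports. The gateway 172.20.0.1 is the value assumed in the config above; check yours with `docker network inspect apache-net`.

```python
def render_nginx_conf(gateway: str, host_ports, listen: int = 80) -> str:
    """Render an nginx.conf that load-balances over gateway:port for each published port."""
    servers = "\n".join(f"        server {gateway}:{port};" for port in host_ports)
    return (
        "events { worker_connections 1024; }\n"
        "http {\n"
        "    upstream backend-alb {\n"
        f"{servers}\n"
        "    }\n"
        "    server {\n"
        f"        listen {listen} default_server;\n"
        "        location / {\n"
        "            proxy_pass http://backend-alb;\n"
        "        }\n"
        "    }\n"
        "}\n"
    )

print(render_nginx_conf("172.20.0.1", [10001, 10002, 10003]))
```

Write the result to nginx.conf, then copy it into the nginx container and restart it as described next.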

# Copy the file to edit out to the host / edit it / copy it back into the container

docker cp server:/etc/nginx/nginx.conf ./nginx.conf

docker cp nginx.conf server:/etc/nginx/nginx.conf

# Since we run nginx as a container,

# instead of sudo systemctl restart nginx.service

# run docker restart [nginx container name].

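Scratch notes

cvf tvf xvf (tar: create / list / extract)

255.255.255.0 = /24 = 256 addresses (64 addresses would be a /26, 255.255.255.192)

`docker run -it ubuntu:14.04 ping 192.168.56.1`: ping the Windows host as soon as the container starts

- Question: so whenever we use docker run without --net, is the container attached to docker0? Yes. It joins the default network named `bridge`, whose Linux bridge is docker0; the name to pass is `--net=bridge`, not docker0.

⭐⭐ Bridge = based on MAC addresses! Layer 2!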

docker stop $(docker ps -a -q)

docker start cadvisor

📔 ⭐ 📕 🐳