Wine Data Analysis Using a Decision Tree - Wine

# Load the data

import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'

white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')

white_wine = pd.read_csv(white_url, sep=';')

# Both datasets have the same structure

red_wine.head()

white_wine.head()

# Column names

white_wine.columns

# Combine the two datasets, labeling red wine as color=1 and white wine as color=0

red_wine['color'] = 1

white_wine['color'] = 0

wine = pd.concat([red_wine, white_wine])

wine.info()

# The quality column has grades from 3 to 9

wine['quality'].unique()

# Histogram

import plotly.express as px

fig = px.histogram(wine, x='quality')

fig.show()

# Histogram of quality grades, split by red/white wine

fig = px.histogram(wine, x='quality', color = 'color')

fig.show()

Wine Data Analysis Using a Decision Tree - Red/White Wine Classifier

# Separate the label

X = wine.drop(['color'], axis=1)

Y = wine['color']

# Split the data into training and test sets

from sklearn.model_selection import train_test_split

import numpy as np

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.2, random_state=13)

np.unique(Y_train, return_counts=True)

# How were the training and test sets divided? Compare their quality distributions

import plotly.graph_objects as go

fig = go.Figure()

fig.add_trace(go.Histogram(x=X_train['quality'], name='Train'))

fig.add_trace(go.Histogram(x=X_test['quality'], name='Test'))

fig.update_layout(barmode = 'overlay')

fig.update_traces(opacity = 0.75)

fig.show()

# Decision tree

from sklearn.tree import DecisionTreeClassifier

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, Y_train)

from sklearn.metrics import accuracy_score

y_pred_tr = wine_tree.predict(X_train) # predict on the training set too, to compare the numbers

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(Y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(Y_test, y_pred_test))

Wine Data Analysis Using a Decision Tree - Data Preprocessing

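# Boxplots of a few wine features

# Each column has a different min/max range, as well as a different mean and variance.

# Such differences in scale between features can get in the way of finding the optimal model.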

fig = go.Figure()

fig.add_trace(go.Box(y=X['fixed acidity'], name = 'fixed acidity'))

fig.add_trace(go.Box(y=X['chlorides'], name='chlorides'))

fig.add_trace(go.Box(y=X['quality'], name='quality'))

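fig.show()

# This is where MinMaxScaler and StandardScaler come in

# For a decision tree, this kind of preprocessing is essentially meaningless: each split compares a single feature with a threshold, so rescaling a feature does not change which samples land on each side.

# It mainly pays off for models trained by optimizing a cost function.

# Whether MinMaxScaler or StandardScaler works better can only be found out by trying both.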

from sklearn.preprocessing import MinMaxScaler, StandardScaler

MMS = MinMaxScaler()

SS = StandardScaler()

SS.fit(X)

MMS.fit(X)

X_ss = SS.transform(X)

X_mms = MMS.transform(X)

X_ss_pd = pd.DataFrame(X_ss, columns=X.columns)

X_mms_pd = pd.DataFrame(X_mms, columns=X.columns)
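The two scalers fitted above apply simple per-column formulas. The sketch below recomputes both by hand with NumPy on a small made-up array `X_demo` (a stand-in for the wine columns, not real data) and compares the result with scikit-learn's output. StandardScaler divides by the population standard deviation (`ddof=0`), which is also NumPy's default for `std`.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Made-up feature matrix: two columns on very different scales
X_demo = np.array([[7.4, 0.076],
                   [6.8, 0.045],
                   [8.1, 0.120],
                   [5.9, 0.031]])

# MinMaxScaler: (x - min) / (max - min), per column
mms_manual = (X_demo - X_demo.min(axis=0)) / (X_demo.max(axis=0) - X_demo.min(axis=0))

# StandardScaler: (x - mean) / std, per column, with the population std (ddof=0)
ss_manual = (X_demo - X_demo.mean(axis=0)) / X_demo.std(axis=0)

mms_sklearn = MinMaxScaler().fit_transform(X_demo)
ss_sklearn = StandardScaler().fit_transform(X_demo)

print(np.allclose(mms_manual, mms_sklearn))
print(np.allclose(ss_manual, ss_sklearn))
```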

# MinMaxScaler: rescales each feature so that its minimum becomes 0 and its maximum becomes 1

fig = go.Figure()

fig.add_trace(go.Box(y=X_mms_pd['fixed acidity'], name='fixed acidity'))

fig.add_trace(go.Box(y=X_mms_pd['chlorides'], name='chlorides'))

fig.add_trace(go.Box(y=X_mms_pd['quality'], name = 'quality'))

fig.show()

# StandardScaler: rescales each feature to mean 0 and standard deviation 1

def px_box(target_df):

    # Draw boxplots of three features of target_df side by side

    fig = go.Figure()

    fig.add_trace(go.Box(y=target_df['fixed acidity'], name='fixed acidity'))

    fig.add_trace(go.Box(y=target_df['chlorides'], name='chlorides'))

    fig.add_trace(go.Box(y=target_df['quality'], name='quality'))

    fig.show()

px_box(X_ss_pd)

# Retrain with MinMaxScaler applied

# Again: for a decision tree, this kind of preprocessing has almost no effect.

X_train, X_test, Y_train, Y_test = train_test_split(X_mms_pd, Y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, Y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(Y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(Y_test, y_pred_test) )

# Apply StandardScaler

X_train, X_test, Y_train, Y_test = train_test_split(X_ss_pd, Y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, Y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(Y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(Y_test, y_pred_test) )
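The claim that scaling barely matters for a decision tree can be checked directly. MinMaxScaler and StandardScaler are increasing linear maps applied per column, so the sorted order of each feature, and therefore every candidate split, stays the same. The sketch below uses synthetic data from `make_classification` (a stand-in, not the wine table; the columns are rounded and deliberately put on different scales) and compares the split features, feature importances, and predictions of three depth-2 trees.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.preprocessing import MinMaxScaler, StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (not the wine table)
X_demo, y_demo = make_classification(n_samples=300, n_features=4, n_informative=3,
                                     n_redundant=0, random_state=13)
# Round, then put the columns on very different scales, like the wine features
X_demo = np.round(X_demo, 2) * np.array([1.0, 100.0, 0.1, 10.0])
X_mms_demo = MinMaxScaler().fit_transform(X_demo)
X_ss_demo = StandardScaler().fit_transform(X_demo)

def fit_tree(features):
    """Fit the same depth-2 tree used in the text."""
    return DecisionTreeClassifier(max_depth=2, random_state=13).fit(features, y_demo)

raw_tree, mms_tree, ss_tree = fit_tree(X_demo), fit_tree(X_mms_demo), fit_tree(X_ss_demo)

# tree_.feature lists the feature index used at each node (negative at leaves)
same_splits = all(np.array_equal(raw_tree.tree_.feature, t.tree_.feature)
                  for t in (mms_tree, ss_tree))
same_importances = all(np.allclose(raw_tree.feature_importances_, t.feature_importances_)
                       for t in (mms_tree, ss_tree))
same_predictions = (np.array_equal(raw_tree.predict(X_demo), mms_tree.predict(X_mms_demo))
                    and np.array_equal(raw_tree.predict(X_demo), ss_tree.predict(X_ss_demo)))
print(same_splits, same_importances, same_predictions)
```

Only the threshold values differ between the three trees; they are expressed in each version's own units.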

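# How does the decision tree tell white wine from red wine?

# total sulfur dioxide seems to play an important role.

# Key features that separate red and white wine

# Raising max_depth changes these values as well.

dict(zip(X_train.columns, wine_tree.feature_importances_))

Wine Data Analysis Using a Decision Tree - Binary Classification of Taste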

# Binarize the quality column (quality > 5 → 1, otherwise 0)

wine['taste'] = [1 if grade > 5 else 0 for grade in wine['quality']] # ★

wine.info()
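The list comprehension marked ★ can also be written as one vectorized comparison. A sketch on a few made-up grades (the `demo` frame and its column names are illustrative only):

```python
import pandas as pd

# Made-up quality grades standing in for wine['quality']
demo = pd.DataFrame({'quality': [3, 5, 6, 7, 5, 9, 4, 6]})

# List comprehension, as in the text
demo['taste_loop'] = [1 if grade > 5 else 0 for grade in demo['quality']]

# Vectorized equivalent: compare the whole column at once, then cast bool -> int
demo['taste_vec'] = (demo['quality'] > 5).astype(int)

print(demo)
print((demo['taste_loop'] == demo['taste_vec']).all())
```

Both produce the same labels; the vectorized form avoids a Python-level loop, which matters more as the table grows.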

# Follow the same steps as for the red/white wine classifier

X = wine.drop(['taste'], axis=1)

Y = wine['taste']

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, Y_train)

# 100% accuracy? → That should make us suspicious

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(Y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(Y_test, y_pred_test))

# Let's find out why this happened

# taste was created from quality, so the quality column should have been removed from the features (otherwise the label leaks into X)

import matplotlib.pyplot as plt

import sklearn.tree as tree

plt.figure(figsize=(12, 8))

tree.plot_tree(wine_tree, feature_names=X.columns)

# Try again

X = wine.drop(['taste', 'quality'], axis=1)

Y = wine['taste']

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, Y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(Y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(Y_test, y_pred_test))

# What makes a wine “tasty”?

import matplotlib.pyplot as plt

import sklearn.tree as tree

plt.figure(figsize=(12, 8))

tree.plot_tree(wine_tree, feature_names=X.columns)

Pipeline

import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'

white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')

white_wine = pd.read_csv(white_url, sep=';')

red_wine['color'] = 1

white_wine['color'] = 0

wine = pd.concat([red_wine, white_wine])X = wine.drop(['color'], axis=1)

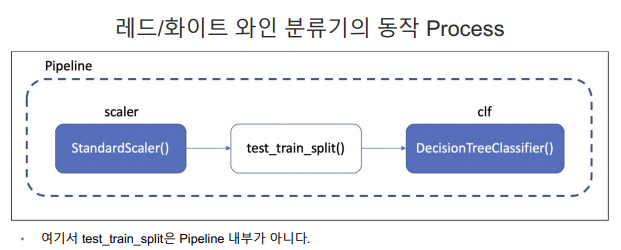

Y = wine['color']- 레드/화이트 와인 분류기의 동작 Process

[scaler] StandardScaler() → test_train_split() → [clf] DecisionTreeClassifier() - 여기서 test_train_split은 Pipeline 내부가 아니다
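A minimal sketch of that process with scikit-learn's `Pipeline`. The data is synthetic (`make_classification`) and only stands in for the wine features and the `color` label; the step names `scaler` and `clf` match the ones used later in this document.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine features X and the color label Y
X_demo, y_demo = make_classification(n_samples=500, n_features=6, random_state=13)

# train_test_split stays outside the Pipeline
X_train, X_test, y_train, y_test = train_test_split(
    X_demo, y_demo, test_size=0.2, random_state=13)

# Each step is a (name, estimator) pair; the last step is the model
pipe = Pipeline([('scaler', StandardScaler()),
                 ('clf', DecisionTreeClassifier(max_depth=2, random_state=13))])

# fit(): the scaler is fitted on X_train, X_train is transformed, then the tree is trained
pipe.fit(X_train, y_train)

# score(): X_test goes through the already fitted scaler, then the tree predicts
pipe_acc = pipe.score(X_test, y_test)
print('Test Acc :', pipe_acc)

# Step parameters are addressed as <step name>__<parameter name>
pipe.set_params(clf__max_depth=4)
print(pipe.named_steps['clf'].max_depth)  # 4
```

Because the scaler is fitted inside the pipeline on `X_train` only, the test rows do not influence the scaling statistics. The `clf__max_depth` naming is the convention `GridSearchCV` relies on when tuning a pipeline.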

Hyperparameter Tuning - Cross Validation

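- Cross validation
    - It is also useful for accurately representing how a model performs on the data you actually have.
    - Overfitting: the model is excessively optimized to the training data, so its predictive performance drops sharply on new, general data.
- holdout
- k-fold cross validation (k is a number)
- stratified k-fold cross validation
- After validation is finished, run the final evaluation on the test data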
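The workflow in this list can be sketched end to end. The data is synthetic (`make_classification`), and the depth of 4 and the shuffled 5-fold `StratifiedKFold` are illustrative choices, not settings taken from the lecture.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (not the wine table)
X_demo, y_demo = make_classification(n_samples=600, n_features=8, random_state=13)

# 1) Holdout: set the test set aside first; stratify keeps the class ratio equal in both parts
X_dev, X_test, y_dev, y_test = train_test_split(
    X_demo, y_demo, test_size=0.2, stratify=y_demo, random_state=13)

# 2) Stratified k-fold cross validation on the remaining (development) data only
skfold = StratifiedKFold(n_splits=5, shuffle=True, random_state=13)
model = DecisionTreeClassifier(max_depth=4, random_state=13)
val_scores = cross_val_score(model, X_dev, y_dev, cv=skfold)
print('validation acc per fold:', val_scores.round(3))
print('mean validation acc    :', round(val_scores.mean(), 3))

# 3) Final evaluation: refit on all development data, then score once on the untouched test set
model.fit(X_dev, y_dev)
test_acc = model.score(X_test, y_test)
print('test acc:', round(test_acc, 3))
```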


# Implementing cross validation

# simple example

import numpy as np

from sklearn.model_selection import KFold

X = np.array([[1, 2], [3, 4], [1, 2], [3, 4]])

Y = np.array([1, 2, 3, 4])

kf = KFold(n_splits=2)

print(kf.get_n_splits(X))

print(kf)

for train_idx, test_idx in kf.split(X):

    print('--- idx')

    print(train_idx, test_idx)

    print('--- train data')

    print(X[train_idx])

    print('--- val data')

    print(X[test_idx])

# Back to the data from the wine taste classifier

# Load the data

import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'

white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')

white_wine = pd.read_csv(white_url, sep=';')

red_wine['color'] = 1

white_wine['color'] = 0

wine = pd.concat([red_wine, white_wine])

# Prepare the data for the taste classifier

wine['taste'] = [1 if grade > 5 else 0 for grade in wine['quality']]

X = wine.drop(['taste', 'quality'], axis=1)

Y = wine['taste']

# How does the earlier decision tree model do?

# But wait: what if someone asks, “Is splitting the data like that really the best option?”

# or “How can we trust that accuracy?”
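One way to see why the question matters: keep the model fixed and change only the `random_state` of the split. The sketch uses synthetic data (`make_classification` with some label noise) as an assumption in place of the wine table; the spread between the lowest and highest test accuracy is the uncertainty a single split hides.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data with 10% of labels flipped (not the wine table)
X_demo, y_demo = make_classification(n_samples=500, n_features=8, flip_y=0.1,
                                     random_state=13)

test_accs = []
for seed in range(10):
    # Only the split changes; the model settings stay the same as in the text
    X_tr, X_te, y_tr, y_te = train_test_split(X_demo, y_demo, test_size=0.2,
                                              random_state=seed)
    tree_model = DecisionTreeClassifier(max_depth=2, random_state=13).fit(X_tr, y_tr)
    test_accs.append(accuracy_score(y_te, tree_model.predict(X_te)))

print('test acc per split:', [round(acc, 3) for acc in test_accs])
print('min / max:', min(test_accs), max(test_accs))
```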

from sklearn.model_selection import train_test_split

from sklearn.tree import DecisionTreeClassifier

from sklearn.metrics import accuracy_score

X_train, X_test, Y_train, Y_test = train_test_split(X, Y, test_size=0.2, random_state=13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(X_train, Y_train)

y_pred_tr = wine_tree.predict(X_train)

y_pred_test = wine_tree.predict(X_test)

print('Train Acc : ', accuracy_score(Y_train, y_pred_tr))

print('Test Acc : ', accuracy_score(Y_test, y_pred_test))

# KFold

from sklearn.model_selection import KFold

kfold = KFold(n_splits=5)  # lowercase name so the instance does not shadow the KFold class

wine_tree_cv = DecisionTreeClassifier(max_depth=2, random_state=13)

# KFold.split() yields index arrays, not the data itself

for train_idx, test_idx in kfold.split(X):

    print(len(train_idx), len(test_idx))

# Accuracy after training on each fold

# A model does not have just one accuracy: each fold gives its own

cv_accuracy = []

for train_idx, test_idx in kfold.split(X):

    X_train, X_test = X.iloc[train_idx], X.iloc[test_idx]

    Y_train, Y_test = Y.iloc[train_idx], Y.iloc[test_idx]

    wine_tree_cv.fit(X_train, Y_train)

    pred = wine_tree_cv.predict(X_test)

    cv_accuracy.append(accuracy_score(Y_test, pred))

cv_accuracy

# If the fold accuracies do not vary much, use their mean as the representative value

np.mean(cv_accuracy)

# StratifiedKFold

# https://continuous-development.tistory.com/166

from sklearn.model_selection import StratifiedKFold

skfold = StratifiedKFold(n_splits=5)

wine_tree_cv = DecisionTreeClassifier(max_depth=2, random_state=13)

cv_accuracy = []

for train_idx, test_idx in skfold.split(X, Y):

    X_train = X.iloc[train_idx]

    X_test = X.iloc[test_idx]

    Y_train = Y.iloc[train_idx]

    Y_test = Y.iloc[test_idx]

    wine_tree_cv.fit(X_train, Y_train)

    pred = wine_tree_cv.predict(X_test)

    cv_accuracy.append(accuracy_score(Y_test, pred))

cv_accuracy

# The mean accuracy comes out worse

np.mean(cv_accuracy)

# An easier way to run cross validation

from sklearn.model_selection import cross_val_score

skfold = StratifiedKFold(n_splits=5)

wine_tree_cv = DecisionTreeClassifier(max_depth=2, random_state=13)

cross_val_score(wine_tree_cv, X, Y, scoring=None, cv=skfold)
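`cross_val_score` performs the same fit-predict-score loop written out by hand above. A quick check on synthetic data (an assumption, not the wine table) that a manual `StratifiedKFold` loop and a single `cross_val_score` call give the same fold accuracies:

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (not the wine table)
X_demo, y_demo = make_classification(n_samples=400, n_features=6, random_state=13)
skfold = StratifiedKFold(n_splits=5)
model = DecisionTreeClassifier(max_depth=2, random_state=13)

# Manual loop, in the same style as the StratifiedKFold code above
manual = []
for train_idx, test_idx in skfold.split(X_demo, y_demo):
    model.fit(X_demo[train_idx], y_demo[train_idx])
    manual.append(accuracy_score(y_demo[test_idx], model.predict(X_demo[test_idx])))

# One call; with scoring=None a classifier is scored by its own score() method (accuracy)
auto = cross_val_score(model, X_demo, y_demo, scoring=None, cv=skfold)

print(np.round(manual, 4))
print(np.round(auto, 4))
print('identical:', np.allclose(manual, auto))
```

Neither KFold nor StratifiedKFold shuffles by default, so the folds follow the row order. Since `wine` was built by concatenating all red wines before all white wines, a splitter such as `StratifiedKFold(n_splits=5, shuffle=True, random_state=13)` is one way to remove that ordering effect.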

# A deeper tree does not necessarily mean better accuracy

wine_tree_cv = DecisionTreeClassifier(max_depth=5, random_state=13)

cross_val_score(wine_tree_cv, X, Y, scoring=None, cv=skfold)

def skfold_dt(depth):

    # Print the 5-fold stratified CV accuracies of a tree with the given max_depth

    from sklearn.model_selection import cross_val_score

    skfold = StratifiedKFold(n_splits=5)

    wine_tree_cv = DecisionTreeClassifier(max_depth=depth, random_state=13)

    print(cross_val_score(wine_tree_cv, X, Y, scoring=None, cv=skfold))

skfold_dt(3)

# To see the train score as well

# Here we can also observe overfitting

from sklearn.model_selection import cross_validate

cross_validate(wine_tree_cv, X, Y, scoring=None, cv=skfold, return_train_score=True)
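The `train_score` and `test_score` arrays returned by `cross_validate` make overfitting visible as a gap between the two. A sketch on synthetic, slightly noisy data (an assumption in place of the wine table) comparing three depths, where `max_depth=None` lets the tree grow until every leaf is pure:

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data with some label noise (not the wine table)
X_demo, y_demo = make_classification(n_samples=400, n_features=8, flip_y=0.1,
                                     random_state=13)
skfold = StratifiedKFold(n_splits=5)

train_means, test_means = {}, {}
for depth in [2, 5, None]:  # None: grow until every leaf is pure
    result = cross_validate(DecisionTreeClassifier(max_depth=depth, random_state=13),
                            X_demo, y_demo, cv=skfold, return_train_score=True)
    train_means[depth] = result['train_score'].mean()
    test_means[depth] = result['test_score'].mean()
    print(f'max_depth={depth}: train={train_means[depth]:.3f} '
          f'test={test_means[depth]:.3f} '
          f'gap={train_means[depth] - test_means[depth]:.3f}')
```

The fully grown tree scores 1.0 on its own training folds while its validation accuracy stays below that, which is the overfitting pattern mentioned above.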

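Hyperparameter Tuning

- Hyperparameter tuning: adjusting the setting values (hyperparameters) that control a model in order to secure its performance

- What to tune: for a decision tree, max_depth is the one worth tuning.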
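Tuning `max_depth` amounts to scoring each candidate value with cross validation and keeping the best one. The sketch below does this by hand and then with `GridSearchCV`, on synthetic data (an assumption, not the wine table) with the same candidate depths used later in this section:

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data (not the wine table)
X_demo, y_demo = make_classification(n_samples=500, n_features=8, flip_y=0.1,
                                     random_state=13)
depths = [2, 4, 7, 10]

# By hand: mean 5-fold CV accuracy for every candidate depth
manual_scores = {
    depth: cross_val_score(DecisionTreeClassifier(max_depth=depth, random_state=13),
                           X_demo, y_demo, cv=5).mean()
    for depth in depths
}
best_depth = max(manual_scores, key=manual_scores.get)
print('manual:', manual_scores, '-> best max_depth =', best_depth)

# GridSearchCV automates the same loop over the parameter grid
grid = GridSearchCV(DecisionTreeClassifier(random_state=13),
                    param_grid={'max_depth': depths}, cv=5)
grid.fit(X_demo, y_demo)
print('grid  :', grid.best_params_, grid.best_score_)
```

With the default `refit=True`, `GridSearchCV` also retrains the best configuration on all of the data, which is what `best_estimator_` holds.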

import pandas as pd

red_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-red.csv'

white_url = 'https://raw.githubusercontent.com/PinkWink/ML_tutorial/master/dataset/winequality-white.csv'

red_wine = pd.read_csv(red_url, sep=';')

white_wine = pd.read_csv(white_url, sep=';')

red_wine['color'] = 1

white_wine['color'] = 0

wine = pd.concat([red_wine, white_wine])

wine['taste'] = [1 if grade > 5 else 0 for grade in wine['quality']]

X = wine.drop(['taste', 'quality'], axis=1)

Y = wine['taste']

# GridSearchCV

# cv stands for cross validation

from sklearn.model_selection import GridSearchCV

from sklearn.tree import DecisionTreeClassifier

params = {'max_depth' : [2, 4, 7, 10]}

gridsearch = GridSearchCV(estimator=DecisionTreeClassifier(random_state=13), param_grid=params, cv=5)

gridsearch.fit(X, Y)

# GridSearchCV results

import pprint

pp = pprint.PrettyPrinter(indent=4)

pp.pprint(gridsearch.cv_results_)

# The best-performing model

gridsearch.best_estimator_

gridsearch.best_score_

gridsearch.best_params_

# To apply GridSearch to a model built with a Pipeline (pipeline parameters are named <step name>__<parameter>, e.g. clf__max_depth)

from sklearn.pipeline import Pipeline

from sklearn.tree import DecisionTreeClassifier

from sklearn.preprocessing import StandardScaler

estimators = [('scaler', StandardScaler()), ('clf', DecisionTreeClassifier(random_state=13))]

pipe = Pipeline(estimators)

param_grid = [{'clf__max_depth': [2, 4, 7, 10]}]

GridSearch = GridSearchCV(estimator=pipe, param_grid=param_grid, cv=5)

GridSearch.fit(X, Y)

# Best model

GridSearch.best_estimator_

# best_score_

GridSearch.best_score_

GridSearch.cv_results_

Summarizing Performance Results in a Table

# A handy trick: summarize the performance results in a table

# Check the mean and standard deviation of the accuracy

import pandas as pd

score_df = pd.DataFrame(GridSearch.cv_results_)

score_df[['params', 'rank_test_score', 'mean_test_score', 'std_test_score']]

💻 Source: 제로베이스 데이터 취업 스쿨 (Zerobase Data Job School)