Paper title: DeepLocalize: Fault Localization for Deep Neural Networks

https://arxiv.org/abs/2103.03376

📕 Summary

Abstract

- The paper proposes an approach and tool that automatically determines whether a deep neural network (DNN) model is buggy and identifies the root causes of DNN errors by analyzing historic trends in values propagated between layers.

- The approach is evaluated using a benchmark of 40 buggy models and patches, demonstrating its effectiveness in detecting and localizing faults compared to the existing debugging approach used in the Keras library.

Introduction

- Deep neural networks (DNNs) are a class of machine learning algorithms that have gained popularity because they succeed at tasks that traditional algorithms struggle with.

- DNNs are organized in layers of nodes, called neurons, whose weights are adjustable.

- Training passes inputs through the network, compares the output with the expected output, and adjusts the weights using backpropagation.

- DNNs are used in many software systems, which makes software engineering for DNNs essential.

- Previous work has shown that DNNs can contain bugs and that existing debugging techniques are not effective at localizing them.

-

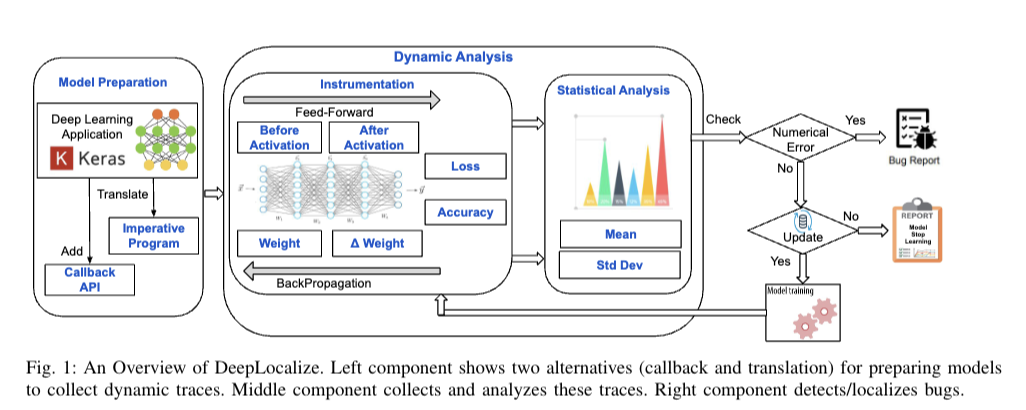

The paper proposes an approach and tool called DeepLocalize that automatically detects and localizes faults in DNN models .

The approach utilizes dynamic analysis and captures historic trends in values propagated between layers to identify faults and localize errors -

The evaluation of DeepLocalize using a benchmark of 40 buggy models shows that it outperforms the existing debugging approach used in the Keras library in terms of fault detection and localization

📕 Solution

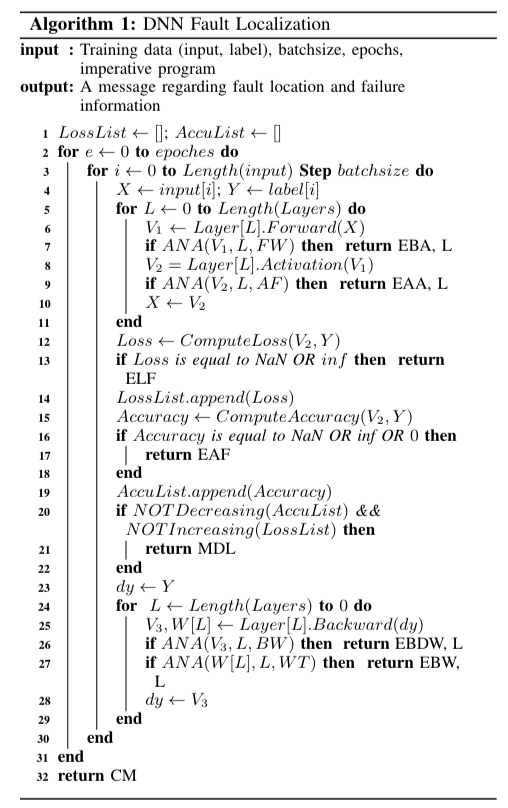

Algorithm 1: DNN Fault Localization

- The algorithm takes input in the form of training data, batch size, epochs, and an imperative program, and outputs a message regarding fault location and failure information.

- The algorithm starts by initializing two empty lists for Loss and Accuracy.

- It then loops over the specified number of epochs and, within each epoch, over each batch of input data.

- For each batch, the algorithm computes the forward pass for each layer and checks for any numerical errors using the ANA function.

- If any error is detected, the algorithm returns an error message along with the layer number where the error occurred.

- The algorithm then applies each layer's activation function and again checks the result for numerical errors.

- If any error is detected, the algorithm returns an error message along with the layer number where the error occurred.

- The algorithm then computes the loss and accuracy for the batch and checks whether either contains a numerical error or the accuracy is zero.

- If any error is detected, the algorithm returns an error message.

- The loss and accuracy values are then appended to their respective lists.

- The algorithm then checks whether the accuracy is not increasing and the loss is not decreasing. If both hold, training has stalled, and the algorithm returns a message indicating that the model is not learning.

- The algorithm then computes the backward pass for each layer and checks for any numerical errors using the ANA function.

- If any error is detected, the algorithm returns an error message along with the layer number where the error occurred.

- The algorithm then checks for any numerical errors in the weight matrix for each layer.

- If any error is detected, the algorithm returns an error message along with the layer number where the error occurred.

- Finally, the algorithm returns a message indicating successful completion of the fault localization process.
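The steps above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: it trains a small NumPy network (ReLU hidden layers, one sigmoid output, binary cross-entropy), replaces ANA with a hypothetical NaN/inf-only check `bad`, and judges learning by comparing the current batch with the one `window` batches earlier. The function `localize_faults`, the `window` parameter, and the message strings are illustrative only.

```python
import numpy as np


def bad(values):
    # Hypothetical stand-in for ANA: only flags NaN/inf. The zero-frequency
    # and stalled-trend checks of Algorithm 2 are omitted for brevity.
    return not np.all(np.isfinite(values))


def localize_faults(X, y, weights, lr=0.1, epochs=10, batch_size=16, window=5):
    """Sketch of Algorithm 1 for a NumPy MLP: ReLU hidden layers, a sigmoid
    output unit, and binary cross-entropy. `y` has shape (n, 1)."""
    weights = [W.copy() for W in weights]
    losses, accuracies = [], []
    last = len(weights) - 1
    for epoch in range(epochs):
        for b, start in enumerate(range(0, len(X), batch_size)):
            xb, yb = X[start:start + batch_size], y[start:start + batch_size]
            where = f"Epoch {epoch} batch {b}"

            # Forward pass: check each layer before and after its activation.
            acts = [xb]
            for l, W in enumerate(weights):
                z = acts[-1] @ W
                if bad(z):
                    return f"{where} layer {l}: numerical error in forward pass"
                a = 1.0 / (1.0 + np.exp(-z)) if l == last else np.maximum(z, 0.0)
                if bad(a):
                    return f"{where} layer {l}: numerical error in activation"
                acts.append(a)

            # Loss and accuracy for this batch.
            p = np.clip(acts[-1], 1e-7, 1 - 1e-7)
            loss = float(-np.mean(yb * np.log(p) + (1 - yb) * np.log(1 - p)))
            acc = float(np.mean((acts[-1] > 0.5) == (yb > 0.5)))
            if bad(loss) or acc == 0.0:
                return f"{where}: numerical error in loss or zero accuracy"
            losses.append(loss)
            accuracies.append(acc)

            # Learning check over the last `window` batches (window is an assumption).
            if len(losses) >= window:
                acc_up = accuracies[-1] > accuracies[-window]
                loss_down = losses[-1] < losses[-window]
                if not acc_up and not loss_down:
                    return f"{where}: model does not learn"

            # Backward pass: sigmoid + BCE gives dL/dz = (p - y) / batch at the output.
            delta = (acts[-1] - yb) / len(xb)
            for l in range(last, -1, -1):
                grad = acts[l].T @ delta
                if bad(grad):
                    return f"{where} layer {l}: numerical error in backward pass"
                if l > 0:
                    # Propagate through the pre-update weights and the ReLU derivative.
                    delta = (delta @ weights[l].T) * (acts[l] > 0)
                weights[l] = weights[l] - lr * grad
                if bad(weights[l]):
                    return f"{where} layer {l}: numerical error in weights"
    return "No fault detected"
```

With an all-zero initialization no gradient ever reaches the weights, so the loss stays at ln 2 and the learning check fires; a NaN in any weight matrix is caught in the forward pass of that layer.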

Algorithm 2

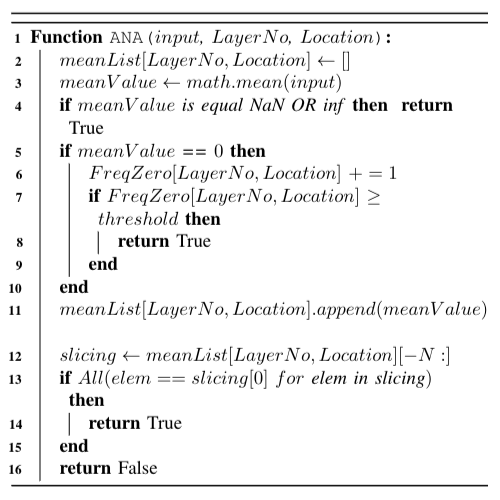

- Algorithm 2 defines a function, "ANA", that takes three inputs: "input", "LayerNo", and "Location".

- The function initializes an empty list, "meanList", indexed by "LayerNo" and "Location".

- The function computes the mean of "input" and assigns it to "meanValue".

- If "meanValue" is NaN (Not a Number) or infinite, the function returns True.

- If "meanValue" is zero, the function increments "FreqZero" for the corresponding "LayerNo" and "Location" by 1.

- If "FreqZero" for the corresponding "LayerNo" and "Location" is greater than or equal to a threshold, the function returns True.

- The function appends "meanValue" to "meanList" for the corresponding "LayerNo" and "Location".

- The function takes a slice of the last "N" elements of that list.

- If all elements in the slice are equal to its first element, the function returns True; otherwise, it returns False.

- Note: The purpose of this function is to identify faults in deep neural networks by analyzing historic trends in the values propagated between layers. It checks for numerical errors and monitors the model during training to determine the relevance of each layer and parameter to the DNN outcome.
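Algorithm 2 can be sketched in Python as a small stateful class. This is a hypothetical rendering, not the paper's code: the history ("meanList", "FreqZero") is kept across calls so that trends can accumulate, the defaults for the threshold and "N" (`zero_threshold`, `window`) are made-up values, and requiring a full window before the stalled-trend check is an assumption (without it, a one-element slice would trivially pass the check on the first call).

```python
import math
from collections import defaultdict

import numpy as np


class ANA:
    """Sketch of ANA: flags a (layer, location) whose mean value is NaN/inf,
    is zero too often, or has stopped changing over the last `window` calls."""

    def __init__(self, zero_threshold=3, window=5):
        # Hypothetical defaults for the paper's threshold and N.
        self.zero_threshold = zero_threshold
        self.window = window
        # History persists across calls, keyed by (LayerNo, Location).
        self.mean_list = defaultdict(list)
        self.freq_zero = defaultdict(int)

    def __call__(self, values, layer_no, location):
        key = (layer_no, location)
        mean_value = float(np.mean(values))

        # Numerical error: the mean is NaN or +/-inf.
        if math.isnan(mean_value) or math.isinf(mean_value):
            return True

        # Count how often this layer/location has produced a zero mean.
        if mean_value == 0.0:
            self.freq_zero[key] += 1
            if self.freq_zero[key] >= self.zero_threshold:
                return True

        # Record the trend and inspect the last `window` means.
        self.mean_list[key].append(mean_value)
        recent = self.mean_list[key][-self.window:]

        # Stalled value: the window is full (assumption) and all entries equal the first.
        if len(recent) == self.window and all(v == recent[0] for v in recent):
            return True
        return False
```

Keying the history by `(layer_no, location)` lets one instance monitor forward outputs, activations, gradients, and weights of every layer independently, which matches how Algorithm 1 calls ANA with a layer number and a location.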

Result

-

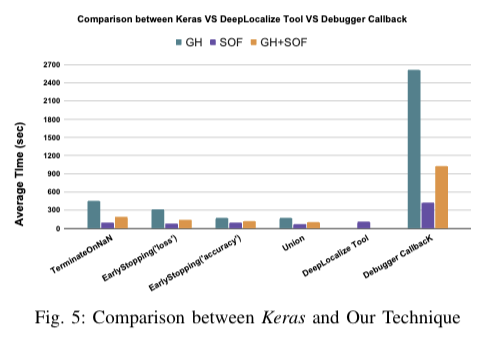

The proposed approach and tool, DeepLocalize, successfully identify and localize faults in deep neural network (DNN) models. It outperforms the existing debugging approach used in the Keras library, detecting a higher number of faults and localizing bugs that were previously undetected.

-

The evaluation of the approach using a benchmark of 40 buggy models and patches shows that DeepLocalize is able to identify bugs for 23 out of 29 buggy programs and successfully localize bugs for 34 out of 40 buggy programs. In comparison, the Keras methods, such as TerminateOnNaN(), EarlyStopping('loss'), EarlyStopping('accuracy'), and the three Keras methods together, were only able to identify 2, 24, 28, and 32 out of 40 bugs, respectively.

-

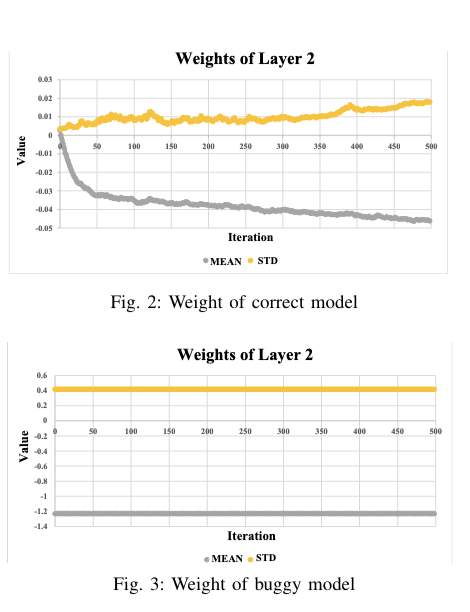

DeepLocalize's effectiveness is attributed to its use of a wider spectrum of important runtime values for analysis compared to the other methods, which only use one metric such as loss or accuracy. It also captures numerical errors and monitors the model during training to provide insights into the relevance of each layer and parameter on the DNN outcome.
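The difference between a single-metric monitor and per-layer monitoring can be illustrated with a toy case. This is a hypothetical example, not taken from the paper: a large negative bias kills every ReLU unit in the hidden layer, yet the loss stays a finite ln 2, so a loss-only check (in the spirit of Keras's TerminateOnNaN) sees nothing wrong while a per-layer check on mean outputs flags the dead layer. The helpers `loss_only_check` and `per_layer_check` are illustrative names.

```python
import numpy as np


def loss_only_check(loss):
    # Single-metric monitor: flags only a non-finite loss.
    return not np.isfinite(loss)


def per_layer_check(layer_outputs):
    # Returns the indices of layers whose mean output is NaN/inf or exactly zero.
    flagged = []
    for l, out in enumerate(layer_outputs):
        m = out.mean()
        if not np.isfinite(m) or m == 0.0:
            flagged.append(l)
    return flagged


# Hypothetical faulty model: a bias of -100 keeps every ReLU pre-activation negative.
rng = np.random.default_rng(0)
x = rng.normal(size=(32, 4))
y = (x[:, :1] > 0).astype(float)
W1, b1 = rng.normal(size=(4, 8)), np.full(8, -100.0)
W2 = rng.normal(size=(8, 1))

hidden = np.maximum(x @ W1 + b1, 0.0)           # all zeros: the layer is dead
prob = 1.0 / (1.0 + np.exp(-(hidden @ W2)))     # constant 0.5 for every sample
loss = float(-np.mean(y * np.log(prob) + (1 - y) * np.log(1 - prob)))

print(loss_only_check(loss))             # False: ln 2 is a finite loss
print(per_layer_check([hidden, prob]))   # [0]: the hidden layer is flagged
```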

📕 Conclusion

- The paper presents an automated approach and tool for detecting and localizing faults in deep neural network (DNN) models, which outperforms the existing debugging approach used in the Keras library.

- The approach utilizes dynamic analysis of DNN applications by converting them into an imperative representation and using a callback mechanism, enabling the insertion of probes for analyzing traces produced during training.

- The researchers have developed an algorithm that captures numerical errors and monitors the model during training to identify the root causes of DNN errors.

- An evaluation using a benchmark of 40 buggy models and patches shows that the proposed approach is more effective in detecting faults and localizing bugs compared to the state-of-the-practice Keras library.

- Future work includes exploring techniques to repair DNN bugs, investigating cases where the approach was unable to detect faults and localize errors, and exploring the use of DNN structure analysis for better localization.
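The callback-based probing mentioned above can be illustrated with a toy trainer. This sketch shows only the hook pattern, under heavy assumptions: the paper instruments Keras programs, whereas here `Callback`, `TraceProbe`, and `fit` are hypothetical names, the "model" is a single weight trained by gradient descent on (w·x − y)², and the probe's stopping rule (non-finite or increasing loss) is invented for illustration. The point is that the probe records a trace of runtime values after every batch without any change to the training code itself.

```python
import math


class Callback:
    """Minimal callback interface, loosely modeled on a per-batch training hook."""

    def on_batch_end(self, batch, logs):
        pass


class TraceProbe(Callback):
    """Hypothetical probe: records each batch's runtime values and asks the
    trainer to stop when the loss is non-finite or goes up."""

    def __init__(self):
        self.trace = []
        self.stop_training = False
        self.message = None

    def on_batch_end(self, batch, logs):
        self.trace.append((batch, dict(logs)))
        loss = logs["loss"]
        if not math.isfinite(loss):
            self.stop_training, self.message = True, f"batch {batch}: non-finite loss"
        elif len(self.trace) > 1 and loss > self.trace[-2][1]["loss"]:
            self.stop_training, self.message = True, f"batch {batch}: loss increased"


def fit(w, lr, steps, callbacks, x=1.0, y=2.0):
    """Toy trainer: gradient descent on (w*x - y)**2, calling probes after each step."""
    for batch in range(steps):
        loss = (w * x - y) ** 2
        grad = 2 * (w * x - y) * x
        w -= lr * grad
        logs = {"loss": loss, "grad": grad, "w": w}
        for cb in callbacks:
            cb.on_batch_end(batch, logs)
        if any(getattr(cb, "stop_training", False) for cb in callbacks):
            break
    return w
```

With `lr=0.25` the recorded losses fall from 4.0 to 1.0 to 0.25 and the probe stays silent; with `lr=2.0` the loss jumps from 4.0 to 36.0 on the second batch and the probe stops training there.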

Contribution

- The paper proposes an approach and tool that automatically determines whether a deep neural network (DNN) model is buggy and identifies the root causes of DNN errors by analyzing historic trends in values propagated between layers.

- The approach enables dynamic analysis of deep learning applications by converting them into an imperative representation and using a callback mechanism, allowing the insertion of probes for dynamic analysis over the traces produced by the DNN during training.

- The paper introduces an algorithm for identifying root causes by capturing numerical errors and monitoring the model during training, providing insights into the relevance of each layer and parameter on the DNN outcome.

- The researchers have collected a benchmark of 40 buggy models and patches from Stack Overflow and GitHub, which can be used to evaluate automated debugging tools and repair techniques.

- The evaluation of the approach using this benchmark shows that it is more effective than the existing debugging approach used in the Keras library, detecting a higher number of faults and localizing bugs that were previously undetected.