encoder and scaler

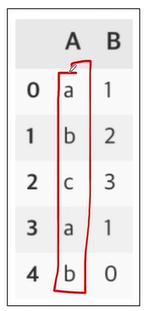

label_encoder

Machine learning models work only with numbers. Strings cannot be used directly, so label encoding maps each string category to an integer.

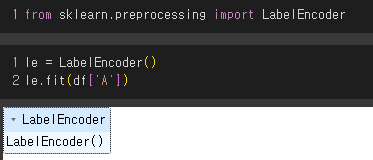

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

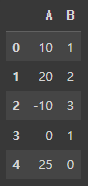

le.fit(df['A'])

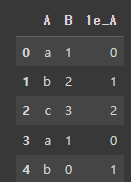

df['1e_A'] = le.transform(df['A'])

df
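To see which integer each string receives, here is a minimal sketch on a made-up column (the `toy` frame and the `le_demo` name are assumptions for illustration; the original `df` is not shown in these notes). `classes_` holds the sorted unique labels, and each label's position in it is its code.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical toy column; not the df used in the notes.
toy = pd.DataFrame({'A': ['b', 'a', 'c', 'a']})

le_demo = LabelEncoder()
le_demo.fit(toy['A'])

# classes_ is sorted; the index of each label is the integer it maps to.
mapping = {label: code for code, label in enumerate(le_demo.classes_)}
codes = le_demo.transform(toy['A'])

print(mapping)
print(codes)
```

The codes follow sorted order, not order of appearance, so they carry no meaning beyond being distinct identifiers.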

Converting the numbers back to the original strings is also possible:

le.inverse_transform(df['1e_A'])

min-max scaler

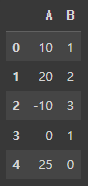

from sklearn.preprocessing import MinMaxScaler

mms = MinMaxScaler()

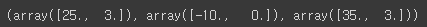

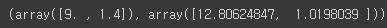

mms.fit(df)

mms.data_max_, mms.data_min_, mms.data_range_

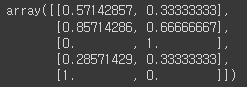

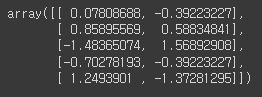

df_mms = mms.transform(df)

df_mms

The inverse transform is also possible

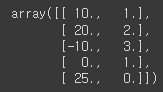

mms.inverse_transform(df_mms)
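As a sanity check on what the scaler computes, the sketch below compares `MinMaxScaler` with the per-column formula (x - min) / (max - min) on a small made-up array (`X` and `mms_demo` are illustrative names, not from the notes), and confirms that the inverse transform restores the input.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Made-up data: two columns on very different scales.
X = np.array([[1.0, 10.0],
              [2.0, 20.0],
              [5.0, 40.0]])

mms_demo = MinMaxScaler()
X_scaled = mms_demo.fit_transform(X)

# Manual min-max scaling, column by column.
manual = (X - X.min(axis=0)) / (X.max(axis=0) - X.min(axis=0))

# Round trip back to the original values.
X_back = mms_demo.inverse_transform(X_scaled)

print(np.allclose(X_scaled, manual))
print(np.allclose(X_back, X))
```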

standard scaler

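Standardization: rescales each feature to mean 0 and standard deviation 1 (z-scores), as in the standard normal distribution. It does not change the shape of the distribution.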

from sklearn.preprocessing import StandardScaler

sss = StandardScaler()

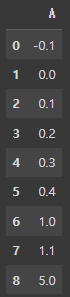

sss.fit(df)

Check the mean and standard deviation

sss.mean_, sss.scale_

Check the transformed data

df_sss = sss.transform(df)

df_sss
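A quick way to confirm what `StandardScaler` does is to reproduce it by hand. This sketch uses a made-up array (`X` and `sss_demo` are illustrative names); note that the scaler uses the population standard deviation (`ddof=0`), which differs from the pandas default of `ddof=1`.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Made-up data for illustration.
X = np.array([[1.0, 100.0],
              [2.0, 150.0],
              [3.0, 400.0],
              [6.0, 350.0]])

sss_demo = StandardScaler()
X_std = sss_demo.fit_transform(X)

# Manual z-score; np.std defaults to ddof=0, matching StandardScaler.
manual = (X - X.mean(axis=0)) / X.std(axis=0)

print(np.allclose(X_std, manual))
print(X_std.mean(axis=0).round(6), X_std.std(axis=0).round(6))
```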

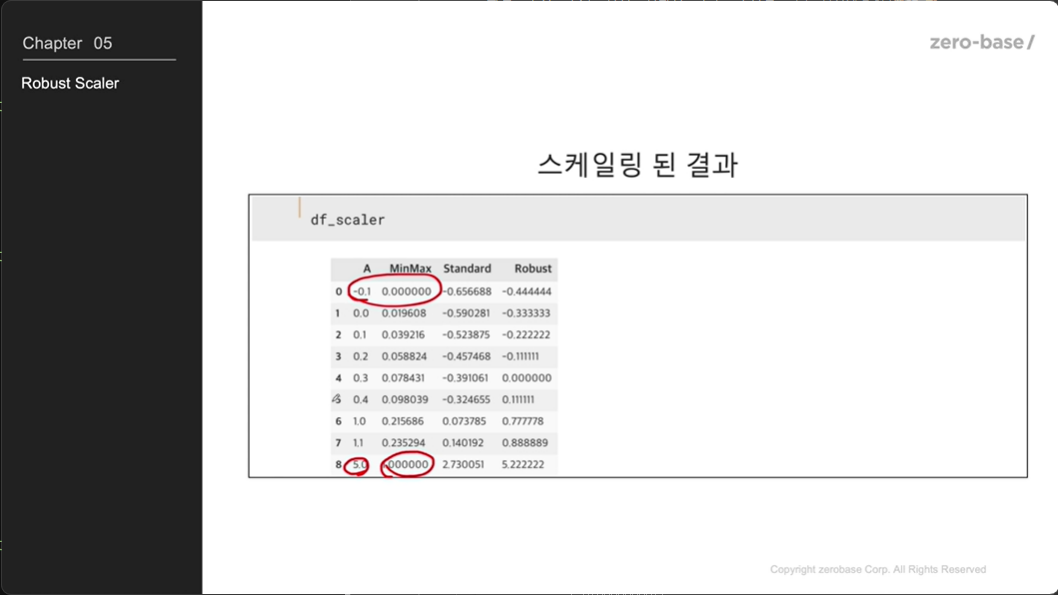

robust scaler

Centers the data on the median (and scales by the interquartile range)

from sklearn.preprocessing import RobustScaler

rs = RobustScaler()

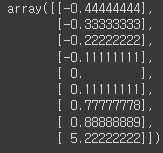

df_rs = rs.fit_transform(df)

df_rs
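With default settings, `RobustScaler` subtracts the median and divides by the interquartile range (75th minus 25th percentile). A minimal check on made-up data (`X` and `rs_demo` are illustrative names):

```python
import numpy as np
from sklearn.preprocessing import RobustScaler

# Made-up single column with one large outlier.
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [100.0]])

rs_demo = RobustScaler()
X_rs = rs_demo.fit_transform(X)

# Manual version: (x - median) / (Q3 - Q1).
q1, q3 = np.percentile(X, [25, 75], axis=0)
manual = (X - np.median(X, axis=0)) / (q3 - q1)

print(np.allclose(X_rs, manual))
print(rs_demo.center_, rs_demo.scale_)
```

Because the median and IQR barely move when the outlier changes, the bulk of the data keeps a stable scale while the outlier stays far away.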

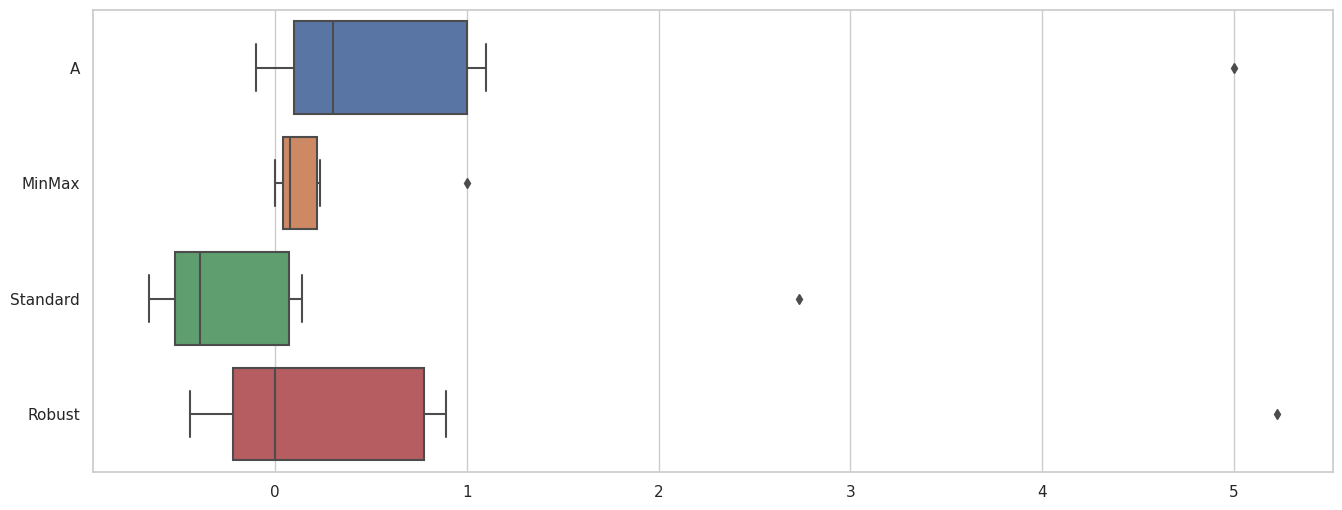

Scaler comparison

df_scaler['MinMax'] = mms.fit_transform(df)

df_scaler['Standard'] = sss.fit_transform(df)

df_scaler['Robust'] = rs.fit_transform(df)

import seaborn as sns

import matplotlib.pyplot as plt

sns.set_theme(style='whitegrid')

plt.figure(figsize=(16,6))

sns.boxplot(data=df_scaler, orient='h')

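- RobustScaler leaves the outliers extremely pronounced; it starts by shifting the median to 0.

- MinMaxScaler is for cases where outliers are not a concern.

- StandardScaler makes comparison easy when working alongside other statistical data.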
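The three observations above can be reproduced numerically without a plot. This is a sketch on a made-up column with a single outlier (`values` and `compare` are illustrative names, not the `df_scaler` from the notes):

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import MinMaxScaler, StandardScaler, RobustScaler

# Made-up column: five ordinary values and one outlier.
values = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 100.0]).reshape(-1, 1)

# Each scaler returns an (n, 1) array; ravel() flattens it into a column.
compare = pd.DataFrame({
    'MinMax': MinMaxScaler().fit_transform(values).ravel(),
    'Standard': StandardScaler().fit_transform(values).ravel(),
    'Robust': RobustScaler().fit_transform(values).ravel(),
})

print(compare.round(3))
```

In the printed table, MinMax squeezes the ordinary values into a narrow band near 0, while Robust keeps them spread around 0 and pushes the outlier far out.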

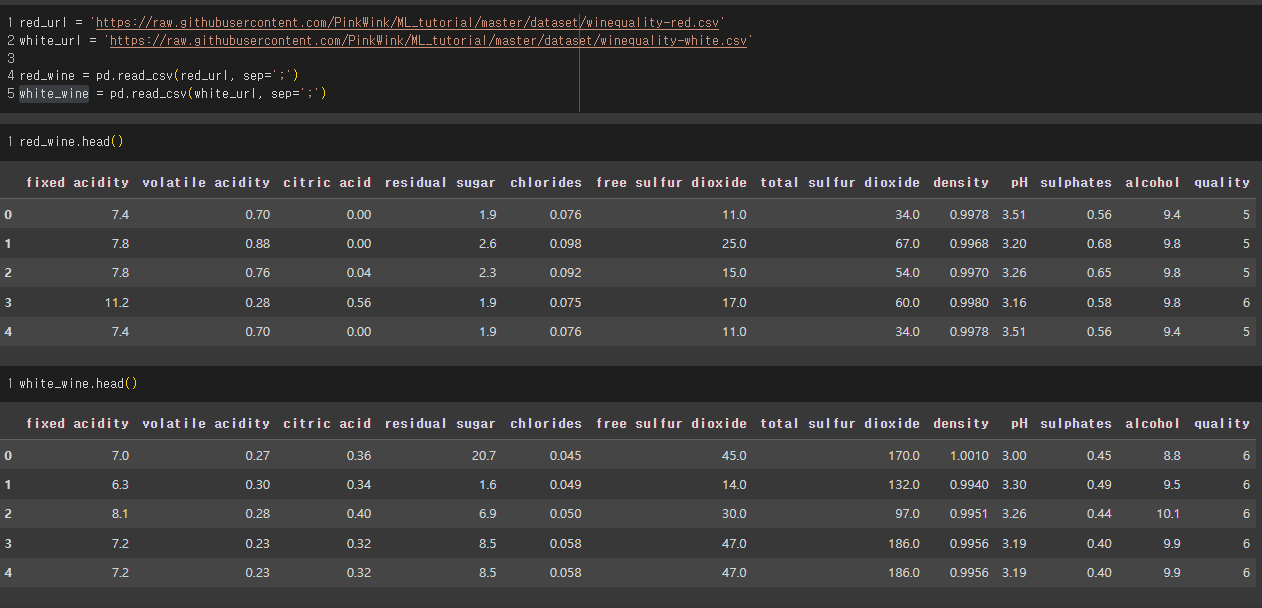

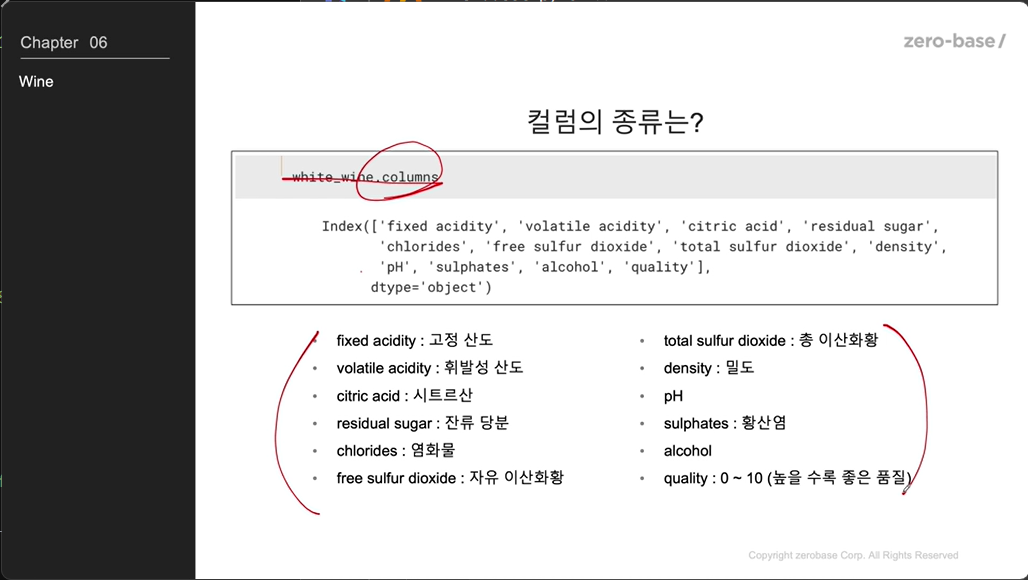

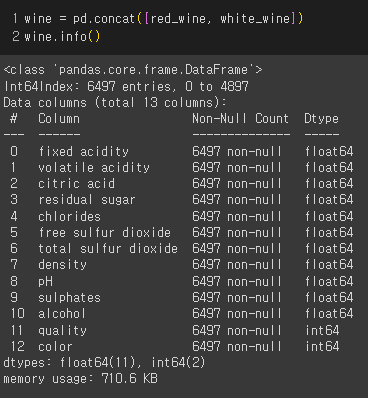

Wine data analysis using a Decision Tree

Merging the two datasets
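The merge code is not included in these notes. One plausible way to do it, sketched on tiny made-up tables (`red_toy`, `white_toy`, `wine_toy` and their values are hypothetical): tag each table with a `color` column, then concatenate. The 0 = white, 1 = red labeling is an assumption chosen to match `class_names=['W','R']` used later.

```python
import pandas as pd

# Hypothetical stand-ins for the red and white wine tables.
red_toy = pd.DataFrame({'alcohol': [9.4, 9.8], 'quality': [5, 6]})
white_toy = pd.DataFrame({'alcohol': [8.8, 10.1, 9.5], 'quality': [6, 6, 7]})

# Assumed labeling: 0 = white, 1 = red.
red_toy['color'] = 1.0
white_toy['color'] = 0.0

# Stack the rows and rebuild a clean 0..n-1 index.
wine_toy = pd.concat([red_toy, white_toy], ignore_index=True)

print(wine_toy)
```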

Checking the data

wine['quality'].unique()

array([5, 6, 7, 4, 8, 3, 9])

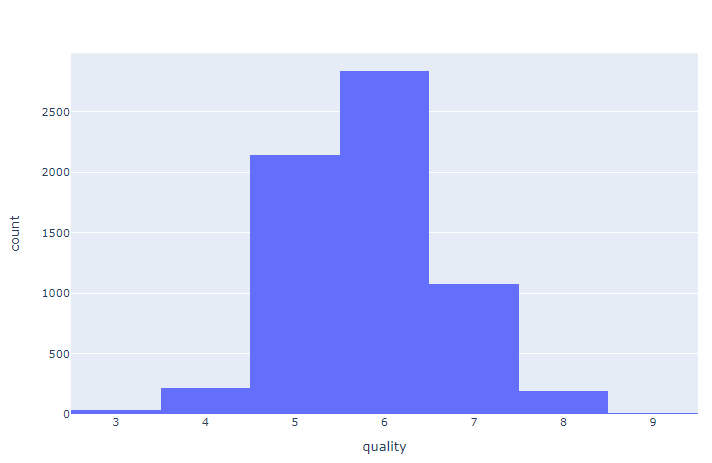

import plotly.express as px

px.histogram(wine, x='quality')

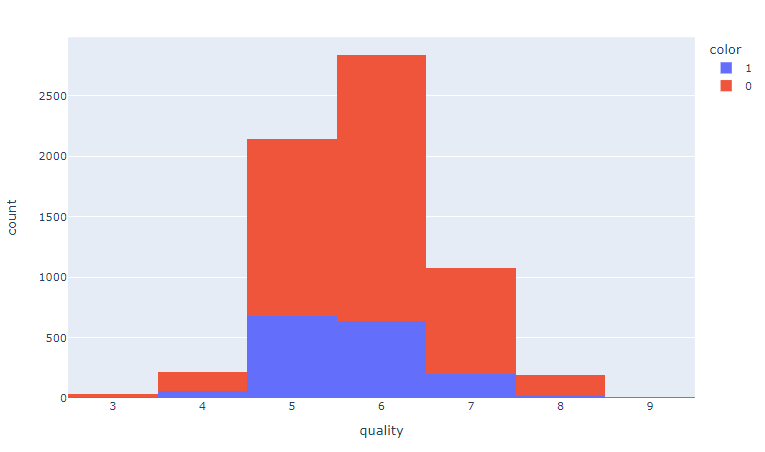

px.histogram(wine, x='quality', color='color')

# color: 0 = white, 1 = red (consistent with class_names=['W','R'] and the class counts below)

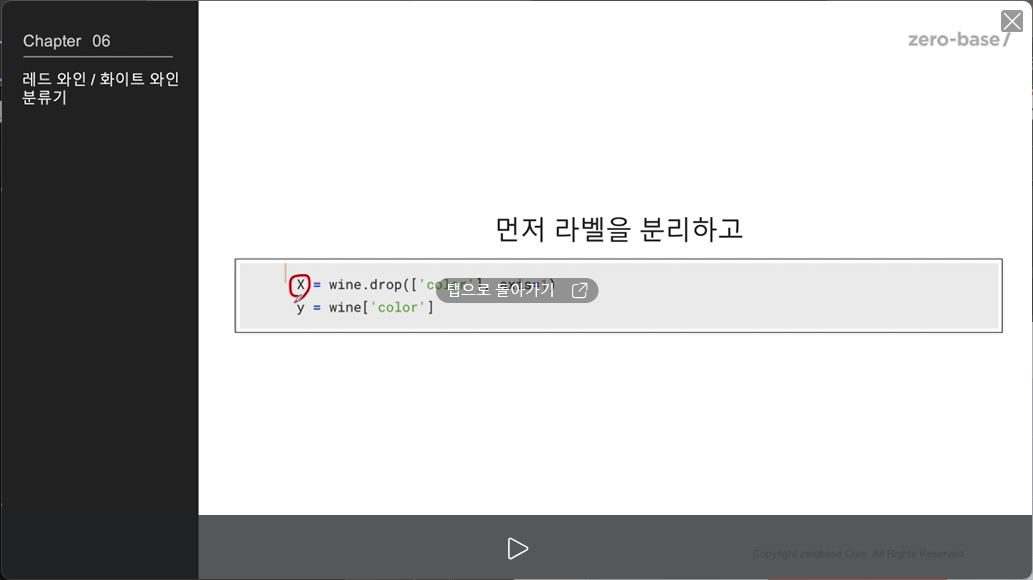

Red wine / white wine classifier

Preparing the labels

x: the features used to predict whether a wine is red or white

Splitting the data (train/test)

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2, random_state=13)

# test_size: 20% of the full dataset is assigned to the test set

np.unique(y_train, return_counts=True)

(array([0, 1]), array([3913, 1284]))

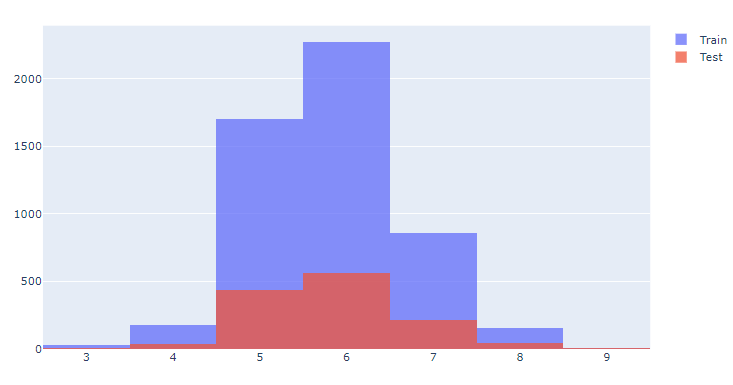

Checking how evenly the train and test sets were split (the histograms compare their quality distributions)

import plotly.graph_objects as go

fig = go.Figure()

fig.add_trace(go.Histogram(x=x_train['quality'], name='Train'))

fig.add_trace(go.Histogram(x=x_test['quality'], name='Test'))

fig.update_layout(barmode='overlay')

fig.update_traces(opacity=0.75)

fig.show()
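As a numeric complement to the overlaid histograms, the class shares in each split can be compared directly. This is a sketch on made-up, imbalanced labels (`x_toy`, `y_toy` and `ratio` are illustrative names, not the wine data):

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(13)

# Hypothetical imbalanced labels: roughly 75% class 0 and 25% class 1.
y_toy = pd.Series((rng.random(1000) < 0.25).astype(int), name='color')
x_toy = pd.DataFrame({'feature': rng.normal(size=1000)})

x_tr, x_te, y_tr, y_te = train_test_split(
    x_toy, y_toy, test_size=0.2, random_state=13)

# Share of each class in train vs. test; similar columns mean a fair split.
ratio = pd.DataFrame({
    'train': y_tr.value_counts(normalize=True),
    'test': y_te.value_counts(normalize=True),
}).sort_index()

print(ratio)
```

If the two columns differ noticeably, passing `stratify=y_toy` to `train_test_split` is one option for forcing matching class shares.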

Training the Decision Tree

from sklearn.tree import DecisionTreeClassifier

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(x_train, y_train)

Training results

y_pred_tr = wine_tree.predict(x_train)

y_pred_test = wine_tree.predict(x_test)

from sklearn.metrics import accuracy_score

print('train : ', accuracy_score(y_train, y_pred_tr))

print('test : ', accuracy_score(y_test, y_pred_test))

train : 0.9553588608812776

test : 0.9569230769230769

데이터 전처리

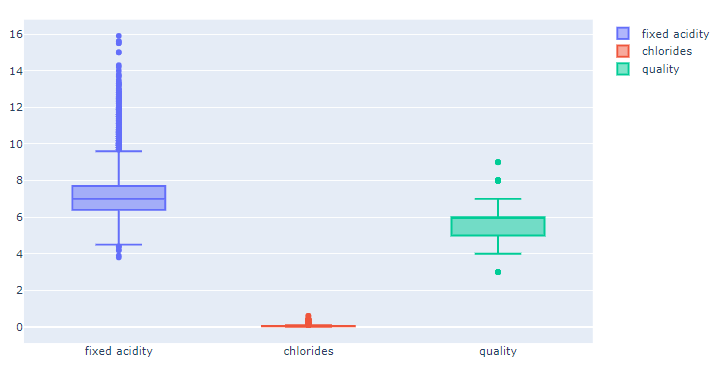

데이터 확인

fig = go.Figure()

fig.add_trace(go.Box(y=x['fixed acidity'], name = 'fixed acidity'))

fig.add_trace(go.Box(y=x['chlorides'], name = 'chlorides'))

fig.add_trace(go.Box(y=x['quality'], name = 'quality'))

fig.show()

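The scales of these features differ widely.

- For a Decision Tree, this kind of preprocessing does not really matter.

- It is mainly useful when optimizing a cost function.

- Whether MinMaxScaler or StandardScaler works better can only be found out by trying both.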

mms = MinMaxScaler()

sss = StandardScaler()

x_mms = mms.fit_transform(x)

x_sss = sss.fit_transform(x)

x_ss_pd = pd.DataFrame(x_sss, columns=x.columns)

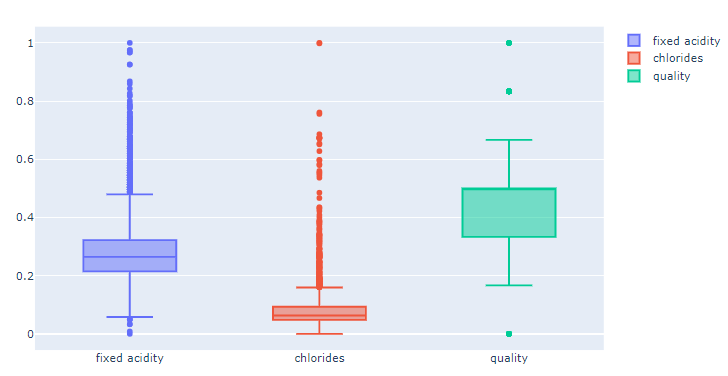

x_mms_pd = pd.DataFrame(x_mms, columns=x.columns)

MinMaxScaler

fig = go.Figure()

fig.add_trace(go.Box(y=x_mms_pd['fixed acidity'], name = 'fixed acidity'))

fig.add_trace(go.Box(y=x_mms_pd['chlorides'], name = 'chlorides'))

fig.add_trace(go.Box(y=x_mms_pd['quality'], name = 'quality'))

fig.show()

The minimum and maximum are mapped to 0 and 1 as expected.

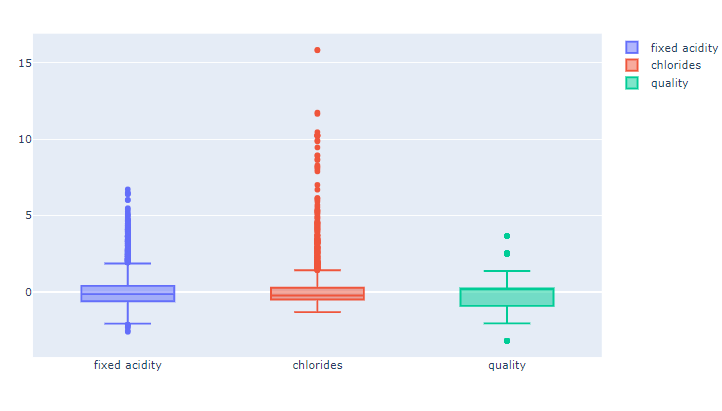

StandardScaler

fig = go.Figure()

fig.add_trace(go.Box(y=x_ss_pd['fixed acidity'], name = 'fixed acidity'))

fig.add_trace(go.Box(y=x_ss_pd['chlorides'], name = 'chlorides'))

fig.add_trace(go.Box(y=x_ss_pd['quality'], name = 'quality'))

fig.show()

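The mean is centered at 0 as expected.

But is the point to compare these three features against each other? If so, MinMaxScaler looks like the better choice.

Retraining with MinMaxScaler applied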

x_train, x_test, y_train, y_test = train_test_split(x_mms_pd,y, test_size = 0.2, random_state = 13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(x_train, y_train)

y_pred_tr = wine_tree.predict(x_train)

y_pred_test = wine_tree.predict(x_test)

print('MinMaxScaler train : ', accuracy_score(y_train, y_pred_tr))

print('MinMaxScaler test : ', accuracy_score(y_test, y_pred_test))

MinMaxScaler train : 0.9553588608812776

MinMaxScaler test : 0.9569230769230769

Retraining with StandardScaler applied

x_train, x_test, y_train, y_test = train_test_split(x_ss_pd,y, test_size = 0.2, random_state = 13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(x_train, y_train)

y_pred_tr = wine_tree.predict(x_train)

y_pred_test = wine_tree.predict(x_test)

print('StandardScaler train : ', accuracy_score(y_train, y_pred_tr))

print('StandardScaler test : ', accuracy_score(y_test, y_pred_test))

StandardScaler train : 0.9553588608812776

StandardScaler test : 0.9569230769230769

train : 0.9553588608812776

test : 0.9569230769230769

MinMaxScaler train : 0.9553588608812776

MinMaxScaler test : 0.9569230769230769

StandardScaler train : 0.9553588608812776

StandardScaler test : 0.9569230769230769

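Huh, there is no difference at all. That fits the earlier note that scaling does not really matter for a Decision Tree: it splits on thresholds, and rescaling a feature does not change the order of its values.

Conclusion: you only know by trying.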
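The identical scores can be reproduced on synthetic data. The sketch below trains the same depth-2 tree on raw and standardized features and compares the predictions. The data comes from `make_classification`, not the wine set, and unlike the notes the scaler is fitted on the training split only; both choices are assumptions for illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the wine features.
X_syn, y_syn = make_classification(n_samples=500, n_features=6, random_state=13)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_syn, y_syn, test_size=0.2, random_state=13)

scaler = StandardScaler().fit(X_tr)

# Same hyperparameters, one tree on raw data and one on scaled data.
tree_raw = DecisionTreeClassifier(max_depth=2, random_state=13).fit(X_tr, y_tr)
tree_scaled = DecisionTreeClassifier(max_depth=2, random_state=13).fit(
    scaler.transform(X_tr), y_tr)

pred_raw = tree_raw.predict(X_te)
pred_scaled = tree_scaled.predict(scaler.transform(X_te))

same = bool(np.array_equal(pred_raw, pred_scaled))
print('identical predictions:', same)
```

Models that optimize a cost function over the raw feature values (for example, gradient-based or distance-based ones) would not generally show this invariance.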

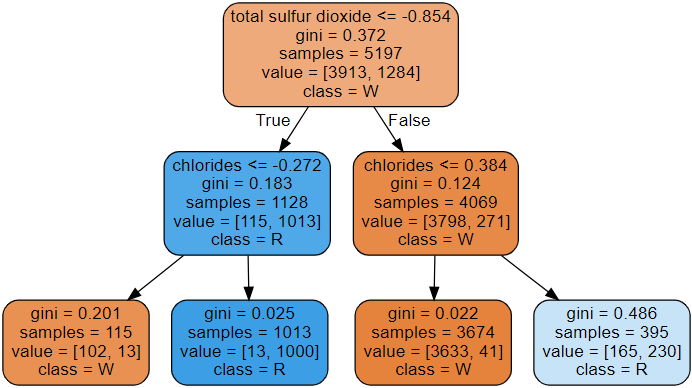

How does the Decision Tree tell red wine from white wine?

from graphviz import Source

from sklearn.tree import export_graphviz

Source(export_graphviz(wine_tree, feature_names=x_train.columns,

class_names=['W','R'],

rounded=True, filled=True))

- total sulfur dioxide seems to play the key role, since it is the feature used for the first split.

Other features that drive the split

dict(zip(x_train.columns, wine_tree.feature_importances_))

{'fixed acidity': 0.0,

'volatile acidity': 0.0,

'citric acid': 0.0,

'residual sugar': 0.0,

'chlorides': 0.24230360549660776,

'free sulfur dioxide': 0.0,

'total sulfur dioxide': 0.7576963945033922,

'density': 0.0,

'pH': 0.0,

'sulphates': 0.0,

'alcohol': 0.0,

'quality': 0.0}

chlorides is the only other feature with nonzero importance.

However, these values change if max_depth is changed.
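To illustrate how the importances shift with depth, here is a sketch on synthetic data (`make_classification`, not the wine set; the depths 2 and 5 are arbitrary choices). A depth-2 tree has at most three split nodes, so at most three features can have nonzero importance.

```python
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in data with six features.
X_syn, y_syn = make_classification(
    n_samples=500, n_features=6, n_informative=4, random_state=13)

importances = {}
for depth in (2, 5):
    tree = DecisionTreeClassifier(max_depth=depth, random_state=13)
    tree.fit(X_syn, y_syn)
    # Importances are normalized so they sum to 1 for each fitted tree.
    importances[depth] = tree.feature_importances_
    print(depth, importances[depth].round(3))
```

A deeper tree has more split nodes, so importance can spread over more features than the three-feature limit of a depth-2 tree.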

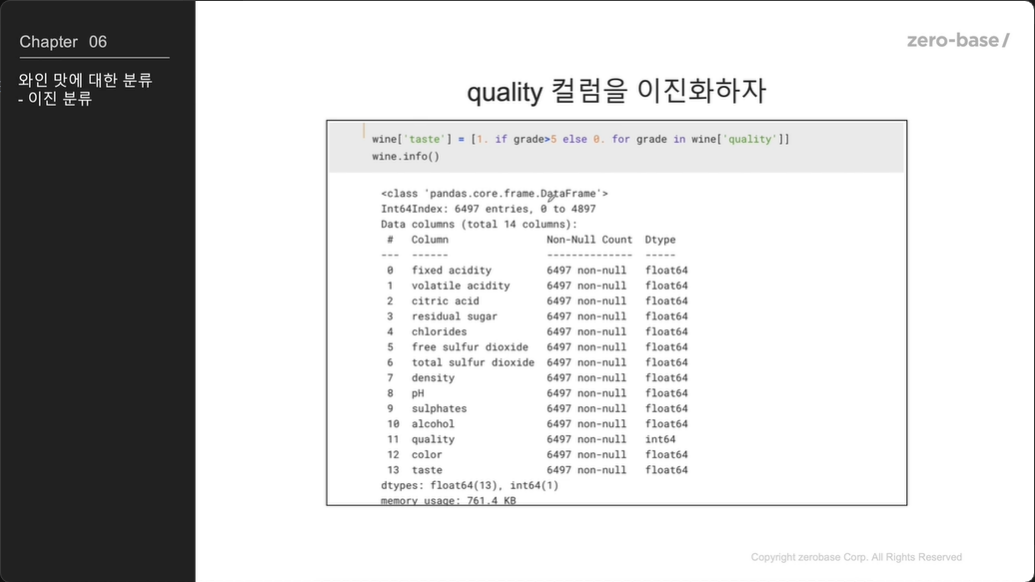

Binary classification of taste

x = wine.drop(['taste'], axis=1)

y = wine['taste']

x_train, x_test, y_train, y_test = train_test_split(x,y, test_size = 0.2, random_state = 13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(x_train, y_train)

But wait, are we classifying white vs. red by taste..?

y_pred_tr = wine_tree.predict(x_train)

y_pred_test = wine_tree.predict(x_test)

print('taste train : ', accuracy_score(y_train, y_pred_tr))

print('taste test : ', accuracy_score(y_test, y_pred_test))

taste train : 1.0

taste test : 1.0

Huh?

A perfect score is always a reason to be suspicious.

Ask the tree how it made its decision.

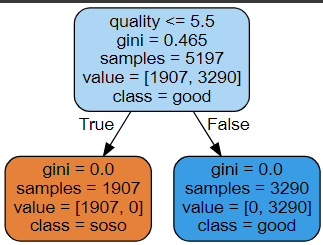

Source(export_graphviz(wine_tree, feature_names=x_train.columns,

class_names=['soso','good'],

rounded=True, filled=True))

The taste column was created from the quality column, so..

wine['taste'] = [1. if grade>5 else 0. for grade in wine['quality']]

Like this!

So the quality column should have been dropped.
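The leak is easy to reproduce on made-up data. In this sketch (`toy`, `holdout_accuracy` and the random values are hypothetical), `taste` is derived from `quality` exactly as above, and `alcohol` is pure noise, so any accuracy above chance can only come from the leaked column.

```python
import numpy as np
import pandas as pd
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

rng = np.random.default_rng(13)

# Made-up data: alcohol is random noise, quality is an integer from 3 to 9.
toy = pd.DataFrame({
    'alcohol': rng.normal(10.5, 1.2, size=400),
    'quality': rng.integers(3, 10, size=400),
})
toy['taste'] = [1. if grade > 5 else 0. for grade in toy['quality']]

def holdout_accuracy(features):
    """Train a depth-2 tree and return its accuracy on a 20% hold-out."""
    x_tr, x_te, y_tr, y_te = train_test_split(
        features, toy['taste'], test_size=0.2, random_state=13)
    tree = DecisionTreeClassifier(max_depth=2, random_state=13).fit(x_tr, y_tr)
    return accuracy_score(y_te, tree.predict(x_te))

acc_leaky = holdout_accuracy(toy.drop(['taste'], axis=1))
acc_clean = holdout_accuracy(toy.drop(['taste', 'quality'], axis=1))

print('with quality   :', acc_leaky)
print('without quality:', acc_clean)
```

With `quality` present, a single split at 5.5 separates the classes perfectly, which is the same pattern as the 1.0 scores above.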

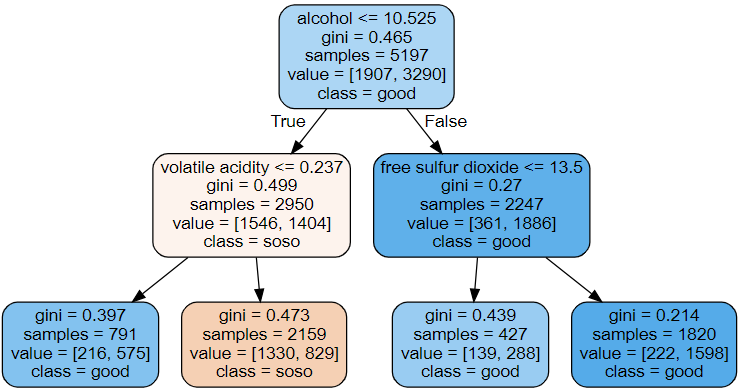

Checking accuracy after dropping the quality column

x = wine.drop(['taste','quality'], axis=1)

y = wine['taste']

x_train, x_test, y_train, y_test = train_test_split(x,y, test_size = 0.2, random_state = 13)

wine_tree = DecisionTreeClassifier(max_depth=2, random_state=13)

wine_tree.fit(x_train, y_train)

y_pred_tr = wine_tree.predict(x_train)

y_pred_test = wine_tree.predict(x_test)

print('re_taste train : ', accuracy_score(y_train, y_pred_tr))

print('re_taste test : ', accuracy_score(y_test, y_pred_test))

re_taste train : 0.7294593034442948

re_taste test : 0.7161538461538461

Check again what the tree split on

Source(export_graphviz(wine_tree, feature_names=x_train.columns,

class_names=['soso','good'],

rounded=True, filled=True))

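The first split is on alcohol, so alcohol is the key feature.

Hmm, but does alcohol really determine taste?

By how high or low the alcohol content is..?

Fascinating.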