Problem until now…

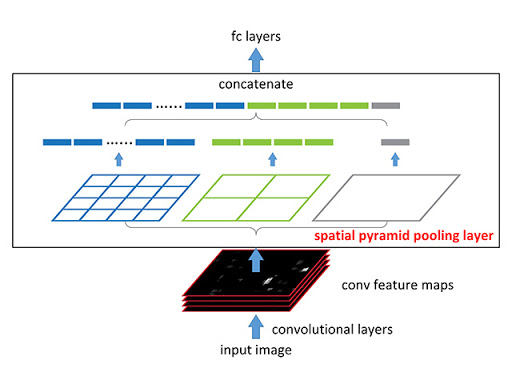

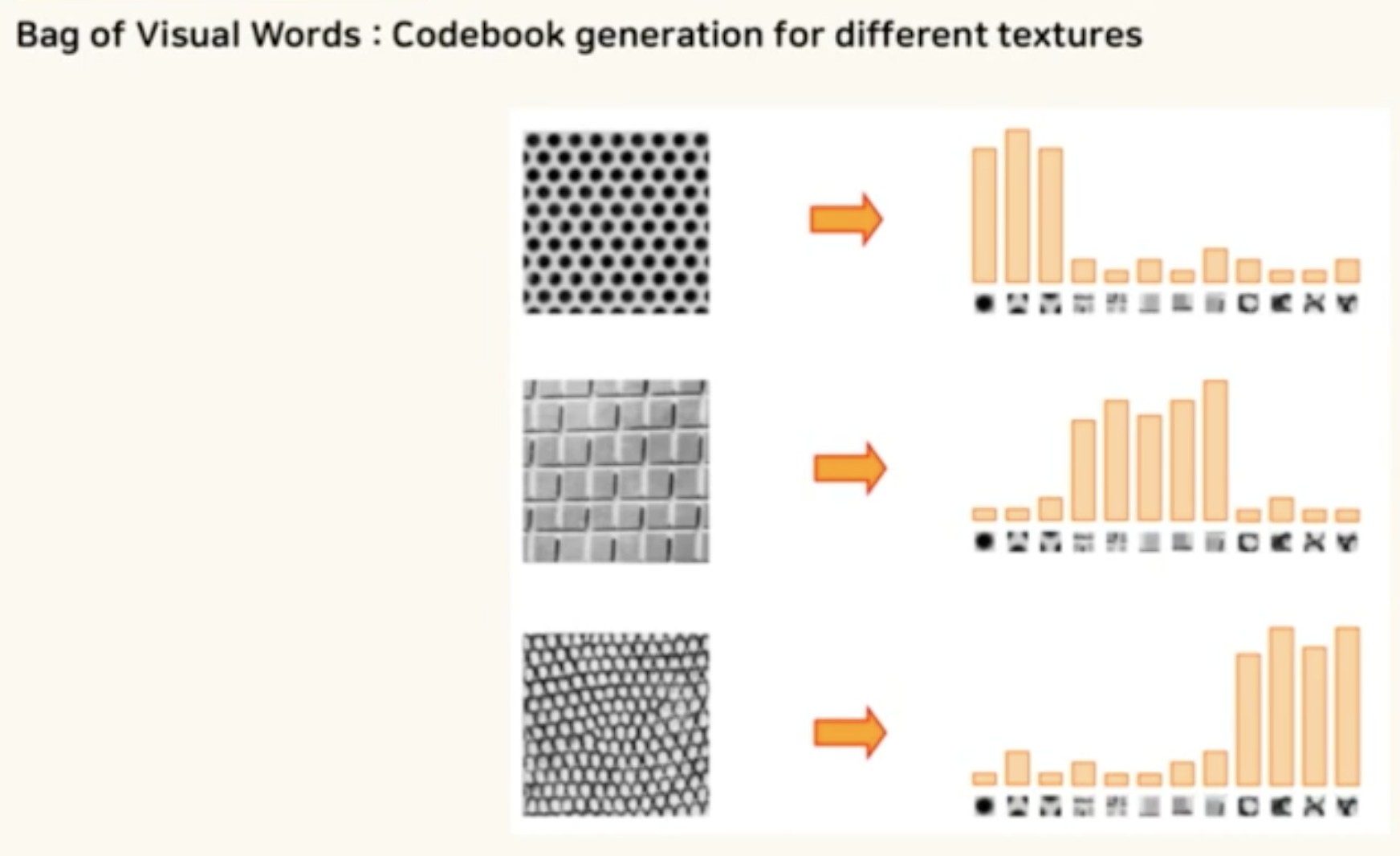

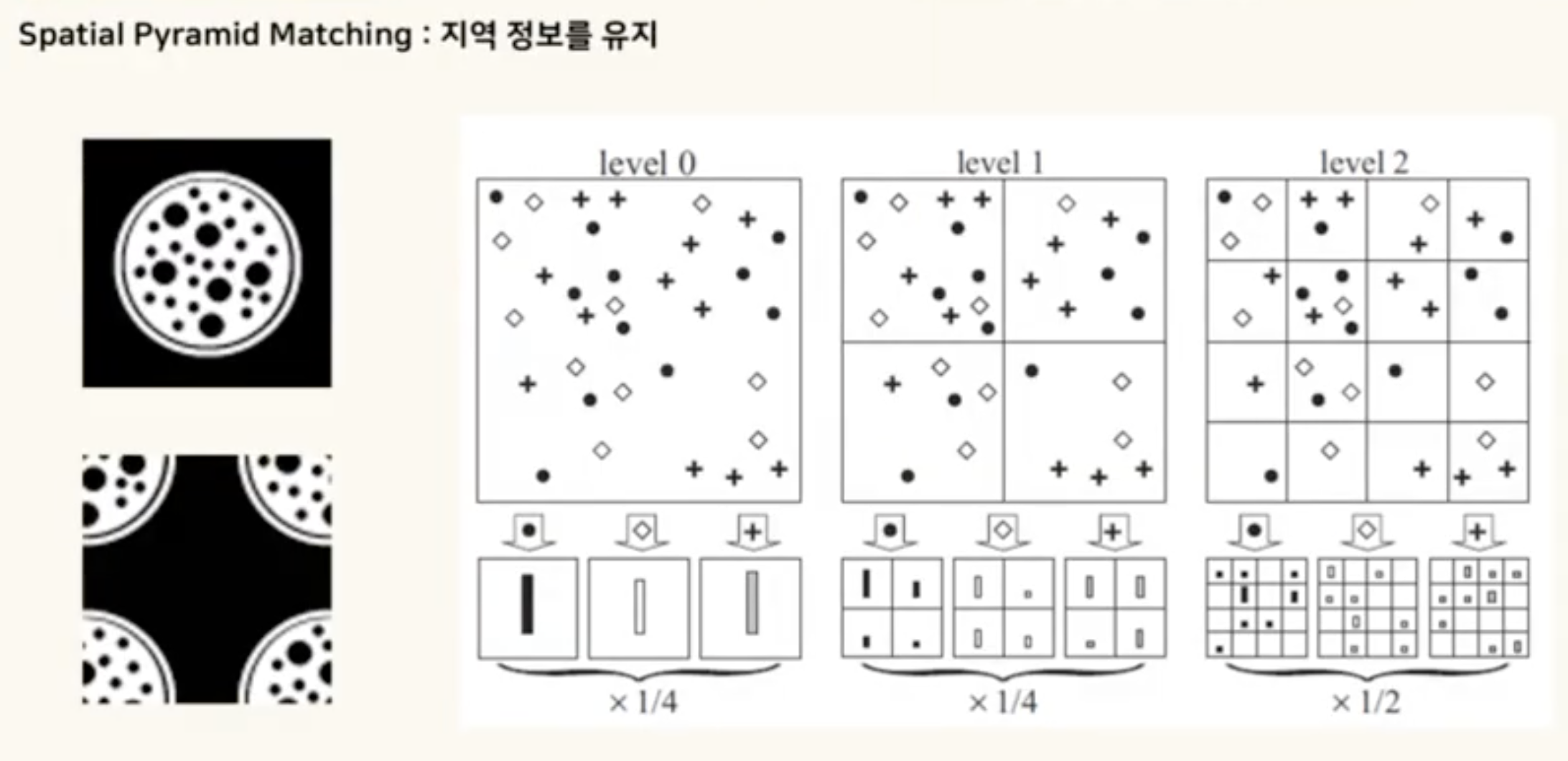

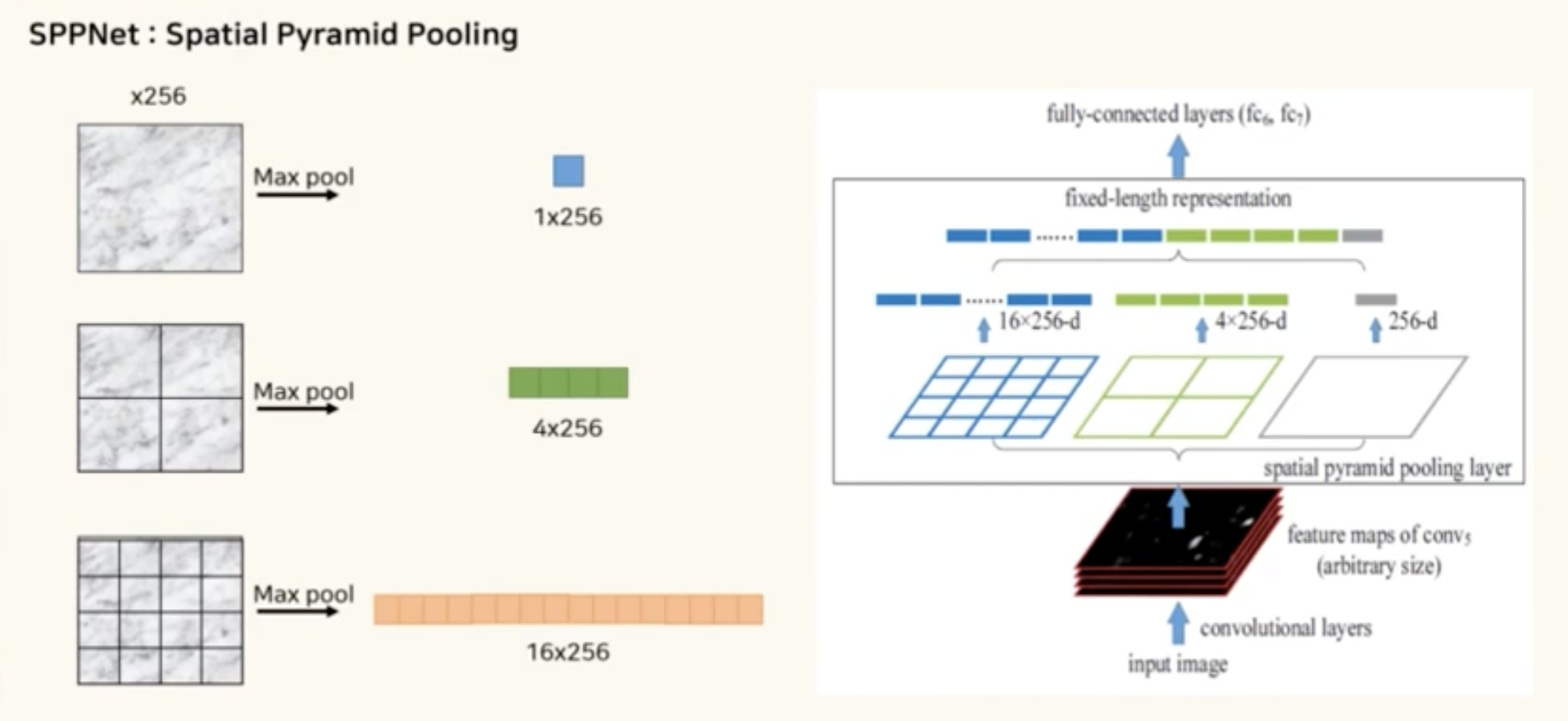

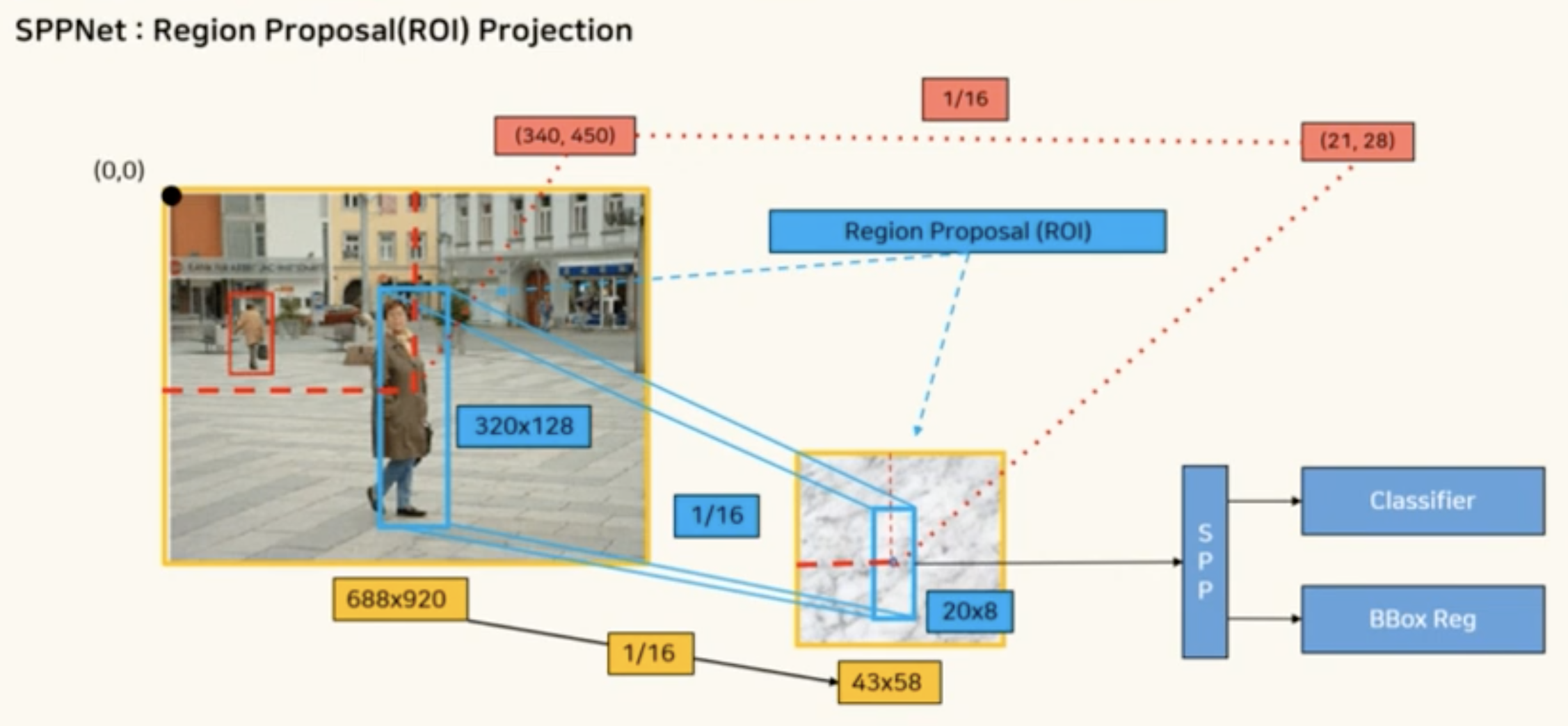

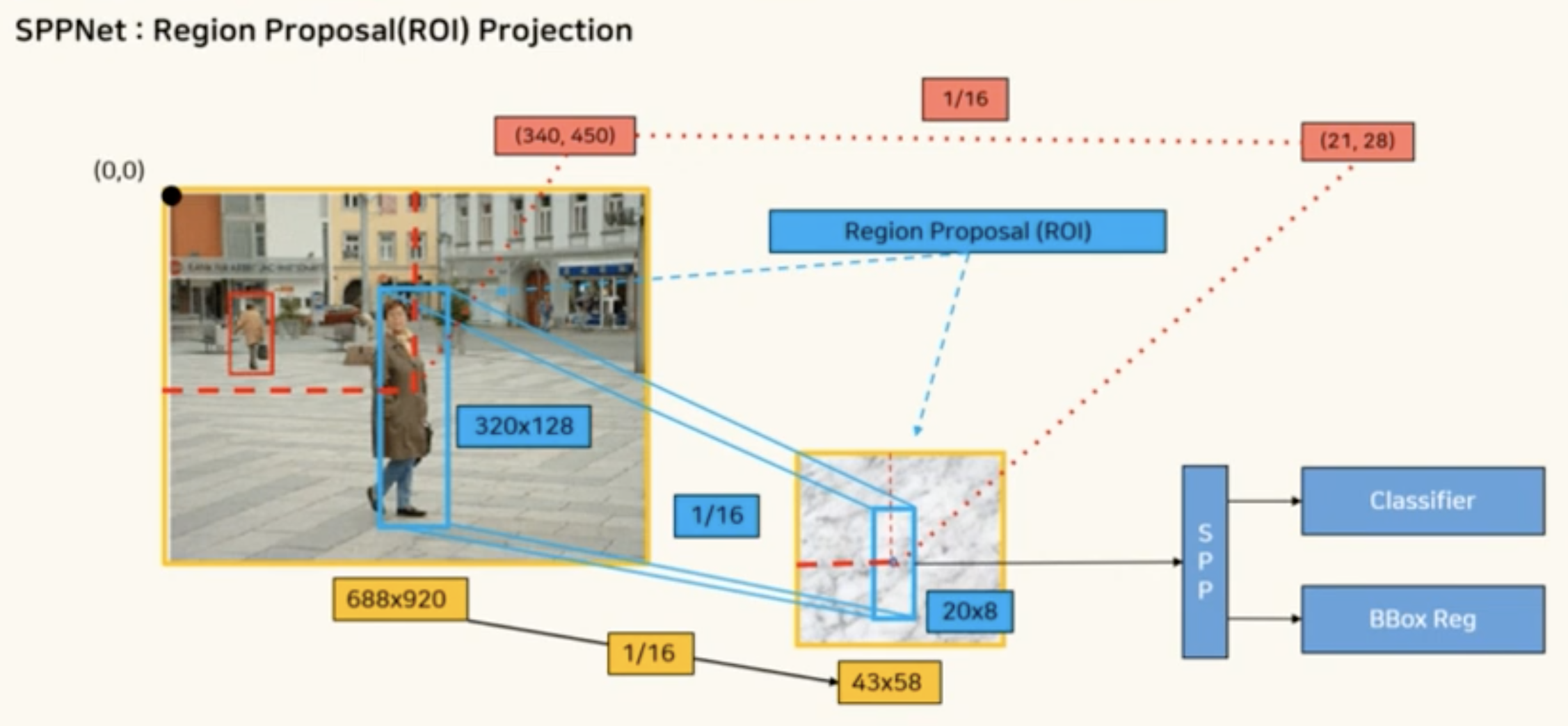

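- Earlier CNN architectures require a fixed-size input image, because the convolutional features have to be fed into a Fully Connected Layer. Cropping to that size can cut off part of the image, and warping distorts it.

- To feed the Fully Connected Layer without any cropping or distortion, Spatial Pyramid Pooling is used to produce a vector of the same length regardless of the input size.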
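
The fixed-length property can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the code from these notes: it assumes PyTorch and swaps in `F.adaptive_max_pool2d` in place of hand-computed pooling windows, and the name `spp_vector` and the tensor sizes are made up for the example.

```python
import torch
import torch.nn.functional as F


def spp_vector(feature_map, levels=(4, 2, 1)):
    """Concatenate max-pooled n x n grids into one vector per sample.

    Hypothetical helper: adaptive pooling picks the window sizes itself.
    """
    batch = feature_map.size(0)
    pooled = [
        F.adaptive_max_pool2d(feature_map, output_size=n).reshape(batch, -1)
        for n in levels
    ]
    return torch.cat(pooled, dim=1)


# Two feature maps with different spatial sizes but the same channel count.
small = torch.randn(1, 8, 13, 10)
large = torch.randn(1, 8, 40, 57)
print(spp_vector(small).shape, spp_vector(large).shape)
```

Both calls give a tensor of shape [1, 168], that is 8 channels × (16 + 4 + 1) bins, so the same Fully Connected Layer can follow either input.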

Main Concept to solve the problem

Keywords and Definitions

    import math

    import torch
    import torch.nn as nn


    def spatial_pyramid_pool(previous_conv, num_sample, previous_conv_size, out_pool_size):
        '''
        Inputs are
        * previous_conv: output tensor of the previous convolution layer, shape [N, C, H, W]
        * num_sample: an int, the number of images in the batch
        * previous_conv_size: an int list [height, width], the feature map size of the previous convolution layer
        * out_pool_size: an int list, the number of bins per side at each pyramid level, e.g. [4, 2, 1]

        returns: a tensor of shape [num_sample x n], the concatenation of the multi-level pooling results
        '''
        for i in range(len(out_pool_size)):
            # Window (= stride) that splits each axis into out_pool_size[i] bins.
            h_wid = int(math.ceil(previous_conv_size[0] / out_pool_size[i]))
            w_wid = int(math.ceil(previous_conv_size[1] / out_pool_size[i]))
            # Padding must be an int, so use floor division rather than "/".
            h_pad = (h_wid * out_pool_size[i] - previous_conv_size[0] + 1) // 2
            w_pad = (w_wid * out_pool_size[i] - previous_conv_size[1] + 1) // 2
            maxpool = nn.MaxPool2d((h_wid, w_wid), stride=(h_wid, w_wid), padding=(h_pad, w_pad))
            x = maxpool(previous_conv)
            # Flatten each level and append it to the running feature vector.
            if i == 0:
                spp = x.view(num_sample, -1)
            else:
                spp = torch.cat((spp, x.view(num_sample, -1)), 1)
        return spp

- With region proposals, the proportions of the original image are simply carried through to the Pooling Layer as well.
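
The pooling windows are derived from the feature map's own height and width, which is how a non-square input keeps its proportions while still yielding n × n bins. The sketch below reproduces only that arithmetic in plain Python, with padding taken as an integer (floor division). The helper names `spp_window` and `pooled_length` and the 13 × 10 feature map are assumptions made for illustration.

```python
import math


def spp_window(size, bins):
    """Window length and one-sided padding for one axis (ceil-based formula)."""
    win = math.ceil(size / bins)
    pad = (win * bins - size + 1) // 2
    return win, pad


def pooled_length(size, win, pad):
    """Output length of a pool with kernel = stride = win, floor rounding."""
    return (size + 2 * pad - win) // win + 1


# A 13 x 10 feature map (taller than wide) and pyramid levels 4, 2, 1.
for bins in (4, 2, 1):
    h_win, h_pad = spp_window(13, bins)
    w_win, w_pad = spp_window(10, bins)
    out = (pooled_length(13, h_win, h_pad), pooled_length(10, w_win, w_pad))
    print(bins, (h_win, w_win), (h_pad, w_pad), out)
```

Each level ends up with `bins` outputs along both axes even though the windows are rectangular. The formula is not safe for every size: a length of 9 with 4 bins gives a window of 3 and a padding of 2, and PyTorch's `MaxPool2d` rejects padding larger than half the kernel size, so adaptive pooling may be the more robust choice for arbitrary inputs.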

SPPNet Structure Code

    import torch
    import torch.nn as nn
    import torch.nn.functional as F

    # Assumes the pooling function above is saved as spp_layer.py.
    from spp_layer import spatial_pyramid_pool


    class SPP_NET(nn.Module):
        '''
        A CNN model which adds an SPP layer so that it accepts input tensors of varying size
        '''
        def __init__(self, opt, input_nc, ndf=64, gpu_ids=[]):
            super(SPP_NET, self).__init__()
            self.gpu_ids = gpu_ids
            self.output_num = [4, 2, 1]  # bins per side at each pyramid level

            self.conv1 = nn.Conv2d(input_nc, ndf, 4, 2, 1, bias=False)
            # forward() uses self.LReLU1, which was never defined; default slope is an assumption.
            self.LReLU1 = nn.LeakyReLU()
            self.conv2 = nn.Conv2d(ndf, ndf * 2, 4, 1, 1, bias=False)
            self.BN1 = nn.BatchNorm2d(ndf * 2)
            self.conv3 = nn.Conv2d(ndf * 2, ndf * 4, 4, 1, 1, bias=False)
            self.BN2 = nn.BatchNorm2d(ndf * 4)
            self.conv4 = nn.Conv2d(ndf * 4, ndf * 8, 4, 1, 1, bias=False)
            # BN3 and conv5 are defined but not used in forward().
            self.BN3 = nn.BatchNorm2d(ndf * 8)
            self.conv5 = nn.Conv2d(ndf * 8, 64, 4, 1, 0, bias=False)
            # 10752 = (ndf * 8) channels * (16 + 4 + 1) bins, with ndf = 64.
            self.fc1 = nn.Linear(10752, 4096)
            self.fc2 = nn.Linear(4096, 1000)

        def forward(self, x):
            x = self.conv1(x)
            x = self.LReLU1(x)
            x = self.conv2(x)
            x = F.leaky_relu(self.BN1(x))
            x = self.conv3(x)
            x = F.leaky_relu(self.BN2(x))
            x = self.conv4(x)
            # Use the actual batch size instead of a hard-coded 1.
            spp = spatial_pyramid_pool(x, x.size(0), [int(x.size(2)), int(x.size(3))], self.output_num)
            fc1 = self.fc1(spp)
            fc2 = self.fc2(fc1)
            output = torch.sigmoid(fc2)
            return output
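
The input size of `fc1` follows from the pyramid levels and the number of channels entering the SPP layer. The check below works through that arithmetic, assuming the default `ndf=64` and that pooling is applied to the output of `conv4`, as in `forward`. The name `spp_output_length` is introduced here for illustration only.

```python
def spp_output_length(channels, levels):
    """Length of the SPP vector: level n contributes n * n bins per channel."""
    return channels * sum(n * n for n in levels)


ndf = 64
conv4_channels = ndf * 8  # 512 channels leave conv4
# 512 * (16 + 4 + 1) = 10752, the in_features of fc1
print(spp_output_length(conv4_channels, [4, 2, 1]))
```

Changing `ndf` or the pyramid levels changes this length, so `fc1` would need to be resized to match.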

References