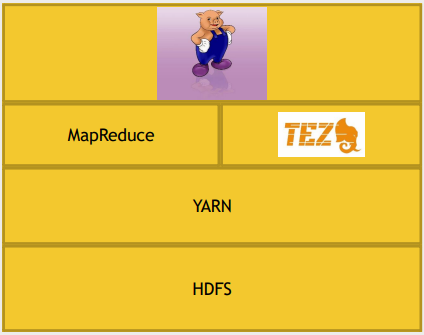

Pig 개요

What is Pig

- MapReduce 위에서 동작하며 mapper와 reducer를 작성하지 않고 Pig Latin이라고 불리는 scripting language를 사용해서 더 쉽게, 빠르게 개발할 수 있음

- Pig Latin은 SQL과 유사함, 그러나 Pig Latin은 procedural sort이며 SQL스러운 문법을 사용하기 위해 서로 다른 relation들에 대한 set up을 해야함(간단한 format의 script)

- Pig를 사용하는 것보다 MapReduce를 사용하는 것이 성능상으로 좋다고 생각할 수도 있는데, Pig는 TEZ위에서도 동작한다. 그래서 성능을 걱정할 필요는 없다. 오히려 더 좋다.

- TEZ에 대해 나중에 설명하겠지만 TEZ는 job에 대한 상호의존성을 파악한 후 최적화된 작업 경로를 dag형태로 제공함. 그래서 linear manner로 작업을 수행하는 MapReduce에 비해 굉장히(10배?)빠름

- UDF(User Define Function)을 이용해서 확장성있게 사용할 수 있음

- mapper와 reuducer가 정말 필요하다면 Pig와 혼합하여 사용가능

- 작은 작업에 대해선 Hadoop cluster 없이 혼자 작동할 수도 있음

Pig 활용법

Grunt: sort of a command line interpreter- Pig scripts one line at a time and execute them one line at a time

- if you have a small data set that you just want to experiment with quickly

Script: you can save a Pig script to a file and run that from the command line- also means you can run it from a Chron tab or whatever you want on a periodic basis and production

Ambari/Hue- there's an Ambari view for Pig that lets you very nicely write and run and save and load Pig scripts right from the web browser which is kind of a neat thing

실습

LOAD

MapReudce의 Mapping 단계와 유사

ratings = LOAD '/user/maria_dev/ml-100k/u.data' AS

(userID:int, movieID:int, rating:int, ratingTime:int);

DUMP ratings;

# 결과 sample: (660,229,2,891406212)

# {relation_name} = LOAD '{HDFS_path}' AS {schema};{relation_name} : 관계의 이름

LOAD : 디스크에서 데이터를 불러올때 쓰는 함수

{HDFS_path} : HDFS에서 읽어올 함수의 경로

{schema} : 데이터를 어떤 형태로 가져올지

- u.data는 tab으로 구분되어 있는데 pig는 tab이 구분자인 것을 자동으로 인식해 데이터를 나눔

metadata = LOAD '/user/maria_dev/ml-100k/u.item' USING

PigStorage('|')AS (movieID:int, movieTitle:chararray,

releaseDate:chararray, videoRelease:chararray,

imdbLink:chararray);

DUMP metadata;

# 결과 sample: (1,Toy Story (1995),01-Jan-1995,,http://us.imdb.com/M/title-exact?Toy%20Story%20(1995))

nameLookup = FOREACH metadata GENERATE movieID, movieTitle,

ToUnixTime(ToDate(releaseDate, 'dd-MMM-yyyy')) AS releaseTime;

DUMP nameLookup;

# 결과 sample: (1,Toy Story (1995),788918400)USING PigStorage('delimeter') : 구분자를 지정해줄 수 있음

DUMP : 결과를 보여줌. 보통 디버깅할때 씀

- u.item은

|으로 구분되어 있어 pig에게 알려줘야함 - FOREACH ~ GENERATE ~ 를 통해 원하는 형태로 Transform된 nameLookup 생성

GROUP BY

MapReduce의 Shuffle and sort와 유사한 단계

ratingsByMovie = GROUP ratings BY movieID;

DUMP ratingsByMovie;

# 결과 sample: (1,{(807,1,4,892528231),(554,1,3,876231938), … })

DESCRIBE ratingsByMovie;

# 결과: ratingsByMovie: {group: int,ratings: {(userID: int,movieID: int,rating: int,ratingTime: int)}}GROUP {relation} BY {attirbute};

- SQL의 group by 와 유사

- 결과가 (group,{group에 해당하는 모든 튜플})로 나옴

DESCRIBE: relation의 구조 및 type을 보여줌

Aggregate

MapReduce의 Reducing과 유사

avgRatings = FOREACH ratingsByMovie GENERATE group AS movieID, AVG(ratings.rating) AS avgRating;

DUMP avgRatings;

# 결과 sample: (1,3.8783185840707963) FILTER BY

fiveStarMovies = FILTER avgRatings BY avgRating > 4.0;FILTER : filtering things out into a relation based on some boolean expression

JOIN BY

fiveStarsWithData = JOIN fiveStarMovies BY movieID, nameLookup BY movieID;

DESCRIBE fiveStarsWithData;

# 결과: fiveStarsWithData: {fiveStarMovies::movieID: int,fiveStarMovies::avgRating: double,

nameLookup::movieID: int,nameLookup::movieTitle: chararray,nameLookup::releaseTime: long} JOIN ~ BY ~ (join type), ~ BY ~

- default로 inner join

(join type)으로 LEFT OUTER, RIGHT, FULL OUTER - attribute::relation 으로 attribute 이름이 바뀜

ORDER BY

oldestFiveStarMovies = ORDER fiveStarsWithData BY

nameLookup::releaseTime;

DUMP oldestFiveStarMovies;

# 결과 sample: (493,4.15,493,Thin Man, The (1934),-1136073600)Pig Latin 문법

STORE- disk에 realation을 write하는 기능

- 어떤 형식으로 저장할지 옵션이 많음

DISTINCT- gives you back the unique values within a relation for each generate

FOREACH/GENERATE- a way of creating a new relation from an existing one going through a one line at a time and transforming it in some way

MAPREDUCE- we have mapreduce which was actually lets you call explicit mappers and reducers on a relation.

- you can actually blend Pig and mapreduce together

STREAM- stream the results of Pig out to a process and just use standard in and standard out just like a mapreduce streaming

- also used for extensibility

SAMPLE- can be used to create a random sample from your relation.

COGROUP- just a variation of join

- creates a separate tuple for each key and it creates this nested structure.

CROSS: Cartesian productCUBE- Cube is even bigger than CROSS

- take more than two columns and do all the combinations

RANK: assigns a rank number to each row.LIMIT: if you don't want to dump the entire thing out you can create a new relation using limitUNION: squishes two relations togetherSPLIT: splits it up into more than one relation.

Diagnostics

EXPLAIN: give you a little insight into how Pig intends to actually execute a given queryILLUSTRATE: takes a sample from each relation and shows you exactly what it's doing with each piece of data

UDF

- this involves writing Java code and making jar files

REGISTER: import a jar file that contains user defined functionsDEFINE: assigns names to those functions so you can actually use them within your Pig scriptsIMPORT: macros for Pig file so you can actually have reusable bits of Pig code that you save office macros and you can import those macros into other Pig scripts so it makes a little bit easier

Aggregate function

- AVG

- CONCAT

- COUNT

- MIN / MAX

- SIZE

- SUM

Storage classes

- this assumes that you have some sort of delimiter on each row of your input or output data

TextLoader- even more basic so that just loads up one line of input data or output data per row.

- Kind of like what you do in mapreduce when you're doing your first mapper right.

JsonLoader: JSONAvroStorage- more specifically a serialization and deserialization format that's very popular with the Hadoop

- it's amenable to having a schema and also to being split able across your Hadoop cluster

ParquetLoader: column oriented data format.OrcStorage: compressed formatHBaseStorage: HBase