Ensembling

모델의 정확도와 성능을 높이는데 좋은 방식으로 다양한 모델을 결합하여 하나의 강력한 모델을 만드는 방식입니다.

Voting Classifier

단순하고 다양한 머신러닝 모델의 prediction를 합치는 방식입니다.(가장 단순함)

이를 통해서, 평균적인 예측값 결과를 할 수 있습니다.

from sklearn.ensemble import VotingClassifier

ensemble_lin_rbf = VotingClassifier(estimators=[('KNN',KNeighborsClassifier(n_neighbors=10)),

('RBF',svm.SVC(probability=True,kernel='rbf',C=0.5,gamma=0.1)),

('RFor',RandomForestClassifier(n_estimators=500,random_state=0)),

('LR',LogisticRegression(C=0.05)),

('DT',DecisionTreeClassifier(random_state=0)),

('NB',GaussianNB()),

('svm',svm.SVC(kernel='linear',probability=True))

],

voting='soft').fit(train_X,train_Y)

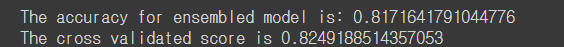

print('The accuracy for ensembled model is:',ensemble_lin_rbf.score(test_X,test_Y))

cross = cross_val_score(ensemble_lin_rbf,X,Y, cv = 10, scoring = "accuracy")

print('The cross validated score is', cross.mean())

Baaging

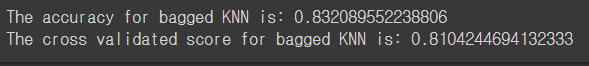

#Bagged KNN

from sklearn.ensemble import BaggingClassifier

model=BaggingClassifier(base_estimator=KNeighborsClassifier(n_neighbors=3),random_state=0,n_estimators=700)

model.fit(train_X,train_Y)

prediction=model.predict(test_X)

print('The accuracy for bagged KNN is:', metrics.accuracy_score(prediction,test_Y))

result=cross_val_score(model,X,Y,cv=10,scoring='accuracy')

print('The cross validated score for bagged KNN is:',result.mean())

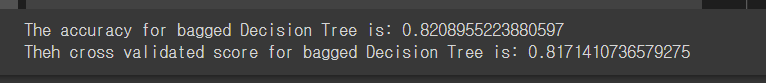

#Bagged DecisionTree

model=BaggingClassifier(base_estimator=DecisionTreeClassifier(),random_state=0,n_estimators=100)

model.fit(train_X,train_Y)

prediction=model.predict(test_X)

print('The accuracy for bagged Decision Tree is:',metrics.accuracy_score(prediction,test_Y))

result=cross_val_score(model,X,Y,cv=10,scoring='accuracy')

print('Theh cross validated score for bagged Decision Tree is:', result.mean())

Boosting

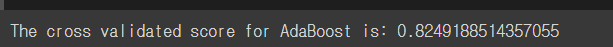

#AdaBoost(Adaptive Boosting)

from sklearn.ensemble import AdaBoostClassifier

ada=AdaBoostClassifier(n_estimators=200,random_state=0,learning_rate=0.1)

result=cross_val_score(ada,X,Y,cv=10,scoring='accuracy')

print('The cross validated score for AdaBoost is:', result.mean())

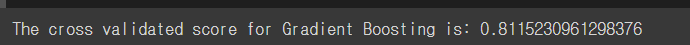

#Stochastic Gradient Boosting

from sklearn.ensemble import GradientBoostingClassifier

grad=GradientBoostingClassifier(n_estimators=500,random_state=0,learning_rate=0.1)

result=cross_val_score(grad,X,Y,cv=10,scoring='accuracy')

print('The cross validated score for Gradient Boosting is:', result.mean())

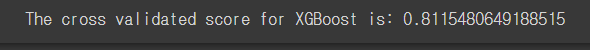

#XGBoost

import xgboost as xg

xgboost=xg.XGBClassifier(n_estimators=900,learning_rate=0.1)

result=cross_val_score(xgboost,X,Y,cv=10,scoring='accuracy')

print('The cross validated score for XGBoost is:', result.mean())

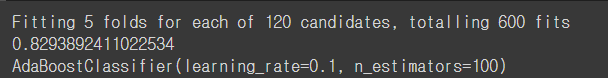

#Hyper-Parameter Tuning for AdaBoost

n_estimators = list(range(100,1100,100))

learn_rate=[0.05,0.1,0.2,0.3,0.25,0.4,0.5,0.6,0.7,0.8,0.9,1]

hyper={'n_estimators': n_estimators, 'learning_rate': learn_rate}

gd=GridSearchCV(estimator=AdaBoostClassifier(),param_grid=hyper,verbose=True)

gd.fit(X,Y)

print(gd.best_score_)

print(gd.best_estimator_)

Confusion Matrix for the Best Model

ada=AdaBoostClassifier(n_estimators=200,random_state=0,learning_rate=0.05)

result=cross_val_predict(ada,X,Y,cv=10)

sns.heatmap(confusion_matrix(Y,result),cmap='winter',annot=True,fmt='2.0f')

plt.show()

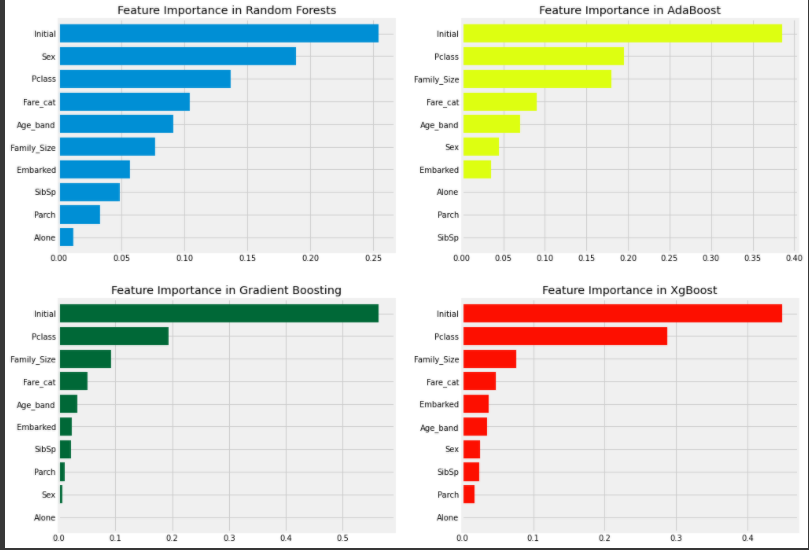

Feature Importance

f,ax=plt.subplots(2,2,figsize=(15,12))

model=RandomForestClassifier(n_estimators=500,random_state=0)

model.fit(X,Y)

pd.Series(model.feature_importances_,X.columns).sort_values(ascending=True).plot.barh(width=0.8,ax=ax[0,0])

ax[0,0].set_title('Feature Importance in Random Forests')

model=AdaBoostClassifier(n_estimators=200,learning_rate=0.05,random_state=0)

model.fit(X,Y)

pd.Series(model.feature_importances_,X.columns).sort_values(ascending=True).plot.barh(width=0.8,ax=ax[0,1],color='#ddff11')

ax[0,1].set_title('Feature Importance in AdaBoost')

model=GradientBoostingClassifier(n_estimators=500,learning_rate=0.1,random_state=0)

model.fit(X,Y)

pd.Series(model.feature_importances_,X.columns).sort_values(ascending=True).plot.barh(width=0.8,ax=ax[1,0],cmap='RdYlGn_r')

ax[1,0].set_title('Feature Importance in Gradient Boosting')

model=xg.XGBClassifier(n_estimators=900,learning_rate=0.1)

model.fit(X,Y)

pd.Series(model.feature_importances_,X.columns).sort_values(ascending=True).plot.barh(width=0.8,ax=ax[1,1],color='#FD0F00')

ax[1,1].set_title('Feature Importance in XgBoost')

plt.show()