Related Work

Existing memory storage methods

- summarizing and abstracting high-level information from raw observations to capture key points and reduce information redundancy (Maharana et al., 2024)

- structuring memory as summaries, temporal events, or reasoning chains (Zhao et al., 2024a; Zhang et al., 2024; Zhu et al., 2023; Maharana et al., 2024; Anokhin et al., 2024; Liu et al., 2023a)

- semantic representations such as sequences of events and historical event summaries (Zhong et al., 2023; Maharana et al., 2024)

- extracting reusable workflows from canonical examples and integrating them into memory to assist test-time inference (Wang et al., 2024f)

Existing memory retrieval methods

- generative retrieval models, which encode memory as dense vectors and retrieve the top-k relevant documents based on similarity search techniques (Zhong et al., 2023; Penha et al., 2024)

- Locality-Sensitive Hashing (LSH), which is used to retrieve tuples containing related entries in memory (Hu et al., 2023b)
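
To make the two retrieval styles above concrete, here is a minimal sketch, not taken from any of the cited papers. It assumes memory entries are already embedded as small vectors (the toy `MEMORY` list and its numbers are made up), ranks them by cosine similarity for top-k retrieval, and uses fixed sign-based hyperplanes as a simple stand-in for an LSH scheme. All function names are hypothetical.

```python
import math

# Toy memory: (text, embedding). The vectors are invented for illustration;
# a real system would get them from an embedding model.
MEMORY = [
    ("likes hiking", [1.0, 0.0, 0.1]),
    ("works as a nurse", [0.0, 1.0, 0.0]),
    ("went camping last week", [0.9, 0.1, 0.2]),
]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query_vec, memory, k=2):
    """Dense retrieval: return the k texts most similar to the query."""
    ranked = sorted(memory, key=lambda item: cosine(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]

# Hyperplanes are normally sampled at random; they are fixed here so the
# sketch is deterministic.
HYPERPLANES = [[1.0, -1.0, 0.0], [0.0, 0.0, 1.0]]

def lsh_signature(vec, hyperplanes):
    """One bit per hyperplane: which side of the plane the vector falls on."""
    return tuple(
        1 if sum(v * h for v, h in zip(vec, plane)) >= 0 else 0
        for plane in hyperplanes
    )

def build_lsh_index(memory, hyperplanes):
    """Group memory texts into buckets keyed by their LSH signature."""
    buckets = {}
    for text, vec in memory:
        buckets.setdefault(lsh_signature(vec, hyperplanes), []).append(text)
    return buckets

def lsh_lookup(query_vec, index, hyperplanes):
    """Return only the entries that share the query's bucket."""
    return index.get(lsh_signature(query_vec, hyperplanes), [])

index = build_lsh_index(MEMORY, HYPERPLANES)
query = [1.0, 0.0, 0.0]
dense_hits = top_k(query, MEMORY, k=2)
lsh_hits = lsh_lookup(query, index, HYPERPLANES)
```

The dense version scores every entry, while the LSH version only looks inside one bucket, trading some recall for a cheaper lookup.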

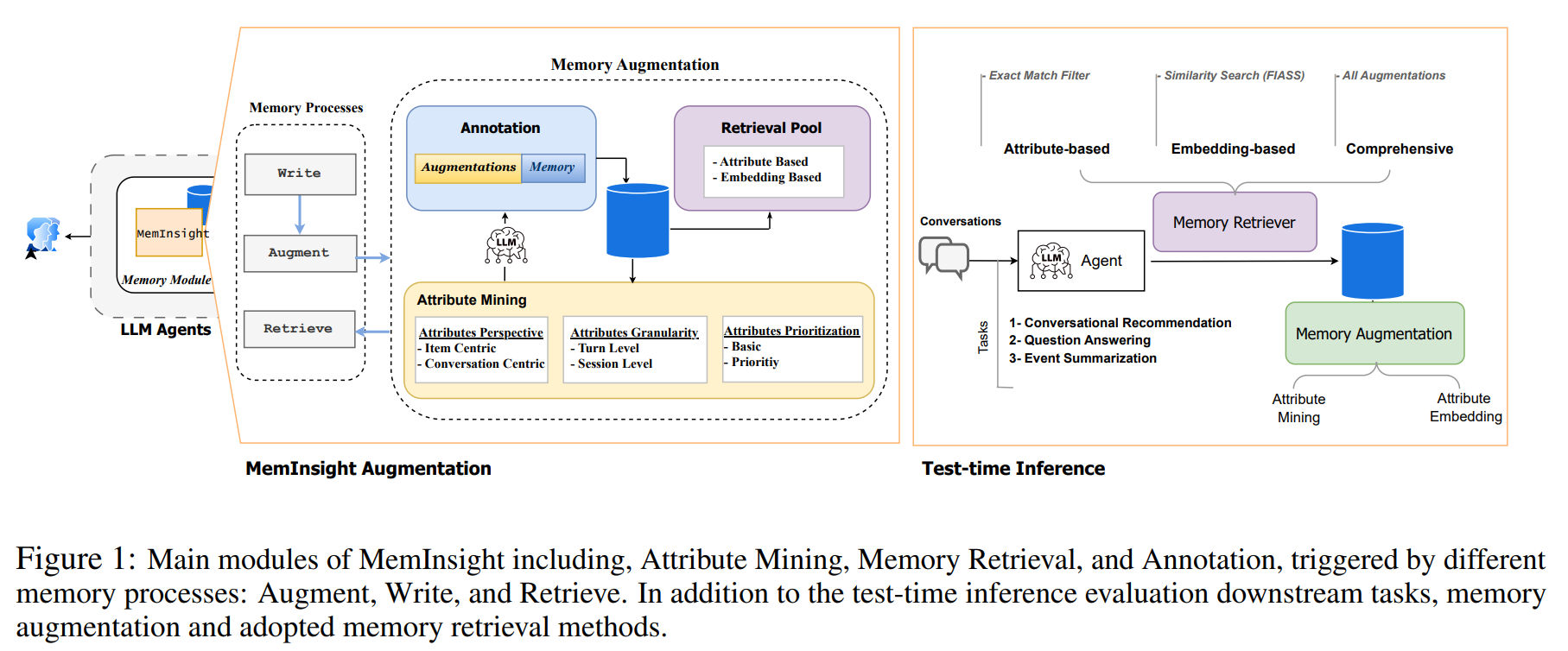

The core components are attribute mining, annotation, and the memory retriever.

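That said, it just stores information (the conversation history) with attributes attached, like existing methods do; the only difference seems to be that an LLM agent defines the attributes instead of using human-designed ones?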

Also because it structures the information when storing it.
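
As a rough illustration of the mining, annotation, and retrieval pipeline described in these notes, here is a minimal sketch. It is not the paper's implementation: `mine_attributes` and `annotate` are hypothetical stand-ins for what would be LLM calls, the rule-based logic and the `TOPIC_KEYWORDS` table are assumptions made only so the example runs, and the dialogue is invented.

```python
from dataclasses import dataclass, field

@dataclass
class MemoryEntry:
    """One stored utterance plus its attribute annotations."""
    text: str
    attributes: dict = field(default_factory=dict)

def mine_attributes(dialogue):
    """Hypothetical stand-in for the LLM step that proposes attribute names.

    A real system would prompt an LLM with the dialogue; here the schema is
    hard-coded so the sketch stays runnable.
    """
    return ["speaker", "topic"]

# Assumed keyword table, used only by the toy annotator below.
TOPIC_KEYWORDS = {"hiking": "outdoors", "camping": "outdoors", "hospital": "work"}

def annotate(utterance, schema):
    """Hypothetical stand-in for LLM annotation, using toy rules."""
    speaker, _, content = utterance.partition(": ")
    topic = next(
        (label for word, label in TOPIC_KEYWORDS.items() if word in content.lower()),
        "other",
    )
    values = {"speaker": speaker, "topic": topic}
    # Keep only the attributes named in the mined schema.
    return MemoryEntry(text=content, attributes={name: values.get(name) for name in schema})

def retrieve(store, **wanted):
    """Return texts whose attributes match every requested key/value pair."""
    return [
        entry.text
        for entry in store
        if all(entry.attributes.get(key) == value for key, value in wanted.items())
    ]

DIALOGUE = [
    "Alice: I went hiking on Sunday.",
    "Bob: My shift at the hospital ran late.",
    "Alice: We are planning a camping trip.",
]

schema = mine_attributes(DIALOGUE)
store = [annotate(utterance, schema) for utterance in DIALOGUE]
alice_outdoors = retrieve(store, speaker="Alice", topic="outdoors")
```

The only part that would change between a human-designed and an LLM-defined schema is what `mine_attributes` returns; storage and retrieval stay the same.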

Honestly, this feels like something that was bound to appear sooner or later, and it does not seem particularly creative or innovative.