옛날 코드라 작동하지 않음, 이해도 부족으로 인해 코드 수정이 불가하여 참고용으로만 사용

scrapy

- 웹사이트에서 데이터 수집을 하기위한 오픈소스 파이썬 프레임워크

- 멀티스레딩으로 데이터 수집

- gmarket 상품데이터 수집

Scrapy 설치

# %pip install scrapy ## 또는

# !pip install scrapy1. 스크레피 프로젝트 생성

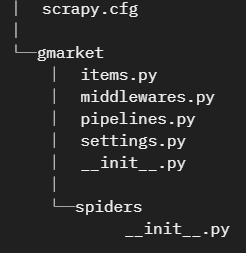

!scrapy startproject gmarket- 프로젝트 구조 확인

!tree gmarket

-

items : 데이터의 모양 정의

-

middewares : 수집할때 header 정보와 같은 내용을 설정

-

pipelines : 데이터를 수집한 후에 코드를 실행

-

settings : robots.txt 규칙, 크롤링 시간 텀등을 설정

-

spiders : 크롤링 절차를 정의

2. xpath 찾기 : 링크, 상세 페이지

- 요소의 XPath 링크는 개발자도구 --> 요소 --> Copy --> Copy XPath

import scrapy, requests # 라이브러리 임포트

from scrapy.http import TextResponse

# 링크 데이터

request = requests.get("http://corners.gmarket.co.kr/Bestsellers")

response = TextResponse(request.url, body=request.text, encoding="utf-8")

links = response.xpath('//*[@id="gBestWrap"]/div/div[3]/div/ul/li/a/@href').extract()# 상세 데이터 : 상품명, 가격

link = links[0]

request = requests.get(link)

response = TextResponse(request.url, body=request.text, encoding="utf-8")

title = response.xpath('//*[@id="itemcase_basic"]/div[1]/h1/text()')[0].extract()

price = response.xpath('//*[@id="itemcase_basic"]/div[1]/p/span/strong/text()')[0].extract()

print(title, price)3. items.py : model

- items.py 파일에 아래 내용 작성 (

import ~ Field())

%%writefile gmarket/gmarket/items.py

import scrapy

class GmarketItem(scrapy.Item):

title = scrapy.Field()

price = scrapy.Field()

link = scrapy.Field()4. spider.py : 크롤링 절차 정의

- spider.py 파일에 아래 내용 작성 (

import ~ item)

%%writefile gmarket/gmarket/spiders/spider.py

import scrapy

from gmarket.items import GmarketItem

class GMSpider(scrapy.Spider):

name = "GMB"

allow_domain = ["gmarket.co.kr"]

start_urls = ["http://corners.gmarket.co.kr/Bestsellers"]

def parse(self, response):

links = response.xpath('//*[@id="gBestWrap"]/div/div[3]/div/ul/li/a/@href').extract()

for link in links[:20]:

yield scrapy.Request(link, callback=self.parse_content)

def parse_content(self, response):

item = GmarketItem()

item["title"] = response.xpath('//*[@id="itemcase_basic"]/div[1]/h1/text()')[0].extract()

item["price"] = response.xpath('//*[@id="itemcase_basic"]/div[1]/p/span/strong/text()')[0].extract()

item["link"] = response.url

yield item5. 스크래피 실행

- gmarket 디렉토리에서 아래의 커멘드 실행

- scrapy crawl GMB -o items.csv

%ls gmarket/ # 프로젝트%pwd!cd C:\\Users\\User\\Desktop\\python_crawling\\day3\\code!scrapy crawl GMB -o items.csvimport pandas as pd

pd.read_csv("gmarket/items.csv").tail(2)