1. What I did today!

Crawled data from Kakao Map🎡

Put the crawled data into the DB✨

2. Project materials

https://github.com/ksykma/DRF_Jeju_list_project/tree/main

3. Troubleshooting (Problem / What I didn't know / Solution / What I learned)

- AttributeError: 'UserManager' object has no attribute 'normalize_username'

- Problem

```
Traceback (most recent call last):
  File "manage.py", line 22, in <module>
    main()
  File "manage.py", line 18, in main
    execute_from_command_line(sys.argv)
  File "C:\Users\ksh40\Desktop\DRF_Jeju_list_project\venv\lib\site-packages\django\core\management\__init__.py", line 446, in execute_from_command_line
    utility.execute()
  File "C:\Users\ksh40\Desktop\DRF_Jeju_list_project\venv\lib\site-packages\django\core\management\__init__.py", line 440, in execute
    self.fetch_command(subcommand).run_from_argv(self.argv)
  File "C:\Users\ksh40\Desktop\DRF_Jeju_list_project\venv\lib\site-packages\django\core\management\base.py", line 402, in run_from_argv
    self.execute(*args, **cmd_options)
  File "C:\Users\ksh40\Desktop\DRF_Jeju_list_project\venv\lib\site-packages\django\contrib\auth\management\commands\createsuperuser.py", line 88, in execute
    return super().execute(*args, **options)
  File "C:\Users\ksh40\Desktop\DRF_Jeju_list_project\venv\lib\site-packages\django\core\management\base.py", line 448, in execute
    output = self.handle(*args, **options)
  File "C:\Users\ksh40\Desktop\DRF_Jeju_list_project\venv\lib\site-packages\django\contrib\auth\management\commands\createsuperuser.py", line 233, in handle
    self.UserModel._default_manager.db_manager(database).create_superuser(
  File "C:\Users\ksh40\Desktop\DRF_Jeju_list_project\user\models.py", line 25, in create_superuser
    user = self.create_user(
  File "C:\Users\ksh40\Desktop\DRF_Jeju_list_project\user\models.py", line 17, in create_user
    username=self.normalize_username(username),
AttributeError: 'UserManager' object has no attribute 'normalize_username'
```

- What I didn't know (what I tried)

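When I tried to run createsuperuser I got the error above, so I asked the tutor about it. This was my code:

```python
from django.contrib.auth.models import BaseUserManager


class UserManager(BaseUserManager):
    def create_user(self, username, password=None):
        if not username:
            # Not an email? -> check the username -> missing? -> raise an error: 'check your email or ID'
            raise ValueError('Users must have a username')

        user = self.model(
            username=self.normalize_username(username),  # <- the line that raises the AttributeError
        )

        user.set_password(password)
        user.save(using=self._db)
        return user
```

The tutor told me to fix the normalize_username part!!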
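Why the error happens, as far as I can tell: in Django, normalize_username is a classmethod on the user model (AbstractBaseUser), while BaseUserManager only has helpers like normalize_email. Here's a minimal plain-Python sketch that reproduces the same AttributeError and shows that looking the method up on self.model avoids it. FakeUser, FakeBaseUserManager, BrokenUserManager and FixedUserManager are hypothetical stand-ins, not Django's real classes, so this runs without a Django project.

```python
import unicodedata


class FakeUser:
    """Hypothetical stand-in for a custom user model extending AbstractBaseUser."""

    def __init__(self, username):
        self.username = username

    @classmethod
    def normalize_username(cls, username):
        # Like AbstractBaseUser.normalize_username: Unicode NFKC normalization
        return unicodedata.normalize("NFKC", username) if isinstance(username, str) else username


class FakeBaseUserManager:
    """Hypothetical stand-in for BaseUserManager, which has no normalize_username."""

    model = FakeUser  # in Django, the manager's model is wired up automatically


class BrokenUserManager(FakeBaseUserManager):
    def create_user(self, username):
        # Looks the method up on the manager instance -> AttributeError
        return self.model(username=self.normalize_username(username))


class FixedUserManager(FakeBaseUserManager):
    def create_user(self, username):
        # Looks the method up on the model class instead
        return self.model(username=self.model.normalize_username(username))


try:
    BrokenUserManager().create_user("ksy")
except AttributeError as exc:
    print(exc)  # 'BrokenUserManager' object has no attribute 'normalize_username'

print(FixedUserManager().create_user("ｋｓｙ").username)  # ksy (full-width input)
```

In a real Django manager the fixed line would read username=self.model.normalize_username(username), assuming the User model extends AbstractBaseUser.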

- Solution

I changed this line

```python
username=self.normalize_username(username),
```

to this one, and then the superuser was created just fine!!

```python
username=username,
```

- What I learned

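normalize_* functions normalize a value, i.e. convert it into one consistent form. This is not the same thing as database normalization (structuring relational tables to minimize redundancy). For example, BaseUserManager.normalize_email lowercases only the domain part of an email address, so Foo@Example.COM becomes Foo@example.com, while normalize_username applies Unicode NFKC normalization (e.g. full-width letters become plain ASCII). normalize_username is defined on the user model (AbstractBaseUser), not on BaseUserManager, which is why the manager had no such attribute!!

Note that username=username skips that normalization entirely. Calling it through the model class, self.model.normalize_username(username), should keep it (assuming the User model extends AbstractBaseUser); Django's built-in UserManager also calls normalize_username on the model class rather than on the manager.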
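A minimal sketch of what the two helpers do, re-implemented in plain Python so it runs without a Django project. It follows my reading of Django's source for BaseUserManager.normalize_email and AbstractBaseUser.normalize_username; these standalone functions are my own illustration, not Django's API.

```python
import unicodedata


def normalize_email(email):
    """Lowercase only the domain part, like BaseUserManager.normalize_email."""
    email = email or ""
    try:
        email_name, domain_part = email.strip().rsplit("@", 1)
    except ValueError:
        # No "@" in the string: return it unchanged
        return email
    return email_name + "@" + domain_part.lower()


def normalize_username(username):
    """Apply Unicode NFKC normalization, like AbstractBaseUser.normalize_username."""
    if isinstance(username, str):
        return unicodedata.normalize("NFKC", username)
    return username


print(normalize_email("KSY@Example.COM"))  # KSY@example.com (local part keeps its case)
print(normalize_username("ｋｓｙ"))          # ksy (full-width letters become ASCII)
```

So uppercase letters before the @ are kept as typed; only the domain is treated case-insensitively.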

- from selenium.webdriver.common.by import By (crawling the data)

- Problem

- What I didn't know (what I tried)

```python
from selenium.webdriver.common.by import By
```

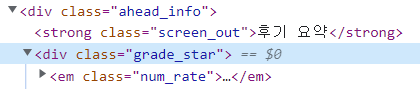

```python
review_info['star'] = (
    driver.find_element(By.CLASS_NAME, "ahead_info")
    .find_element(By.CLASS_NAME, "grade_star")
    .find_element(By.CLASS_NAME, "num_rate")
    .text
)
reviews = driver.find_element(By.CLASS_NAME, "list_evaluation").find_elements(By.TAG_NAME, "li")
```

- Solution

- What I learned

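Until now I'd select the area I wanted to crawl in the browser's developer tools and grab it with Copy selector, but the tutor taught me a new way: fetching the elements by class name (By.CLASS_NAME)!!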

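After importing it with from selenium.webdriver.common.by import By, you just write the code like the example above, drilling down from one class to the next with find_element(...).find_element(...)!!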
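To make the "drill down class by class" idea concrete, here's a minimal sketch of a helper that does the chaining in a loop, plus a function that turns the same chain into one descendant CSS selector. The two are usually, but not always, equivalent: each chained find_element stops at the first match at every level, so they can differ when the first ahead_info has no grade_star inside it. find_by_class_chain, class_chain_to_css and the FakeElement test double are hypothetical names for illustration; the fake stands in for a real driver so the sketch runs without a browser.

```python
try:
    from selenium.webdriver.common.by import By
    CLASS_NAME = By.CLASS_NAME
except ImportError:
    # Fallback so the sketch runs without Selenium; Selenium uses this same string
    CLASS_NAME = "class name"


def find_by_class_chain(root, *class_names):
    """Call find_element(By.CLASS_NAME, name) for each name in turn."""
    element = root
    for name in class_names:
        element = element.find_element(CLASS_NAME, name)
    return element


def class_chain_to_css(*class_names):
    """Build a descendant CSS selector, e.g. ".ahead_info .grade_star .num_rate"."""
    return " ".join("." + name for name in class_names)


class FakeElement:
    """Hypothetical test double: a tiny tree of elements with class names."""

    def __init__(self, class_name="", text="", children=()):
        self.class_name = class_name
        self.text = text
        self.children = list(children)

    def find_element(self, by, value):
        # Depth-first search in document order for the first matching descendant
        assert by == CLASS_NAME, "this fake only supports class-name lookups"
        for child in self.children:
            if child.class_name == value:
                return child
            try:
                return child.find_element(by, value)
            except LookupError:
                pass
        raise LookupError(value)  # Selenium would raise NoSuchElementException here


# Made-up page structure and rating, only for the demo
page = FakeElement(children=[
    FakeElement("ahead_info", children=[
        FakeElement("grade_star", children=[FakeElement("num_rate", text="4.2")]),
    ]),
])
print(find_by_class_chain(page, "ahead_info", "grade_star", "num_rate").text)  # 4.2
print(class_chain_to_css("ahead_info", "grade_star", "num_rate"))  # .ahead_info .grade_star .num_rate
```

With a real browser you would pass driver as root, or use driver.find_element(By.CSS_SELECTOR, class_chain_to_css(...)) for the one-selector version.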

- Saving the crawled data to the DB

- Problem

- What I didn't know (what I tried)

I searched Google for different ways to save the crawled data to the DB, and then asked the tutor.

The tutor said the most basic way is to bring the crawling function into views.py and save() the results there.

- Solution

- What I learned

```python
import time
from pprint import pprint

import pandas as pd
from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By
from webdriver_manager.chrome import ChromeDriverManager

from main.models import Store


def crawling():
    # df = pd.read_csv('main/jejulist.csv', encoding='cp949')
    df1 = pd.read_csv('jejulist.csv', encoding='UTF-8')
    df = df1.head(3)  # only the first 3 rows while testing

    # .copy() so adding a column below doesn't trigger SettingWithCopyWarning
    jeju_store = df[['업소명', '소재지', '메뉴']].copy()
    print(jeju_store.head())
    jeju_store.columns = ['name', 'address', 'menu']

    chrome_options = webdriver.ChromeOptions()
    driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()), options=chrome_options)

    # Search Kakao Map by the store's address
    jeju_store['kakao_keyword'] = jeju_store['address']

    store_detail_list = []

    # Collect the detail-page URL of each store
    for i, keyword in enumerate(jeju_store['kakao_keyword'].tolist()):
        print("Keyword to search:", i, f"/ {df.shape[0] - 1} rows", keyword)
        kakao_map_search_url = f"https://map.kakao.com/?q={keyword}"
        driver.get(kakao_map_search_url)
        time.sleep(3.5)  # wait for the search results to render
        # Raw string so the escaped dots (\.) reach the CSS selector intact
        detail_url = driver.find_element(
            By.CSS_SELECTOR,
            r"#info\.search\.place\.list > li > div.info_item > div.contact.clickArea > a.moreview",
        ).get_attribute('href')
        store_detail_list.append(detail_url)
        # Alternative XPath: driver.find_element(By.XPATH, '//*[@id="info.search.place.list"]/li/div[5]/div[4]/a[1]')

    print(store_detail_list)

    # Save each store id (last segment of the detail-page URL) to the DB
    for url in store_detail_list:
        store_id = url.split('/')[-1]
        print(store_id)
        Store(store_id=store_id).save()

    store_info = []
    for store in store_detail_list:
        driver.get(store)
        # driver.get(f"https://place.map.kakao.com/{store_id}")  # uncomment to use this instead
        time.sleep(3)

        review_info = {
            'store_name': '',
            'star': '',
            'tags': [],
            'contents': [],
        }
        review_info['star'] = (
            driver.find_element(By.CLASS_NAME, "ahead_info")
            .find_element(By.CLASS_NAME, "grade_star")
            .find_element(By.CLASS_NAME, "num_rate")
            .text
        )
        reviews = driver.find_element(By.CLASS_NAME, "list_evaluation").find_elements(By.TAG_NAME, "li")

        for review in reviews:
            review_content = {}
            if not review.text:  # skip empty list items
                continue
            try:
                tags = review.find_element(By.CLASS_NAME, "group_likepoint").find_elements(By.TAG_NAME, "span")
                review_content['tag'] = [x.text for x in tags]
            except NoSuchElementException:
                review_content['tag'] = None  # this review has no tags
            try:
                review_content['content'] = review.find_element(By.CLASS_NAME, "txt_comment").text
            except NoSuchElementException:
                # Optional value: it may exist, but it's fine if it doesn't
                review_content['content'] = None
            review_info['tags'].append(review_content['tag'])
            review_info['contents'].append(review_content['content'])

        store_info.append(review_info)

    driver.quit()  # close the browser when done
    pprint(store_info)


# Note: a module-level call runs the crawler every time this module is imported
crawling()
```

- Import the model with from main.models import Store

- Wrap the crawling code in a function and call it at the very bottom of the file (crawling())

- Call save() on the data you want to store.

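```python
for url in store_detail_list:
    store_id = url.split('/')[-1]  # the store id is the last segment of the detail-page URL
    print(store_id)
    Store(store_id=store_id).save()
```

I worried about this for so long, and who knew saving to the DB would be this easy... lol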
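One thing I'd watch for in the save loop: depending on how the Store model defines store_id (the model isn't shown here), saving the same id twice, e.g. when the crawler runs again, could create a duplicate row or raise an integrity error. Here's a minimal sketch of cleaning the ids first, assuming the detail links look like https://place.map.kakao.com/<id> (the shape the commented-out driver.get line suggests). extract_store_id and unique_store_ids are hypothetical helpers, and the URLs are made up.

```python
from urllib.parse import urlparse


def extract_store_id(detail_url):
    """Return the last path segment, e.g. "111" from "https://place.map.kakao.com/111"."""
    path = urlparse(detail_url).path  # drops any ?query or #fragment
    return path.rstrip("/").rsplit("/", 1)[-1]


def unique_store_ids(detail_urls):
    """Extract ids in their original order, skipping blanks and duplicates."""
    seen = set()
    ids = []
    for url in detail_urls:
        store_id = extract_store_id(url or "")
        if store_id and store_id not in seen:
            seen.add(store_id)
            ids.append(store_id)
    return ids


# Made-up example links, not real crawl results
urls = [
    "https://place.map.kakao.com/111",
    "https://place.map.kakao.com/222/",
    "https://place.map.kakao.com/111#comment",
]
print(unique_store_ids(urls))  # ['111', '222']

# In the Django code, each id could then be saved without duplicates, e.g.:
# for store_id in unique_store_ids(store_detail_list):
#     Store.objects.get_or_create(store_id=store_id)
```

get_or_create looks the row up first and only inserts it when it's missing, so rerunning the crawler shouldn't pile up copies of the same store.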