Paper Reviews

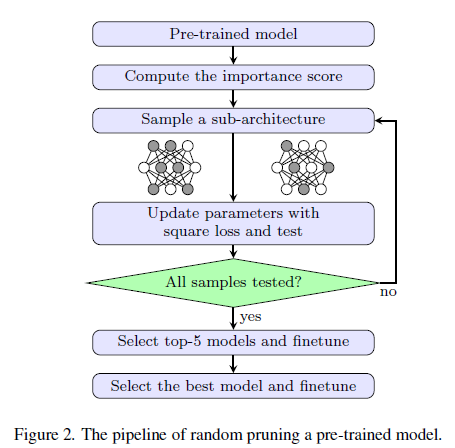

1.[Paper Review] Revisiting Random Channel Pruning for Neural Network Compression

Review of the paper Revisiting Random Channel Pruning for Neural Network Compression

September 16, 2022

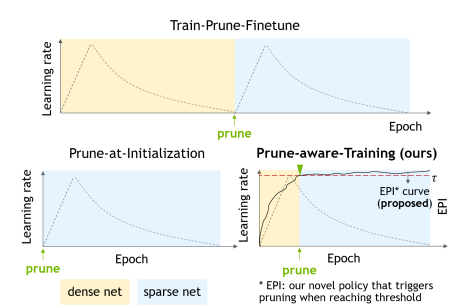

2.[Paper Review] When to Prune? A Policy towards Early Structural Pruning

Review of the CVPR 2022 paper When to Prune? A Policy towards Early Structural Pruning

January 5, 2023

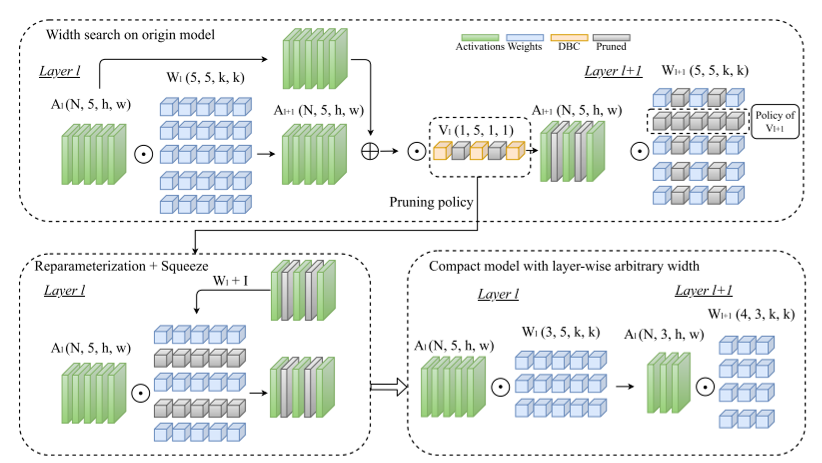

3.[Paper Review] Pruning-as-Search: Efficient Neural Architecture Search via Channel Pruning and Structural Reparameterization

Pruning-as-Search paper review

February 21, 2023

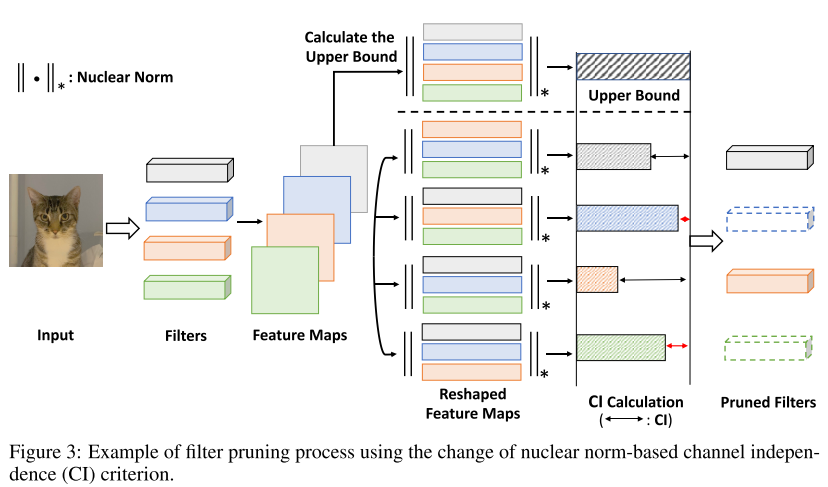

4.[Paper Review] CHIP: CHannel Independence-based Pruning for Compact Neural Networks

Computes channel independence from the feature maps and uses it as the pruning criterion.

April 18, 2023
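The CHIP criterion summarized above can be sketched in a few lines. This is a minimal illustration, assuming the formulation in which a channel's independence is the drop in the nuclear norm of the (channels × spatial positions) feature-map matrix when that channel's row is zeroed out, averaged over input samples, with the lowest-scoring channels pruned first. The function names `channel_independence` and `channels_to_prune` are hypothetical and not from the paper's code.

```python
import numpy as np


def channel_independence(feature_map: np.ndarray) -> np.ndarray:
    """Hypothetical CHIP-style channel independence for one sample.

    feature_map: array of shape (C, H, W).
    Returns shape (C,): for each channel i, the nuclear norm of the
    flattened (C, H*W) matrix minus the nuclear norm after zeroing row i.
    """
    c = feature_map.shape[0]
    a = feature_map.reshape(c, -1)            # one row per channel
    full = np.linalg.norm(a, ord="nuc")       # sum of singular values
    scores = np.empty(c)
    for i in range(c):
        masked = a.copy()
        masked[i, :] = 0.0                    # mask out channel i only
        scores[i] = full - np.linalg.norm(masked, ord="nuc")
    return scores


def channels_to_prune(feature_maps: np.ndarray, num_prune: int) -> np.ndarray:
    """Average the scores over a batch of shape (N, C, H, W) and return
    the indices of the num_prune least independent channels (assumption:
    lowest averaged score is pruned first)."""
    ci = np.mean([channel_independence(fm) for fm in feature_maps], axis=0)
    return np.argsort(ci)[:num_prune]


# Channels 0 and 1 are identical; channel 2 carries unique information.
fm = np.array([[[1.0, 0.0]], [[1.0, 0.0]], [[0.0, 1.0]]])
print(channel_independence(fm))   # values ≈ [0.414, 0.414, 1.0]
print(channels_to_prune(fm[None], 1))
```

In the toy example the duplicated channels score lower than the unique one, so one of them is selected for pruning: removing a redundant channel barely changes the rank structure of the feature-map matrix.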

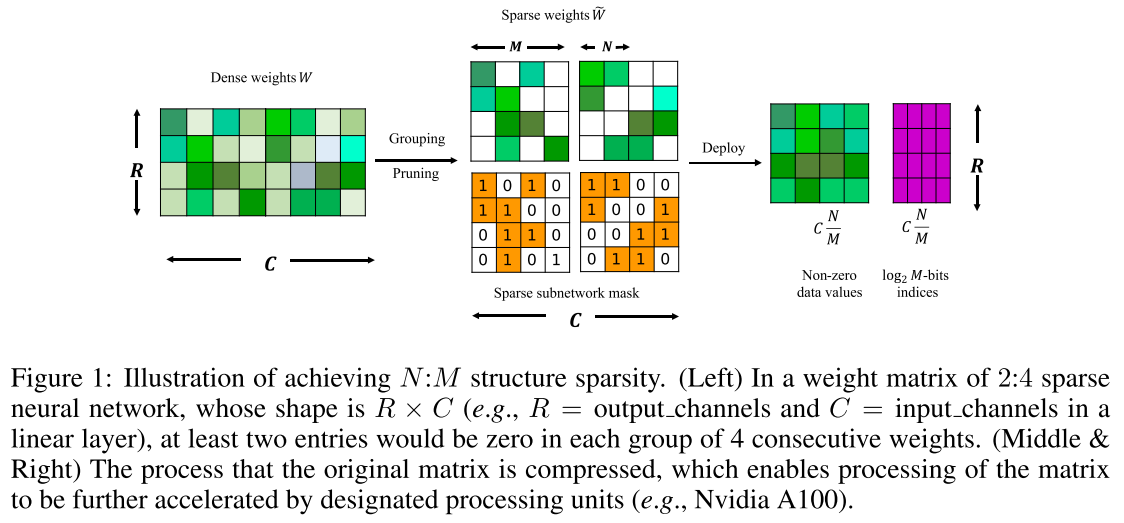

5.[Paper Review] Learning N:M Fine-grained Structured Sparse Neural Networks From Scratch

Review of the paper Learning N:M Fine-grained Structured Sparse Neural Networks From Scratch

September 6, 2023

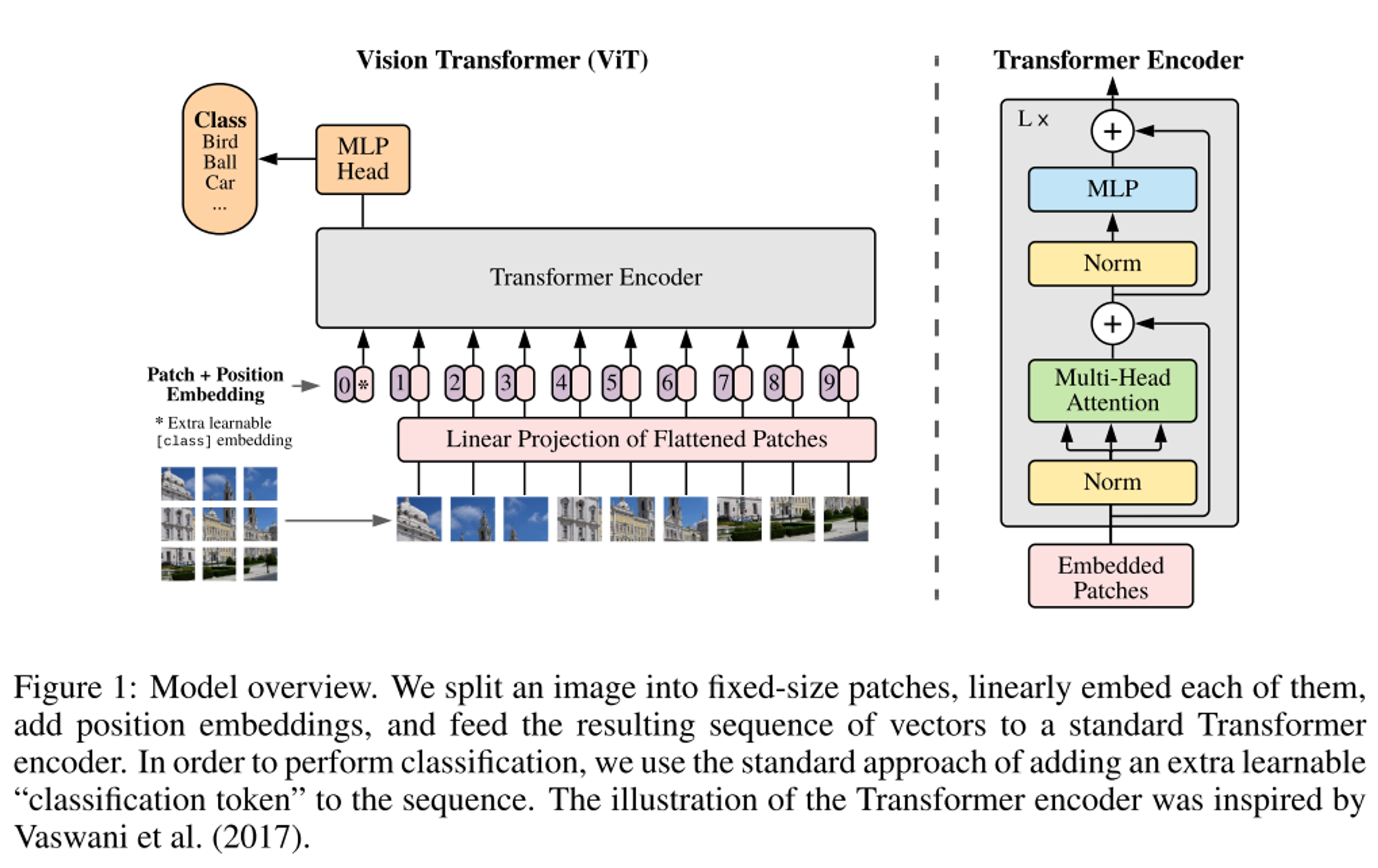

6.[Paper Review] An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale

Review of the ViT paper

October 10, 2023

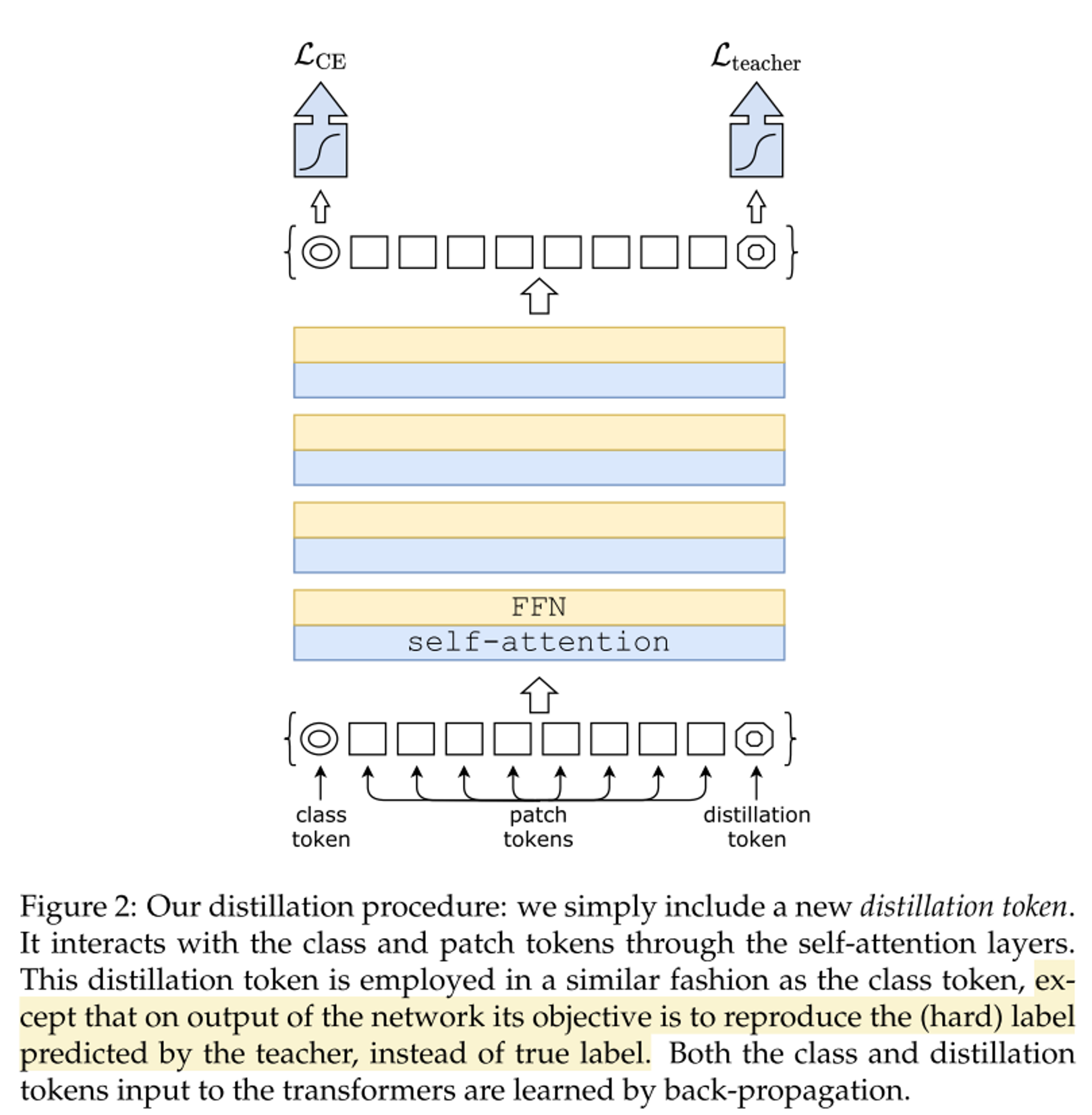

7.[Paper Review] Training data-efficient image transformers & distillation through attention

Review of the paper Training data-efficient image transformers & distillation through attention

October 12, 2023

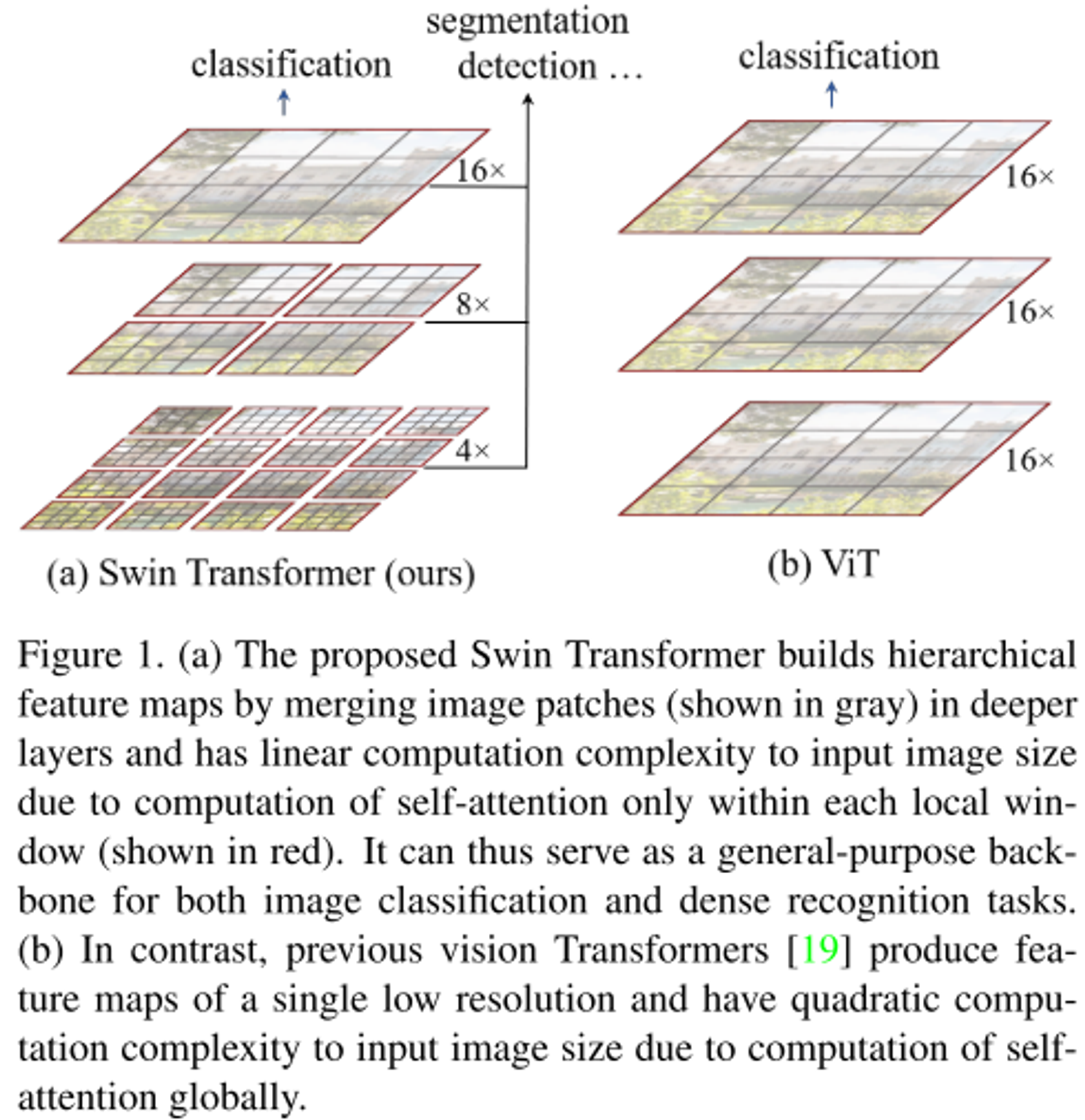

8.[Paper Review] Swin Transformer: Hierarchical Vision Transformer using Shifted Windows

Review of the paper Swin Transformer: Hierarchical Vision Transformer using Shifted Windows

October 18, 2023