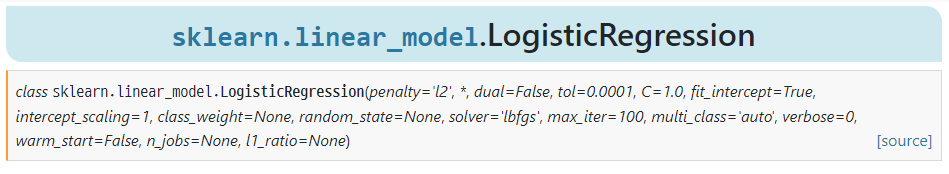

0. Key Concepts and scikit-learn Algorithm API Link

sklearn.linear_model.LogisticRegression
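As a rough reminder of what this estimator fits: a binary logistic regression model passes a linear score through the logistic (sigmoid) function and thresholds the resulting probability at 0.5. The following is a minimal pure-Python sketch of that idea. The weights, bias, and sample are made-up values for illustration, not coefficients learned from the dataset used below.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(weights, bias, features):
    """Probability of the positive class for a single sample."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(score)

# Hypothetical coefficients and one hypothetical (already scaled) sample.
weights = [2.0, -1.0, 0.5]
bias = -0.5
sample = [0.8, 0.1, 0.4]

proba = predict_proba(weights, bias, sample)
label = int(proba >= 0.5)  # threshold the probability at 0.5
print(f"P(class=1) = {proba:.3f}, predicted label = {label}")
```

The regularization strength tuned later in this document (`C`) affects how large the learned weights are allowed to become; it does not change this prediction rule.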

1. Preparing the Data for Analysis

import warnings

warnings.filterwarnings("ignore")

import pandas as pd

data=pd.read_csv('breast-cancer-wisconsin.csv', encoding='utf-8')

X=data[data.columns[1:10]]

y=data[["Class"]]

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test=train_test_split(X, y, stratify=y, random_state=42)

from sklearn.preprocessing import MinMaxScaler

scaler=MinMaxScaler()

scaler.fit(X_train) # learn each feature's minimum and maximum from the training data

X_scaled_train=scaler.transform(X_train) # apply the min-max normalization
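The fit and transform calls above rely on the scaler learning its minimum and maximum from the training data only. A minimal pure-Python sketch of that idea for a single feature, using made-up numbers rather than the CSV file, suggests why scaled test values may land slightly outside the [0, 1] range:

```python
def fit_min_max(values):
    """Learn the minimum and maximum from the training values only."""
    return min(values), max(values)

def transform(values, lo, hi):
    """Apply (x - min) / (max - min) using the training statistics."""
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical single-feature data, not taken from the dataset.
train = [1, 3, 5, 9]
test = [0, 5, 10]

lo, hi = fit_min_max(train)
print(transform(train, lo, hi))  # [0.0, 0.25, 0.5, 1.0]
print(transform(test, lo, hi))   # [-0.125, 0.5, 1.125]
```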

X_scaled_test=scaler.transform(X_test)

2. Applying the Baseline Model

from sklearn.linear_model import LogisticRegression

model=LogisticRegression()

model.fit(X_scaled_train, y_train)

pred_train=model.predict(X_scaled_train)

model.score(X_scaled_train, y_train)

0.97265625

from sklearn.metrics import confusion_matrix

confusion_train=confusion_matrix(y_train, pred_train)

print("Training confusion matrix:\n", confusion_train)

Training confusion matrix:

 [[328   5]

 [  9 170]]
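The headline metrics can be recomputed by hand from the training confusion matrix printed above, where rows are true classes and columns are predicted classes. The sketch below uses only those four counts; the variable names are illustrative. It reproduces the 0.97265625 returned by `model.score`.

```python
# Counts from the training confusion matrix above
# (rows = true class, columns = predicted class).
tn, fp = 328, 5    # true class 0: predicted 0, predicted 1
fn, tp = 9, 170    # true class 1: predicted 0, predicted 1

accuracy = (tp + tn) / (tp + tn + fp + fn)
precision_1 = tp / (tp + fp)   # of samples predicted 1, share that are truly 1
recall_1 = tp / (tp + fn)      # of samples truly 1, share that were predicted 1
f1_1 = 2 * precision_1 * recall_1 / (precision_1 + recall_1)

print(f"accuracy    = {accuracy}")          # 0.97265625
print(f"precision_1 = {precision_1:.2f}")   # 0.97
print(f"recall_1    = {recall_1:.2f}")      # 0.95
print(f"f1_1        = {f1_1:.2f}")          # 0.96
```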

from sklearn.metrics import classification_report

cfreport_train=classification_report(y_train, pred_train)

print("Classification report:\n", cfreport_train)

Classification report:

              precision    recall  f1-score   support

           0       0.97      0.98      0.98       333
           1       0.97      0.95      0.96       179

    accuracy                           0.97       512
   macro avg       0.97      0.97      0.97       512
weighted avg       0.97      0.97      0.97       512

pred_test=model.predict(X_scaled_test)

model.score(X_scaled_test, y_test)

0.9590643274853801

confusion_test=confusion_matrix(y_test, pred_test)

print("Test confusion matrix:\n", confusion_test)

Test confusion matrix:

 [[106   5]

 [  2  58]]

from sklearn.metrics import classification_report

cfreport_test=classification_report(y_test, pred_test)

print("Classification report:\n", cfreport_test)

Classification report:

              precision    recall  f1-score   support

           0       0.98      0.95      0.97       111
           1       0.92      0.97      0.94        60

    accuracy                           0.96       171
   macro avg       0.95      0.96      0.96       171
weighted avg       0.96      0.96      0.96       171

3. Grid Search

param_grid={'C': [0.001, 0.01, 0.1, 1, 10, 100]}

from sklearn.model_selection import GridSearchCV

grid_search=GridSearchCV(LogisticRegression(), param_grid, cv=5)

grid_search.fit(X_scaled_train, y_train)

GridSearchCV(cv=5, estimator=LogisticRegression(),

param_grid={'C': [0.001, 0.01, 0.1, 1, 10, 100]})
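Beyond the single best value, a fitted `GridSearchCV` object also records the cross-validated score of every candidate in its `cv_results_` attribute. The sketch below shows one way to list them. It assumes synthetic stand-in data from `make_classification` instead of the CSV file, and `max_iter=1000` is an added assumption to avoid convergence warnings, so the scores will not match the ones reported in this document.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in data (assumption): 9 features, like the columns selected earlier.
X_demo, y_demo = make_classification(n_samples=200, n_features=9, random_state=42)

param_grid = {'C': [0.001, 0.01, 0.1, 1, 10, 100]}
demo_search = GridSearchCV(LogisticRegression(max_iter=1000), param_grid, cv=5)
demo_search.fit(X_demo, y_demo)

# cv_results_ holds one entry per candidate value of C.
results = demo_search.cv_results_
for params, mean, std in zip(results['params'],
                             results['mean_test_score'],
                             results['std_test_score']):
    print(f"C={params['C']:<7} mean CV accuracy={mean:.4f} (+/- {std:.4f})")
```

Looking at the whole table rather than only `best_params_` can show whether neighbouring values of `C` score almost identically, in which case the choice among them matters little.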

print("Best Parameter: {}".format(grid_search.best_params_))

print("Best Score: {:.4f}".format(grid_search.best_score_))

print("TestSet Score: {:.4f}".format(grid_search.score(X_scaled_test, y_test)))

Best Parameter: {'C': 10}

Best Score: 0.9726

TestSet Score: 0.9591

4. Random Search

from scipy.stats import randint

param_distribs={'C': randint(low=1, high=100)} # randint draws integers, so the bounds should be integers

from sklearn.model_selection import RandomizedSearchCV

random_search=RandomizedSearchCV(LogisticRegression(),

param_distributions=param_distribs, n_iter=100, cv=5)

random_search.fit(X_scaled_train, y_train)

RandomizedSearchCV(cv=5, estimator=LogisticRegression(), n_iter=100,

param_distributions={'C': <scipy.stats._distn_infrastructure.rv_frozen object at 0x000002621CED5FD0>})

print("Best Parameter: {}".format(random_search.best_params_))

print("Best Score: {:.4f}".format(random_search.best_score_))

print("TestSet Score: {:.4f}".format(random_search.score(X_scaled_test, y_test)))

Best Parameter: {'C': 11}

Best Score: 0.9745

TestSet Score: 0.9591
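Because `scipy.stats.randint` draws integers, the random search above cannot try fractional values of `C` such as the 0.001 to 0.1 range covered by the grid. One possible alternative is to sample `C` log-uniformly, for example by passing a `scipy.stats.loguniform` distribution in `param_distributions`. The pure-Python sketch below only illustrates the sampling idea; the helper name `sample_log_uniform` is hypothetical and the seed is arbitrary.

```python
import math
import random

def sample_log_uniform(low, high, rng):
    """Draw one value whose logarithm is uniform between log(low) and log(high)."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))

rng = random.Random(0)  # fixed seed so the sketch is repeatable
candidates = [sample_log_uniform(0.001, 100, rng) for _ in range(1000)]

# Three of the five decades between 1e-3 and 1e2 lie below 1,
# so roughly 60% of the samples are expected to have C < 1.
below_one = sum(c < 1 for c in candidates) / len(candidates)
print(f"share of samples with C < 1: {below_one:.2f}")
```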