This post was written with reference to Zerobase Data School learning materials.

CNN_Feature_Maps

Data and model

MNIST Load

import tensorflow as tf

mnist = tf.keras.datasets.mnist

(X_train, y_train),(X_test, y_test) = mnist.load_data()

X_train, X_test = X_train / 255.0, X_test / 255.0

X_train = X_train.reshape((60000, 28, 28, 1))

X_test = X_test.reshape((10000, 28, 28, 1))

Simple Modeling

from tensorflow.keras import layers, models

model = models.Sequential([
    layers.Conv2D(3, kernel_size=(3, 3), strides=(1, 1), padding="same", activation="relu", input_shape=(28, 28, 1)),
    layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2)),
    layers.Dropout(0.25),
    layers.Flatten(),
    layers.Dense(1000, activation="relu"),
    layers.Dense(10, activation="softmax")
])

model.summary()

--------------------------------------------------------------------
Model: "sequential"
_________________________________________________________________
 Layer (type)                   Output Shape          Param #
=================================================================
 conv2d (Conv2D)                (None, 28, 28, 3)     30
 max_pooling2d (MaxPooling2D)   (None, 14, 14, 3)     0
 dropout (Dropout)              (None, 14, 14, 3)     0
 flatten (Flatten)              (None, 588)           0
 dense (Dense)                  (None, 1000)          589000
 dense_1 (Dense)                (None, 10)            10010
=================================================================
Total params: 599,040
Trainable params: 599,040
Non-trainable params: 0
_________________________________________________________________

Listing the layers

model.layers

--------------------------------------------------------------------

[<keras.layers.convolutional.conv2d.Conv2D at 0x1428ca6d310>,
 <keras.layers.pooling.max_pooling2d.MaxPooling2D at 0x1428d08ead0>,
 <keras.layers.regularization.dropout.Dropout at 0x1428e790150>,
 <keras.layers.reshaping.flatten.Flatten at 0x1428e7b2a90>,
 <keras.layers.core.dense.Dense at 0x1428e7b3690>,
 <keras.layers.core.dense.Dense at 0x1428e793a10>]

Weights of the untrained Conv layer

conv = model.layers[0]

conv_weights = conv.weights[0].numpy()

conv_weights.mean(), conv_weights.std()

------------------------------------------

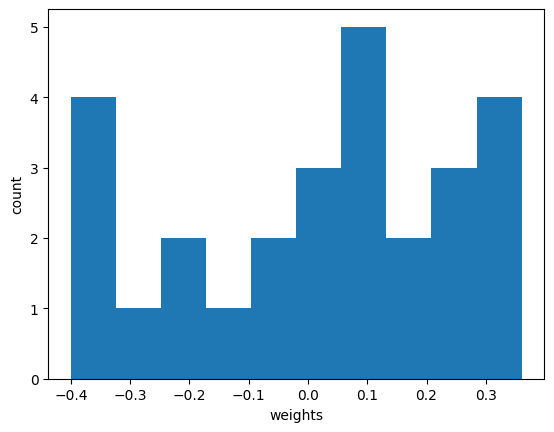

(0.012864082, 0.23187771)

Weight distribution

import matplotlib.pyplot as plt

plt.hist(conv_weights.reshape(-1, 1))

plt.xlabel("weights")

plt.ylabel("count")

plt.show()
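The mean near zero and the standard deviation of about 0.23 measured for the untrained kernel are consistent with Keras's default `glorot_uniform` kernel initializer. This is an assumption: the model definition does not set `kernel_initializer`, so the default is presumed to apply. That initializer samples uniformly from [-limit, limit] with limit = sqrt(6 / (fan_in + fan_out)). A minimal sketch of the arithmetic for a 3x3 kernel with 1 input channel and 3 filters:

```python
import math

# Kernel shape of the first Conv2D layer: (height, width, in_channels, filters)
kernel_h, kernel_w, in_channels, filters = 3, 3, 1, 3

# For a conv kernel, fan-in and fan-out both scale with the receptive field size
receptive_field = kernel_h * kernel_w
fan_in = receptive_field * in_channels
fan_out = receptive_field * filters

# Glorot (Xavier) uniform: sample from U(-limit, limit)
limit = math.sqrt(6.0 / (fan_in + fan_out))

# Standard deviation of U(-limit, limit) is limit / sqrt(3)
expected_std = limit / math.sqrt(3.0)

print(f"limit = {limit:.4f}, expected std = {expected_std:.4f}")
```

With only 27 weights in the kernel, the sample standard deviation of 0.2319 will not match the theoretical value exactly. Under the same assumption, no weight should fall outside roughly ±0.41.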

Conv filters before training

fig, ax = plt.subplots(1, 3, figsize=(15, 5))

for i in range(3):
    ax[i].imshow(conv_weights[:, :, 0, i], vmin=-0.5, vmax=0.5)
    ax[i].axis("off")

plt.show()

Training the model

model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

hist = model.fit(X_train, y_train, epochs=5, verbose=1, validation_data=(X_test, y_test))

Conv filters after training

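# Re-read the kernel: the earlier conv_weights is a NumPy snapshot taken before training
conv_weights = model.layers[0].weights[0].numpy()

fig, ax = plt.subplots(1, 3, figsize=(15, 5))
for i in range(3):
    ax[i].imshow(conv_weights[:, :, 0, i], vmin=-0.5, vmax=0.5)
    ax[i].axis("off")
plt.show()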

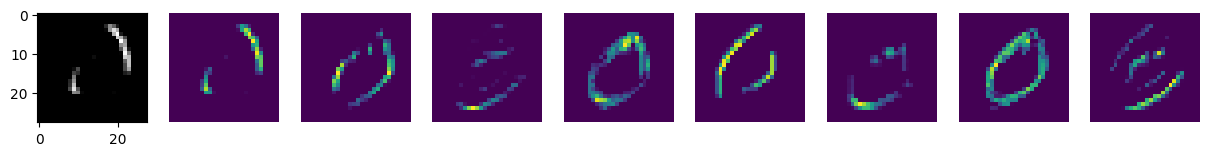

FeatureMaps

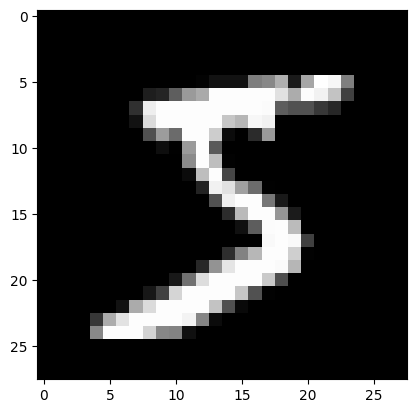
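A feature map is what one filter produces when it is slid across the input: at each position the 3x3 patch is multiplied element-wise with the kernel, summed, offset by a bias, and passed through the activation. The sketch below reproduces that computation in plain NumPy for a single-channel image with "same" padding, stride 1, and ReLU. It is an illustration only, not how Keras implements the layer; the function name `conv2d_same_relu` and the random image and kernels are stand-ins chosen for this example.

```python
import numpy as np

def conv2d_same_relu(image, kernels, biases):
    """Naive 'same'-padded, stride-1 convolution followed by ReLU.

    image:   (H, W) single-channel input
    kernels: (kh, kw, n_filters) with odd kh and kw
    biases:  (n_filters,)
    returns: (H, W, n_filters) feature maps
    """
    kh, kw, n_filters = kernels.shape
    pad_h, pad_w = kh // 2, kw // 2
    # Zero-pad the border so the output keeps the input's height and width
    padded = np.pad(image, ((pad_h, pad_h), (pad_w, pad_w)))
    height, width = image.shape
    out = np.zeros((height, width, n_filters))
    for r in range(height):
        for c in range(width):
            patch = padded[r:r + kh, c:c + kw]
            # Contract the patch with every filter at once over both spatial axes
            out[r, c] = np.tensordot(patch, kernels, axes=([0, 1], [0, 1])) + biases
    return np.maximum(out, 0.0)  # ReLU

rng = np.random.default_rng(0)
image = rng.random((28, 28))                      # stand-in for one MNIST digit
kernels = rng.uniform(-0.4, 0.4, size=(3, 3, 3))  # stand-in for three 3x3 filters
biases = np.zeros(3)

feature_maps = conv2d_same_relu(image, kernels, biases)
print(feature_maps.shape)  # (28, 28, 3)
```

Each slice `feature_maps[:, :, i]` is the map for filter `i`, which is the same layout as the `(1, 28, 28, 3)` array returned by the Keras sub-model apart from the leading batch axis.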

Data used for the feature maps

plt.imshow(X_train[0], cmap="gray")

Creating the feature maps

inputs = X_train[0].reshape(-1, 28, 28, 1)

conv_layer_output = tf.keras.Model(model.input, model.layers[0].output)

feature_maps = conv_layer_output.predict(inputs)

feature_maps

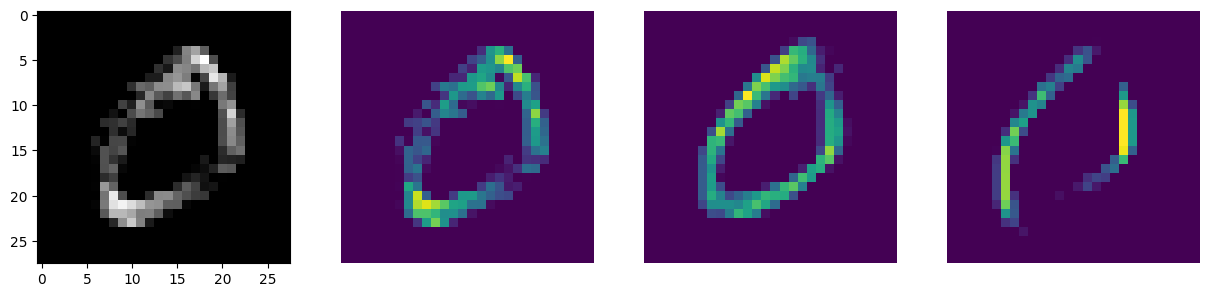

def draw_feature_maps(n):
    inputs = X_train[n].reshape(-1, 28, 28, 1)
    feature_maps = conv_layer_output.predict(inputs)

    fig, ax = plt.subplots(1, 4, figsize=(15, 5))
    # First panel: the input image; remaining panels: one feature map per filter
    ax[0].imshow(inputs[0, :, :, 0], cmap="gray")
    for i in range(1, 4):
        ax[i].imshow(feature_maps[0, :, :, i - 1])
        ax[i].axis("off")
    plt.show()

draw_feature_maps(1)

draw_feature_maps(4)

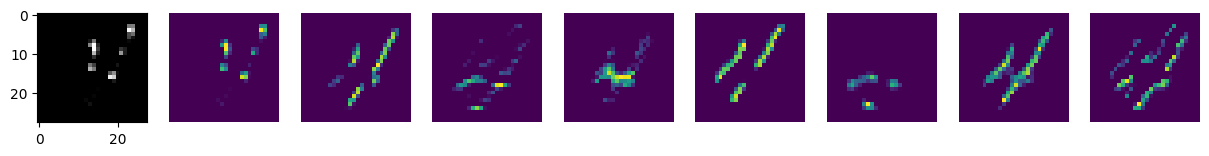

Increasing the channels

- Number of Conv2D filters (output channels): 3 -> 8
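Changing the number of filters also changes the size of the flattened tensor, and with it the parameter count of the first Dense layer. The sketch below works through that arithmetic for the architecture used in this post ("same"-padded 3x3 convolution, 2x2 max pooling with stride 2, Dense(1000), Dense(10)). The helper name `count_params` and its keyword arguments are made up for this illustration. For 3 filters the numbers should agree with the `model.summary()` output shown earlier.

```python
def count_params(filters, image_size=28, kernel=3, in_channels=1,
                 dense_units=1000, classes=10):
    """Parameter counts for Conv2D -> MaxPooling2D -> Flatten -> Dense -> Dense."""
    # Each filter has kernel*kernel*in_channels weights plus one bias
    conv_params = (kernel * kernel * in_channels + 1) * filters
    # 'same' padding keeps 28x28; 2x2 pooling with stride 2 halves each side
    pooled = image_size // 2
    flatten_size = pooled * pooled * filters
    # Dense layers: one weight per (input, unit) pair plus one bias per unit
    dense_params = flatten_size * dense_units + dense_units
    output_params = dense_units * classes + classes
    return {
        "conv_params": conv_params,
        "flatten_size": flatten_size,
        "dense_params": dense_params,
        "output_params": output_params,
        "total_params": conv_params + dense_params + output_params,
    }

for n_filters in (3, 8):
    print(n_filters, count_params(n_filters))
```

Going from 3 to 8 filters adds only 50 parameters to the convolution itself (30 to 80), while the first Dense layer grows from 589,000 to 1,569,000 because its input widens from 588 to 1,568 values.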

from tensorflow.keras import layers, models

model1 = models.Sequential([
    layers.Conv2D(8, kernel_size=(3, 3), strides=(1, 1), padding="same", activation="relu", input_shape=(28, 28, 1)),
    layers.MaxPooling2D(pool_size=(2, 2), strides=(2, 2)),
    layers.Dropout(0.25),
    layers.Flatten(),
    layers.Dense(1000, activation="relu"),
    layers.Dense(10, activation="softmax")
])

model1.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])

hist = model1.fit(X_train, y_train, epochs=5, verbose=1, validation_data = (X_test, y_test))

---------------------------------------------------------------------------------------

Epoch 1/5

1875/1875 [==============================] - 46s 24ms/step - loss: 0.1633 - accuracy: 0.9499 - val_loss: 0.0579 - val_accuracy: 0.9810

Epoch 2/5

1875/1875 [==============================] - 45s 24ms/step - loss: 0.0680 - accuracy: 0.9784 - val_loss: 0.0442 - val_accuracy: 0.9856

Epoch 3/5

1875/1875 [==============================] - 47s 25ms/step - loss: 0.0460 - accuracy: 0.9854 - val_loss: 0.0403 - val_accuracy: 0.9876

Epoch 4/5

1875/1875 [==============================] - 50s 26ms/step - loss: 0.0350 - accuracy: 0.9884 - val_loss: 0.0353 - val_accuracy: 0.9881

Epoch 5/5

1875/1875 [==============================] - 52s 28ms/step - loss: 0.0270 - accuracy: 0.9909 - val_loss: 0.0422 - val_accuracy: 0.9870

feature_maps

conv_layer_output = tf.keras.Model(model1.input, model1.layers[0].output)

def draw_feature_maps(n):
    inputs = X_train[n].reshape(-1, 28, 28, 1)
    feature_maps = conv_layer_output.predict(inputs)

    fig, ax = plt.subplots(1, 9, figsize=(15, 5))
    # First panel: the input image; remaining panels: one feature map per filter
    ax[0].imshow(inputs[0, :, :, 0], cmap="gray")
    for i in range(1, 9):
        ax[i].imshow(feature_maps[0, :, :, i - 1])
        ax[i].axis("off")
    plt.show()

draw_feature_maps(1)

draw_feature_maps(9)