Kaggle Link : https://www.kaggle.com/code/yoontaeklee/titanic-survival-rate-random-forest

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import missingno as msno

import warnings

warnings.filterwarnings('ignore')

%matplotlib inline

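# Input data is only available under the "../input/" directory

Overall process

1. Check the dataset: find and handle null data

2. Exploratory data analysis (EDA)

: Analyze each feature individually, check the correlations between features, and use several visualization tools to gain insight

3. Feature engineering

: Before building the model, modify the features so the model can perform better: one-hot encoding, splitting into classes, binning, text processing, and so on

4. Build the model

: Build the model with sklearn

5. Train the model and predict

: Train the model on the train set, then predict on the test set

6. Evaluate the model

: Judge whether the predictive performance reaches the desired level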

1. Checking the dataset

df_train = pd.read_csv('../input/titanic/train.csv')

df_test = pd.read_csv('../input/titanic/test.csv')

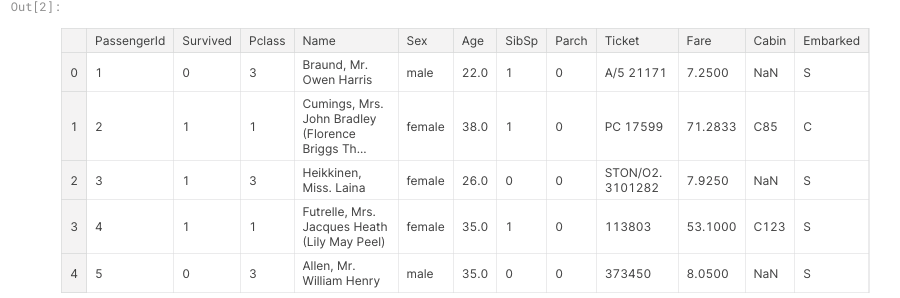

df_train.head()

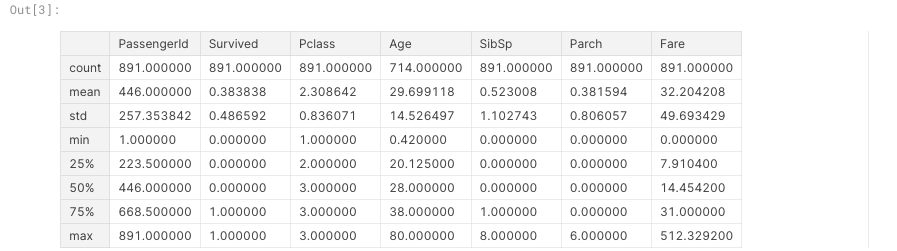

df_train.describe()

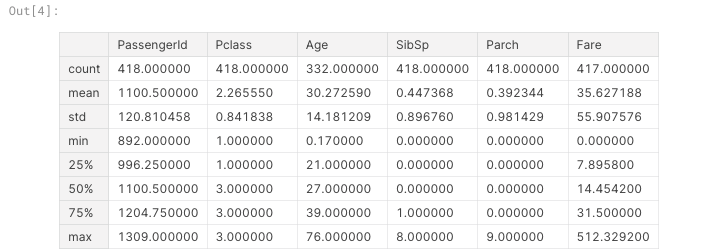

df_test.describe()

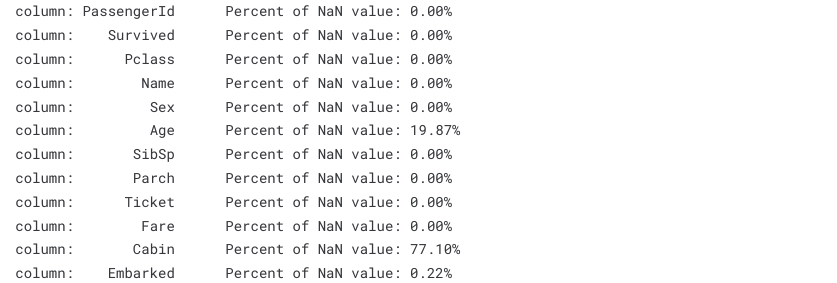

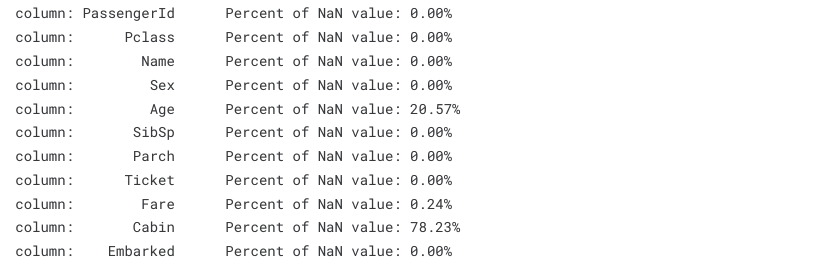

1.1 Null data check

for column in df_train.columns:
    msg = 'column: {:>11}\t Percent of NaN value: {:.2f}%'.format(
        column, 100 * (df_train[column].isnull().sum() / df_train[column].shape[0]))
    print(msg)

for column in df_test.columns:
    msg = 'column: {:>11}\t Percent of NaN value: {:.2f}%'.format(
        column, 100 * (df_test[column].isnull().sum() / df_test[column].shape[0]))
    print(msg)

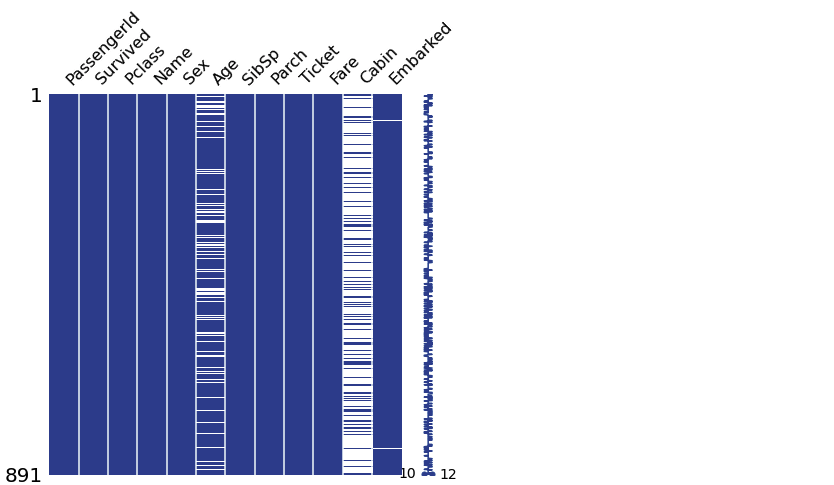
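The per-column loop can also be written as a single vectorized expression. The following is a minimal sketch on a made-up toy frame (the `toy` data and the `nan_percent` name are illustrative assumptions, not part of the notebook):

```python
import numpy as np
import pandas as pd

# Toy frame standing in for df_train (values are made up for illustration).
toy = pd.DataFrame({
    'Age': [22.0, np.nan, 26.0, np.nan],
    'Cabin': [np.nan, 'C85', np.nan, np.nan],
    'Fare': [7.25, 71.28, 7.92, 53.10],
})

# isnull() gives a boolean frame; the column-wise mean of booleans is the
# fraction of missing values, so multiplying by 100 yields a percentage.
nan_percent = toy.isnull().mean() * 100
print(nan_percent.round(2))
```

The same expression should work on `df_train` and `df_test` directly.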

- The missingno library makes it easier to check null data.

msno.matrix(df=df_train.iloc[:, :], figsize=(7,7), color=(0.01,0.3,0.6))

msno.bar(df=df_train.iloc[:,:], figsize=(7,7), color=(0.01,0.3,0.6))

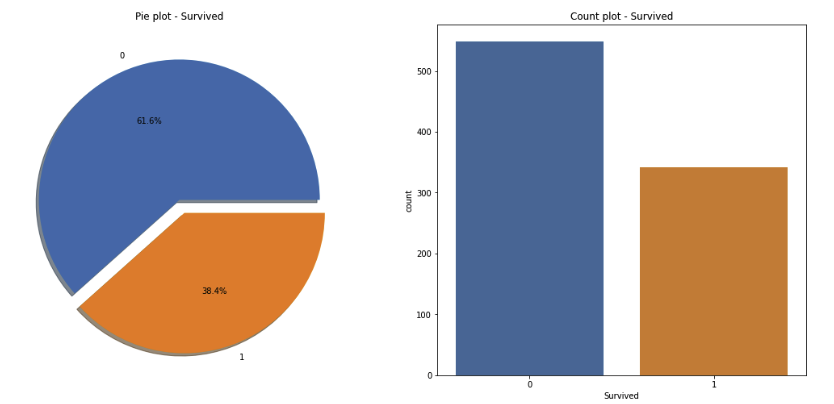

1.2 Checking the target label

- Check what distribution the target label has

- In a binary classification problem like this one, the way the model should be evaluated can change depending on the balance between 1s and 0s

f, ax = plt.subplots(1,2,figsize=(18,8))

df_train['Survived'].value_counts().plot.pie(explode=[0,0.1], autopct='%1.1f%%',

ax=ax[0], shadow=True)

ax[0].set_title('Pie plot - Survived')

ax[0].set_ylabel('')

sns.countplot(x='Survived', data=df_train, ax=ax[1])

ax[1].set_title('Count plot - Survived')

plt.show()
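To put a number on the class balance, the proportions can be read straight from `value_counts`. This is a small sketch with made-up labels (the `survived` series and the `majority_baseline` name are assumptions for illustration); it also shows why accuracy has to be compared against the majority-class share:

```python
import pandas as pd

# Made-up labels standing in for df_train['Survived'] (1 = survived, 0 = died).
survived = pd.Series([0, 0, 0, 1, 1, 0, 1, 0, 0, 1])

# normalize=True returns class proportions instead of raw counts.
class_ratio = survived.value_counts(normalize=True)

# A model that always predicts the majority class reaches this accuracy,
# so it is the floor any real model should beat.
majority_baseline = class_ratio.max()
print(class_ratio)
print('Majority-class baseline accuracy: {:.2f}'.format(majority_baseline))
```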

2. Exploratory data analysis (EDA)

- Pick the visualization library that fits the purpose: matplotlib, seaborn, plotly, and so on

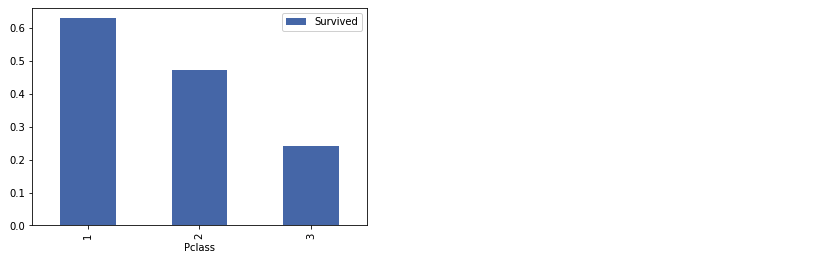

2.1 Pclass

- Pclass is a categorical feature that also has an order (ordinal)

- Use the pandas dataframe groupby to look at the survival rate by Pclass

- Group 'Pclass' and 'Survived' by Pclass. Each Pclass group holds 0s and 1s, so averaging them gives the survival rate of each Pclass

df_train[['Pclass','Survived']].groupby(['Pclass'], as_index=True).count()

df_train[['Pclass','Survived']].groupby(['Pclass'], as_index=True).sum()

- pandas crosstab makes this a little easier to read

pd.crosstab(df_train['Pclass'], df_train['Survived'], margins=True) \
    .style.background_gradient(cmap='summer_r')

df_train[['Pclass','Survived']].groupby(['Pclass'], as_index=True).mean() \
    .sort_values(by='Survived', ascending=False).plot.bar()

- Passengers in a better class are more likely to survive
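The relation between count, sum, and mean in the groupby step can be checked on a tiny example. The sketch below uses made-up rows (`toy` and `summary` are illustrative names) and shows that the mean of a 0/1 column is survivors divided by passengers:

```python
import pandas as pd

# Made-up rows standing in for df_train[['Pclass', 'Survived']].
toy = pd.DataFrame({
    'Pclass':   [1, 1, 1, 2, 2, 3, 3, 3, 3],
    'Survived': [1, 1, 0, 1, 0, 0, 0, 1, 0],
})

grouped = toy.groupby('Pclass')['Survived']
summary = pd.DataFrame({
    'passengers': grouped.count(),     # rows per class
    'survivors': grouped.sum(),        # number of 1s per class
    'survival_rate': grouped.mean(),   # survivors / passengers
})
print(summary)
```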

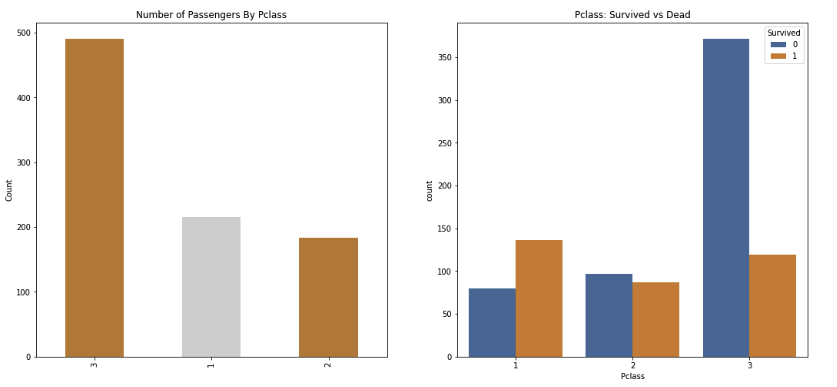

- seaborn's countplot shows the number of rows for each label

y_position = 1.0

f, ax = plt.subplots(1,2,figsize=(18,8))

df_train['Pclass'].value_counts().plot.bar(color=['#CD7F32','#D3D3D3'], ax=ax[0])

ax[0].set_title('Number of Passengers By Pclass', y=y_position)

ax[0].set_ylabel('Count')

sns.countplot(x='Pclass', hue='Survived', data=df_train, ax=ax[1])

ax[1].set_title('Pclass: Survived vs Dead', y=y_position)

plt.show()

⇒ So far, Pclass and the survival rate turn out to be closely related

So the Pclass feature will be used when the model is built later

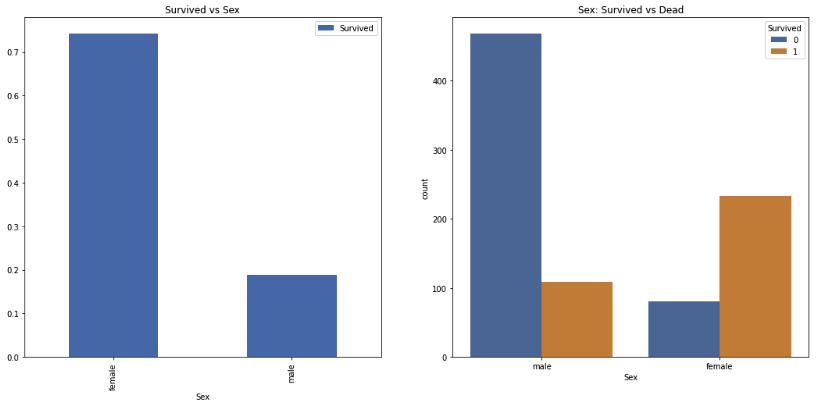

2.2 Sex

- Check the survival rate by sex

- As above, visualize it with pandas groupby and seaborn countplot

f, ax = plt.subplots(1,2,figsize=(18,8))

df_train[['Sex','Survived']].groupby(['Sex'],as_index=True).mean().plot.bar(ax=ax[0])

ax[0].set_title('Survived vs Sex')

sns.countplot(x='Sex', hue='Survived', data=df_train, ax=ax[1])

ax[1].set_title('Sex: Survived vs Dead')

plt.show()

df_train[['Sex','Survived']].groupby(['Sex'], as_index=False).mean() \
    .sort_values(by='Survived', ascending=False)

- Sex and the survival rate are strongly related

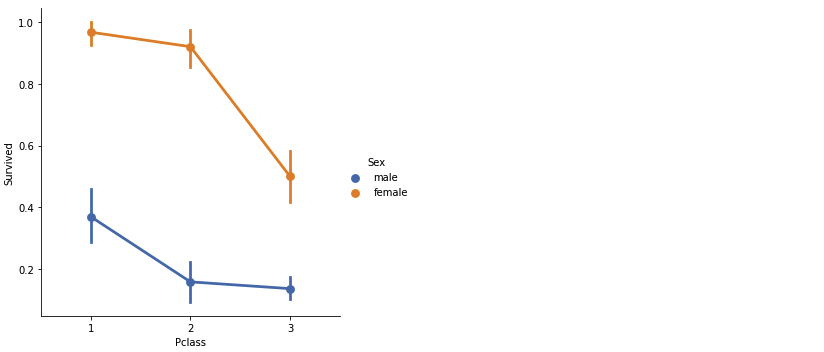

2.3 Both Sex and Pclass

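- Combine Sex and Pclass to see how the survival rate changes

- Use seaborn's catplot (called factorplot in older seaborn releases) to draw a plot over three dimensions

sns.catplot(x='Pclass', y='Survived', hue='Sex', data=df_train, kind='point')

- In every class, females are more likely to survive

- For both males and females, the higher the class, the higher the chance of survival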
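The same two-way breakdown can be read as a table instead of a plot. Here is a minimal sketch using `pivot_table` on made-up rows (the `toy` data and the `rate_table` name are assumptions for illustration):

```python
import pandas as pd

# Made-up rows standing in for df_train[['Sex', 'Pclass', 'Survived']].
toy = pd.DataFrame({
    'Sex':      ['female', 'female', 'male', 'male', 'female', 'male', 'male', 'female'],
    'Pclass':   [1, 1, 1, 1, 3, 3, 3, 3],
    'Survived': [1, 1, 1, 0, 1, 0, 0, 0],
})

# Rows are Sex, columns are Pclass, each cell is the mean of Survived,
# i.e. the survival rate for that (Sex, Pclass) combination.
rate_table = toy.pivot_table(index='Sex', columns='Pclass',
                             values='Survived', aggfunc='mean')
print(rate_table)
```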

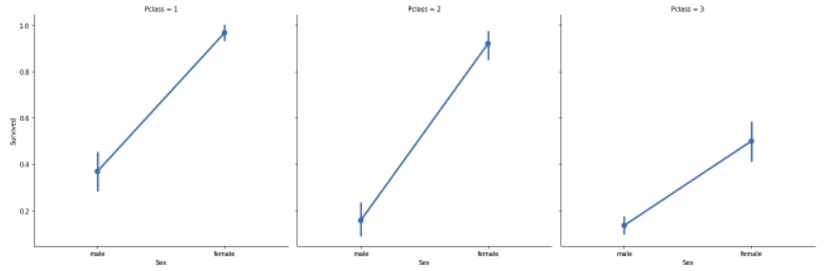

- Below, the same data is split by column instead of hue

sns.catplot(x='Sex', y='Survived', col='Pclass', data=df_train, kind='point',
    height=6, aspect=1)

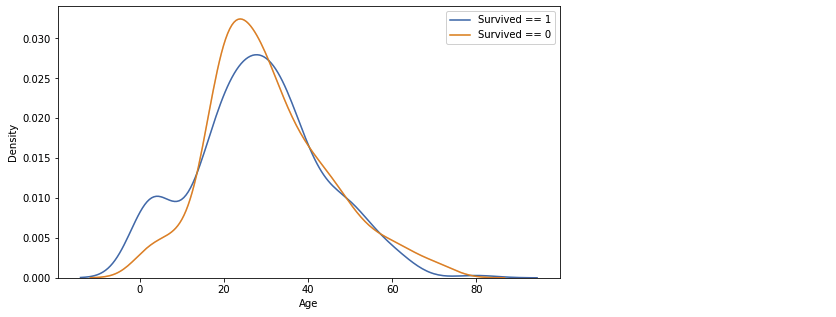

2.4 Age

print('Oldest passenger : {:.1f} Years'.format(df_train['Age'].max()))

print('Youngest passenger : {:.1f} Years'.format(df_train['Age'].min()))

print('Mean passenger age : {:.1f} Years'.format(df_train['Age'].mean()))

- Distribution of age by survival (drawn as a KDE plot)

fig, ax = plt.subplots(1,1,figsize=(9,5))

sns.kdeplot(df_train[df_train['Survived'] == 1]['Age'],ax=ax)

sns.kdeplot(df_train[df_train['Survived'] == 0]['Age'],ax=ax)

plt.legend(['Survived == 1','Survived == 0'])

plt.show()

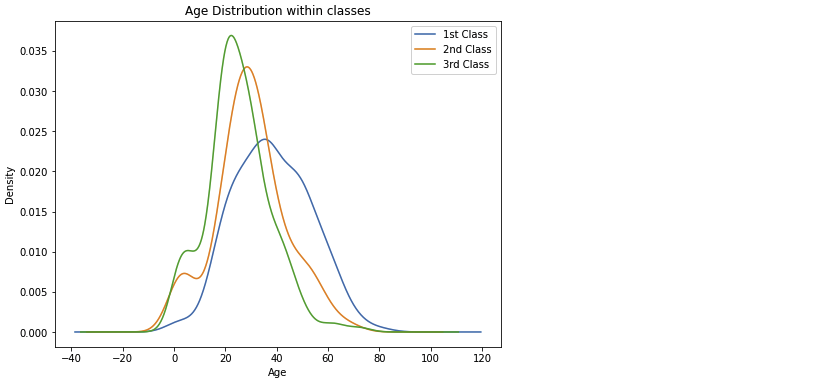

plt.figure(figsize=(8,6))

df_train['Age'][df_train['Pclass'] == 1].plot(kind='kde')

df_train['Age'][df_train['Pclass'] == 2].plot(kind='kde')

df_train['Age'][df_train['Pclass'] == 3].plot(kind='kde')

plt.xlabel('Age')

plt.title('Age Distribution within classes')

plt.legend(['1st Class','2nd Class','3rd Class'])

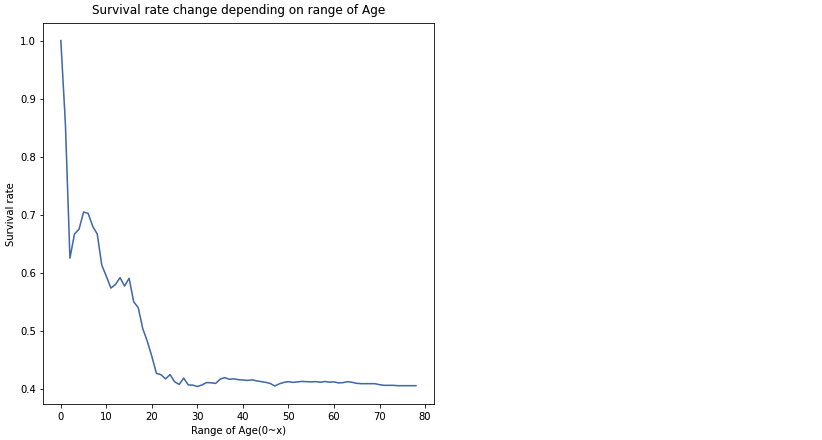

cummulate_survival_ratio = []

for i in range(1,80):
    cummulate_survival_ratio.append(df_train[df_train['Age'] < i]['Survived'].sum()
        / len(df_train[df_train['Age'] < i]['Survived']))

plt.figure(figsize=(7,7))

plt.plot(cummulate_survival_ratio)

plt.title('Survival rate change depending on range of Age',y=1.01)

plt.ylabel('Survival rate')

plt.xlabel('Range of Age(0~x)')

plt.show()

- The younger the passenger, the higher the survival rate

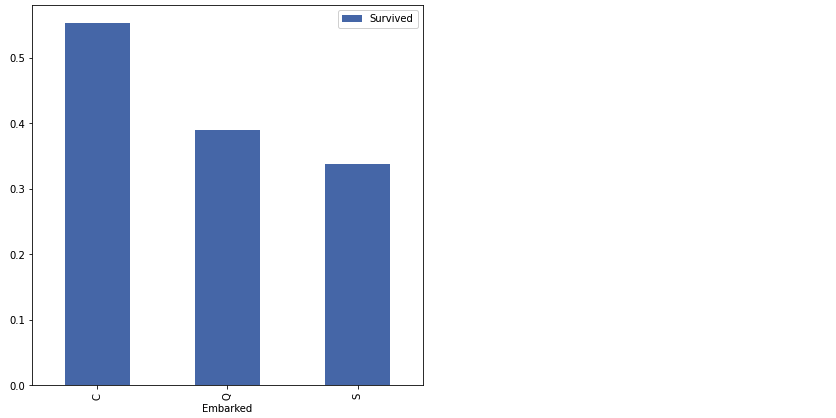

2.5 Embarked

- Check the survival rate by port of embarkation

f, ax = plt.subplots(1,1,figsize=(7,7))

df_train[['Embarked','Survived']].groupby(['Embarked'], as_index=True).mean() \
    .sort_values(by='Survived', ascending=False).plot.bar(ax=ax)

- The survival rates are similar, with small differences

- It is unclear how much this will affect the model, but use the feature for now

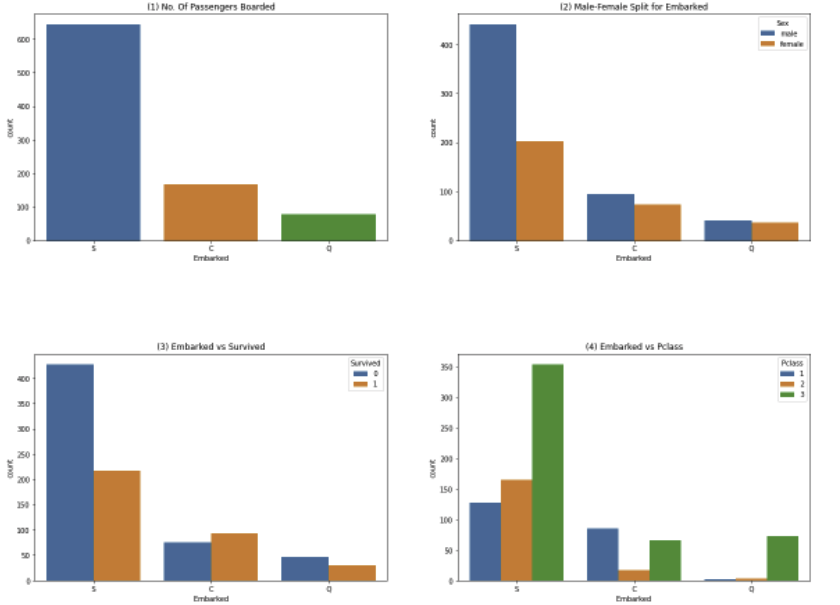

f, ax = plt.subplots(2,2,figsize=(20,15))

sns.countplot(x='Embarked', data=df_train, ax=ax[0,0])

ax[0,0].set_title('(1) No. Of Passengers Boarded')

sns.countplot(x='Embarked', hue='Sex', data=df_train, ax=ax[0,1])

ax[0,1].set_title('(2) Male-Female Split for Embarked')

sns.countplot(x='Embarked', hue='Survived', data=df_train, ax=ax[1,0])

ax[1,0].set_title('(3) Embarked vs Survived')

sns.countplot(x='Embarked', hue='Pclass', data=df_train, ax=ax[1,1])

ax[1,1].set_title('(4) Embarked vs Pclass')

plt.subplots_adjust(wspace=0.2, hspace=0.5)

plt.show()

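- Figure 1: overall, the most passengers boarded at S

- Figure 2: at S, most passengers are male. C and Q are roughly balanced

- Figure 3: the survival rate at S is low. This may be related to the large number of male passengers at S

- Figure 4: splitting by class shows that passengers who boarded at C survive more often because more of them travel in a higher class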

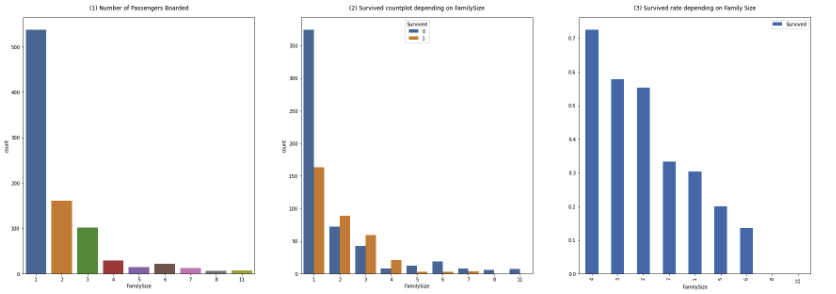

2.7 Family - SibSp (siblings and spouses) + Parch (parents and children)

df_train['FamilySize'] = df_train['SibSp'] + df_train['Parch'] + 1

df_test['FamilySize'] = df_test['SibSp'] + df_test['Parch'] + 1

print('Max size of Family: ',df_train['FamilySize'].max())

print('Max size of Family: ',df_test['FamilySize'].max())

f, ax = plt.subplots(1,3,figsize=(30,10))

sns.countplot(x='FamilySize', data=df_train, ax=ax[0])

ax[0].set_title('(1) Number of Passengers Boarded',y=1.02)

sns.countplot(x='FamilySize', hue='Survived', data=df_train, ax=ax[1])

ax[1].set_title('(2) Survived countplot depending on FamilySize',y=1.02)

df_train[['FamilySize','Survived']].groupby(['FamilySize'], as_index=True).mean() \
    .sort_values(by='Survived', ascending=False).plot.bar(ax=ax[2])

ax[2].set_title('(3) Survived rate depending on Family Size',y=1.02)

plt.subplots_adjust(wspace=0.2, hspace=0.5)

plt.show()

- The survival probability is highest for families of 4

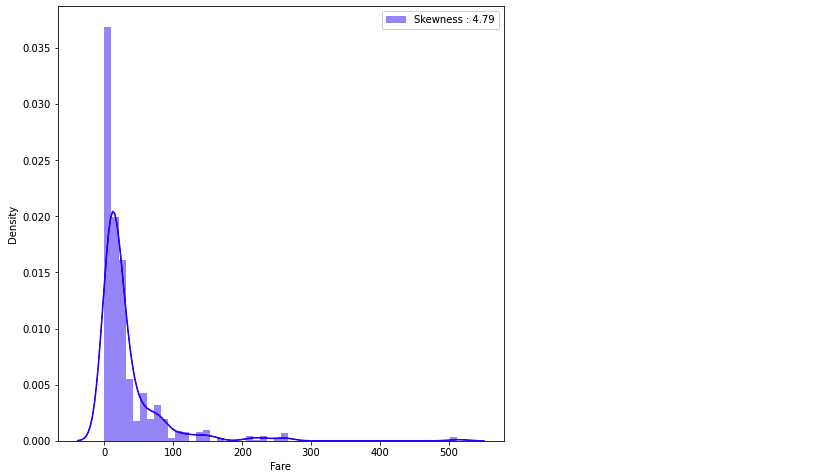

2.8 Fare

- Histogram of the fare

fig, ax = plt.subplots(1,1,figsize=(8,8))

g = sns.distplot(df_train['Fare'], color='b', label='Skewness : {:.2f}'.format(

df_train['Fare'].skew()),ax=ax)

g=g.legend(loc='best')

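- The distribution is highly asymmetric (high skewness). Fed to the model as it is, it may cause the model to learn poorly

- Take the log of Fare to reduce the influence of outliers

- To take the log of every value in the Fare column, pass a lambda function to map, which applies it to each value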

df_test.loc[df_test.Fare.isnull(),'Fare'] = df_test['Fare'].mean()

df_train['Fare'] = df_train['Fare'].map(lambda i: np.log(i) if i > 0 else 0)

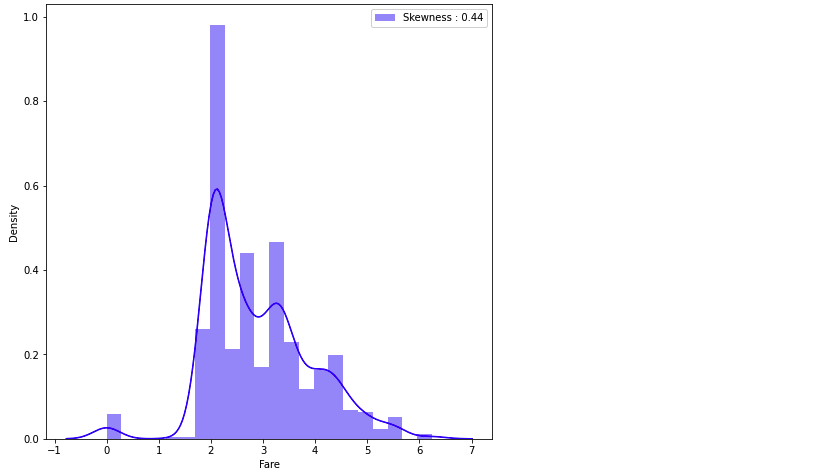

df_test['Fare'] = df_test['Fare'].map(lambda i: np.log(i) if i > 0 else 0)

fig, ax = plt.subplots(1,1,figsize=(8,8))

g = sns.distplot(df_train['Fare'], color='b', label='Skewness : {:.2f}'.format(

df_train['Fare'].skew()),ax=ax)

g=g.legend(loc='best')

- Taking the log removes much of the asymmetry

- Manipulating features like this, or adding new ones, to improve model performance is called feature engineering
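The effect of the log transform on skewness can be seen on a handful of values. The sketch below uses a made-up series spanning several orders of magnitude; `safe_log` is a hypothetical named version of the lambda used for Fare:

```python
import numpy as np
import pandas as pd

def safe_log(value):
    """Natural log for positive values; zero (or negative) values map to 0."""
    return np.log(value) if value > 0 else 0

# Made-up fares: a few small values and one very large one (right-skewed).
raw = pd.Series([1.0, 10.0, 100.0, 1000.0, 10000.0])
logged = raw.map(safe_log)

print('Skewness before: {:.2f}'.format(raw.skew()))
print('Skewness after : {:.2f}'.format(logged.skew()))
```

The guard for non-positive values matters because `np.log(0)` is negative infinity, and the notebook's lambda maps such fares to 0 instead.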

3. Feature engineering

- First, fill the null data in the dataset

- Filling nulls with random values would hurt the model → refer to the statistics of the feature that contains the nulls, or apply another idea

- How the null data is filled can make or break the model's performance

3.1 Fill Null

3.1.1 Fill Null in Age using title

- Age에는 177개의 null data가 존재. 이를 채우기 위해 title + statistics 사용

- Ms, Mrr, Mrs와 같은 title을 사용하여 유추

- pandas series의 str method(data→string), extract method(정규표현식 사용)를 이용하여 유추

df_train['Initial'] = df_train.Name.str.extract('([A-Za-z]+\.')

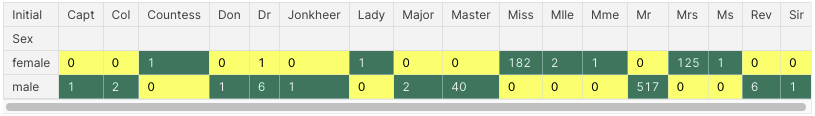

df_test['Initial'] = df_test.Name.str.extract('([A-Za-z]+\.')- pandas의 crosstab을 이용하여 추출한 Initial과 Sex와의 count 확인

pd.crosstab(df_train['Initial'],df_train['Sex']).T.style.background_gradient(cmap='summer_r')
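The title extraction can be checked on a few names written in the same "Surname, Title. Given names" layout. This is a minimal sketch; the `names` series is illustrative sample input and `titles` is an assumed variable name:

```python
import pandas as pd

# Sample names in the "Surname, Title. Given names" layout of the Name column.
names = pd.Series([
    'Braund, Mr. Owen Harris',
    'Cumings, Mrs. John Bradley',
    'Heikkinen, Miss. Laina',
    'Palsson, Master. Gosta Leonard',
])

# The capture group grabs the run of letters that is immediately followed by
# a literal dot; expand=False returns a Series rather than a DataFrame.
titles = names.str.extract(r'([A-Za-z]+)\.', expand=False)
print(titles.tolist())
```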

df_train['Initial'].replace(['Mlle','Mme','Ms','Dr','Major','Lady',

'Countess','Jonkheer','Col','Rev','Capt','Sir','Don','Dona'],[

'Miss','Miss','Miss','Mr','Mr','Mrs','Mrs','Other','Other','Other',

'Mr','Mr','Mr','Mr'],inplace=True)

df_test['Initial'].replace(['Mlle','Mme','Ms','Dr','Major','Lady',

'Countess','Jonkheer','Col','Rev','Capt','Sir','Don','Dona'],[

'Miss','Miss','Miss','Mr','Mr','Mrs','Mrs','Other','Other','Other',

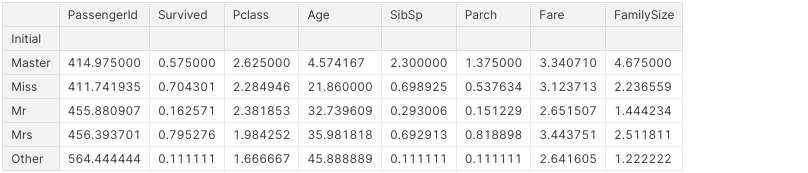

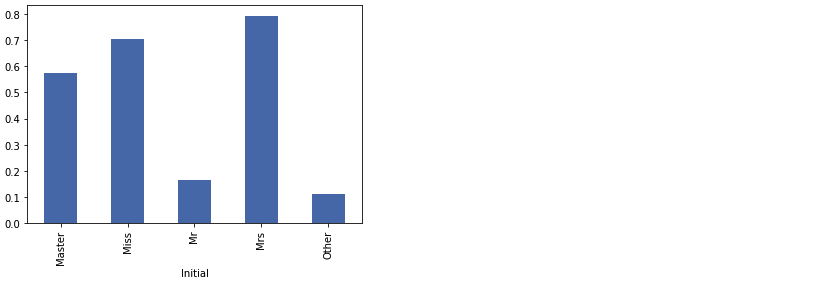

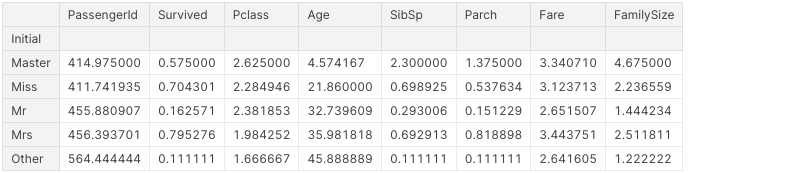

'Mr','Mr','Mr','Mr'],inplace=True)df_train.groupby('Initial').mean()

df_train.groupby('Initial')['Survived'].mean().plot.bar()

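- There are several ways to fill null data, such as using statistics, or training a separate machine learning model on the rows without nulls and predicting the missing values. This project uses statistics

df_train.groupby('Initial').mean(numeric_only=True)

- Fill the null values with the mean Age of each title

- When working with a pandas dataframe, indexing with a boolean array is convenient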
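As a sketch of the boolean-indexing idea, the toy example below first selects rows with a combined mask, then fills each missing age with the mean of its title group. Note that the `groupby(...).transform('mean')` step is a swapped-in variant that derives the means from the data instead of hard-coding them; the `toy` frame and its values are made up:

```python
import numpy as np
import pandas as pd

# Made-up rows standing in for df_train[['Initial', 'Age']].
toy = pd.DataFrame({
    'Initial': ['Mr', 'Mr', 'Mr', 'Miss', 'Miss', 'Master', 'Master'],
    'Age':     [30.0, 36.0, np.nan, 20.0, np.nan, 4.0, np.nan],
})

# Boolean mask: rows where Age is missing AND the title is 'Mr'.
mask = toy['Age'].isnull() & (toy['Initial'] == 'Mr')
print(toy.loc[mask])

# Variant: compute each title's mean age (NaN is skipped) and broadcast it
# back to every row, then use it only where Age is missing.
group_mean = toy.groupby('Initial')['Age'].transform('mean')
toy['Age'] = toy['Age'].fillna(group_mean)
print(toy)
```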

df_train.loc[(df_train.Age.isnull())&(df_train.Initial=='Mr'),'Age'] = 33

df_train.loc[(df_train.Age.isnull())&(df_train.Initial=='Miss'),'Age'] = 22

df_train.loc[(df_train.Age.isnull())&(df_train.Initial=='Master'),'Age'] = 5

df_train.loc[(df_train.Age.isnull())&(df_train.Initial=='Mrs'),'Age'] = 36

df_train.loc[(df_train.Age.isnull())&(df_train.Initial=='Other'),'Age'] = 46

3.1.2 Fill Null in Embarked

print('Embarked has ',sum(df_train['Embarked'].isnull()),'Null values')

# Embarked has 2 Null values

- Embarked has only 2 null values, and most passengers boarded at S, so simply fill them with S

- The dataframe fillna method makes this easy. Assign the result back to the column (or pass inplace=True on older pandas) so the fill is actually applied to df_train

df_train['Embarked'] = df_train['Embarked'].fillna('S')

3.2 Change Age (continuous to categorical)

- Age is currently a continuous feature. The model can be built with it as it is, but it can also be split into a few groups and turned into a category. Converting continuous to categorical can lose information, so be careful

- This can be done directly with loc, the dataframe indexing method, or by passing a function to apply
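A third option, not used in the notebook, is `pd.cut`, which expresses the same ten-year bins in one call. The sketch below is an assumption-labeled alternative on made-up ages (`bins` and `age_cat` are illustrative names):

```python
import numpy as np
import pandas as pd

# Made-up ages, including values that sit exactly on bin edges.
ages = pd.Series([5, 10, 19.9, 35, 69, 70, 80])

# Edges for [..,10), [10,20), ..., [60,70), [70,..); right=False makes each
# bin closed on the left, matching the "10 <= Age < 20" style conditions.
bins = [-np.inf, 10, 20, 30, 40, 50, 60, 70, np.inf]

# labels=False returns the integer bin index (0 to 7) instead of intervals.
age_cat = pd.cut(ages, bins=bins, right=False, labels=False)
print(age_cat.tolist())
```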

# Using loc, the dataframe indexing method

df_train['Age_cat'] = 0

df_train.loc[df_train['Age'] < 10, 'Age_cat'] = 0

df_train.loc[(10 <= df_train['Age']) & (df_train['Age'] < 20),'Age_cat'] = 1

df_train.loc[(20 <= df_train['Age']) & (df_train['Age'] < 30),'Age_cat'] = 2

df_train.loc[(30 <= df_train['Age']) & (df_train['Age'] < 40),'Age_cat'] = 3

df_train.loc[(40 <= df_train['Age']) & (df_train['Age'] < 50),'Age_cat'] = 4

df_train.loc[(50 <= df_train['Age']) & (df_train['Age'] < 60),'Age_cat'] = 5

df_train.loc[(60 <= df_train['Age']) & (df_train['Age'] < 70),'Age_cat'] = 6

df_train.loc[70 <= df_train['Age'],'Age_cat'] = 7

df_test['Age_cat'] = 0

df_test.loc[df_test['Age'] < 10, 'Age_cat'] = 0

df_test.loc[(10 <= df_test['Age']) & (df_test['Age'] < 20),'Age_cat'] = 1

df_test.loc[(20 <= df_test['Age']) & (df_test['Age'] < 30),'Age_cat'] = 2

df_test.loc[(30 <= df_test['Age']) & (df_test['Age'] < 40),'Age_cat'] = 3

df_test.loc[(40 <= df_test['Age']) & (df_test['Age'] < 50),'Age_cat'] = 4

df_test.loc[(50 <= df_test['Age']) & (df_test['Age'] < 60),'Age_cat'] = 5

df_test.loc[(60 <= df_test['Age']) & (df_test['Age'] < 70),'Age_cat'] = 6

df_test.loc[70 <= df_test['Age'],'Age_cat'] = 7

# Using apply

def category_age(x):
    if x < 10:
        return 0
    elif x < 20:
        return 1
    elif x < 30:
        return 2
    elif x < 40:
        return 3
    elif x < 50:
        return 4
    elif x < 60:
        return 5
    elif x < 70:
        return 6
    else:
        return 7

df_train['Age_cat_2'] = df_train['Age'].apply(category_age)

- Check that both approaches give the same result

- Compare the two Series element-wise and call all() → it returns False if even one value differs

(df_train['Age_cat'] == df_train['Age_cat_2']).all()

# True

- Drop the duplicate Age_cat_2 column and the Age column

df_train.drop(['Age','Age_cat_2'], axis=1, inplace=True)

df_test.drop(['Age'], axis=1, inplace=True)

3.3 Change Initial, Embarked and Sex (string to numerical)

- Initial currently has 5 values: Mr, Mrs, Miss, Master, and Other. Before categorical data like this can be fed to a model, it first has to be converted to numbers the computer can work with

- The map method makes this simple
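One detail worth knowing about `map` with a dictionary: any value missing from the dictionary becomes NaN. The sketch below shows this on a made-up series (the extra 'Dr' entry is an illustrative assumption, standing in for a title that was not merged earlier):

```python
import pandas as pd

# Made-up titles; 'Dr' is deliberately absent from the mapping below.
initial = pd.Series(['Mr', 'Miss', 'Master', 'Mrs', 'Other', 'Dr'])

codes = initial.map({'Master': 0, 'Miss': 1, 'Mr': 2, 'Mrs': 3, 'Other': 4})

# Values without a dictionary entry are mapped to NaN, so checking for
# nulls after map is a cheap way to catch unexpected categories.
unmapped = initial[codes.isnull()]
print(codes.tolist())
print(unmapped.tolist())
```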

df_train['Initial'] = df_train['Initial'].map({'Master':0,'Miss':1,'Mr':2,
    'Mrs':3,'Other':4})

df_test['Initial'] = df_test['Initial'].map({'Master':0,'Miss':1,'Mr':2,
    'Mrs':3,'Other':4})

- Embarked also consists of C, Q, and S, so convert it with map as well

df_train['Embarked'].unique()

# array(['S','C','Q'], dtype=object)

df_train['Embarked'].value_counts()

# S 646

# C 168

# Q 77

# Name: Embarked, dtype: int64

df_train['Embarked'] = df_train['Embarked'].map({'C':0,'Q':1,'S':2})

df_test['Embarked'] = df_test['Embarked'].map({'C':0,'Q':1,'S':2})

- Check that the nulls are gone. Selecting only the Embarked column gives a pandas Series, so isnull() returns a boolean for each value saying whether it is null. Calling any() on that returns True if there is even a single True (that is, even a single null).

df_train['Embarked'].isnull().any()

# False

df_train['Sex'] = df_train['Sex'].map({'female':0,'male':1})

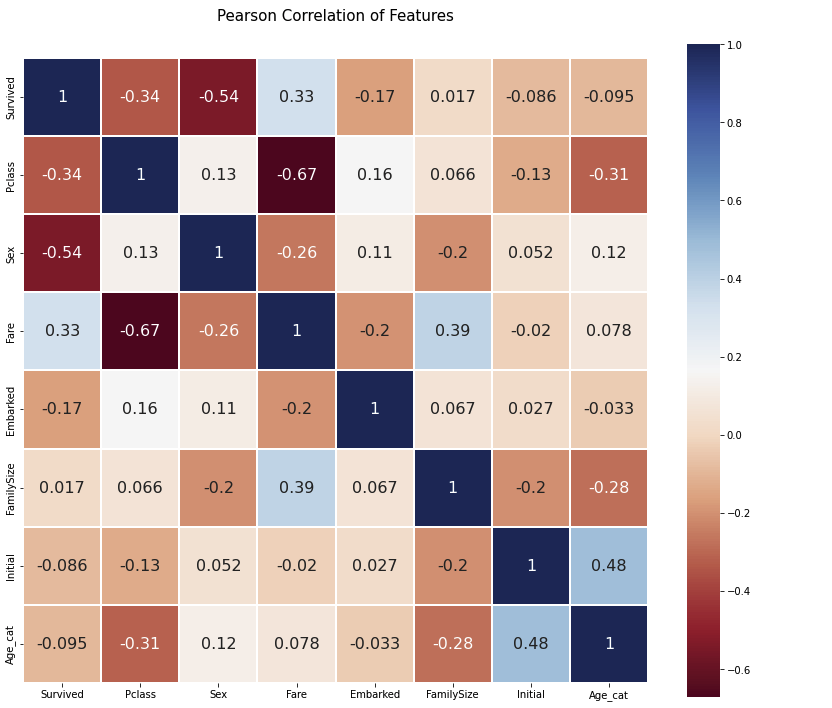

df_test['Sex'] = df_test['Sex'].map({'female':0,'male':1})

- Check the correlation between features. The Pearson correlation between two variables takes a value in [-1, 1]

heatmap_data = df_train[['Survived','Pclass','Sex','Fare','Embarked','FamilySize',

'Initial','Age_cat']]

colormap = plt.cm.RdBu

plt.figure(figsize=(14,12))

plt.title('Pearson Correlation of Features',y=1.05,size=15)

sns.heatmap(heatmap_data.astype(float).corr(),linewidths=0.1,vmax=1.0,square=True,

cmap=colormap,linecolor='white',annot=True,annot_kws={'size':16})

del heatmap_data

- As seen in the EDA, Sex and Pclass are related to Survived

- No pair of features is strongly correlated with each other ⇒ this suggests that none of the features is redundant when training the model
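For reference, the Pearson coefficient shown in the heatmap can be reproduced by hand. The sketch below computes it from the centered values on a made-up two-column frame and compares it with pandas (`toy`, `manual_r`, and `pandas_r` are illustrative names):

```python
import numpy as np
import pandas as pd

# Made-up rows standing in for two columns of heatmap_data.
toy = pd.DataFrame({
    'Pclass':   [1, 1, 2, 2, 3, 3, 3, 1],
    'Survived': [1, 1, 1, 0, 0, 0, 1, 0],
})

# Pearson r = sum((x - mean_x) * (y - mean_y))
#             / sqrt(sum((x - mean_x)^2) * sum((y - mean_y)^2))
x = toy['Pclass'] - toy['Pclass'].mean()
y = toy['Survived'] - toy['Survived'].mean()
manual_r = (x * y).sum() / np.sqrt((x ** 2).sum() * (y ** 2).sum())

# Series.corr uses the Pearson method by default.
pandas_r = toy['Pclass'].corr(toy['Survived'])
print(manual_r, pandas_r)
```

A negative value here means that a larger Pclass number (a lower class) goes with a lower survival label in the toy data.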

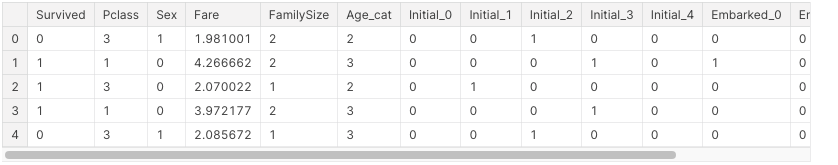

3.4 One-hot encoding on Initial and Embarked

- The numerically encoded categories could be fed in as they are, but one-hot encode them to improve model performance

- Numeric encoding here simply means mapping Master==0, Miss==1, ...

- Use pandas get_dummies for this

- Use Initial as the prefix so the new columns are easy to tell apart

df_train = pd.get_dummies(df_train, columns=['Initial'], prefix='Initial')

df_test = pd.get_dummies(df_test, columns=['Initial'], prefix='Initial')

df_train = pd.get_dummies(df_train, columns=['Embarked'],prefix='Embarked')

df_test = pd.get_dummies(df_test, columns=['Embarked'],prefix='Embarked')

- The same one-hot encoding can also be done with sklearn's LabelEncoder + OneHotEncoder

- When there are many categories, one-hot encoding creates too many columns, so use another method
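What `get_dummies` produces is easiest to see on a tiny frame. This is a minimal sketch with made-up rows (`toy` and `encoded` are illustrative names); the category is kept as a string here so the generated column names are easy to read:

```python
import pandas as pd

# Made-up rows; Embarked is kept as letters for readability.
toy = pd.DataFrame({
    'Fare': [7.25, 71.28, 8.05],
    'Embarked': ['S', 'C', 'Q'],
})

# The Embarked column is replaced by one indicator column per category,
# named "<prefix>_<category>"; other columns are left untouched.
encoded = pd.get_dummies(toy, columns=['Embarked'], prefix='Embarked')
print(encoded)
```

Each row has exactly one indicator set, which is what distinguishes one-hot encoding from the single integer code used earlier.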

3.5 Drop columns

- Remove the columns that are no longer needed

df_train.drop(['PassengerId','Name','SibSp','Parch','Ticket','Cabin'],

axis=1,inplace=True)

df_test.drop(['PassengerId','Name','SibSp','Parch','Ticket','Cabin'],

axis=1,inplace=True)

df_train.head()

4. Building machine learning model and prediction using the trained model

#importing all required ML packages

from sklearn.ensemble import RandomForestClassifier

from sklearn import metrics

from sklearn.model_selection import train_test_split

4.1 Preparation - Split dataset into train, valid, test set

- Separate the data used for training from the target label (Survived)

X_train = df_train.drop('Survived',axis=1).values

target_label = df_train['Survived'].values

X_test = df_test.values

- Usually only train and test are mentioned, but to build a good model in practice, hold out a separate valid set to evaluate it

X_tr, X_vld, y_tr, y_vld = train_test_split(X_train, target_label, test_size=0.3,
    random_state=2018)

- Use a random forest model

- It is a decision-tree-based model that ensembles many decision trees
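The split, fit, and validate flow can be tried end to end without the Titanic files. The sketch below uses synthetic data where the label depends on one feature; the data, `n_estimators=50`, and the fixed `random_state` on the model are assumptions for reproducibility, not settings from the notebook:

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic data: 200 rows, 4 features, label decided by the first feature.
rng = np.random.RandomState(0)
X = rng.rand(200, 4)
y = (X[:, 0] > 0.5).astype(int)

# Hold out 30% of the labelled rows as a validation set.
X_tr, X_vld, y_tr, y_vld = train_test_split(X, y, test_size=0.3, random_state=2018)

# Fit on the training part only, then score on the held-out part.
model = RandomForestClassifier(n_estimators=50, random_state=0)
model.fit(X_tr, y_tr)
val_acc = accuracy_score(y_vld, model.predict(X_vld))
print('Validation accuracy: {:.2f}'.format(val_acc))
```

Fixing `random_state` on the classifier makes repeated runs comparable; without it the validation score can vary slightly from run to run.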

4.2 Model generation and prediction

model = RandomForestClassifier()

model.fit(X_tr, y_tr)

prediction = model.predict(X_vld)print('총 {}명 중 {:.2f}% 정확도로 생존을 맞춤'.format(y_vld.shape[0],

100 * metrics.accuracy_score(prediction,y_vld)))

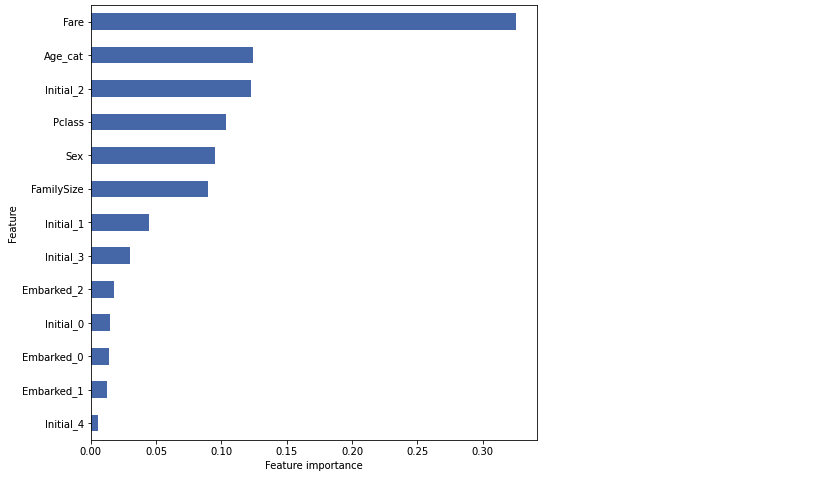

# 총 268명 중 81.72% 정확도로 생존을 맞춤4.3 Feature importance

- 학습된 모델은 feature importance를 가지게 되는데, 이것을 확인하여 지금 만든 모델이 어떤 feature에 영향을 많이 받았는지 확인 가능

from pandas import Series

feature_importance = model.feature_importances_

Series_feat_imp = Series(feature_importance, index=df_test.columns)

plt.figure(figsize=(8,8))

Series_feat_imp.sort_values(ascending=True).plot.barh()

plt.xlabel('Feature importance')

plt.ylabel('Feature')

plt.show()
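To get a feel for how to read these values, the sketch below fits a forest on synthetic data where only one column carries signal. The column names (`signal`, `noise_a`, `noise_b`) and the data are made up for illustration; the point is that the importances sum to 1 and concentrate on the informative feature:

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

# Synthetic data: the label depends only on the 'signal' column.
rng = np.random.RandomState(0)
features = pd.DataFrame(rng.rand(300, 3), columns=['signal', 'noise_a', 'noise_b'])
label = (features['signal'] > 0.5).astype(int)

model = RandomForestClassifier(n_estimators=50, random_state=0)
model.fit(features.values, label.values)

# feature_importances_ follows the column order of the training matrix,
# so the training columns serve as the index.
importance = pd.Series(model.feature_importances_, index=features.columns)
print(importance.sort_values(ascending=False))
```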