[pytorch] TensorBoard visualization


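TensorBoard

A visualization toolkit created by the TensorFlow team to make complex neural networks easier to understand. It can track metrics such as loss and accuracy, visualize the model graph, project embeddings into a lower-dimensional space, and more.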

1. CNN

(1) Loading and normalizing the data

```python
import matplotlib.pyplot as plt
import numpy as np

import torch
import torchvision
import torchvision.transforms as transforms

import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

# Convert images to tensors, then normalize the single channel from [0, 1] to [-1, 1]
transform = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize((0.5,), (0.5,))])

# Datasets
trainset = torchvision.datasets.FashionMNIST('./data',
    download=True,
    train=True,
    transform=transform)
testset = torchvision.datasets.FashionMNIST('./data',
    download=True,
    train=False,
    transform=transform)

# Data loaders
trainloader = torch.utils.data.DataLoader(trainset, batch_size=4,
                                          shuffle=True, num_workers=2)
testloader = torch.utils.data.DataLoader(testset, batch_size=4,
                                         shuffle=False, num_workers=2)

# Class names, indexed by label
classes = ('T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
           'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle Boot')
```

(2) Helper function for displaying images

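```python
def matplotlib_imshow(img, one_channel=False):
    if one_channel:
        img = img.mean(dim=0)   # collapse the channel dimension into one gray image
    img = img / 2 + 0.5         # unnormalize: [-1, 1] -> [0, 1]
    npimg = img.numpy()
    if one_channel:
        plt.imshow(npimg, cmap="Greys")
    else:
        plt.imshow(np.transpose(npimg, (1, 2, 0)))  # (C, H, W) -> (H, W, C) for matplotlib
```

(3) Defining the convolutional neural network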

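```python
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 6, 5)        # 1 input channel (grayscale), 6 output channels, 5x5 kernel
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 4 * 4, 120)  # 16 feature maps of 4x4 after two conv + pool stages
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)           # one output per class

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 4 * 4)  # flatten for the fully connected layers
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)             # raw logits; CrossEntropyLoss applies log-softmax itself
        return x


net = Net()
```

(4) Defining the loss function and optimizer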

```python
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)
```

2. Setting up TensorBoard

(1) TensorBoard에 정보를 제공하는 SummaryWriter

from torch.utils.tensorboard import SummaryWriter

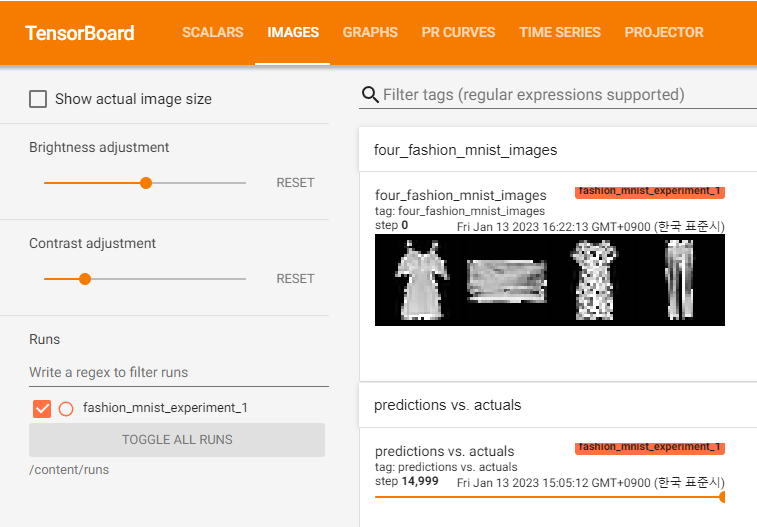

writer = SummaryWriter('runs/fashion_mnist_experiment_1')(2) TensorBoard grid

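```python
# Take one random batch (4 images) from the training loader
images, labels = next(iter(trainloader))

# Tile the batch into a single grid image
img_grid = torchvision.utils.make_grid(images)

# Show the grid inline with matplotlib
matplotlib_imshow(img_grid, one_channel=True)

# Write the grid to TensorBoard
writer.add_image('four_fashion_mnist_images', img_grid)
```

(3) Running TensorBoard in Colab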

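When passing --logdir, the path inside the braces must be quoted. IPython evaluates {...} in a magic command as a Python expression, so the path has to be a string literal.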

```
%load_ext tensorboard
%tensorboard --logdir {'/content/runs'}
```

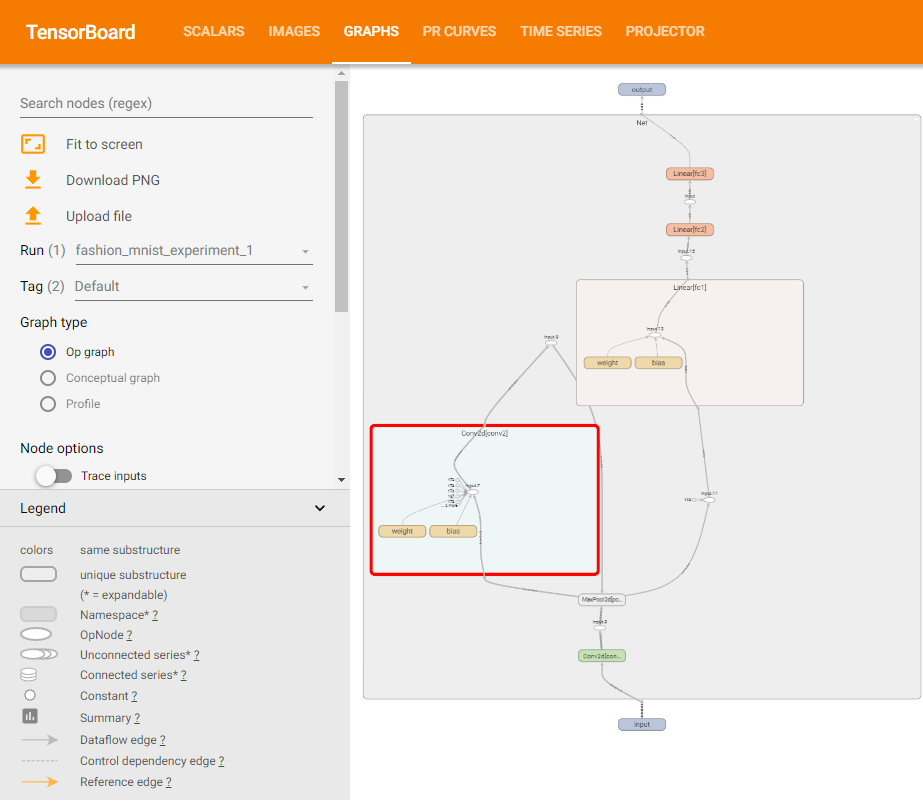
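The grid written with `add_image` is what appears in the Images tab. To check what that tensor contains, the sketch below repeats in plain Python two pieces of arithmetic:

- the shape arithmetic of `torchvision.utils.make_grid` with its defaults (`nrow=8`, `padding=2`, single-channel batches repeated to 3 channels);
- the `Normalize((0.5,), (0.5,))` mapping that `matplotlib_imshow` reverses.

The helpers `grid_shape`, `normalize`, and `unnormalize` are hypothetical and exist only for this illustration.

```python
import math


def grid_shape(n_images, channels, height, width, nrow=8, padding=2):
    """Shape (C, H, W) of the grid make_grid would build with these defaults (sketch)."""
    # make_grid repeats a single-channel batch to 3 channels
    out_channels = 3 if channels == 1 else channels
    if n_images == 1:
        # a single image is returned as-is, without padding
        return (out_channels, height, width)
    cols = min(nrow, n_images)           # images per row
    rows = math.ceil(n_images / cols)    # number of rows
    cell_h, cell_w = height + padding, width + padding
    return (out_channels, cell_h * rows + padding, cell_w * cols + padding)


def normalize(x, mean=0.5, std=0.5):
    """What transforms.Normalize((0.5,), (0.5,)) does to one pixel value in [0, 1]."""
    return (x - mean) / std


def unnormalize(x):
    """The inverse used in matplotlib_imshow: img / 2 + 0.5."""
    return x / 2 + 0.5


if __name__ == "__main__":
    # One training batch: 4 grayscale 28x28 images
    print(grid_shape(4, 1, 28, 28))        # (3, 32, 122)
    print(normalize(0.0), normalize(1.0))  # -1.0 1.0
    print(unnormalize(normalize(0.25)))    # 0.25
```

The grid's three channels are copies of one another. Averaging over dim 0 in `matplotlib_imshow(..., one_channel=True)` therefore recovers the single gray image.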

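(4) Adding the model graph to TensorBoard

```python
# Trace the network with the batch of images from above and log its graph
writer.add_graph(net, images)
writer.close()
```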

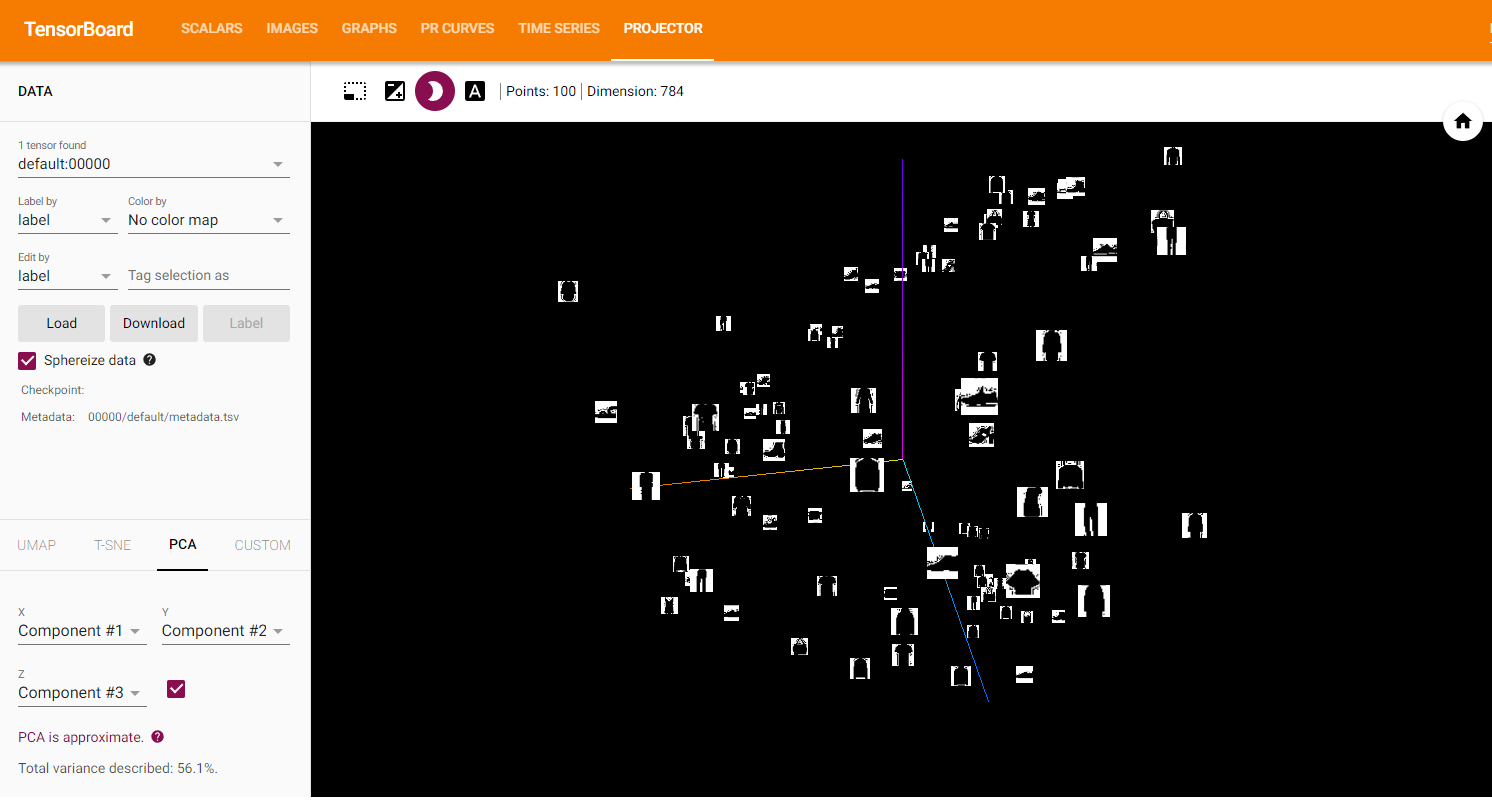
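`add_graph` traces `net` with the batch `images` (shape 4×1×28×28) and shows the layers in the Graphs tab. The spatial sizes along the way can also be worked out by hand, which explains the `16 * 4 * 4` in `Net`.

The sketch below uses the usual output-size formula `(size + 2 * padding - kernel) // stride + 1` for unpadded convolutions and 2×2 max pooling. The helpers `conv_out` and `trace_net_shapes` are hypothetical.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square Conv2d / MaxPool2d layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def trace_net_shapes(size=28):
    """Follow one square image through Net's layers; returns (stage, (C, H, W)) pairs."""
    shapes = [("input", (1, size, size))]
    size = conv_out(size, 5)              # conv1: 5x5 kernel, 6 channels
    shapes.append(("conv1", (6, size, size)))
    size = conv_out(size, 2, stride=2)    # 2x2 max pool
    shapes.append(("pool", (6, size, size)))
    size = conv_out(size, 5)              # conv2: 5x5 kernel, 16 channels
    shapes.append(("conv2", (16, size, size)))
    size = conv_out(size, 2, stride=2)    # 2x2 max pool
    shapes.append(("pool", (16, size, size)))
    return shapes


if __name__ == "__main__":
    for name, shape in trace_net_shapes():
        print(name, shape)
    # Stages: (1, 28, 28) -> (6, 24, 24) -> (6, 12, 12) -> (16, 8, 8) -> (16, 4, 4)
    # so the flattened vector fed to fc1 has 16 * 4 * 4 = 256 elements
```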

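(5) Adding a projector (embeddings) to TensorBoard

Workaround for an error: when TensorFlow is installed in the same environment (as on Colab), add_embedding can fail while going through tf.io.gfile. Replacing tf.io.gfile with TensorBoard's stub implementation avoids this.

```python
import tensorflow as tf
import tensorboard as tb

tf.io.gfile = tb.compat.tensorflow_stub.io.gfile
```

The add_embedding method lets you visualize a lower-dimensional representation of high-dimensional data.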

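```python
def select_n_random(data, labels, n=100):
    '''Select n random datapoints and their corresponding labels.'''
    assert len(data) == len(labels)

    perm = torch.randperm(len(data))  # one shared permutation keeps images and labels aligned
    return data[perm][:n], labels[perm][:n]

# Pick 100 random training images (raw uint8 tensor of shape (100, 28, 28)) and their labels
images, labels = select_n_random(trainset.data, trainset.targets)

# Class name for each image, shown as metadata in the projector
class_labels = [classes[lab] for lab in labels]

# Flatten each 28x28 image into a 784-dimensional feature vector
features = images.view(-1, 28 * 28)
writer.add_embedding(features,
                     metadata=class_labels,
                     label_img=images.unsqueeze(1))  # (N, 1, 28, 28) thumbnails
writer.close()
```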

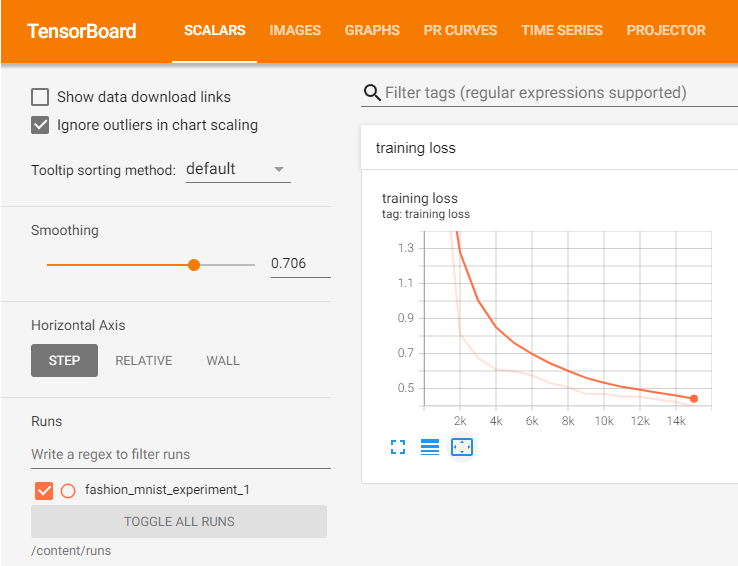
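`select_n_random` works because one permutation is applied to both the images and the labels, so every image keeps its label. Each 28×28 image is then flattened into a 784-dimensional row for the projector.

The same idea is sketched below in plain Python, with toy nested lists standing in for tensors. The names `select_n_random_lists` and `flatten` are hypothetical, and `random.Random.shuffle` plays the role of `torch.randperm`.

```python
import random


def select_n_random_lists(data, labels, n=100, seed=None):
    """Pick n random (item, label) pairs using one shared permutation of indices."""
    assert len(data) == len(labels)
    rng = random.Random(seed)
    perm = list(range(len(data)))
    rng.shuffle(perm)                   # stands in for torch.randperm(len(data))
    chosen = perm[:n]
    return [data[i] for i in chosen], [labels[i] for i in chosen]


def flatten(image):
    """Turn an H x W nested list into one row, like images.view(-1, 28 * 28) does per image."""
    return [pixel for row in image for pixel in row]


if __name__ == "__main__":
    # Toy 2x2 "images" whose pixel values equal their own label
    labels = [0, 1, 2, 3, 4]
    data = [[[lab, lab], [lab, lab]] for lab in labels]
    imgs, labs = select_n_random_lists(data, labels, n=3, seed=0)
    for img, lab in zip(imgs, labs):
        assert flatten(img) == [lab] * 4  # every image still matches its label
    print(len(imgs), len(flatten(imgs[0])))  # 3 4
```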

3. Tracking the training loss with TensorBoard

def images_to_probs(net, images):

output = net(images)

_, preds_tensor = torch.max(output, 1)

preds = np.squeeze(preds_tensor.numpy())

return preds, [F.softmax(el, dim=0)[i].item() for i, el in zip(preds, output)]

def plot_classes_preds(net, images, labels):

preds, probs = images_to_probs(net, images)

fig = plt.figure(figsize=(12, 48))

for idx in np.arange(4):

ax = fig.add_subplot(1, 4, idx+1, xticks=[], yticks=[])

matplotlib_imshow(images[idx], one_channel=True)

ax.set_title("{0}, {1:.1f}%\n(label: {2})".format(

classes[preds[idx]],

probs[idx] * 100.0,

classes[labels[idx]]),

color=("green" if preds[idx]==labels[idx].item() else "red"))

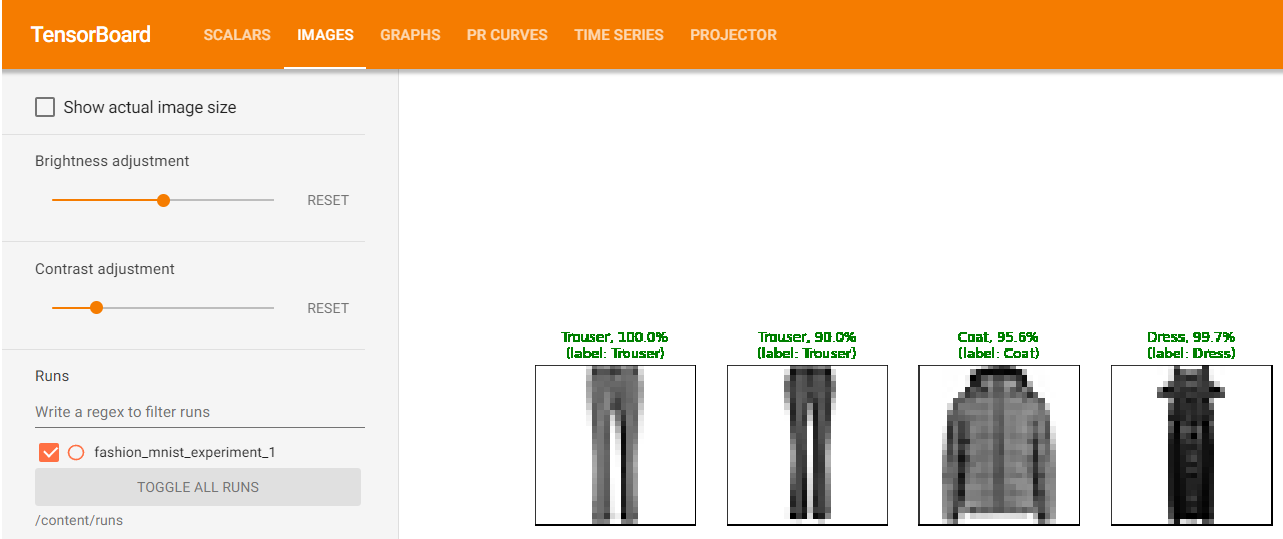

return fig학습을 진행하면서 배치에 포함된 4개의 이미지에 대한 모델의 예측 결과와 정답을 비교하여 보여주는 이미지를 생성
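For each image, `images_to_probs` reports the softmax probability of the class the model picked, i.e. the argmax of the logits. For a single logit vector, that computation looks like the plain-Python sketch below. `softmax` and `predicted_class_prob` are hypothetical helpers, not part of the tutorial, and the logits are made up.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predicted_class_prob(logits):
    """Return (argmax index, its softmax probability), like one entry of images_to_probs."""
    probs = softmax(logits)
    pred = max(range(len(logits)), key=lambda i: logits[i])
    return pred, probs[pred]


if __name__ == "__main__":
    # Made-up logits for the 10 Fashion-MNIST classes
    logits = [0.1, 0.0, 0.2, 0.0, 0.3, 0.0, 0.1, 2.5, 0.0, 0.4]
    pred, p = predicted_class_prob(logits)
    print(pred, "{:.1f}%".format(p * 100.0))  # pred is index 7 ('Sneaker' in classes)
```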

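```python
running_loss = 0.0
for epoch in range(1):  # number of passes over the dataset (just one here)

    for i, data in enumerate(trainloader, 0):

        # data is a list of [inputs, labels]
        inputs, labels = data

        # zero the parameter gradients
        optimizer.zero_grad()

        # forward + backward + optimize
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if i % 1000 == 999:  # every 1000 mini-batches...

            # ...log the average loss over those batches
            writer.add_scalar('training loss',
                              running_loss / 1000,
                              epoch * len(trainloader) + i)

            # ...log a figure of the model's predictions on the current (shuffled) mini-batch
            writer.add_figure('predictions vs. actuals',
                              plot_classes_preds(net, inputs, labels),
                              global_step=epoch * len(trainloader) + i)
            running_loss = 0.0

print('Finished Training')
```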

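The “Scalars” tab shows the training loss logged with add_scalar. The “Images” tab shows the model's predictions on random batches containing items such as shirts, sneakers, and coats.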
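Both `add_scalar` and `add_figure` use `epoch * len(trainloader) + i` as the step on the x-axis. With 60,000 training images and `batch_size=4`, `len(trainloader)` is 15,000. Logging whenever `i % 1000 == 999` therefore gives 15 points per epoch.

The sketch below lists which steps get logged. `logged_steps` is a hypothetical helper that assumes the loader keeps its last partial batch (the `DataLoader` default `drop_last=False`).

```python
def logged_steps(num_samples, batch_size, num_epochs=1, every=1000):
    """Global steps at which the training loop above would call add_scalar / add_figure."""
    batches_per_epoch = -(-num_samples // batch_size)  # ceiling division (drop_last=False)
    steps = []
    for epoch in range(num_epochs):
        for i in range(batches_per_epoch):
            if i % every == every - 1:
                steps.append(epoch * batches_per_epoch + i)
    return steps


if __name__ == "__main__":
    steps = logged_steps(60000, 4)
    print(len(steps), steps[:3], steps[-1])  # 15 [999, 1999, 2999] 14999
```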

4. Evaluating the model with TensorBoard

class_probs = []

class_label = []

with torch.no_grad():

for data in testloader:

images, labels = data

output = net(images)

class_probs_batch = [F.softmax(el, dim=0) for el in output]

class_probs.append(class_probs_batch)

class_label.append(labels)

test_probs = torch.cat([torch.stack(batch) for batch in class_probs])

test_label = torch.cat(class_label)

def add_pr_curve_tensorboard(class_index, test_probs, test_label, global_step=0):

tensorboard_truth = test_label == class_index

tensorboard_probs = test_probs[:, class_index]

writer.add_pr_curve(classes[class_index],

tensorboard_truth,

tensorboard_probs,

global_step=global_step)

writer.close()

for i in range(len(classes)):

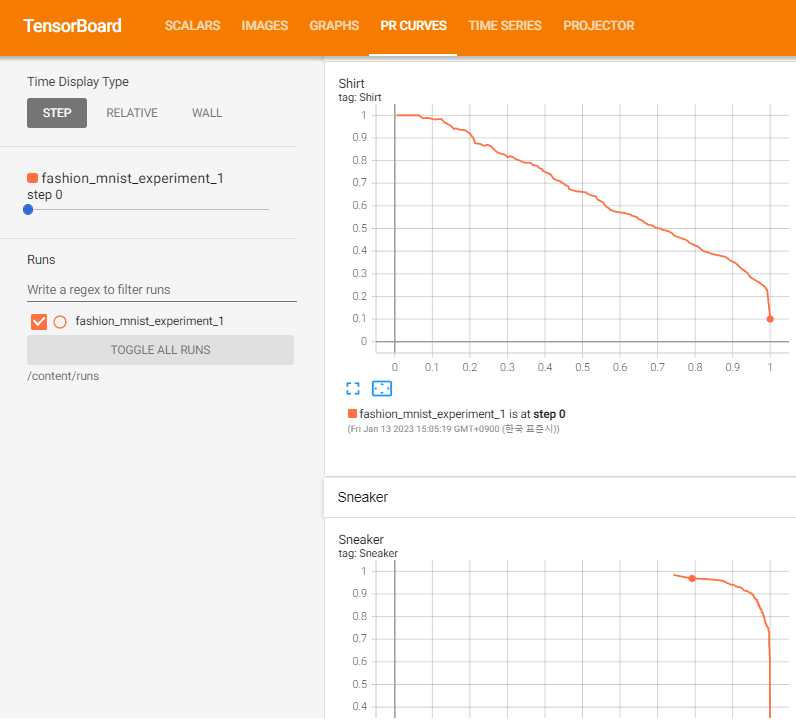

add_pr_curve_tensorboard(i, test_probs, test_preds)“PR Curves” 탭에서 각 분류별 정밀도-재현율 곡선 확인
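For each class, `add_pr_curve` receives a boolean one-vs-rest target (`test_label == class_index`) and that class's predicted probabilities. It then plots precision and recall as the decision threshold sweeps across [0, 1] (by default over `num_thresholds=127` thresholds).

The sketch below computes a single point of such a curve in plain Python. It is an illustration with made-up numbers, not TensorBoard's implementation. Returning precision 1.0 when nothing is predicted positive is a convention chosen here.

```python
def precision_recall(truth, probs, threshold):
    """Precision and recall when predicting 'positive' for prob >= threshold."""
    tp = sum(1 for t, p in zip(truth, probs) if t and p >= threshold)
    fp = sum(1 for t, p in zip(truth, probs) if not t and p >= threshold)
    fn = sum(1 for t, p in zip(truth, probs) if t and p < threshold)
    precision = tp / (tp + fp) if tp + fp else 1.0  # convention: no positive predictions -> 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


if __name__ == "__main__":
    class_index = 7
    test_label = [7, 2, 7, 9, 7, 5]                     # made-up labels
    probs_for_class = [0.9, 0.6, 0.8, 0.3, 0.4, 0.1]    # made-up P(class 7)
    truth = [lab == class_index for lab in test_label]  # like tensorboard_truth
    for thr in (0.25, 0.5, 0.75):
        print(thr, precision_recall(truth, probs_for_class, thr))
```

At a threshold of 0.25 this gives precision 0.6 and recall 1.0. At 0.75 it gives precision 1.0 and recall of about 0.67. Raising the threshold trades recall for precision, and that trade-off is what each curve in the tab shows.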

Source: https://pytorch.org/tutorials/intermediate/tensorboard_tutorial.html