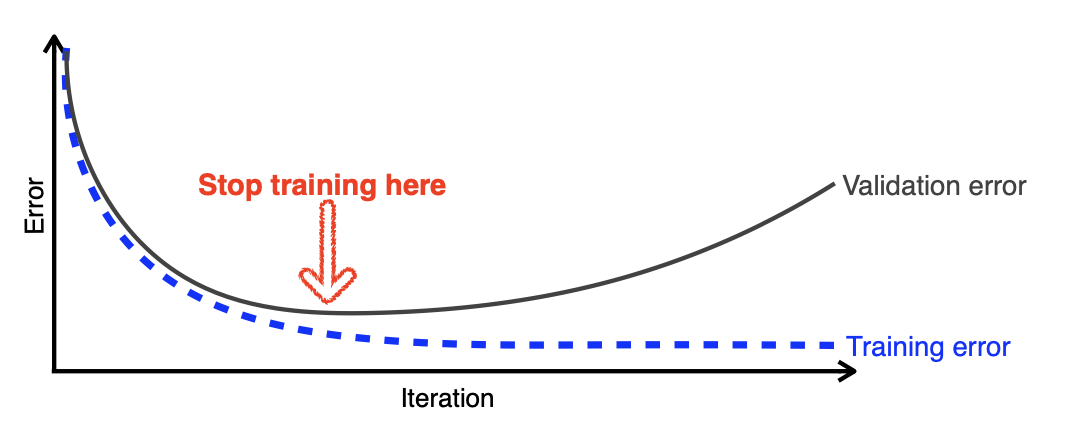

📖 Early Stopping

- Note that early stopping requires additional validation data, held out from training, to decide when to stop.
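Here is a minimal sketch of the stopping rule in Python. It assumes the validation loss has already been computed after every epoch. The `patience` and `min_delta` parameters are illustrative names, not taken from the lecture. Training stops once the validation loss has failed to improve for `patience` consecutive epochs, and the epoch with the lowest validation loss is the one whose weights you would keep.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses.

    Hypothetical helper: `patience` is how many epochs without improvement we
    tolerate, `min_delta` is the minimum decrease that counts as improvement.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best on the validation set: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                # Stop here; the weights from best_epoch would be restored.
                return best_epoch, epoch
    # Never triggered: training ran for all epochs.
    return best_epoch, len(val_losses) - 1
```

For example, `early_stopping_epoch([1.0, 0.8, 0.6, 0.55, 0.57, 0.60, 0.62, 0.70], patience=3)` returns `(3, 6)`. The validation loss stops improving after epoch 3, so training halts three epochs later.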

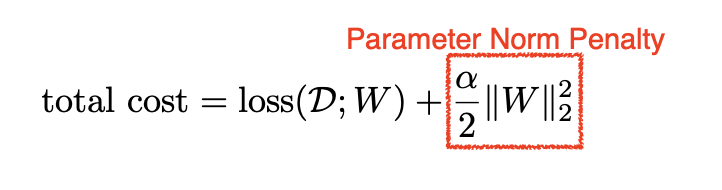

📖 Parameter Norm Penalty

- It adds a penalty on the size (norm) of the parameters to the loss, which favors smoother functions in the function space.
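A common instance is the L2 penalty (weight decay). With it, the training objective becomes total cost = loss + (α/2)·‖W‖². The sketch below assumes the weights are a flat Python list and that the gradient of the data loss is already available. `alpha` and `lr` are illustrative names. The sketch shows why the penalty shrinks the weights: its gradient is α·w, so every update also pulls each weight toward zero.

```python
def l2_penalty(weights, alpha):
    """(alpha / 2) * squared L2 norm of the weights."""
    return 0.5 * alpha * sum(w * w for w in weights)


def penalized_loss(data_loss, weights, alpha):
    """Total cost = data loss + L2 penalty."""
    return data_loss + l2_penalty(weights, alpha)


def sgd_step(weights, data_grads, alpha, lr):
    """One gradient-descent step on the penalized loss.

    The penalty contributes alpha * w to each weight's gradient,
    so every step also shrinks the weights toward zero (weight decay).
    """
    return [w - lr * (g + alpha * w) for w, g in zip(weights, data_grads)]
```

Take weights `[2.0, -1.0]` and `alpha=0.1`. The penalty is 0.25, and one step with `lr=0.5` and zero data gradient shrinks the weights to roughly `[1.9, -0.95]`.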

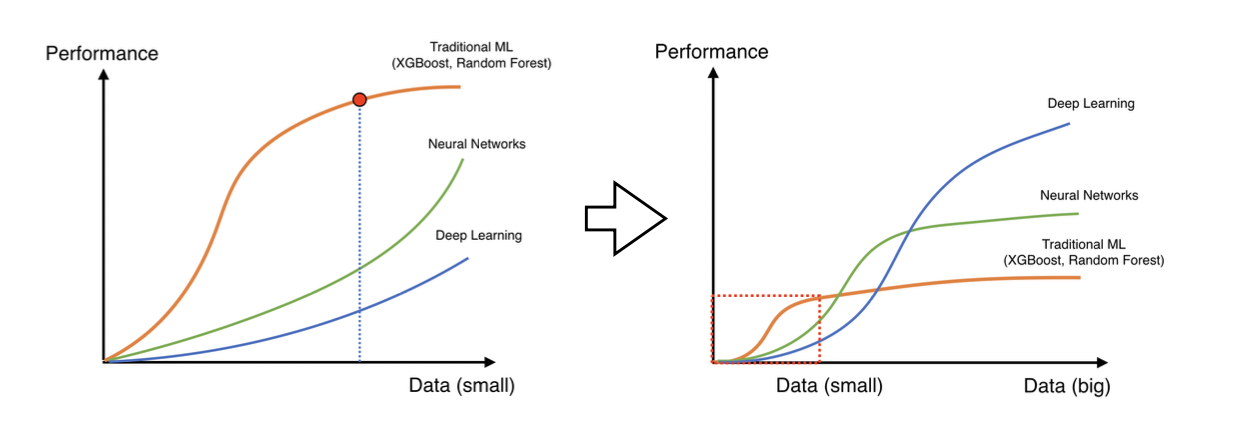

📖 Data Augmentation

- More data are always welcome.

- However, in most cases, the training data are given in advance.

- In such cases, we use data augmentation to create additional training examples from the existing ones.
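For images, one illustration applies two label-preserving transforms, a random horizontal flip and a random crop, to an image stored as a list of rows. These particular transforms and the function names are assumptions chosen for the example. Which transforms are safe depends on the task; a flip, for instance, can change the meaning of text or digits.

```python
import random


def horizontal_flip(image):
    """Mirror each row of a 2-D image (a list of rows)."""
    return [list(reversed(row)) for row in image]


def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position (assumes it fits)."""
    h, w = len(image), len(image[0])
    top = rng.randint(0, h - crop_h)    # randint is inclusive on both ends
    left = rng.randint(0, w - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]


def augment(image, label, rng, crop_h, crop_w):
    """Return a new (image, label) pair; these transforms keep the label unchanged."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    return random_crop(image, crop_h, crop_w, rng), label
```

Calling `augment` again with the same image each epoch yields a slightly different training example every time. This is how a fixed dataset is effectively enlarged.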

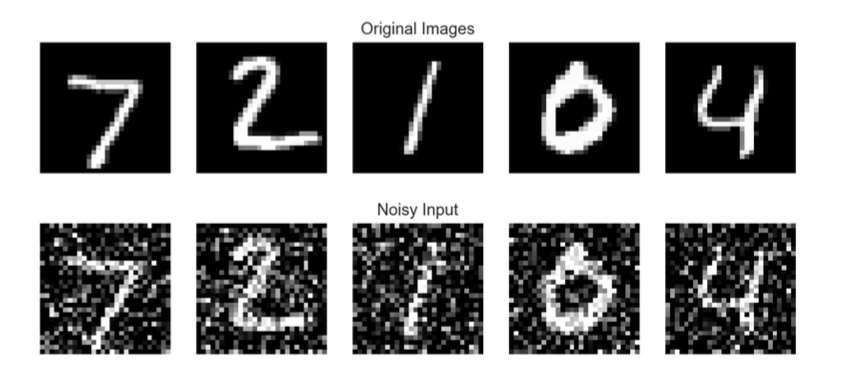

📖 Noise Robustness

- Add random noise to the inputs or weights during training.
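Here is a minimal sketch, assuming Gaussian noise with a hand-picked standard deviation. Fresh noise is sampled at every training step and added to the inputs (or, with the same function, to the weights). Evaluation uses the clean values. The function name and the `std` values are illustrative.

```python
import random


def add_gaussian_noise(values, std, rng):
    """Return a copy of `values` with independent N(0, std^2) noise added to each entry."""
    return [v + rng.gauss(0.0, std) for v in values]


rng = random.Random(0)
x = [0.5, -1.2, 3.0]   # inputs of one training example
w = [0.1, 0.2, -0.3]   # weights of a hypothetical layer
noisy_x = add_gaussian_noise(x, 0.1, rng)   # perturbed inputs for this step only
noisy_w = add_gaussian_noise(w, 0.01, rng)  # perturbed weights for this step only
```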

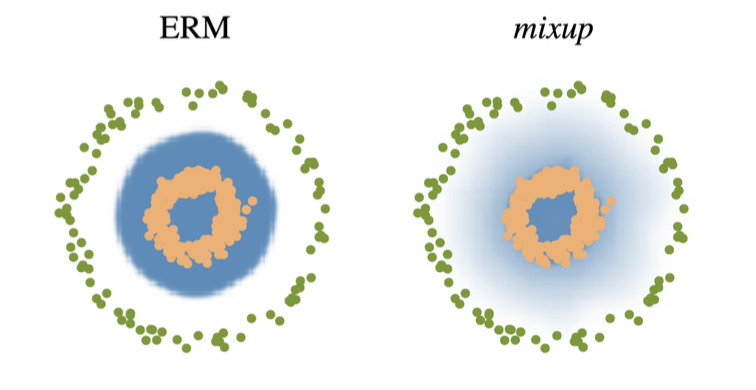

📖 Label Smoothing

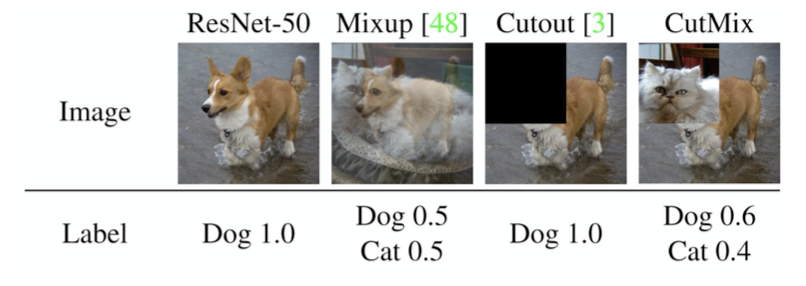

- Mix-up constructs augmented training examples by mixing both the inputs and the labels of two randomly selected training examples.

- CutMix constructs augmented training examples from two randomly selected training examples by cutting a patch from one input and pasting it onto the other, and mixing their labels into soft labels.
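Both constructions can be sketched for one-hot label vectors, with flattened inputs for Mix-up and images stored as lists of rows for CutMix. Following the usual Mix-up recipe, the mixing ratio λ is drawn from a Beta(α, α) distribution. For CutMix, the box position and size are passed in explicitly here, which is a simplification. The label weight is the fraction of pixels each image contributes. All names are illustrative.

```python
import random


def sample_lambda(alpha, rng):
    """Mixing ratio lambda ~ Beta(alpha, alpha), as in the usual Mix-up recipe."""
    return rng.betavariate(alpha, alpha)


def mixup(x1, y1, x2, y2, lam):
    """Convex combination of two inputs and of their (one-hot or soft) labels."""
    x = [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return x, y


def cutmix(img1, y1, img2, y2, top, left, box_h, box_w):
    """Paste a box from img2 onto a copy of img1 and mix labels by area.

    Assumes both images have the same size and the box fits inside them.
    """
    h, w = len(img1), len(img1[0])
    out = [row[:] for row in img1]          # copy so img1 is not modified
    for i in range(top, top + box_h):
        for j in range(left, left + box_w):
            out[i][j] = img2[i][j]
    lam = 1 - (box_h * box_w) / (h * w)     # fraction of pixels still from img1
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return out, y
```

For example, pasting a 2×2 box from one 4×4 image into another replaces a quarter of the pixels. The resulting soft label is `[0.75, 0.25]`.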

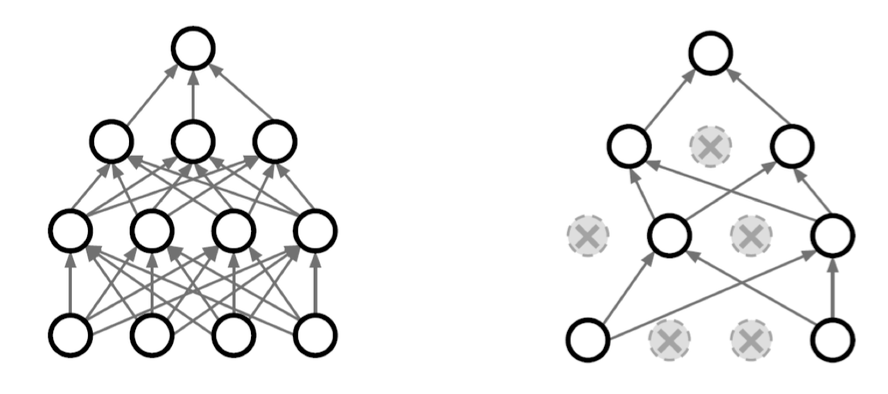

📖 Dropout

- In each forward pass during training, randomly set the outputs of some neurons to zero.
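Here is a sketch of dropout applied to one layer's activations. It uses the common "inverted dropout" variant, which is an assumption not stated in these notes. Surviving units are scaled by 1/(1 - p) during training, so nothing needs to change at test time, when dropout is switched off. `p` is the probability of dropping a unit.

```python
import random


def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p, scale survivors by 1/(1 - p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)           # no dropout at evaluation time
    keep = 1.0 - p
    # A fresh random mask is drawn on every call, i.e. every forward pass.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]
```

Because a new mask is drawn on each forward pass, the network cannot rely on any single neuron. This spreads the learned features across many units.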

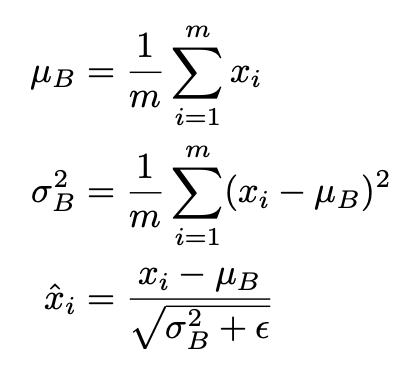

📖 Batch Normalization

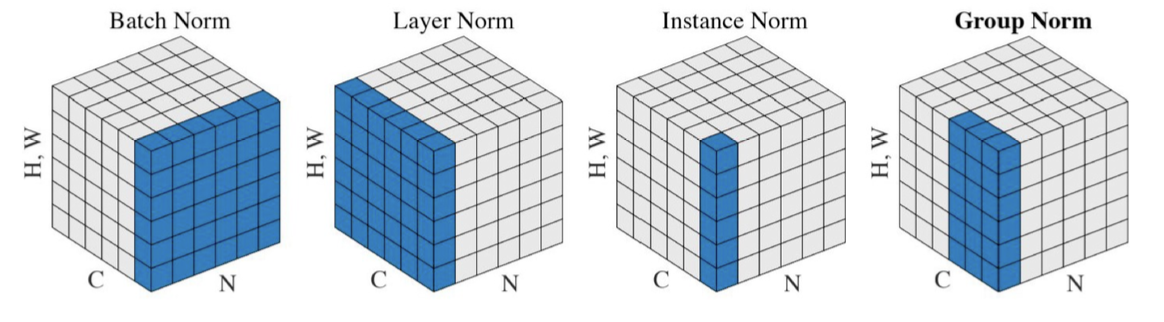

- Batch normalization computes the empirical mean and variance of each dimension (feature) independently over the mini-batch and normalizes the activations with them.

- There are several variants of normalization, such as layer, instance, and group normalization.
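Here is a minimal sketch of the batch normalization forward pass in training mode, for a mini-batch stored as a list of feature vectors. Each feature dimension is normalized with the mini-batch mean and (biased) variance. It is then scaled and shifted by the learnable parameters `gamma` and `beta`, and `eps` avoids division by zero. The running averages used at inference time are omitted for brevity.

```python
import math


def batch_norm(batch, gamma, beta, eps=1e-5):
    """Batch-normalize `batch` (N samples x D features), training mode.

    Each of the D feature dimensions is normalized independently using the
    statistics of that dimension over the N samples in the mini-batch.
    """
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        column = [sample[j] for sample in batch]
        mean = sum(column) / n
        var = sum((x - mean) ** 2 for x in column) / n   # biased (population) variance
        for i in range(n):
            x_hat = (batch[i][j] - mean) / math.sqrt(var + eps)
            out[i][j] = gamma[j] * x_hat + beta[j]       # learnable scale and shift
    return out
```

For instance, the feature column `[1.0, 3.0]` has mean 2 and variance 1. With `gamma=1` and `beta=0` it is normalized to roughly `[-1.0, 1.0]`.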

<This post was written with reference to Prof. Sungjoon Choi's 'Regularization' lecture materials.>

The content of this post is based on the lectures of the [boostcamp AI Tech 5th] Pre-Course.

The boostcamp AI Tech 5th Pre-Course runs only for a limited period,

but anyone who wants to study these AI lectures can take them without a time limit in the boostcourse AI courses.

(https://www.boostcourse.org/)