Paper title: TensorFuzz: Debugging Neural Networks with Coverage-Guided Fuzzing

📕 Summary

Abstract

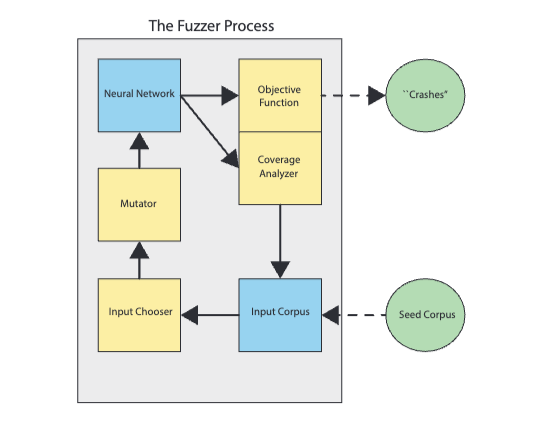

- The paper introduces testing techniques for neural networks using coverage-guided fuzzing (CGF) methods, which involve random mutations of inputs guided by a coverage metric to discover errors occurring only for rare inputs.

- The authors combine CGF methods with property-based testing (PBT) and apply them to practical goals such as surfacing broken loss functions and making performance improvements to TensorFlow. They also release an open-source library called TensorFuzz that implements these techniques.

Introduction

Table of Contents:

- Introduction

- Background and Related Work

- Coverage-Guided Fuzzing (CGF) for Neural Networks

- Property-Based Testing (PBT) for Neural Networks

- Combining CGF and PBT

- Practical Applications of the System

- Implementation: TensorFuzz

- Experimental Results

- Discussion and Future Work

- Conclusion

Key Techniques:

- Coverage-guided fuzzing (CGF) methods for neural networks, where random mutations of inputs are guided by a coverage metric to discover errors occurring only for rare inputs.

- Property-based testing (PBT) for neural networks, where properties that a function should satisfy are asserted and the system automatically generates tests exercising those properties.

- Use of approximate nearest neighbor (ANN) algorithms to provide the coverage metric for neural networks.

- Combination of CGF and PBT techniques to improve the testing and debugging of neural networks.

- Practical applications of the system, including surfacing broken loss functions in popular GitHub repositories and making performance improvements to TensorFlow.

- Release of an open-source library called TensorFuzz that implements the described techniques.
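
To make the coverage idea concrete, here is a minimal sketch. It assumes coverage is tracked as a list of previously seen activation vectors, and that an input counts as new coverage when its activations lie farther than a threshold from every stored vector. The function names, the threshold value, and the brute-force exact search (a stand-in for a real ANN index) are illustrative assumptions, not TensorFuzz's API.

```python
import math

def nearest_distance(point, corpus):
    """Euclidean distance from `point` to its nearest neighbor in `corpus`.

    Exact brute-force search; an ANN index would replace this at scale.
    """
    return min(math.dist(point, seen) for seen in corpus)

def is_new_coverage(activations, corpus, threshold):
    """True if `activations` is farther than `threshold` from everything seen."""
    if not corpus:
        return True
    return nearest_distance(activations, corpus) > threshold

# Hypothetical activation vectors already recorded by the fuzzer.
corpus = [(0.0, 0.0), (1.0, 1.0)]

print(is_new_coverage((0.1, 0.1), corpus, threshold=0.5))  # close to (0, 0)
print(is_new_coverage((3.0, 3.0), corpus, threshold=0.5))  # far from both
```

A larger threshold makes the fuzzer keep fewer inputs, which trades coverage granularity for a smaller corpus.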

📕 Solution

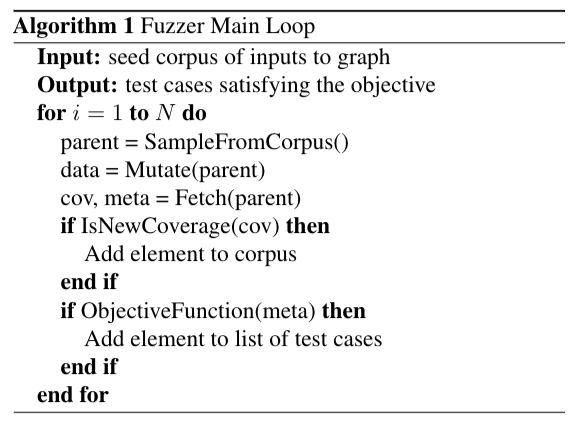

- The paper introduces coverage-guided fuzzing (CGF) methods for testing neural networks, where random mutations of inputs are guided by a coverage metric to discover errors occurring only for rare inputs.

- The authors combine CGF methods with property-based testing (PBT), where properties that a function should satisfy are asserted and the system automatically generates tests exercising those properties.

- The paper describes how approximate nearest neighbor (ANN) algorithms can be used to provide the coverage metric for neural networks.

- The authors apply these methods to practical goals, including surfacing broken loss functions in popular GitHub repositories and making performance improvements to TensorFlow.

- The authors release an open-source library called TensorFuzz that implements the described techniques.

Algorithm
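
The loop described in the bullets above (mutate an input, check a property, keep the input if it adds coverage) can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the TensorFuzz implementation: `toy_network` is a hypothetical model with a planted NaN region, parents are chosen uniformly at random, mutation is additive Gaussian noise, and coverage uses an exact nearest-neighbor search in place of an ANN index.

```python
import math
import random

def toy_network(x):
    """Hypothetical stand-in for a model: returns (activations, output)."""
    activations = (math.tanh(0.5 * x[0]), math.tanh(0.5 * x[1]))
    # Planted bug: the output is NaN only in a rarely reached input region.
    output = float("nan") if x[0] + x[1] > 4.0 else sum(activations)
    return activations, output

def is_finite(output):
    """The property under test: the model output must be a finite number."""
    return math.isfinite(output)

def fuzz(seed_input, model, property_holds, threshold, iterations, rng):
    """Return (failing_input_or_None, corpus) after at most `iterations` steps."""
    corpus = [seed_input]
    coverage = [model(seed_input)[0]]
    for _ in range(iterations):
        parent = rng.choice(corpus)  # assumption: uniform parent selection
        child = tuple(v + rng.gauss(0.0, 0.5) for v in parent)
        activations, output = model(child)
        if not property_holds(output):
            return child, corpus  # property violated: report the input
        # Keep the child only if its activations are far from all seen so far.
        if min(math.dist(activations, seen) for seen in coverage) > threshold:
            corpus.append(child)
            coverage.append(activations)
    return None, corpus

failing, corpus = fuzz((0.0, 0.0), toy_network, is_finite,
                       threshold=0.3, iterations=500, rng=random.Random(0))
print("failing input:", failing)
print("corpus size:", len(corpus))
```

Because new corpus entries must differ in activation space from what has been seen, later mutations start from progressively more unusual inputs instead of repeatedly perturbing the seed.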

📕 Conclusion


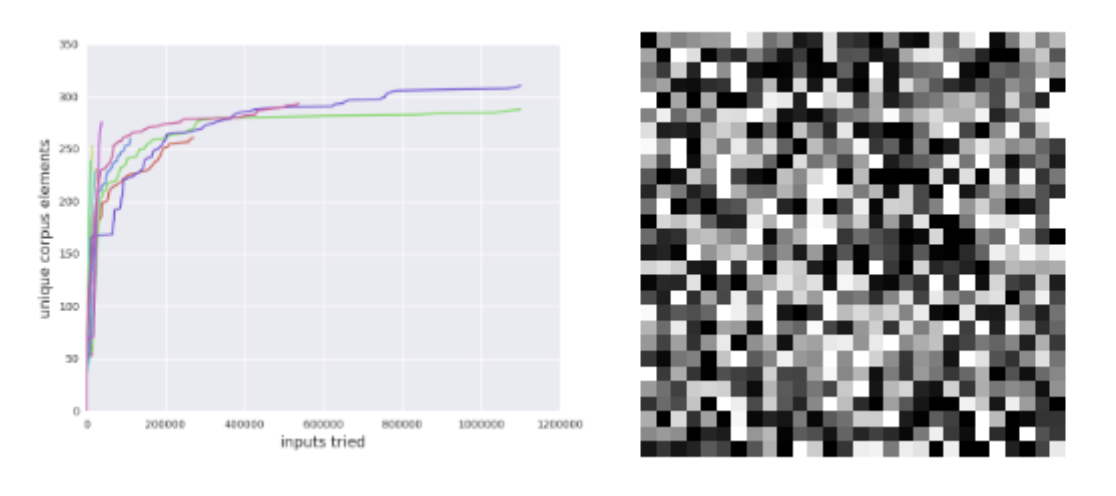

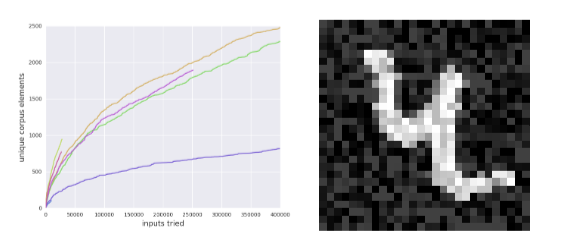

- The paper presents experimental results from four different settings, demonstrating the effectiveness of TensorFuzz as a general-purpose tool for testing and debugging neural networks.

- TensorFuzz efficiently finds numerical errors in trained neural networks, in particular inputs that produce not-a-number (NaN) values. This matters because numerical errors can cause dangerous behavior in important systems; finding them with CGF before deployment reduces the risk of harmful failures in real-world use.

- In some of these settings the authors compare TensorFuzz against a random search baseline, which supports its value as a practical testing and debugging tool.

- The goal of TensorFuzz is to be a good general-purpose tool for neural network testing and debugging, similar to AFL: easy to use on new code-bases and tasks, and performing acceptably well in most cases.
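
As an illustration of the kind of numerical error mentioned above, the sketch below shows one common way a NaN can appear: a softmax computed without subtracting the maximum logit. It is an assumed example, not the specific bug studied in the paper. The `exp_ieee` helper is hypothetical and exists only because Python's `math.exp` raises on overflow, whereas array libraries typically return infinity.

```python
import math

def exp_ieee(x):
    """Like math.exp, but return inf on overflow instead of raising."""
    try:
        return math.exp(x)
    except OverflowError:
        return math.inf

def naive_softmax(logits):
    """Softmax without max subtraction; large logits give inf / inf = NaN."""
    exps = [exp_ieee(v) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def stable_softmax(logits):
    """Softmax with the maximum logit subtracted first, so exp never overflows."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def has_nan(values):
    return any(math.isnan(v) for v in values)

print(has_nan(naive_softmax([1.0, 2.0])))      # ordinary logits
print(has_nan(naive_softmax([1000.0, 0.0])))   # exp(1000) overflows
print(has_nan(stable_softmax([1000.0, 0.0])))  # same input, stable version
```

Only unusually large logits trigger the failure, which is why such bugs can survive ordinary testing and why a search that actively explores rare inputs is useful.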

Contribution

- The paper introduces coverage-guided fuzzing (CGF) techniques for testing neural networks, using random mutations of inputs guided by a coverage metric to discover errors occurring only for rare inputs.

- The authors combine CGF methods with property-based testing (PBT) to create a general system for testing neural networks.

- They describe how approximate nearest neighbor (ANN) algorithms can be used to check for coverage in a general way.

- The paper applies these techniques to practical goals, including surfacing broken loss functions in popular GitHub repositories and making performance improvements to TensorFlow.

- The authors release an open-source library called TensorFuzz that implements the described techniques.