Paper title: Weight Fixing Networks

📕 Summary

Abstract

The paper proposes a new method called Weight Fixing Networks (WFN) to minimize the information content of neural networks by reducing the number of unique weights and the weight-space entropy.

Introduction

- Deep learning models have grown significantly in applicability, performance, investment, and optimism. This growth has also increased the energy and storage costs of training and inference.

- The paper addresses this by proposing a methodology that reduces both the number of bits required to describe a network and the total number of unique weights in it.

- The motivation is twofold: practical considerations of accelerator design, and the Minimal Description Length (MDL) principle as a theoretical criterion for a good model.

- The authors emphasize the need to reduce these costs without hindering task performance, and introduce Weight Fixing Networks (WFN) to achieve this.

- WFN focuses on lossless whole-network quantization, minimizing the entropy and the number of unique parameters in a network while maintaining task performance.

- The paper combines novel and well-established techniques to balance these conflicting objectives, achieving lossless compression with significantly fewer unique weights and lower weight-space entropy than existing quantization approaches.

📕 Solution

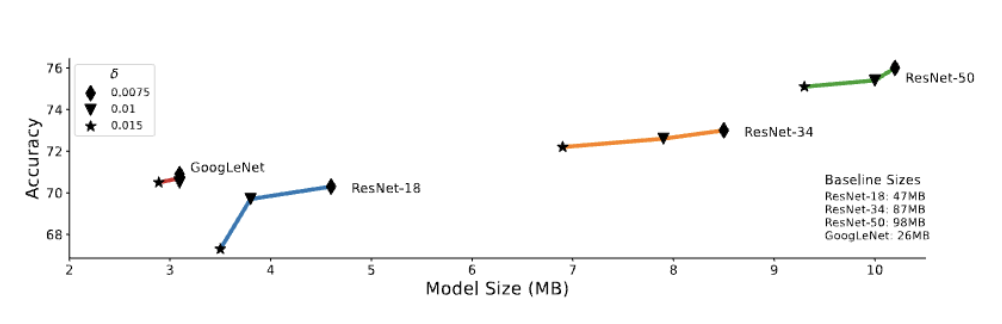

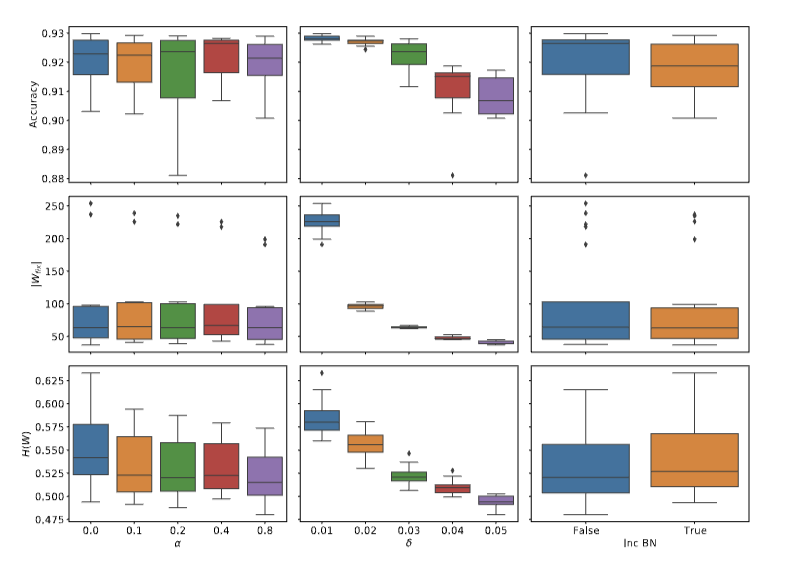

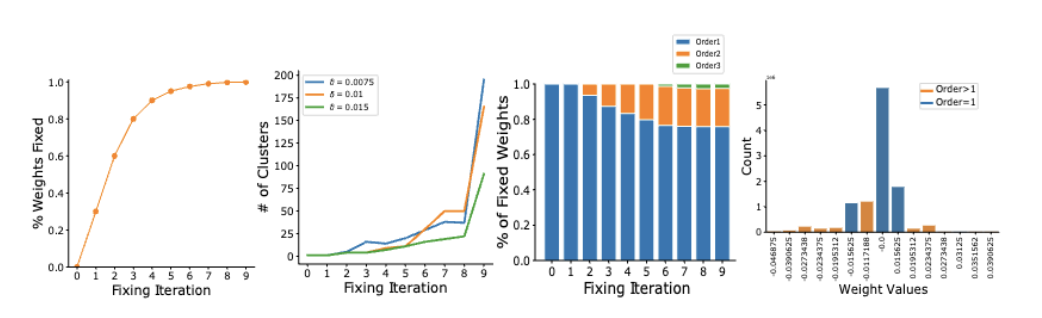

The accuracy vs. model size trade-off is controlled by the δ parameter. All experiments shown use the ImageNet dataset; accuracy refers to top-1.

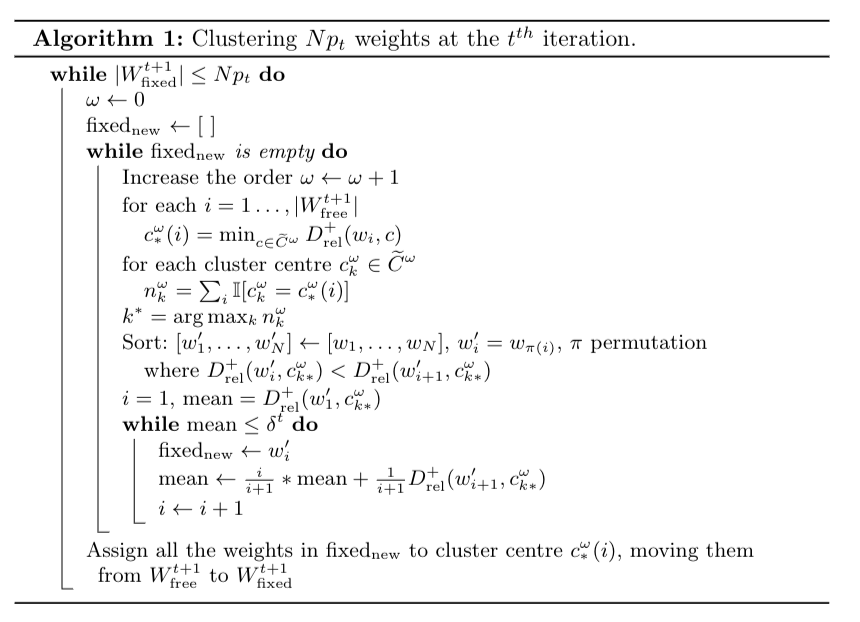

Algorithm

Clustering Stage

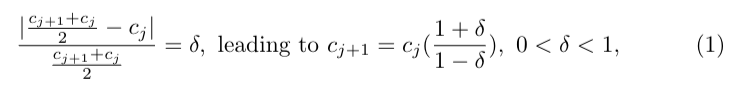

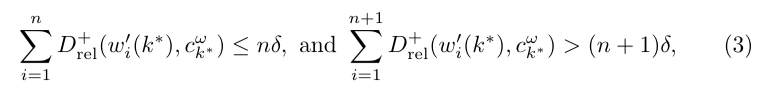

Generating the Proposed Cluster Centres.

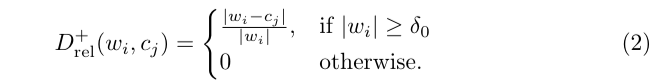

Reducing k with Additive Powers-of-two Approximations.

Minimalist Clustering

Training Stage.

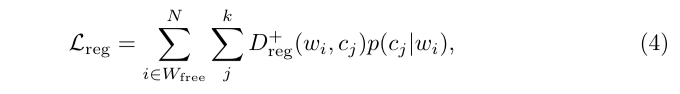

Cosying up to Clusters

- Exploring the hyper-parameter space with a ResNet18 model trained on the CIFAR-10 dataset.

- Columns. Left: varying the regularisation ratio α; middle: varying the distance change value δ; right: whether the batch-norm variables are fixed or not.

- Rows. Top: top-1 accuracy on the CIFAR-10 test set; middle: total number of unique weights; bottom: entropy of the weights.
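The regularisation ratio α in the caption above weights a clustering term against the task loss. A minimal sketch of one plausible form of such a term follows. These notes do not state the formula, so the form used here is an assumption: the relative distance from each weight to each centre, weighted by a softmax over the negative relative distances. The names `cluster_regulariser` and `temperature` are hypothetical.

```python
import numpy as np

def cluster_regulariser(weights, centres, temperature=1.0):
    """Assumed form of a cluster-tightening penalty (not taken from the notes)."""
    w = np.asarray(weights, dtype=float)[:, None]   # shape (n_weights, 1)
    c = np.asarray(centres, dtype=float)[None, :]   # shape (1, n_centres)
    # Relative distance |w - c| / |w|; the floor avoids division by zero.
    rel = np.abs(w - c) / np.maximum(np.abs(w), 1e-12)
    # Soft assignment of each weight to the centres: closer centres get more mass.
    logits = -rel / temperature
    logits -= logits.max(axis=1, keepdims=True)     # numerical stability
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    # Expected relative distance under the soft assignment, summed over weights.
    return float((rel * p).sum())
```

Under this assumed form the penalty shrinks as weights move towards a centre, which is the behaviour a term that "encourages tight clusters" would need.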

Method

-

The paper introduces a new method called Weight Fixing Networks (WFN) that aims to minimize the information content of networks through lossless whole-network quantization .

-

The WFN method combines a few novel and well-established techniques, including a novel regularisation term, a view of clustering cost as relative distance change, and a focus on whole-network re-use of weights

-

The quantization process involves replacing each connection weight in the original network with one of the cluster centers of weights, which are fixed to take on specific cluster center values .

-

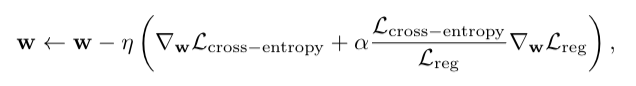

The training stage combines gradient descent on a cross-entropy classification loss with a regularisation term that encourages tight clusters to maintain lossless performance as more weights are fixed to cluster centers

-

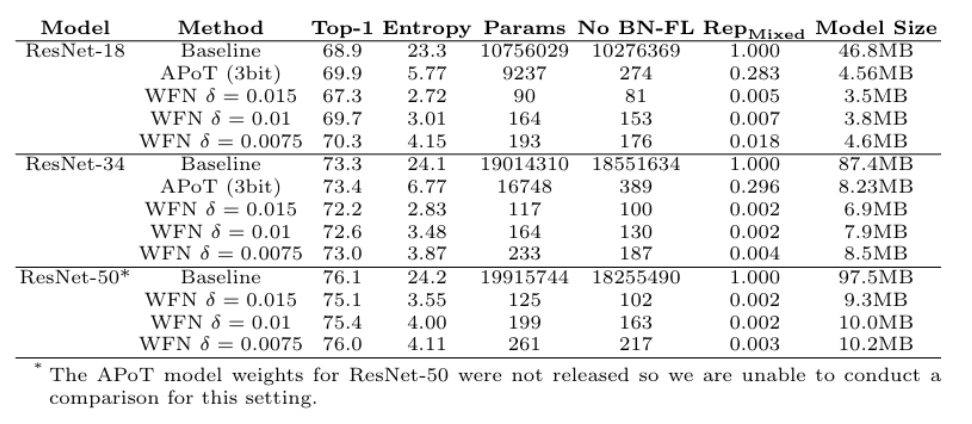

The experiments conducted on Imagenet demonstrate the effectiveness of WFN, achieving lossless compression using significantly fewer unique weights and lower weight-space entropy compared to state-of-the-art quantization approaches
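The fixing step described above can be sketched as follows. This is a rough illustration, not the authors' implementation. It assumes the relative distance of moving a weight w to a centre c is |w − c| / |w|, and that a weight is fixed only when this value is at most δ. The function name `fix_to_centres` is hypothetical.

```python
import numpy as np

def fix_to_centres(weights, centres, delta):
    """Snap each weight to its nearest centre if the relative change is <= delta."""
    w = np.asarray(weights, dtype=float)
    c = np.asarray(centres, dtype=float)
    # Nearest centre for every weight (absolute distance).
    nearest = c[np.abs(w[:, None] - c[None, :]).argmin(axis=1)]
    # Assumed relative distance change; the floor avoids division by zero.
    rel = np.abs(w - nearest) / np.maximum(np.abs(w), 1e-12)
    fixed = rel <= delta
    # Weights outside the threshold stay free for further training.
    return np.where(fixed, nearest, w), fixed

new_w, fixed = fix_to_centres([0.26, 0.5, 0.9], [0.25, 0.5, 1.0], delta=0.05)
```

In this example the first two weights are fixed to 0.25 and 0.5, while 0.9 is left free because moving it to 1.0 would change it by about 11%.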

📕 Conclusion

- Far left: The number of weights in the network that are fixed to cluster centres increases with each fixing iteration.

- Middle left: Decreasing the δ threshold increases the number of cluster centres, but only towards the last few fixing iterations, which helps keep the weight-space entropy down.

- Middle right: The majority of all weights are order 1 (powers-of-two); an increase in order is only needed for outlier weights in the final few fixing iterations.

- Far right: The weight distribution (top-15 most used shown) is concentrated around just four values.
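To make "order" concrete: an order-1 value is a single signed power of two, and an order-2 value is a sum of two such terms. The sketch below greedily approximates a value with at most `max_order` terms. It only illustrates the idea and is not the paper's procedure for generating candidate centres. The names `pow2_approx`, `max_order`, and `min_exp` are hypothetical.

```python
import math

def pow2_approx(value, max_order, min_exp=-8):
    """Greedily write value as a sum of at most max_order signed powers of two."""
    terms = []
    residual = value
    for _ in range(max_order):
        if residual == 0:
            break
        # Pick the exponent closest to the residual in log space, floored at min_exp.
        exp = max(round(math.log2(abs(residual))), min_exp)
        term = math.copysign(2.0 ** exp, residual)
        terms.append(term)
        residual -= term
    return terms

print(pow2_approx(0.25, 3))   # a single term suffices: order 1
print(pow2_approx(0.375, 2))  # needs two terms: order 2
```

Here 0.25 is already a power of two, while 0.375 needs a second term (0.5 − 0.125), which is the kind of higher-order value the figure associates with outlier weights.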

A full metric comparison of WFN Vs. APoT. P

Contribution

- The paper introduces a new method called Weight Fixing Networks (WFN) that aims to minimize the information content of neural networks by reducing the number of unique weights and the weight-space entropy. It achieves this through lossless whole-network quantization, resulting in significantly fewer unique weights and lower weight-space entropy compared to state-of-the-art quantization approaches.

- The authors propose a novel regularisation term, a view of clustering cost as relative distance change, and a focus on whole-network re-use of weights to balance conflicting objectives and achieve lossless task performance.

- Experiments on ImageNet demonstrate the effectiveness of WFN, which achieves lossless compression using 56x fewer unique weights and 1.9x lower weight-space entropy than existing quantization approaches.
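The two headline metrics can be computed directly from a network's parameters. The sketch below is a minimal version. It assumes that weight-space entropy means the Shannon entropy, in bits, of the empirical distribution of weight values pooled across all layers. The helper name `weight_space_stats` is hypothetical.

```python
import numpy as np

def weight_space_stats(layers):
    """Return (unique weight count, entropy in bits) over all layers pooled together."""
    flat = np.concatenate([np.asarray(w, dtype=float).ravel() for w in layers])
    # Count how often each distinct value occurs across the whole network.
    _, counts = np.unique(flat, return_counts=True)
    probs = counts / counts.sum()
    entropy_bits = float(-(probs * np.log2(probs)).sum())
    return len(counts), entropy_bits

n_unique, entropy = weight_space_stats([[0.5, 0.5], [0.25, -0.5]])
```

For this toy input there are three distinct values with frequencies 1/2, 1/4, and 1/4, giving an entropy of 1.5 bits.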