Paper title: Can Differential Testing Improve Automatic Speech Recognition Systems?

📕 Summary

Abstract

The paper explores using differential testing to uncover failures in Automatic Speech Recognition (ASR) systems, and then using the generated test cases to improve those systems. The authors propose a novel idea called evolutionary differential testing, which fine-tunes both the target ASR system and one of the cross-referenced ASR systems inside CrossASR++. Experimental results show that leveraging the test cases substantially improves both the target ASR system and CrossASR++ itself: the number of failed test cases decreases, and the word error rate of the improved target ASR system drops. In addition, evolving just one cross-referenced ASR system lets CrossASR++ find more failed test cases for multiple target ASR systems.

Introduction

📕 Solution

Method

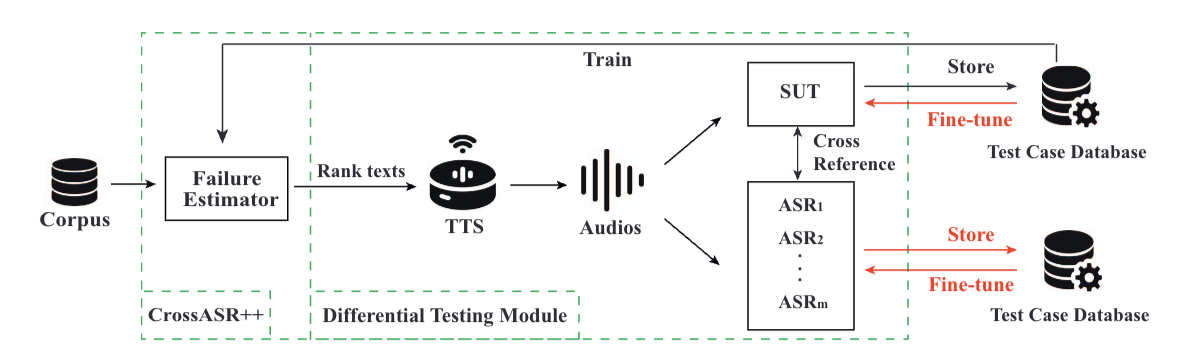

The paper uses differential testing to automatically generate test cases for Automatic Speech Recognition (ASR) systems, comparing the output of the target ASR system against the outputs of the cross-referenced ASR systems in CrossASR++.
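The comparison step can be sketched as follows, assuming the CrossASR-style labelling rule: a test case is failed when the target ASR mistranscribes the text but at least one cross-referenced ASR transcribes it correctly, and indeterminable when every ASR is wrong (the audio itself may then be at fault). The names `Verdict`, `normalize`, and `classify`, and the normalization applied before comparing, are hypothetical and not taken from CrossASR++'s code.

```python
from enum import Enum


class Verdict(Enum):
    SUCCESSFUL = "successful"          # the target ASR transcribed the text correctly
    FAILED = "failed"                  # target wrong, at least one cross-referenced ASR right
    INDETERMINABLE = "indeterminable"  # every ASR wrong, so the audio itself may be bad


def normalize(text: str) -> str:
    # Hypothetical normalization: lowercase, drop punctuation, collapse whitespace.
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def classify(reference: str, target_output: str,
             cross_ref_outputs: list[str]) -> Verdict:
    """Label one test case by cross-referencing the target ASR with other ASRs."""
    expected = normalize(reference)
    if normalize(target_output) == expected:
        return Verdict.SUCCESSFUL
    if any(normalize(out) == expected for out in cross_ref_outputs):
        return Verdict.FAILED
    return Verdict.INDETERMINABLE


print(classify("Turn the light on.", "turn the right on",
               ["turn the light on", "turn the like on"]))  # Verdict.FAILED
```

Under this rule, if a cross-referenced ASR starts transcribing correctly a case that every ASR previously got wrong, that case moves from indeterminable to failed for any target that still mistranscribes it. This is one way evolving a single cross-referenced ASR could surface more failed test cases.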

Building on this, the authors propose a novel idea called evolutionary differential testing, which fine-tunes both the target ASR system and a cross-referenced ASR system inside CrossASR++.

The generated test cases are used to improve the target ASR system by fine-tuning it on those test cases.

The test cases are also used to make CrossASR++ better at uncovering failed test cases, by fine-tuning one of its cross-referenced ASR systems.
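The two fine-tuning steps can be pictured as one round of a loop. This is a toy sketch under stated assumptions: `ToyASR` is a hypothetical stand-in that "mishears" a fixed set of words, plain text stands in for synthesized audio, and feeding the same failed test cases to both the target and the evolving cross-referenced ASR is an assumption rather than a detail confirmed by the paper.

```python
from dataclasses import dataclass, field


@dataclass
class ToyASR:
    """Hypothetical stand-in for an ASR model: it mishears every word listed in
    `confusions` until fine-tuning removes that confusion."""
    name: str
    confusions: dict[str, str] = field(default_factory=dict)

    def transcribe(self, audio: str) -> str:
        # In this toy, "audio" is simply the text a TTS engine would have spoken.
        return " ".join(self.confusions.get(w, w) for w in audio.split())

    def fine_tune(self, texts: list[str]) -> None:
        # Toy fine-tuning: the model stops confusing any word it was trained on.
        for text in texts:
            for word in text.split():
                self.confusions.pop(word, None)


def differential_round(texts: list[str], target: ToyASR,
                       cross_refs: list[ToyASR]) -> list[str]:
    """Return the failed test cases: target is wrong, some cross-reference is right."""
    failed = []
    for text in texts:
        audio = text  # placeholder for a TTS synthesis step
        if target.transcribe(audio) != text and any(
                ref.transcribe(audio) == text for ref in cross_refs):
            failed.append(text)
    return failed


def evolve(texts: list[str], target: ToyASR, cross_refs: list[ToyASR],
           evolving_ref: ToyASR) -> list[str]:
    """One round of evolutionary differential testing (toy sketch)."""
    failed = differential_round(texts, target, cross_refs)
    target.fine_tune(failed)        # improve the system under test
    evolving_ref.fine_tune(failed)  # evolve one cross-referenced ASR as well
    return failed


texts = ["turn the light on", "call my mother", "play some music"]
target = ToyASR("target", {"light": "right", "mother": "brother"})
ref_a = ToyASR("ref_a", {"music": "muse"})
ref_b = ToyASR("ref_b", {"light": "like", "mother": "other", "music": "magic"})

failed_before = evolve(texts, target, [ref_a, ref_b], evolving_ref=ref_b)
failed_after = differential_round(texts, target, [ref_a, ref_b])
print(failed_before)  # ['turn the light on', 'call my mother']
print(failed_after)   # []
```

With real models, `fine_tune` would be an actual training run, and each round would use freshly generated test cases rather than re-testing the same ones.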

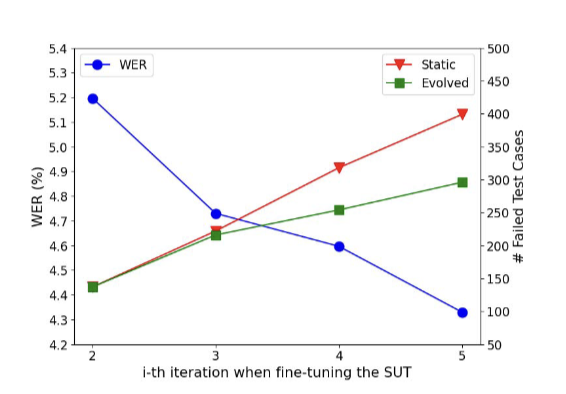

Experimental results show that fine-tuning on the test cases substantially improves both the target ASR system and CrossASR++ itself, decreasing the number of failed test cases and lowering the word error rate of the improved target ASR system.
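Word error rate is conventionally computed as a word-level edit distance, WER = (S + D + I) / N, where S, D, and I count substituted, deleted, and inserted words and N is the number of words in the reference. Below is a minimal sketch of that textbook definition for a single sentence; the paper's exact text normalization, and whether it aggregates errors over the whole corpus, are not stated in this summary.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    computed as a word-level Levenshtein distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the previous ref prefix and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[len(hyp)] / len(ref)


print(word_error_rate("turn the light on", "turn the right on"))  # 0.25
```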

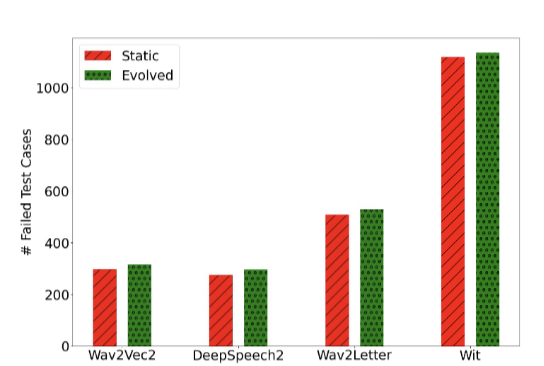

By evolving just one cross-referenced ASR system, CrossASR++ finds more failed test cases for multiple target ASR systems.

📕 Conclusion

- The paper demonstrates that leveraging the test cases generated through differential testing can significantly improve both the target ASR system and CrossASR++ itself: the number of failed test cases uncovered decreases by 25.81%, and the word error rate of the improved target ASR system drops by 45.81%.
- By fine-tuning one of the cross-referenced ASR systems with the generated test cases, CrossASR++ finds more failed test cases for multiple target ASR systems; the increase ranges from 1.52% to 7.25% depending on the target ASR system.
- The authors plan to investigate the relative performance of ASR systems when all of them are evolving, and to explore better ways of generating effective test cases that enhance the reliability of ASR systems.
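The percentages in the conclusion read most naturally as relative changes; the summary does not say so explicitly, so this interpretation is an assumption. Under it, each figure follows the usual relative-change formula, and the 45.81% drop means the improved target's WER is a bit over half of the original:

```latex
\Delta_{\text{rel}} = \frac{x_{\text{before}} - x_{\text{after}}}{x_{\text{before}}} \times 100\%,
\qquad
\Delta_{\text{rel}} = 45.81\% \;\Rightarrow\;
\text{WER}_{\text{after}} = (1 - 0.4581)\,\text{WER}_{\text{before}} = 0.5419\,\text{WER}_{\text{before}}
```

By the same reading, the 25.81% decrease leaves 74.19% as many failed test cases as before, and the 1.52% to 7.25% increases multiply the failed-case counts by 1.0152 to 1.0725.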

Overall, the paper highlights the potential of evolutionary differential testing to improve the quality and performance of ASR systems by leveraging automatically generated test cases.