Paper title: DeepArc: Modularizing Neural Networks for the Model Maintenance

📕 Summary

Abstract

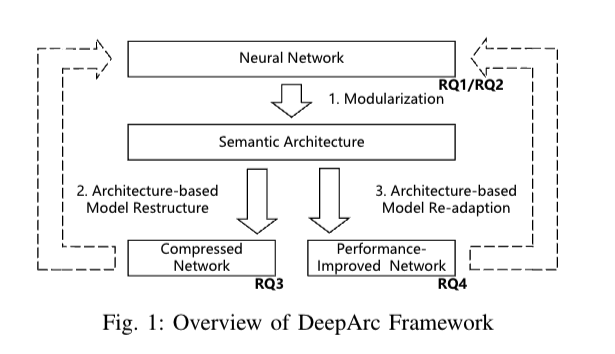

- The paper proposes DeepArc, a method to modularize neural networks, reducing the cost of model maintenance tasks by decomposing the network into consecutive modules with similar semantics.

- DeepArc allows for efficient model compression and faster training performance while achieving similar model prediction performance.

Introduction

- Deep neural network (DNN) models are widely used in various software systems but their monolithic structure makes model maintenance challenging. Existing practices often require retraining the entire model, which is computationally expensive.

- The paper introduces DeepArc, a novel modularization method for neural networks, to address the challenges of model maintenance. DeepArc decomposes a neural network into consecutive modules with similar semantics, allowing for localized restructuring or retraining of the model.

- The modularization and encapsulation provided by DeepArc enable tasks such as model compression and enhancement with minimal modifications. It reduces the cost of model maintenance by pruning and tuning localized neurons and layers.

- The paper demonstrates that DeepArc can significantly improve the runtime efficiency of model compression techniques and achieve faster training performance while maintaining similar model prediction performance.

📕 Solution

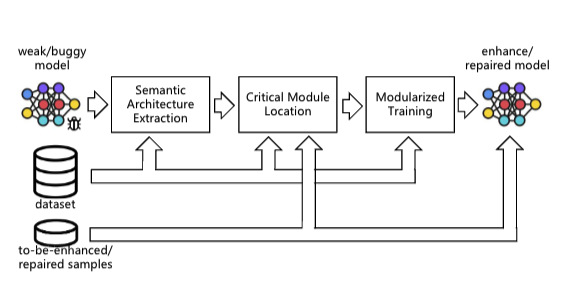

- The paper proposes the DeepArc framework, which is a novel modularization method for neural networks, to address the challenges of model maintenance.

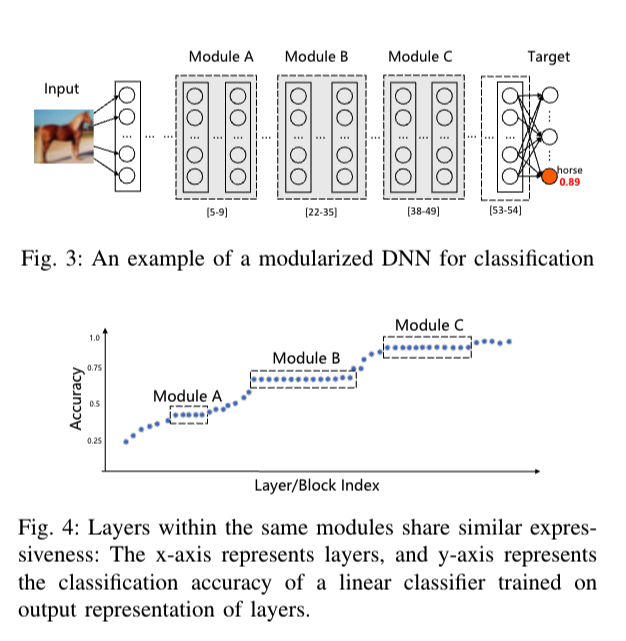

- DeepArc decomposes a neural network into consecutive modules, each encapsulating consecutive layers with similar semantics. This modularization allows for localized restructuring or retraining of the model by pruning and tuning specific neurons and layers.

- The paper introduces the concept of layer similarity and implements similarity measurements to quantify the similarity of output representations between layers and modules.

- The authors define a semantic architecture for a neural network model based on a similarity threshold, which partitions the model into modules with maximized intra-module similarity and minimized inter-module similarity.

- Overall, the methods used in this paper involve the development of the DeepArc framework for modularizing neural networks and the implementation of similarity measurements to guide the modularization process.
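To make the idea of a layer-similarity matrix concrete, here is a minimal sketch. The summary does not name the similarity measure, so linear centered kernel alignment (CKA) is used here purely as an assumption; it is one common way to compare the output representations of two layers. The function names `linear_cka` and `layer_similarity_matrix` are hypothetical, and each layer's output is assumed to be flattened to shape `(samples, features)` over the same input samples.

```python
import numpy as np


def linear_cka(x, y):
    """Linear CKA between two activation matrices (ASSUMED measure, not confirmed by the summary).

    x: array of shape (samples, d1); y: array of shape (samples, d2).
    Both must be computed from the same input samples, in the same order.
    """
    # Center every feature so the score ignores constant offsets.
    x = x - x.mean(axis=0, keepdims=True)
    y = y - y.mean(axis=0, keepdims=True)
    cross = np.linalg.norm(y.T @ x, ord="fro") ** 2
    norm_x = np.linalg.norm(x.T @ x, ord="fro")
    norm_y = np.linalg.norm(y.T @ y, ord="fro")
    return float(cross / (norm_x * norm_y))


def layer_similarity_matrix(activations):
    """Build the (n, n) matrix sim where sim[i, j] compares layers i and j.

    activations: list of n arrays, one per layer, each of shape (samples, features).
    """
    n = len(activations)
    sim = np.eye(n)  # a layer is treated as fully similar to itself
    for i in range(n):
        for j in range(i + 1, n):
            sim[i, j] = sim[j, i] = linear_cka(activations[i], activations[j])
    return sim
```

The resulting matrix is symmetric with ones on the diagonal, which matches the `(n, n)` shape that a layer-similarity matrix is described as having.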

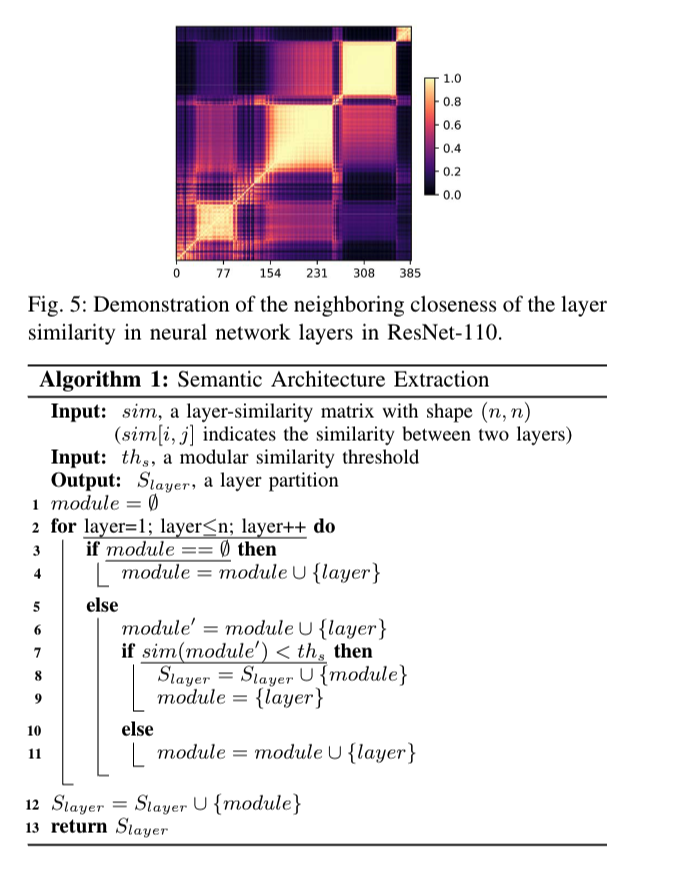

Algorithm 1: Semantic Architecture Extraction

- Algorithm 1, Semantic Architecture Extraction, partitions the layers of a network into consecutive modules.

- This algorithm takes two inputs: a layer-similarity matrix (sim) and a modular similarity threshold (ths).

- The layer-similarity matrix has a shape of (n, n), where n is the number of layers in the neural network. The value of sim[i, j] indicates the similarity between layers i and j.

- The modular similarity threshold (ths) is a user-defined value: when adding a layer would drop a module's similarity below it, a new module is started.

- The output of the algorithm is Slayer, which is a layer partition that represents the modularized neural network.

- The algorithm starts by initializing an empty module (module = ∅).

- Then, it iterates through each layer in the neural network (layer=1; layer≤n; layer++).

- If the module is empty, the current layer is added to the module (module = module ∪ {layer}).

- If the module is not empty, a candidate module is formed by adding the current layer to the existing module (module′ = module ∪ {layer}).

- If the similarity between the layers in the candidate module (module′) is less than the modular similarity threshold (ths), the current module is closed and added to Slayer (Slayer = Slayer ∪ {module}), and a new module is started from the current layer (module = {layer}).

- Otherwise, when the similarity within module′ is greater than or equal to ths, the current layer joins the current module (module = module ∪ {layer}).

- Finally, the algorithm adds the last module to Slayer (Slayer = Slayer ∪ {module}) and returns Slayer.

- The output of the algorithm (Slayer) represents a modularized neural network, where each module encapsulates consecutive layers with similar semantics.
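The steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' implementation: the summary does not say how the similarity of a whole module is aggregated from `sim`, so the hypothetical helper `module_similarity` uses the minimum pairwise similarity among the module's layers. Layers are 0-indexed here, whereas the description counts from 1.

```python
import numpy as np


def module_similarity(sim, module):
    """ASSUMPTION: a module's similarity is its minimum pairwise layer similarity."""
    if len(module) < 2:
        return 1.0  # a single layer is trivially consistent with itself
    sub = sim[np.ix_(module, module)]
    upper = np.triu_indices(len(module), k=1)  # each pair once, no diagonal
    return float(sub[upper].min())


def extract_semantic_architecture(sim, ths):
    """Greedy pass over the layers, following the steps described for Algorithm 1.

    sim: (n, n) layer-similarity matrix; ths: modular similarity threshold.
    Returns a list of modules, each a list of consecutive layer indices.
    """
    n = sim.shape[0]
    partition = []  # plays the role of Slayer
    module = []     # the module currently being grown
    for layer in range(n):
        if not module:
            module = [layer]
            continue
        candidate = module + [layer]  # module' = module ∪ {layer}
        if module_similarity(sim, candidate) < ths:
            partition.append(module)  # close the current module
            module = [layer]          # start a new one at this layer
        else:
            module = candidate
    if module:
        partition.append(module)      # add the last module
    return partition


# Toy example: layers 0-1 resemble each other, as do layers 2-3.
toy_sim = np.array([
    [1.00, 0.90, 0.20, 0.10],
    [0.90, 1.00, 0.30, 0.20],
    [0.20, 0.30, 1.00, 0.95],
    [0.10, 0.20, 0.95, 1.00],
])
print(extract_semantic_architecture(toy_sim, ths=0.8))  # → [[0, 1], [2, 3]]
```

Raising `ths` yields more, smaller modules; lowering it merges more layers into each module.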

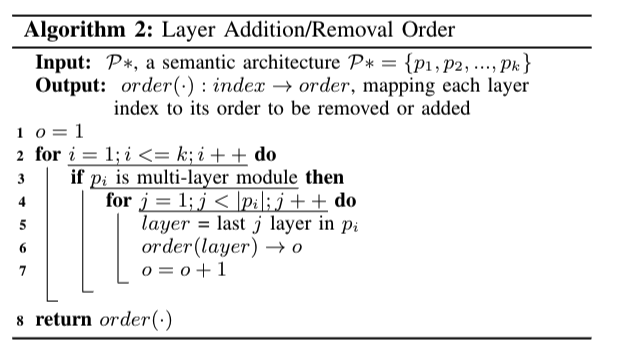

Algorithm 2: Layer Addition/Removal Order

- Algorithm 2 determines the order in which layers should be added to or removed from a neural network.

- The input to the algorithm is a semantic architecture P∗, which is a set of modules pi that make up the neural network.

- The output of the algorithm is a mapping function order(·) that assigns an order to each layer in the network, indicating the order in which it should be added or removed.

- The algorithm starts by initializing the order variable o to 1.

- It then iterates through each module pi in the architecture.

- If pi is a multi-layer module (i.e., it contains more than one layer), the algorithm iterates through each layer in the module, starting from the last layer.

- For each layer, the algorithm assigns it an order value of o and increments o by 1.

- Finally, the algorithm returns the order(·) mapping function.

- The purpose of this algorithm is to support maintenance tasks on the modularized network, such as model compression and retraining. By fixing the order in which layers are added or removed, it aims to limit the impact of these tasks on the overall performance of the network.
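A minimal Python sketch of the ordering steps described above. It follows the summary literally, so layers in single-layer modules receive no order value; that reading is an assumption, since the summary does not say how such modules are handled. The name `layer_order` and the list-of-lists representation of the architecture are hypothetical.

```python
def layer_order(architecture):
    """Assign an add/remove order to layers, following the summary of Algorithm 2.

    architecture: list of modules, each a list of consecutive layer indices.
    Returns a dict mapping layer index -> order value (starting at 1).
    ASSUMPTION: layers in single-layer modules are skipped and get no order.
    """
    order = {}
    o = 1
    for module in architecture:
        if len(module) > 1:                 # multi-layer modules only
            for layer in reversed(module):  # start from the module's last layer
                order[layer] = o
                o += 1
    return order


print(layer_order([[0, 1], [2], [3, 4, 5]]))
# → {1: 1, 0: 2, 5: 3, 4: 4, 3: 5}
```

In the example, layer 2 forms a module by itself and therefore does not appear in the mapping.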

📕 Conclusion

-

The paper proposes DeepArc, a framework for modularizing neural networks, which allows for the extraction of the semantic architecture of a DNN and facilitates tasks such as model restructure and enhancement.

-

The modularization of network layers in DeepArc has several benefits, including preserving fitting, robustness, and linear separability of the model.

-

The paper demonstrates that tasks like model compression, enhancement, and repair can greatly benefit from the modularity of network layers provided by DeepArc.

-

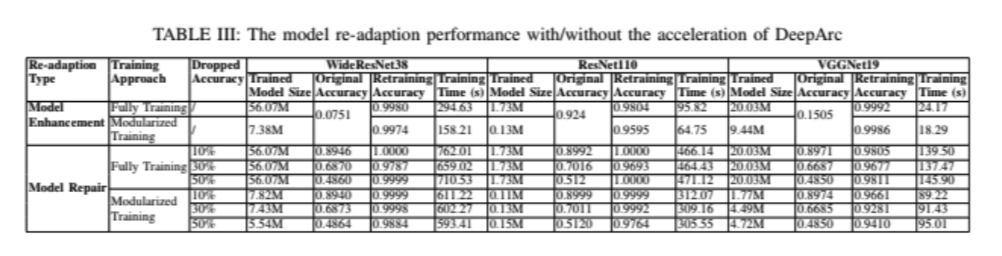

The experiments conducted in the paper show that DeepArc can significantly boost the runtime efficiency of state-of-the-art model compression techniques and achieve faster training performance while maintaining similar model prediction performance.

-

The authors suggest that future work will explore whether the DeepArc framework can facilitate modular reuse.

Contribution

- The paper proposes DeepArc, a novel modularization method for neural networks, which decomposes a neural network into consecutive modules with similar semantics, reducing the cost of model maintenance tasks.

- DeepArc allows for practical tasks such as model compression and enhancing the model with new features by restructuring or retraining the model through pruning and tuning localized neurons and layers.

- DeepArc can boost the runtime efficiency of state-of-the-art model compression techniques by 14.8%.

- Compared to traditional model retraining, DeepArc only needs to train less than 20% of the neurons on average to fit adversarial samples and repair under-performing models, leading to 32.85% faster training performance while achieving similar model prediction performance.

- The paper focuses on the encapsulation nature of modularization and investigates the possibility of changing only a few modularized neurons and layers in model maintenance tasks.

- The proposed method aims to provide a solution for restructuring a model while preserving its behaviors or improving its performance with minimal modifications.