What are Containers?

- Containers are a lightweight form of virtualization that allows you to run applications in isolated environments.

- They are like small, self-contained packages that hold everything an application needs to run: the code, libraries, and system tools.

- They package an application and its dependencies together into a single unit that can run consistently across different computing environments.

- Think of them as a "to-go" box for software, making sure it runs the same no matter where it is.

- Unlike traditional virtual machines (VMs), containers share the host system's operating system (OS) kernel but have their own isolated user space.

Key Features

Isolation

- Containers provide process and file system isolation, ensuring that applications running in different containers do not interfere with each other.

- Imagine you have two apps that need different versions of the same library. Containers make sure each app has what it needs without conflict.

Consistency

- Containers ensure that an application runs the same way regardless of where it is deployed, be it a developer's laptop, a test environment, or production.

- No more "it works on my machine" problems.

Lightweight

- Containers share the host operating system's kernel, which makes them faster to start and lighter on resources than VMs, each of which needs its own full OS.

Portability

- Containers encapsulate all dependencies of an application, making it portable across different environments and infrastructure.

- Containers can run on any machine with a compatible container runtime, without changing the code.

Container runtime engine

- A container runtime engine is the software that executes and manages the lifecycle of containers.

- It is responsible for running containerized applications, managing container images, and providing the necessary isolation between containers. Essentially, it's the core software that makes containers work.

Cluster Architecture

A cluster consists of nodes, which can be physical or virtual and can run on premises or in the cloud. Nodes are the machines within the cluster that provide computing resources, and they host applications in the form of containers.


Worker nodes

- host applications as containers

- ex. ships that can load containers


Master node

- manage, plan, schedule, monitor nodes

- the master node manages the worker nodes using the control plane components


Control plane components

etcd

- highly available key-value store (a database that stores data in key-value format)

- stores information about the cluster

kube-scheduler

- schedules applications or containers on nodes

- identifies the right node to place a container on, based on the container's resource requirements, the worker nodes' capacity, or any other policies/constraints (taints, tolerations, node affinity rules)

- ex. crane

node controller

- takes care of nodes

- responsible for onboarding new nodes to the cluster, handling situations where nodes become unavailable or get destroyed

replication controller

- ensures the desired number of containers are running at all times in a replication group

Kube API server

- primary management component of Kubernetes

- responsible for orchestrating all operations within the cluster

- exposes the Kubernetes API, which is used:

  - by external users to perform management operations on the cluster

  - by various controllers to monitor the state of the cluster and make necessary changes

  - by worker nodes to communicate with the server

container runtime engine

- ex. docker

kubelet

- an agent that runs on each node in a cluster

- ex. ship captain

- listens for instructions from the Kube API server and deploys/destroys containers on the nodes as required

- the kubelet periodically reports node and container status back to the Kube API server, which uses these reports to monitor the node

Kube Proxy Service

- allows applications running on worker nodes to communicate with each other

- ensures the necessary rules are in place on worker nodes so that containers running on them can reach each other.

Docker vs ContainerD

| | ctr | nerdctl | crictl |
|---|---|---|---|
| purpose | debugging | general purpose | debugging |
| community | ContainerD | ContainerD | Kubernetes |
| works with | ContainerD | ContainerD | All CRI-compatible runtimes |

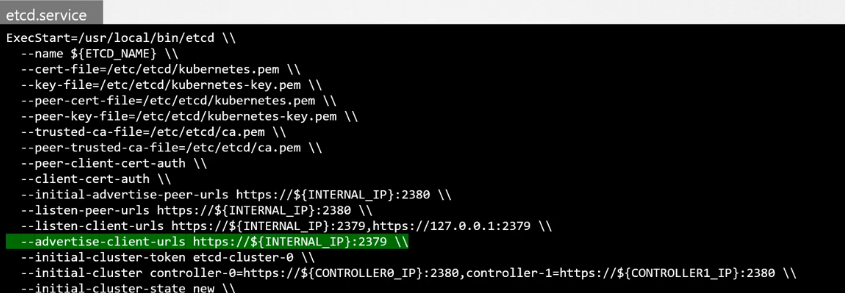

ETCD

ETCD is a distributed, reliable key-value store that is simple, secure, and fast.

- it stores information regarding the cluster

- ex. nodes, pods, secrets, accounts, roles, role bindings, etc.

- all the information you see when you run the `kubectl get` command comes from the ETCD server

- every change you make to your cluster is updated in the ETCD server. Only once it's updated in the ETCD server is the change considered complete.

- ex. add additional nodes, deploying pods or replica sets

Types of ETCD Deployment

ETCD can be deployed in 2 ways.

Deployed from scratch

download the etcd binaries manually

this is the URL that etcd listens on

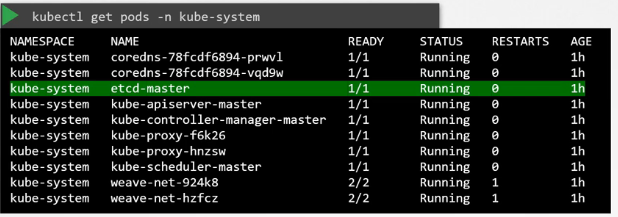

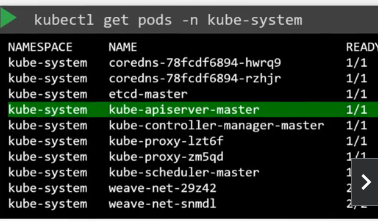

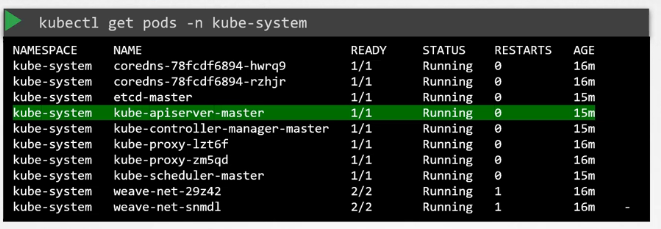

Deployed using kubeadm

Kubeadm deploys the etcd server for you as a pod in the kube-system namespace. You can explore the etcd database using the etcdctl utility within this pod.

To list all keys stored by Kubernetes, run the etcdctl get command from within this pod.
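To build intuition for what a key listing returns, here is a toy in-memory key-value store with prefix listing. It is a teaching model, not how etcd works internally. It assumes that Kubernetes keeps its objects under keys starting with `/registry/` (the kube-apiserver's default prefix). The class name and the specific keys are hypothetical examples.

```python
from __future__ import annotations


class ToyKeyValueStore:
    """Hypothetical in-memory key-value store; a teaching model, not etcd."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        # In the notes' terms, a cluster change is only complete once stored.
        self._data[key] = value

    def get(self, key: str) -> str | None:
        # Returns None when the key does not exist.
        return self._data.get(key)

    def keys_with_prefix(self, prefix: str) -> list[str]:
        # Like a prefix query that prints keys only: every key starting
        # with the prefix, in sorted (lexicographic) order.
        return sorted(k for k in self._data if k.startswith(prefix))


store = ToyKeyValueStore()
# Hypothetical keys shaped like /registry/<resource>/<namespace>/<name>.
store.put("/registry/pods/default/nginx", "<pod object>")
store.put("/registry/deployments/default/web", "<deployment object>")
store.put("/registry/secrets/default/db-password", "<secret object>")

print(store.keys_with_prefix("/registry/"))
print(store.keys_with_prefix("/registry/pods/"))
```

Narrowing the prefix (for example to `/registry/pods/`) narrows the listing to one kind of object. This is the practical reason a hierarchical key layout is convenient.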

Kube API server

- primary management component in Kubernetes

- responsible for orchestrating all operations within the cluster

- exposes the Kubernetes API, which is used:

  - by external users to perform management operations on the cluster

  - by various controllers to monitor the state of the cluster and make necessary changes

  - by worker nodes to communicate with the server

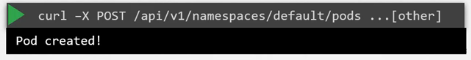

How it works

You either

- run a kubectl command

- or send a direct request to the server

When you run a kubectl command, the kubectl utility is in fact reaching the kube-api server. It's sending a request to the server. Users can directly send requests to the server too. So, how does the Kube API server work when it receives a request?

- Authenticate user

- Validate request

- Retrieve data

- Update ETCD

- Scheduler

Example: send a POST request to create a pod

1. The server authenticates the user.

2. The server validates the request. In this case, the API server creates a pod object without assigning it to a node.

3. The API server updates the information in the etcd server.

4. The API server informs the user that the pod has been created.

5. The scheduler, which keeps watching the API server, notices the new pod with no node assigned, identifies the right node to place it on, and communicates that back to the kube-api server.

6. The API server updates the information in the etcd cluster and passes it to the kubelet on the appropriate worker node.

7. The kubelet creates the pod on the node and instructs the container runtime engine to deploy the application image.

8. Once done, the kubelet sends the status back to the API server, and the API server updates the data in the etcd cluster.

A similar pattern is followed every time a change is requested.

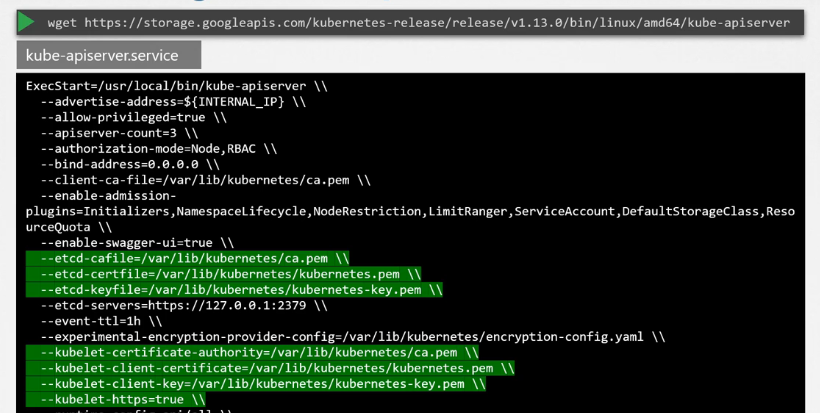
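The pod-creation flow above can be sketched as a toy simulation. It assumes a plain dict standing in for etcd, a set of hypothetical tokens standing in for authentication, and a placeholder "fewest pods" scheduling policy. All class and function names are made up for illustration, and none of this is the real kube-apiserver code. The point it shows is that the scheduler and kubelet never touch "etcd" directly. Every read and write goes through the API server object.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Pod:
    name: str
    image: str
    node: str | None = None  # no node until the scheduler assigns one
    status: str = "Pending"


class ToyApiServer:
    """Hypothetical API server; the only component that touches 'etcd'."""

    def __init__(self, valid_tokens: set[str]) -> None:
        self.valid_tokens = valid_tokens
        self.etcd: dict[str, Pod] = {}  # a plain dict stands in for etcd

    def create_pod(self, token: str, name: str, image: str) -> str:
        if token not in self.valid_tokens:   # 1. authenticate the user
            raise PermissionError("authentication failed")
        if not name or not image:            # 2. validate the request
            raise ValueError("a pod needs a name and an image")
        self.etcd[name] = Pod(name, image)   # 3. store the unscheduled pod
        return f"pod/{name} created"         # 4. inform the user

    def unscheduled(self) -> list[Pod]:
        return [p for p in self.etcd.values() if p.node is None]

    def pods_on(self, node: str) -> list[Pod]:
        return [p for p in self.etcd.values() if p.node == node]

    def bind(self, name: str, node: str) -> None:
        self.etcd[name].node = node          # save the scheduler's decision

    def report_status(self, name: str, status: str) -> None:
        self.etcd[name].status = status      # save the kubelet's report


def run_scheduler(api: ToyApiServer, nodes: list[str]) -> None:
    # Placeholder policy (an assumption): pick the node with the fewest pods.
    for pod in api.unscheduled():
        target = min(nodes, key=lambda n: len(api.pods_on(n)))
        api.bind(pod.name, target)


def run_kubelet(api: ToyApiServer, node: str) -> None:
    for pod in api.pods_on(node):
        if pod.status == "Pending":
            # A real kubelet would have the container runtime pull and run
            # pod.image; this sketch only records the result.
            api.report_status(pod.name, "Running")


api = ToyApiServer(valid_tokens={"admin-token"})
print(api.create_pod("admin-token", "nginx", "nginx"))
run_scheduler(api, ["worker-1", "worker-2"])
for node in ["worker-1", "worker-2"]:
    run_kubelet(api, node)
print(api.etcd["nginx"])
```

Keeping the scheduler and kubelet as separate functions that only call the API server mirrors the notes' description: each component reacts to state stored through the API server rather than calling the others directly.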

Parameters of kube-api server

- The kube-apiserver is run with a lot of parameters.

- These parameters define different modes of authentication, authorization, encryption and security.

- A lot of them are certificates that are used to secure the connectivity between different components.

- `--etcd-servers=https://127.0.0.1:2379`: this option specifies the location of the etcd servers

- this is how the kube-api server connects to the etcd servers
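These options usually show up as one long command line, whether in a pod definition, a service file, or a process listing. A small helper that pulls `--name=value` pairs into a dict makes a specific option such as `--etcd-servers` easy to find. This is a minimal sketch. The function name is hypothetical, and it assumes the `--name=value` form only. A bare `--name` is recorded as `"true"`, and the space-separated `--name value` form is not handled. Apart from `--etcd-servers`, the flags in the sample command line are illustrative only.

```python
from __future__ import annotations

import shlex


def parse_flags(command_line: str) -> dict[str, str]:
    """Collect --name=value options from a command line into a dict.

    Simplifications (assumptions): a bare --name is recorded as "true",
    and the space-separated form "--name value" is not handled.
    """
    flags: dict[str, str] = {}
    for token in shlex.split(command_line):
        if not token.startswith("--"):
            continue  # skip the binary name and any positional arguments
        name, sep, value = token[2:].partition("=")
        flags[name] = value if sep else "true"
    return flags


# Illustrative command line; only --etcd-servers is taken from these notes.
cmd = (
    "kube-apiserver --etcd-servers=https://127.0.0.1:2379 "
    "--authorization-mode=Node,RBAC --allow-privileged=true"
)
flags = parse_flags(cmd)
print(flags["etcd-servers"])  # the etcd location the API server connects to
```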

How to view parameters of kube-api server

if the cluster was deployed with the kubeadm tool

- kubeadm deploys the kube-apiserver as a pod in the kube-system namespace on the master node.

- You can see the options in the pod definition file, located in the /etc/kubernetes/manifests folder.

in a non-kubeadm setup

- you can inspect the options by viewing the kube-apiserver service located at /etc/systemd/system/kube-apiserver.service.

Kube Controller Manager

- Kube controller manager is a process that continuously monitors the state of various components within the system and works towards bringing the whole system to the desired functioning state

- kube controller manager does this through the kube api server.

- acts as the "brain" of Kubernetes

ex. node controller

- responsible for monitoring the status of the nodes and taking the necessary actions to keep the applications running

- node monitor period: checks node health every 5 seconds

- node monitor grace period: waits 40 seconds before marking a node unreachable

- pod eviction timeout: once a node is marked unreachable, it is given 5 minutes to come back up. If it doesn't, the controller removes the pods assigned to that node and provisions them on the healthy nodes, if the pods are part of a replica set.
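The three timings above combine as follows, as a simplified model. The sketch assumes a node's last heartbeat was at t = 0 and uses the 5 s / 40 s / 5 min values from these notes as fixed constants, though a real cluster can be configured differently. The function names are hypothetical. Because health is only checked every 5 seconds, each state change is noticed at the first check after its threshold.

```python
from __future__ import annotations

# Values taken from these notes; treat them as assumed defaults.
NODE_MONITOR_PERIOD_S = 5         # how often node health is checked
NODE_MONITOR_GRACE_PERIOD_S = 40  # silence tolerated before "unreachable"
POD_EVICTION_TIMEOUT_S = 300      # extra time before pods are moved away


def node_state(seconds_since_last_heartbeat: float) -> str:
    """Classify a node by how long it has been silent (simplified model)."""
    if seconds_since_last_heartbeat <= NODE_MONITOR_GRACE_PERIOD_S:
        return "Ready"
    if seconds_since_last_heartbeat <= (
        NODE_MONITOR_GRACE_PERIOD_S + POD_EVICTION_TIMEOUT_S
    ):
        return "Unreachable"
    # Pods that belong to a replica set get recreated on healthy nodes.
    return "EvictPods"


def first_check_seeing(state: str, horizon_s: int = 600) -> int | None:
    """Time of the first periodic health check that observes `state`."""
    for t in range(0, horizon_s + 1, NODE_MONITOR_PERIOD_S):
        if node_state(t) == state:
            return t
    return None


print(first_check_seeing("Unreachable"), first_check_seeing("EvictPods"))  # 45 345
```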

ex. replication controller

- responsible for monitoring the status of replica sets and ensuring that the desired number of pods are available at all times within the set

- if pod dies, it creates another one

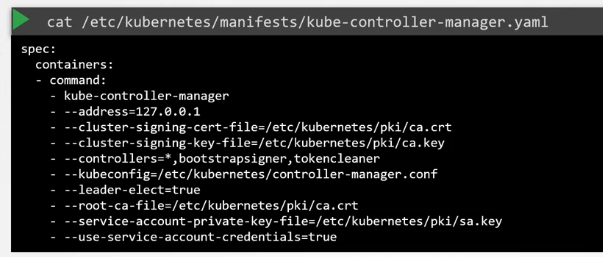
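The replication controller is a control loop. It compares the desired number of pods with what is actually running and acts on the difference. Below is one pass of such a loop as a toy function. It is an assumption-level sketch: the function name and the `<prefix>-new-<n>` naming scheme are hypothetical, and a real controller runs continuously, watching state through the API server.

```python
from __future__ import annotations


def reconcile(desired: int, running: list[str], prefix: str = "pod") -> list[str]:
    """One pass of a toy control loop: make the pod list match `desired`.

    Names for new pods ("<prefix>-new-<n>") are a hypothetical scheme.
    """
    if desired < 0:
        raise ValueError("desired replica count cannot be negative")
    pods = list(running)
    n = 0
    while len(pods) < desired:          # too few: create replacements
        n += 1
        name = f"{prefix}-new-{n}"
        if name not in pods:            # skip names already in use
            pods.append(name)
    del pods[desired:]                  # too many: delete the extras
    return pods


# Three replicas are desired; "web-b" has just died.
print(reconcile(3, ["web-a", "web-c"], prefix="web"))
```

Running the same pass again on its own output changes nothing. That is the property that lets such a loop run every few seconds safely.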

How to view & install Kubernetes controller manager

- controllers are all packaged into a single process known as the Kubernetes Controller Manager.

- When you install the Kubernetes controller manager the different controllers get installed as well.

Installation

download the kube-controller-manager binary from the Kubernetes release page, extract it, and run it as a service.

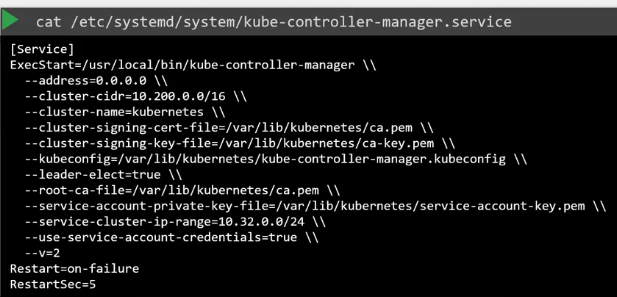

View kube-controller-manager options

if set up with the kubeadm tool

- kubeadm deploys the kube-controller-manager as a pod in the kube-system namespace on the master node.

- you can view the options in the pod definition file located in the /etc/kubernetes/manifests folder.

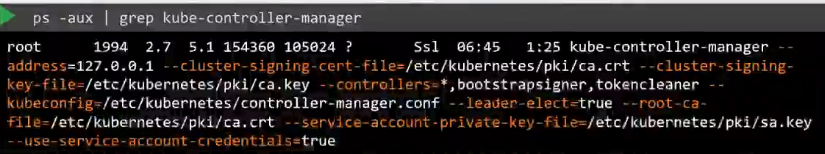

non kubeadm setup

- you can inspect the options by viewing the kube-controller-manager service located in the services directory.

- you can also see the running process and its effective options by listing the processes on the master node and searching for kube-controller-manager.

Kube scheduler

- schedules applications or containers on nodes

- identifies the right node to place a container on, based on the container's resource requirements, the worker nodes' capacity, or any other policies/constraints (taints, tolerations, node affinity rules)

- decides which pod goes on which node

- does not place the pod on the node itself (that is the job of the kubelet)

Why it is needed

- In Kubernetes, the scheduler decides which nodes the pods are placed on depending on certain criteria.

- when there are many ships and many containers, you want to make sure that the right container ends up on the right ship.

- ex. You may have pods with different resource requirements.

- ex. You can have nodes in the cluster dedicated to certain applications.

How the scheduler assigns pods

The scheduler looks at each pod and tries to find the best node for it.

1. Filter nodes

   - filter out nodes that don't fit the profile for this pod

   - ex. nodes that do not have the CPU and memory resources requested by the pod

2. Rank nodes

   - rank the remaining nodes to identify the best fit for the pod

   - uses a priority function to assign each node a score from 0 to 10

   - ex. the scheduler calculates the amount of resources that would be free on the nodes after placing the pod on them.

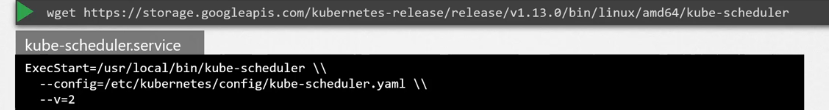
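The two-step filter-then-rank process can be sketched in a few lines. This is a toy model, not kube-scheduler's code. The filter checks CPU and memory. The score is an assumed priority function: the share of the node's CPU left free after placing the pod, scaled to 0 to 10. The node names and sizes are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu_capacity: float  # total CPU cores on the node
    free_cpu: float      # CPU cores not yet requested by other pods
    free_mem_gib: float  # memory not yet requested by other pods


@dataclass
class PodRequest:
    name: str
    cpu: float
    mem_gib: float


def fits(node: Node, pod: PodRequest) -> bool:
    # Step 1 (filter): the node must have enough free CPU and memory.
    return node.free_cpu >= pod.cpu and node.free_mem_gib >= pod.mem_gib


def score(node: Node, pod: PodRequest) -> float:
    # Step 2 (rank), assumed priority function: the share of the node's CPU
    # that would still be free after placing the pod, scaled to 0-10.
    return 10 * (node.free_cpu - pod.cpu) / node.cpu_capacity


def schedule(pod: PodRequest, nodes: list[Node]) -> str | None:
    candidates = [n for n in nodes if fits(n, pod)]
    if not candidates:
        return None  # nothing fits: the pod would stay Pending
    return max(candidates, key=lambda n: score(n, pod)).name


nodes = [
    Node("node-1", cpu_capacity=4, free_cpu=4, free_mem_gib=8),
    Node("node-2", cpu_capacity=4, free_cpu=4, free_mem_gib=8),
    Node("node-3", cpu_capacity=12, free_cpu=12, free_mem_gib=32),
    Node("node-4", cpu_capacity=16, free_cpu=16, free_mem_gib=32),
]
pod = PodRequest("big-app", cpu=10, mem_gib=4)
print(schedule(pod, nodes))  # node-4
```

Here node-1 and node-2 are filtered out. Between the two remaining nodes, node-4 wins because it would keep 6 of its 16 CPUs free (score 3.75), versus 2 of 12 on node-3 (score about 1.67).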

How to install Kube scheduler

- download the kube-scheduler binary from the Kubernetes release page

- When you run it as a service, you specify the scheduler configuration file.

View kube scheduler server options

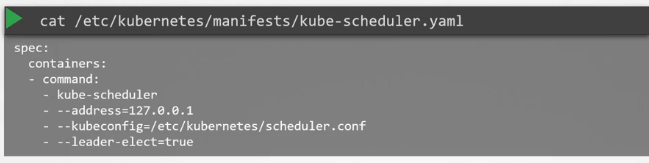

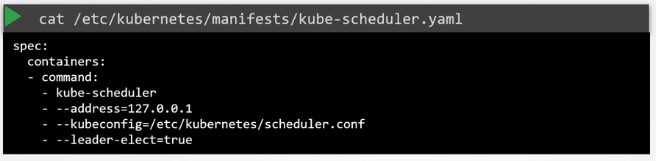

if set up with the kubeadm tool

- kubeadm deploys the kube-scheduler as a pod in the kube-system namespace on the master node.

- you can view the options in the pod definition file located in the /etc/kubernetes/manifests folder.

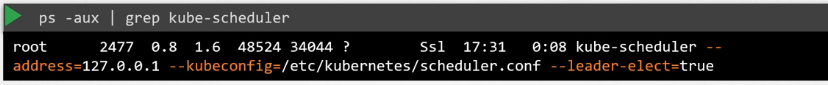

non kubeadm setup

- you can see the running process and its effective options by listing the processes on the master node and searching for kube-scheduler.

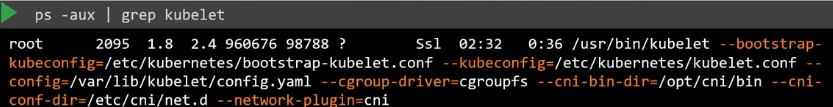

Kubelet

- an agent that runs on each node in a cluster

- listens for instructions from the Kube API server and deploys/destroys containers on the nodes as required

- When it receives instructions to load a container or a pod on the node, it requests the container runtime engine, which may be Docker, to pull the required image and run an instance.

- the kubelet periodically reports node and container status back to the Kube API server, which uses these reports to monitor the node

- ex. ship captain: responsible for doing all the paperwork necessary to become part of the cluster.
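The kubelet's job on a node can be sketched as a sync step. The step takes the pods assigned to this node and has the runtime pull each missing image and run it. It removes anything no longer assigned and returns a status report for the API server. This is a hypothetical model. `FakeRuntime` stands in for Docker or another runtime, its `pull`/`run`/`remove` methods are made up, and each pod is simplified to a single container.

```python
from __future__ import annotations


class FakeRuntime:
    """Hypothetical stand-in for a container runtime such as Docker."""

    def __init__(self) -> None:
        self.images: set[str] = set()
        self.containers: dict[str, str] = {}  # container name -> image

    def pull(self, image: str) -> None:
        self.images.add(image)

    def run(self, name: str, image: str) -> None:
        if image not in self.images:
            raise RuntimeError(f"image {image!r} has not been pulled")
        self.containers[name] = image

    def remove(self, name: str) -> None:
        del self.containers[name]


class ToyKubelet:
    def __init__(self, node: str, runtime: FakeRuntime) -> None:
        self.node = node
        self.runtime = runtime

    def sync(self, assigned: dict[str, str]) -> dict[str, str]:
        """Match the runtime to the pods assigned to this node (name -> image)
        and return a status report for the API server."""
        for name, image in assigned.items():
            if name not in self.runtime.containers:
                self.runtime.pull(image)        # pull the required image
                self.runtime.run(name, image)   # then run an instance
        for name in list(self.runtime.containers):
            if name not in assigned:
                self.runtime.remove(name)       # destroy what is no longer wanted
        return {name: "Running" for name in self.runtime.containers}


kubelet = ToyKubelet("worker-1", FakeRuntime())
print(kubelet.sync({"nginx": "nginx", "redis": "redis"}))
print(kubelet.sync({"nginx": "nginx"}))
```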

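How to install kubelet

- even if you use the kubeadm tool to deploy your cluster, kubeadm does not deploy the kubelet for you; you must install the kubelet manually on each worker node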

Kube proxy

- allows applications running on worker nodes to communicate with each other

- ensures the necessary rules are in place on worker nodes so that containers running on them can reach each other.

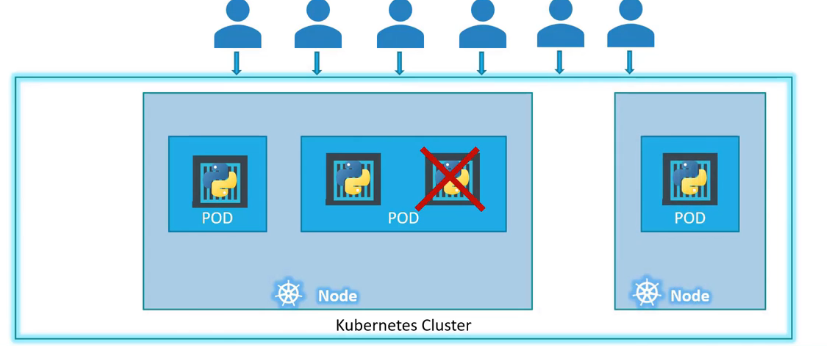

POD

- Kubernetes doesn't deploy containers directly on the worker nodes.

- the containers are encapsulated into a Kubernetes object known as pods

- a pod is:

  - a single instance of an application

  - the smallest object that you can create in Kubernetes

  - a logical unit of deployment that runs one or more containers

- when you need additional instances of the application, Kubernetes creates a new pod altogether with a new instance of the same application

- these new pods can be added to an existing node or be put in a new node to expand the cluster's physical capacity.

- number of containers per pod

  - usually: containers and pods have a one-to-one relationship

  - exception: a single pod can have multiple containers

    - usually not multiple containers of the same kind

    - the additional container is a helper container that might be doing some kind of supporting task for the main application and needs to live alongside the application container

    - ex. processing data entered by the user, processing a file uploaded by the user, etc.

    - Kubernetes deploys both the application and helper containers in the same pod so that they share the same storage and network namespace and are created/destroyed together.

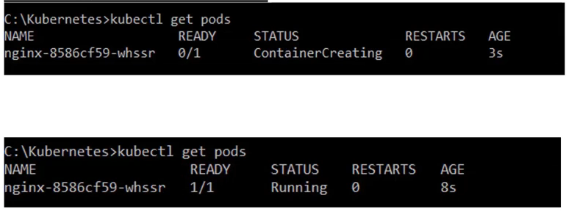

How to deploy a POD

- `kubectl run {POD_NAME} --image {IMAGE_NAME}`: creates a pod and deploys an instance of the given application image (e.g. the NGINX Docker image) in it

  - you need to specify the image name using the --image parameter.

  - the application image is downloaded from the Docker Hub repository

Docker Hub repository

a public repository where the latest Docker images of various applications are stored

- `kubectl get pods`: lets us see the list of pods available
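To see what a command like `kubectl run nginx --image nginx` asks Kubernetes to create, here is a minimal Pod object built as a Python dict and printed as JSON. This is a simplified sketch. A real pod created this way carries more fields (labels, defaults filled in by the cluster) that are omitted here, and the helper name `pod_manifest` is hypothetical.

```python
import json


def pod_manifest(pod_name: str, image_name: str) -> dict:
    """Minimal Pod object, roughly what `kubectl run POD --image IMAGE` requests.

    Real pods carry more fields (labels, defaults) that this sketch omits.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name},
        "spec": {
            # One container, named after the pod, running the given image.
            # An image name without a registry is pulled from Docker Hub.
            "containers": [{"name": pod_name, "image": image_name}],
        },
    }


print(json.dumps(pod_manifest("nginx", "nginx"), indent=2))
```

The single entry under `containers` reflects the usual one-container-per-pod case described above. A helper container would be a second entry in the same list.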