Installing Kubernetes

Installing NFS

On the control plane node:

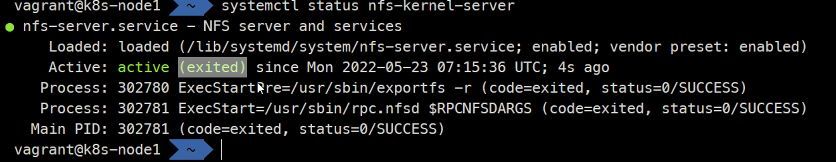

```bash
sudo apt install nfs-kernel-server -y  # install the NFS server
sudo mkdir /nfsvolume  # create the directory to export over NFS
echo "<h1> Hello NFS Volume </h1>" | sudo tee /nfsvolume/index.html  # create a test HTML file
sudo chown -R www-data:www-data /nfsvolume  # change the owner and group of the NFS directory
```
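You may need to repeat the server setup, for example after rebuilding the VM. In that case, the directory and test-page steps above can be wrapped in a re-runnable function. This is a minimal sketch, and the function name `prepare_export_dir` and its arguments are hypothetical. It assumes it runs as root (e.g. in a `sudo bash` shell) when pointed at `/nfsvolume`, and it leaves package installation to `apt`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prepare a directory for export over NFS.
# Usage: prepare_export_dir DIR [OWNER:GROUP]
# Assumes root privileges when DIR is a system path such as /nfsvolume.
prepare_export_dir() {
  local dir="$1" owner="${2:-}"
  # -p: do not fail if the directory already exists
  mkdir -p "$dir" || return 1
  # Write the test page only once, so re-running does not overwrite later edits
  if [ ! -f "$dir/index.html" ]; then
    echo "<h1> Hello NFS Volume </h1>" > "$dir/index.html" || return 1
  fi
  # Change ownership only when an owner is given (e.g. www-data:www-data)
  if [ -n "$owner" ]; then
    chown -R "$owner" "$dir" || return 1
  fi
}
```

In a root shell, `prepare_export_dir /nfsvolume www-data:www-data` does the same as the `mkdir`, `tee` and `chown` lines, but it can safely be run twice.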

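Edit `/etc/exports` and add the following line:

```bash
sudo vi /etc/exports
```

```
/nfsvolume 192.168.100.0/24(rw,sync,no_subtree_check,no_root_squash)
```

Install the package needed by NFS clients (`nfs-common`) on the other nodes as well. Then restart the NFS server and check its status:

```bash
ansible all -i ~/kubespray/inventory/mycluster/inventory.ini -m apt -a 'name=nfs-common' -b
sudo systemctl restart nfs-kernel-server
systemctl status nfs-kernel-server
```

It is normal for the status to show `exited` (`active (exited)`).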
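The export options in that line work as follows:

- `rw` gives the 192.168.100.0/24 subnet read-write access.
- `sync` makes the server reply only after changes are committed to stable storage.
- `no_subtree_check` disables subtree checking.
- `no_root_squash` keeps a client's root user as root instead of mapping it to an anonymous user.

Instead of editing the file in `vi`, you can append the line only when it is missing. Below is a minimal sketch of that. The function `add_export` is hypothetical, and it takes the file path as an argument so it can be tried on a scratch file first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an export entry unless the exact line is already there.
# Usage: add_export EXPORTS_FILE ENTRY   (root is needed when EXPORTS_FILE is /etc/exports)
add_export() {
  local exports_file="$1" entry="$2"
  touch "$exports_file" || return 1
  # -q: quiet, -x: whole-line match, -F: fixed string (the entry contains '(' and '.')
  if ! grep -qxF -- "$entry" "$exports_file"; then
    printf '%s\n' "$entry" >> "$exports_file"
  fi
}
```

After adding the entry to `/etc/exports` as root, `sudo exportfs -ra` re-exports everything listed in the file. Restarting `nfs-kernel-server` as above also picks up the change.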

Writing the YAML files

```bash
cd ~
mkdir nfs
cd nfs
```

```bash
vi mypv.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: mypv
spec:
  accessModes:
    - ReadWriteMany
  capacity:
    storage: 1G
  persistentVolumeReclaimPolicy: Retain
  nfs:
    path: /nfsvolume
    server: 192.168.100.100
```

```bash
vi mypvc.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1G
  storageClassName: '' # For Static Provisioning
  volumeName: mypv
```

```bash
vi myweb-rs.yaml
```

```yaml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myweb-rs
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: myweb
          image: httpd
          volumeMounts:
            - name: myvol
              mountPath: /usr/local/apache2/htdocs
      volumes:
        - name: myvol
          persistentVolumeClaim:
            claimName: mypvc
```

Creating and verifying the resources
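`kubectl create -f .` creates every `.yaml`, `.yml` and `.json` file in the current directory, so a leftover manifest in `~/nfs` would be created as well. Below is a minimal pre-flight sketch. It confirms that the three manifests written above are present and warns about any extra manifest files. The function name `check_manifests` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check before `kubectl create -f DIR`.
# Returns non-zero if an expected manifest is missing; extra manifests only produce a warning.
check_manifests() {
  local dir="${1:-.}" f missing=0
  for f in mypv.yaml mypvc.yaml myweb-rs.yaml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f" >&2
      missing=1
    fi
  done
  # kubectl reads .yaml, .yml and .json files in the directory (non-recursively)
  for f in "$dir"/*.yaml "$dir"/*.yml "$dir"/*.json; do
    [ -f "$f" ] || continue   # skip glob patterns that matched nothing
    case "$(basename "$f")" in
      mypv.yaml|mypvc.yaml|myweb-rs.yaml) ;;
      *) echo "warning: $(basename "$f") will also be created" >&2 ;;
    esac
  done
  return "$missing"
}
```

From `~/nfs`, this would be used as `check_manifests . && kubectl create -f .`.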

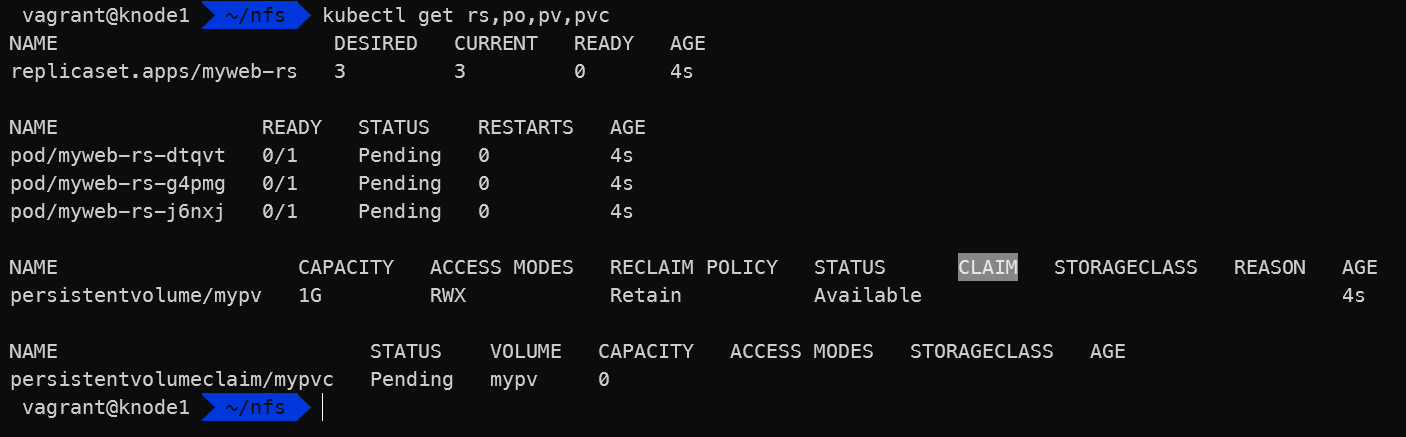

```bash
kubectl create -f .
```

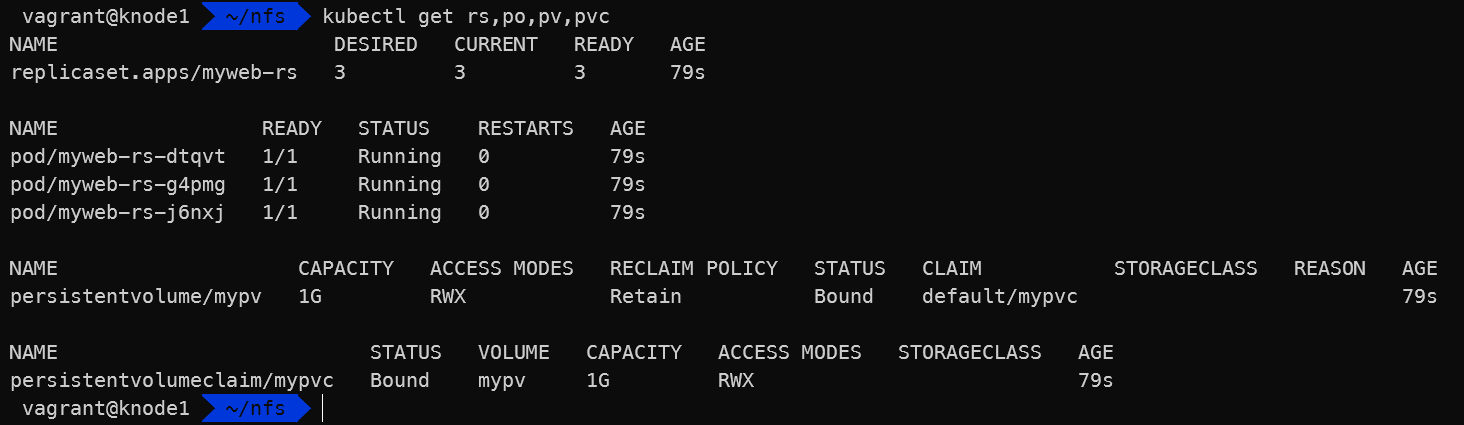

```bash
kubectl get rs,po,pv,pvc
```

The PV's CLAIM column shows that the PVC is now attached to it, and the PVC's status has changed to Bound.
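These checks can also be scripted, for example in a provisioning script that must wait until the claim is bound. Below is a minimal sketch with two hypothetical functions:

- `wait_for_bound` polls the PVC phase with `kubectl get pvc NAME -o jsonpath='{.status.phase}'`.
- `check_pods_serve` runs `cat` in every pod labeled `app=web` to confirm that all replicas see the same `index.html` through the NFS-backed volume.

The `KUBECTL` environment variable is an assumption added only so the functions can be tried with a stand-in command. It defaults to `kubectl`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for verifying the NFS-backed ReplicaSet.
# KUBECTL may point at another kubectl binary; it defaults to `kubectl` on PATH.

# Poll the PVC phase until it is Bound.
# Usage: wait_for_bound PVC [TRIES] [INTERVAL_SECONDS]
wait_for_bound() {
  local pvc="$1" tries="${2:-30}" interval="${3:-2}" phase="" i=1
  while [ "$i" -le "$tries" ]; do
    phase=$("${KUBECTL:-kubectl}" get pvc "$pvc" -o jsonpath='{.status.phase}')
    if [ "$phase" = "Bound" ]; then
      echo "$pvc is Bound"
      return 0
    fi
    # Skip the sleep after the last attempt
    if [ "$i" -lt "$tries" ]; then
      sleep "$interval"
    fi
    i=$((i + 1))
  done
  echo "$pvc is still '$phase' after $tries attempts" >&2
  return 1
}

# Read index.html from every pod labeled app=web and compare it with EXPECTED.
# Usage: check_pods_serve EXPECTED
check_pods_serve() {
  local expected="$1" pods pod body
  pods=$("${KUBECTL:-kubectl}" get po -l app=web -o name) || return 1
  if [ -z "$pods" ]; then
    echo "no pods with label app=web" >&2
    return 1
  fi
  for pod in $pods; do
    body=$("${KUBECTL:-kubectl}" exec "$pod" -- cat /usr/local/apache2/htdocs/index.html) || return 1
    if [ "$body" != "$expected" ]; then
      echo "$pod: unexpected content: $body" >&2
      return 1
    fi
    echo "$pod: OK"
  done
}
```

For example: `wait_for_bound mypvc && check_pods_serve '<h1> Hello NFS Volume </h1>'`. Newer kubectl versions can do the polling part alone with `kubectl wait --for=jsonpath='{.status.phase}'=Bound pvc/mypvc --timeout=60s`.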

Log in to another node and mount the NFS share

```bash
sudo mount -t nfs 192.168.100.100:/nfsvolume /mnt
```

```
vagrant@knode2:~$ sudo mount -t nfs 192.168.100.100:/nfsvolume /mnt
vagrant@knode2:~$ ls /
bin etc lib32 lost+found opt run srv usr
boot home lib64 media proc sbin sys vagrant
dev lib libx32 mnt root snap tmp var
vagrant@knode2:~$ ls /mnt
index.html
vagrant@knode2:~$ cat /mnt/index.html
<h1> Hello NFS Volume </h1>
vagrant@knode2:~$
```

Deleting the resources

On the control plane node:

```bash
kubectl delete -f .
```
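Deleting the PV and PVC objects does not remove the files on the NFS server, so `/nfsvolume/index.html` stays on the control plane. The test mount made on the other node is also unaffected and can be removed there. Below is a minimal sketch of that node-side cleanup. The function name is hypothetical, and it assumes `mountpoint` (from util-linux) is available and that it runs as root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: unmount the test NFS mount only if it is actually mounted.
# Usage: cleanup_test_mount [MOUNTPOINT]   (defaults to /mnt; needs root to unmount)
cleanup_test_mount() {
  local mnt="${1:-/mnt}"
  # mountpoint -q exits 0 only when the directory is a mount point
  if mountpoint -q "$mnt"; then
    umount "$mnt" && echo "unmounted $mnt"
  else
    echo "$mnt is not mounted, nothing to do"
  fi
}
```

For a one-off cleanup on knode2, the equivalent command is simply `sudo umount /mnt`.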