Simple modeling

Cross validate models

가장 유명한 classifiers를 비교하여 정확성을 평가하고 cross validation procedure를 거칠 것입니다.

SVC

Decision Tree

AdaBoost

Random Forest

Extra Trees

Gradient Boosting

Multiple layer perceprton (neural network)

KNN

Logistic regression

Linear Discriminant Analysis

10 types Cross validation scores

#Cross validate model with Kfold stratified cross val

kfold = StratifiedKFold(n_splits=10)

#Modeling step Test differencts algorithms

# Modeling step Test differents algorithms

from sklearn.svm import SVC

from sklearn.tree import DecisionTreeClassifier

random_state = 2

classifiers = []

classifiers.append(SVC(random_state=random_state))

classifiers.append(DecisionTreeClassifier(random_state=random_state))

classifiers.append(AdaBoostClassifier(DecisionTreeClassifier(random_state=random_state),random_state=random_state,learning_rate=0.1))

classifiers.append(RandomForestClassifier(random_state=random_state))

classifiers.append(ExtraTreesClassifier(random_state=random_state))

classifiers.append(GradientBoostingClassifier(random_state=random_state))

classifiers.append(MLPClassifier(random_state=random_state))

classifiers.append(KNeighborsClassifier())

classifiers.append(LogisticRegression(random_state = random_state))

classifiers.append(LinearDiscriminantAnalysis())

cv_results = []

for classifier in classifiers :

cv_results.append(cross_val_score(classifier, X_train, y = Y_train, scoring = "accuracy", cv = kfold, n_jobs=4))

cv_means = []

cv_std = []

for cv_result in cv_results:

cv_means.append(cv_result.mean())

cv_std.append(cv_result.std())

cv_res = pd.DataFrame({"CrossValMeans":cv_means,"CrossValerrors": cv_std,"Algorithm":["SVC","DecisionTree","AdaBoost",

"RandomForest","ExtraTrees","GradientBoosting","MultipleLayerPerceptron","KNeighboors","LogisticRegression","LinearDiscriminantAnalysis"]})

g = sns.barplot("CrossValMeans","Algorithm",data = cv_res, palette="Set3",orient = "h",**{'xerr':cv_std})

g.set_xlabel("Mean Accuracy")

g = g.set_title("Cross validation scores")

Hyperparameter tunning for best models

그리디 기법 최적화

AdaBoost

### META MODELING WITH ADABOOST, RF, EXTRATREES and GRADIENTBOOSTING

#Adaboost

DTC = DecisionTreeClassifier()

adaDTC = AdaBoostClassifier(DTC, random_state=7)

ada_param_grid = {"base_estimator__criterion" : ["gin1","entropy"],"base_estimator__splitter" : ["best", "random"], "algorithm" : ["SAMME","SAMME.R"], "n_estimators" :[1,2], "learning_rate": [0.0001,0.001,0.01,0.1,0.2,0.3,1.5]}

gsadaDTC = GridSearchCV(adaDTC, param_grid = ada_param_grid, cv = kfold, scoring="accuracy", n_jobs = 4, verbose=1)

gsadaDTC.fit(X_train,Y_train)

ada_best = gsadaDTC.best_estimator_

gsadaDTC.best_score_ #0.8275536261491316ExtraTrees

#ExtraTrees

ExtC = ExtraTreesClassifier()

##Searchh grid for optimal parameters

ex_param_grid = {"max_depth": [None], "max_features":[1,3,10],"min_samples_split":[2,3,10],"min_samples_leaf":[1,3,10],"bootstrap":[False],"n_estimators":[100,300], "criterion":["gini"]}

gsExtC = GridSearchCV(ExtC, param_grid = ex_param_grid, cv=kfold, scoring="accuracy", n_jobs=4, verbose=1)

gsExtC.fit(X_train, Y_train)

ExtC_best = gsExtC.best_estimator_

#Best score

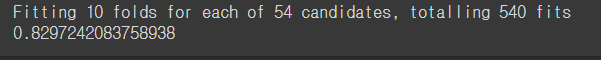

gsExtC.best_score_

RandomForest

# RFC Parameters tunning

RFC = RandomForestClassifier()

## Search grid for optimal parameters

rf_param_grid = {"max_depth": [None],

"max_features": [1, 3, 10],

"min_samples_split": [2, 3, 10],

"min_samples_leaf": [1, 3, 10],

"bootstrap": [False],

"n_estimators" :[100,300],

"criterion": ["gini"]}

gsRFC = GridSearchCV(RFC,param_grid = rf_param_grid, cv=kfold, scoring="accuracy", n_jobs= 4, verbose = 1)

gsRFC.fit(X_train,Y_train)

RFC_best = gsRFC.best_estimator_

# Best score

gsRFC.best_score_

GradientBoosting and SVC classifiers

#GradientBoostingClassifier()

GBC = GradientBoostingClassifier()

gb_param_grid = {'loss': ["deviance"], "n_estimators": [100,200,300], "learning_rate":[0.1,0.05,0.01],"max_depth": [4,8], "min_samples_leaf":[100,150],"max_features":[0.3,0.1]}

gsGBC = GridSearchCV(GBC, param_grid = gb_param_grid, cv=kfold, scoring="accuracy", n_jobs=4, verbose=1)

gsGBC.fit(X_train, Y_train)

GBC_best = gsGBC.best_estimator_

#Best score

gsGBC.best_score_

#SVC Classifier

SVMC = SVC(probability = True)

svc_param_grid = {'kernel' : ['rbf'], 'gamma': [0.001,0.01,0.1,1], 'C': [1,10,50,100,200,300,1000]}

gsSVMC = GridSearchCV(SVMC, param_grid = svc_param_grid, cv=kfold, scoring="accuracy", n_jobs = 4, verbose=1)

gsSVMC.fit(X_train, Y_train)

SVMC_best = gsSVMC.best_estimator_

#Best score

gsSVMC.best_score_

Plot learning curves

def plot_learning_curve(estimator, title, X, y, ylim=None, cv=None,

n_jobs=-1, train_sizes=np.linspace(.1, 1.0, 5)):

"""Generate a simple plot of the test and training learning curve"""

plt.figure()

plt.title(title)

if ylim is not None:

plt.ylim(*ylim)

plt.xlabel("Training examples")

plt.ylabel("Score")

train_sizes, train_scores, test_scores = learning_curve(

estimator, X, y, cv=cv, n_jobs=n_jobs, train_sizes=train_sizes)

train_scores_mean = np.mean(train_scores, axis=1)

train_scores_std = np.std(train_scores, axis=1)

test_scores_mean = np.mean(test_scores, axis=1)

test_scores_std = np.std(test_scores, axis=1)

plt.grid()

plt.fill_between(train_sizes, train_scores_mean - train_scores_std,

train_scores_mean + train_scores_std, alpha=0.1,

color="r")

plt.fill_between(train_sizes, test_scores_mean - test_scores_std,

test_scores_mean + test_scores_std, alpha=0.1, color="g")

plt.plot(train_sizes, train_scores_mean, 'o-', color="r",

label="Training score")

plt.plot(train_sizes, test_scores_mean, 'o-', color="g",

label="Cross-validation score")

plt.legend(loc="best")

return plt

g = plot_learning_curve(gsRFC.best_estimator_,"RF mearning curves",X_train,Y_train,cv=kfold)

g = plot_learning_curve(gsExtC.best_estimator_,"ExtraTrees learning curves",X_train,Y_train,cv=kfold)

g = plot_learning_curve(gsSVMC.best_estimator_,"SVC learning curves",X_train,Y_train,cv=kfold)

g = plot_learning_curve(gsadaDTC.best_estimator_,"AdaBoost learning curves",X_train,Y_train,cv=kfold)

g = plot_learning_curve(gsGBC.best_estimator_,"GradientBoosting learning curves",X_train,Y_train,cv=kfold)

Feature importance of tree based classifiers

nrows = ncols = 2

fig, axes = plt.subplots(nrows = nrows, ncols = ncols, sharex="all", figsize=(15,15))

names_classifiers = [("AdaBoosting", ada_best),("ExtraTrees",ExtC_best),("RandomForest",RFC_best),("GradientBoosting",GBC_best)]

nclassifier = 0

for row in range(nrows):

for col in range(ncols):

name = names_classifiers[nclassifier][0]

classifier = names_classifiers[nclassifier][1]

indices = np.argsort(classifier.feature_importances_)[::-1][:40]

g = sns.barplot(y=X_train.columns[indices][:40],x = classifier.feature_importances_[indices][:40] , orient='h',ax=axes[row][col])

g.set_xlabel("Relative importance",fontsize=12)

g.set_ylabel("Features",fontsize=12)

g.tick_params(labelsize=9)

g.set_title(name + " feature importance")

nclassifier += 1

According to the feature importance of this 4 classifiers, the prediction of the survival seems to be more associated with the Age, the Sex, the family size and the social standing of the passengers more than the location in the boat.

test_Survived_RFC = pd.Series(RFC_best.predict(test), name="RFC")

test_Survived_ExtC = pd.Series(ExtC_best.predict(test), name="ExtC")

test_Survived_SVMC = pd.Series(SVMC_best.predict(test), name="SVC")

test_Survived_AdaC = pd.Series(ada_best.predict(test), name="Ada")

test_Survived_GBC = pd.Series(GBC_best.predict(test), name="GBC")

# Concatenate all classifier results

ensemble_results = pd.concat([test_Survived_RFC,test_Survived_ExtC,test_Survived_AdaC,test_Survived_GBC, test_Survived_SVMC],axis=1)

g= sns.heatmap(ensemble_results.corr(),annot=True)

Ensemble modeling

votingC = VotingClassifier(estimators=[('rfc', RFC_best), ('extc', ExtC_best),

('svc', SVMC_best), ('adac',ada_best),('gbc',GBC_best)], voting='soft', n_jobs=4)

votingC = votingC.fit(X_train, Y_train)Prediction

test_Survived = pd.Series(votingC.predict(test), name="Survived")

results = pd.concat([IDtest, test_Survived], axis=1)

results.to_csv("ensemble_python_voting.csv", index=False)