Key points

- Tensor
- NumPy
- Tensor Manipulation
- Broadcasting

Learning

- Vector, Matrix and Tensor
- NumPy Review
- PyTorch Tensor Allocation
- Matrix Multiplication
- Other Basic Ops

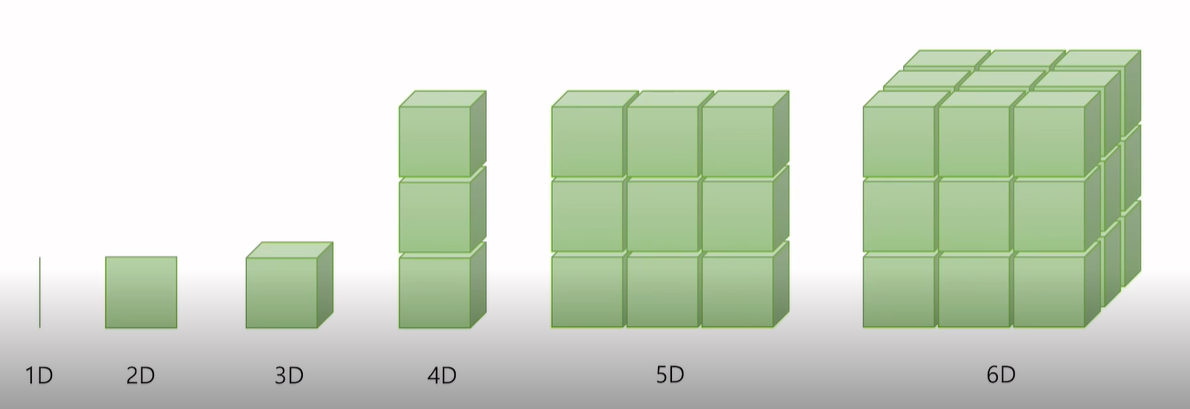

Vector, Matrix and Tensor

*Preview

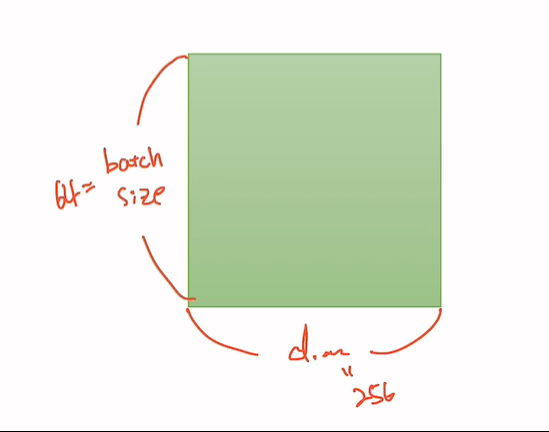

*2D Tensor(Typical Simple Setting)

- |t| = (batch size, dim)

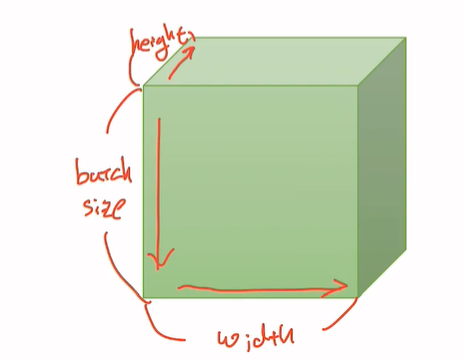

*3D Tensor(Typical Computer Vision)

- |t| = (batch size, width, height)

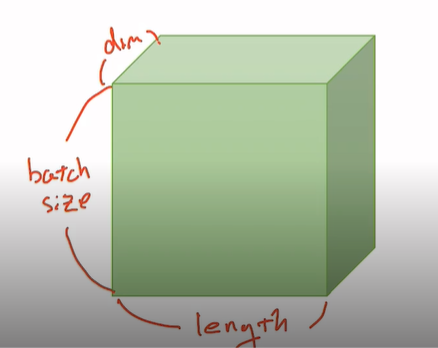

*3D Tensor(Typical NLP)

- |t| = (batch size, length, dim)
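The shape conventions above can be checked directly in PyTorch. The following is a minimal sketch; the concrete sizes (64, 256, 28, 20) are hypothetical values chosen only for illustration and do not come from the figures.

```python
import torch

# Hypothetical sizes, chosen only for illustration.
batch_size, dim = 64, 256
width, height = 28, 28
length = 20

t2d = torch.zeros(batch_size, dim)                # |t| = (batch size, dim)
t3d_cv = torch.zeros(batch_size, width, height)   # |t| = (batch size, width, height)
t3d_nlp = torch.zeros(batch_size, length, dim)    # |t| = (batch size, length, dim)

# Print the rank (number of dimensions) and shape of each tensor.
for name, t in [("2D", t2d), ("3D vision", t3d_cv), ("3D NLP", t3d_nlp)]:
    print(name, "rank:", t.dim(), "shape:", tuple(t.shape))
```

In each case the first dimension is the batch, so `t.shape[0]` is the number of samples regardless of rank.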

Numpy Review

NumPy and PyTorch are highly compatible with each other, so PyTorch tensors are easy to understand intuitively if you already know NumPy arrays.
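One way to see this compatibility is to convert between the two types. The sketch below assumes `numpy` and `torch` are already installed, and uses `torch.from_numpy` and `Tensor.numpy()`, both of which share memory with the original array instead of copying it.

```python
import numpy as np
import torch

arr = np.array([0., 1., 2.])

t = torch.from_numpy(arr)   # tensor view of the same memory (dtype float64)
back = t.numpy()            # NumPy view of the same memory again

arr[0] = 10.0               # modify the original array in place

print(t)           # the tensor sees the change
print(back.dtype)  # float64, NumPy's default float type
```

Because the memory is shared, an in-place change on one side is visible on the other. Use `torch.tensor(arr)` instead when an independent copy is wanted.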

# Install PyTorch (torchvision pulls in torch as a dependency)
! pip install torchvision

# Import the required libraries
import numpy as np
import torch

# 1D Array with NumPy
t = np.array([0., 1., 2., 3., 4., 5., 6.])
print(t)  # [0. 1. 2. 3. 4. 5. 6.]
print('Rank of t :', t.ndim)  # number of dimensions, Rank of t : 1
print('Shape of t:', t.shape)  # array shape, Shape of t: (7,)
print('t[0] t[1] t[-1] = ', t[0], t[1], t[-1])  # Element, t[0] t[1] t[-1] = 0.0 1.0 6.0
print('t[2:5] t[4:-1] = ', t[2:5], t[4:-1])  # Slicing, t[2:5] t[4:-1] = [2. 3. 4.] [4. 5.]
print('t[:2] t[3:] = ', t[:2], t[3:])  # Slicing, t[:2] t[3:] = [0. 1.] [3. 4. 5. 6.]

# 1D Array with PyTorch
a = torch.FloatTensor([0., 1., 2., 3., 4., 5., 6.])
print(a)  # tensor([0., 1., 2., 3., 4., 5., 6.])
print(a.dim())  # rank (number of dimensions), 1
print(a.shape)  # shape, torch.Size([7])
print(a.size())  # shape, torch.Size([7])
print(a[0], a[1], a[-1])  # Element, tensor(0.) tensor(1.) tensor(6.)
print(a[2:5], a[4:-1])  # Slicing, tensor([2., 3., 4.]) tensor([4., 5.])
print(a[:2], a[3:])  # Slicing, tensor([0., 1.]) tensor([3., 4., 5., 6.])

# 2D Array with NumPy

k = np.array([[1., 2., 3.], [4., 5., 6.], [7., 8., 9.], [10., 11., 12.]])
print(k)
# [[ 1.  2.  3.]
#  [ 4.  5.  6.]
#  [ 7.  8.  9.]
#  [10. 11. 12.]]
print('Rank of k: ', k.ndim)  # number of dimensions, Rank of k: 2
print('Shape of k: ', k.shape)  # array shape, Shape of k: (4, 3)

# 2D Array with PyTorch

b = torch.FloatTensor([[1., 2., 3.],
                       [4., 5., 6.],
                       [7., 8., 9.],
                       [10., 11., 12.]])
print(b)
# tensor([[ 1.,  2.,  3.],
#         [ 4.,  5.,  6.],
#         [ 7.,  8.,  9.],
#         [10., 11., 12.]])
print(b.dim())  # rank, 2
print(b.size())  # shape, torch.Size([4, 3])
print(b[:, 1])  # tensor([ 2.,  5.,  8., 11.])
print(b[:, 1].size())  # torch.Size([4])
print(b[:, :-1])
# tensor([[ 1.,  2.],
#         [ 4.,  5.],
#         [ 7.,  8.],
#         [10., 11.]])

Broadcasting

# Same shape
a = torch.FloatTensor([[3, 3]])
b = torch.FloatTensor([[2, 2]])
print(a + b)  # tensor([[5., 5.]])

# Vector + Scalar
a1 = torch.FloatTensor([[1, 2]])
a2 = torch.FloatTensor([3])  # 3 --> [[3, 3]]
# Even though the sizes differ, broadcasting expands a2 to match a1
print(a1 + a2)  # tensor([[4., 5.]])
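The size matching in the vector + scalar case can be made explicit. The sketch below is an illustration rather than a description of PyTorch internals: it uses `torch.broadcast_shapes` (available in recent PyTorch versions) to report the result shape, and `Tensor.expand` to write out the stretched operand by hand.

```python
import torch

a1 = torch.FloatTensor([[1, 2]])   # shape (1, 2)
a2 = torch.FloatTensor([3])        # shape (1,)

# Report the broadcast result shape without doing any arithmetic.
print(torch.broadcast_shapes(a1.shape, a2.shape))   # torch.Size([1, 2])

# Shapes are aligned from the last dimension; a size-1 dimension is stretched.
a2_expanded = a2.expand(1, 2)
print(a2_expanded)                                  # tensor([[3., 3.]])
print(torch.equal(a1 + a2, a1 + a2_expanded))       # True

# Sizes that differ and are not 1 cannot be broadcast.
try:
    torch.FloatTensor([1, 2, 3]) + torch.FloatTensor([1, 2])
except RuntimeError as exc:
    print("not broadcastable:", exc)
```

Broadcasting is convenient, but it also means a shape mistake can silently produce a result of an unexpected size instead of raising an error, so it is worth checking shapes when operands differ.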

# 2 x 1 vector + 1 x 2 vector

b1 = torch.FloatTensor([[1, 2]])

b2 = torch.FloatTensor([[3], [4]])#[3,3], [4,4]

print(b1 + b2)

#tensor([[4., 5.],

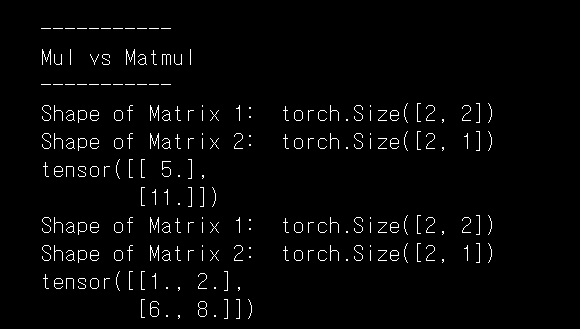

[5., 6.]])Multiplication vs Matrix Multiplication

print()
print('-----------')
print('Mul vs Matmul')
print('-----------')

a = torch.FloatTensor([[1, 2], [3, 4]])
b = torch.FloatTensor([[1], [2]])
print('Shape of Matrix 1: ', a.shape)  # 2 x 2
print('Shape of Matrix 2: ', b.shape)  # 2 x 1
print(a.matmul(b))  # matrix product, 2 x 1: tensor([[ 5.], [11.]])

c = torch.FloatTensor([[1, 2], [3, 4]])
d = torch.FloatTensor([[1], [2]])
print('Shape of Matrix 1: ', c.shape)  # 2 x 2
print('Shape of Matrix 2: ', d.shape)  # 2 x 1
print(c.mul(d))  # element-wise product, d is broadcast to 2 x 2: tensor([[1., 2.], [6., 8.]])
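The same two operations are also available as operators, which is how they often appear in practice. The following sketch uses `@` for `matmul` and `*` for `mul`, and adds one deliberately invalid call to show that `matmul` checks the inner dimensions while `mul` relies on broadcasting.

```python
import torch

a = torch.FloatTensor([[1, 2], [3, 4]])   # 2 x 2
b = torch.FloatTensor([[1], [2]])         # 2 x 1

# a @ b is a.matmul(b): (2 x 2) @ (2 x 1) -> 2 x 1
print((a @ b).shape)    # torch.Size([2, 1])

# a * b is a.mul(b): b is broadcast to 2 x 2, then multiplied element by element
print((a * b).shape)    # torch.Size([2, 2])

# Swapping the operands breaks matmul: (2 x 1) @ (2 x 2) has inner sizes 1 and 2.
try:
    b @ a
except RuntimeError as exc:
    print("matmul shape error:", exc)

# Element-wise multiplication still works in either order thanks to broadcasting.
print(torch.equal(a * b, b * a))   # True
```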

Mean

k = torch.FloatTensor([1, 2])
print(k.mean())  # tensor(1.5000)

# Can't use mean() on integers
k1 = torch.LongTensor([1, 2])
try:
    print(k1.mean())
except Exception as exc:
    print(exc)
# mean(): input dtype should be either floating point or complex dtypes. Got Long instead.
# (the exact wording of the message depends on the PyTorch version)

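k2 = torch.FloatTensor([[1, 2], [3, 4]])
print(k2)
# tensor([[1., 2.],
#         [3., 4.]])

print(k2.mean())  # mean of all elements
print(k2.mean(dim=0))  # dim 0 is removed: one mean per column
print(k2.mean(dim=1))  # dim 1 is removed: one mean per row
print(k2.mean(dim=-1))  # last dim, same as dim=1 for a 2D tensor

# Output
# tensor(2.5000)
# tensor([2., 3.])
# tensor([1.5000, 3.5000])
# tensor([1.5000, 3.5000])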
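Two practical follow-ups to the mean examples, sketched under the assumption that a plain float conversion is acceptable for the integer case: casting a `LongTensor` with `.float()` before calling `mean()`, and using `keepdim=True` to keep the reduced dimension with size 1.

```python
import torch

# Integer tensors can be averaged after converting them to float.
k1 = torch.LongTensor([1, 2])
print(k1.float().mean())                     # tensor(1.5000)

k2 = torch.FloatTensor([[1, 2], [3, 4]])

# By default the reduced dimension disappears from the shape.
print(k2.mean(dim=0).shape)                  # torch.Size([2])

# keepdim=True keeps it as a size-1 dimension, which is handy for broadcasting.
col_mean = k2.mean(dim=0, keepdim=True)
print(col_mean.shape)                        # torch.Size([1, 2])
print(k2 - col_mean)                         # subtract each column's mean
```

The `dim` argument works the same way for other reductions such as `sum`: the dimension named in `dim` is the one that is collapsed.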

Max and Argmax

r = torch.FloatTensor([[10, 20], [30, 40]])
print(r)

# Output
# tensor([[10., 20.],
#         [30., 40.]])

print(r.max())  # Returns one value, the overall max: tensor(40.)

print(r.max(dim=0))  # Returns two values: the max and the argmax along dim 0
print('Max: ', r.max(dim=0)[0])
print('Argmax: ', r.max(dim=0)[1])

# Output
# torch.return_types.max(
# values=tensor([30., 40.]),
# indices=tensor([1, 1]))
# Max: tensor([30., 40.])
# Argmax: tensor([1, 1])

print(r.max(dim=1))
print(r.max(dim=-1))

# Output
# torch.return_types.max(
# values=tensor([20., 40.]),
# indices=tensor([1, 1]))
# torch.return_types.max(
# values=tensor([20., 40.]),
# indices=tensor([1, 1]))
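When only the indices are needed, `argmax` can be called directly instead of indexing into the result of `max(dim=...)`. The sketch below also unpacks the `(values, indices)` pair by name, which tends to read more clearly than `[0]` and `[1]`.

```python
import torch

r = torch.FloatTensor([[10, 20], [30, 40]])

# max(dim=...) returns a (values, indices) pair that can be unpacked.
values, indices = r.max(dim=0)
print(values)    # tensor([30., 40.])
print(indices)   # tensor([1, 1])

# argmax(dim=...) returns only the indices.
print(r.argmax(dim=0))   # tensor([1, 1])

# Without dim, argmax indexes into the flattened tensor: 40 is element 3.
print(r.argmax())        # tensor(3)
```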