Cross validation

from sklearn.model_selection import KFold #for K-fold cross validation

from sklearn.model_selection import cross_val_score # score evaluation

from sklearn.model_selection import cross_val_predict # prediction

kfold = KFold(n_splits=10, random_state=None)

xyz=[]

accuracy=[]

std=[]

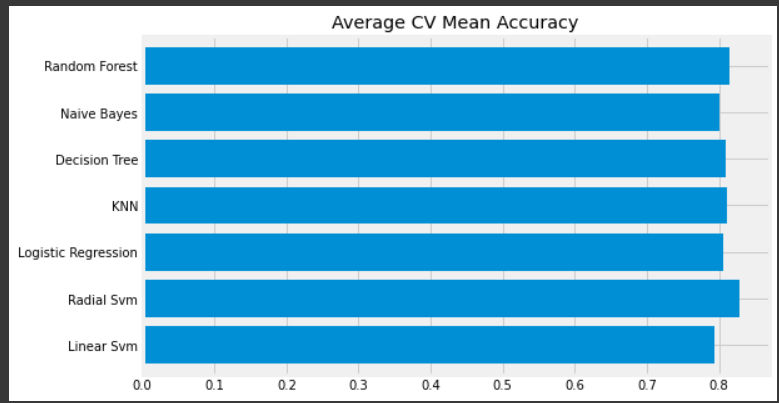

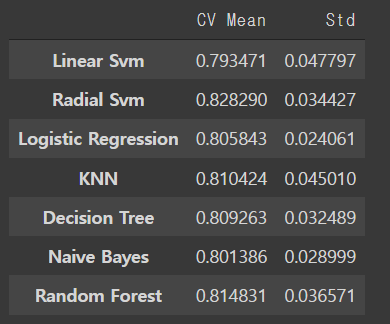

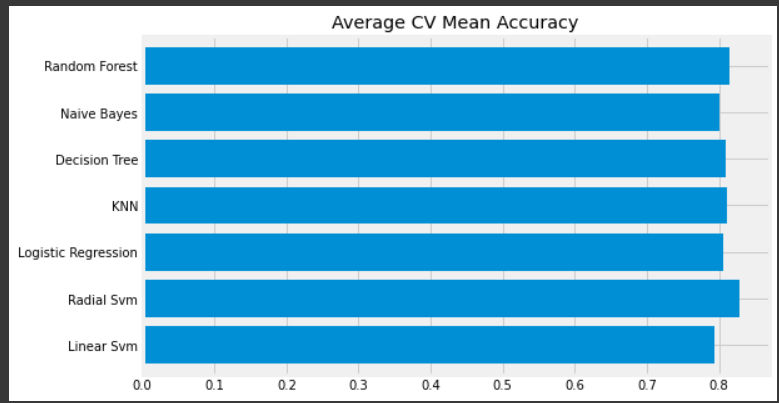

classifiers=['Linear Svm', 'Radial Svm','Logistic Regression','KNN','Decision Tree','Naive Bayes','Random Forest']

models=[svm.SVC(kernel='linear'),svm.SVC(kernel='rbf'),LogisticRegression(),KNeighborsClassifier(n_neighbors=9),DecisionTreeClassifier(),GaussianNB(),RandomForestClassifier(n_estimators=100)]

for i in models:

model = i

cv_result = cross_val_score(model,X,Y, cv = kfold,scoring="accuracy")

cv_result = cv_result

xyz.append(cv_result.mean())

std.append(cv_result.std())

accuracy.append(cv_result)

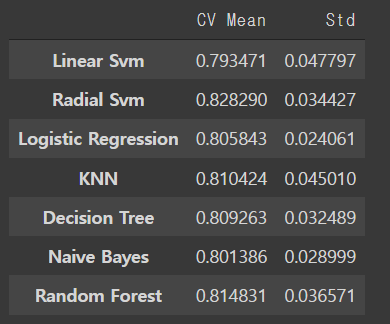

new_models_dataframe2=pd.DataFrame({'CV Mean':xyz, 'Std':std},index=classifiers)

new_models_dataframe2

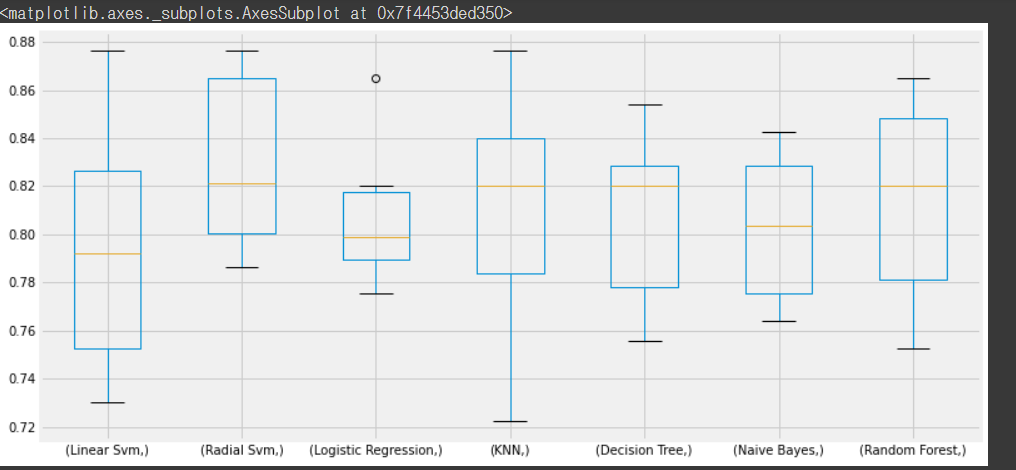

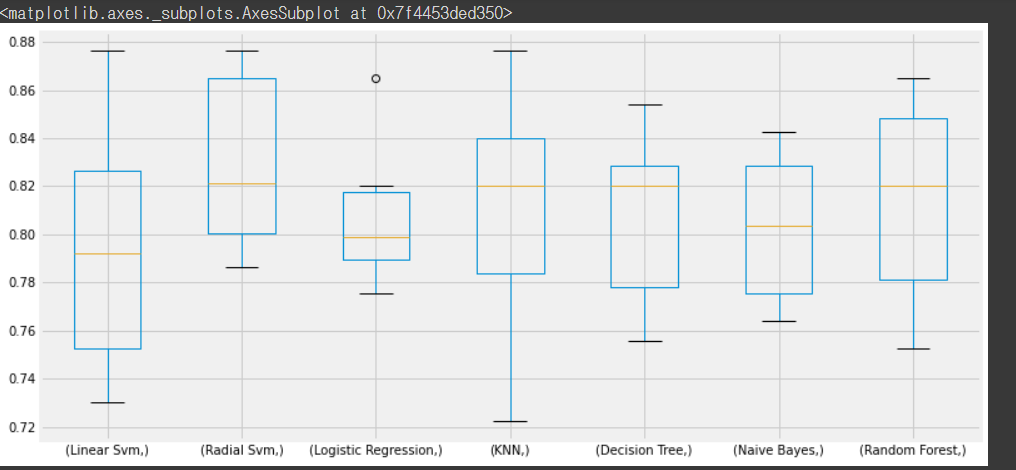

plt.subplots(figsize=(12,6))

box=pd.DataFrame(accuracy,index=[classifiers])

box.T.boxplot()

new_models_dataframe2['CV Mean'].plot.barh(width=0.8)

plt.title('Average CV Mean Accuracy')

fig=plt.gcf()

fig.set_size_inches(8,5)

plt.show()