
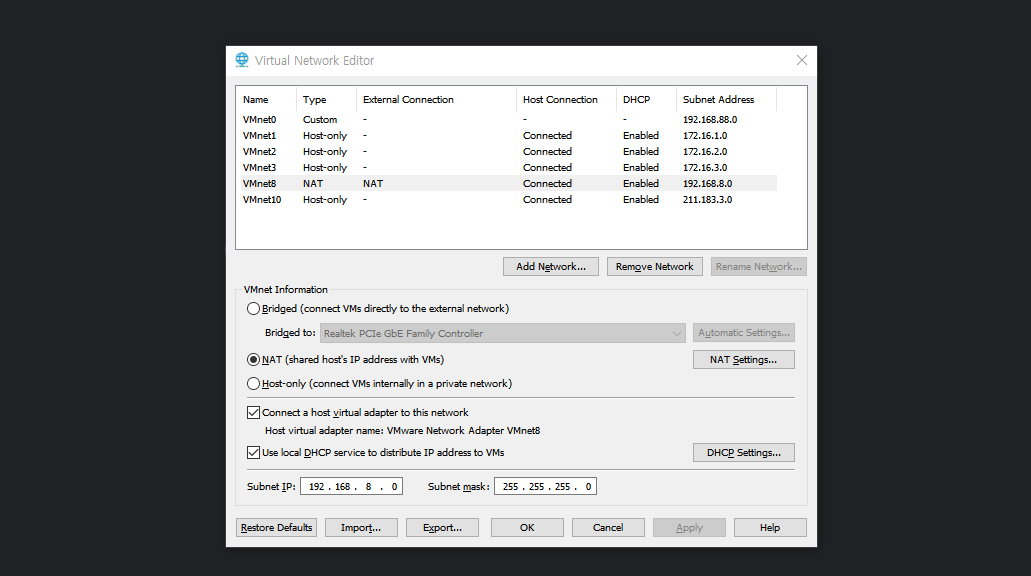

Lab environment setup

- Configure VMnet8 as NAT with the subnet 192.168.8.0/24

Network configuration

- /etc/netplan/01-network-manager-all.yaml

# /etc/netplan/01-network-manager-all.yaml
network:
  ethernets:
    ens32:
      addresses: [192.168.8.100/24]
      gateway4: 192.168.8.2
      nameservers:
        addresses: [8.8.8.8, 168.126.63.1]
  version: 2

- Apply the network configuration

netplan apply

Once the network configuration is done, make full clones of the VM to create three worker nodes.

- Change the IP address of each worker node

- worker1: 192.168.8.101

- worker2: 192.168.8.102

- worker3: 192.168.8.103

- Apply the change with netplan apply
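Each worker begins as a full clone of the master VM, so its netplan file still carries 192.168.8.100. The following is a minimal sketch of a helper that rewrites only the interface address. `set_node_ip` is a hypothetical name (not part of the lab material), and it assumes the file keeps the single `addresses: [<ip>/24]` line shown above and that GNU sed is available, as on Ubuntu.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original lab): rewrite the static address
# in a cloned node's netplan file, e.g. 192.168.8.100 -> 192.168.8.101.
# Assumes a single "addresses: [<ip>/24]" line for the interface.
set_node_ip() {
  local file=$1 ip=$2
  # Match only "[digits-and-dots/24]"; the nameservers list has no "/24",
  # so it is left untouched. Requires GNU sed (-i, -E).
  sed -i -E "s#addresses: \[[0-9.]+/24\]#addresses: [${ip}/24]#" "$file"
}
```

On worker1, for example: `set_node_ip /etc/netplan/01-network-manager-all.yaml 192.168.8.101 && netplan apply`. The `/24` in the pattern is what keeps the nameservers line from being rewritten.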

- Verify the configuration

kubectl get no

kubectl get ns

kubectl get pod -n kube-system
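`kubectl get no` is easy to read by eye; in a script, a readiness check is handier. A minimal sketch, assuming the default column layout of `kubectl get nodes --no-headers` (NAME, STATUS, ROLES, AGE, VERSION); `all_nodes_ready` is a hypothetical helper. Until a CNI plugin such as Calico is running, nodes normally report NotReady, so this check is expected to fail before the Calico step.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and succeed only if there is at least one node and every node is "Ready".
all_nodes_ready() {
  awk '
    { total++ }
    $2 != "Ready" { print "not ready: " $1 > "/dev/stderr"; bad++ }
    END { if (total > 0 && bad == 0) exit 0; exit 1 }
  '
}
```

Usage: `kubectl get nodes --no-headers | all_nodes_ready && echo ready`. For a blocking wait rather than a one-off check, `kubectl wait --for=condition=Ready node --all --timeout=300s` does the job with kubectl alone. A cordoned node (STATUS `Ready,SchedulingDisabled`) counts as not ready in this sketch.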

docker login

- Install Calico

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

- Deploy MetalLB

Reference: https://velog.io/@ptah0414/쿠버네티스-volume#step-5-metallb-구성

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.8.201-192.168.8.239

Lab

- deploy-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-rollout
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx-ctn
        image: brian24/testweb:blue

- lb-nginx.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginxlb
  labels:
    app: nginx
spec:
  externalTrafficPolicy: Local
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  type: LoadBalancer

- Deploy

root@master:~/lab1# k apply -f .

deployment.apps/nginx-rollout created

service/nginxlb created

- Verify the deployment

root@master:~/lab1# k get deploy,svc

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nginx-rollout 3/3 3 3 11s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 152m

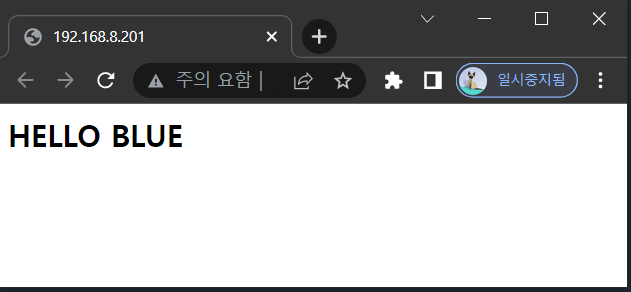

service/nginxlb LoadBalancer 10.103.2.109 192.168.8.201 80:30558/TCP 85s- lb 접속

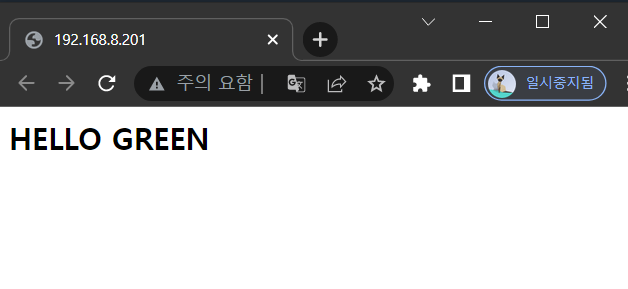
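MetalLB assigns the EXTERNAL-IP asynchronously, and the address should come from the 192.168.8.201-192.168.8.239 pool (39 addresses) set in the ConfigMap. A minimal sketch that polls the Service through kubectl's jsonpath output and checks the address against the pool. `wait_for_lb_ip`, `ip_in_range`, `ip_to_int`, and the `KUBECTL`/`POLL_INTERVAL` variables are hypothetical names introduced here; `KUBECTL` exists only so the kubectl call can be swapped out for testing.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of the lab material).
KUBECTL=${KUBECTL:-kubectl}

# Convert a dotted IPv4 address to a 32-bit integer for range comparisons.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# Succeed if $1 lies in the inclusive range $2 ("first-last"), e.g. a MetalLB pool.
ip_in_range() {
  local ip=$1 first=${2%-*} last=${2#*-} n
  n=$(ip_to_int "$ip")
  (( n >= $(ip_to_int "$first") && n <= $(ip_to_int "$last") ))
}

# Poll a LoadBalancer Service until it has an external IP (up to $2 tries).
wait_for_lb_ip() {
  local svc=$1 tries=${2:-30} ip i
  for (( i = 1; i <= tries; i++ )); do
    ip=$($KUBECTL get svc "$svc" -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
    if [[ -n $ip ]]; then
      echo "$ip"
      return 0
    fi
    (( i < tries )) && sleep "${POLL_INTERVAL:-2}"
  done
  echo "no external IP assigned to $svc" >&2
  return 1
}
```

Usage: `ip=$(wait_for_lb_ip nginxlb) && ip_in_range "$ip" 192.168.8.201-192.168.8.239 && curl -s "http://$ip/"`. An address outside the pool, or no address at all, usually points at the MetalLB ConfigMap or the speaker pods.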

- Update the image

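kubectl set image deploy nginx-rollout \
  nginx-ctn=brian24/testweb:green --record

(--record is deprecated in recent kubectl releases; it only stores the command in the kubernetes.io/change-cause annotation that kubectl rollout history displays.)

- Verify the image change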
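The blue/green switch above can be wrapped so that a script waits for the rolling update to finish before moving on. A minimal sketch; `switch_color` and the `KUBECTL` variable are hypothetical, while `kubectl rollout status` is a standard kubectl subcommand. It leaves out `--record`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point the nginx-rollout Deployment at the blue or green
# test image and block until the rolling update completes.
KUBECTL=${KUBECTL:-kubectl}

switch_color() {
  local color=$1
  case $color in
    blue|green) ;;
    *) echo "usage: switch_color blue|green" >&2; return 1 ;;
  esac
  $KUBECTL set image deploy/nginx-rollout "nginx-ctn=brian24/testweb:${color}" || return
  # Returns non-zero if the new pods are not available within the timeout.
  $KUBECTL rollout status deploy/nginx-rollout --timeout=120s
}
```

If the status check fails, `kubectl rollout undo deploy/nginx-rollout` returns to the previous ReplicaSet, and `kubectl rollout history deploy/nginx-rollout` lists the revisions.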

- Delete

root@master:~/lab1# kubectl delete -f .

deployment.apps "nginx-rollout" deleted

service "nginxlb" deleted

CI/CD

root@master:~/lab2# git clone https://github.com/beomtaek/cicd_samplecode.git

Cloning into 'cicd_samplecode'...

remote: Enumerating objects: 66, done.

remote: Counting objects: 100% (66/66), done.

remote: Compressing objects: 100% (66/66), done.

remote: Total 66 (delta 34), reused 0 (delta 0), pack-reused 0

Unpacking objects: 100% (66/66), 20.51 KiB | 954.00 KiB/s, done.

root@master:~/lab2# tree

.

└── cicd_samplecode

├── grafana-install.sh

├── grafana-preconfig.sh

├── grafana-volume.yaml

├── helm-install.sh

├── jenkins-config.yaml

├── jenkins-install.sh

├── jenkins-volume.yaml

├── kustomize-install.sh

├── metallb-l2config.yaml

├── metallb.yaml

├── namespace.yaml

├── nfs-exporter.sh

├── prometheus-install.sh

├── prometheus-server-preconfig.sh

└── prometheus-server-volume.yaml

1 directory, 15 files

root@master:~/lab2# cd cicd_samplecode/

root@master:~/lab2/cicd_samplecode# mkdir metallb

root@master:~/lab2/cicd_samplecode# mv kustomize-install.sh metallb-l2config.yaml metallb.yaml namespace.yaml metallb

root@master:~/lab2/cicd_samplecode# tree

.

├── grafana-install.sh

├── grafana-preconfig.sh

├── grafana-volume.yaml

├── helm-install.sh

├── jenkins-config.yaml

├── jenkins-install.sh

├── jenkins-volume.yaml

├── metallb

│ ├── kustomize-install.sh

│ ├── metallb-l2config.yaml

│ ├── metallb.yaml

│ └── namespace.yaml

├── nfs-exporter.sh

├── prometheus-install.sh

├── prometheus-server-preconfig.sh

└── prometheus-server-volume.yaml

1 directory, 15 files

root@master:~/lab2/cicd_samplecode# cd metallb/

root@master:~/lab2/cicd_samplecode/metallb# tree

.

├── kustomize-install.sh

├── metallb-l2config.yaml

├── metallb.yaml

└── namespace.yaml

0 directories, 4 files

- metallb-l2config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: metallb-ip-range
      protocol: layer2
      addresses:
      - 192.168.8.201-192.168.8.239

- namespace.yaml

root@master:~/lab2/cicd_samplecode/metallb# cat namespace.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: metallb-system
  labels:
    app: metallb

- metallb.yaml

apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  labels:
    app: metallb
  name: speaker
  namespace: metallb-system
spec:
  allowPrivilegeEscalation: false
  allowedCapabilities:
  - NET_ADMIN
  - NET_RAW
  - SYS_ADMIN
  fsGroup:
    rule: RunAsAny
  hostNetwork: true
  hostPorts:
  - max: 7472
    min: 7472
  privileged: true
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  volumes:
  - '*'
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app: metallb
  name: controller
  namespace: metallb-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    app: metallb
  name: speaker
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app: metallb
  name: metallb-system:controller
rules:
- apiGroups:
  - ''
  resources:
  - services
  verbs:
  - get
  - list
  - watch
  - update
- apiGroups:
  - ''
  resources:
  - services/status
  verbs:
  - update
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - patch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    app: metallb
  name: metallb-system:speaker
rules:
- apiGroups:
  - ''
  resources:
  - services
  - endpoints
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ''
  resources:
  - events
  verbs:
  - create
  - patch
- apiGroups:
  - extensions
  resourceNames:
  - speaker
  resources:
  - podsecuritypolicies
  verbs:
  - use
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    app: metallb
  name: config-watcher
  namespace: metallb-system
rules:
- apiGroups:
  - ''
  resources:
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app: metallb
  name: metallb-system:controller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: metallb-system:controller
subjects:
- kind: ServiceAccount
  name: controller
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    app: metallb
  name: metallb-system:speaker
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: metallb-system:speaker
subjects:
- kind: ServiceAccount
  name: speaker
  namespace: metallb-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    app: metallb
  name: config-watcher
  namespace: metallb-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: config-watcher
subjects:
- kind: ServiceAccount
  name: controller
- kind: ServiceAccount
  name: speaker
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    app: metallb
    component: speaker
  name: speaker
  namespace: metallb-system
spec:
  selector:
    matchLabels:
      app: metallb
      component: speaker
  template:
    metadata:
      annotations:
        prometheus.io/port: '7472'
        prometheus.io/scrape: 'true'
      labels:
        app: metallb
        component: speaker
    spec:
      containers:
      - args:
        - --port=7472
        - --config=config
        env:
        - name: METALLB_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: METALLB_HOST
          valueFrom:
            fieldRef:
              fieldPath: status.hostIP
        image: metallb/speaker:v0.8.2
        imagePullPolicy: IfNotPresent
        name: speaker
        ports:
        - containerPort: 7472
          name: monitoring
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_ADMIN
            - NET_RAW
            - SYS_ADMIN
            drop:
            - ALL
          readOnlyRootFilesystem: true
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/os: linux
      serviceAccountName: speaker
      terminationGracePeriodSeconds: 0
      tolerations:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: metallb
    component: controller
  name: controller
  namespace: metallb-system
spec:
  revisionHistoryLimit: 3
  selector:
    matchLabels:
      app: metallb
      component: controller
  template:
    metadata:
      annotations:
        prometheus.io/port: '7472'
        prometheus.io/scrape: 'true'
      labels:
        app: metallb
        component: controller
    spec:
      containers:
      - args:
        - --port=7472
        - --config=config
        image: metallb/controller:v0.8.2
        imagePullPolicy: IfNotPresent
        name: controller
        ports:
        - containerPort: 7472
          name: monitoring
        resources:
          limits:
            cpu: 100m
            memory: 100Mi
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            drop:
            - all
          readOnlyRootFilesystem: true
      nodeSelector:
        beta.kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 65534
      serviceAccountName: controller
      terminationGracePeriodSeconds:

- kustomize-install.sh

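#!/usr/bin/env bash
set -e  # stop before the success message if the download or extraction fails

# -f makes curl fail on HTTP errors instead of saving an error page as the tarball
curl -fL \
  https://github.com/kubernetes-sigs/kustomize/releases/download/kustomize%2Fv3.6.1/kustomize_v3.6.1_linux_amd64.tar.gz -o /tmp/kustomize.tar.gz

tar -xzf /tmp/kustomize.tar.gz -C /usr/local/bin

echo "kustomize install successfully"

- Run the kustomize install script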

root@master:~/lab2/cicd_samplecode/metallb# sh kustomize-install.sh

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 12.4M 100 12.4M 0 0 18.7M 0 --:--:-- --:--:-- --:--:-- 18.7M

kustomize install successfully

- Create the kustomization

root@master:~/lab2/cicd_samplecode/metallb# kustomize create --namespace=metallb-system --resources namespace.yaml,metallb.yaml,metallb-l2config.yaml

root@master:~/lab2/cicd_samplecode/metallb# tree

.

├── kustomization.yaml

├── kustomize-install.sh

├── metallb-l2config.yaml

├── metallb.yaml

└── namespace.yaml

A kustomization.yaml file has been created.

- Check kustomization.yaml

root@master:~/lab2/cicd_samplecode/metallb# cat kustomization.yaml

apiVersion: kustomize.config.k8s.io/v1beta1

kind: Kustomization

resources:

- namespace.yaml

- metallb.yaml

- metallb-l2config.yaml

namespace: metallb-system

- Deploy

root@master:~/lab2/cicd_samplecode/metallb# kustomize build | kubectl apply -f -

Warning: resource namespaces/metallb-system is missing the kubectl.kubernetes.io/last-applied-configuration annotation which is required by kubectl apply. kubectl apply should only be used on resources created declaratively by either kubectl create --save-config or kubectl apply. The missing annotation will be patched automatically.

namespace/metallb-system configured

serviceaccount/controller unchanged

serviceaccount/speaker unchanged

Warning: policy/v1beta1 PodSecurityPolicy is deprecated in v1.21+, unavailable in v1.25+

podsecuritypolicy.policy/speaker configured

role.rbac.authorization.k8s.io/config-watcher unchanged

clusterrole.rbac.authorization.k8s.io/metallb-system:controller configured

clusterrole.rbac.authorization.k8s.io/metallb-system:speaker configured

rolebinding.rbac.authorization.k8s.io/config-watcher configured

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:controller unchanged

clusterrolebinding.rbac.authorization.k8s.io/metallb-system:speaker unchanged

configmap/config configured

deployment.apps/controller configured

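daemonset.apps/speaker configured

The output of kustomize build is piped into kubectl apply -f -. Because MetalLB had already been deployed earlier in this lab, most resources report configured or unchanged rather than created.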
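In the plain pipeline, kubectl apply still runs when kustomize build fails, and the pipeline's exit status is kubectl's. A minimal sketch of a wrapper that renders first and applies only on success, with an optional client-side dry run; `kustomize_apply`, `KUSTOMIZE`, `KUBECTL`, and `DRY_RUN` are hypothetical names. Recent kubectl versions also embed kustomize, so `kubectl apply -k .` is an alternative, although the embedded kustomize version may differ from the v3.6.1 binary installed above.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around "kustomize build | kubectl apply -f -".
# Rendering happens first, so a kustomize error stops here before anything
# is sent to the cluster. Set DRY_RUN=1 for a client-side dry run.
KUSTOMIZE=${KUSTOMIZE:-kustomize}
KUBECTL=${KUBECTL:-kubectl}

kustomize_apply() {
  local dir=${1:-.} rendered
  rendered=$($KUSTOMIZE build "$dir") || return
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    printf '%s\n' "$rendered" | $KUBECTL apply --dry-run=client -f -
  else
    printf '%s\n' "$rendered" | $KUBECTL apply -f -
  fi
}
```

From the metallb directory, `DRY_RUN=1 kustomize_apply .` shows what would change, and `kustomize_apply .` applies it.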

- Verify the deployment

root@master:~/lab2/cicd_samplecode/metallb# kubectl get pod -n metallb-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

controller-675d6c9976-rgbsk 1/1 Running 0 5m50s 172.16.189.84 worker2 <none> <none>

speaker-7d5zm 1/1 Running 0 5m50s 192.168.8.101 worker1 <none> <none>

speaker-mpqz4 1/1 Running 0 5m50s 192.168.8.103 worker3 <none> <none>

speaker-qb9tq 1/1 Running 0 5m50s 192.168.8.100 master <none> <none>

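speaker-w4hlr 1/1 Running 0 5m50s 192.168.8.102 worker2 <none> <none>

The speaker DaemonSet runs one pod on every node, including master, because it tolerates the node-role.kubernetes.io/master NoSchedule taint; the controller is a single-replica Deployment.

Helm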

- Install Helm

root@master:~/lab2/cicd_samplecode# curl -fsSL -o get_helm.sh \

> https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

root@master:~/lab2/cicd_samplecode# chmod 700 get_helm.sh

root@master:~/lab2/cicd_samplecode# export DESIRED_VERSION=v3.2.1

root@master:~/lab2/cicd_samplecode# ./get_helm.sh

Downloading https://get.helm.sh/helm-v3.2.1-linux-amd64.tar.gz

Verifying checksum... Done.

Preparing to install helm into /usr/local/bin

helm installed into /usr/local/bin/helm

- Register the edu repository

root@master:~/lab2/cicd_samplecode# helm repo add edu https://iac-source.github.io/helm-charts

"edu" has been added to your repositories

- Install the Helm chart (general form)

helm install metallb edu/metallb \

--namespace=metallb-system \

--create-namespace \

--set controller.tag=<Version> \

--set speaker.tag=<Version> \

--set configmap.ipRange=<IP Range>

- Install MetalLB through the Helm chart

helm install metallb edu/metallb \

--namespace=metallb-system \

--create-namespace \

--set controller.tag=v0.8.2 \

--set speaker.tag=v0.8.2 \

--set configmap.ipRange=192.168.8.201-192.168.8.239

Installing Jenkins in a Kubernetes environment

Setting up a private registry

root@master:~/lab2/cicd_samplecode# docker container run -d --restart=always --name registry -p 5000:5000 registry

Unable to find image 'registry:latest' locally

latest: Pulling from library/registry

213ec9aee27d: Pull complete

4583459ba037: Pull complete

6f6a6c5733af: Pull complete

b136d5c19b1d: Pull complete

fd4a5435f342: Pull complete

Digest: sha256:2e830e8b682d73a1b70cac4343a6a541a87d5271617841d87eeb67a824a5b3f2

Status: Downloaded newer image for registry:latest

aade15e30fac34468cfe13bdb54fe6505614e89c46560a7525e3cbad3129931b

root@master:~/lab2/cicd_samplecode# docker run -d -p 8888:8080 --restart always --name registry-web --link registry -e REGISTRY_URL=http://192.168.8.100:5000/v2 -e REGISTRY_NAME=192.168.8.100:5000 hyper/docker-registry-web

Unable to find image 'hyper/docker-registry-web:latest' locally

latest: Pulling from hyper/docker-registry-web

04c996abc244: Pull complete

d394d3da86fe: Pull complete

bac77aae22d4: Pull complete

b48b86b78e97: Pull complete

09b3dd842bf5: Pull complete

69f4c5394729: Pull complete

b012980650e9: Pull complete

7c7921c6fda1: Pull complete

e20331c175ea: Pull complete

40d5e82892a5: Pull complete

a414fa9c865a: Pull complete

0304ae3409f3: Pull complete

13effc1a664f: Pull complete

e5628d0e6f8c: Pull complete

0b0e130a3a52: Pull complete

d0c73ab65cd2: Pull complete

240c0b145309: Pull complete

f1fd6f874e5e: Pull complete

40b5e021928e: Pull complete

88a8c7267fbc: Pull complete

f9371a03010e: Pull complete

Digest: sha256:723ffa29aed2c51417d8bd32ac93a1cd0e7ef857a0099c1e1d7593c09f7910ae

Status: Downloaded newer image for hyper/docker-registry-web:latest

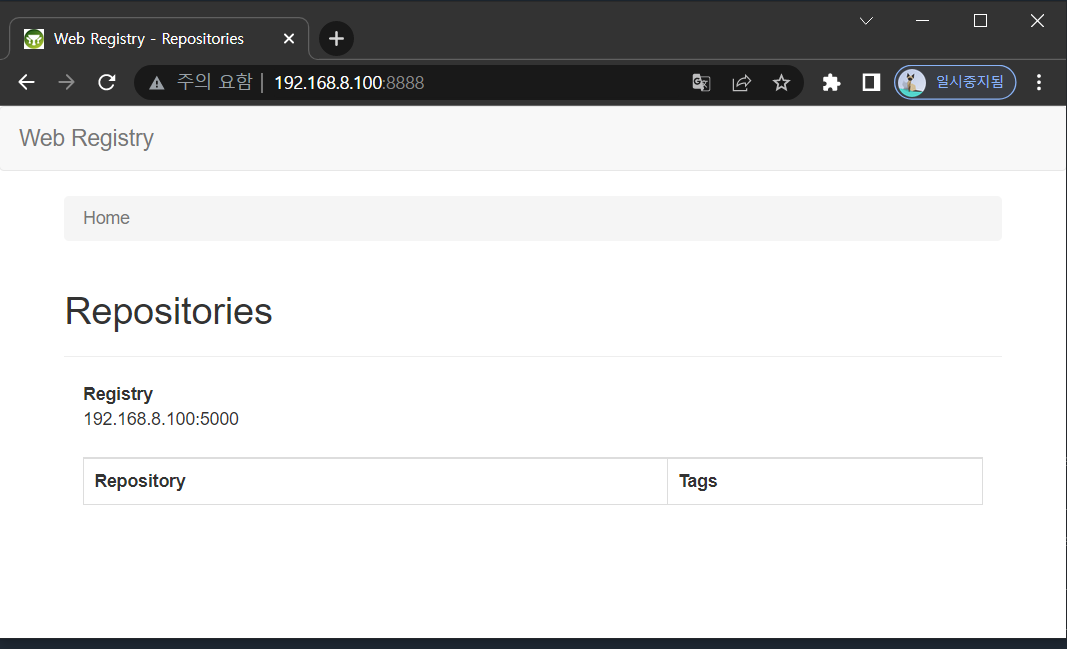

95ddcb73fac4847e5bbeb1eb3b422e42f15d264964bd6fe23109d96d685515d8- registry 접속

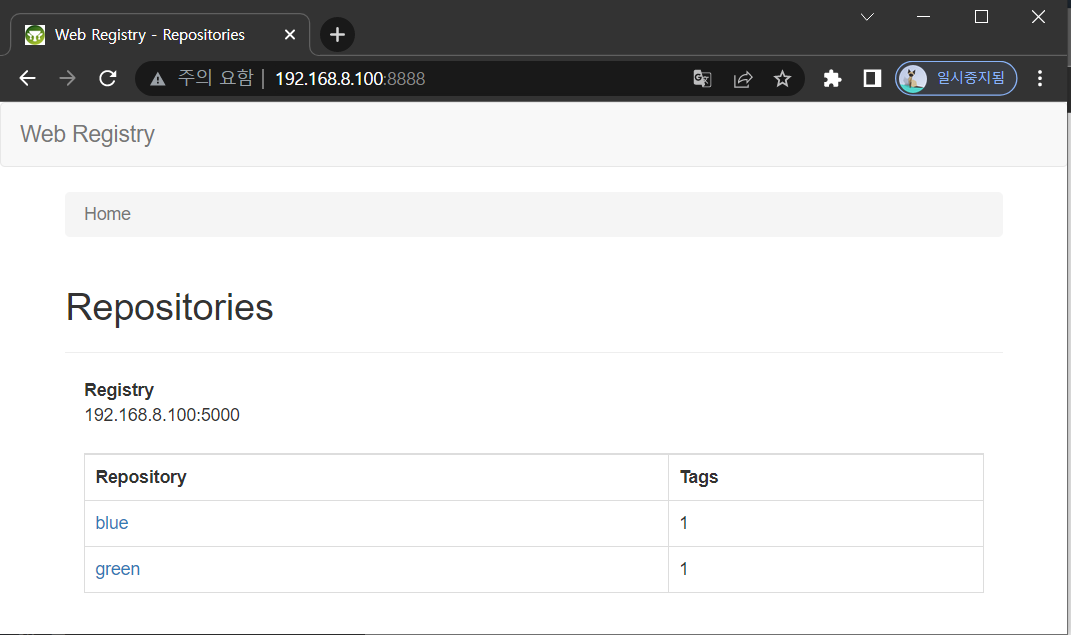

- Tag the images

root@master:~/lab2/cicd_samplecode# docker tag brian24/testweb:blue 192.168.8.100:5000/blue:1.0

root@master:~/lab2/cicd_samplecode# docker tag brian24/testweb:green \
> 192.168.8.100:5000/green:1.0

- Push the images

root@master:~/lab2/cicd_samplecode# docker push 192.168.8.100:5000/green:1.0

The push refers to repository [192.168.8.100:5000/green]

b1098ce707da: Pushed

d6a3537fc36a: Pushed

819eb3a45632: Pushed

5eda6fa69be4: Pushed

6f4f3ce1dca0: Pushed

58a06a0d345c: Pushed

fe7b1e9bf792: Pushed

1.0: digest: sha256:f9f120945e33c8a2210265bad427ae579ea0e26db7ee9c96f9e07009f43caa48 size: 1777

root@master:~/lab2/cicd_samplecode# docker push 192.168.8.100:5000/blue:1.0

The push refers to repository [192.168.8.100:5000/blue]

2e0ce84a59b2: Pushed

d6a3537fc36a: Mounted from green

819eb3a45632: Mounted from green

5eda6fa69be4: Mounted from green

6f4f3ce1dca0: Mounted from green

58a06a0d345c: Mounted from green

fe7b1e9bf792: Mounted from green

1.0: digest: sha256:58b69906e0eb099851b0ab3b3f03dfdf0e48020741ff51daeba5480969ac90f6 size: 1777

- Verify that the images are registered in the registry
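The tag and push steps repeat for every image, so they fold naturally into one function. A minimal sketch; `push_to_registry`, `REGISTRY`, and `DOCKER` are hypothetical names, and the default registry address is the one used in this lab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: retag a local image for the private registry and push it.
REGISTRY=${REGISTRY:-192.168.8.100:5000}
DOCKER=${DOCKER:-docker}

# push_to_registry <source-image> <repository> <tag>
push_to_registry() {
  local src=$1 repo=$2 tag=$3
  local dest="${REGISTRY}/${repo}:${tag}"
  $DOCKER tag "$src" "$dest" || return
  $DOCKER push "$dest"
}
```

The two pushes above then become `push_to_registry brian24/testweb:blue blue 1.0` and `push_to_registry brian24/testweb:green green 1.0`. To check the registry's contents without the web UI, the Registry HTTP API V2 catalog endpoint can be queried with `curl http://192.168.8.100:5000/v2/_catalog`, which should list both the blue and green repositories.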

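- Allow insecure (plain HTTP) access to the registry

root@master:~/lab2/cicd_samplecode# vi /etc/docker/daemon.json

{
  "insecure-registries" : [ "192.168.8.100:5000" ]
}

systemctl restart docker

Apply this on every node. Docker only talks plain HTTP to registries other than localhost when they are listed under insecure-registries, so every node that pushes to or pulls from 192.168.8.100:5000 needs this setting. If daemon.json already contains other settings (for example a cgroup driver option), add the key to the existing object instead of overwriting the file.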
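Since this file has to be edited on every node, and overwriting it would drop any settings already present, a cautious helper can create the file when it is missing and otherwise only report. A minimal sketch; `ensure_insecure_registry` is hypothetical, and it deliberately never rewrites an existing file because it assumes no JSON tool such as jq is installed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure a Docker daemon.json lists the registry under
# "insecure-registries" without clobbering other settings.
# Returns 0 if the file was created or already lists the registry,
# 2 if the file exists without it and must be merged by hand.
ensure_insecure_registry() {
  local file=$1 registry=$2
  if [[ ! -s $file ]]; then
    # No (or empty) config yet: write a minimal one.
    printf '{\n  "insecure-registries" : [ "%s" ]\n}\n' "$registry" > "$file"
    echo "created"
  elif grep -qF "\"$registry\"" "$file"; then
    echo "present"
  else
    echo "merge \"insecure-registries\": [\"$registry\"] into $file by hand" >&2
    return 2
  fi
}
```

On each node: `ensure_insecure_registry /etc/docker/daemon.json 192.168.8.100:5000 && systemctl restart docker`.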

- nfs-exporter.sh

#!/usr/bin/env bash
nfsdir=/nfs_shared/$1
if [ $# -eq 0 ]; then
  echo "usage: nfs-exporter.sh <name>"; exit 1
fi
if [[ ! -d $nfsdir ]]; then
  mkdir -p "$nfsdir"
  echo "$nfsdir 192.168.8.0/24(rw,sync,no_root_squash)" >> /etc/exports
  # Compare strings with == (-eq is numeric, so the old test always matched);
  # on Ubuntu the NFS unit is nfs-server, not nfs.
  if [[ $(systemctl is-enabled nfs-server) == "disabled" ]]; then
    systemctl enable nfs-server
  fi
  systemctl restart nfs-server
fi

- Run nfs-exporter

root@master:~/lab2/cicd_samplecode# ./nfs-exporter.sh jenkins

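Failed to get unit file state for nfs.service: No such file or directory

root@master:~/lab2/cicd_samplecode# ./nfs-exporter.sh jenkins

The message comes from the original version of the script, which queried a unit named nfs; Ubuntu has no such unit (the service is nfs-server). The export line is still appended, and the second run changes nothing because /nfs_shared/jenkins already exists.

- Verify the result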

root@master:~/lab2/cicd_samplecode# cat /etc/exports

# /etc/exports: the access control list for filesystems which may be exported

# to NFS clients. See exports(5).

#

# Example for NFSv2 and NFSv3:

# /srv/homes hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)

#

# Example for NFSv4:

# /srv/nfs4 gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)

# /srv/nfs4/homes gss/krb5i(rw,sync,no_subtree_check)

#

/nfs_shared/jenkins 192.168.8.0/24(rw,sync,no_root_squash)

The line /nfs_shared/jenkins 192.168.8.0/24(rw,sync,no_root_squash) has been added.
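nfs-exporter.sh decides whether to touch /etc/exports by checking whether the directory exists, so a directory created by hand is never exported, and removing and re-running the script can leave duplicate lines. A minimal sketch of a variant that checks the exports file itself; `add_export` is hypothetical. After editing the file, `exportfs -ra` re-reads it without restarting the server, and `exportfs -v` lists what is currently exported.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "<dir> <network>(<options>)" to an exports file
# only if that directory is not already listed as an export.
# add_export <exports-file> <dir> [network] [options]
add_export() {
  local file=$1 dir=$2 net=${3:-192.168.8.0/24} opts=${4:-rw,sync,no_root_squash}
  # An entry's first field is the exported path; comment lines start with "#".
  if awk -v d="$dir" '$1 == d { found = 1 } END { exit !found }' "$file"; then
    echo "already exported: $dir"
    return 0
  fi
  echo "$dir ${net}(${opts})" >> "$file"
  echo "added: $dir"
}
```

Usage: `add_export /etc/exports /nfs_shared/jenkins && exportfs -ra`.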

root@master:~/lab2/cicd_samplecode# ls -nl /nfs_shared/

total 4

drwxr-xr-x 2 0 0 4096 Oct 27 16:23 jenkins

The jenkins directory has been created (owned by root, UID/GID 0).
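ls -nl shows the new directory owned by UID/GID 0, while the Jenkins container runs as 1000:1000 (master.runAsUser and master.runAsGroup in jenkins-install.sh). A minimal sketch of a check that can be run before and after the chown step; `check_owner` is a hypothetical helper and relies on GNU coreutils `stat -c`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if <dir> is owned by <uid>:<gid>
# (numeric IDs, as shown by ls -n). Uses GNU coreutils stat.
check_owner() {
  local dir=$1 want=$2 have
  have=$(stat -c '%u:%g' "$dir") || return
  if [[ $have != "$want" ]]; then
    echo "$dir is owned by $have, expected $want" >&2
    return 1
  fi
}
```

Usage: `check_owner /nfs_shared/jenkins 1000:1000 || chown 1000:1000 /nfs_shared/jenkins`.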

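- Change the ownership of the jenkins directory (very important!). The Jenkins container runs as UID/GID 1000 (master.runAsUser and master.runAsGroup in jenkins-install.sh), so it must own the NFS directory to write to it.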

root@master:~/lab2/cicd_samplecode# chown 1000:1000 /nfs_shared/jenkins/

root@master:~/lab2/cicd_samplecode# ls -nl /nfs_shared/

total 4

drwxr-xr-x 2 1000 1000 4096 Oct 27 16:23 jenkins

If you run into problems with Jenkins, check first whether it is a permission issue.
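The repository ships one volume manifest per application (jenkins-volume.yaml, grafana-volume.yaml, prometheus-server-volume.yaml). Assuming the others follow the same NFS pattern as jenkins-volume.yaml (only the Jenkins file is shown in these notes, so this is unverified), a hypothetical generator could emit the PV/PVC pair for any directory created by nfs-exporter.sh. `nfs_volume_yaml` is an illustrative name, not part of the repository.

```shell
#!/usr/bin/env bash
# Hypothetical generator: print an NFS-backed PersistentVolume and a matching
# PersistentVolumeClaim with the same layout as jenkins-volume.yaml.
# nfs_volume_yaml <name> [size] [nfs-server]
nfs_volume_yaml() {
  local name=$1 size=${2:-5Gi} server=${3:-192.168.8.100}
  cat <<EOF
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${name}
spec:
  capacity:
    storage: ${size}
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: ${server}
    path: /nfs_shared/${name}
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: ${size}
EOF
}
```

Usage: `nfs_volume_yaml jenkins 5Gi | kubectl apply -f -`. The PVC carries no storageClassName, so on a cluster with a default StorageClass it may bind to a dynamically provisioned volume instead of this PV.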

volume

- jenkins-volume.yaml

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.8.100
    path: /nfs_shared/jenkins
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

- Deploy

root@master:~/lab2/cicd_samplecode# kubectl apply -f jenkins-volume.yaml

persistentvolume/jenkins created

persistentvolumeclaim/jenkins created

- Verify the result

root@master:~/lab2/cicd_samplecode# kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/jenkins 5Gi RWX Retain Bound default/jenkins 29s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/jenkins Bound jenkins 5Gi RWX 29s

- jenkins-install.sh

#!/usr/bin/env bash

jkopt1="--sessionTimeout=1440"

jkopt2="--sessionEviction=86400"

jvopt1="-Duser.timezone=Asia/Seoul"

jvopt2="-Dcasc.jenkins.config=https://raw.githubusercontent.com/beomtaek/cicd_samplecode/4b32b6f7a3ab3cb11fa02847fa0aca6b7f2309fc/jenkins-config.yaml"

jvopt3="-Dhudson.model.DownloadService.noSignatureCheck=true"

helm install jenkins edu/jenkins \

--set persistence.existingClaim=jenkins \

--set master.adminPassword=admin \

--set master.nodeSelector."kubernetes\.io/hostname"=master \

--set master.tolerations[0].key=node-role.kubernetes.io/master \

--set master.tolerations[0].effect=NoSchedule \

--set master.tolerations[0].operator=Exists \

--set master.runAsUser=1000 \

--set master.runAsGroup=1000 \

--set master.tag=2.249.3-lts-centos7 \

--set master.serviceType=LoadBalancer \

--set master.servicePort=80 \

--set master.jenkinsOpts="$jkopt1 $jkopt2" \

--set master.javaOpts="$jvopt1 $jvopt2 $jvopt3"

root@master:~/lab2/cicd_samplecode# chmod +x jenkins-install.sh

root@master:~/lab2/cicd_samplecode# ./jenkins-install.sh

NAME: jenkins

LAST DEPLOYED: Thu Oct 27 16:38:20 2022

NAMESPACE: default

STATUS: deployed

REVISION: 1

NOTES:

1. Get your 'admin' user password by running:

printf $(kubectl get secret --namespace default jenkins -o jsonpath="{.data.jenkins-admin-password}" | base64 --decode);echo

2. Get the Jenkins URL to visit by running these commands in the same shell:

NOTE: It may take a few minutes for the LoadBalancer IP to be available.

You can watch the status of by running 'kubectl get svc --namespace default -w jenkins'

export SERVICE_IP=$(kubectl get svc --namespace default jenkins --template "{{ range (index .status.loadBalancer.ingress 0) }}{{ . }}{{ end }}")

echo http://$SERVICE_IP:80/login

3. Login with the password from step 1 and the username: admin

4. Use Jenkins Configuration as Code by specifying configScripts in your values.yaml file, see documentation: http:///configuration-as-code and examples: https://github.com/jenkinsci/configuration-as-code-plugin/tree/master/demos

For more information on running Jenkins on Kubernetes, visit:

https://cloud.google.com/solutions/jenkins-on-container-engine

For more information about Jenkins Configuration as Code, visit:

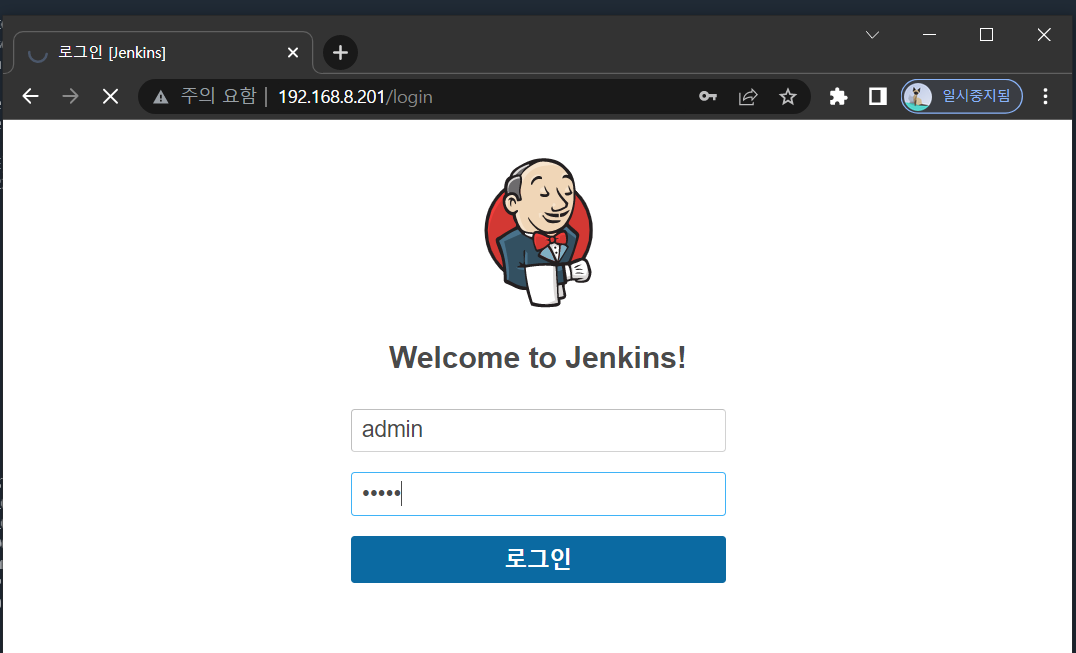

https://jenkins.io/projects/jcasc/root@master:~/lab2/cicd_samplecode# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

jenkins LoadBalancer 10.101.100.100 192.168.8.201 80:30782/TCP 37s

jenkins-agent ClusterIP 10.106.40.101 <none> 50000/TCP 37s

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 6h47m

- Verify that the jenkins service was created

- 192.168.8.201:80
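The Helm NOTES warn that the LoadBalancer IP can take a few minutes to appear, and Jenkins itself needs time to start. A minimal sketch that polls the login page until it answers with HTTP 200; `wait_for_http`, `CURL`, and `POLL_INTERVAL` are hypothetical, and the URL follows the `http://$SERVICE_IP:80/login` pattern printed in the NOTES.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it returns HTTP 200 (up to $2 tries).
CURL=${CURL:-curl}

wait_for_http() {
  local url=$1 tries=${2:-60} code i
  for (( i = 1; i <= tries; i++ )); do
    # -s: quiet, -o /dev/null: discard the body, -w: print only the status code
    code=$($CURL -s -o /dev/null -w '%{http_code}' "$url")
    if [[ $code == 200 ]]; then
      echo "up after $i attempt(s): $url"
      return 0
    fi
    (( i < tries )) && sleep "${POLL_INTERVAL:-5}"
  done
  echo "gave up on $url (last status: ${code:-none})" >&2
  return 1
}
```

Usage: `wait_for_http http://192.168.8.201/login`. While Jenkins is still starting, the page typically answers with a non-200 status (or curl reports 000 when nothing is listening yet), and the loop keeps retrying.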

- Verify that files were created in the jenkins directory

root@master:~/lab2/cicd_samplecode# tree /nfs_shared/jenkins/

/nfs_shared/jenkins/

├── jenkins.install.InstallUtil.lastExecVersion

├── jenkins.install.UpgradeWizard.state

└── plugins.txt

0 directories, 3 files

- Access Jenkins

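- If Jenkins fails to install, pull the images directly on the master node (the Jenkins controller is pinned there by the nodeSelector in jenkins-install.sh)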

docker pull jenkins/inbound-agent:4.3-4

docker pull jenkins/jenkins:2.249.3-lts-centos7

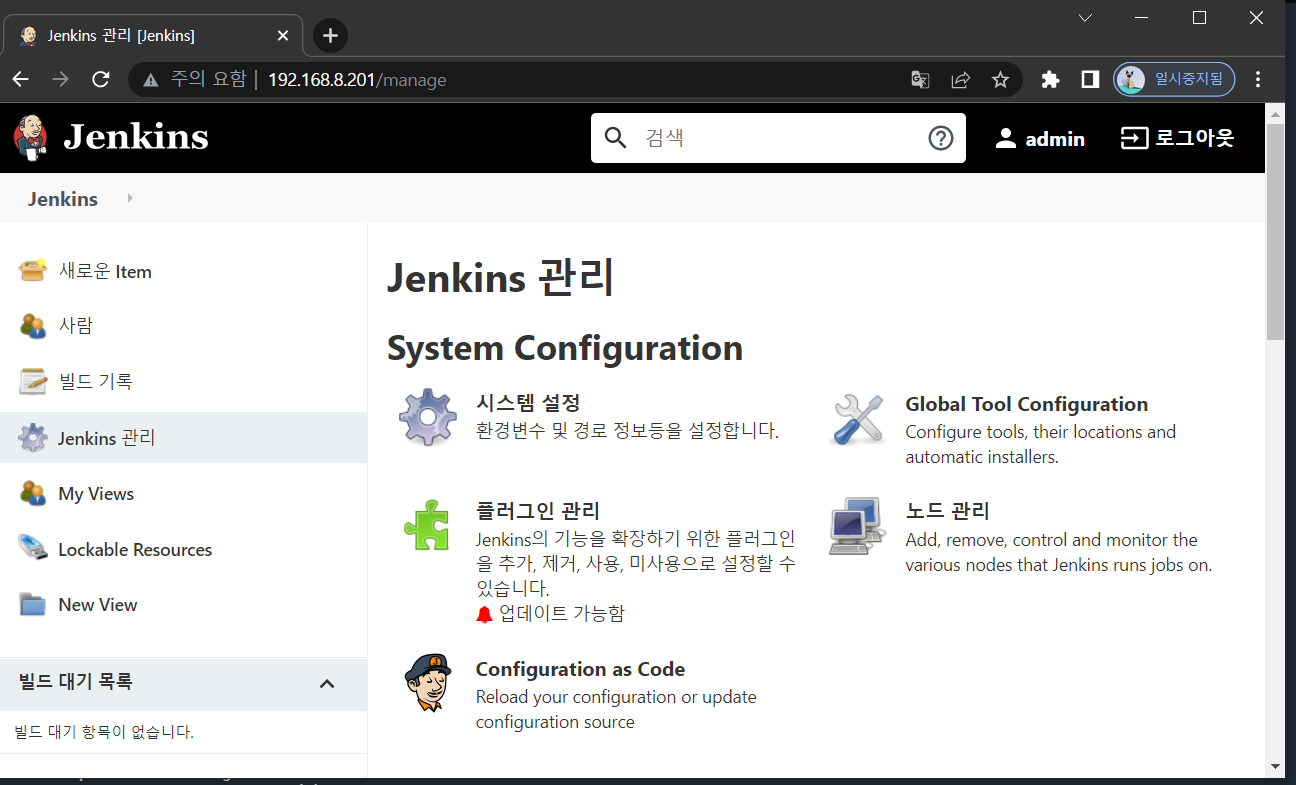

docker pull kiwigrid/k8s-sidecar:0.1.193- 플러그인 설치

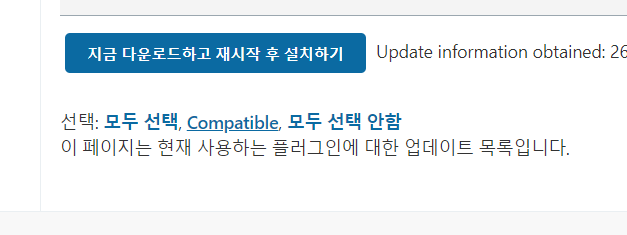

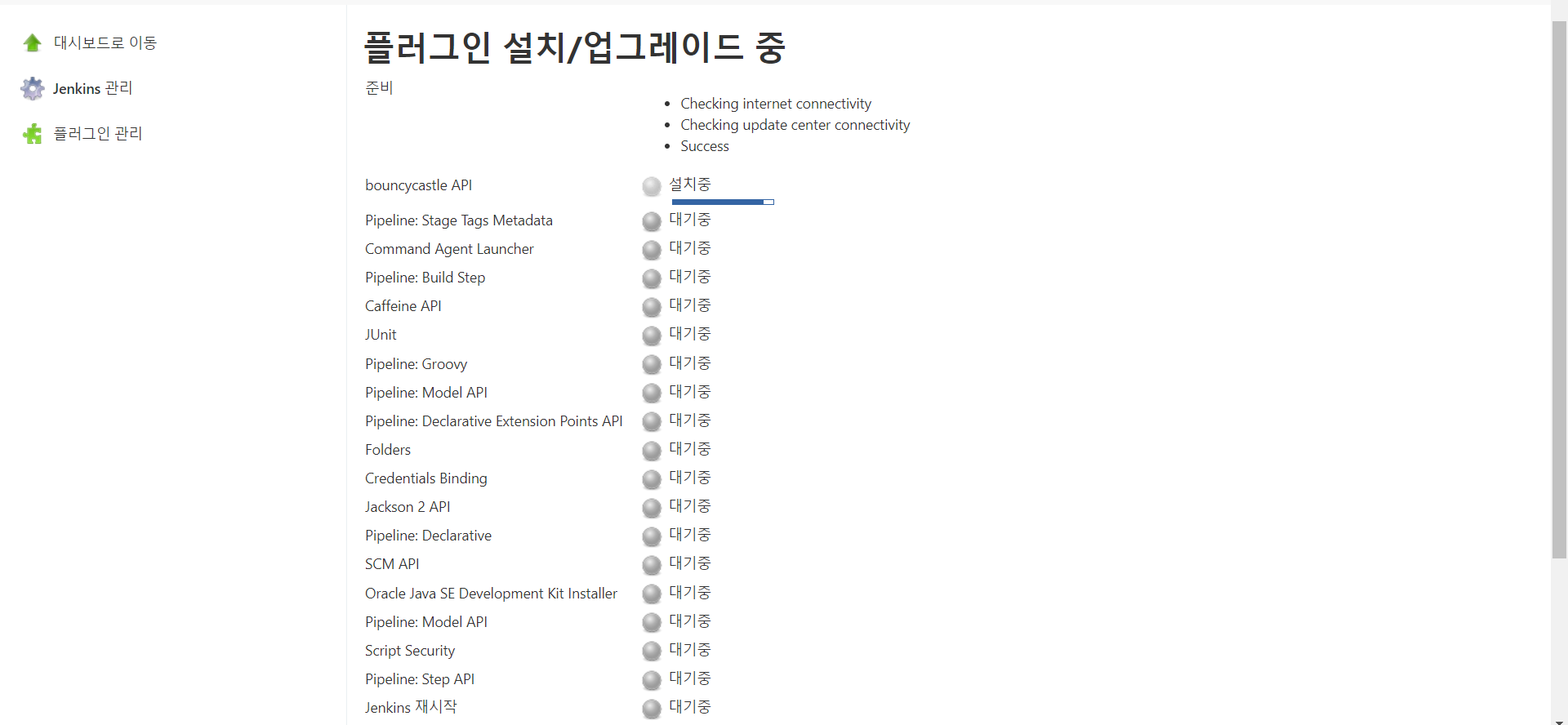

- Install the compatible plugins (select Compatible)


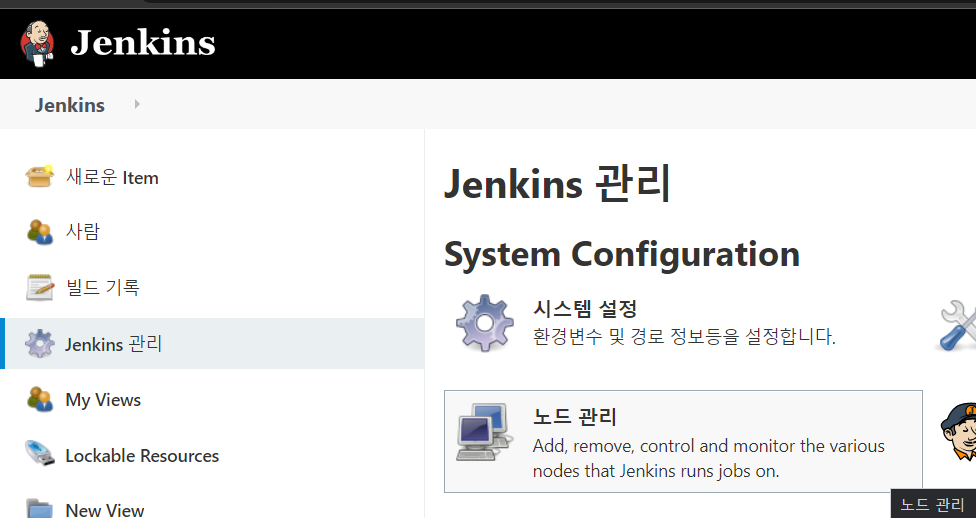

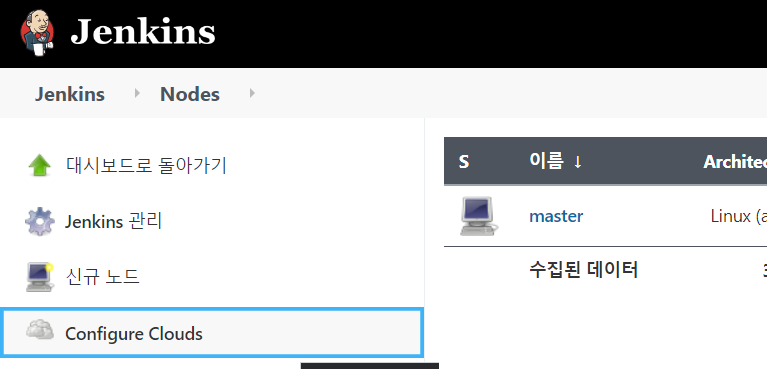

Select Manage Nodes


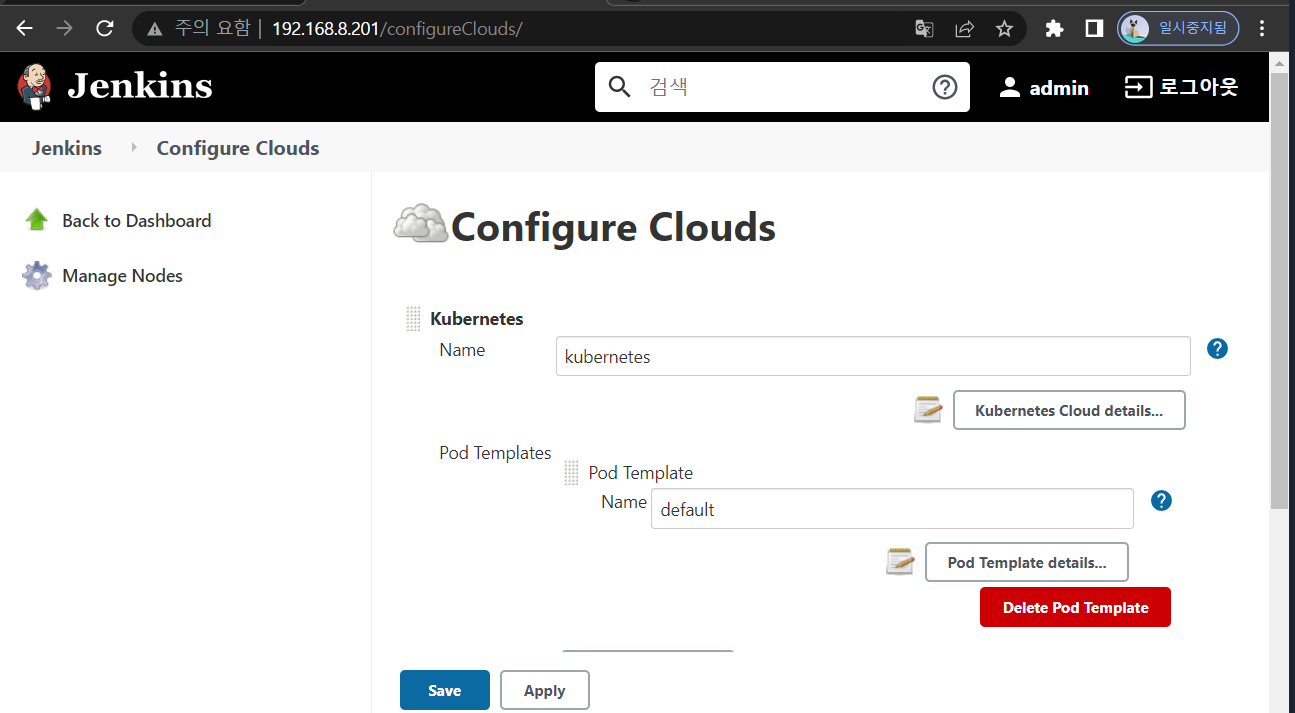

Select Configure Clouds

- Select Pod Template details

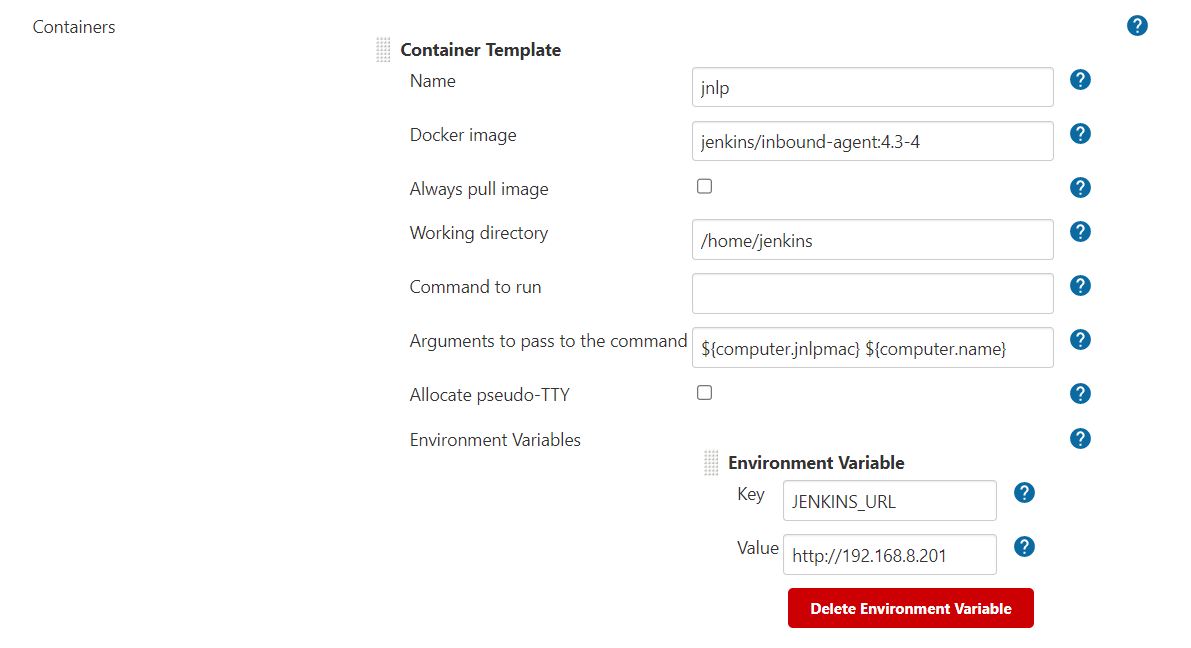

- Edit the environment variables

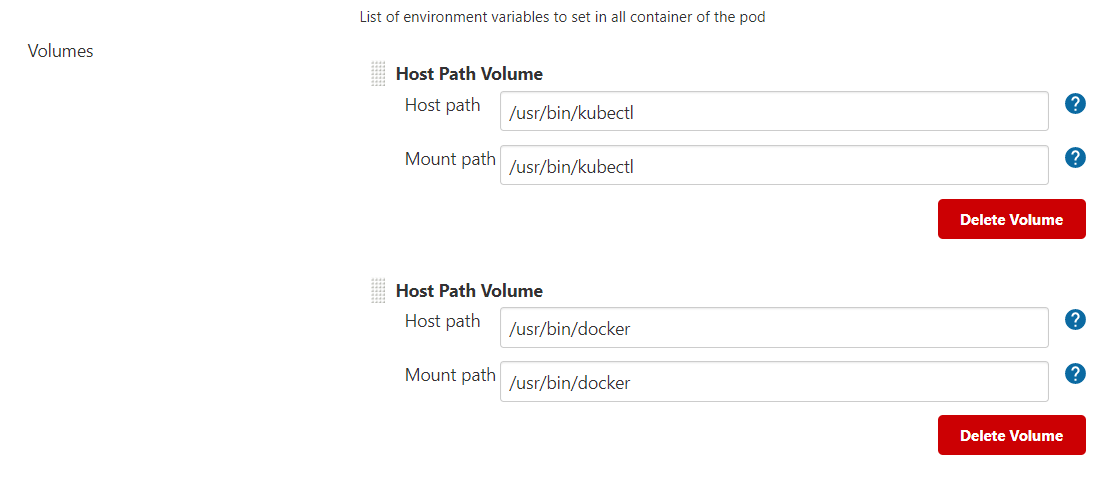

- Fix the Docker path

root@master:~# which docker

/usr/bin/docker

Change the path to /usr/bin/docker.