1. CountVectorizer (distance between sentences)

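- CountVectorizer: a scikit-learn class that converts sentences into count vectors

- Because we only measure distances, there are no labels to learn from (this is an unsupervised task)

- The two key steps are turning each sentence into a vector and then computing the distance between the resulting vectors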
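As a rough illustration of these two steps, the sketch below builds count vectors by hand with the standard library and measures the Euclidean distance between them. The helper names (`build_vocabulary`, `to_count_vector`) and the English toy sentences are assumptions made for this example; they are not part of scikit-learn.

```python
import math
from collections import Counter

def build_vocabulary(docs):
    """Collect every distinct whitespace-separated token, sorted for a stable order."""
    return sorted({token for doc in docs for token in doc.split()})

def to_count_vector(doc, vocabulary):
    """Count how often each vocabulary token occurs in one document."""
    counts = Counter(doc.split())
    return [counts[token] for token in vocabulary]

docs = ["go home", "go my home", "stay here"]
vocabulary = build_vocabulary(docs)
vectors = [to_count_vector(doc, vocabulary) for doc in docs]

# "now" is not in the vocabulary, so it is simply ignored
query = to_count_vector("go home now", vocabulary)
distances = [math.dist(vec, query) for vec in vectors]
closest = distances.index(min(distances))

print(vocabulary)
print(docs[closest], distances[closest])
```

Words that were never seen while building the vocabulary contribute nothing to the query vector, which is also how `vectorizer.transform` treats unseen tokens.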

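- min_df: the minimum document frequency (DF); terms that appear in fewer documents than this are dropped

- analyzer='word': the unit of analysis is the word (e.g. home, go, my ...)

- analyzer='char': the unit of analysis is the character (e.g. a, b, c, d ...)

- sublinear_tf (TfidfVectorizer only, True/False): when True, the term frequency tf is replaced by 1 + log(tf), which dampens very large counts; useful when there are many outliers

- ngram_range: the range of n-gram sizes, i.e. how many consecutive words form one feature. For example, ngram_range=(1, 2) uses groups of 1 to 2 words: 'go', 'home', 'go home'

- max_features: the maximum number of features (vocabulary size) kept in the resulting vectors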

from sklearn.feature_extraction.text import CountVectorizer

from konlpy.tag import Okt  # morphological analysis is essential for Korean text

vectorizer = CountVectorizer(min_df=1)

contents = [
    '상처받은 아이들은 너무 일찍 커버려',
    '내가 상처받은 거 아는 사람 불편해',
    '잘 사는 사람들은 좋은 사람 되기 쉬워',
    '아무 일도 아니야 괜찮아'
]

t = Okt()

contents_tokens = [t.morphs(row) for row in contents]

# Join the morphemes of each sentence back into one space-separated string
contents_for_vectorize = []

for content in contents_tokens:
    sentence = ''
    for word in content:
        sentence = sentence + ' ' + word
    contents_for_vectorize.append(sentence)
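The same joining step is repeated several times in this post, so it could be wrapped in a small helper. This is only a sketch: `join_tokens` is a hypothetical name, and the token lists below are hand-written stand-ins for the output of `Okt().morphs()` (the real tokens may differ).

```python
def join_tokens(token_lists):
    """Turn each list of morphemes back into one space-separated string."""
    return [" ".join(tokens) for tokens in token_lists]

# Hand-written stand-in for morphological analysis output
sample_tokens = [["아무", "일", "도", "아니야", "괜찮아"], ["괜찮아"]]
print(join_tokens(sample_tokens))
```

Unlike the loop, `" ".join` does not leave a leading space, but the vectorizer ignores surrounding whitespace, so the resulting vectors are the same.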

X = vectorizer.fit_transform(contents_for_vectorize)

vectorizer.get_feature_names_out()

num_samples, num_features = X.shape  # (4, 17): four sentences, 17 distinct tokens in the whole corpus

new_post = ['상처받기 싫어 괜찮아']

new_post_tokens = [t.morphs(row) for row in new_post]

# Join the morphemes of the test sentence in the same way as the corpus
new_post_for_vectorize = []

for content in new_post_tokens:
    sentence = ''
    for word in content:
        sentence = sentence + ' ' + word
    new_post_for_vectorize.append(sentence)

# Use transform (not fit_transform) so the test sentence is mapped onto the existing vocabulary
new_post_vec = vectorizer.transform(new_post_for_vectorize)

new_post_vec.toarray()  # as a dense vector: array([[1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0]])

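import scipy as sp
import scipy.linalg  # make sure the linalg submodule is loaded

def dist_raw(v1, v2):
    # Euclidean distance between two sparse row vectors
    delta = v1 - v2
    return sp.linalg.norm(delta.toarray())

# Distance from every sentence in the corpus to the test sentence
dist = [dist_raw(each, new_post_vec) for each in X]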
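For reference, `dist_raw` computes the Euclidean (L2) distance. Writing the two count vectors as $v_1$ and $v_2$ with $n$ features (17 in this example; the symbols are notation introduced here), the quantity is:

```latex
d(v_1, v_2) = \lVert v_1 - v_2 \rVert_2 = \sqrt{\sum_{i=1}^{n} \left( v_{1,i} - v_{2,i} \right)^2}
```

A smaller value means the two sentences share more tokens with similar counts.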

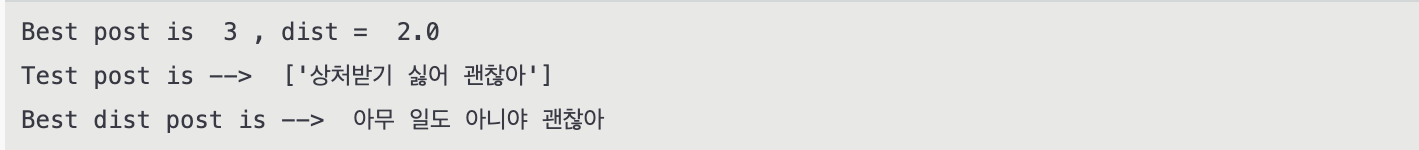

print('Best post is ', dist.index(min(dist)), ', dist= ', min(dist))

print('Test post is -->', new_post)

print('Best dist post is ', contents[dist.index(min(dist))])
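Printing only the minimum hides how close the other sentences are. A minimal sketch of ranking every post by distance is shown below; `rank_by_distance` is a hypothetical helper, and the distances are made-up numbers for illustration rather than results from the data above.

```python
def rank_by_distance(distances, posts):
    """Return (index, distance, post) tuples sorted from closest to farthest."""
    order = sorted(range(len(distances)), key=lambda i: distances[i])
    return [(i, distances[i], posts[i]) for i in order]

# Made-up values; in the walkthrough these would be `dist` and `contents`
example_posts = ["post A", "post B", "post C"]
example_dist = [2.0, 1.0, 2.5]

for index, distance, post in rank_by_distance(example_dist, example_posts):
    print(index, distance, post)
```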

2. TF-IDF

- Weights each word by how often it appears in a document (TF), while down-weighting words that appear in many documents (IDF)
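The weighting idea can be sketched with the textbook formula, raw term count times log(N / document frequency). This is an illustrative variant, not what scikit-learn computes: `TfidfVectorizer` by default uses a smoothed IDF and L2-normalizes each row, so its numbers will differ. The function name `tf_idf` and the toy sentences are assumptions.

```python
import math
from collections import Counter

def tf_idf(docs):
    """Textbook TF-IDF: raw term count times log(N / document frequency)."""
    tokenized = [doc.split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears at least once
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        tf = Counter(tokens)
        weights.append({term: count * math.log(n_docs / df[term])
                        for term, count in tf.items()})
    return weights

docs = ["python is fun", "python is hard", "cooking is fun"]
for doc, weight in zip(docs, tf_idf(docs)):
    print(doc, {term: round(value, 3) for term, value in weight.items()})
```

A word such as "is" that occurs in every document gets weight 0, while a word that occurs in only one document gets the largest weight.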

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer(min_df=1, decode_error='ignore')

X = vectorizer.fit_transform(contents_for_vectorize)

new_post_vec = vectorizer.transform(new_post_for_vectorize)

new_post_vec.toarray()  # show the TF-IDF vector as a dense array

'''
array([[0.78528828, 0.        , 0.        , 0.        , 0.        ,
        0.        , 0.        , 0.6191303 , 0.        , 0.        ,
        0.        , 0.        , 0.        , 0.        , 0.        ,
        0.        , 0.        ]])
'''

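# Normalize each vector to unit length so that no single feature (or a long sentence) dominates the distance

def dist_norm(v1, v2):
    # Divide each vector by its own norm before taking the difference
    v1_normalized = v1 / sp.linalg.norm(v1.toarray())
    v2_normalized = v2 / sp.linalg.norm(v2.toarray())
    delta = v1_normalized - v2_normalized
    return sp.linalg.norm(delta.toarray())

dist = [dist_norm(each, new_post_vec) for each in X]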
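Why normalization helps can be seen with two made-up count vectors that use the same words in the same proportions but differ in length. The sketch below uses only the standard library; the vectors and helper name `unit` are assumptions for illustration.

```python
import math

def unit(v):
    """Scale a vector to length 1; assumes v is not the zero vector."""
    length = math.hypot(*v)
    return [x / length for x in v]

short_post = [1, 1, 0]  # two words, each used once
long_post = [3, 3, 0]   # the same two words, each used three times

raw = math.dist(short_post, long_post)
normalized = math.dist(unit(short_post), unit(long_post))

print(raw)         # clearly greater than zero
print(normalized)  # zero up to floating-point rounding
```

After normalization only the direction of each vector matters, so repeating the same content no longer pushes two posts apart.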

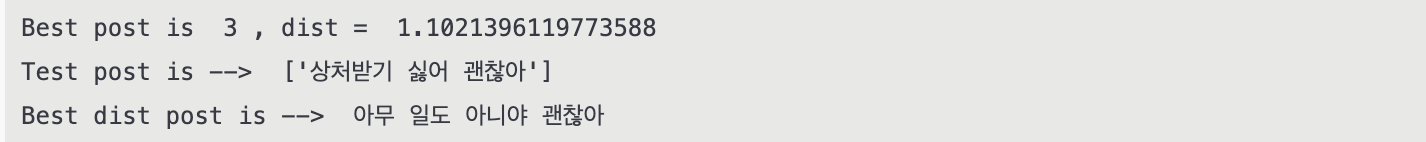

print('Best post is ', dist.index(min(dist)), ', dist= ', min(dist))

print('Test post is -->', new_post)

print('Best dist post is ', contents[dist.index(min(dist))])
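The per-row loops above can also be written with scikit-learn's own helpers, which compute all distances in one call. This is a sketch under the assumption that normalized Euclidean distance is what is wanted; `normalized_distances` is a hypothetical name, and the small dense arrays stand in for the vectorizer output.

```python
import numpy as np
from sklearn.metrics.pairwise import euclidean_distances
from sklearn.preprocessing import normalize

def normalized_distances(X, query_vec):
    """Euclidean distance from every row of X to query_vec after L2-normalizing both."""
    X_unit = normalize(X, norm="l2")
    query_unit = normalize(query_vec, norm="l2")
    return euclidean_distances(X_unit, query_unit).ravel()

# Small dense stand-ins for the corpus matrix and the test-sentence vector
X_demo = np.array([[2.0, 0.0, 0.0], [0.0, 3.0, 0.0], [1.0, 1.0, 0.0]])
query_demo = np.array([[4.0, 0.0, 0.0]])

dist_demo = normalized_distances(X_demo, query_demo)
print(dist_demo, int(dist_demo.argmin()))
```

Both functions also accept the sparse matrices returned by `fit_transform` and `transform`, so no `toarray()` call is needed.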

3. Finding similar sentences in Naver Knowledge iN search results

1) Naver API

import urllib.request
import urllib.parse  # needed for urllib.parse.quote
import json
import datetime

def gen_search_url(api_node, search_text, start_num, disp_num):
    # Build the search URL: API node, URL-encoded query, start position, results per page
    base = "https://openapi.naver.com/v1/search"
    node = "/" + api_node + ".json"
    param_query = "?query=" + urllib.parse.quote(search_text)
    param_start = "&start=" + str(start_num)
    param_disp = "&display=" + str(disp_num)
    return base + node + param_query + param_start + param_disp
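As an alternative sketch, the query string can be assembled with `urllib.parse.urlencode`, which escapes every parameter in one step. `build_search_url` is a hypothetical name; the URL layout is copied from `gen_search_url`. One difference worth noting: `urlencode` writes spaces as `+`, whereas `quote` writes them as `%20`.

```python
from urllib.parse import urlencode, urlparse, parse_qs

def build_search_url(api_node, search_text, start_num, disp_num):
    """Same URL layout as gen_search_url, with urlencode handling the escaping."""
    base = "https://openapi.naver.com/v1/search"
    params = urlencode({"query": search_text, "start": start_num, "display": disp_num})
    return f"{base}/{api_node}.json?{params}"

url = build_search_url("kin", "파이썬 공부", 1, 10)
print(url)
# Decode the query string again to check what the server would receive
print(parse_qs(urlparse(url).query))
```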

client_id = 'client_id'

client_secret = 'client_secret'

def get_result_onepage(url):
    # Send one authenticated request and return the parsed JSON response
    request = urllib.request.Request(url)
    request.add_header("X-Naver-Client-Id", client_id)
    request.add_header("X-Naver-Client-Secret", client_secret)
    response = urllib.request.urlopen(request)
    print("[%s] Url Request Success" % datetime.datetime.now())
    return json.loads(response.read().decode("utf-8"))
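To avoid hard-coding credentials in the script, the request could be built from environment variables. The sketch below only constructs the request object and does not send it; `build_request` and the variable names `NAVER_CLIENT_ID` / `NAVER_CLIENT_SECRET` are assumptions chosen for this example.

```python
import os
import urllib.request

def build_request(url, client_id=None, client_secret=None):
    """Create the request object without sending it.

    NAVER_CLIENT_ID / NAVER_CLIENT_SECRET are hypothetical environment
    variable names used only in this sketch.
    """
    client_id = client_id or os.environ.get("NAVER_CLIENT_ID", "")
    client_secret = client_secret or os.environ.get("NAVER_CLIENT_SECRET", "")
    return urllib.request.Request(url, headers={
        "X-Naver-Client-Id": client_id,
        "X-Naver-Client-Secret": client_secret,
    })

request = build_request(
    "https://openapi.naver.com/v1/search/kin.json?query=python",
    client_id="my-id",
    client_secret="my-secret",
)
print(request.header_items())
```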

def delete_tag(input_str):  # remove the <b> highlight tags from a result string
    input_str = input_str.replace("<b>", "")
    input_str = input_str.replace("</b>", "")
    return input_str
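If the descriptions contain other tags or HTML entities, a more general cleaner may be useful. This sketch assumes such markup can occur in the results, which the post itself does not confirm; `strip_markup` is a hypothetical name.

```python
import html
import re

# Anything between angle brackets is treated as a tag
TAG_PATTERN = re.compile(r"<[^>]+>")

def strip_markup(text):
    """Remove anything that looks like an HTML tag, then decode entities."""
    return html.unescape(TAG_PATTERN.sub("", text))

print(strip_markup("<b>파이썬</b> 공부 &amp; 질문"))
```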

def get_description(pages):
    # Collect the cleaned 'description' field of every search result
    contents = []
    for sentences in pages['items']:
        contents.append(delete_tag(sentences['description']))
    return contents

url = gen_search_url('kin', '파이썬', 10, 10)

one_result = get_result_onepage(url)  # fetch one page of search results

contents = get_description(one_result)  # convert the descriptions into a list

2) Morphological analysis, rejoining the tokens, and vectorizing

from sklearn.feature_extraction.text import CountVectorizer

from konlpy.tag import Okt  # morphological analysis is essential for Korean text

t = Okt()

vectorizer = CountVectorizer(min_df=1)

contents_tokens = [t.morphs(row) for row in contents]

# Join the morphemes of each description back into one space-separated string
contents_for_vectorize = []

for content in contents_tokens:
    sentence = ''
    for word in content:
        sentence = sentence + ' ' + word
    contents_for_vectorize.append(sentence)

X = vectorizer.fit_transform(contents_for_vectorize)

3) Morphological analysis and vectorization of the test sentence

new_post = ['파이썬을 배우는데 좋은 방법이 어떤 것인지 추천해주세요']

new_post_tokens = [t.morphs(row) for row in new_post]

new_post_for_vectorize = []

for content in new_post_tokens:
    sentence = ''
    for word in content:
        sentence = sentence + ' ' + word
    new_post_for_vectorize.append(sentence)

new_post_vec = vectorizer.transform(new_post_for_vectorize)

new_post_vec.toarray()

4) Euclidean distance

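import scipy as sp
import scipy.linalg  # make sure the linalg submodule is loaded

def dist_raw(v1, v2):
    # Euclidean distance between two sparse row vectors
    delta = v1 - v2
    return sp.linalg.norm(delta.toarray())

dist = [dist_raw(each, new_post_vec) for each in X]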

print('Best post is ', dist.index(min(dist)), ', dist= ', min(dist))

print('Test post is -->', new_post)

print('Best dist post is ', contents[dist.index(min(dist))])

'''

Best post is 7 , dist= 5.916079783099616

Test post is --> ['파이썬을 배우는데 좋은 방법이 어떤 것인지 추천해주세요']

Best dist post is 점프 투 파이썬을 공부 했는데 그 다음에 무엇을 해야하나요?

'''

5) Normalize

def dist_norm(v1, v2):  # normalize to unit length so that no single feature dominates the distance
    # Divide each vector by its own norm before taking the difference
    v1_normalized = v1 / sp.linalg.norm(v1.toarray())
    v2_normalized = v2 / sp.linalg.norm(v2.toarray())
    delta = v1_normalized - v2_normalized
    return sp.linalg.norm(delta.toarray())

dist = [dist_norm(each, new_post_vec) for each in X]

print('Best post is ', dist.index(min(dist)), ', dist= ', min(dist))

print('Test post is -->', new_post)

print('Best dist post is ', contents[dist.index(min(dist))])

'''

Best post is 7 , dist= 2.0413812651491097

Test post is --> ['파이썬을 배우는데 좋은 방법이 어떤 것인지 추천해주세요']

Best dist post is 점프 투 파이썬을 공부 했는데 그 다음에 무엇을 해야하나요?

'''

6) TF-IDF

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer(min_df=1, decode_error='ignore')

X = vectorizer.fit_transform(contents_for_vectorize)

new_post_vec = vectorizer.transform(new_post_for_vectorize)

dist = [dist_norm(each, new_post_vec) for each in X]

print('Best post is ', dist.index(min(dist)), ', dist= ', min(dist))

print('Test post is -->', new_post)

print('Best dist post is ', contents[dist.index(min(dist))])

'''

Best post is 1 , dist= 0.0

Test post is --> ['파이썬을 배우는데 좋은 방법이 어떤 것인지 추천해주세요']

Best dist post is 책 내용대로 pyworks라는 파이썬 작업한거 폴더만들아놓은게 있는데 그 폴더를 열고 파이썬 인터렉티브

'''

Reference

1) Zerobase Data School lecture materials

2) https://chan-lab.tistory.com/27