- Configuring HTTPS with a Secret

## Create and enter the working directory

kevin@k8s-master:~/LABs$ mkdir sec-https && cd $_

## Set up the certificate directories

kevin@k8s-master:~/LABs/sec-https$ mkdir -p ./secret/cert

kevin@k8s-master:~/LABs/sec-https$ mkdir -p ./secret/config

kevin@k8s-master:~/LABs/sec-https$ mkdir -p ./secret/kubetmp

## Move to the cert directory

kevin@k8s-master:~/LABs/sec-https$ cd ./secret/cert

## Generate https.key

kevin@k8s-master:~/LABs/sec-https/secret/cert$ openssl genrsa -out https.key 2048

Generating RSA private key, 2048 bit long modulus (2 primes)

.................................................+++++

...............................................+++++

e is 65537 (0x010001)

## Generate https.cert (self-signed, valid for 360 days)

kevin@k8s-master:~/LABs/sec-https/secret/cert$ openssl req -new -x509 -key https.key -out https.cert -days 360 -subj /CN=*.kakao.io
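Before the pair goes into a Secret, it can be worth confirming that the certificate really belongs to the key. The sketch below regenerates a throwaway pair in a scratch directory (the `workdir`, `cert_pub`, and `key_pub` names are illustrative, not part of the lab) and compares the public key embedded in the certificate with the one derived from the private key.

```shell
# Use a scratch directory so the lab's real https.key / https.cert stay untouched.
workdir=$(mktemp -d)

# Same commands as the lab; stderr is silenced to hide key-generation progress output.
openssl genrsa -out "$workdir/https.key" 2048 2>/dev/null
openssl req -new -x509 -key "$workdir/https.key" -out "$workdir/https.cert" \
  -days 360 -subj "/CN=*.kakao.io"

# Extract the public key from each file. nginx refuses to start if they differ.
cert_pub=$(openssl x509 -in "$workdir/https.cert" -noout -pubkey)
key_pub=$(openssl pkey -in "$workdir/https.key" -pubout)

if [ "$cert_pub" = "$key_pub" ]; then
  echo "certificate and key match"
else
  echo "certificate and key do NOT match"
fi
```

The same two extraction commands can be pointed at the lab's own files in the cert directory.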

## Create a secret from https.key and https.cert

kevin@k8s-master:~/LABs/sec-https/secret/cert$ kubectl create secret generic dshub-https --from-file=https.key --from-file=https.cert

## Verify the secret was created

kevin@k8s-master:~/LABs/sec-https/secret/cert$ kubectl get secret/dshub-https

NAME TYPE DATA AGE

dshub-https Opaque 2 32s
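The `Opaque` type with `DATA 2` means the Secret holds two keys whose values are stored base64-encoded, which is an encoding rather than encryption. A minimal illustration of the round trip, using a made-up string in place of the real key material:

```shell
# "hello" stands in for file contents such as https.key (illustrative value only).
encoded=$(printf '%s' "hello" | base64)
echo "$encoded"

# Anyone who can read the Secret object can reverse the encoding.
decoded=$(printf '%s' "$encoded" | base64 -d)
echo "$decoded"
```

On the cluster, the same decoding should apply to a stored value, for example `kubectl get secret dshub-https -o jsonpath='{.data.https\.cert}' | base64 -d`.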

## Move to the config directory

kevin@k8s-master:~/LABs/sec-https/secret/cert$ cd ../config/

## Create the nginx conf file

kevin@k8s-master:~/LABs/sec-https/secret/config$ vi custom-nginx-config.conf

server {

listen 8080;

listen 443 ssl;

server_name www.kakao.io;

ssl_certificate certs/https.cert;

ssl_certificate_key certs/https.key;

ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

ssl_ciphers HIGH:!aNULL:!MD5;

gzip on;

gzip_types text/plain application/xml;

location / {

root /usr/share/nginx/html;

index index.html index.htm;

}

}

## Create the sleep-interval file

kevin@k8s-master:~/LABs/sec-https/secret/config$ vi sleep-interval

5

## Create a configmap from the files under the config directory (run from the parent secret directory)

kevin@k8s-master:~/LABs/sec-https/secret$ kubectl create cm dshub-config --from-file=./config

## Verify

kevin@k8s-master:~/LABs/sec-https/secret$ kubectl describe cm dshub-config

...

Data

====

custom-nginx-config.conf:

----

server {

listen 8080;

listen 443 ssl;

server_name www.kakao.io;

ssl_certificate certs/https.cert;

ssl_certificate_key certs/https.key;

ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

ssl_ciphers HIGH:!aNULL:!MD5;

gzip on;

gzip_types text/plain application/xml;

location / {

root /usr/share/nginx/html;

index index.html index.htm;

}

}

sleep-interval:

----

5

...

## Move back up to the sec-https directory

kevin@k8s-master:~/LABs/sec-https/secret$ cd ..

## Write the pod yaml file

kevin@k8s-master:~/LABs/sec-https$ vi https-pod.yaml

apiVersion: v1

kind: Pod

metadata:

  name: dshub-https

spec:

  containers:

  - image: dbgurum/k8s-lab:env

    env:

    - name: INTERVAL

      valueFrom:

        configMapKeyRef:

          name: dshub-config

          key: sleep-interval

    name: html-generator

    volumeMounts:

    - name: html

      mountPath: /var/htdocs

  - image: nginx:alpine

    name: web-server

    volumeMounts:

    - name: html

      mountPath: /usr/share/nginx/html

      readOnly: true

    - name: config

      mountPath: /etc/nginx/conf.d

      readOnly: true

    - name: certs

      mountPath: /etc/nginx/certs/

      readOnly: true

    ports:

    - containerPort: 80

    - containerPort: 443

  volumes:

  - name: html

    emptyDir: {}

  - name: config

    configMap:

      name: dshub-config

      items:

      - key: custom-nginx-config.conf

        path: https.conf

  - name: certs

    secret:

      secretName: dshub-https

## apply

kevin@k8s-master:~/LABs/sec-https$ kubectl apply -f https-pod.yaml

## Verify

kevin@k8s-master:~/LABs/sec-https$ kubectl get po dshub-https -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

dshub-https 2/2 Running 0 20s 10.109.131.29 k8s-node2 <none> <none>

## port-forward

kevin@k8s-master:~/LABs/sec-https$ kubectl port-forward dshub-https 8443:443 &

[1] 14297

kevin@k8s-master:~/LABs/sec-https$ Forwarding from 127.0.0.1:8443 -> 443

Forwarding from [::1]:8443 -> 443

Handling connection for 8443

## Run from another terminal

kevin@k8s-master:~$ curl https://localhost:8443 -k -v

* Trying 127.0.0.1:8443...

* TCP_NODELAY set

* Connected to localhost (127.0.0.1) port 8443 (#0)

* ALPN, offering h2

* ALPN, offering http/1.1

* successfully set certificate verify locations:

* CAfile: /etc/ssl/certs/ca-certificates.crt

CApath: /etc/ssl/certs

* TLSv1.3 (OUT), TLS handshake, Client hello (1):

* TLSv1.3 (IN), TLS handshake, Server hello (2):

* TLSv1.2 (IN), TLS handshake, Certificate (11):

* TLSv1.2 (IN), TLS handshake, Server key exchange (12):

* TLSv1.2 (IN), TLS handshake, Server finished (14):

* TLSv1.2 (OUT), TLS handshake, Client key exchange (16):

* TLSv1.2 (OUT), TLS change cipher, Change cipher spec (1):

* TLSv1.2 (OUT), TLS handshake, Finished (20):

* TLSv1.2 (IN), TLS handshake, Finished (20):

* SSL connection using TLSv1.2 / ECDHE-RSA-AES256-GCM-SHA384

* ALPN, server accepted to use http/1.1

* Server certificate:

* subject: CN=*.kakao.io

* start date: Oct 7 00:09:43 2022 GMT

* expire date: Oct 2 00:09:43 2023 GMT

* issuer: CN=*.kakao.io

* SSL certificate verify result: self signed certificate (18), continuing anyway.

> GET / HTTP/1.1

> Host: localhost:8443

> User-Agent: curl/7.68.0

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< Server: nginx/1.23.1

< Date: Fri, 07 Oct 2022 00:24:45 GMT

< Content-Type: text/html

< Content-Length: 31

< Last-Modified: Fri, 07 Oct 2022 00:24:43 GMT

< Connection: keep-alive

< ETag: "633f71cb-1f"

< Accept-Ranges: bytes

<

You will be run over by a bus.

* Connection #0 to host localhost left intact

kevin@k8s-master:~$ curl https://localhost:8443 -k -v

* Trying 127.0.0.1:8443...

* TCP_NODELAY set

* Connected to localhost (127.0.0.1) port 8443 (#0)

* ALPN, offering h2

* ALPN, offering http/1.1

* successfully set certificate verify locations:

* CAfile: /etc/ssl/certs/ca-certificates.crt

CApath: /etc/ssl/certs

* TLSv1.3 (OUT), TLS handshake, Client hello (1):

* TLSv1.3 (IN), TLS handshake, Server hello (2):

* TLSv1.2 (IN), TLS handshake, Certificate (11):

* TLSv1.2 (IN), TLS handshake, Server key exchange (12):

* TLSv1.2 (IN), TLS handshake, Server finished (14):

* TLSv1.2 (OUT), TLS handshake, Client key exchange (16):

* TLSv1.2 (OUT), TLS change cipher, Change cipher spec (1):

* TLSv1.2 (OUT), TLS handshake, Finished (20):

* TLSv1.2 (IN), TLS handshake, Finished (20):

* SSL connection using TLSv1.2 / ECDHE-RSA-AES256-GCM-SHA384

* ALPN, server accepted to use http/1.1

* Server certificate:

* subject: CN=*.kakao.io

* start date: Oct 7 00:09:43 2022 GMT

* expire date: Oct 2 00:09:43 2023 GMT

* issuer: CN=*.kakao.io

* SSL certificate verify result: self signed certificate (18), continuing anyway.

> GET / HTTP/1.1

> Host: localhost:8443

> User-Agent: curl/7.68.0

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< Server: nginx/1.23.1

< Date: Fri, 07 Oct 2022 00:24:46 GMT

< Content-Type: text/html

< Content-Length: 31

< Last-Modified: Fri, 07 Oct 2022 00:24:43 GMT

< Connection: keep-alive

< ETag: "633f71cb-1f"

< Accept-Ranges: bytes

<

You will be run over by a bus.

* Connection #0 to host localhost left intact

kevin@k8s-master:~$ curl https://localhost:8443 -k -v

* Trying 127.0.0.1:8443...

* TCP_NODELAY set

* Connected to localhost (127.0.0.1) port 8443 (#0)

* ALPN, offering h2

* ALPN, offering http/1.1

* successfully set certificate verify locations:

* CAfile: /etc/ssl/certs/ca-certificates.crt

CApath: /etc/ssl/certs

* TLSv1.3 (OUT), TLS handshake, Client hello (1):

* TLSv1.3 (IN), TLS handshake, Server hello (2):

* TLSv1.2 (IN), TLS handshake, Certificate (11):

* TLSv1.2 (IN), TLS handshake, Server key exchange (12):

* TLSv1.2 (IN), TLS handshake, Server finished (14):

* TLSv1.2 (OUT), TLS handshake, Client key exchange (16):

* TLSv1.2 (OUT), TLS change cipher, Change cipher spec (1):

* TLSv1.2 (OUT), TLS handshake, Finished (20):

* TLSv1.2 (IN), TLS handshake, Finished (20):

* SSL connection using TLSv1.2 / ECDHE-RSA-AES256-GCM-SHA384

* ALPN, server accepted to use http/1.1

* Server certificate:

* subject: CN=*.kakao.io

* start date: Oct 7 00:09:43 2022 GMT

* expire date: Oct 2 00:09:43 2023 GMT

* issuer: CN=*.kakao.io

* SSL certificate verify result: self signed certificate (18), continuing anyway.

> GET / HTTP/1.1

> Host: localhost:8443

> User-Agent: curl/7.68.0

> Accept: */*

>

* Mark bundle as not supporting multiuse

< HTTP/1.1 200 OK

< Server: nginx/1.23.1

< Date: Fri, 07 Oct 2022 00:25:15 GMT

< Content-Type: text/html

< Content-Length: 94

< Last-Modified: Fri, 07 Oct 2022 00:25:13 GMT

< Connection: keep-alive

< ETag: "633f71e9-5e"

< Accept-Ranges: bytes

<

"You have been in Afghanistan, I perceive."

-- Sir Arthur Conan Doyle, "A Study in Scarlet"

* Connection #0 to host localhost left intact

- Ingress LAB: LoadBalancer using NodePort

## Create the Deployments

## Create and enter the working directory

kevin@k8s-master:~/LABs$ mkdir ing-https && cd $_

## Write the deployment yaml file

kevin@k8s-master:~/LABs/ing-https$ vi phpserver-go-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: phpserver-deployment

  labels:

    app: phpserver

spec:

  replicas: 3

  selector:

    matchLabels:

      app: phpserver

  template:

    metadata:

      labels:

        app: phpserver

    spec:

      containers:

      - name: phpserver-container

        image: dbgurum/phpserver:2.0

        ports:

        - containerPort: 80

---

apiVersion: apps/v1

kind: Deployment

metadata:

  name: goapp-deployment

  labels:

    app: goapp

spec:

  replicas: 3

  selector:

    matchLabels:

      app: goapp

  template:

    metadata:

      labels:

        app: goapp

    spec:

      containers:

      - name: goapp-container

        image: oeckikekk/goapp:1.0

        ports:

        - containerPort: 9090

## Write the service yaml file

kevin@k8s-master:~/LABs/ing-https$ vi phpserver-go-svc.yaml

apiVersion: v1

kind: Service

metadata:

  name: phpserver-lb

spec:

  type: NodePort

  ports:

  - port: 80

    targetPort: 80

  selector:

    app: phpserver

---

apiVersion: v1

kind: Service

metadata:

  name: goapp-lb

spec:

  type: NodePort

  ports:

  - port: 80

    targetPort: 9090

  selector:

    app: goapp

## apply

kevin@k8s-master:~/LABs/ing-https$ kubectl apply -f phpserver-go-deployment.yaml

kevin@k8s-master:~/LABs/ing-https$ kubectl apply -f phpserver-go-svc.yaml

## Generate server.key

kevin@k8s-master:~/LABs/ing-https$ openssl genrsa -out server.key 2048

## Generate server.cert

kevin@k8s-master:~/LABs/ing-https$ openssl req -new -x509 -key server.key -out server.cert -days 360 -subj /CN=php.kakao.io,goapp.kakao.io

## Create a TLS secret from server.key and server.cert

kevin@k8s-master:~/LABs/ing-https$ kubectl create secret tls k8s-secret --cert=server.cert --key=server.key

kevin@k8s-master:~/LABs/ing-https$ kubectl get secrets

NAME TYPE DATA AGE

k8s-secret kubernetes.io/tls 2 29s

## Write the ingress yaml file

kevin@k8s-master:~/LABs/ing-https$ vi phpserver-go-ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

  name: phpserver-goapp-ingress

spec:

  tls:

  - hosts:

    - php.kakao.io

    - goapp.kakao.io

    secretName: k8s-secret

  rules:

  - host: php.kakao.io

    http:

      paths:

      - path: /

        pathType: Prefix

        backend:

          service:

            name: phpserver-lb

            port:

              number: 80

  - host: goapp.kakao.io

    http:

      paths:

      - path: /

        pathType: Prefix

        backend:

          service:

            name: goapp-lb

            port:

              number: 80

## apply

kevin@k8s-master:~/LABs/ing-https$ kubectl apply -f phpserver-go-ingress.yaml

## Check the ingress

kevin@k8s-master:~/LABs/ing-https$ kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

phpserver-goapp-ingress <none> php.kakao.io,goapp.kakao.io 80, 443 94s

kevin@k8s-master:~/LABs/ing-https$ kubectl get deploy,po,svc,ingress -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/goapp-deployment 3/3 3 3 10m goapp-container oeckikekk/goapp:1.0 app=goapp

deployment.apps/phpserver-deployment 3/3 3 3 10m phpserver-container dbgurum/phpserver:2.0 app=phpserver

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/goapp-deployment-765f4f49c-4sqgg 1/1 Running 0 10m 10.109.131.30 k8s-node2 <none> <none>

pod/goapp-deployment-765f4f49c-pggb6 1/1 Running 0 10m 10.111.156.83 k8s-node1 <none> <none>

pod/goapp-deployment-765f4f49c-q4gpt 1/1 Running 0 10m 10.111.156.84 k8s-node1 <none> <none>

pod/phpserver-deployment-c45649987-cxm6m 1/1 Running 0 10m 10.111.156.81 k8s-node1 <none> <none>

pod/phpserver-deployment-c45649987-mhp6z 1/1 Running 0 10m 10.109.131.28 k8s-node2 <none> <none>

pod/phpserver-deployment-c45649987-xbkgd 1/1 Running 0 10m 10.111.156.102 k8s-node1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/goapp-lb NodePort 10.96.213.155 <none> 80:31875/TCP 8m13s app=goapp

service/phpserver-lb NodePort 10.97.89.37 <none> 80:31728/TCP 8m13s app=phpserver

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/phpserver-goapp-ingress <none> php.kakao.io,goapp.kakao.io 80, 443 2m11s

## Extra setup so the hostnames resolve

kevin@k8s-master:~/LABs/ing-https$ sudo vi /etc/hosts

127.0.0.1 localhost

127.0.1.1 k8s-master

192.168.56.100 k8s-master php.kakao.io goapp.kakao.io

192.168.56.101 k8s-node1 php.kakao.io goapp.kakao.io

192.168.56.102 k8s-node2 php.kakao.io goapp.kakao.io

192.168.56.103 k8s-node3 php.kakao.io goapp.kakao.io

...

## goapp access test - confirm that requests reach each pod

kevin@k8s-master:~/LABs/ing-https$ curl goapp.kakao.io:31875

Hostname: goapp-deployment-765f4f49c-4sqgg

IP: [10.109.131.30]

kevin@k8s-master:~/LABs/ing-https$ curl goapp.kakao.io:31875

Hostname: goapp-deployment-765f4f49c-pggb6

IP: [10.111.156.83]

kevin@k8s-master:~/LABs/ing-https$ curl goapp.kakao.io:31875

Hostname: goapp-deployment-765f4f49c-q4gpt

IP: [10.111.156.84]

## phpserver access test - confirm that requests reach each pod

kevin@k8s-master:~/LABs/ing-https$ curl goapp.kakao.io:31728

Container hostName & Port#: phpserver-deployment-c45649987-xbkgd

kevin@k8s-master:~/LABs/ing-https$ curl goapp.kakao.io:31728

Container hostName & Port#: phpserver-deployment-c45649987-mhp6z

kevin@k8s-master:~/LABs/ing-https$ curl goapp.kakao.io:31728

Container hostName & Port#: phpserver-deployment-c45649987-cxm6m

Deployment

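: A higher-level abstraction; the top-level object used to manage pods.

Pod and Deployment are stateless objects. <=> Stateful objects also exist.

ReplicationController (rc) is the predecessor of ReplicaSet (rs).

└ Replicates pods => service stability (zero downtime), HA (high availability), and LB

└ Set, for example, replicas=3 => keeps the state the user declared

└ Always maintains the specified number of pods.

Rolling update

└ Updates without downtime.

Rollout (undo -> revision; up to 10 previous revisions are kept by default)

└ Can roll back to any retained revision at any time.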

spec

- replicas: keeps the number of copies

- selector: checks for pods whose labels match and links them

- template: if no matching pod exists, creates a new pod from the template

- nginx deployment

## Create and enter the working directory

kevin@k8s-master:~/LABs$ mkdir deploy && cd $_

## Write the yaml file

kevin@k8s-master:~/LABs/deploy$ vi nginx-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: nginx-deploy

  labels:

    app: myweb

spec:

  replicas: 3

  selector:

    matchLabels:

      app: myweb

  template:

    metadata:

      labels:

        app: myweb

    spec:

      containers:

      - image: nginx:1.14

        name: myweb-container

        ports:

        - containerPort: 80

## apply

kevin@k8s-master:~/LABs/deploy$ kubectl apply -f nginx-deploy.yaml

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy,po,rs -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/nginx-deploy 3/3 3 3 76s myweb-container nginx:1.14 app=myweb

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-deploy-7fccc9d87f-6bnjx 1/1 Running 0 76s 10.111.156.85 k8s-node1 <none> <none>

pod/nginx-deploy-7fccc9d87f-fkcph 1/1 Running 0 76s 10.109.131.36 k8s-node2 <none> <none>

pod/nginx-deploy-7fccc9d87f-tmvtp 1/1 Running 0 76s 10.109.131.33 k8s-node2 <none> <none>

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/nginx-deploy-7fccc9d87f 3 3 3 76s myweb-container nginx:1.14 app=myweb,pod-template-hash=7fccc9d87f

## Watch from another terminal

kevin@k8s-master:~$ kubectl get po -w

## After a delete, the pods can be seen going to Terminating

kevin@k8s-master:~/LABs/deploy$ kubectl delete -f nginx-deploy.yaml

nginx-deploy-7fccc9d87f-tmvtp 1/1 Terminating 0 4m52s

nginx-deploy-7fccc9d87f-6bnjx 1/1 Terminating 0 4m52s

nginx-deploy-7fccc9d87f-fkcph 1/1 Terminating 0 4m52s

nginx-deploy-7fccc9d87f-6bnjx 1/1 Terminating 0 4m52s

nginx-deploy-7fccc9d87f-tmvtp 1/1 Terminating 0 4m52s

nginx-deploy-7fccc9d87f-fkcph 1/1 Terminating 0 4m52s

nginx-deploy-7fccc9d87f-fkcph 0/1 Terminating 0 4m53s

nginx-deploy-7fccc9d87f-fkcph 0/1 Terminating 0 4m53s

nginx-deploy-7fccc9d87f-fkcph 0/1 Terminating 0 4m53s

nginx-deploy-7fccc9d87f-6bnjx 0/1 Terminating 0 4m53s

nginx-deploy-7fccc9d87f-6bnjx 0/1 Terminating 0 4m53s

nginx-deploy-7fccc9d87f-6bnjx 0/1 Terminating 0 4m53s

nginx-deploy-7fccc9d87f-tmvtp 0/1 Terminating 0 4m53s

nginx-deploy-7fccc9d87f-tmvtp 0/1 Terminating 0 4m53s

nginx-deploy-7fccc9d87f-tmvtp 0/1 Terminating 0 4m53s

nginx-deploy-7fccc9d87f-5xfxd 0/1 Pending 0 0s

nginx-deploy-7fccc9d87f-5xfxd 0/1 Pending 0 0s

nginx-deploy-7fccc9d87f-2q4pd 0/1 Pending 0 0s

nginx-deploy-7fccc9d87f-nkmqs 0/1 Pending 0 0s

nginx-deploy-7fccc9d87f-2q4pd 0/1 Pending 0 1s

nginx-deploy-7fccc9d87f-nkmqs 0/1 Pending 0 1s

## Apply again

kevin@k8s-master:~/LABs/deploy$ kubectl apply -f nginx-deploy.yaml

nginx-deploy-7fccc9d87f-5xfxd 0/1 ContainerCreating 0 1s

nginx-deploy-7fccc9d87f-2q4pd 0/1 ContainerCreating 0 1s

nginx-deploy-7fccc9d87f-nkmqs 0/1 ContainerCreating 0 1s

nginx-deploy-7fccc9d87f-nkmqs 0/1 ContainerCreating 0 1s

nginx-deploy-7fccc9d87f-2q4pd 0/1 ContainerCreating 0 1s

nginx-deploy-7fccc9d87f-5xfxd 0/1 ContainerCreating 0 1s

nginx-deploy-7fccc9d87f-2q4pd 1/1 Running 0 2s

nginx-deploy-7fccc9d87f-5xfxd 1/1 Running 0 3s

nginx-deploy-7fccc9d87f-nkmqs 1/1 Running 0 3s

## Deleting a single pod makes a replacement get created right away

kevin@k8s-master:~/LABs/deploy$ kubectl delete pod/nginx-deploy-7fccc9d87f-2q4pd

nginx-deploy-7fccc9d87f-2q4pd 1/1 Terminating 0 2m20s

nginx-deploy-7fccc9d87f-jjtwf 0/1 Pending 0 0s

nginx-deploy-7fccc9d87f-jjtwf 0/1 Pending 0 0s

nginx-deploy-7fccc9d87f-jjtwf 0/1 ContainerCreating 0 0s

nginx-deploy-7fccc9d87f-2q4pd 1/1 Terminating 0 2m21s

nginx-deploy-7fccc9d87f-jjtwf 0/1 ContainerCreating 0 1s

nginx-deploy-7fccc9d87f-2q4pd 0/1 Terminating 0 2m22s

nginx-deploy-7fccc9d87f-2q4pd 0/1 Terminating 0 2m22s

nginx-deploy-7fccc9d87f-2q4pd 0/1 Terminating 0 2m22s

nginx-deploy-7fccc9d87f-jjtwf 1/1 Running 0 3s

## View pod details as json

kevin@k8s-master:~/LABs/deploy$ kubectl get po nginx-deploy-7fccc9d87f-5xfxd -o json

- Changing the pod image version

## Find the image the deployment is using

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy nginx-deploy -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

nginx:1.14

## Change the image version

kevin@k8s-master:~/LABs/deploy$ kubectl set image deploy nginx-deploy myweb-container=nginx:1.17

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy nginx-deploy -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

nginx:1.17

## Change to nginx:1.19

kevin@k8s-master:~/LABs/deploy$ kubectl set image deploy nginx-deploy myweb-container=nginx:1.19

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy nginx-deploy -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

nginx:1.19

## View the history before rolling back to an earlier version

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deploy nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none> ## 1.14

2 <none> ## 1.17

3 <none> ## 1.19

## Roll back to the immediately preceding revision - currently 1.19, so this returns to 1.17

kevin@k8s-master:~/LABs/deploy$ kubectl rollout undo deploy nginx-deploy

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy nginx-deploy -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

nginx:1.17

## Revision 2 was reused and became revision 4

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deploy nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none> ## 1.14

3 <none> ## 1.19

4 <none> ## 1.17
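The renumbering seen in this history can be reproduced with a toy model. This is only a sketch of the bookkeeping, not kubectl itself; the `revs`, `next_rev`, and `rollback_to` names are made up for illustration.

```shell
# Toy model of a Deployment's rollout history (not kubectl itself).
# Each entry is "revision=image"; next_rev is the number the next rollout receives.
revs="1=nginx:1.14 2=nginx:1.17 3=nginx:1.19"
next_rev=4

# rollback_to REV: drop REV from the list and re-append its image under a new number.
rollback_to() {
  target=$1
  kept=""
  image=""
  for entry in $revs; do
    if [ "${entry%%=*}" = "$target" ]; then
      image=${entry#*=}
    else
      kept="$kept $entry"
    fi
  done
  [ -n "$image" ] || { echo "revision $target not found" >&2; return 1; }
  revs="${kept# } $next_rev=$image"
  next_rev=$((next_rev + 1))
}

rollback_to 2   # a plain "rollout undo" from revision 3 targets revision 2
echo "$revs"
```

In this model the rolled-back revision disappears from its old slot and reappears at the end, which is why revision 2 is missing from the listing while 4 carries the 1.17 image.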

## Change to nginx:1.21.1-alpine

kevin@k8s-master:~/LABs/deploy$ kubectl set image deploy nginx-deploy myweb-container=nginx:1.21.1-alpine

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy nginx-deploy -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

nginx:1.21.1-alpine

## history - revision 5 was created

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deploy nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none> ## 1.14

3 <none> ## 1.19

4 <none> ## 1.17

5 <none> ## 1.21.1-alpine

## Check the image version for each REVISION number

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deployment nginx-deploy --revision=1

Image: nginx:1.14

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deployment nginx-deploy --revision=3

Image: nginx:1.19

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deployment nginx-deploy --revision=4

Image: nginx:1.17

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deployment nginx-deploy --revision=5

Image: nginx:1.21.1-alpine

## Reuse revision 3, which becomes revision 6

kevin@k8s-master:~/LABs/deploy$ kubectl rollout undo deployment nginx-deploy --to-revision=3

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy nginx-deploy -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

nginx:1.19

## history

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deployment nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none> ## 1.14

4 <none> ## 1.17

5 <none> ## 1.21.1-alpine

6 <none> ## 1.19

## Change to 1.23.1-alpine

kevin@k8s-master:~/LABs/deploy$ kubectl set image deploy nginx-deploy myweb-container=nginx:1.23.1-alpine

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy nginx-deploy -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

nginx:1.23.1-alpine

## history

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deployment nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none> ## 1.14

4 <none> ## 1.17

5 <none> ## 1.21.1-alpine

6 <none> ## 1.19

7 <none> ## 1.23.1-alpine

## Check the image version of revision 5

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deployment nginx-deploy --revision=5

Image: nginx:1.21.1-alpine

## Reuse revision 5, which becomes revision 8

kevin@k8s-master:~/LABs/deploy$ kubectl rollout undo deployment nginx-deploy --to-revision=5

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy nginx-deploy -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

nginx:1.21.1-alpine

revisionHistoryLimit=10

By default up to 10 old revisions are kept in the history. The limit can be changed by setting revisionHistoryLimit in the yaml file.

- scale out / in

## Double the replicas

kevin@k8s-master:~/LABs/deploy$ kubectl scale deploy nginx-deploy --replicas=6

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy,po -o wide | grep nginx

deployment.apps/nginx-deploy 6/6 6 6 39m myweb-container nginx:1.21.1-alpine app=myweb

pod/nginx-deploy-74fb76486-2cmpw 1/1 Running 0 9s 10.109.131.54 k8s-node2 <none> <none>

pod/nginx-deploy-74fb76486-5gjs8 1/1 Running 0 9s 10.111.156.113 k8s-node1 <none> <none>

pod/nginx-deploy-74fb76486-hjcjv 1/1 Running 0 18m 10.111.156.112 k8s-node1 <none> <none>

pod/nginx-deploy-74fb76486-t7s7h 1/1 Running 0 9s 10.109.131.45 k8s-node2 <none> <none>

pod/nginx-deploy-74fb76486-x7dcq 1/1 Running 0 18m 10.109.131.47 k8s-node2 <none> <none>

pod/nginx-deploy-74fb76486-x9fh2 1/1 Running 0 18m 10.111.156.111 k8s-node1 <none> <none>

## Scale in

kevin@k8s-master:~/LABs/deploy$ kubectl scale deploy nginx-deploy --replicas=1

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy,po -o wide | grep nginx

deployment.apps/nginx-deploy 1/1 1 1 39m myweb-container nginx:1.21.1-alpine app=myweb

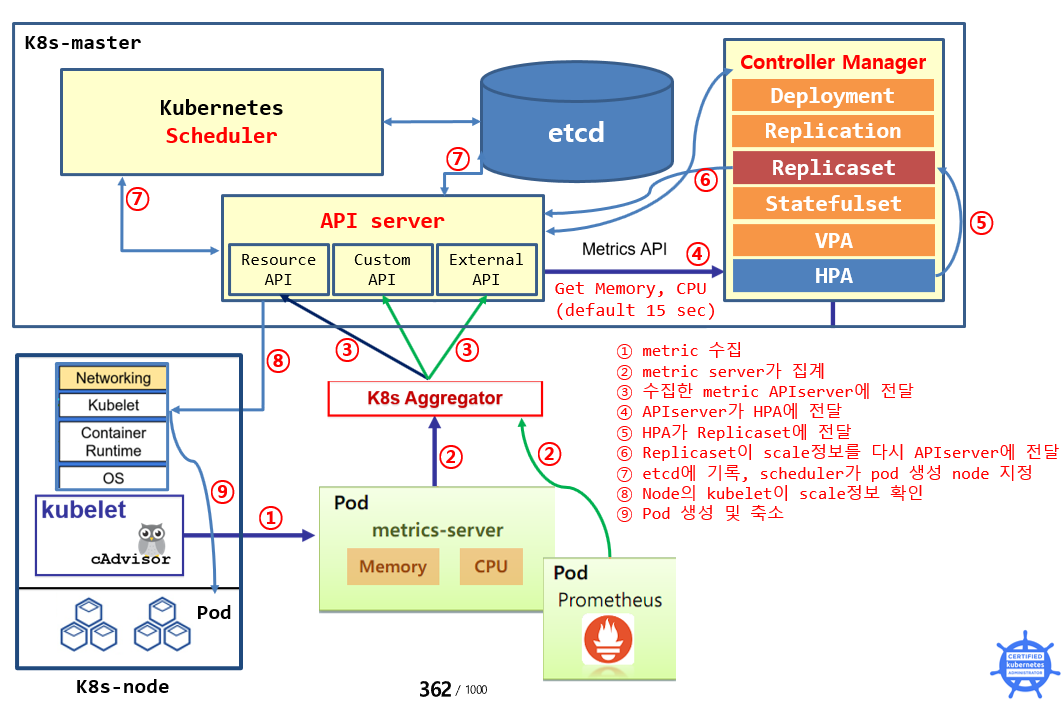

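pod/nginx-deploy-74fb76486-x7dcq 1/1 Running 0 19m 10.109.131.47 k8s-node2 <none> <none>

HPA

: Computes an appropriate number of pods from CPU and memory utilization (scale out/in).

A scalability object: the Horizontal (scale-out) Pod Autoscaler <=> VPA

You define the threshold yourself (e.g. cpu > 50).

└ Targets are Deployment, ReplicaSet, and StatefulSet. A DaemonSet cannot be scaled this way.

Why use it

It analyzes usage and adjusts resources to match the pattern automatically.

The goal is to scale the workload automatically to match demand.

e.g. the number of visitors to an online shopping mall

On quiet days, run 2 application instances (pods)

On days with more users, such as Black Friday, grow dynamically to 10

How is the adjustment calculated?

desired replicas = ceil[current replicas * (current metric value / desired metric value)]

ceil : round up to the next integer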

1.3 => 2
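A minimal sketch of the formula above in shell arithmetic. The function name `desired_replicas` is made up for illustration, and the sketch deliberately ignores the min/max bounds, tolerance, and stabilization behavior that the real controller also applies.

```shell
# desired_replicas CURRENT_REPLICAS CURRENT_METRIC TARGET_METRIC
# Integer-only ceiling: ceil(a / b) == (a + b - 1) / b for positive integers.
desired_replicas() {
  current=$1
  metric=$2
  target=$3
  echo $(( (current * metric + target - 1) / target ))
}

desired_replicas 2 125 50   # 2 * 125 / 50 = 5 exactly
desired_replicas 4 70 50    # 5.6 rounds up to 6
desired_replicas 1 65 50    # 1.3 rounds up to 2
```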

How HPA works

- Check the API version

kevin@k8s-master:~/LABs/deploy$ kubectl api-resources | grep -i horizon

horizontalpodautoscalers hpa autoscaling/v2 true HorizontalPodAutoscaler

- Installing metrics-server

## apply

kevin@k8s-master:~$ kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/latest/download/components.yaml

## Edit the settings

kevin@k8s-master:~$ kubectl edit deployment -n kube-system metrics-server

- args:

  - --cert-dir=/tmp

  - --secure-port=4443

  - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

## added

  - --kubelet-insecure-tls

  - --kubelet-use-node-status-port

  - --metric-resolution=15s
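As a non-interactive alternative to `kubectl edit`, a JSON patch can append a flag to the args list. This is a sketch under assumptions: the helper name `add_metrics_server_arg` and the `KUBECTL` override variable are made up here, and it assumes metrics-server is the first container in the pod template.

```shell
# KUBECTL can be overridden (e.g. KUBECTL=echo) to preview the command without a cluster.
KUBECTL=${KUBECTL:-kubectl}

# add_metrics_server_arg FLAG: append one flag to the first container's args list.
# The trailing "/-" in the JSON patch path means "append to the end of the array".
add_metrics_server_arg() {
  $KUBECTL patch deployment metrics-server -n kube-system --type=json \
    -p "[{\"op\":\"add\",\"path\":\"/spec/template/spec/containers/0/args/-\",\"value\":\"$1\"}]"
}
```

Calling `add_metrics_server_arg --kubelet-insecure-tls` would then add the flag listed right after the "added" marker in the notes.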

## Confirm AVAILABLE is True

kevin@k8s-master:~$ kubectl get apiservices | grep metrics

v1beta1.metrics.k8s.io kube-system/metrics-server True 8m40s

## If the command below works, the setup is correct

kevin@k8s-master:~$ kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master 363m 9% 1611Mi 42%

k8s-node1 462m 11% 1965Mi 51%

k8s-node2 412m 10% 2021Mi 52%

- Trying out HPA

## Create and enter the working directory

kevin@k8s-master:~/LABs$ mkdir hpa && cd $_

## Write the deployment and service yaml file

kevin@k8s-master:~/LABs/hpa$ vi hpa-cpu50.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: hpa-cpu50

  labels:

    resource: cpu

spec:

  replicas: 2

  selector:

    matchLabels:

      resource: cpu

  template:

    metadata:

      labels:

        resource: cpu

    spec:

      containers:

      - image: dbgurum/k8s-lab:hpa

        name: hpa-cpu50

        ports:

        - containerPort: 8080

        resources:

          requests:

            cpu: 10m

          limits:

            cpu: 20m

---

apiVersion: v1

kind: Service

metadata:

  name: hpa-cpu50-svc

spec:

  selector:

    resource: cpu

  ports:

  - port: 8080

    targetPort: 8080

    nodePort: 30008

  type: NodePort

## apply

kevin@k8s-master:~/LABs/hpa$ kubectl apply -f hpa-cpu50.yaml

## Check the details

kevin@k8s-master:~/LABs/hpa$ kubectl describe deployments.apps hpa-cpu50

## Generate the hpa yaml file

kevin@k8s-master:~/LABs/hpa$ kubectl autoscale deployment hpa-cpu50 --cpu-percent=50 --min=1 --max=10 --dry-run=client -o yaml > hpa-cpu50-autos.yaml

## Copy it

kevin@k8s-master:~/LABs/hpa$ cp hpa-cpu50-autos.yaml hpa-cpu50-autos-2.yaml

## Review the yaml file

kevin@k8s-master:~/LABs/hpa$ vi hpa-cpu50-autos.yaml

apiVersion: autoscaling/v1

kind: HorizontalPodAutoscaler

metadata:

  creationTimestamp: null

  name: hpa-cpu50

spec:

  maxReplicas: 10

  minReplicas: 1

  scaleTargetRef:

    apiVersion: apps/v1

    kind: Deployment

    name: hpa-cpu50

  targetCPUUtilizationPercentage: 50

status:

  currentReplicas: 0

  desiredReplicas: 0

## Edit the second yaml file (the metrics list requires autoscaling/v2)

kevin@k8s-master:~/LABs/hpa$ vi hpa-cpu50-autos-2.yaml

apiVersion: autoscaling/v2

kind: HorizontalPodAutoscaler

metadata:

  creationTimestamp: null

  name: hpa-cpu50

spec:

  maxReplicas: 10

  minReplicas: 1

  scaleTargetRef:

    apiVersion: apps/v1

    kind: Deployment

    name: hpa-cpu50

  metrics:

  - type: Resource

    resource:

      name: cpu

      target:

        type: Utilization

        averageUtilization: 50

## apply

kevin@k8s-master:~/LABs/hpa$ kubectl apply -f hpa-cpu50-autos.yaml

## hpa watch

kevin@k8s-master:~$ kubectl get hpa -w

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-cpu50 Deployment/hpa-cpu50 0%/50% 1 10 2 20s

## Send a lot of requests

kevin@k8s-master:~/LABs/hpa$ while true; do curl 192.168.56.101:30008/hostname; sleep 0.05; done

^C

## REPLICAS can be seen growing step by step

## When the requests stop, it shrinks again

kevin@k8s-master:~$ kubectl get hpa -w

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-cpu50 Deployment/hpa-cpu50 0%/50% 1 10 2 20s

hpa-cpu50 Deployment/hpa-cpu50 125%/50% 1 10 2 2m30s

hpa-cpu50 Deployment/hpa-cpu50 70%/50% 1 10 4 2m45s

hpa-cpu50 Deployment/hpa-cpu50 55%/50% 1 10 5 3m

hpa-cpu50 Deployment/hpa-cpu50 62%/50% 1 10 5 3m16s

hpa-cpu50 Deployment/hpa-cpu50 46%/50% 1 10 7 3m31s
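The TARGETS column reports average CPU usage as a percentage of the container's CPU request (10m in hpa-cpu50.yaml), not of the node or of the limit, which is why values above 100% can appear. A minimal sketch of that arithmetic; the function name and the usage figures are illustrative, not measurements from this lab.

```shell
# cpu_utilization USAGE_MILLICORES REQUEST_MILLICORES -> whole percent, rounded down
cpu_utilization() {
  echo $(( $1 * 100 / $2 ))
}

cpu_utilization 5 10    # half of the request
cpu_utilization 12 10   # above the request, possible because the limit is 20m
```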

## Delete

kevin@k8s-master:~/LABs/hpa$ kubectl delete deployments.apps hpa-cpu50

kevin@k8s-master:~/LABs/hpa$ kubectl delete hpa hpa-cpu50

- Apply autoscaling to this deployment (webapp) with a minimum of 10 and a maximum of 20 replicas and a target CPU of 85%, then verify that the hpa is created and the replicas increase from 1 to 10.

Clean up the cluster by deleting the deployment and hpa you just created.
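No solution is recorded in these notes. One possible approach, assuming a deployment named webapp already exists in the current namespace, is sketched below; the function names and the `KUBECTL` override variable are made up for illustration.

```shell
# KUBECTL can be overridden (e.g. KUBECTL=echo) to preview the commands without a cluster.
KUBECTL=${KUBECTL:-kubectl}

# Create the HPA; without --name it takes the deployment's name, "webapp".
create_webapp_hpa() {
  $KUBECTL autoscale deployment webapp --cpu-percent=85 --min=10 --max=20
}

# Verify: the hpa should exist and the deployment should grow from 1 to 10 replicas.
check_webapp_hpa() {
  $KUBECTL get hpa webapp
  $KUBECTL get deployment webapp
}

# Clean up both objects afterwards.
cleanup_webapp() {
  $KUBECTL delete hpa webapp
  $KUBECTL delete deployment webapp
}
```

Because the minimum is 10, the replica count should rise to 10 even with no load on the pods.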

Practice

- Version management with redis

Create a deployment "redis-db" using redis:5 (replicas=2)

Observe with watch (-w)

Perform version management -> use redis:5, 6, and 7

## Write the deploy yaml file

kevin@k8s-master:~/LABs/deploy$ vi redis-db-deply.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  name: redis-db

  labels:

    app: redisdb

spec:

  revisionHistoryLimit: 3

  replicas: 2

  selector:

    matchLabels:

      app: redisdb

  template:

    metadata:

      labels:

        app: redisdb

    spec:

      containers:

      - image: redis:5

        name: redis-container

        ports:

        - containerPort: 6379

## apply

kevin@k8s-master:~/LABs/deploy$ kubectl apply -f redis-db-deply.yaml

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy,po,rs -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/redis-db 2/2 2 2 16s redis-container redis:5 app=redisdb

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/redis-db-86b4959cf8-9jtn6 1/1 Running 0 16s 10.109.131.53 k8s-node2 <none> <none>

pod/redis-db-86b4959cf8-fg87h 1/1 Running 0 16s 10.111.156.119 k8s-node1 <none> <none>

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/redis-db-86b4959cf8 2 2 2 16s redis-container redis:5 app=redisdb,pod-template-hash=86b4959cf8

## watch

kevin@k8s-master:~$ kubectl get po -w

## Change the version

kevin@k8s-master:~/LABs/hpa$ kubectl set image deploy redis-db redis-container=redis:6

## Verify

kevin@k8s-master:~/LABs/hpa$ kubectl get deploy redis-db -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

redis:6

- Creating a deployment with create

## Generate the deployment yaml

kevin@k8s-master:~/LABs/deploy$ kubectl create deployment redis-deploy --image=nginx:1.23.1-alpine --dry-run=client -o yaml > redis-deploy.yaml

## Review the yaml file

kevin@k8s-master:~/LABs/deploy$ vi redis-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  creationTimestamp: null

  labels:

    app: redis-deploy

  name: redis-deploy

spec:

  replicas: 1

  selector:

    matchLabels:

      app: redis-deploy

  strategy: {}

  template:

    metadata:

      creationTimestamp: null

      labels:

        app: redis-deploy

    spec:

      containers:

      - image: nginx:1.23.1-alpine

        name: nginx

        resources: {}

status: {}

- Creating a service with create

## Create a deployment

kevin@k8s-master:~/LABs/deploy$ kubectl create deployment myweb88 --image=nginx:1.23.1-alpine --port=80

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy,po,svc | grep -i myweb88

deployment.apps/myweb88 1/1 1 1 25s

pod/myweb88-75b5d55ccd-xmgqr 1/1 Running 0 25s

## Create a deployment with 3 replicas

kevin@k8s-master:~/LABs/deploy$ kubectl create deployment myweb77 --image=nginx:1.23.1-alpine --port=80 --replicas=3

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy,po,svc | grep -i myweb77

deployment.apps/myweb77 3/3 3 3 3s

pod/myweb77-dd9477d68-22cql 1/1 Running 0 3s

pod/myweb77-dd9477d68-dd4qg 1/1 Running 0 3s

pod/myweb77-dd9477d68-n2xws 1/1 Running 0 3s

## Create a service

## The default type is ClusterIP

kevin@k8s-master:~/LABs/deploy$ kubectl expose deploy myweb77 --name=myweb-svc --port=8765 --target-port=80 --dry-run=client -o yaml > myweb-svc.yaml

## Review the yaml file

kevin@k8s-master:~/LABs/deploy$ vi myweb-svc.yaml

apiVersion: v1

kind: Service

metadata:

  creationTimestamp: null

  labels:

    app: myweb77

  name: myweb-svc

spec:

  ports:

  - port: 8765

    protocol: TCP

    targetPort: 80

  selector:

    app: myweb77

status:

  loadBalancer: {}

## apply

kevin@k8s-master:~/LABs/deploy$ kubectl apply -f myweb-svc.yaml

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy,po,svc -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/myweb77 3/3 3 3 2m58s nginx nginx:1.23.1-alpine app=myweb77

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/myweb77-dd9477d68-22cql 1/1 Running 0 2m58s 10.109.131.57 k8s-node2 <none> <none>

pod/myweb77-dd9477d68-dd4qg 1/1 Running 0 2m58s 10.111.156.121 k8s-node1 <none> <none>

pod/myweb77-dd9477d68-n2xws 1/1 Running 0 2m58s 10.111.156.123 k8s-node1 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/myweb-svc ClusterIP 10.109.210.137 <none> 8765/TCP 2s app=myweb77

## 접속해서 확인

kevin@k8s-master:~/LABs/deploy$ curl 10.109.210.137:8765

## Write index.html

kevin@k8s-master:~/LABs/deploy$ vi index.html

<h1> pod1 </h1>

## Copy it into one pod

kevin@k8s-master:~/LABs/deploy$ kubectl cp index.html myweb77-dd9477d68-22cql:/usr/share/nginx/html/index.html

## Because of load balancing, a request may reach a pod that still serves the default index.html

kevin@k8s-master:~/LABs/deploy$ curl 10.109.210.137:8765

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

## Because of load balancing, another request returns the modified index.html

kevin@k8s-master:~/LABs/deploy$ curl 10.109.210.137:8765

<h1> pod1 </h1>
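Two manual requests are a small sample for seeing how the Service spreads traffic over the three pods. The following is a minimal sketch, assuming the ClusterIP and port from the listing above; `sample_service` and `tally` are hypothetical helper names, not kubectl features.

```shell
# Hypothetical helpers; the default address is the ClusterIP:port shown above.
SVC_URL="${SVC_URL:-10.109.210.137:8765}"

# Send N requests and print only the first line of each response body.
sample_service() {
  url=$1
  n=$2
  i=0
  while [ "$i" -lt "$n" ]; do
    curl -s --max-time 2 "$url" | head -n 1
    i=$((i + 1))
  done
}

# Count how often each distinct line appears, most frequent first.
tally() {
  sort | uniq -c | sort -rn
}

# Usage (needs access to the cluster network):
#   sample_service "$SVC_URL" 12 | tally
```

With only one of the three pods patched, the `<h1> pod1 </h1>` line should show up in only part of the samples. The split is not expected to be exact, since backend selection is not guaranteed to be strict round robin.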

- Create a new service named front-end-svc exposing the container port http.

Configure the new service to also expose the individual Pods via a NodePort on the nodes on which they are scheduled.

Reconfigure the existing deployment front-end and add a port specification named http exposing port 80/tcp of the existing container nginx.

## Create the deployment

kevin@k8s-master:~/LABs/deploy$ kubectl create deployment front-end --image=nginx --port=80

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy,po

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/front-end 1/1 1 1 24s

NAME READY STATUS RESTARTS AGE

pod/front-end-79cb78fb4d-wdtfl 1/1 Running 0 24s

## Create the service

kevin@k8s-master:~/LABs/deploy$ kubectl expose deployment front-end --name=front-end-svc --type=NodePort --port=80 --target-port=80 --dry-run=client -o yaml > front-end-svc.yaml

## Check the YAML file

kevin@k8s-master:~/LABs/deploy$ vi front-end-svc.yaml

## apply

kevin@k8s-master:~/LABs/deploy$ kubectl apply -f front-end-svc.yaml

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get po,svc -o wide | grep front-end

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/front-end-79cb78fb4d-wdtfl 1/1 Running 0 25m 10.109.131.56 k8s-node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/front-end-svc NodePort 10.110.161.141 <none> 80:30734/TCP 37s app=front-end

## Check the endpoint

kevin@k8s-master:~/LABs/deploy$ kubectl describe service front-end-svc

Endpoints: 10.109.131.56:80
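The PORT(S) value `80:30734/TCP` above reads as service port, then node port, then protocol. A minimal sketch of pulling the node port out of that string follows; `node_port_from_ports` is a hypothetical helper name.

```shell
# Extract the node port from a PORT(S) value such as "80:30734/TCP".
node_port_from_ports() {
  ports=$1
  after_colon=${ports#*:}             # drop "80:"  -> "30734/TCP"
  printf '%s\n' "${after_colon%%/*}"  # drop "/TCP" -> "30734"
}

# Usage:
#   node_port_from_ports '80:30734/TCP'
# On a live cluster the same value can be read directly with:
#   kubectl get svc front-end-svc -o jsonpath='{.spec.ports[0].nodePort}'
```

The service should then be reachable on that port at any node's IP address, provided the node network allows it.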

- Create a deployment as follows

Name: nginx-app

Using container nginx with version 1.11.10-alpine

The deployment should contain 3 replicas

Next, deploy the application with new version 1.11.13-alpine by performing a rolling update. Finally, roll back that update to the previous version 1.11.10-alpine.

## Create the deployment

kevin@k8s-master:~/LABs/deploy$ kubectl create deployment nginx-app --image=nginx:1.11.10-alpine --replicas=3

## Change the version

kevin@k8s-master:~/LABs/deploy$ kubectl set image deploy nginx-app nginx=nginx:1.11.13-alpine

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy nginx-app -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

nginx:1.11.13-alpine

## Check the history

kevin@k8s-master:~/LABs/deploy$ kubectl rollout history deployment nginx-app

deployment.apps/nginx-app

REVISION CHANGE-CAUSE

1 <none>

2 <none>
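The revision to roll back to can be read from the history listing instead of being typed by hand. This is a minimal sketch that parses the text format shown above; `previous_revision` is a hypothetical helper name.

```shell
# Read `kubectl rollout history` output on stdin and print the
# second-to-last revision number (nothing if there is only one revision).
previous_revision() {
  awk '$1 ~ /^[0-9]+$/ { prev = last; last = $1 }
       END { if (prev != "") print prev }'
}

# Usage (needs a cluster):
#   rev=$(kubectl rollout history deployment nginx-app | previous_revision)
#   kubectl rollout undo deployment nginx-app --to-revision="$rev"
```

Running `kubectl rollout undo` without `--to-revision` also returns to the previous revision, so the explicit number mainly helps when skipping back more than one step.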

## Roll back to the previous revision

kevin@k8s-master:~/LABs/deploy$ kubectl rollout undo deployment nginx-app --to-revision=1

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy nginx-app -o=jsonpath='{.spec.template.spec.containers[0].image}{"\n"}'

nginx:1.11.10-alpine

- Create a deployment spec file that will

Launch 7 replicas of the nginx Image with the label app_runtime_stage=dev

deployment name: kual00201

Save a copy of this spec file to /opt/KUNW00601/spec_deployment.yaml

When you are done, clean up(delete) any new kubernetes API object that you produced during this task.

## Generate the deployment spec

kevin@k8s-master:~/LABs/deploy$ kubectl create deployment kual00201 --image=nginx --replicas=7 --dry-run=client -o yaml > spec_deployment.yaml

## Change the label

kevin@k8s-master:~/LABs/deploy$ vi spec_deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app_runtime: dev
  name: kual00201
spec:
  replicas: 7
  selector:
    matchLabels:
      app_runtime: dev
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app_runtime: dev
    spec:
      containers:
      - image: nginx
        name: nginx
        resources: {}
status: {}

## apply

kevin@k8s-master:~/LABs/deploy$ kubectl apply -f spec_deployment.yaml

## Verify

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy,po -o wide | grep -i kual

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/kual00201 7/7 7 7 32s nginx nginx app_runtime=dev

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/kual00201-6d479bc984-8qlgj 1/1 Running 0 32s 10.109.131.63 k8s-node2 <none> <none>

pod/kual00201-6d479bc984-dbbf8 1/1 Running 0 32s 10.111.156.108 k8s-node1 <none> <none>

pod/kual00201-6d479bc984-mlflv 1/1 Running 0 32s 10.109.131.62 k8s-node2 <none> <none>

pod/kual00201-6d479bc984-q6v4v 1/1 Running 0 32s 10.109.131.46 k8s-node2 <none> <none>

pod/kual00201-6d479bc984-vd56n 1/1 Running 0 32s 10.111.156.118 k8s-node1 <none> <none>

pod/kual00201-6d479bc984-xzf9k 1/1 Running 0 32s 10.111.156.109 k8s-node1 <none> <none>

pod/kual00201-6d479bc984-z7kp5 1/1 Running 0 32s 10.109.131.55 k8s-node2 <none> <none>

## Delete

kevin@k8s-master:~/LABs/deploy$ kubectl delete -f spec_deployment.yaml

- Create a deployment as follows

Name: nginx-random

Exposed via a service nginx-random

Ensure that the service & pod are accessible via their respective DNS records

The container(s) within any pod(s) running as a part of this deployment should use the image nginx.

Next, use the utility nslookup (using busybox) to look up the DNS records of the service & pod and write the output to service.dns and pod.dns respectively.

## Create the deployment

kevin@k8s-master:~/LABs/deploy$ kubectl create deployment nginx-random --image=nginx --dry-run=client -o yaml > nginx-random-deploy.yaml

## apply

kevin@k8s-master:~/LABs/deploy$ kubectl apply -f nginx-random-deploy.yaml

## Create the service

kevin@k8s-master:~/LABs/deploy$ kubectl expose deployment nginx-random --name=nginx-random-svc --port=8090 --target-port=80 --dry-run=client -o yaml > nginx-random-svc.yaml

## apply

kevin@k8s-master:~/LABs/deploy$ kubectl apply -f nginx-random-svc.yaml

## Verify creation

kevin@k8s-master:~/LABs/deploy$ kubectl get deploy,po,svc -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/nginx-random 1/1 1 1 43s nginx nginx app=nginx-random

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-random-8688874dbd-rmrj9 1/1 Running 0 43s 10.109.131.58 k8s-node2 <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/nginx-random-svc ClusterIP 10.107.238.197 <none> 8090/TCP 14s app=nginx-random

## Save the nslookup results to files

kevin@k8s-master:~/LABs/deploy$ kubectl run dnspod-verify --image=busybox --restart=Never --rm -it -- nslookup 10.107.238.197 > service.dns

kevin@k8s-master:~/LABs/deploy$ kubectl run dnspod-verify --image=busybox --restart=Never --rm -it -- nslookup 10.109.131.58 > pod.dns
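The two commands above look the records up by IP address, which is a reverse lookup. The forward names can be built from the service name, namespace, and pod IP. This is a minimal sketch, assuming the default cluster domain `cluster.local` and the standard `<dashed-ip>.<namespace>.pod.<domain>` pod record form; the function names are hypothetical.

```shell
# Assumed default; override if the cluster uses another DNS domain.
CLUSTER_DOMAIN="${CLUSTER_DOMAIN:-cluster.local}"

# svc_fqdn <service> <namespace>
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "$CLUSTER_DOMAIN"
}

# dashed_ip <ip>   e.g. 10.109.131.58 -> 10-109-131-58
dashed_ip() {
  printf '%s\n' "$1" | tr '.' '-'
}

# pod_fqdn <pod-ip> <namespace>
pod_fqdn() {
  printf '%s.%s.pod.%s\n' "$(dashed_ip "$1")" "$2" "$CLUSTER_DOMAIN"
}

# Usage (needs a cluster):
#   kubectl run dnspod-verify --image=busybox --restart=Never --rm -it -- \
#     nslookup "$(svc_fqdn nginx-random-svc default)"
```

Whether the pod record resolves depends on the cluster's DNS configuration, so treat the pod name as something to check rather than a guarantee.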

## Check service.dns

kevin@k8s-master:~/LABs/deploy$ cat service.dns

Server: 10.96.0.10

Address: 10.96.0.10:53

197.238.107.10.in-addr.arpa name = nginx-random-svc.default.svc.cluster.local

pod "dnspod-verify" deleted

## Check pod.dns

kevin@k8s-master:~/LABs/deploy$ cat pod.dns

Server: 10.96.0.10

Address: 10.96.0.10:53

58.131.109.10.in-addr.arpa name = 10-109-131-58.nginx-random-svc.default.svc.cluster.local

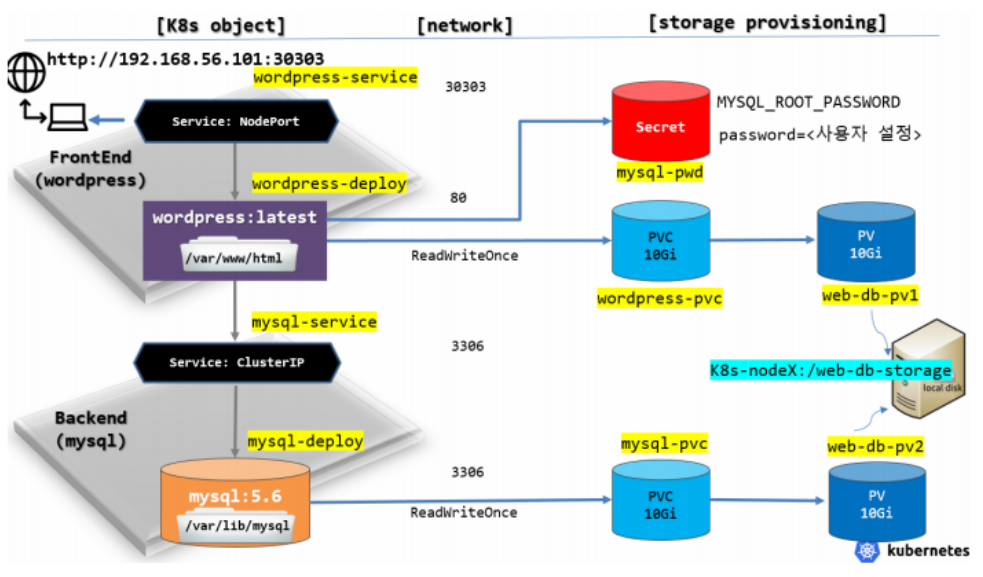

pod "dnspod-verify" deleted- VM -> microservice

Web(wordpress) + DB(mysql) + Volume(PV,PVC)

## Create and enter the working directory

kevin@k8s-master:~/LABs$ mkdir web-db && cd $_

## Create PVs: two, one each for mysql and wordpress

## ReadWriteOnce/10Gi

## Write the pv1 YAML file

kevin@k8s-master:~/LABs/web-db$ vi web-db-pv1.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: web-db-pv1
  labels:
    type: local
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/web-db-storage/pv01"

## Write the pv2 YAML file

kevin@k8s-master:~/LABs/web-db$ vi web-db-pv2.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: web-db-pv2
  labels:
    type: local
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/web-db-storage/pv02"

## apply

kevin@k8s-master:~/LABs/web-db$ kubectl apply -f web-db-pv1.yaml

kevin@k8s-master:~/LABs/web-db$ kubectl apply -f web-db-pv2.yaml

## Verify creation

kevin@k8s-master:~/LABs/web-db$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

web-db-pv1 10Gi RWO Retain Available 1s

web-db-pv2 10Gi RWO Retain Available 112s

## Create PVCs that will use the PVs created above

## A PV is bound automatically by comparing capacity and access mode

## Write the wordpress PVC YAML file

kevin@k8s-master:~/LABs/web-db$ vi wordpress-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wordpress-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi

kevin@k8s-master:~/LABs/web-db$ vi mysql-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pvc
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi

## apply

kevin@k8s-master:~/LABs/web-db$ kubectl apply -f wordpress-pvc.yaml

kevin@k8s-master:~/LABs/web-db$ kubectl apply -f mysql-pvc.yaml

## Verify

kevin@k8s-master:~/LABs/web-db$ kubectl get pv,pvc

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/web-db-pv1 10Gi RWO Retain Bound default/wordpress-pvc 4m34s

persistentvolume/web-db-pv2 10Gi RWO Retain Bound default/mysql-pvc 6m25s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/mysql-pvc Bound web-db-pv2 10Gi RWO 15s

persistentvolumeclaim/wordpress-pvc Bound web-db-pv1 10Gi RWO 21s
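Both claims request 5Gi yet report a capacity of 10Gi, because a claim is bound to a whole volume that is at least as large as the request. The check can be pictured with the following deliberately simplified model; `can_bind` is a hypothetical function, and the real controller also considers storage class, selectors, and volume mode.

```shell
# can_bind <pv_capacity_gi> <pv_access_mode> <pvc_request_gi> <pvc_access_mode>
# Succeeds when the PV is large enough and offers the requested access mode.
# Simplification: sizes are whole Gi and each side has a single access mode.
can_bind() {
  [ "$1" -ge "$3" ] && [ "$2" = "$4" ]
}

# Usage:
#   can_bind 10 ReadWriteOnce 5 ReadWriteOnce && echo "bindable"
#   can_bind 10 ReadWriteOnce 20 ReadWriteOnce || echo "stays Pending"
```

Under this model a 20Gi request would stay unbound against the two 10Gi volumes defined above.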

## Create a secret object to store the mysql password

kevin@k8s-master:~/LABs/web-db$ kubectl create secret generic mysql-pwd --from-literal=password=Passw0rD

## Verify

kevin@k8s-master:~/LABs/web-db$ kubectl describe secret mysql-pwd

Type: Opaque

Data

====

password: 8 bytes
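`describe` reports only the size of the value. In the stored object the value is base64-encoded, which is an encoding and not encryption. A minimal sketch of the round trip follows; the two function names are hypothetical.

```shell
# Encode a literal the way it appears under .data in a Secret manifest.
encode_secret_value() {
  printf '%s' "$1" | base64
}

# Decode a base64 string taken from .data back to the original value.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}

# Usage:
#   enc=$(encode_secret_value 'Passw0rD')
#   decode_secret_value "$enc"
# On a live cluster the stored value can be read back with:
#   kubectl get secret mysql-pwd -o jsonpath='{.data.password}' | base64 --decode
```

Anyone allowed to read the Secret can recover the password this way, so access to Secrets should be restricted accordingly.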

## Create a Deployment that deploys the mysql pod

kevin@k8s-master:~/LABs/web-db$ vi mysql-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
  labels:
    app: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - image: mysql:5.6
        name: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-pwd
              key: password
        - name: MYSQL_DATABASE
          value: kube-db
        - name: MYSQL_USER
          value: kube-user
        - name: MYSQL_ROOT_HOST
          value: '%'
        - name: MYSQL_PASSWORD
          value: Pass0rD
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: mysql-pvc

## apply

kevin@k8s-master:~/LABs/web-db$ kubectl apply -f mysql-deploy.yaml

## Verify

kevin@k8s-master:~/LABs/web-db$ kubectl get deploy,pods -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/mysql 1/1 1 1 27s mysql mysql:5.6 app=mysql

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/mysql-558db45f48-gj9ft 1/1 Running 0 27s 10.109.131.36 k8s-node2 <none> <none>

## Create the mysql service

kevin@k8s-master:~/LABs/web-db$ vi mysql-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: mysql
  labels:
    app: mysql
spec:
  type: ClusterIP
  ports:
  - port: 3306
  selector:
    app: mysql

## apply

kevin@k8s-master:~/LABs/web-db$ kubectl apply -f mysql-service.yaml

## Verify

kevin@k8s-master:~/LABs/web-db$ kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 5d2h <none>

mysql ClusterIP 10.98.31.151 <none> 3306/TCP 14s app=mysql

## Write the Deployment YAML file that deploys wordpress

kevin@k8s-master:~/LABs/web-db$ vi wordpress-deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress
  template:
    metadata:
      labels:
        app: wordpress
    spec:
      containers:
      - image: wordpress
        name: wordpress
        env:
        - name: WORDPRESS_DB_HOST
          value: mysql:3306
        - name: WORDPRESS_DB_NAME
          value: kube-db
        - name: WORDPRESS_DB_USER
          value: kube-user
        - name: WORDPRESS_DB_PASSWORD
          # must match MYSQL_PASSWORD for kube-user in mysql-deploy.yaml
          value: Pass0rD
        ports:
        - containerPort: 80
          name: wordpress
        volumeMounts:
        - name: wordpress-persistent-storage
          mountPath: /var/www/html
      volumes:
      - name: wordpress-persistent-storage
        persistentVolumeClaim:
          claimName: wordpress-pvc

## apply

kevin@k8s-master:~/LABs/web-db$ kubectl apply -f wordpress-deploy.yaml

## Verify

kevin@k8s-master:~/LABs/web-db$ kubectl get deploy,pods -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/mysql 1/1 1 1 7m3s mysql mysql:5.6 app=mysql

deployment.apps/wordpress 1/1 1 1 29s wordpress wordpress app=wordpress

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/mysql-558db45f48-gj9ft 1/1 Running 0 7m3s 10.109.131.36 k8s-node2 <none> <none>

pod/wordpress-57876c655d-rfntv 1/1 Running 0 29s 10.111.156.70 k8s-node1 <none> <none>

## Write the wordpress service YAML file

kevin@k8s-master:~/LABs/web-db$ vi wordpress-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  selector:
    app: wordpress

## apply

kevin@k8s-master:~/LABs/web-db$ kubectl apply -f wordpress-service.yaml

## Check the services

kevin@k8s-master:~/LABs/web-db$ kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

mysql ClusterIP 10.98.31.151 <none> 3306/TCP 6m38s app=mysql

wordpress NodePort 10.111.251.169 <none> 80:31040/TCP 20s app=wordpress

## Check the pods

kevin@k8s-master:~/LABs/web-db$ kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mysql-558db45f48-gj9ft 1/1 Running 0 10m 10.109.131.36 k8s-node2 <none> <none>

wordpress-57876c655d-rfntv 1/1 Running 0 3m40s 10.111.156.70 k8s-node1 <none> <none>

## Check a single pod (wordpress)

kevin@k8s-master:~/LABs/web-db$ kubectl get pod/wordpress-57876c655d-rfntv -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

wordpress-57876c655d-rfntv 1/1 Running 0 4m28s 10.111.156.70 k8s-node1 <none> <none>

## describe

kevin@k8s-master:~/LABs/web-db$ kubectl describe pod/wordpress-57876c655d-rfntv

Name: wordpress-57876c655d-rfntv

Namespace: default

Priority: 0

Node: k8s-node1/192.168.56.101

...

## Check everything

kevin@k8s-master:~/LABs/web-db$ kubectl get po,svc,deploy

NAME READY STATUS RESTARTS AGE

pod/mysql-558db45f48-gj9ft 1/1 Running 0 12m

pod/wordpress-57876c655d-rfntv 1/1 Running 0 5m36s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/mysql ClusterIP 10.98.31.151 <none> 3306/TCP 9m3s

service/wordpress NodePort 10.111.251.169 <none> 80:31040/TCP 2m45s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/mysql 1/1 1 1 12m

deployment.apps/wordpress 1/1 1 1 5m36s

## Enter the mysql pod

kevin@k8s-master:~/LABs/web-db$ kubectl exec -it mysql-558db45f48-gj9ft -- bash

## Check the environment variables

root@mysql-558db45f48-gj9ft:/# env | grep MYSQL

MYSQL_PASSWORD=Pass0rD

MYSQL_DATABASE=kube-db

MYSQL_ROOT_PASSWORD=Passw0rD

MYSQL_MAJOR=5.6

MYSQL_USER=kube-user

MYSQL_VERSION=5.6.51-1debian9

MYSQL_ROOT_HOST=%

root@mysql-558db45f48-gj9ft:/# mysql -uroot -p

mysql> show databases;

+--------------------+
| Database           |
+--------------------+
| information_schema |
| kube-db            |
| mysql              |
| performance_schema |
+--------------------+

mysql> use kube-db;

Database changed

mysql> show tables;

Empty set

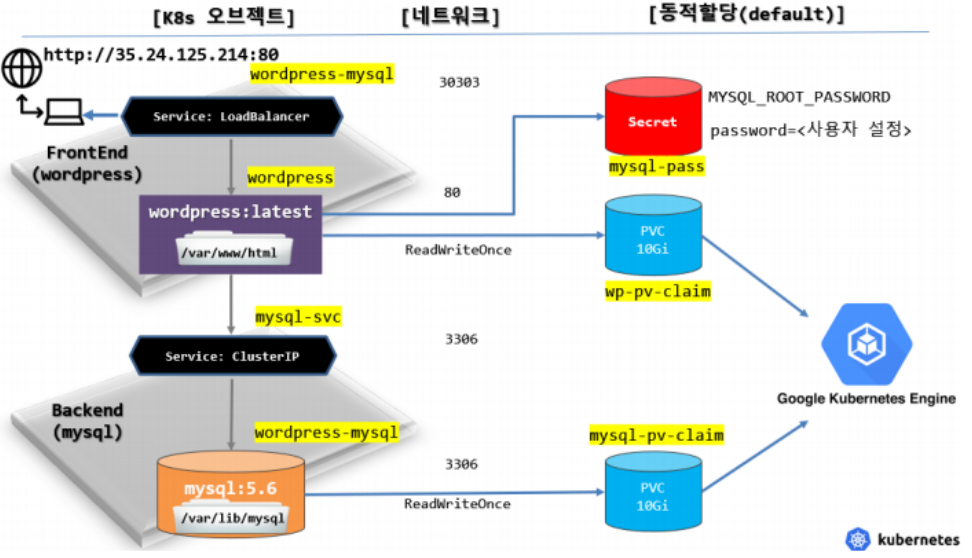

- GKE on GCE -> microservice

## Write the mysql service YAML file

apiVersion: v1
kind: Service
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  ports:
  - port: 3306
  selector:
    app: wordpress
    tier: mysql
  clusterIP: None

## Write the mysql PVC YAML file

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-pv-claim
  labels:
    app: wordpress
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi

## Write the mysql deployment YAML file

apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress-mysql
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: mysql
    spec:
      containers:
      - image: mysql:5.6
        name: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-pass
              key: password
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-persistent-storage
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-persistent-storage
        persistentVolumeClaim:
          claimName: mysql-pv-claim

## Configure a LoadBalancer for external access

apiVersion: v1
kind: Service
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  ports:
  - port: 80
  selector:
    app: wordpress
    tier: frontend
  type: LoadBalancer

## Create a secret to store the mysql administrator password

C:\k8s>kubectl create secret generic mysql-pass --from-literal=password=Passw0rD

## Verify

C:\k8s>kubectl describe secret mysql-pass

...

Type: Opaque

Data

====

password: 8 bytes

## Configure a PVC for wordpress

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: wp-pv-claim
  labels:
    app: wordpress
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi

## Configure the wordpress deployment

apiVersion: apps/v1
kind: Deployment
metadata:
  name: wordpress
  labels:
    app: wordpress
spec:
  selector:
    matchLabels:
      app: wordpress
      tier: frontend
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: wordpress
        tier: frontend
    spec:
      containers:
      - image: wordpress:4.8-apache
        name: wordpress
        env:
        - name: WORDPRESS_DB_HOST
          value: wordpress-mysql
        - name: WORDPRESS_DB_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-pass
              key: password
        ports:
        - containerPort: 80
          name: wordpress
        volumeMounts:
        - name: wordpress-persistent-storage
          mountPath: /var/www/html
      volumes:
      - name: wordpress-persistent-storage
        persistentVolumeClaim:
          claimName: wp-pv-claim

## apply

C:\k8s>kubectl apply -f wordpress-service.yaml

C:\k8s>kubectl apply -f mysql-pvc.yaml

C:\k8s>kubectl apply -f wordpress-deploy.yaml

C:\k8s>kubectl apply -f wordpress-lb.yaml

C:\k8s>kubectl apply -f wp-pvc.yaml

## Query the resources

C:\k8s>kubectl get deploy,po,svc -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/wordpress 0/1 1 0 11s wordpress wordpress:4.8-apache app=wordpress,tier=frontend

deployment.apps/wordpress-mysql 1/1 1 1 5m2s mysql mysql:5.6 app=wordpress,tier=mysql

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/wordpress-5994d99f46-lgjrc 0/1 ContainerCreating 0 11s <none> gke-k8s-cluster-k8s-nodepool-bc541242-jgo3 <none> <none>

pod/wordpress-mysql-6c479567b-rn46w 1/1 Running 0 5m2s 10.12.3.12 gke-k8s-cluster-k8s-nodepool-bc541242-o1ji <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.16.0.1 <none> 443/TCP 7d23h <none>

service/wordpress LoadBalancer 10.16.0.68 34.64.160.112 80:30704/TCP 4m11s app=wordpress,tier=frontend

service/wordpress-mysql ClusterIP None <none> 3306/TCP 7m12s app=wordpress,tier=mysql
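In the listing above the LoadBalancer service already has an external IP while the wordpress pod is still in ContainerCreating, so the address may not answer immediately. A minimal sketch of extracting the address from the listing text follows; `external_ip_of` is a hypothetical helper that assumes the column order shown above.

```shell
# external_ip_of <resource-name>
# Reads `kubectl get svc -o wide` style output on stdin and prints the
# EXTERNAL-IP column (4th field) of the row whose NAME matches exactly.
external_ip_of() {
  awk -v name="$1" '$1 == name { print $4 }'
}

# Usage (needs a cluster):
#   kubectl get deploy,po,svc -o wide | external_ip_of service/wordpress
# The same field can be read without text parsing:
#   kubectl get svc wordpress -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
```

The jsonpath form is less fragile than parsing columns and is usually the better choice in scripts.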