Kubernetes for Microservices

Containers provide the best-suited environment for running microservices.

Container orchestration tool -> Kubernetes

Kubernetes

- open source

- automates deployment

- deploys and scales containerized applications

Understanding Containers and Building a Kubernetes Cluster

Why Kubernetes?

: A portable (it can be moved and used anywhere), extensible open-source platform for managing containerized workloads (Pods) and services.

Containers are placed inside a Pod. A Pod can hold one or more containers.
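
As a rough illustration of a Pod holding more than one container, the sketch below writes a two-container Pod manifest to a temporary file and counts the containers it declares. The Pod name `web-with-sidecar`, the container names, and the `nginx`/`busybox` images are assumptions chosen for this example and do not come from these notes.

```shell
# Hypothetical manifest: all names and images here are illustrative only.
manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar
spec:
  containers:
  - name: web
    image: nginx:1.23
  - name: sidecar
    image: busybox:1.36
    command: ["sh", "-c", "sleep 3600"]
EOF

# Count the container entries declared under spec.containers.
container_count="$(grep -c '^  - name:' "$manifest")"
echo "containers in pod: $container_count"
```

On a running cluster, a file like this could then be submitted with `kubectl apply -f "$manifest"`.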

The project is owned by the CNCF (Cloud Native Computing Foundation).

Cloud-Native - DevOps, Continuous Delivery, Microservices, Containers

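Kubernetes makes declarative configuration (YAML) and automation easy.

Traditional deployment era -> virtualized deployment era -> container deployment era

Containers are a good way to package and run applications.

In production, you have to manage the containers that run the applications and make sure there is no downtime.

Kubernetes provides a framework for running distributed systems (clusters) resiliently. It takes care of scaling and failover for applications and provides deployment patterns.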

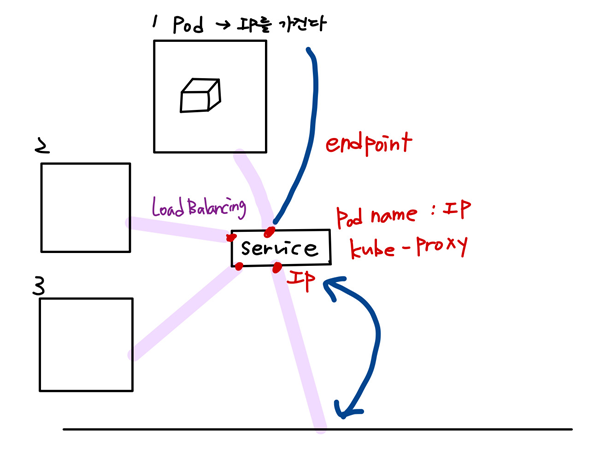

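Pods are created on worker nodes. Each Pod has its own IP address.

An infrastructure framework without limits!

: Kubernetes gives containers its core capabilities without restriction, which removes infrastructure lock-in. It achieves this by combining features of the Kubernetes platform, including Pods and Services.

Better management through modularity

: A Kubernetes Service groups a set of Pods that perform a similar function, which makes it easy to configure discovery, observability, horizontal scaling, and load balancing.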
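
The grouping works through labels: a Service lists a selector, and every Pod carrying that label becomes one of its backends. The sketch below writes a minimal Service manifest to a temporary file and prints the selector it uses. The Service name `web`, the label `app: web`, and both port numbers are assumptions made for this example.

```shell
# Hypothetical manifest: the Service name, label, and ports are illustrative only.
service_manifest="$(mktemp)"
cat > "$service_manifest" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: web
spec:
  selector:
    app: web          # every Pod labeled app=web becomes a backend
  ports:
  - port: 80          # port exposed by the Service
    targetPort: 8080  # port the selected Pods listen on
EOF

# Print the label (key and value) found on the line right after "selector:".
selector_label="$(awk '/selector:/ { getline; print $1 $2 }' "$service_manifest")"
echo "service selects pods with label $selector_label"
```

Scaling the group then only means adding or removing Pods with the same label; the Service definition itself does not change.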

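Large-scale software deployment and updates!

: Kubernetes supports a variety of workloads, including stateless, stateful, and data-processing workloads.

Laying the foundation for cloud-native apps!

: With Kubernetes you can get the full utility out of containers and run anywhere, regardless of cloud-specific requirements. It is well suited to developing cloud-native applications that do not need capacity estimation (serverless computing).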

=> Goal

: Desired State Management

Reliably keeping the system running in the state the user expects, according to the user-defined configuration (*.yaml).
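
Desired state management can be pictured as a loop that compares what was declared with what is observed and then acts on the difference. The function below is only a toy sketch of that comparison for a replica count; `reconcile` and its output strings are hypothetical and do not reflect how the Kubernetes controllers are actually implemented.

```shell
# reconcile DESIRED ACTUAL
# Prints the action a controller would take to move ACTUAL toward DESIRED.
reconcile() {
  desired="$1"
  actual="$2"
  if [ "$actual" -lt "$desired" ]; then
    echo "create $((desired - actual))"   # too few replicas: start more
  elif [ "$actual" -gt "$desired" ]; then
    echo "delete $((actual - desired))"   # too many replicas: remove some
  else
    echo "noop"                           # already in the desired state
  fi
}

reconcile 3 1
reconcile 3 5
reconcile 3 3
```

Run in order, the three calls print `create 2`, `delete 2`, and `noop`.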

Starting with Kubernetes 1.24.*, dockershim was removed from the kubelet, so Docker Engine can no longer be used directly as the container runtime.

Docker and Kubernetes are both PaaS!!

Installation (environment setup)

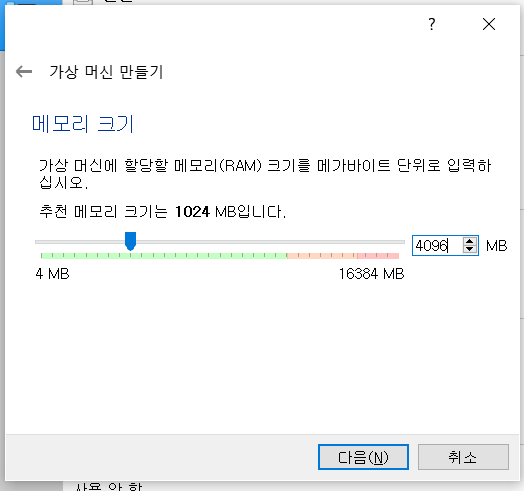

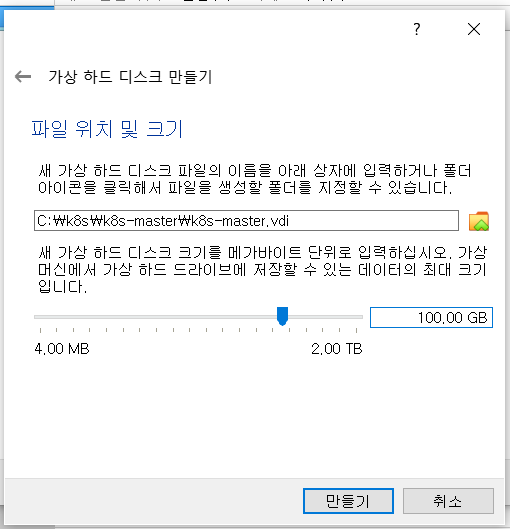

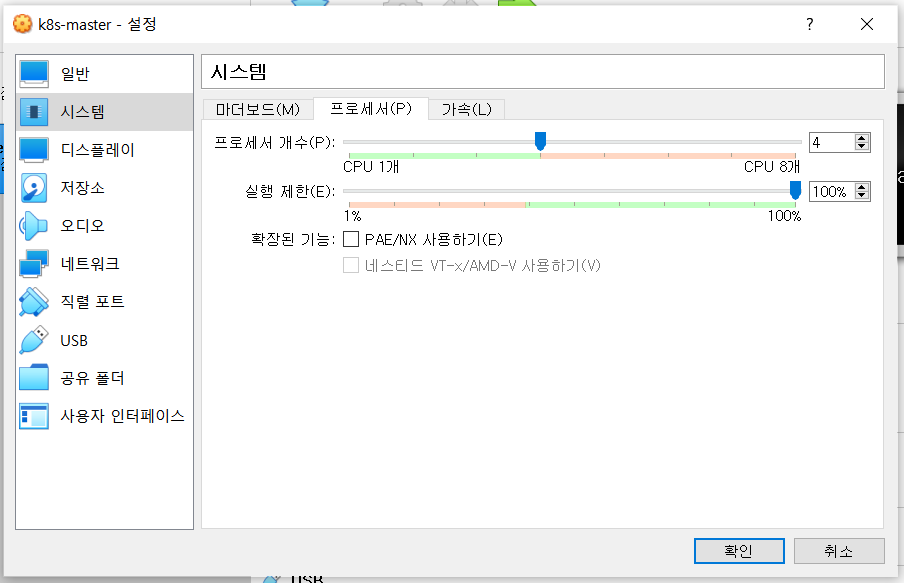

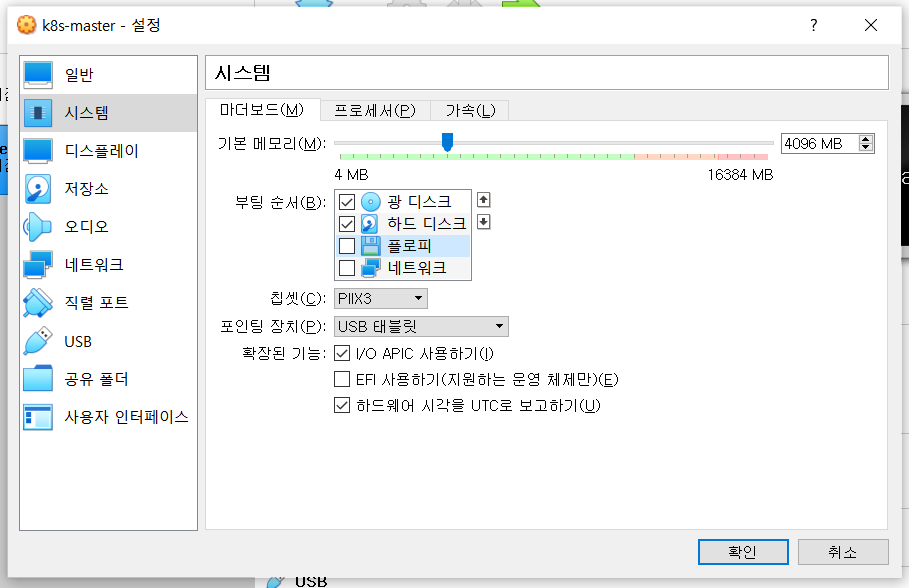

Kubernetes minimum requirements

: 2 CPUs, 4 GB memory

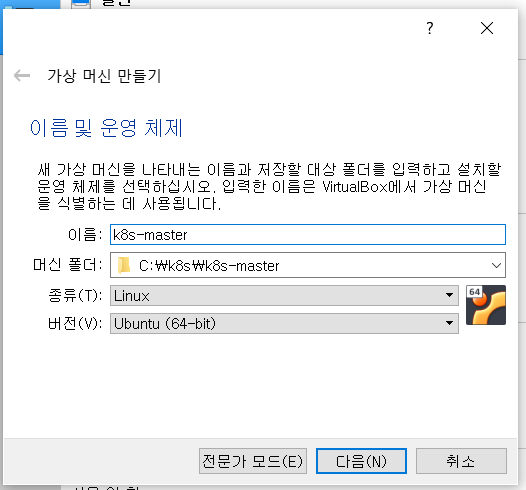

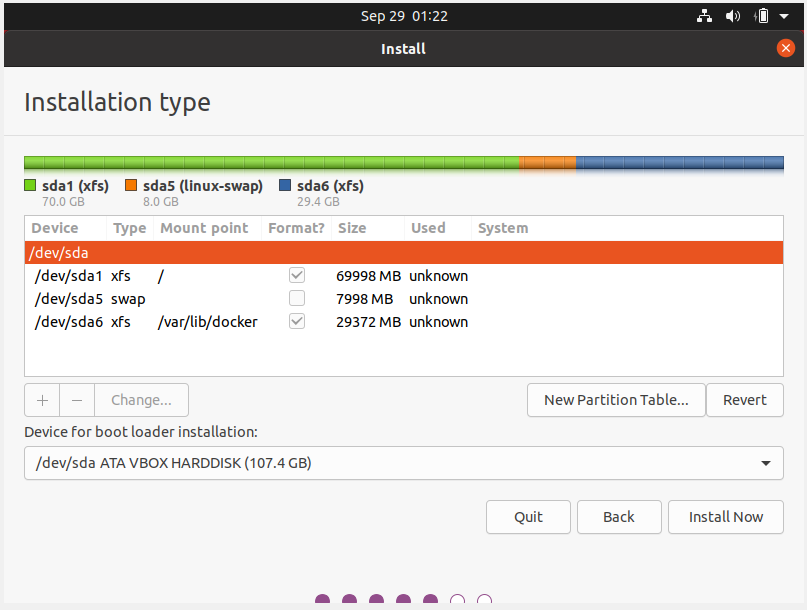

Installing Ubuntu

The Ubuntu image used is 20.04.5 LTS.

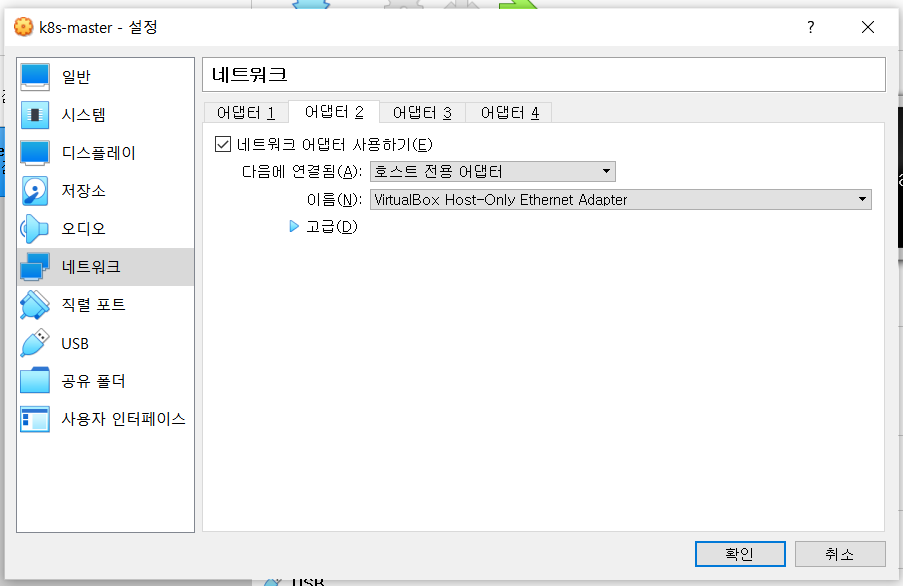

Adapter 1 is NAT. Adapter 2 is the host-only adapter!!

OS environment setup

- Disable security features

: firewalld (firewall-cmd), SELinux

## Install firewalld

kevin@k8s-master:~$ sudo apt -y install firewalld

## Disable it

kevin@k8s-master:~$ sudo systemctl daemon-reload

kevin@k8s-master:~$ sudo systemctl disable firewalld.service

## Stop it

kevin@k8s-master:~$ sudo systemctl stop firewalld.service

## Verify

kevin@k8s-master:~$ sudo firewall-cmd --reload

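FirewallD is not running

- Disable swap

Kubernetes does not use swap. A container is itself just a process (grouped into a Pod), and swapping can degrade its performance, so swap is turned off.

## -a turns off all swap for the currently booted system.

kevin@k8s-master:~$ sudo swapoff -a

## Comment out the swap line so that swap stays off after a reboot.

kevin@k8s-master:~$ sudo vi /etc/fstab

#UUID=841db93e-653c-45e5-b9e9-f851864f59db none swap sw 0 0

- Time synchronization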

Prometheus does not work well when the clocks are out of sync.

kevin@k8s-master:~$ sudo apt -y install ntp

kevin@k8s-master:~$ sudo systemctl daemon-reload

kevin@k8s-master:~$ sudo systemctl enable ntp

kevin@k8s-master:~$ sudo systemctl restart ntp

## Check the status

kevin@k8s-master:~$ sudo systemctl status ntp

● ntp.service - Network Time Service

Loaded: loaded (/lib/systemd/system/ntp.service; enabled; vendor preset: enabled)

Active: active (running)

kevin@k8s-master:~$ sudo ntpq -p

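- Enable network (IP) forwarding

[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1

The steps that follow resolve this kubeadm preflight error. Writing to /proc only lasts until the next reboot; adding net.ipv4.ip_forward = 1 to /etc/sysctl.conf makes the setting persistent.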

kevin@k8s-master:~$ sudo su -

root@k8s-master:~# cat /proc/sys/net/ipv4/ip_forward

0

root@k8s-master:~# echo '1' > /proc/sys/net/ipv4/ip_forward

root@k8s-master:~# cat /proc/sys/net/ipv4/ip_forward

1

Docker install

Installing Docker also installs containerd automatically. This containerd is what gets connected to Kubernetes.

-> This is the setup used from version 1.24.x onward.

kevin@k8s-master:~# sudo apt -y install apt-transport-https ca-certificates curl software-properties-common gnupg2

kevin@k8s-master:~# curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key --keyring /etc/apt/trusted.gpg.d/docker.gpg add -

OK

kevin@k8s-master:~# sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

kevin@k8s-master:~# tail /etc/apt/sources.list

...

deb [arch=amd64] https://download.docker.com/linux/ubuntu focal stable

# deb-src [arch=amd64] https://download.docker.com/linux/ubuntu focal stable

kevin@k8s-master:~# sudo apt -y update

kevin@k8s-master:~# apt-cache policy docker-ce

docker-ce:

Installed: (none)

Candidate: 5:20.10.18~3-0~ubuntu-focal

kevin@k8s-master:~# sudo apt-get -y install docker-ce

kevin@k8s-master:~$ sudo docker version

cgroupfs

Resource allocation and limits -> constrained with the requests & limits options. Kubernetes should use the systemd cgroup driver instead of cgroupfs, because systemd is the init process that already manages the host's cgroups. If the driver is not changed, the installation does not succeed.

kevin@k8s-master:~$ sudo docker info | grep -i cgroup

Cgroup Driver: cgroupfs

kevin@k8s-master:~$ sudo vi /etc/docker/daemon.json

{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}

## Apply the settings

kevin@k8s-master:~$ sudo mkdir -p /etc/systemd/system/docker.service.d

kevin@k8s-master:~$ sudo systemctl daemon-reload

kevin@k8s-master:~$ sudo systemctl enable docker

kevin@k8s-master:~$ sudo systemctl restart docker

kevin@k8s-master:~$ sudo systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running)

## Check that it changed

kevin@k8s-master:~$ sudo docker info | grep -i cgroup

Cgroup Driver: systemd
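
A check of this setting can also be scripted, for example before cloning the VM. The helper below is a sketch that reads `docker info` output on standard input and prints the cgroup driver; the function name `cgroup_driver` and the sample text fed to it are assumptions made for this example.

```shell
# cgroup_driver: reads `docker info` output on stdin, prints the driver name.
cgroup_driver() {
  # Split each line on ": " and print the value of the first "Cgroup Driver" line.
  awk -F': *' '/Cgroup Driver/ { print $2; exit }'
}

# Sample input standing in for real `docker info` output.
printf ' Cgroup Driver: systemd\n Cgroup Version: 1\n' | cgroup_driver
```

On a real host the call would be `sudo docker info | cgroup_driver`, and anything other than `systemd` means daemon.json still needs the change.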

Kubernetes tools

- kubeadm

: bootstrap, init (initialization), worker node join, upgrading the engine itself

- kubectl

: Kubernetes CLI

- kubelet

: a process (daemon) that must always be running

## Fetch the key

kevin@k8s-master:~$ curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

[sudo] password for kevin:

OK

kevin@k8s-master:~$ cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list

> deb https://apt.kubernetes.io/ kubernetes-xenial main

> EOF

kevin@k8s-master:~$ sudo apt -y update

kevin@k8s-master:~$ sudo apt-cache policy kubeadm

kubeadm:

Installed: (none)

Candidate: 1.25.2-00

## Check the available 1.24 versions

kevin@k8s-master:~$ sudo apt-cache policy kubeadm | grep 1.24

## Install

kevin@k8s-master:~$ sudo apt -y install kubeadm=1.24.5-00 kubelet=1.24.5-00 kubectl=1.24.5-00

## Verify the installation

kevin@k8s-master:~$ sudo apt list | grep kubernetes

kubeadm/kubernetes-xenial 1.25.2-00 amd64 [upgradable from: 1.24.5-00]

kubectl/kubernetes-xenial 1.25.2-00 amd64 [upgradable from: 1.24.5-00]

kubelet/kubernetes-xenial 1.25.2-00 amd64 [upgradable from: 1.24.5-00]

## Configure kubelet so that it is always running

kevin@k8s-master:~$ sudo systemctl daemon-reload

kevin@k8s-master:~$ sudo systemctl enable --now kubelet

kevin@k8s-master:~$ sudo vi /etc/hosts

127.0.0.1 localhost

127.0.1.1 k8s-master

192.168.56.100 k8s-master

192.168.56.101 k8s-node1

192.168.56.102 k8s-node2

192.168.56.103 k8s-node3

...

Version 1.24.5 breaks down as:

1 - Major

24 - Minor

5 - Patch
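
The same breakdown can be done in a script, for instance to check that kubeadm, kubelet, and kubectl share a minor version. The sketch below splits a package version string such as `1.24.5-00`; the function name `split_version` is hypothetical.

```shell
# split_version VERSION
# Prints the major, minor, and patch parts of a version like 1.24.5-00.
split_version() {
  version="${1%%-*}"        # drop the package revision suffix (-00)
  major="${version%%.*}"    # text before the first dot
  rest="${version#*.}"      # text after the first dot
  minor="${rest%%.*}"
  patch="${rest#*.}"
  echo "major=$major minor=$minor patch=$patch"
}

split_version "1.24.5-00"
```

For the version installed in these notes this prints `major=1 minor=24 patch=5`.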

After completing the steps up to here, clone the VM three times.

k8s-node1, k8s-node2, k8s-node3

- From the master and every node, connect over SSH once to collect the host keys

kevin@k8s-master:~$ ssh k8s-node1

kevin@k8s-master:~$ ssh k8s-node2

kevin@k8s-master:~$ cat .ssh/known_hosts

- Run on the master and all nodes

## The config.toml installed with Docker's containerd package disables the CRI plugin, so move it aside and restart containerd with its defaults

kevin@k8s-master:~$ cd /etc/containerd/

kevin@k8s-master:/etc/containerd$ ls

config.toml

kevin@k8s-master:/etc/containerd$ sudo mv config.toml config.toml.org

kevin@k8s-master:/etc/containerd$ ls

config.toml.org

## Restart

kevin@k8s-master:~$ sudo systemctl restart containerd.service

kevin@k8s-master:~$ sudo systemctl restart kubelet

- Initialize the cluster

## Note: 10.96.0.0/12 is also kubeadm's default Service CIDR (see the 10.96.0.1 address in the apiserver certificate output), so a Pod CIDR that does not overlap it is the safer choice for --pod-network-cidr

kevin@k8s-master:~$ sudo kubeadm init --pod-network-cidr=10.96.0.0/12 --apiserver-advertise-address=192.168.56.100

I0929 14:03:20.876603 6773 version.go:255] remote version is much newer: v1.25.2; falling back to: stable-1.24

[init] Using Kubernetes version: v1.24.6

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.56.100]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.56.100 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master localhost] and IPs [192.168.56.100 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 10.505155 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node k8s-master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: 75bfhx.sh3bwbg376x2nyim

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.56.100:6443 --token 75bfhx.sh3bwbg376x2nyim \

--discovery-token-ca-cert-hash sha256:b2e586d37e73c8dfc4d1f74a03f12fc243af372899a8c9e88aec69e3a8bb12fa
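
The join command printed at the end of this output embeds a bootstrap token. By default such a token expires after 24 hours; running `sudo kubeadm token create --print-join-command` on the control plane prints a fresh join command. Before pasting a token into a script it can help to check its shape. The sketch below tests a string against the documented bootstrap token format (six characters, a dot, sixteen characters); the function name `is_bootstrap_token` is hypothetical.

```shell
# is_bootstrap_token TOKEN
# Succeeds when TOKEN matches the bootstrap token format [a-z0-9]{6}.[a-z0-9]{16}.
is_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

if is_bootstrap_token "75bfhx.sh3bwbg376x2nyim"; then
  echo "token format ok"
else
  echo "token format invalid"
fi
```

The check only validates the format; whether the token is still valid can be seen with `sudo kubeadm token list` on the control plane.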

## To start using the cluster, run the following as a regular user

kevin@k8s-master:~$ mkdir -p $HOME/.kube

kevin@k8s-master:~$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

kevin@k8s-master:~$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

## Check the listening ports

kevin@k8s-master:~$ sudo apt -y install net-tools

kevin@k8s-master:~$ sudo netstat -nlp | grep LISTEN

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 714/sshd: /usr/sbin

tcp 0 0 127.0.0.1:46779 0.0.0.0:* LISTEN 6277/containerd

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN 7777/etcd

tcp 0 0 127.0.0.1:2381 0.0.0.0:* LISTEN 7777/etcd

tcp 0 0 127.0.0.1:10259 0.0.0.0:* LISTEN 7749/kube-scheduler

tcp 0 0 127.0.0.1:10257 0.0.0.0:* LISTEN 7722/kube-controlle

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 7899/kubelet

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 8042/kube-proxy

tcp 0 0 192.168.56.100:2379 0.0.0.0:* LISTEN 7777/etcd

tcp 0 0 192.168.56.100:2380 0.0.0.0:* LISTEN 7777/etcd

tcp 0 0 127.0.0.1:631 0.0.0.0:* LISTEN 591/cupsd

tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN 552/systemd-resolve

tcp6 0 0 :::10256 :::* LISTEN 8042/kube-proxy

tcp6 0 0 :::22 :::* LISTEN 714/sshd: /usr/sbin

tcp6 0 0 :::10250 :::* LISTEN 7899/kubelet

tcp6 0 0 :::6443 :::* LISTEN 7789/kube-apiserver

...

## Install bash completion for kubectl

kevin@k8s-master:~$ sudo apt -y install bash-completion

kevin@k8s-master:~$ source <(kubectl completion bash)

kevin@k8s-master:~$ echo "source <(kubectl completion bash)" >> .bashrc

## Run on each node to join the cluster (kubeadm join needs root, hence sudo)

kevin@k8s-node1:~$ sudo kubeadm join 192.168.56.100:6443 --token 75bfhx.sh3bwbg376x2nyim \

> --discovery-token-ca-cert-hash sha256:b2e586d37e73c8dfc4d1f74a03f12fc243af372899a8c9e88aec69e3a8bb12fa

kevin@k8s-node2:~$ sudo kubeadm join 192.168.56.100:6443 --token 75bfhx.sh3bwbg376x2nyim \

> --discovery-token-ca-cert-hash sha256:b2e586d37e73c8dfc4d1f74a03f12fc243af372899a8c9e88aec69e3a8bb12fa

## On the master, verify that the nodes have joined

kevin@k8s-master:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane 7m1s v1.24.5

k8s-node1 NotReady <none> 22s v1.24.5

k8s-node2 NotReady <none> 16s v1.24.5
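
All three nodes report NotReady at this point because no CNI plugin has been installed yet; that happens in the CNI section of these notes. To watch for the change without reading the table by eye, the STATUS column can be counted. The helper below is a sketch that reads `kubectl get nodes` output on standard input; the function name `count_not_ready` is hypothetical.

```shell
# count_not_ready: reads `kubectl get nodes` output on stdin and prints how many
# nodes have a STATUS (column 2) other than exactly "Ready".
# A status such as "Ready,SchedulingDisabled" is also counted here.
count_not_ready() {
  awk 'NR > 1 && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Sample input copied from the output above.
sample='NAME STATUS ROLES AGE VERSION
k8s-master NotReady control-plane 7m1s v1.24.5
k8s-node1 NotReady <none> 22s v1.24.5
k8s-node2 NotReady <none> 16s v1.24.5'
printf '%s\n' "$sample" | count_not_ready
```

Against a live cluster the call would be `kubectl get nodes | count_not_ready`, which should drop to 0 once the network plugin is running.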

## Check that kubelet is running

kevin@k8s-master:~$ sudo systemctl status kubelet.service

## Check the Pods in all namespaces

kevin@k8s-master:~$ kubectl get po --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-6d4b75cb6d-bmsx5 0/1 Pending 0 8m28s

kube-system coredns-6d4b75cb6d-jk88g 0/1 Pending 0 8m28s

kube-system etcd-k8s-master 1/1 Running 0 8m41s

kube-system kube-apiserver-k8s-master 1/1 Running 0 8m43s

kube-system kube-controller-manager-k8s-master 1/1 Running 0 8m43s

kube-system kube-proxy-d84gc 1/1 Running 0 2m

kube-system kube-proxy-kxswb 1/1 Running 0 2m6s

kube-system kube-proxy-qwp4g 1/1 Running 0 8m28s

kube-system kube-scheduler-k8s-master 1/1 Running 0 8m41s

## Inspect a single Pod

kevin@k8s-master:~$ kubectl describe po kube-proxy-d84gc -n kube-system

## Set up pall as an alias

kevin@k8s-master:~$ alias pall='kubectl get po --all-namespaces'

kevin@k8s-master:~$ vi .bashrc

kevin@k8s-master:~$ . .bashrc

## Check in more detail

kevin@k8s-master:/etc/kubernetes/manifests$ kubectl get no -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master NotReady control-plane 16m v1.24.5 192.168.56.100 <none> Ubuntu 20.04.4 LTS 5.15.0-48-generic containerd://1.6.8

k8s-node1 NotReady <none> 9m26s v1.24.5 192.168.56.101 <none> Ubuntu 20.04.4 LTS 5.15.0-48-generic containerd://1.6.8

k8s-node2 NotReady <none> 9m20s v1.24.5 192.168.56.102 <none> Ubuntu 20.04.4 LTS 5.15.0-48-generic containerd://1.6.8

If the join fails, run the commands below on every node (including the master)

sudo kubeadm reset

sudo systemctl restart kubelet

CNI

- calico plugin

: Builds the cluster network. It uses L3 technology.

## Download the manifest

kevin@k8s-master:~$ curl -O https://docs.projectcalico.org/manifests/calico.yaml

## Apply it

kevin@k8s-master:~$ kubectl apply -f calico.yaml

## Watch the progress

kevin@k8s-master:~$ pall

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system calico-kube-controllers-6799f5f4b4-rm2mx 0/1 Pending 0 1s

kube-system calico-node-gv25g 0/1 Init:0/3 0 1s

kube-system calico-node-qkwj7 0/1 Init:0/3 0 1s

kube-system calico-node-vqmc7 0/1 Init:0/3 0 1s

kube-system coredns-6d4b75cb6d-bmsx5 0/1 Pending 0 20m

kube-system coredns-6d4b75cb6d-jk88g 0/1 Pending 0 20m

kube-system etcd-k8s-master 1/1 Running 0 20m

kube-system kube-apiserver-k8s-master 1/1 Running 1 (4m38s ago) 20m

kube-system kube-controller-manager-k8s-master 1/1 Running 1 (4m57s ago) 20m

kube-system kube-proxy-d84gc 1/1 Running 0 13m

kube-system kube-proxy-kxswb 1/1 Running 0 13m

kube-system kube-proxy-qwp4g 1/1 Running 0 20m

kube-system kube-scheduler-k8s-master 1/1 Running 1 (4m57s ago) 20m

kevin@k8s-master:~$ kubectl describe po -n kube-system calico-node-gv25g

If ImagePullBackOff occurs, run sudo docker login

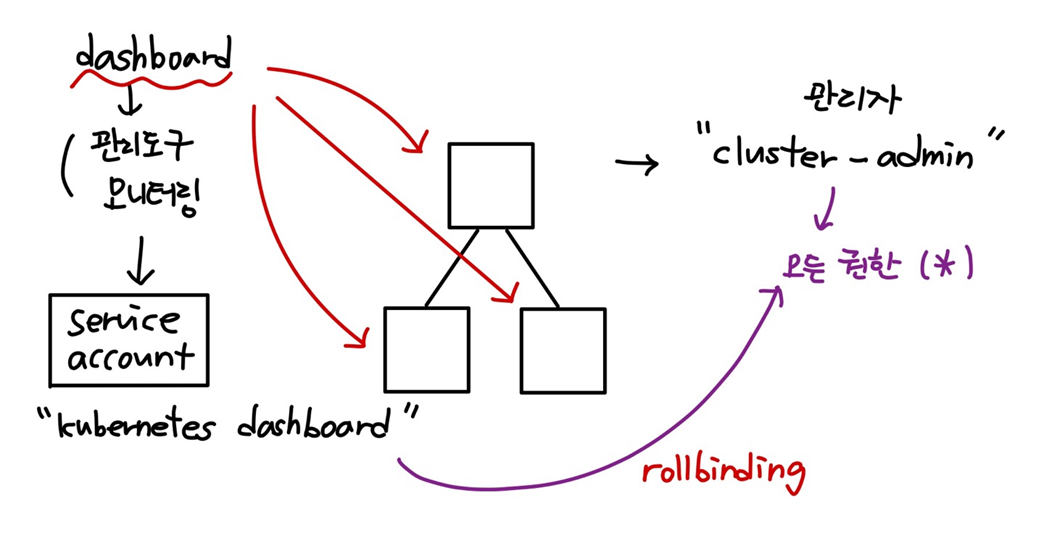

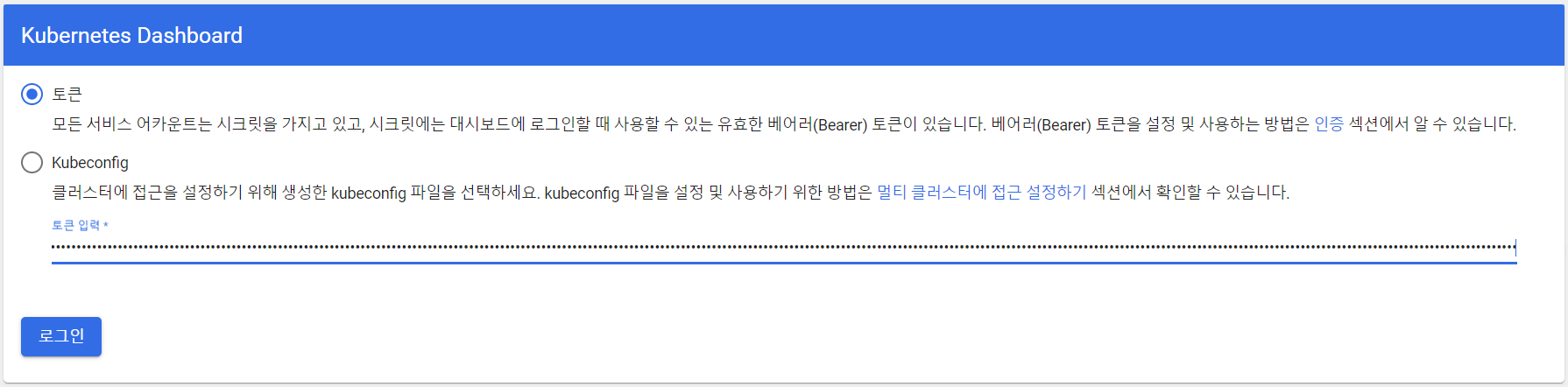

dashboard

- PKI certificate

: certmgr.msc on Windows

- prometheus & exporter & grafana

Steps

1. Role binding

: bind cluster-admin's * (all permissions) to kubernetes-dashboard

2. Create a token

3. Create a certificate -> client

This is the certificate that is handed to Windows.

## Check the CA key

kevin@k8s-master:~$ cd /etc/kubernetes/pki

kevin@k8s-master:/etc/kubernetes/pki$ ls

apiserver.crt apiserver-etcd-client.key apiserver-kubelet-client.crt ca.crt etcd front-proxy-ca.key front-proxy-client.key sa.pub

apiserver-etcd-client.crt apiserver.key apiserver-kubelet-client.key ca.key front-proxy-ca.crt front-proxy-client.crt sa.key

kevin@k8s-master:~$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.6.1/aio/deploy/recommended.yaml

## If that does not work

kevin@k8s-master:~$ curl -O https://raw.githubusercontent.com/kubernetes/dashboard/v2.6.1/aio/deploy/recommended.yaml

kevin@k8s-master:~$ vi recommended.yaml

## Find the image names and pull them manually

kevin@k8s-master:~$ sudo docker pull kubernetesui/dashboard:v2.6.1

kevin@k8s-master:~$ sudo docker pull kubernetesui/metrics-scraper:v1.0.8

kevin@k8s-master:~$ kubectl delete -f recommended.yaml

kevin@k8s-master:~$ kubectl apply -f recommended.yaml

kevin@k8s-master:~$ kubectl get clusterrole cluster-admin

NAME CREATED AT

cluster-admin 2022-09-29T05:10:05Z

## Inspect in detail

kevin@k8s-master:~$ kubectl describe clusterrole cluster-admin

Name: cluster-admin

Labels: kubernetes.io/bootstrapping=rbac-defaults

Annotations: rbac.authorization.kubernetes.io/autoupdate: true

PolicyRule:

Resources Non-Resource URLs Resource Names Verbs

--------- ----------------- -------------- -----

*.* [] [] [*]

[*] [] [*]

## From 1.24.x on, the dashboard token has to be created manually.

kevin@k8s-master:~$ kubectl describe sa -n kubernetes-dashboard kubernetes-dashboard

Name: kubernetes-dashboard

Namespace: kubernetes-dashboard

Labels: k8s-app=kubernetes-dashboard

Annotations: <none>

Image pull secrets: <none>

Mountable secrets: <none>

Tokens: <none>

Events: <none>

## Create the dashboard_token directory

kevin@k8s-master:~$ mkdir dashboard_token && cd $_

## Write ClusterRoleBind.yaml

kevin@k8s-master:~/dashboard_token$ vi ClusterRoleBind.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard2
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard

## kubectl create

kevin@k8s-master:~/dashboard_token$ kubectl create -f ClusterRoleBind.yaml

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard2 created

## Look up the token

kevin@k8s-master:~/dashboard_token$ kubectl get -n kubernetes-dashboard secrets

NAME TYPE DATA AGE

kubernetes-dashboard-certs Opaque 0 45m

kubernetes-dashboard-csrf Opaque 1 45m

kubernetes-dashboard-key-holder Opaque 2 45m

## The steps up to here are the procedure for versions up to 1.23.*.

## Create the token manually

## Write ClusterRoleBind-admin-user.yaml

kevin@k8s-master:~/dashboard_token$ vi ClusterRoleBind-admin-user.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

kevin@k8s-master:~/dashboard_token$ kubectl create -f ClusterRoleBind-admin-user.yaml

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

## Write sa-admin-user.yaml

kevin@k8s-master:~/dashboard_token$ vi sa-admin-user.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard

## apply - creates the ServiceAccount that the role binding refers to

kevin@k8s-master:~/dashboard_token$ kubectl apply -f sa-admin-user.yaml

serviceaccount/admin-user created

## Issue a token

kevin@k8s-master:~/dashboard_token$ kubectl -n kubernetes-dashboard create token admin-user

eyJhbGciOiJSUzI1NiIsImtpZCI6IjZEYUJJZHNDUzlyMlRXTFlfamtGUUxKb2V6NktMNWZWNXNwVi02b2pTNkUifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjY0NDM4MDM2LCJpYXQiOjE2NjQ0MzQ0MzYsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiMTRhZmFlZjctNGQ3YS00NTliLWFhMjctN2U3MTMzYmQyMjIxIn19LCJuYmYiOjE2NjQ0MzQ0MzYsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.UXGZaUayPqwrpVgIHV_vrm_mJil9qxcp5rHkkG3TfLd3giN2rnCcefDM12P14e0FcM-srLws1rY0y0wfbyVsoZudDTuaM8KRoOlTU_iMqzXNMj-6BzZY6E9Qh-heztw7iUmbucXynFeeET8FEGvpvZPCV3qc2wTlMKDtu6TCGjbtUOUUpQgUXWLppS4ss2KY_8AdlAbRO7rnGCrsXqVOVgeJoBvBGvh0FljgvxEqo-D0-SoGkGHBALqXJ8cjcYrs9EBatcbZ-efP4Tmajq9vYdNXM4iRO9vgrOYeSRY7aU3Jq0XMCT9NItKZrOUV34U_YsXy5t5yO9xg2pSK_ghtIA
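
A token issued this way is a JWT, and its middle segment is base64url-encoded JSON that includes the issue time (`iat`) and expiry (`exp`). In the token above those two claims appear to be 3600 seconds apart, so a login that worked earlier can fail an hour later until a new token is created. The sketch below decodes that segment; the function name `jwt_payload` and the short demo token are assumptions made for this example, and no signature verification is done.

```shell
# jwt_payload TOKEN
# Prints the decoded JSON payload (second dot-separated segment) of a JWT.
jwt_payload() {
  # Take the middle segment and map the base64url alphabet back to base64.
  payload="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
  # base64url drops the trailing '=' padding; restore it to a multiple of 4.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 -d
}

# Demo token whose payload segment encodes {"exp":1}.
jwt_payload "header.eyJleHAiOjF9.signature"
```

Passing the real token instead, for example `jwt_payload "$(kubectl -n kubernetes-dashboard create token admin-user)"`, shows the actual `exp` value as a Unix timestamp.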

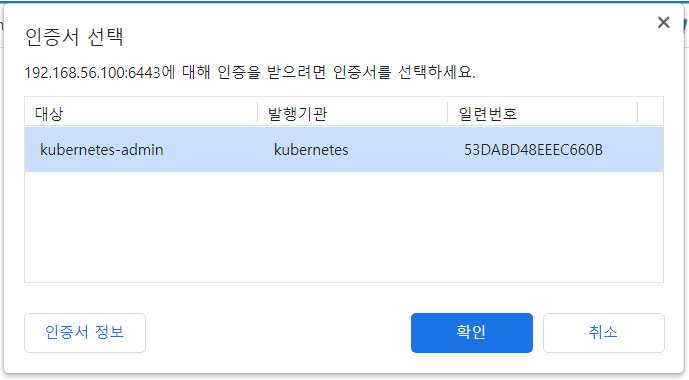

## Create the certificate (using > rather than >> so that a re-run does not append a second copy to the file)

kevin@k8s-master:~/dashboard_token$ grep 'client-certificate-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.crt

kevin@k8s-master:~/dashboard_token$ grep 'client-key-data' ~/.kube/config | head -n 1 | awk '{print $2}' | base64 -d > kubecfg.key

kevin@k8s-master:~/dashboard_token$ openssl pkcs12 -export -clcerts -inkey kubecfg.key -in kubecfg.crt -out kubecfg.p12 -name "kubernetes-admin"

kevin@k8s-master:~/dashboard_token$ cp /etc/kubernetes/pki/ca.crt ./

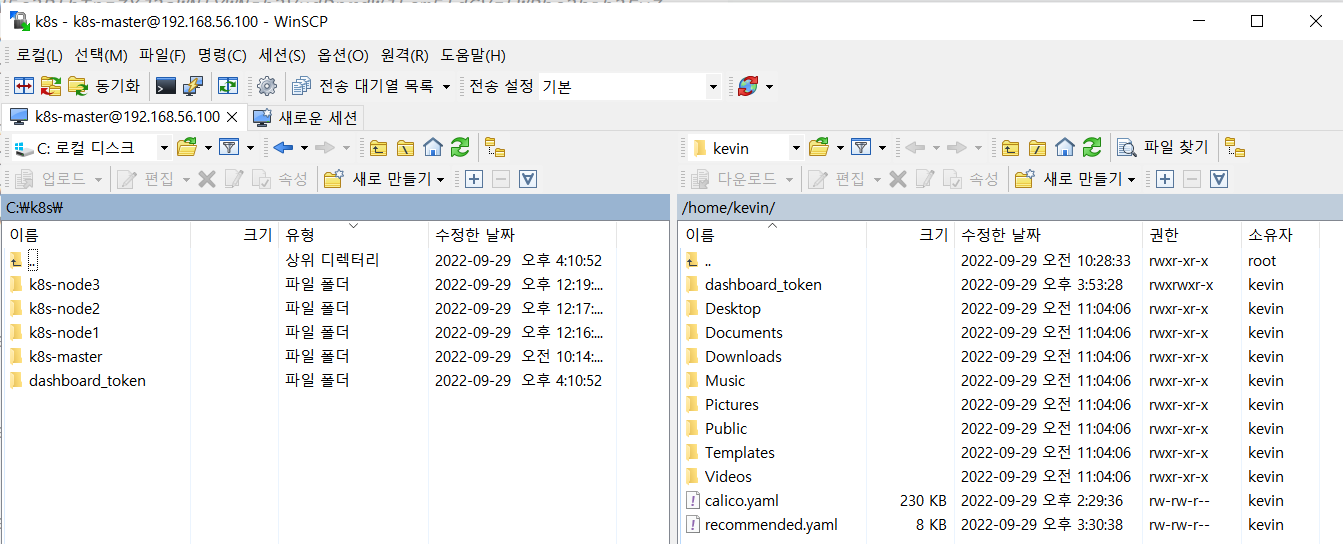

## Use WinSCP to transfer the files to c:\k8s\dashboard_token on Windows.

## Register them in certmgr.msc.

## Open PowerShell as administrator.

PS C:\k8s\dashboard_token> certutil.exe -addstore "Root" ca.crt

PS C:\k8s\dashboard_token> certutil.exe -p k8spass# -user -importPFX .\kubecfg.p12

## Open https://192.168.56.100:6443/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/#/login in a browser.

## Log in with the token

: Move dashboard_token with WinSCP

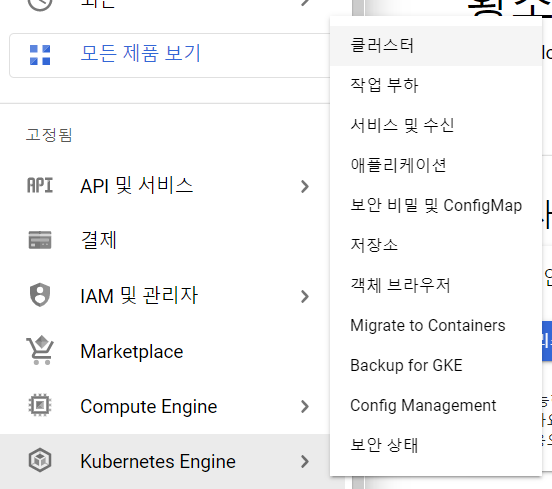

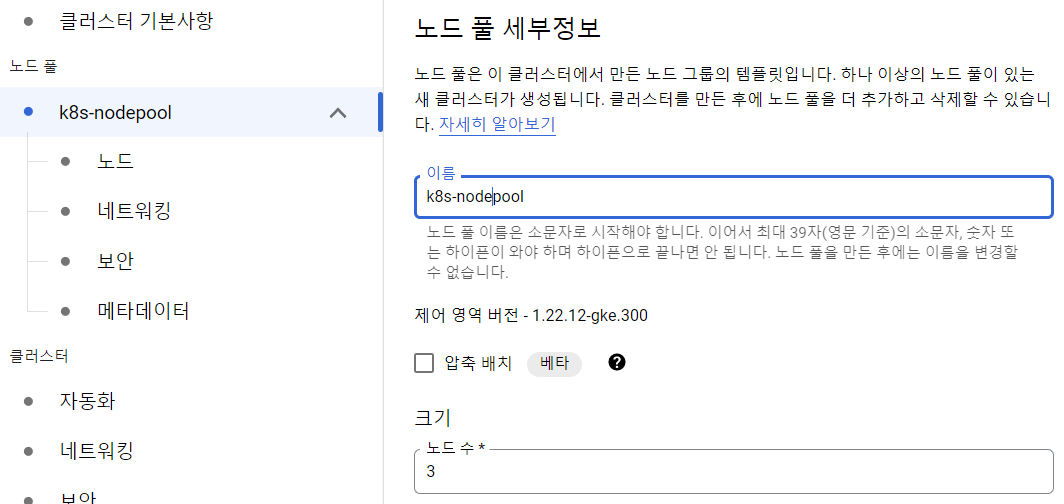

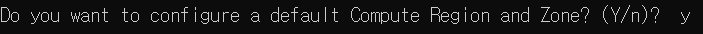

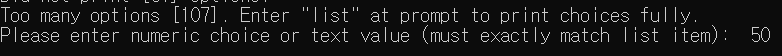

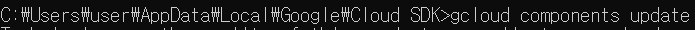

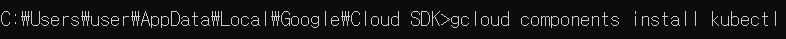

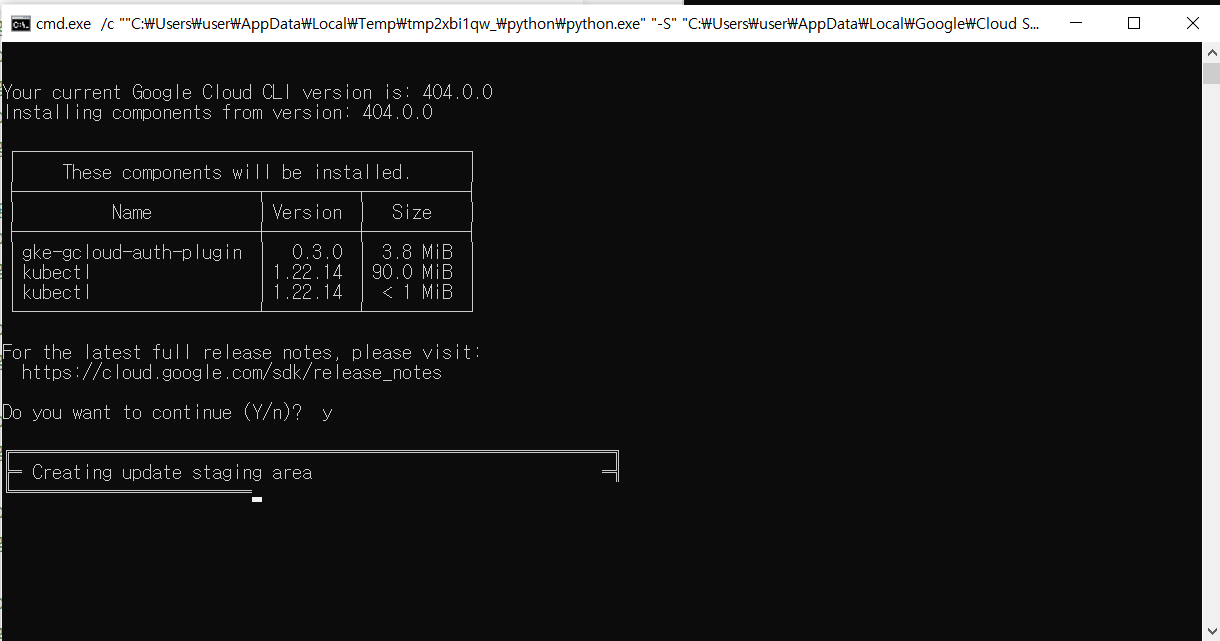

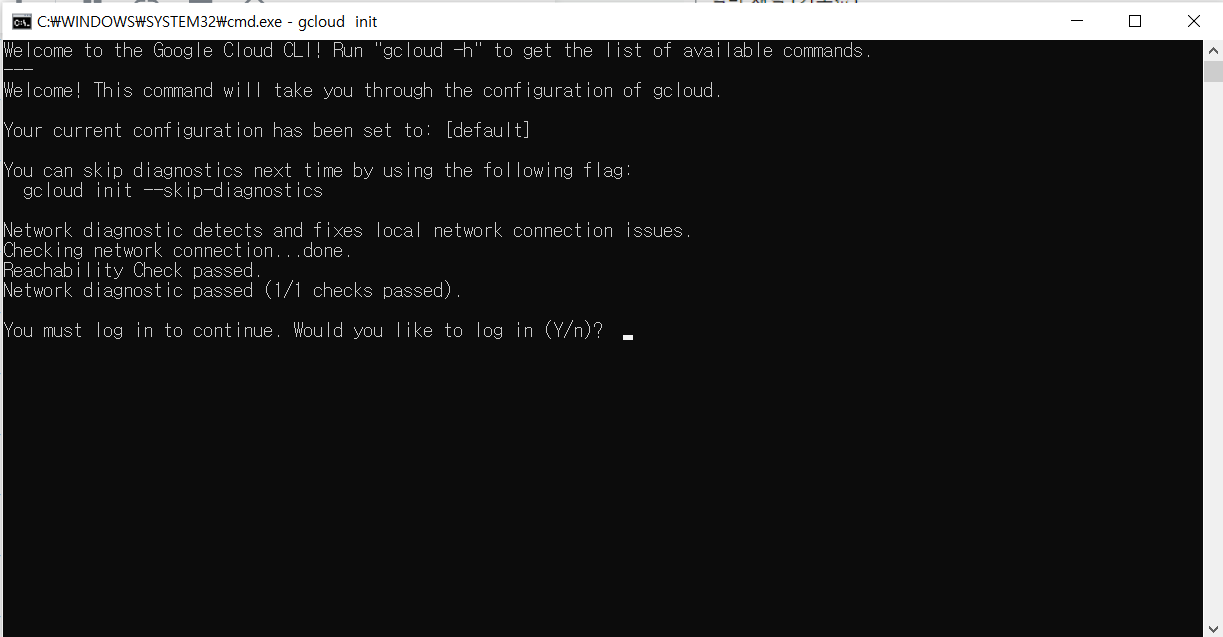

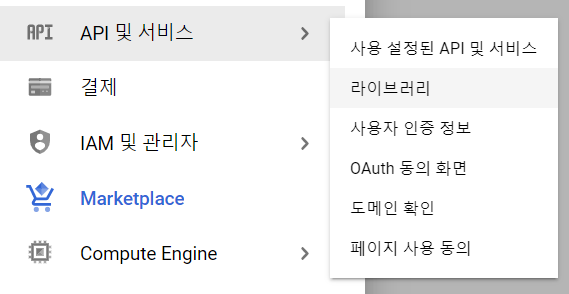

Connect to Google Cloud

Create a new project and select it

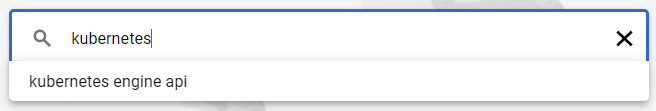

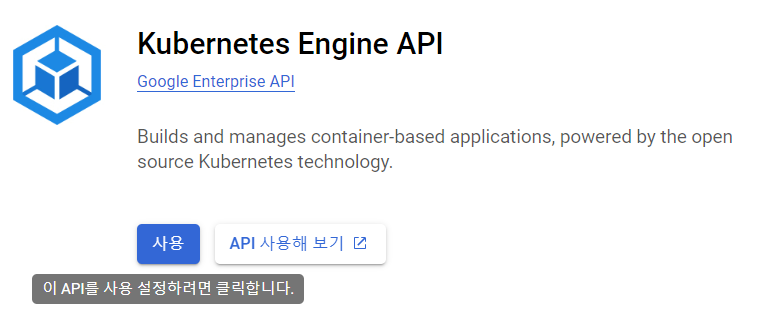

Click Enable for the Kubernetes Engine API

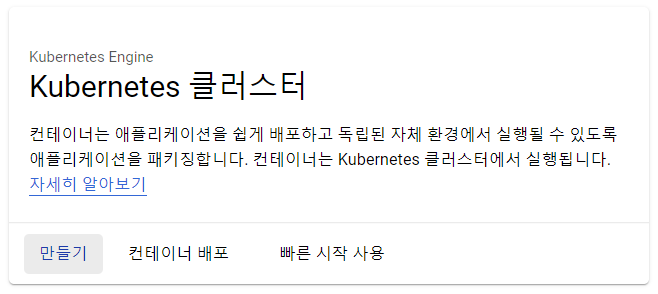

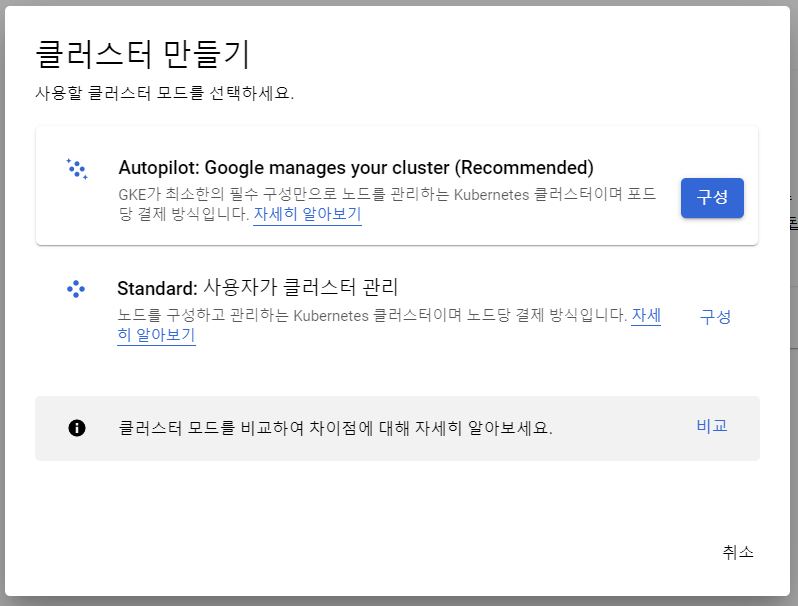

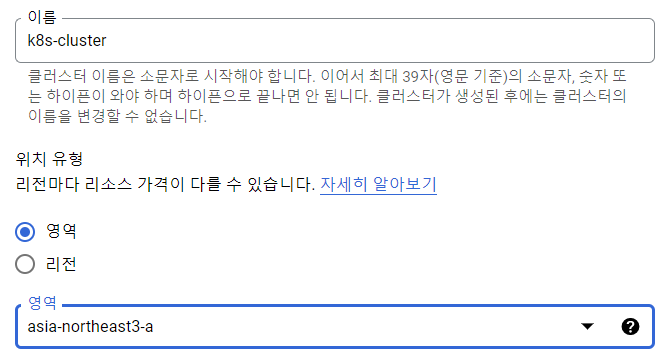

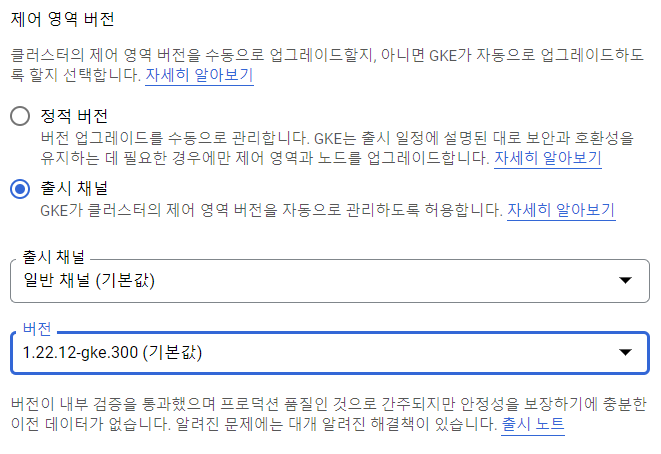

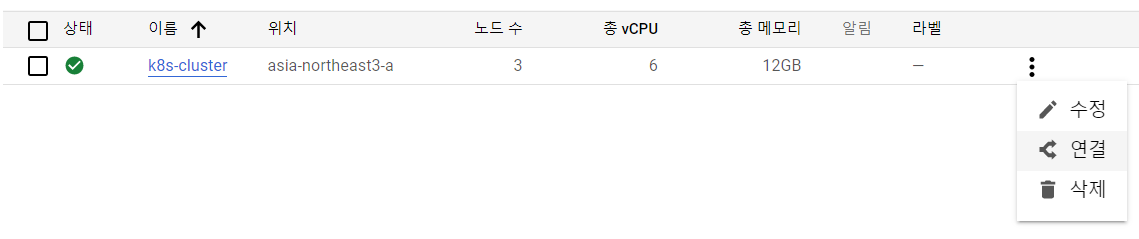

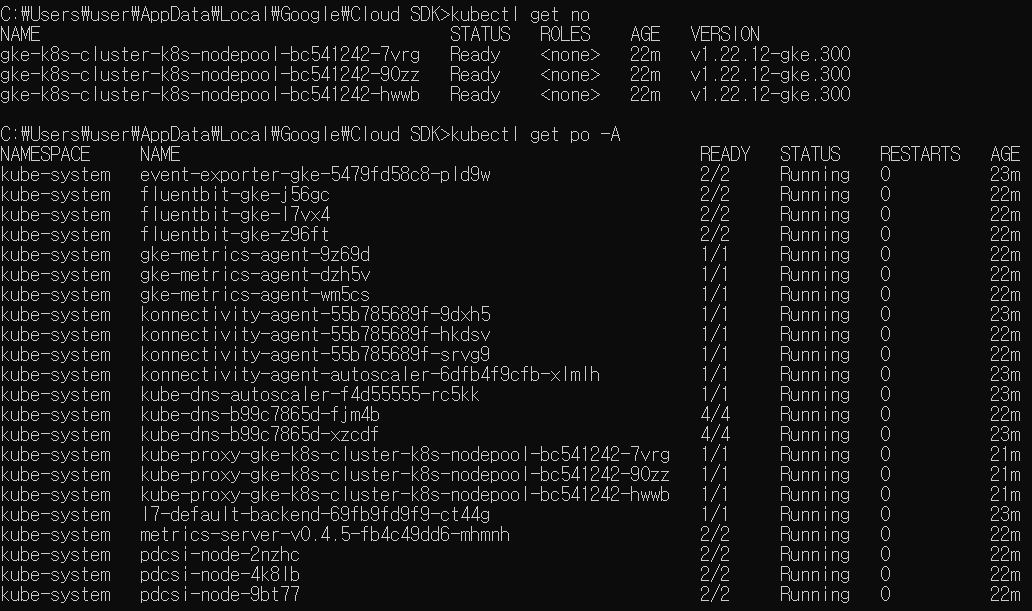

Create a Kubernetes cluster