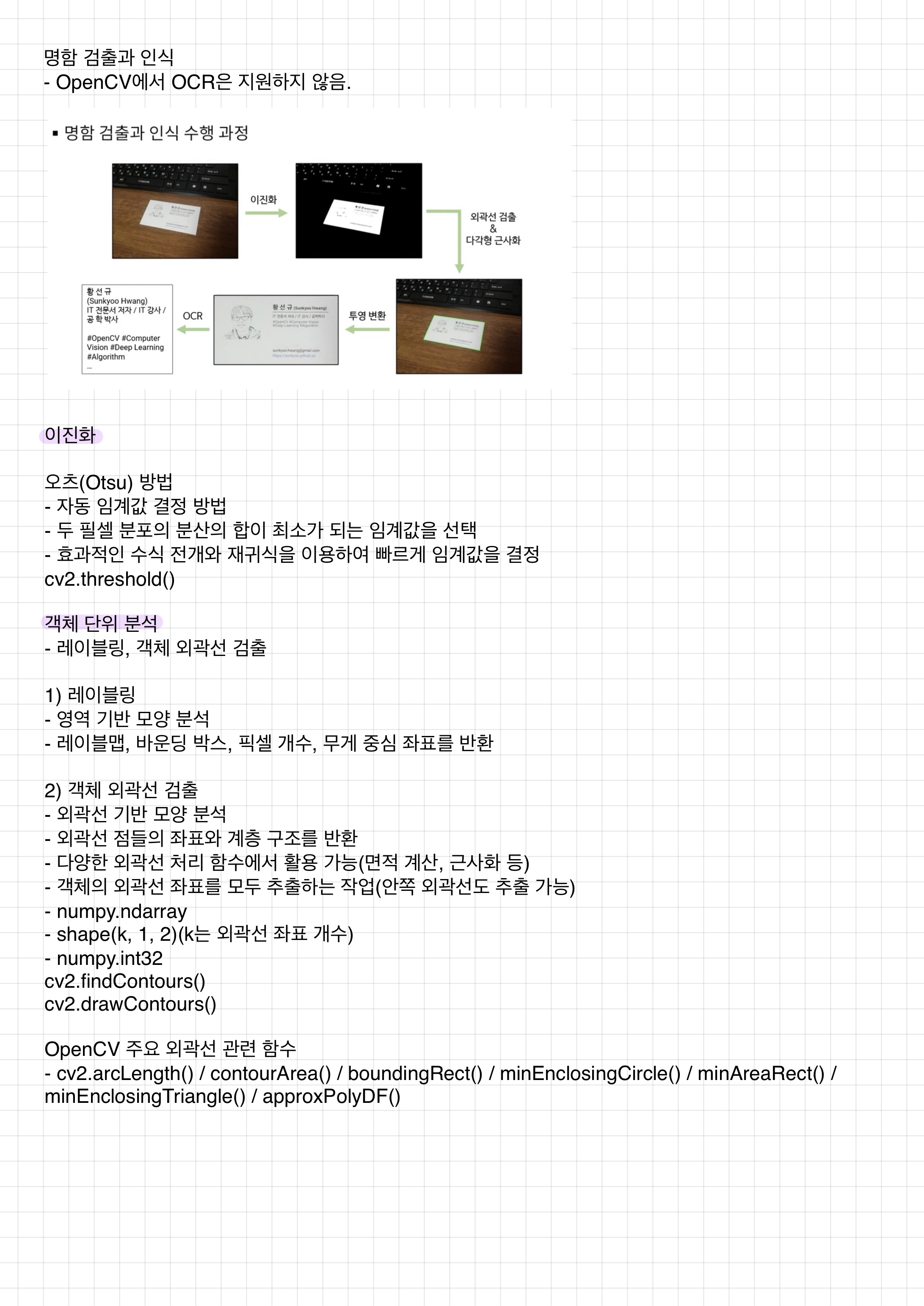

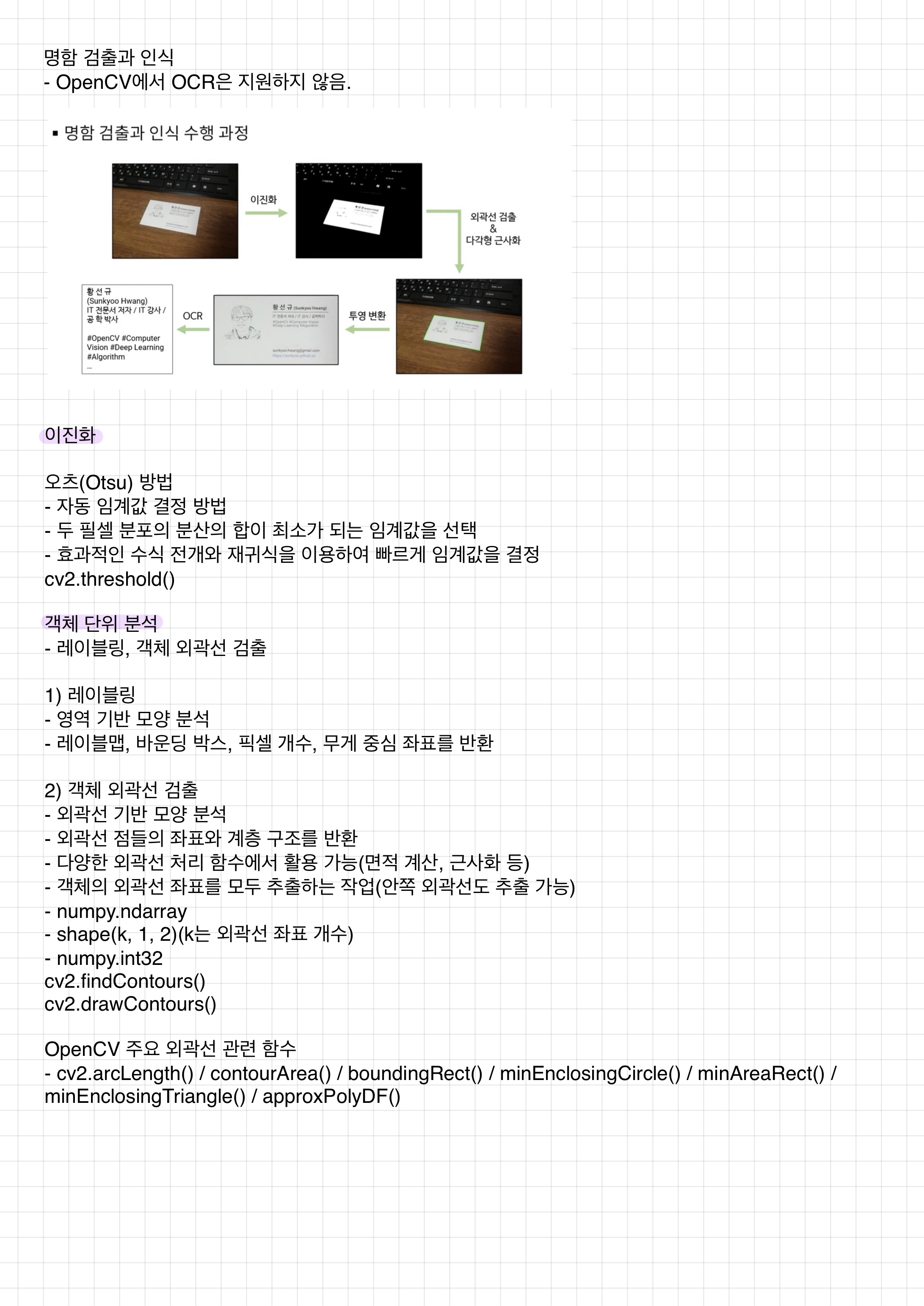

Business card detection and recognition

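```python
import sys
import random  # used only by the optional contour-drawing snippet

import numpy as np
import cv2

src = cv2.imread('/Users/nayoung/Documents/ds_study/data/namecard1.jpg')

if src is None:
    print('Image load failed!')
    sys.exit()

# Grayscale, then Otsu binarization: THRESH_OTSU picks the threshold itself
# (the 0 passed here is ignored) and th receives the chosen value
src_gray = cv2.cvtColor(src, cv2.COLOR_BGR2GRAY)
th, src_bin = cv2.threshold(src_gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)

# Outer contours only (RETR_EXTERNAL), keeping every boundary point (CHAIN_APPROX_NONE).
# OpenCV 4.x returns (contours, hierarchy).
contours, _ = cv2.findContours(src_bin, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
```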
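Otsu's method chooses the threshold that maximizes the between-class variance of the gray-level histogram, which works well here because the card and the background form two clear peaks. The NumPy sketch below re-implements that criterion for illustration only; `otsu_threshold` is a hypothetical helper, not an OpenCV API, and OpenCV's returned `th` may differ by a gray level when several thresholds tie.

```python
import numpy as np


def otsu_threshold(gray):
    """Hypothetical helper: gray level t maximizing the between-class variance.

    Class 0 is pixels <= t and class 1 is pixels > t, matching THRESH_BINARY,
    which sets pixels above the threshold to maxval.
    """
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    prob = hist / hist.sum()                   # normalized histogram
    omega = np.cumsum(prob)                    # class-0 weight for every candidate t
    mu = np.cumsum(prob * np.arange(256))      # class-0 first moment for every t
    mu_total = mu[-1]                          # mean gray level of the whole image

    denom = omega * (1.0 - omega)
    with np.errstate(divide='ignore', invalid='ignore'):
        sigma_b = (mu_total * omega - mu) ** 2 / denom  # between-class variance
    sigma_b[denom == 0] = 0.0                  # a split with an empty class is invalid
    return int(np.argmax(sigma_b))


# Synthetic bimodal image: dark background (50) with a bright 20 x 30 "card" (200)
img = np.full((40, 60), 50, np.uint8)
img[10:30, 15:45] = 200
t = otsu_threshold(img)
binary = np.where(img > t, 255, 0).astype(np.uint8)
print(t, int((binary == 255).sum()))  # -> 50 600
```

Every threshold between the two peaks separates them equally well here; `np.argmax` returns the first one.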

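```python
# Optional: draw every detected contour in a random color.
# Disabled by wrapping it in a string literal; remove the ''' lines to run it.
'''
# Convert the binary image to 3-channel BGR so colored contours can be drawn on it
dst = cv2.cvtColor(src_bin, cv2.COLOR_GRAY2BGR)

for i in range(len(contours)):
    c = (random.randint(0, 255), random.randint(0, 255), random.randint(0, 255))
    cv2.drawContours(dst, contours, i, c, 2)  # 2: line thickness in pixels

cv2.imshow('src', src)
cv2.imshow('dst', dst)
cv2.waitKey()
cv2.destroyAllWindows()
'''
```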
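The next step drops small contours with `cv2.contourArea(pts) < 1000`. According to the OpenCV documentation, `cv2.contourArea` computes the area from the contour's vertices with Green's formula rather than by counting pixels, so it can differ from the number of pixels inside the shape. For a polygon, that computation reduces to the shoelace formula, sketched below in plain Python; `polygon_area` is a hypothetical helper, and like `cv2.contourArea` with its default `oriented=False` it returns the unsigned area.

```python
def polygon_area(points):
    """Hypothetical helper: unsigned area of a simple polygon [(x, y), ...] (shoelace formula)."""
    twice_area = 0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # the last vertex connects back to the first
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


print(polygon_area([(0, 0), (10, 0), (10, 10), (0, 10)]))  # -> 100.0
print(polygon_area([(0, 0), (4, 0), (0, 3)]))              # -> 6.0
```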

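```python
# Keep large contours that simplify to a quadrilateral: these are card candidates
for pts in contours:
    if cv2.contourArea(pts) < 1000:  # ignore small blobs (area in pixels)
        continue

    # Polygon approximation with a tolerance of 2% of the closed perimeter
    approx = cv2.approxPolyDP(pts, cv2.arcLength(pts, True) * 0.02, True)

    if len(approx) == 4:
        cv2.polylines(src, [approx], True, (0, 255, 0), 2, cv2.LINE_AA)

cv2.imshow('src', src)
cv2.waitKey()
cv2.destroyAllWindows()
```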
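`cv2.approxPolyDP` uses the Douglas-Peucker algorithm: it keeps the point farthest from the chord between two kept points whenever that distance exceeds the tolerance, and recurses on both halves. With a tolerance of 2% of the perimeter, a card outline collapses to its 4 corners. Below is a plain-Python sketch of the algorithm, not OpenCV's implementation; `douglas_peucker`, `approx_closed` and `closed_perimeter` are hypothetical names, and splitting the closed contour at point 0 and the point farthest from it is an assumption, so the starting vertex can differ from OpenCV's output.

```python
import math


def _point_line_distance(p, a, b):
    """Perpendicular distance from p to the line through a and b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length = math.hypot(dx, dy)
    if length == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dx * (ay - py) - dy * (ax - px)) / length


def douglas_peucker(points, epsilon):
    """Hypothetical helper: simplify an open polyline, always keeping both endpoints."""
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    index, dmax = 0, -1.0
    for i in range(1, len(points) - 1):  # interior point farthest from chord a-b
        d = _point_line_distance(points[i], a, b)
        if d > dmax:
            index, dmax = i, d
    if dmax <= epsilon:
        return [a, b]  # every interior point is within tolerance
    left = douglas_peucker(points[:index + 1], epsilon)
    right = douglas_peucker(points[index:], epsilon)
    return left[:-1] + right  # the split point appears in both halves


def approx_closed(points, epsilon):
    """Hypothetical helper: split a closed contour at point 0 and its farthest point."""
    far = max(range(len(points)), key=lambda i: math.dist(points[0], points[i]))
    first = douglas_peucker(points[:far + 1], epsilon)
    second = douglas_peucker(points[far:] + points[:1], epsilon)
    return first[:-1] + second[:-1]  # drop the duplicated joint vertices


def closed_perimeter(points):
    """Hypothetical helper: perimeter of a closed contour (like arcLength(..., True))."""
    n = len(points)
    return sum(math.dist(points[i], points[(i + 1) % n]) for i in range(n))


# A 100 x 50 rectangle traced one pixel at a time (300 points)
rect = ([(x, 0) for x in range(0, 100)] + [(100, y) for y in range(0, 50)]
        + [(x, 50) for x in range(100, 0, -1)] + [(0, y) for y in range(50, 0, -1)])
print(approx_closed(rect, 0.02 * closed_perimeter(rect)))
# -> [(0, 0), (100, 0), (100, 50), (0, 50)]
```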

Straightening the business card 1 (hard-coded corners)

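```python
import sys

import numpy as np
import cv2

src = cv2.imread('/Users/nayoung/Documents/ds_study/data/namecard1.jpg')

if src is None:
    print('Image load failed!')
    sys.exit()

w, h = 720, 400  # size of the straightened output card

# Card corners in the source image (hard-coded), ordered TL, TR, BR, BL
srcQuad = np.array([[324, 308], [760, 369], [718, 611], [231, 517]], np.float32)
# Matching corners of the output image, in the same order
dstQuad = np.array([[0, 0], [w - 1, 0], [w - 1, h - 1], [0, h - 1]], np.float32)

# 3x3 perspective matrix mapping srcQuad onto dstQuad
pers = cv2.getPerspectiveTransform(srcQuad, dstQuad)
dst = cv2.warpPerspective(src, pers, (w, h))  # dsize is (width, height)

cv2.imshow('src', src)
cv2.imshow('dst', dst)
cv2.waitKey()
cv2.destroyAllWindows()
```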
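`cv2.getPerspectiveTransform` finds the 3x3 matrix H that sends each `srcQuad` point to the matching `dstQuad` point. Fixing H[2,2] = 1 leaves 8 unknowns h11..h32, and each correspondence (x, y) -> (u, v) gives two linear equations: u(h31·x + h32·y + 1) = h11·x + h12·y + h13 and v(h31·x + h32·y + 1) = h21·x + h22·y + h23. The NumPy sketch below builds and solves that 8x8 system; `perspective_matrix` and `map_points` are hypothetical helpers, and on the same corners the result should agree with OpenCV's matrix up to floating-point rounding.

```python
import numpy as np


def perspective_matrix(src, dst):
    """Hypothetical helper: H (3x3, H[2, 2] = 1) mapping 4 src points onto 4 dst points."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    A = np.zeros((8, 8))
    b = np.zeros(8)
    for i, ((x, y), (u, v)) in enumerate(zip(src, dst)):
        A[2 * i] = [x, y, 1, 0, 0, 0, -u * x, -u * y]      # equation for u
        b[2 * i] = u
        A[2 * i + 1] = [0, 0, 0, x, y, 1, -v * x, -v * y]  # equation for v
        b[2 * i + 1] = v
    params = np.linalg.solve(A, b)  # needs 4 points with no three collinear
    return np.append(params, 1.0).reshape(3, 3)


def map_points(H, pts):
    """Hypothetical helper: apply H to (N, 2) points, dividing by the third coordinate."""
    pts = np.asarray(pts, dtype=np.float64)
    mapped = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return mapped[:, :2] / mapped[:, 2:3]


w, h = 720, 400
srcQuad = np.array([[324, 308], [760, 369], [718, 611], [231, 517]], np.float32)
dstQuad = np.array([[0, 0], [w - 1, 0], [w - 1, h - 1], [0, h - 1]], np.float32)

H = perspective_matrix(srcQuad, dstQuad)
print(np.round(map_points(H, srcQuad), 3))  # reproduces dstQuad
```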

Straightening the business card 2 (corners found automatically)

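```python
import sys

import numpy as np
import cv2


def reorderPts(pts):
    # Sort the 4 corners by x, breaking ties by y
    # (np.lexsort uses the LAST key as the primary sort key)
    idx = np.lexsort((pts[:, 1], pts[:, 0]))
    pts = pts[idx]

    # pts[0], pts[1] are the two left corners: put the upper one (smaller y) first
    if pts[0, 1] > pts[1, 1]:
        pts[[0, 1]] = pts[[1, 0]]

    # pts[2], pts[3] are the two right corners: put the lower one (larger y) first
    if pts[2, 1] < pts[3, 1]:
        pts[[2, 3]] = pts[[3, 2]]

    # Result order: top-left, bottom-left, bottom-right, top-right
    return pts
```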
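A commonly used alternative to `reorderPts` (not part of the original notes) ranks the corners by x + y and y - x: the top-left corner has the smallest sum, the bottom-right the largest, the bottom-left the largest difference and the top-right the smallest. The sketch below, with the hypothetical name `order_corners_sum_diff`, returns the same TL, BL, BR, TR order as `reorderPts`, and on the hard-coded corners from the first straightening example both give the same result. Either heuristic can flip orientation when the card is rotated close to 45 degrees.

```python
import numpy as np


def order_corners_sum_diff(pts):
    """Hypothetical helper: order 4 corners as TL, BL, BR, TR using x + y and y - x."""
    pts = np.asarray(pts, dtype=np.float32).reshape(4, 2)
    s = pts.sum(axis=1)        # x + y: smallest at top-left, largest at bottom-right
    d = pts[:, 1] - pts[:, 0]  # y - x: largest at bottom-left, smallest at top-right
    return np.array([pts[np.argmin(s)], pts[np.argmax(d)],
                     pts[np.argmax(s)], pts[np.argmin(d)]], dtype=np.float32)


# The card corners from the first example, in scrambled order
quad = np.array([[718, 611], [231, 517], [760, 369], [324, 308]], np.float32)
print(order_corners_sum_diff(quad))
```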

```python
src = cv2.imread('/Users/nayoung/Documents/ds_study/data/namecard1.jpg')

if src is None:
    print('Image load failed!')
    sys.exit()

w, h = 720, 400

# Output corners in TL, BL, BR, TR order, the order reorderPts() returns
dstQuad = np.array([[0, 0], [0, h - 1], [w - 1, h - 1], [w - 1, 0]], np.float32)
# Default equals dstQuad, so the warp is the identity if no card is found
srcQuad = dstQuad.copy()

src_gray = cv2.cvtColor(src, cv2.COLOR_BGR2GRAY)
_, src_bin = cv2.threshold(src_gray, 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)

contours, _ = cv2.findContours(src_bin, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

for pts in contours:
    if cv2.contourArea(pts) < 1000:
        continue

    approx = cv2.approxPolyDP(pts, cv2.arcLength(pts, True) * 0.02, True)

    if len(approx) == 4:
        # approx has shape (4, 1, 2) with integer type;
        # getPerspectiveTransform needs (4, 2) float32
        corners = approx.reshape(4, 2).astype(np.float32)
        srcQuad = reorderPts(corners)  # if several quads are found, the last one wins

pers = cv2.getPerspectiveTransform(srcQuad, dstQuad)
dst = cv2.warpPerspective(src, pers, (w, h))

cv2.imshow('src', src)
cv2.imshow('dst', dst)
cv2.waitKey()
cv2.destroyAllWindows()
```
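`cv2.warpPerspective` fills the output by inverse mapping: for every output pixel it applies the inverse of `pers` to find where to sample in `src`, which avoids the holes that pushing source pixels forward would leave. The NumPy sketch below shows that idea with nearest-neighbour sampling and a black border; `warp_perspective_nearest` is a hypothetical helper, and since OpenCV's default interpolation is bilinear (`INTER_LINEAR`), its pixel values will not match exactly.

```python
import numpy as np


def warp_perspective_nearest(src, H, size):
    """Hypothetical helper: warp src by the forward matrix H into an output of size (width, height).

    Each output pixel is mapped through H^-1 and sampled with nearest neighbour;
    pixels that land outside src stay 0.
    """
    w, h = size
    Hinv = np.linalg.inv(H)
    ys, xs = np.mgrid[0:h, 0:w]
    dst_pts = np.stack([xs, ys, np.ones_like(xs)], axis=-1).reshape(-1, 3).astype(np.float64)
    src_pts = dst_pts @ Hinv.T
    sx = np.rint(src_pts[:, 0] / src_pts[:, 2]).astype(int)
    sy = np.rint(src_pts[:, 1] / src_pts[:, 2]).astype(int)
    valid = (sx >= 0) & (sx < src.shape[1]) & (sy >= 0) & (sy < src.shape[0])
    out = np.zeros((h * w,) + src.shape[2:], dtype=src.dtype)
    out[valid] = src[sy[valid], sx[valid]]
    return out.reshape((h, w) + src.shape[2:])


# A pure translation: source pixel (x, y) moves to (x - 2, y - 1)
img = np.arange(20, dtype=np.uint8).reshape(4, 5)
T = np.array([[1, 0, -2], [0, 1, -1], [0, 0, 1]], dtype=np.float64)
print(warp_perspective_nearest(img, T, (3, 2)))  # same as img[1:3, 2:5]
```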

Face detection and applications

Face detection

```python
import sys

import numpy as np
import cv2

# Caffe SSD face detector: trained weights (.caffemodel) and network definition (.prototxt)
model = '/Users/nayoung/Documents/ds_study/data/res10_300x300_ssd_iter_140000_fp16.caffemodel'
config = '/Users/nayoung/Documents/ds_study/data/deploy.prototxt.txt'

cap = cv2.VideoCapture(0)  # default camera

if not cap.isOpened():
    print('Camera open failed!')
    sys.exit()

net = cv2.dnn.readNet(model, config)

if net.empty():
    print('Net open failed!')
    sys.exit()
```
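Inside the capture loop, `cv2.dnn.blobFromImage(frame, 1, (300, 300), (104, 177, 123))` resizes each frame to 300x300, subtracts the per-channel mean (given in the frame's BGR order, since `swapRB` defaults to False in OpenCV 4.x), multiplies by the scale factor 1, and packs the result as a float32 array of shape (1, 3, 300, 300), i.e. batch, channels, height, width. The NumPy sketch below reproduces every step except the resize, so it assumes the input is already 300x300; `to_blob` is a hypothetical helper.

```python
import numpy as np


def to_blob(image_bgr, mean=(104.0, 177.0, 123.0), scale=1.0):
    """Hypothetical helper: HxWx3 BGR image (already at network size) -> (1, 3, H, W) float32."""
    x = image_bgr.astype(np.float32)
    x = (x - np.asarray(mean, dtype=np.float32)) * np.float32(scale)  # mean subtraction, then scaling
    x = x.transpose(2, 0, 1)  # HWC -> CHW
    return x[np.newaxis]      # add the batch axis -> NCHW


frame = np.zeros((300, 300, 3), np.uint8)
frame[:] = (114, 177, 123)
blob = to_blob(frame)
print(blob.shape, blob.dtype, blob[0, :, 0, 0])
```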

```python
while True:
    ret, frame = cap.read()

    if not ret:
        break

    # Resize to 300x300 and subtract the per-channel BGR mean (104, 177, 123); scale factor 1
    blob = cv2.dnn.blobFromImage(frame, 1, (300, 300), (104, 177, 123))
    net.setInput(blob)
    out = net.forward()  # shape (1, 1, N, 7)

    # Each row: [image_id, class_id, confidence, x1, y1, x2, y2], box normalized to [0, 1]
    detect = out[0, 0, :, :]
    (h, w) = frame.shape[:2]

    for i in range(detect.shape[0]):
        confidence = detect[i, 2]
        if confidence < 0.5:
            continue  # skip weak detections without assuming the rows are sorted

        x1 = int(detect[i, 3] * w)
        y1 = int(detect[i, 4] * h)
        x2 = int(detect[i, 5] * w)
        y2 = int(detect[i, 6] * h)

        cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0))

        label = f'Face: {confidence:4.2f}'
        cv2.putText(frame, label, (x1, y1 - 1), cv2.FONT_HERSHEY_SIMPLEX, 0.8, (0, 255, 0), 1, cv2.LINE_AA)

    cv2.imshow('frame', frame)

    if cv2.waitKey(1) == 27:  # ESC
        break

cap.release()
cv2.destroyAllWindows()
```
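Each row of `detect` holds `[image_id, class_id, confidence, x1, y1, x2, y2]` with the box corners normalized to [0, 1], which is why the loop multiplies by `w` and `h`. Boxes at the edge of the frame may come back slightly outside that range (an assumption worth guarding against), and negative values would wrap around when used as NumPy slice indices. The sketch below, a hypothetical `faces_from_detections` helper run on a hand-made array standing in for `net.forward()` output, filters by confidence and clips each box to the frame so it can be used for drawing or for slicing `frame[y1:y2, x1:x2]`.

```python
import numpy as np


def faces_from_detections(out, frame_w, frame_h, conf_threshold=0.5):
    """Hypothetical helper: SSD output (1, 1, N, 7) -> [(x1, y1, x2, y2, confidence), ...].

    Coordinates are clipped to [0, frame_w] and [0, frame_h] so they work as slice bounds.
    """
    boxes = []
    for row in out[0, 0]:
        confidence = float(row[2])
        if confidence < conf_threshold:
            continue
        x1 = int(np.clip(row[3] * frame_w, 0, frame_w))
        y1 = int(np.clip(row[4] * frame_h, 0, frame_h))
        x2 = int(np.clip(row[5] * frame_w, 0, frame_w))
        y2 = int(np.clip(row[6] * frame_h, 0, frame_h))
        if x2 > x1 and y2 > y1:  # drop boxes that collapse after clipping
            boxes.append((x1, y1, x2, y2, confidence))
    return boxes


fake_out = np.zeros((1, 1, 3, 7), np.float32)
fake_out[0, 0, 0] = [0, 1, 0.9, 0.25, 0.5, 0.75, 1.2]  # runs past the bottom edge
fake_out[0, 0, 1] = [0, 1, 0.3, 0.0, 0.0, 0.5, 0.5]    # below the confidence threshold
fake_out[0, 0, 2] = [0, 1, 0.7, -0.1, 0.0, 0.5, 0.5]   # starts left of the frame
print([b[:4] for b in faces_from_detections(fake_out, 640, 480)])
```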

Mosaic (pixelating detected faces)

```python
import sys

import numpy as np
import cv2

model = '/Users/nayoung/Documents/ds_study/data/res10_300x300_ssd_iter_140000_fp16.caffemodel'
config = '/Users/nayoung/Documents/ds_study/data/deploy.prototxt.txt'

cap = cv2.VideoCapture(0)

if not cap.isOpened():
    print('Camera open failed!')
    sys.exit()

net = cv2.dnn.readNet(model, config)

if net.empty():
    print('Net open failed!')
    sys.exit()

while True:
    ret, frame = cap.read()

    if not ret:
        break

    blob = cv2.dnn.blobFromImage(frame, 1, (300, 300), (104, 177, 123))
    net.setInput(blob)
    out = net.forward()

    detect = out[0, 0, :, :]
    (h, w) = frame.shape[:2]

    for i in range(detect.shape[0]):
        confidence = detect[i, 2]
        if confidence < 0.5:
            continue

        # Clip the box to the frame: negative indices would wrap around in NumPy slicing
        x1 = max(0, int(detect[i, 3] * w))
        y1 = max(0, int(detect[i, 4] * h))
        x2 = min(w, int(detect[i, 5] * w))
        y2 = min(h, int(detect[i, 6] * h))
        if x2 <= x1 or y2 <= y1:
            continue

        face_img = frame[y1:y2, x1:x2]
        fh, fw = face_img.shape[:2]

        # Shrink to about 1/16 (at least 1x1 so resize never gets an empty size),
        # then enlarge with nearest neighbor to get the blocky mosaic
        small = cv2.resize(face_img, (max(1, fw // 16), max(1, fh // 16)))
        frame[y1:y2, x1:x2] = cv2.resize(small, (fw, fh), interpolation=cv2.INTER_NEAREST)

    cv2.imshow('frame', frame)

    if cv2.waitKey(1) == 27:  # ESC
        break

cap.release()
cv2.destroyAllWindows()
```
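The mosaic works by discarding detail: the face region is shrunk to about 1/16 of its size and enlarged again with `cv2.INTER_NEAREST`, so each roughly 16x16 tile repeats a single value. The NumPy-only sketch below produces the same kind of blocky result by keeping one sample per tile (its top-left pixel) and repeating it; `pixelate` is a hypothetical helper, and because `cv2.resize` interpolates between neighbouring pixels when shrinking with the default `INTER_LINEAR`, the exact colours differ from the OpenCV version.

```python
import numpy as np


def pixelate(region, block=16):
    """Hypothetical helper: each block x block tile repeats its top-left pixel."""
    h, w = region.shape[:2]
    small = region[::block, ::block]  # one sample per tile
    big = np.repeat(np.repeat(small, block, axis=0), block, axis=1)
    return big[:h, :w]                # trim partial tiles at the right and bottom edges


region = (np.arange(40 * 50).reshape(40, 50) % 251).astype(np.uint8)
mosaic = pixelate(region)
print(mosaic.shape, mosaic[0, 0] == region[0, 0])
```

In the capture loop, `frame[y1:y2, x1:x2] = pixelate(frame[y1:y2, x1:x2])` would take the place of the two `cv2.resize` calls.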